The `urlretrieve()` function provided by the `urllib` module (`urllib.request` in Python 3) downloads remote data directly to a local file.

`urlretrieve(url, filename=None, reporthook=None, data=None)`

- `filename` specifies the local path to save the data to. If it is omitted, urllib saves the data to a temporary file.

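- `reporthook` is a callback function. It is called once when the connection to the server is established and again after each data block is transferred, with three arguments: the number of blocks transferred so far, the block size in bytes, and the total size of the remote file (-1 if the server does not report it). You can use it to display the current download progress.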

- `data` is data to send to the server in a POST request, encoded in `application/x-www-form-urlencoded` format (in Python 3 it must be a `bytes` object).

The method returns a tuple of two elements, `(filename, headers)`: `filename` is the local path the data was saved to, and `headers` contains the server's response headers.
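A minimal sketch of the return value, assuming Python 3 (`urllib.request.urlretrieve`). To keep it runnable offline, a local `file:` URL built with `pathlib.Path.as_uri()` stands in for a remote server; the file names are made up for illustration.

```python
import pathlib
import tempfile
import urllib.request

# Assumption: a local file: URL stands in for a remote server so the sketch runs offline.
work_dir = pathlib.Path(tempfile.mkdtemp())
source = work_dir / "page.html"
source.write_text("<html>hello</html>", encoding="utf-8")

target = work_dir / "copy.html"
local_path, headers = urllib.request.urlretrieve(source.as_uri(), str(target))

print(local_path)                 # the filename we asked for
print(headers["Content-Length"])  # headers is an email.message.Message; prints 18
```

When `filename` is omitted for an `http:` URL, the returned path points to a temporary file; for a local `file:` URL, `urlretrieve()` returns the original path without copying. `urllib.request.urlcleanup()` removes temporary files left behind by earlier calls.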

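```python
#!/usr/bin/env python3
import os
import urllib.request  # Python 2: import urllib and call urllib.urlretrieve


def cbk(a, b, c):
    """Callback function.

    @a: number of data blocks downloaded so far
    @b: size of each block in bytes
    @c: size of the remote file (-1 if the server does not report it)
    """
    if c <= 0:
        return  # size unknown, so a percentage cannot be computed
    per = 100.0 * a * b / c
    if per > 100:
        per = 100  # the last block is usually partial
    print('%.2f%%' % per)


url = 'http://www.baidu.com'
cur_dir = os.path.abspath('.')
work_path = os.path.join(cur_dir, 'baidu.html')
urllib.request.urlretrieve(url, work_path, cbk)
```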


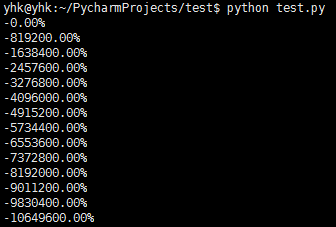
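The callback can also collect progress instead of printing it. This sketch assumes Python 3; `make_progress_hook` is a hypothetical helper name, and a 20,000-byte local file reached through a `file:` URL replaces the remote page so it runs offline. It guards against a total size of -1, which `urlretrieve()` passes when the response has no `Content-Length` header. The first call arrives with a block count of 0, so the first recorded value is 0%.

```python
import pathlib
import tempfile
import urllib.request


def make_progress_hook(log):
    """Return a reporthook that appends the percentage done (or None) to log."""
    def hook(block_num, block_size, total_size):
        if total_size > 0:
            # The last block is usually partial, so cap the value at 100.
            log.append(min(100.0, 100.0 * block_num * block_size / total_size))
        else:
            log.append(None)  # total_size is -1 when no Content-Length is sent
    return hook


# Offline demo (assumption): a 20,000-byte local file stands in for the remote page.
tmp = pathlib.Path(tempfile.mkdtemp())
src = tmp / "data.bin"
src.write_bytes(b"x" * 20000)

progress = []
urllib.request.urlretrieve(src.as_uri(), str(tmp / "out.bin"), make_progress_hook(progress))
print(progress)
```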

The following example uses `urlretrieve()` to download a larger file while displaying the download progress.

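```python
#!/usr/bin/env python3
import os
import urllib.request  # Python 2: import urllib and call urllib.urlretrieve


def cbk(a, b, c):
    """Callback function.

    @a: number of data blocks downloaded so far
    @b: size of each block in bytes
    @c: size of the remote file (-1 if the server does not report it)
    """
    if c <= 0:
        return  # size unknown, so a percentage cannot be computed
    per = 100.0 * a * b / c
    if per > 100:
        per = 100  # the last block is usually partial
    print('%.2f%%' % per)


url = 'http://www.python.org/ftp/python/2.7.5/Python-2.7.5.tar.bz2'
cur_dir = os.path.abspath('.')
work_path = os.path.join(cur_dir, 'Python-2.7.5.tar.bz2')
urllib.request.urlretrieve(url, work_path, cbk)
```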

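For a large download like this tarball, printing one line per block floods the terminal. Below is a sketch of an alternative hook, assuming Python 3, that redraws a single line using `\r`. `download_with_bar` is a hypothetical name, and the demo at the end uses a local `file:` URL instead of the python.org address so it runs offline.

```python
import io
import pathlib
import sys
import tempfile
import urllib.request


def download_with_bar(url, dest, stream=None, width=40):
    """Download url to dest while redrawing a one-line text progress bar."""
    out = stream if stream is not None else sys.stdout

    def hook(block_num, block_size, total_size):
        done = block_num * block_size
        if total_size > 0:
            done = min(done, total_size)  # the last block is usually partial
            filled = width * done // total_size
            bar = "#" * filled + "-" * (width - filled)
            out.write("\r[%s] %5.1f%%" % (bar, 100.0 * done / total_size))
        else:
            # Total size unknown (-1): show an approximate byte count instead.
            out.write("\r%d bytes" % done)
        out.flush()

    result = urllib.request.urlretrieve(url, dest, hook)
    out.write("\n")  # leave the finished bar on its own line
    return result


# Offline demo (assumption): a local 20,000-byte file stands in for the tarball.
demo_dir = pathlib.Path(tempfile.mkdtemp())
demo_src = demo_dir / "big.bin"
demo_src.write_bytes(b"\0" * 20000)
buf = io.StringIO()
download_with_bar(demo_src.as_uri(), str(demo_dir / "copy.bin"), stream=buf)
```

With network access, the same helper would be called as `download_with_bar('http://www.python.org/ftp/python/2.7.5/Python-2.7.5.tar.bz2', 'Python-2.7.5.tar.bz2')`, writing to standard output by default.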

`urlopen()` makes it easy to fetch a remote HTML page. You can then use Python regular expressions to extract the data you need and `urlretrieve()` to download it to a local file. For remote URLs that restrict access or limit the number of connections, you can connect through a proxy. If the data is large and a single-threaded download is too slow, you can download with multiple threads. Put together, this is what is commonly called a web crawler.
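The workflow described above can be sketched roughly as follows, assuming Python 3. The link pattern and the names `find_links`, `use_proxy`, and `crawl` are hypothetical, chosen for illustration; the regular expression only handles simple `href="..."` attributes and is not a substitute for an HTML parser. "Multi-threaded download" is interpreted here as fetching several files in parallel with `concurrent.futures.ThreadPoolExecutor`; splitting one large file into byte ranges is a different technique not shown. The demo builds a small local site and uses `file:` URLs so it runs without network access.

```python
import concurrent.futures
import os
import pathlib
import re
import tempfile
import urllib.parse
import urllib.request

# Hypothetical pattern: matches href="...png|jpg|jpeg|gif" attributes only.
LINK_RE = re.compile(r'href=["\']([^"\']+\.(?:png|jpe?g|gif))["\']', re.IGNORECASE)


def find_links(page_url, html):
    """Extract matching links with a regular expression and make them absolute."""
    return [urllib.parse.urljoin(page_url, link) for link in LINK_RE.findall(html)]


def use_proxy(proxy_url):
    """Send later urlopen()/urlretrieve() HTTP(S) requests through proxy_url."""
    handler = urllib.request.ProxyHandler({"http": proxy_url, "https": proxy_url})
    urllib.request.install_opener(urllib.request.build_opener(handler))


def crawl(page_url, out_dir, workers=4):
    """Fetch page_url, find links, and download them in parallel into out_dir."""
    with urllib.request.urlopen(page_url) as resp:
        html = resp.read().decode("utf-8", errors="replace")
    os.makedirs(out_dir, exist_ok=True)

    def fetch(url):
        name = os.path.basename(urllib.parse.urlparse(url).path)
        local_path, _headers = urllib.request.urlretrieve(url, os.path.join(out_dir, name))
        return local_path

    # Each link is downloaded by a worker thread; map() keeps the input order.
    with concurrent.futures.ThreadPoolExecutor(max_workers=workers) as pool:
        return list(pool.map(fetch, find_links(page_url, html)))


# Offline demo (assumption): local files and file: URLs stand in for a real site.
site = pathlib.Path(tempfile.mkdtemp())
(site / "a.png").write_bytes(b"first image")
(site / "b.jpg").write_bytes(b"second image")
(site / "page.html").write_text(
    '<a href="a.png">A</a> <a href="b.jpg">B</a> <a href="notes.txt">skip</a>',
    encoding="utf-8",
)
saved = crawl((site / "page.html").as_uri(), str(site / "downloads"))
```

To go through a proxy, call something like `use_proxy("http://127.0.0.1:8080")` (a placeholder address) before `crawl()`; `urlretrieve()` opens URLs through the opener installed by `install_opener()`.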