The Spark mentioned here includes SparkCore/SparkSQL/SparkStreaming. In fact, all operations are the same. The following shows the code in the actual project.

Method 1: write the entire DataFrame to MySQL at one time (the Schema of DataFrame should be consistent with the domain name defined in the MySQL table)

Dataset<Row> resultDF = spark.sql("select hphm,clpp,clys,tgsj,kkbh from t_cltgxx where id in (" + id.split("_")[0] + "," + id.split("_")[1] + ")");

resultDF.show();

Dataset<Row> resultDF2 = resultDF.withColumn("jsbh", functions.lit(new Date().getTime()))

.withColumn("create_time", functions.lit(new Timestamp(new Date().getTime())));

resultDF2.show();

resultDF2.write()

.format("jdbc")

.option("url","jdbc:mysql://lin01.cniao5.com:3306/traffic?characterEncoding=UTF-8")

.option("dbtable","t_tpc_result")

.option("user","root")

.option("password","123456")

.mode(SaveMode.Append)

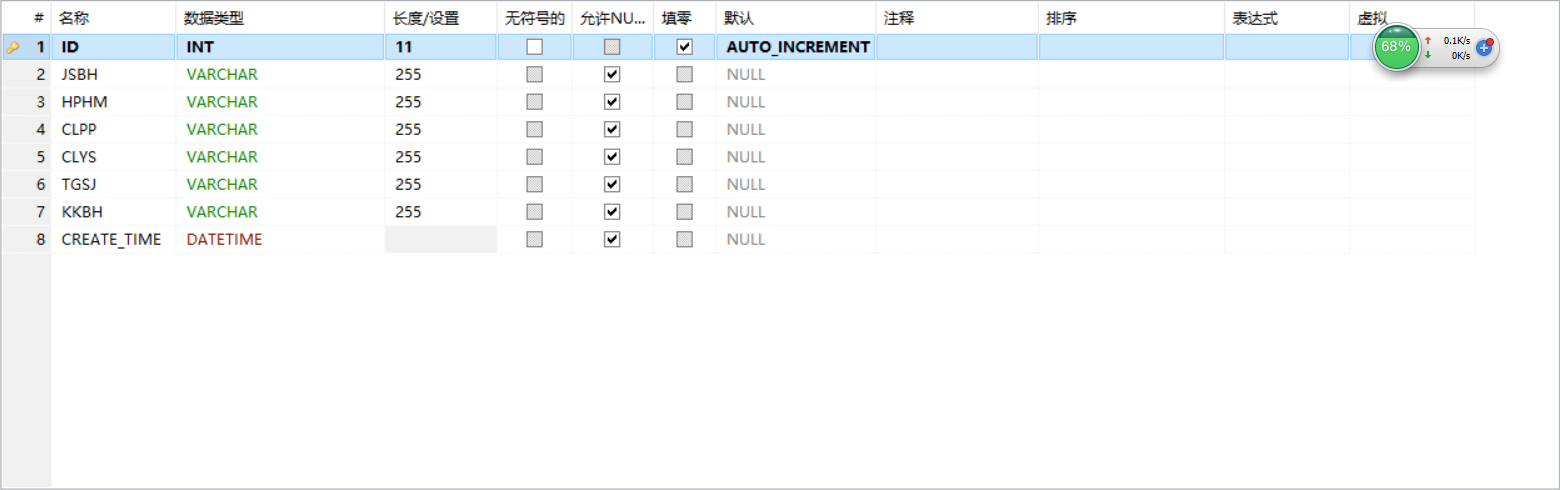

.save();MySQL table structure:

Method two: call foreach/foreachPartition in RDD, then build connection->prepare SQL->execute-> free connection. The advantage of this method is that data can be processed on demand and then update to the table, not necessarily the entire DataFrame.

RDD in SparkCore:

resultRDD.foreach(new VoidFunction<String>() {

@Override

public void call(String s) throws Exception {

String kkbh = s.split("&")[0];

String hphm = s.split("&")[1];

long jsbh = System.currentTimeMillis();

Connection conn = JdbcUtils.getConnection();

String sql = "insert into t_txc_result (JSBH,HPHM,KKBH,CREATE_TIME) values(?,?,?,?)";

PreparedStatement psmt = conn.prepareStatement(sql);

psmt.setString(1,jsbh+"");

psmt.setString(2,hphm);

psmt.setString(3,kkbh);

psmt.setTimestamp(4,new Timestamp(jsbh));

psmt.executeUpdate();

JdbcUtils.free(psmt,conn);

System.out.println("mysql insert : kkbh = " + kkbh + ", hphm = "+ hphm);

}

});DStream in SparkStreaming:

resultDStream.foreachRDD(new VoidFunction<JavaRDD<String>>() {

@Override

public void call(JavaRDD<String> stringJavaRDD) throws Exception {

stringJavaRDD.foreachPartition(new VoidFunction<Iterator<String>>() {

@Override

public void call(Iterator<String> stringIterator) throws Exception {

Connection conn = JdbcUtils.getConnection();

PreparedStatement pstmt = null;

while (stringIterator.hasNext()) {

String data = stringIterator.next();

String [] fields = data.split(",");

String hphm=fields[0];

String clpp=fields[1];

String clys=fields[2];

String tgsj=fields[3];

String kkbh=fields[4];

SimpleDateFormat sdf = new SimpleDateFormat("yyyy-MM-dd hh:mm:ss");

Date tgsj_date = sdf.parse(tgsj);

String sql = "insert into t_scrc_result (JSBH,HPHM,KKBH,TGSJ,CREATE_TIME) values(?,?,?,?,?)";

pstmt=conn.prepareStatement(sql);

long jsbh = System.currentTimeMillis();

pstmt.setString(1,jsbh+"_streaming");

pstmt.setString(2,hphm);

pstmt.setString(3,kkbh);

pstmt.setTimestamp(4,new Timestamp(tgsj_date.getTime()));

pstmt.setTimestamp(5,new Timestamp(jsbh));

pstmt.executeUpdate();

}

JdbcUtils.free(pstmt,conn);

}

});

}

});getConnection() and free() used here are functions written by ourselves, and the source code is pasted for reference:

package utils;

import java.sql.*;

public class JdbcUtils {

private static String url = "jdbc:mysql://lin01.cniao5.com:3306/traffic?characterEncoding=UTF-8";

private static String user = "root";

private static String pwd = "123456";

private JdbcUtils() {

}

// 1. Register and drive oracle.jdbc.driver.OracleDriver

static {

try {

Class.forName("com.mysql.jdbc.Driver");

} catch (ClassNotFoundException e) {

e.printStackTrace();

System.out.println("Database driver loading failed!");

}

}

// 2. Establish a connection

public static Connection getConnection() throws SQLException {

return DriverManager.getConnection(url, user, pwd);

}

// 3. Close resources

public static void free(Statement stmt, Connection conn) {

try {

if (stmt != null) {

stmt.close();

}

} catch (SQLException e) {

e.printStackTrace();

} finally {

try {

if (conn != null) {

conn.close();

}

} catch (SQLException e) {

e.printStackTrace();

}

}

}

// 3. Close resource 2

public static void free2(ResultSet rs, Statement stmt, Connection conn) {

try {

if (rs != null)

rs.close();

} catch (SQLException e) {

// TODO Auto-generated catch block

e.printStackTrace();

} finally {

try {

if (stmt != null)

stmt.close();

} catch (SQLException e) {

// TODO Auto-generated catch block

e.printStackTrace();

} finally {

try {

if (conn != null)

conn.close();

} catch (SQLException e) {

// TODO Auto-generated catch block

e.printStackTrace();

}

}

}

}

}