1. Preface

With the development of the Internet of Things, speech recognition technology has attracted more and more attention and is actively driving change in information and communication. Human-computer interaction based on speech recognition, such as voice dialing, voice mail, voice input and even voice control, is becoming increasingly common. Although the number of biometric recognition methods keeps growing, speech recognition remains mainstream: compared with other biometric technologies, it is contactless, non-invasive and easy to use, and a voice cannot be lost or forgotten, nor does it require the user to memorize anything.

This article uses the online speech recognition service provided by Huawei Cloud to add an automatic voice search function to a browser. The program is written in C++ with the Qt framework, and QWebEngineView is used as the browser engine. In Qt 5.7 and later, WebKit is no longer supported, so the built-in browser component is QWebEngineView, which on Windows can only be compiled with MSVC. To use a browser with MinGW instead, you can download the WebKit library separately or call Internet Explorer through COM components. This article uses QWebEngineView, compiled with VS2017 (32-bit).

Audio capture is implemented with Qt's QAudioInput class, which collects PCM data from the sound card and saves it. The audio is converted to text through Huawei Cloud's speech recognition HTTP interface, and once the text is available, the browser searches for it.

The results are as follows:

Click the "start speech acquisition" button on the interface and speak, then click "stop collection". The program calls Huawei Cloud's speech recognition interface, displays the recognized text in the display box below, and the browser then searches for it automatically.

2. Create a voice server

2.1 Using voice services

Log in to the Huawei Cloud official website: https://www.huaweicloud.com/

Choose Products > Artificial Intelligence > Voice Interaction Services > One-Sentence Recognition.

Short speech recognition product page: https://www.huaweicloud.com/product/asr.html

Short speech recognition converts spoken audio into text: through API calls, it recognizes audio streams or audio files of no more than one minute from different audio sources. It is suitable for speech search, human-computer interaction and other voice interaction scenarios, and a free trial is available.
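Because the service accepts at most one minute of audio, it can be worth checking the size of a recording before uploading it. The following is a minimal sketch in standard C++, assuming the recording is raw, headerless, interleaved PCM as collected with QAudioInput; the helper names are hypothetical and are not part of Qt or any Huawei Cloud SDK.

```cpp
#include <cstdint>

// Hypothetical helpers (not part of Qt or any Huawei Cloud SDK).
// Assumption: the buffer is raw, headerless, interleaved PCM.

// Bytes produced per second of audio: sample rate x bytes per sample x channels.
std::uint64_t pcmBytesPerSecond(std::uint32_t sampleRate,
                                std::uint32_t bitsPerSample,
                                std::uint32_t channels)
{
    return static_cast<std::uint64_t>(sampleRate) * (bitsPerSample / 8) * channels;
}

// Duration of a PCM buffer in milliseconds (rounded down).
std::uint64_t pcmDurationMs(std::uint64_t byteCount,
                            std::uint32_t sampleRate,
                            std::uint32_t bitsPerSample,
                            std::uint32_t channels)
{
    const std::uint64_t bps = pcmBytesPerSecond(sampleRate, bitsPerSample, channels);
    return bps == 0 ? 0 : byteCount * 1000 / bps;
}

// Short speech recognition accepts at most one minute of audio.
constexpr std::uint64_t kMaxShortAudioSeconds = 60;

// Compares byte counts directly, so a buffer a few bytes over the
// limit is not accepted because of integer rounding in pcmDurationMs.
bool fitsShortAudioLimit(std::uint64_t byteCount,
                         std::uint32_t sampleRate,
                         std::uint32_t bitsPerSample,
                         std::uint32_t channels)
{
    const std::uint64_t bps = pcmBytesPerSecond(sampleRate, bitsPerSample, channels);
    return bps != 0 && byteCount <= bps * kMaxShortAudioSeconds;
}
```

For 16 kHz, 16-bit, single-channel audio, one second is 32,000 bytes, so a recording larger than 1,920,000 bytes would exceed the one-minute limit.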

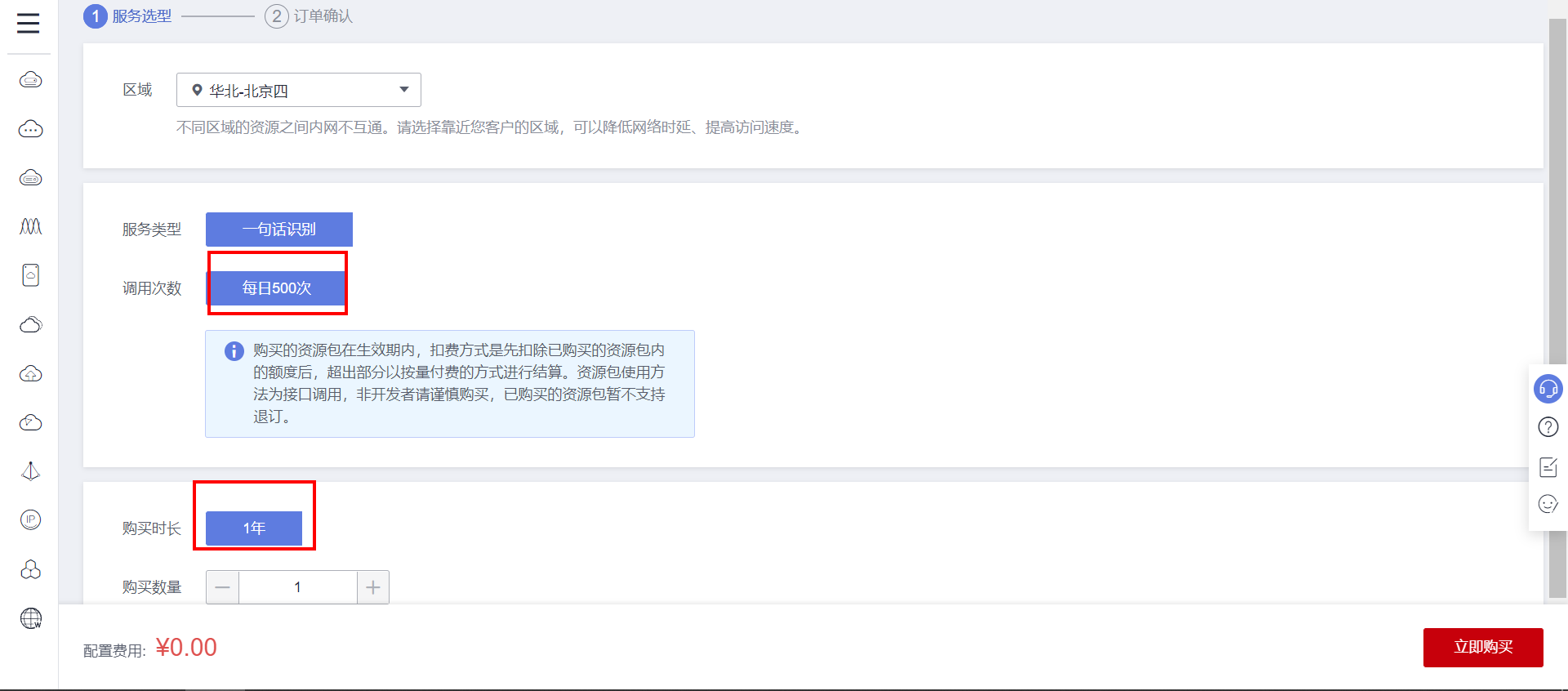

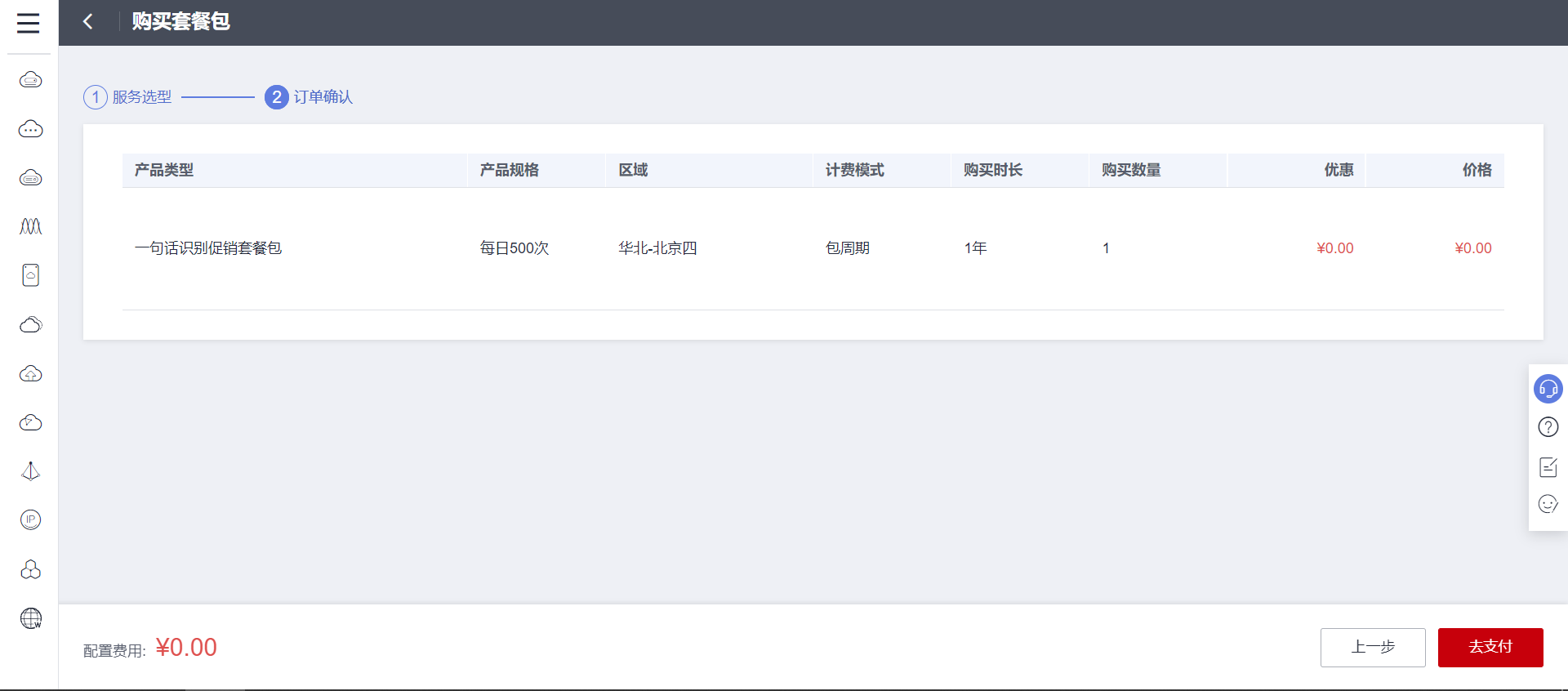

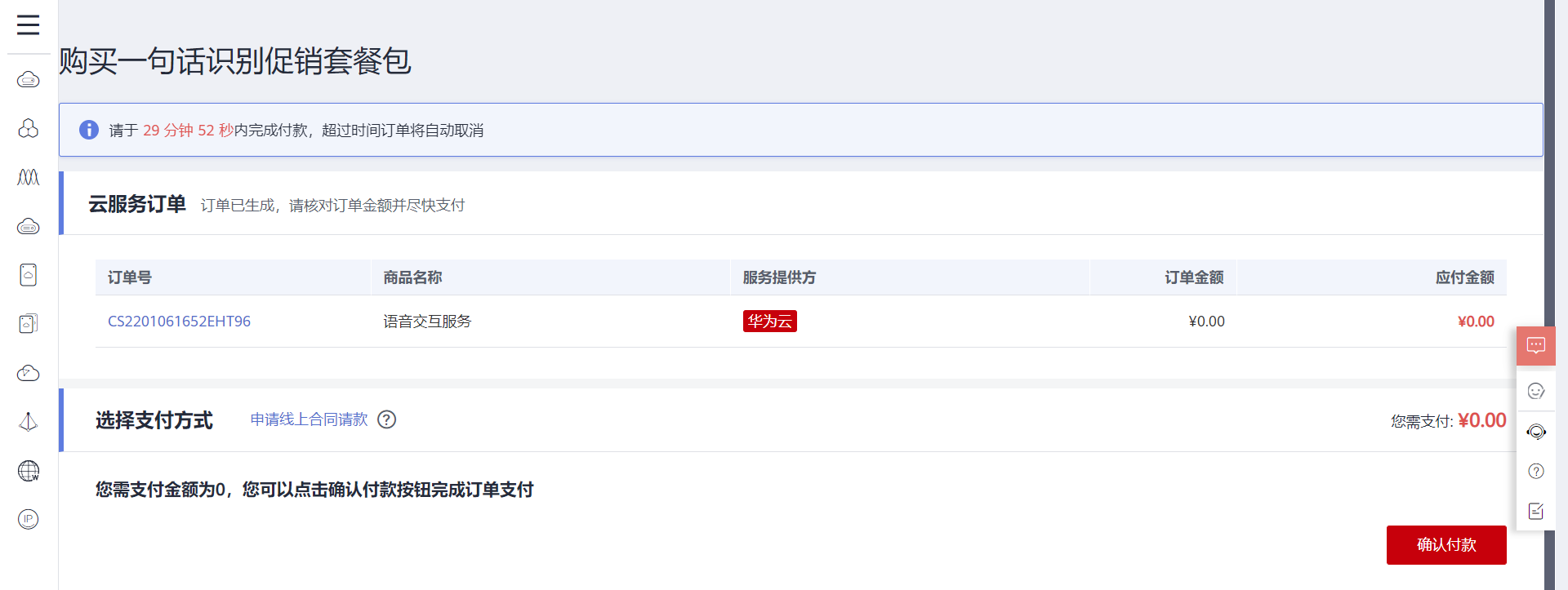

The free trial allows 500 calls per day.

2.2 Introduction to the HTTP interface

Documentation: https://support.huaweicloud.com/api-sis/sis_03_0094.html

Online debugging (API Explorer): https://apiexplorer.developer.huaweicloud.com/apiexplorer/doc?product=SIS&api=RecognizeShortAudio

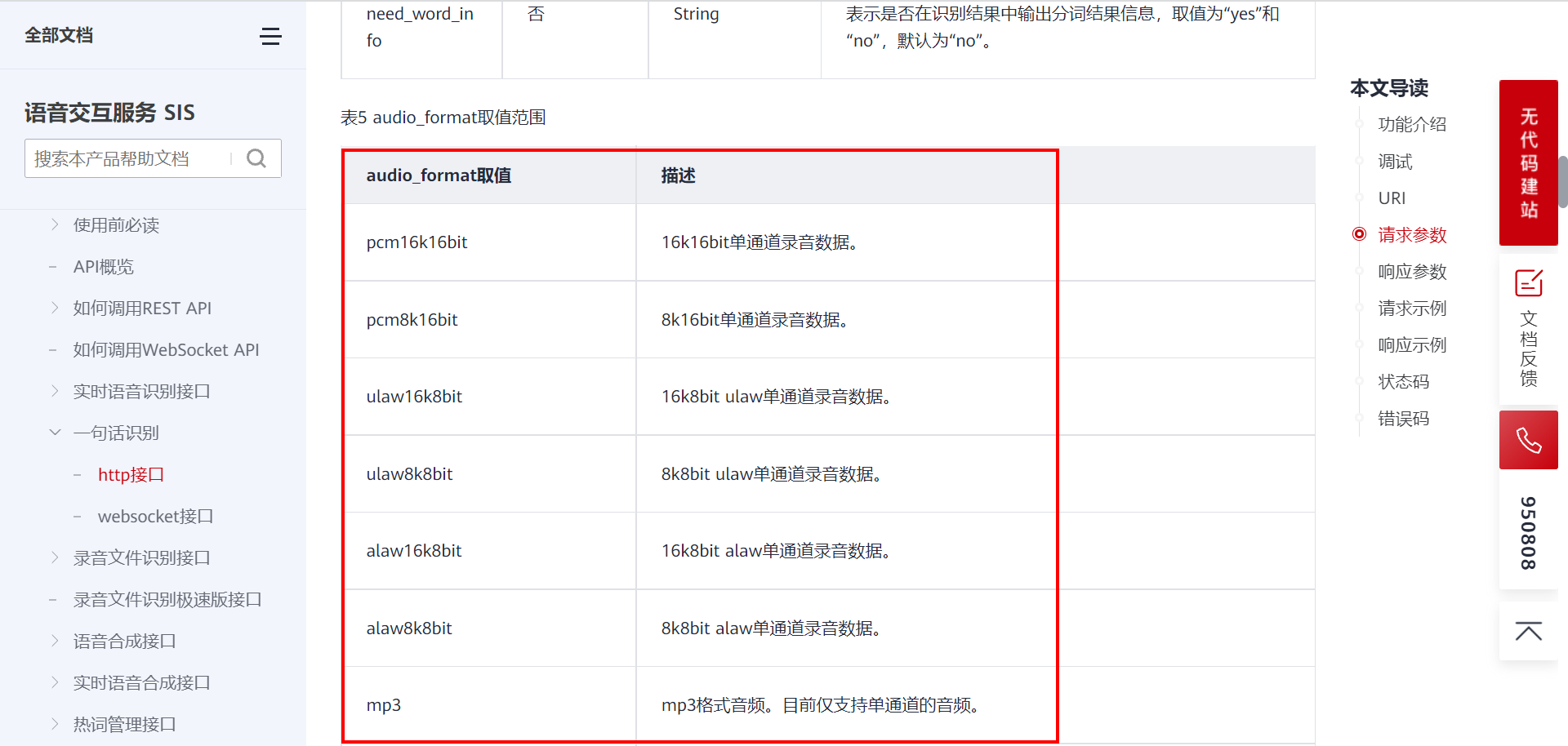

Several important parameters are shown in the screenshot above.

The sampling rate and number of channels used for local audio capture must match these parameters.
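To make the matching requirement concrete, the sketch below compares the local capture settings (the values a Qt 5 program would pass to QAudioFormat::setSampleRate, setSampleSize and setChannelCount) with the audio_format and property strings of the request. The struct and function names are hypothetical. Only the two formats that appear in this article are listed: pcm16k16bit is 16 kHz, 16-bit, single-channel, while the ulaw8k8bit entry (8 kHz, 8-bit, mono) is inferred from its name. Reading the sampling rate from the "_16k_" or "_8k_" part of the property name is likewise an assumption.

```cpp
#include <string>

// Hypothetical description of the local capture configuration.
struct CaptureFormat {
    int sampleRate;     // Hz
    int sampleSizeBits; // bits per sample
    int channelCount;
};

// Expected capture settings for an audio_format string.
// Assumption: ulaw8k8bit is 8 kHz, 8-bit, mono (inferred from its name).
bool expectedFormatFor(const std::string& audioFormat, CaptureFormat& out)
{
    if (audioFormat == "pcm16k16bit") { out = CaptureFormat{16000, 16, 1}; return true; }
    if (audioFormat == "ulaw8k8bit")  { out = CaptureFormat{8000, 8, 1};   return true; }
    return false; // unknown format: do not send the request
}

// True when the capture settings match audio_format and the sampling rate
// implied by the property name.
bool captureMatchesRequest(const CaptureFormat& capture,
                           const std::string& audioFormat,
                           const std::string& property)
{
    CaptureFormat expected{};
    if (!expectedFormatFor(audioFormat, expected)) return false;

    const bool formatOk = capture.sampleRate == expected.sampleRate
                       && capture.sampleSizeBits == expected.sampleSizeBits
                       && capture.channelCount == expected.channelCount;

    // Assumption: property names embed the rate as "_16k_" or "_8k_".
    const std::string rateTag = expected.sampleRate == 16000 ? "_16k_" : "_8k_";
    const bool propertyOk = property.find(rateTag) != std::string::npos;

    return formatOk && propertyOk;
}
```

A check like this would catch, for example, a 44.1 kHz stereo recording being sent as pcm16k16bit, or a 16 kHz recording paired with an 8 kHz model.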

2.3 Interface address summary

Request address: `https://{endpoint}/v1/{project_id}/asr/short-audio`

Request data:

```json
{
    "config": {
        "audio_format": "ulaw8k8bit",
        "property": "chinese_8k_common",
        "add_punc": "yes",
        "digit_norm": "yes",
        "need_word_info": "yes"
    },
    "data": "/+MgxAAUeHpMAUkQAANhuRAC..."
}
```

The request header must carry the X-Auth-Token parameter.

The parameters in the request data are explained in the previous screenshot; data is the Base64-encoded content of the audio file.

The endpoint and project_id fields in the request address and the X-Auth-Token header must be filled in whenever you call any Huawei Cloud API. For how to obtain them, see subsection 2.3 of https://bbs.huaweicloud.com/blogs/317759
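The data field carries the Base64 encoding of the raw audio bytes. In the Qt code this is done by QByteArray::toBase64(); for readers working outside Qt, here is a sketch of the same standard Base64 encoding (RFC 4648 alphabet with "=" padding) in plain C++. The function name base64Encode is hypothetical.

```cpp
#include <cstddef>
#include <string>

// Standard Base64 (RFC 4648): alphabet A-Z a-z 0-9 + /, padded with '='.
std::string base64Encode(const std::string& bytes)
{
    static const char kAlphabet[] =
        "ABCDEFGHIJKLMNOPQRSTUVWXYZabcdefghijklmnopqrstuvwxyz0123456789+/";
    std::string out;
    out.reserve((bytes.size() + 2) / 3 * 4);

    std::size_t i = 0;
    // Each full 3-byte group (24 bits) becomes four 6-bit characters.
    for (; i + 3 <= bytes.size(); i += 3) {
        const unsigned v = (static_cast<unsigned char>(bytes[i]) << 16)
                         | (static_cast<unsigned char>(bytes[i + 1]) << 8)
                         |  static_cast<unsigned char>(bytes[i + 2]);
        out += kAlphabet[(v >> 18) & 0x3F];
        out += kAlphabet[(v >> 12) & 0x3F];
        out += kAlphabet[(v >> 6) & 0x3F];
        out += kAlphabet[v & 0x3F];
    }

    // One or two leftover bytes are padded with '='.
    const std::size_t rest = bytes.size() - i;
    if (rest == 1) {
        const unsigned v = static_cast<unsigned char>(bytes[i]) << 16;
        out += kAlphabet[(v >> 18) & 0x3F];
        out += kAlphabet[(v >> 12) & 0x3F];
        out += "==";
    } else if (rest == 2) {
        const unsigned v = (static_cast<unsigned char>(bytes[i]) << 16)
                         | (static_cast<unsigned char>(bytes[i + 1]) << 8);
        out += kAlphabet[(v >> 18) & 0x3F];
        out += kAlphabet[(v >> 12) & 0x3F];
        out += kAlphabet[(v >> 6) & 0x3F];
        out += '=';
    }
    return out;
}
```

Base64 turns every 3 bytes into 4 characters, so the request body is about a third larger than the recording: 1,920,000 bytes of audio (one minute of 16 kHz, 16-bit mono PCM) become 2,560,000 characters.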

Data returned on successful recognition:

```json
{
    "trace_id": "567e8537-a89c-13c3-a882-826321939651",
    "result": {
        "text": "Welcome to voice cloud service.",
        "score": 0.9,
        "word_info": [
            { "start_time": 150,  "end_time": 570,  "word": "welcome" },
            { "start_time": 570,  "end_time": 990,  "word": "use" },
            { "start_time": 990,  "end_time": 1380, "word": "voice" },
            { "start_time": 1380, "end_time": 1590, "word": "cloud" },
            { "start_time": 1590, "end_time": 2070, "word": "service" }
        ]
    }
}
```

The text field contains the recognized text.

3. Project code example

The core code is listed below. Most of it assembles the request strings; once a string is assembled, it is sent as an HTTP request.

3.1 Voice-to-text request code

```cpp
// widget.cpp (excerpt). The Widget members used here (manager, Token, function_select)
// and the SERVER_ID / PROJECT_ID macros are defined elsewhere in the project.
#include <QDebug>
#include <QNetworkAccessManager>
#include <QNetworkRequest>
#include <QUrl>

// Voice to text: upload the recorded PCM data to the short-audio recognition API
void Widget::audio_to_text(QByteArray data)
{
    // 0 = speech recognition request (checked in replyFinished)
    function_select = 0;

    // One-sentence (short audio) recognition request address
    QString requestUrl = QString("https://sis-ext.%1.myhuaweicloud.com/v1/%2/asr/short-audio")
                             .arg(SERVER_ID)
                             .arg(PROJECT_ID);
    qDebug() << "requestUrl:" << requestUrl;

    // Construct the request
    QNetworkRequest request;
    request.setUrl(QUrl(requestUrl));
    // Submit the body as JSON
    request.setHeader(QNetworkRequest::ContentTypeHeader, QVariant("application/json"));
    // Set token
    request.setRawHeader("X-Auth-Token", Token);

    // Base64-encode the audio data
    QString base64_Data = QString(data.toBase64());

    // Build the JSON body; audio_format and property must match the capture settings
    QString post_param = QString
        ("{"
         "\"config\": {"
         "\"audio_format\": \"%1\","
         "\"property\": \"%2\","
         "\"add_punc\": \"yes\","
         "\"digit_norm\": \"yes\","
         "\"need_word_info\": \"yes\""
         "},"
         "\"data\": \"%3\""
         "}").arg("pcm16k16bit").arg("chinese_16k_common").arg(base64_Data);

    /*
     chinese_16k_common: Mandarin Chinese speech recognition, 16 kHz sampling rate.
     pcm16k16bit: 16 kHz, 16-bit, single-channel recording data.
    */

    // Send request
    manager->post(request, post_param.toUtf8());
}
```

3.2 Token update code

```cpp
/*
 Function: get token
*/
void Widget::GetToken()
{
    // 3 = token request (checked in replyFinished)
    function_select = 3;

    // IAM token request address
    QString requestUrl = QString("https://iam.%1.myhuaweicloud.com/v3/auth/tokens")
                             .arg(SERVER_ID);
    // For testing, the request can be pointed at your own HTTP server instead
    //requestUrl = "http://10.0.0.6:8080";

    // Construct the request
    QNetworkRequest request;
    request.setUrl(QUrl(requestUrl));
    // Submit the body as JSON
    request.setHeader(QNetworkRequest::ContentTypeHeader, QVariant("application/json;charset=UTF-8"));

    // Account name, IAM user name and password are spliced in with QString::arg;
    // a value containing " or \ would have to be JSON-escaped first.
    QString text = QString("{\"auth\":{\"identity\":{\"methods\":[\"password\"],\"password\":"
                           "{\"user\":{\"domain\": {"
                           "\"name\":\"%1\"},\"name\": \"%2\",\"password\": \"%3\"}}},"
                           "\"scope\":{\"project\":{\"name\":\"%4\"}}}}")
                       .arg(MAIN_USER)
                       .arg(IAM_USER)
                       .arg(IAM_PASSWORD)
                       .arg(SERVER_ID);

    // Send request
    manager->post(request, text.toUtf8());
}
```
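GetToken splices the account name and password into the JSON text with QString::arg, so a password containing a double quote or a backslash would produce invalid JSON. A minimal sketch of escaping a value before splicing it between quotes, following the JSON string rules of RFC 8259, is shown below in standard C++; jsonEscape is a hypothetical helper. Within Qt, building the body with QJsonObject and QJsonDocument::toJson() is an alternative that handles escaping automatically.

```cpp
#include <cstdio>
#include <string>

// Escapes a UTF-8 value so it can be placed between double quotes in JSON:
// quote, backslash and control characters must be escaped (RFC 8259).
std::string jsonEscape(const std::string& in)
{
    std::string out;
    out.reserve(in.size());
    for (const char ch : in) {
        const unsigned char c = static_cast<unsigned char>(ch);
        switch (c) {
        case '"':  out += "\\\""; break;
        case '\\': out += "\\\\"; break;
        case '\b': out += "\\b";  break;
        case '\f': out += "\\f";  break;
        case '\n': out += "\\n";  break;
        case '\r': out += "\\r";  break;
        case '\t': out += "\\t";  break;
        default:
            if (c < 0x20) {
                // Remaining control characters use the \u00XX form.
                char buf[7];
                std::snprintf(buf, sizeof buf, "\\u%04x", static_cast<unsigned>(c));
                out += buf;
            } else {
                // Printable ASCII and UTF-8 multi-byte sequences pass through unchanged.
                out += ch;
            }
        }
    }
    return out;
}
```

Each user-supplied value (account name, IAM user name, password) would be passed through such a function before it is inserted into the request text.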

3.3 Processing the results returned by Huawei Cloud

```cpp
#include <QDebug>
#include <QJsonDocument>
#include <QJsonObject>
#include <QJsonParseError>
#include <QNetworkReply>
#include <QUrl>

// Analyze feedback results
void Widget::replyFinished(QNetworkReply *reply)
{
    // The caller owns the reply: schedule it for deletion (never delete it directly in this slot)
    reply->deleteLater();

    int statusCode = reply->attribute(QNetworkRequest::HttpStatusCodeAttribute).toInt();
    // Read all data
    QByteArray replyData = reply->readAll();
    qDebug() << "Status code:" << statusCode;
    qDebug() << "Feedback data:" << QString(replyData);

    // Token update: IAM returns the token in the X-Subject-Token response header
    if (function_select == 3)
    {
        QByteArray subjectToken = reply->rawHeader("X-Subject-Token");
        if (!subjectToken.isEmpty())
        {
            Token = subjectToken;
            // Save to file
            SaveDataToFile(Token);
            qDebug() << "token Update successful.";
        }
        else
        {
            qDebug() << "token Update failed.";
        }
        return;
    }

    // Judge the status code
    if (statusCode != 200)
    {
        // Parse the error body
        QJsonParseError json_error;
        QJsonDocument document = QJsonDocument::fromJson(replyData, &json_error);
        if (json_error.error == QJsonParseError::NoError && document.isObject())
        {
            QJsonObject obj = document.object();
            QString error_str;
            if (obj.contains("error_code"))
                error_str += "Error code: " + obj.value("error_code").toString() + "\n";
            if (obj.contains("error_msg"))
                error_str += "Error message: " + obj.value("error_msg").toString() + "\n";
            // Display the error
            qDebug() << error_str;
        }
        return;
    }

    // Speech recognition result
    if (function_select == 0)
    {
        QJsonParseError json_error;
        QJsonDocument document = QJsonDocument::fromJson(replyData, &json_error);
        if (json_error.error == QJsonParseError::NoError && document.isObject())
        {
            QJsonObject result = document.object().value("result").toObject();
            if (result.contains("text"))
            {
                QString text = result.value("text").toString();
                qDebug() << "Recognized text:" << text;
                ui->lineEdit_text_display->setText(text);

                // Browser search: percent-encode the text so characters such as & or # stay in the query
                QString url = "https://www.baidu.com/s?ie=UTF-8&wd="
                            + QString::fromLatin1(QUrl::toPercentEncoding(text));
                m_webView->load(QUrl(url));
            }
        }
    }
}
```
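The recognized text becomes part of the search URL, so characters such as &, # or + must be percent-encoded; otherwise they would be read as URL syntax instead of as part of the query. In Qt this is what QUrl::toPercentEncoding() does. The sketch below shows the same idea in standard C++, encoding every byte outside the RFC 3986 unreserved set; percentEncode and buildSearchUrl are hypothetical helpers, and the input is assumed to be UTF-8.

```cpp
#include <string>

// Percent-encodes a UTF-8 string for use as a URL query value (RFC 3986):
// unreserved characters A-Z a-z 0-9 - . _ ~ are kept, every other byte becomes %XX.
std::string percentEncode(const std::string& utf8)
{
    static const char kHex[] = "0123456789ABCDEF";
    std::string out;
    for (const char ch : utf8) {
        const unsigned char c = static_cast<unsigned char>(ch);
        const bool unreserved = (c >= 'A' && c <= 'Z') || (c >= 'a' && c <= 'z')
                             || (c >= '0' && c <= '9')
                             || c == '-' || c == '.' || c == '_' || c == '~';
        if (unreserved) {
            out += ch;
        } else {
            out += '%';
            out += kHex[c >> 4];
            out += kHex[c & 0x0F];
        }
    }
    return out;
}

// Hypothetical helper: builds the Baidu search URL used in replyFinished().
std::string buildSearchUrl(const std::string& recognizedText)
{
    return "https://www.baidu.com/s?ie=UTF-8&wd=" + percentEncode(recognizedText);
}
```

Chinese text is encoded byte by byte from its UTF-8 form, so "你好" becomes %E4%BD%A0%E5%A5%BD, which matches the ie=UTF-8 parameter in the URL.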