Last Friday was the company's mental health day, so together with the weekend I had three days off in a row.

So I had three days to catch up on my Geek Time column, "Rust Programming: Lesson One". My plan was to hand in two manuscripts in those three days. In the end, I worked hard all day Friday and produced one.

Did I spend Saturday and Sunday goofing off? Or did I put on some quirky outfit and go eat candy with Xiaobao and Xiaobei? No, no. Early Saturday morning I sat down at my computer to prepare a new manuscript: "Practical project: developing Python 3 / Node.js modules with pyo3/neon".

This topic should have been easy to write. But because I had added a "get hands dirty" series at the start of the column on short notice, readers had already practiced pyo3 and neon at the very beginning, so a plain walkthrough would offer nothing new.

So I asked myself: what is complex enough and useful enough, yet still missing from the Python or Node.js community?

After some thought, I chose an embedded search engine. Search engine servers are everywhere today, in both the open source and closed source worlds. Elasticsearch has a great reputation in the open source community, and Algolia is the industry benchmark among startups. Rust, of course, has MeiliSearch, Sonic and Quickwit. All of these servers can be accessed through clients written in any language. However, Python and Node.js lack good options if you want to embed a search engine in your system that has no server and runs and indexes locally, yet can still handle massive amounts of data. This is especially true for Python. Node.js at least has FlexSearch. Forgive my ignorance, but Python really has nothing good here. PyLucene, built on Lucene, works fine, but having to run a Java VM inside Python just to get a search engine always feels like a fishbone stuck in the throat. That's why I chose an embedded search engine as the example for showing how to make Rust power Python and Node.js.

In Rust, we have tantivy, an embedded search engine with quite good performance. It is also the underlying library of Quickwit (the Quickwit team maintains tantivy). At https://tantivy-search.github.io/bench/ you can find a performance comparison of tantivy, Lucene, PISA and Bleve. Basically, tantivy performs on par with PISA, which is written in C++, while offering more complete functionality.

However, tantivy already has its own Python binding, tantivy-py, so it wouldn't make much sense for me to build another one just like it. Looking through the tantivy-py code, I found it is basically a thin wrapper around the Rust library. Because tantivy positions itself as a low-level implementation, its API is not very friendly, so tantivy-py is hardly a foolproof choice for search engine beginners. That leaves room for a Python search engine library with a different positioning. I wanted its API to feel like this:

```python
In [1]: from xunmi import *

# Load (or create) the index directly from the configuration
In [2]: indexer = Indexer("./fixtures/config.yml")

# Get a handle for modifying the index
In [3]: updater = indexer.get_updater()

In [4]: f = open("./fixtures/wiki_00.xml")

# Get the data to index
In [5]: data = f.read()

In [6]: f.close()

# Provide some simple rules to format the data (data type, field renaming, type conversion).
# Supports xml / json / yaml and other formats. The data must match the index schema;
# otherwise, use mapping and conversion rules to normalize it
In [7]: input_config = InputConfig("xml", [("$value", "content")], [("id", ("string", "number"))])

# Update the index
In [8]: updater.update(data, input_config)

# Search the updated index using the "title" and "content" fields, returning 5 results starting at offset 0
In [9]: result = indexer.search("history", ["title", "content"], 5, 0)

# Each result contains the score and the data stored in the index (content is only indexed, not stored)
In [10]: result
Out[10]:
[(13.932347297668457,
  '{"id":[22],"title":["history"],"url":["https://zh.wikipedia.org/wiki?curid=22"]}'),
 (11.62932014465332,
  '{"id":[399],"title":["African History"],"url":["https://zh.wikipedia.org/wiki?curid=399"]}'),
 (11.526201248168945,
  '{"id":[2239],"title":["American history"],"url":["https://zh.wikipedia.org/wiki?curid=2239"]}'),
 (11.521516799926758,
  '{"id":[374],"title":["Asian History"],"url":["https://zh.wikipedia.org/wiki?curid=374"]}'),
 (11.342496871948242,
  '{"id":[182],"title":["Chinese history"],"url":["https://zh.wikipedia.org/wiki?curid=182"]}')]
```

The index configuration file looks like this:

```yaml
---
path: /tmp/searcher_index # Index path
schema: # The schema of the index. For text, use CANG_JIE to do Chinese word segmentation
  - name: id
    type: u64
    options:
      indexed: true
      fast: single
      stored: true
  - name: url
    type: text
    options:
      indexing: ~
      stored: true
  - name: title
    type: text
    options:
      indexing:
        record: position
        tokenizer: CANG_JIE
      stored: true
  - name: content
    type: text
    options:
      indexing:
        record: position
        tokenizer: CANG_JIE
      stored: false
text_lang:
  chinese: true # If true, traditional Chinese is automatically converted to simplified Chinese
writer_memory: 100000000
```

So before writing this article, I needed to write a Python FFI with pyo3 that wraps Rust code for Python to use, because the article can only be written once that code exists. And before writing that code, I needed a Rust library that wraps tantivy and provides a friendly API.

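So came the first batch of dumplings (as the Chinese saying goes, I ended up making a whole batch of dumplings just to go with one dish of vinegar): xunmi.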

I wrote a simple wrapper around tantivy and put it on GitHub at tyrchen/xunmi. Here's how to use it:

```rust
use std::str::FromStr;
use xunmi::*;

fn main() {
    // you can load a human-readable configuration (e.g. yaml)
    let config = IndexConfig::from_str(include_str!("../fixtures/config.yml")).unwrap();

    // then open or create the index based on the configuration
    let indexer = Indexer::open_or_create(config).unwrap();

    // then you can get the updater for adding / updating the index
    let mut updater = indexer.get_updater().unwrap();

    // data to index could come from json / yaml / xml, as long as it's compatible with the schema
    let content = include_str!("../fixtures/wiki_00.xml");

    // you could even provide mappings for renaming fields and converting data types,
    // e.g. the index schema has id as u64 and content as text, but the xml has id as a string
    // and no content field; instead, the $value of the doc is the content.
    // so we can use mapping / conversion to normalize these.
    let config = InputConfig::new(
        InputType::Xml,
        vec![("$value".into(), "content".into())],
        vec![("id".into(), (ValueType::String, ValueType::Number))],
    );

    // you could use add() or update() to add data into the search index.
    // add() inserts new docs; with update(), if the doc contains an "id" field,
    // the updater first deletes the term matching that id (so id must be unique),
    // then inserts the new docs. all data added/deleted will be committed.
    updater.update(content, &config).unwrap();

    // by default the indexer is auto-reloaded upon every commit,
    // but that has delays of tens of milliseconds, so for this example
    // we reload immediately.
    indexer.reload().unwrap();

    println!("total: {}", indexer.num_docs());

    // you could provide a query and the fields you want to search
    let result = indexer.search("history", &["title", "content"], 5, 0).unwrap();
    for (score, doc) in result.iter() {
        println!("score: {}, doc: {:?}", score, doc);
    }
}
```

As you can see, it is almost identical to the Python sample code.

While writing xunmi, I found that the existing tools for converting traditional Chinese to simplified Chinese were not ideal. I first tried character_converter, a library with a decent download count that already labeled itself 1.0, but converting a single Wikipedia article with it was visibly slow. Then I found OpenCC, the seemingly excellent C++ library, and its Rust binding opencc-rust. Unfortunately, opencc-rust is not well packaged: OpenCC must be installed on the system before it will even compile, and in GitHub Actions the build still failed even after "apt install opencc". So I decided to write one myself. After all, isn't it just a mapping from traditional characters to simplified ones? That's a hundred or two lines of code: generate a mapping table at compile time, then convert the string character by character at run time.
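The plan described above (a mapping table plus character-by-character conversion) can be sketched with the standard library alone. This is a minimal illustration under stated assumptions, not fast2s's code: the four-entry table is a hypothetical sample, and it is built lazily at run time with `OnceLock` instead of being generated at compile time.

```rust
use std::collections::HashMap;
use std::sync::OnceLock;

/// Hypothetical sample traditional -> simplified table. A real converter
/// would contain thousands of entries, ideally generated at build time.
fn t2s_table() -> &'static HashMap<char, char> {
    static TABLE: OnceLock<HashMap<char, char>> = OnceLock::new();
    TABLE.get_or_init(|| {
        [('國', '国'), ('語', '语'), ('學', '学'), ('書', '书')]
            .into_iter()
            .collect()
    })
}

/// Convert a string character by character; chars without a mapping are kept as-is.
fn t2s(input: &str) -> String {
    let table = t2s_table();
    input
        .chars()
        .map(|c| *table.get(&c).unwrap_or(&c))
        .collect()
}

fn main() {
    println!("{}", t2s("中國語言學"));
}
```

Because the lookup is per character, text with no traditional characters (such as English) just passes through unchanged.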

So I started on the second batch of dumplings: fast2s (tyrchen/fast2s).

While working on it, I suddenly thought of the fst library, which I had always found awesome but never knew where to use. fst is a library that uses finite-state automata for ordered set / map queries. Its lookups are slower than a HashMap's, but it saves a lot of memory. Of course, traditional-to-simplified conversion involves only about 2,000 characters, so the memory savings are small, but I felt I had finally found a use case for fst and wanted to try it. fast2s needs a traditional-to-simplified conversion table. While looking for one, I found simplet2s-rs and used its table. The first version came together quickly, and here is how it compared with several existing libraries:

| tests | fast2s | simplet2s-rs | opencc-rust | character_conver |
| ----- | ------ | ------------ | ----------- | ---------------- |
| zht   | 446us  | 616us        | 5.08ms      | 1.23s            |
| zhc   | 491us  | 798us        | 6.08ms      | 2.87s            |
| en    | 68us   | 2.82ms       | 12.24ms     | 26.11s           |

Test result (mutate existing string):

| tests | fast2s | simplet2s-rs | opencc-rust | character_conver |
| ----- | ------ | ------------ | ----------- | ---------------- |
| zht   | 438us  | N/A          | N/A         | N/A              |
| zhc   | 503us  | N/A          | N/A         | N/A              |
| en    | 34us   | N/A          | N/A         | N/A              |

fast2s and simplet2s-rs clearly come out on top.
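fst is an external crate built on finite-state transducers. To show the trade-off mentioned above without depending on it, here is a standard-library stand-in: a compact, sorted array searched with binary search (O(log n) per lookup), compared with a HashMap (O(1) on average, but typically with more memory overhead per entry). This is explicitly not fst and not how fast2s works. It only illustrates an ordered lookup structure, which, like fst, relies on keys being kept in sorted order. The table is a hypothetical sample.

```rust
/// Hypothetical sample table, sorted by the traditional char so binary search works.
const T2S_SORTED: &[(char, char)] = &[('國', '国'), ('學', '学'), ('書', '书'), ('語', '语')];

/// Ordered lookup: binary search on the sorted keys instead of hashing.
fn lookup(c: char) -> Option<char> {
    T2S_SORTED
        .binary_search_by_key(&c, |&(t, _)| t)
        .ok()
        .map(|i| T2S_SORTED[i].1)
}

/// Convert char by char, keeping chars that have no mapping.
fn convert(input: &str) -> String {
    input.chars().map(|c| lookup(c).unwrap_or(c)).collect()
}

fn main() {
    println!("{}", convert("中國語言學"));
}
```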

In theory, fast2s built on fst should be slower than simplet2s-rs built on HashMap, so the result surprised me. After reading the simplet2s-rs code, I found that I still had some special cases to handle. So I ported simplet2s-rs's special-case table and replaced the strings with char arrays, which avoids an extra pointer jump when accessing the hash table (if you've read the hash table lecture in my Rust column, you'll understand the difference between the two):

```rust
// fast2s: both keys and values use chars / char arrays
// thanks https://github.com/bosondata/simplet2s-rs/blob/master/src/lib.rs#L8 for this special logic
use std::collections::{HashMap, HashSet};

use lazy_static::lazy_static;
use maplit::{hashmap, hashset};

type Word = [char; 2];

lazy_static! {
    // Traditional Chinese char -> words in which it must NOT be converted
    // (e.g. 兒寬 is a personal name, 瞭望 means "lookout")
    static ref T2S_EXCLUDE: HashMap<char, HashSet<Word>> = {
        hashmap!{
            '兒' => hashset!{['兒','寬']},
            '覆' => hashset!{['答','覆'], ['批','覆'], ['回','覆']},
            '夥' => hashset!{['甚','夥']},
            '藉' => hashset!{['慰','藉'], ['狼','藉']},
            '瞭' => hashset!{['瞭','望']},
            '麼' => hashset!{['幺','麼']},
            '幺' => hashset!{['幺','麼']},
            '於' => hashset!{['樊','於']}
        }
    };
}
```

```rust
// simplet2s-rs: both keys and values use strings
lazy_static! {
    // Traditional Chinese char -> words in which it must NOT be converted
    static ref T2S_EXCLUDE: HashMap<&'static str, HashSet<&'static str>> = {
        hashmap!{
            "兒" => hashset!{"兒寬"},
            "覆" => hashset!{"答覆", "批覆", "回覆"},
            "夥" => hashset!{"甚夥"},
            "藉" => hashset!{"慰藉", "狼藉"},
            "瞭" => hashset!{"瞭望"},
            "麼" => hashset!{"幺麼"},
            "幺" => hashset!{"幺麼"},
            "於" => hashset!{"樊於"}
        }
    };
}
```

After handling the special cases, fast2s and simplet2s-rs produce similar results, and thanks to a few special optimizations, fast2s still performs on par with simplet2s-rs even though it uses fst:

| tests | fast2s | simplet2s-rs | opencc-rust | character_conver |
| ----- | ------ | ------------ | ----------- | ---------------- |
| zht   | 596us  | 579us        | 4.93ms      | 1.23s            |
| zhc   | 643us  | 750us        | 5.89ms      | 2.87s            |
| en    | 59us   | 2.68ms       | 11.46ms     | 26.11s           |

Test result (mutate existing string):

| tests | fast2s | simplet2s-rs | opencc-rust | character_conver |
| ----- | ------ | ------------ | ----------- | ---------------- |
| zht   | 524us  | N/A          | N/A         | N/A              |
| zhc   | 609us  | N/A          | N/A         | N/A              |
| en    | 48us   | N/A          | N/A         | N/A              |
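Here is a minimal sketch of how a T2S_EXCLUDE-style table like the one above might be consulted during conversion. It assumes an excluded word is formed by the current character together with either the previous or the next character. fast2s's actual check may differ, and `is_excluded` is a hypothetical helper. Because `Word` is a fixed-size `[char; 2]`, both the key and the set members are plain values, so no pointer has to be followed to compare them.

```rust
use std::collections::{HashMap, HashSet};

/// Two-character word stored inline as a fixed-size array.
type Word = [char; 2];

/// Hypothetical helper: returns true if `chars[i]` forms an excluded word with
/// its previous or next neighbour, meaning it must not be converted.
fn is_excluded(exclude: &HashMap<char, HashSet<Word>>, chars: &[char], i: usize) -> bool {
    let Some(words) = exclude.get(&chars[i]) else {
        return false;
    };
    let with_prev = i > 0 && words.contains(&[chars[i - 1], chars[i]]);
    let with_next = i + 1 < chars.len() && words.contains(&[chars[i], chars[i + 1]]);
    with_prev || with_next
}

fn main() {
    // Sample entry: 瞭 stays unconverted in 瞭望 ("lookout").
    let mut exclude: HashMap<char, HashSet<Word>> = HashMap::new();
    exclude.insert('瞭', HashSet::from([['瞭', '望']]));

    let text: Vec<char> = "瞭望".chars().collect();
    println!("skip 瞭 in 瞭望? {}", is_excluded(&exclude, &text, 0));
}
```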

Besides plain conversion, fast2s can also modify an existing string in place instead of producing a new one. This matters when converting large strings or files from traditional to simplified Chinese (a file can be mmap'ed), because it avoids extra memory allocation.
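Here is a minimal sketch of in-place conversion, assuming each replacement character has the same UTF-8 length as the original. That holds for CJK characters in the Basic Multilingual Plane, which all take 3 bytes. This is not fast2s's actual code, and `t2s_char` is a hypothetical three-entry sample table. The same byte-level loop could run over any mutable buffer of valid UTF-8, such as an mmap'ed file.

```rust
/// Hypothetical sample mapping standing in for a real t2s table.
fn t2s_char(c: char) -> Option<char> {
    match c {
        '國' => Some('国'),
        '語' => Some('语'),
        '學' => Some('学'),
        _ => None,
    }
}

/// Convert `s` in place, reusing its buffer. A char is replaced only when the
/// replacement has the same UTF-8 length, so byte offsets never shift.
fn convert_in_place(s: &mut String) {
    // Take the bytes out of the String without copying them.
    let mut bytes = std::mem::take(s).into_bytes();
    let mut i = 0;
    while i < bytes.len() {
        // Length of the UTF-8 sequence starting at byte i, read from its lead byte.
        let len = match bytes[i] {
            b if b < 0x80 => 1,
            b if b >= 0xF0 => 4,
            b if b >= 0xE0 => 3,
            _ => 2,
        };
        let ch = std::str::from_utf8(&bytes[i..i + len])
            .expect("input was valid UTF-8")
            .chars()
            .next()
            .expect("non-empty sequence");
        if let Some(new) = t2s_char(ch) {
            if new.len_utf8() == len {
                // Overwrite the old encoding with the new one, byte for byte.
                new.encode_utf8(&mut bytes[i..i + len]);
            }
        }
        i += len;
    }
    // Re-validate and hand the same buffer back; this does not allocate.
    *s = String::from_utf8(bytes).expect("same-length replacements keep UTF-8 valid");
}

fn main() {
    let mut s = String::from("中國語言學");
    convert_in_place(&mut s);
    println!("{}", s);
}
```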

Wrapping the second batch of dumplings, fast2s, took up basically all of last Saturday.

On Sunday I went back to the first batch, xunmi. After another round of work on xunmi, I finished the xunmi-py needed for the upcoming article, and by then it was already midnight on Sunday. All told, I wrote nearly 700 lines of Rust (300 + 377) to support the 96 lines of Rust in xunmi-py:

```
fast2s ❯ tokei .
-------------------------------------------------------------------------
 Language           Files       Lines        Code    Comments      Blanks
-------------------------------------------------------------------------
 Markdown               3          56          56           0           0
 Rust                   5         365         300          13          52
 Plain Text             8        6428        6428           0           0
 TOML                   3         259          99         142          18
-------------------------------------------------------------------------
 Total                 19        7108        6883         155          70
-------------------------------------------------------------------------

xunmi ❯ tokei .
-------------------------------------------------------------------------
 Language           Files       Lines        Code    Comments      Blanks
-------------------------------------------------------------------------
 Markdown               2          62          62           0           0
 Rust                   5         458         377          19          62
 TOML                   2         239          84         142          13
 XML                    1       63054       59462           0        3592
 YAML                   3         348         344           0           4
-------------------------------------------------------------------------
 Total                 13       64161       60329         161        3671
-------------------------------------------------------------------------

geek-time-rust-resources/31/xunmi-py ❯ tokei .
-------------------------------------------------------------------------
 Language           Files       Lines        Code    Comments      Blanks
-------------------------------------------------------------------------
 Makefile               1          24          18           0           6
 Python                 1           1           1           0           0
 Rust                   2         112          96           0          16
 TOML                   1          19          14           0           5
 XML                    1       63054       59462           0        3592
 YAML                   2         321         318           0           3
-------------------------------------------------------------------------
 Total                  9       63531       59909           0        3622
-------------------------------------------------------------------------
```

Although making these dumplings took much longer than I expected, I learned some wonderful things along the way.

For example, I had been wondering how to unify data from multiple sources (json / yaml / xml / ...) into a single representation without defining a Rust struct (if you can define a struct, serde handles the conversion directly). I even went in the wrong direction at first, trying to auto-detect the text format and convert it to JSON (the detection and conversion code turned out to be rather tricky).

Later I discovered that, thanks to serde, serde_xml_rs can deserialize XML text straight into a serde_json::Value. It's like stuffing a pig's intestine into a cow's stomach without the stomach rejecting it:

```rust
let data: serde_json::Value = serde_xml_rs::from_str(&input)?;
```

Isn't it amazing?

So unified handling of multiple data sources boils down to the following code, which is so simple it's hard to believe:

```rust
use std::borrow::Cow;

use serde_json::Value as JsonValue;

// `Result`, `DocParsingError`, `InputConfig` and `InputType` are xunmi's own types.
pub type JsonObject = serde_json::Map<String, JsonValue>;

pub struct JsonObjects(Vec<JsonObject>);

impl JsonObjects {
    pub fn new(input: &str, config: &InputConfig, t2s: bool) -> Result<Self> {
        // Optionally convert traditional Chinese to simplified Chinese first
        let input = if t2s {
            Cow::Owned(fast2s::convert(input))
        } else {
            Cow::Borrowed(input)
        };

        // Preview the first 20 chars in the error message; slicing by bytes
        // (&input[0..20]) could panic on short input or mid-character in Chinese text
        let err_fn = || {
            let preview: String = input.chars().take(20).collect();
            DocParsingError::NotJson(format!("Failed to parse: {:?}...", preview))
        };

        // First, try to parse the input as a list of objects...
        let result: std::result::Result<Vec<JsonObject>, _> = match config.input_type {
            InputType::Json => serde_json::from_str(&input).map_err(|_| err_fn()),
            InputType::Yaml => serde_yaml::from_str(&input).map_err(|_| err_fn()),
            InputType::Xml => serde_xml_rs::from_str(&input).map_err(|_| err_fn()),
        };

        let data = match result {
            Ok(v) => v,
            // ...and if that fails, parse it as a single object
            Err(_) => {
                let obj: JsonObject = match config.input_type {
                    InputType::Json => serde_json::from_str(&input).map_err(|_| err_fn())?,
                    InputType::Yaml => serde_yaml::from_str(&input).map_err(|_| err_fn())?,
                    InputType::Xml => serde_xml_rs::from_str(&input).map_err(|_| err_fn())?,
                };
                vec![obj]
            }
        };

        Ok(Self(data))
    }
}
```

OK, that's enough about the dumplings. As for the vinegar, you can taste it next week :)

Meditation moment

Why, you ask, am I still supporting seemingly outdated XML two years after 9102 (that is, in 2021)? Hmm... because many data sources are XML, such as the Wikipedia dumps.

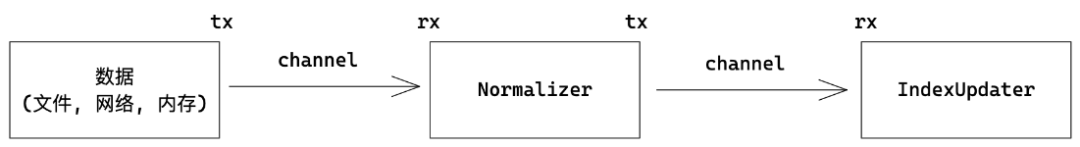

For xunmi, the current processing approach is not good enough. When adding documents to the index, it should use channels to split processing into several stages, so that adding to the index doesn't affect queries and Python users get a better overall experience.
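Here is a minimal standard-library sketch of the staged idea, assuming two stages (parse, then index) connected by a bounded `std::sync::mpsc::sync_channel`. `Doc` and `run_pipeline` are hypothetical names, and the "index" step just collects strings where xunmi would write to tantivy. This is an illustration, not xunmi's design.

```rust
use std::sync::mpsc;
use std::thread;

/// Hypothetical parsed document; stands in for xunmi's JSON objects.
struct Doc {
    id: u64,
    title: String,
}

/// Runs a parse stage and an index stage on separate threads, connected by a channel.
fn run_pipeline(raw: Vec<String>) -> Vec<String> {
    // Bounded channel: the parser blocks when the indexer falls 16 docs behind.
    let (tx, rx) = mpsc::sync_channel::<Doc>(16);

    // Stage 1: parse raw input into documents.
    let parser = thread::spawn(move || {
        for (i, line) in raw.into_iter().enumerate() {
            let doc = Doc { id: i as u64, title: line.trim().to_string() };
            if tx.send(doc).is_err() {
                break; // the indexer hung up
            }
        }
        // tx is dropped here, which ends the indexer's loop below.
    });

    // Stage 2: consume documents and "index" them.
    let indexer = thread::spawn(move || {
        let mut indexed = Vec::new();
        for doc in rx {
            // A real indexer would add the doc to a tantivy writer and commit in batches.
            indexed.push(format!("{}:{}", doc.id, doc.title));
        }
        indexed
    });

    parser.join().expect("parser thread panicked");
    indexer.join().expect("indexer thread panicked")
}

fn main() {
    let raw = vec![" history ".to_string(), "African History".to_string()];
    println!("{:?}", run_pipeline(raw));
}
```

For simplicity, this sketch joins both threads before returning. In a real Python binding, the joins would instead happen in the background, so the caller returns immediately and can keep querying while indexing proceeds.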

When I have time, I'll keep refining this dumpling until it has a thin skin and a generous filling.