The first thing you notice about Intel's products is that they are a little "expensive" (probably because I'm poor).

I've written an introduction to the Intel RealSense camera before.

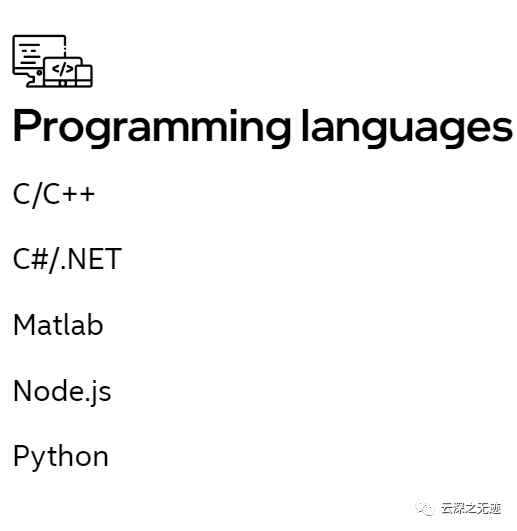

The latest model is now the D455i, and the most cost-effective one is probably the D435, but it still costs more than 1000. I'm not saying it's expensive; I just can't afford it. So I'll consider other options. Intel's products do come with a good SDK, lots of demos, and support for many languages.

It can even be controlled from MATLAB. Isn't that cool?

The latest camera. Can't afford it, I'm poor~

Then this evening, while browsing online, I saw a store selling the R200 for only 159!!! Oh my, and it's brand new. What are you waiting for!

Rush!!!

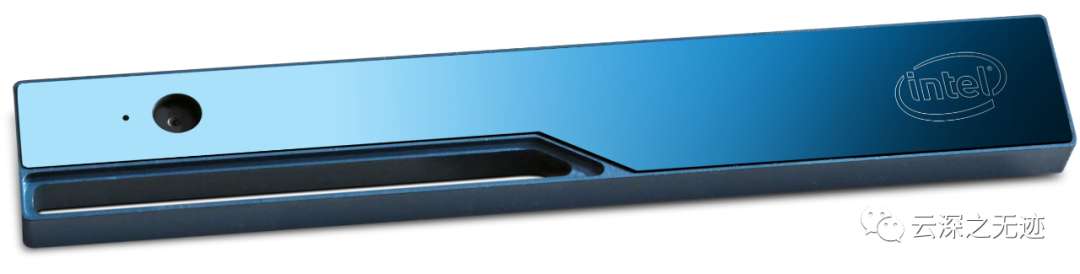

The device probably looks like this

I don't want this thing for any particular purpose; it's just for learning~~

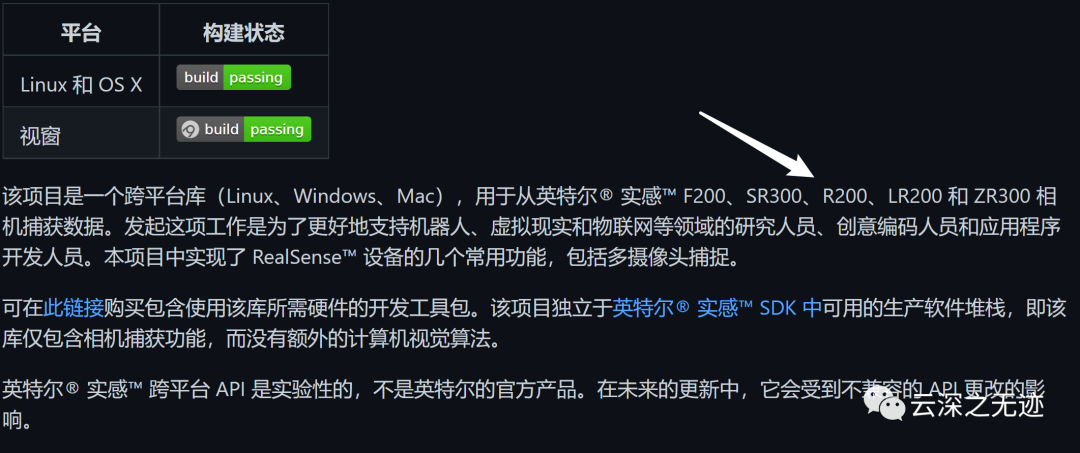

https://github.com/IntelRealSense/librealsense/tree/v1.12.1

Above is the most important GitHub address: the legacy v1.12.1 release.

You can see the R200 there.

https://github.com/IntelRealSense/librealsense

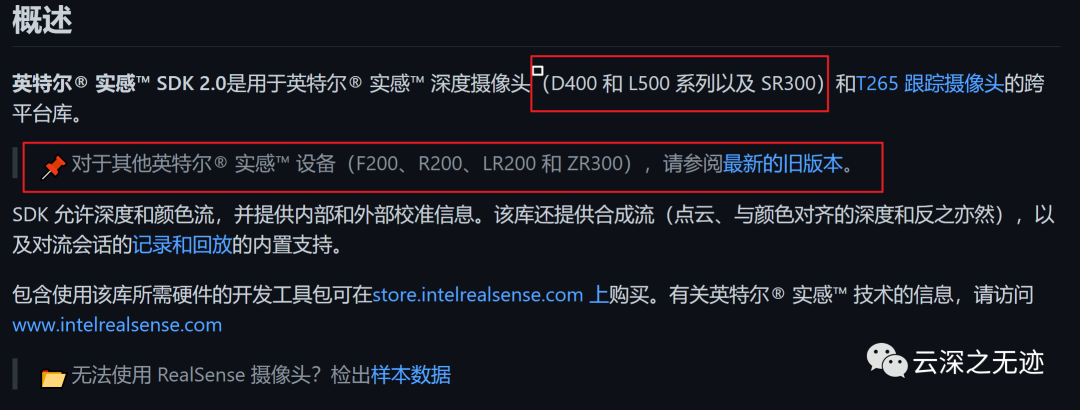

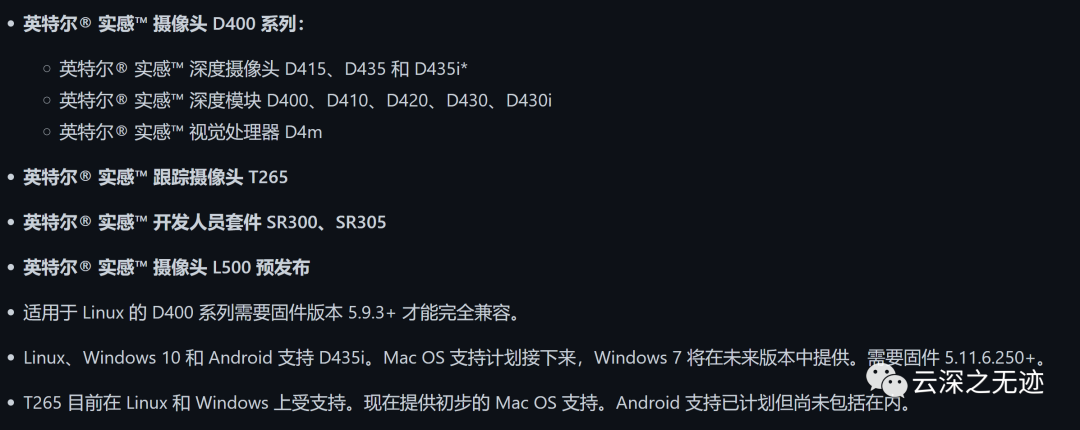

This is actually the latest SDK, but the R200 is too old to be supported by it~

Look at the part I marked

Many people say the R200 isn't supported, which is bad news for us poor folks

I think it can be made to work. Once the camera arrives, watch me do it~

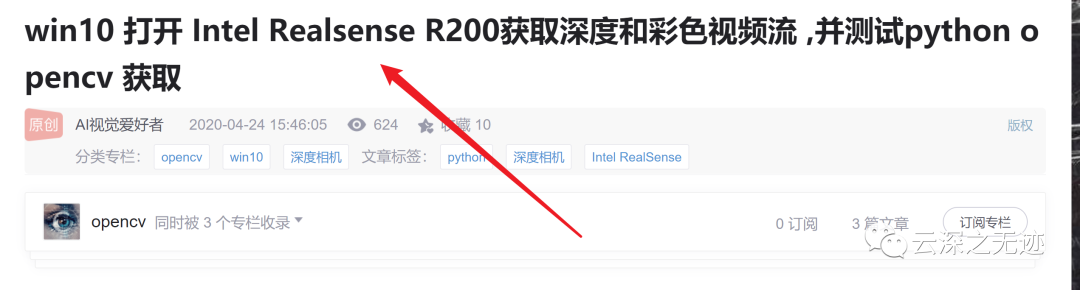

I found an article; let's take a quick look:

https://blog.csdn.net/jy1023408440/article/details/105732098


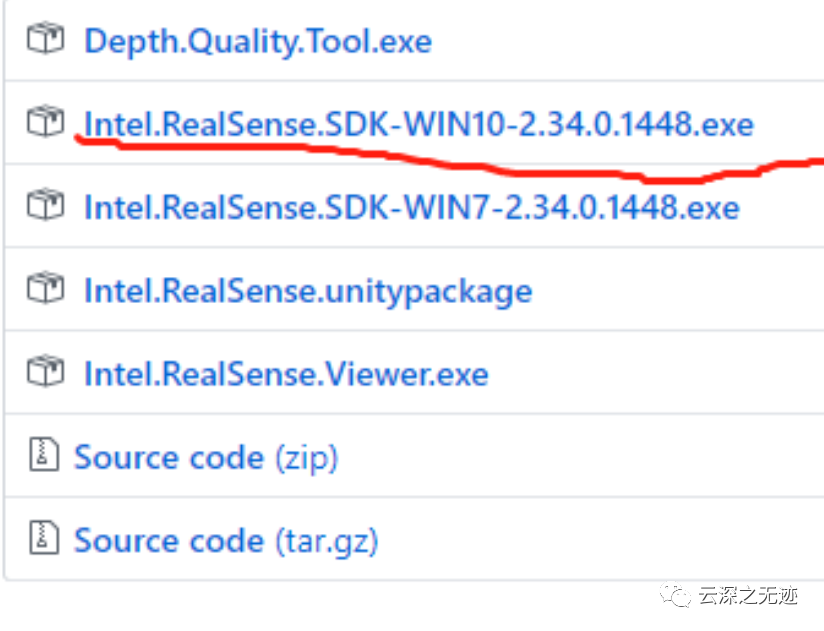

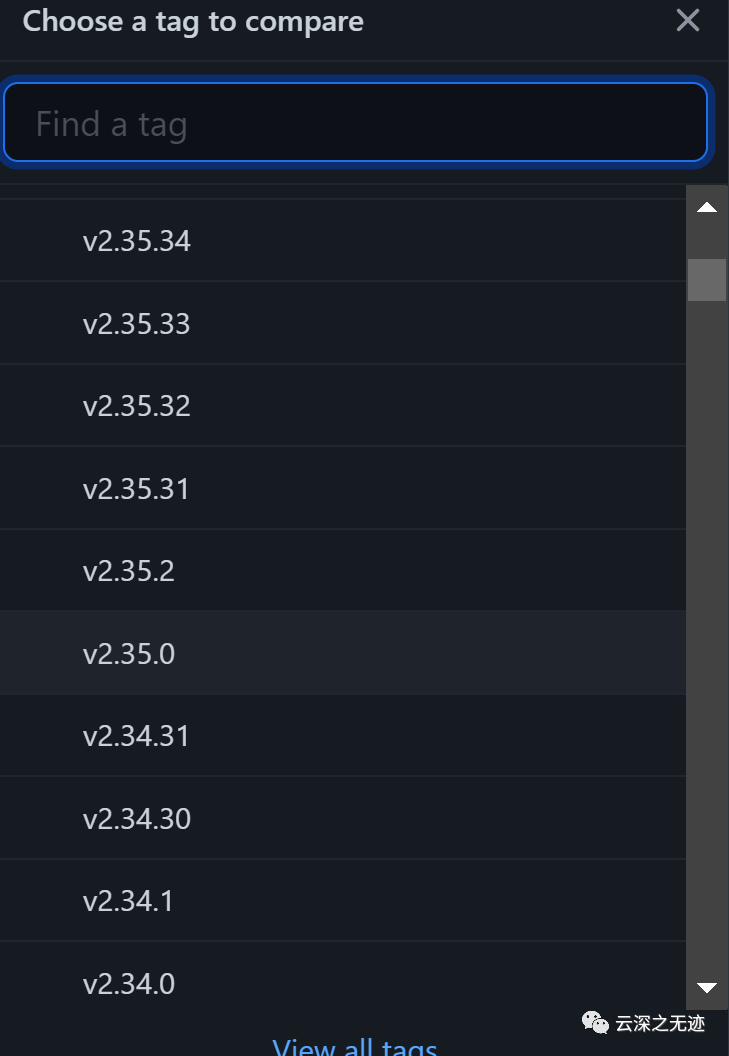

https://github.com/IntelRealSense/librealsense/releases/tag/v2.34.0

The R200 is not supported in this release

This article was written on 2020-04-24.

Unfortunately, it's September 21, 2020 now, so the version it uses is already quite old.

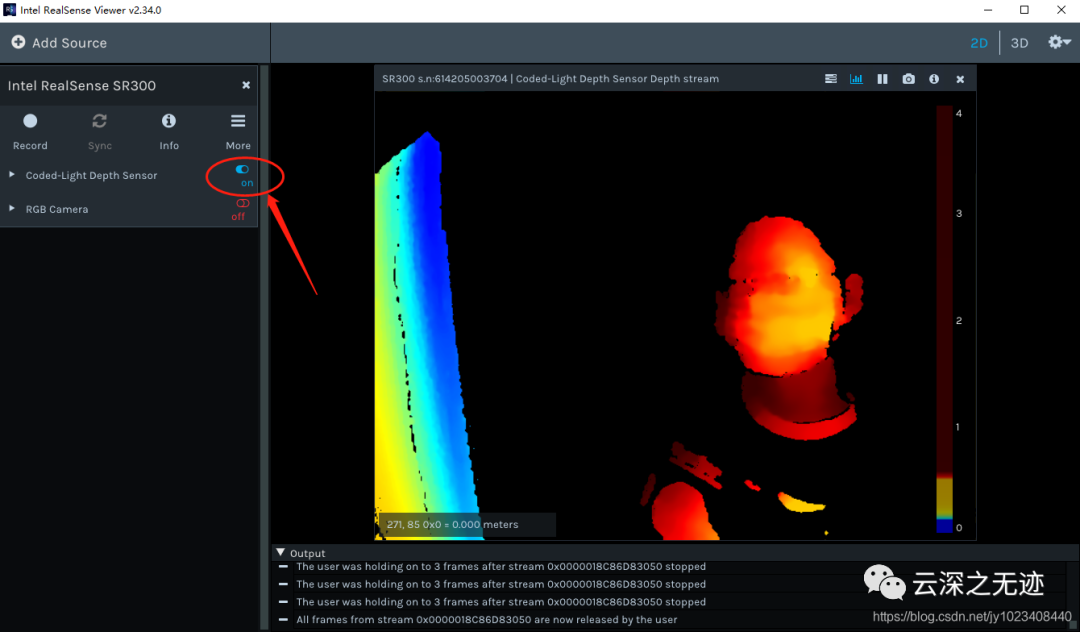

This is the screenshot from the article showing it working

```shell
pip install pyrealsense2
```

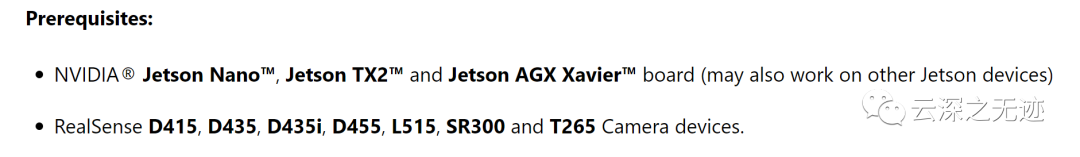

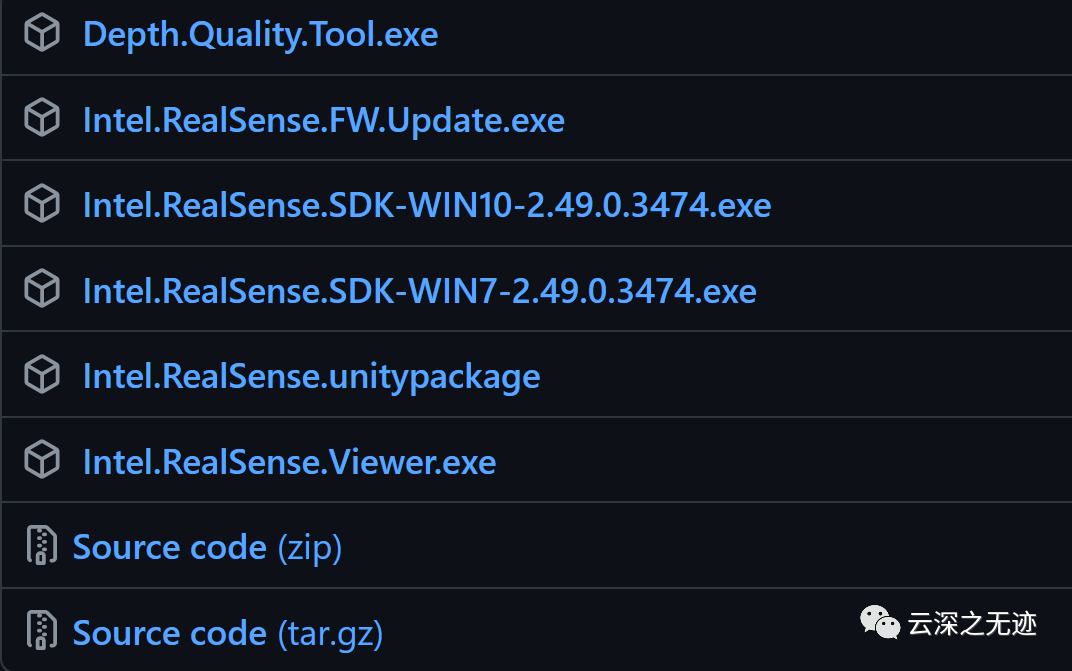

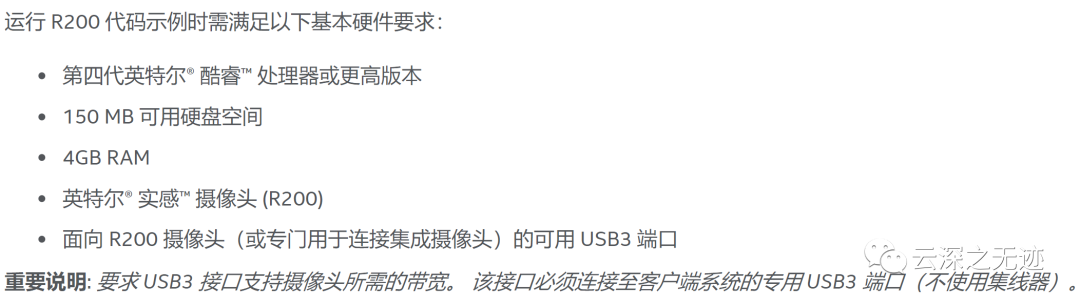

I found the minimum requirements for running the camera in this article:

https://www.intel.cn/content/www/cn/zh/support/products/92256/emerging-technologies/intel-realsense-technology/intel-realsense-cameras/intel-realsense-camera-r200.html

The v10 SDK can no longer be downloaded

https://www.intelrealsense.com/

This is now the recommended place to get the new SDK

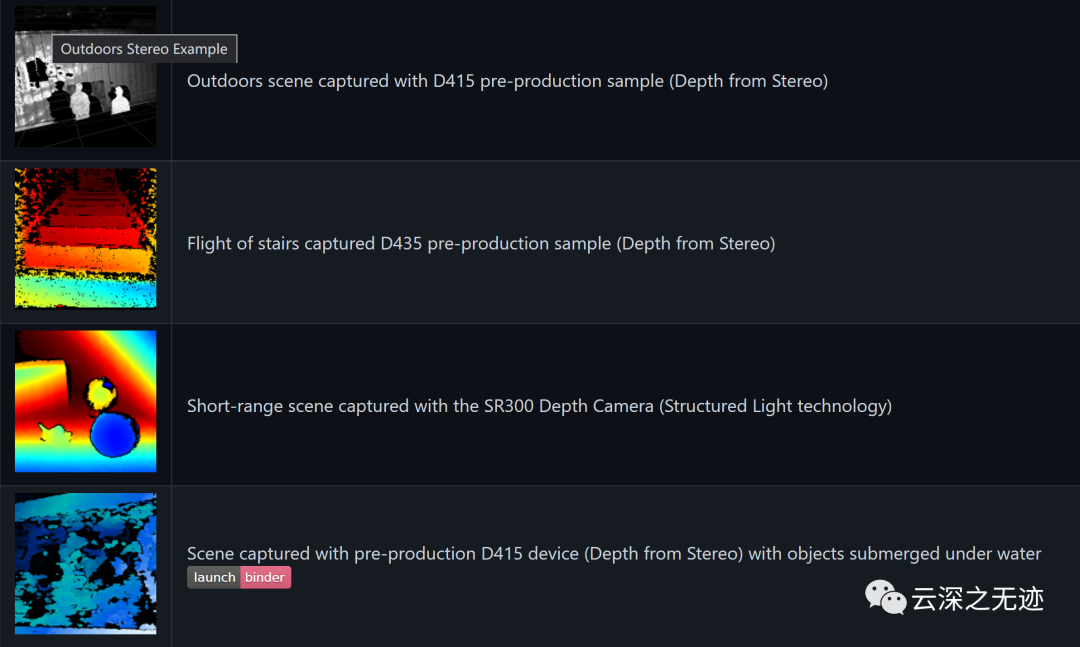

github.com/IntelRealSense/librealsense/blob/master/doc/sample-data.md

The SDK also supports recording depth streams, and this page offers sample recordings.

So you can look at all kinds of data without a camera

Let's try it

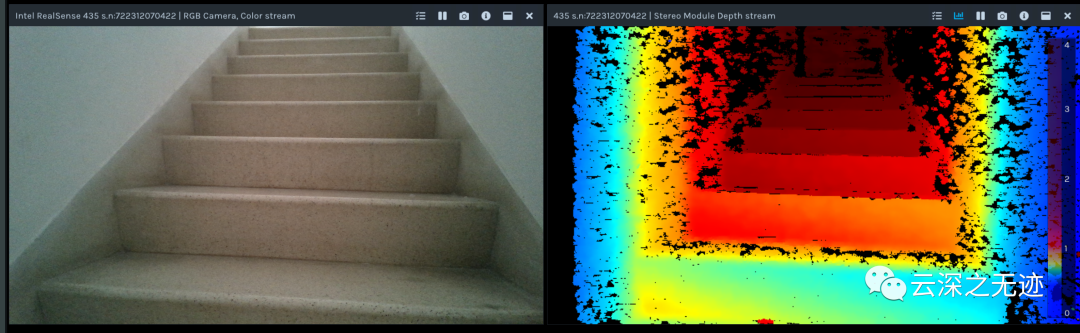

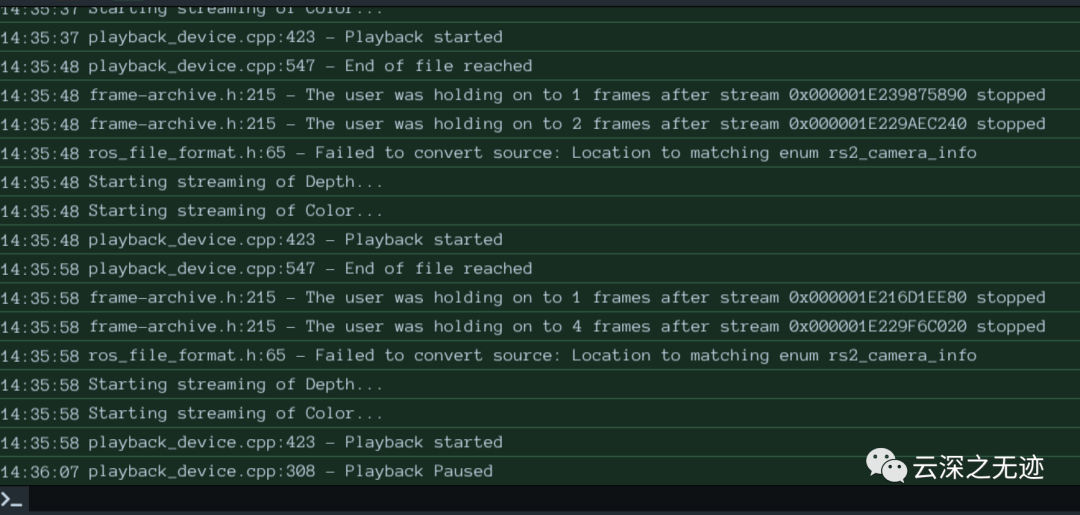

Open it in the RealSense Viewer

Both the 2D and 3D views work

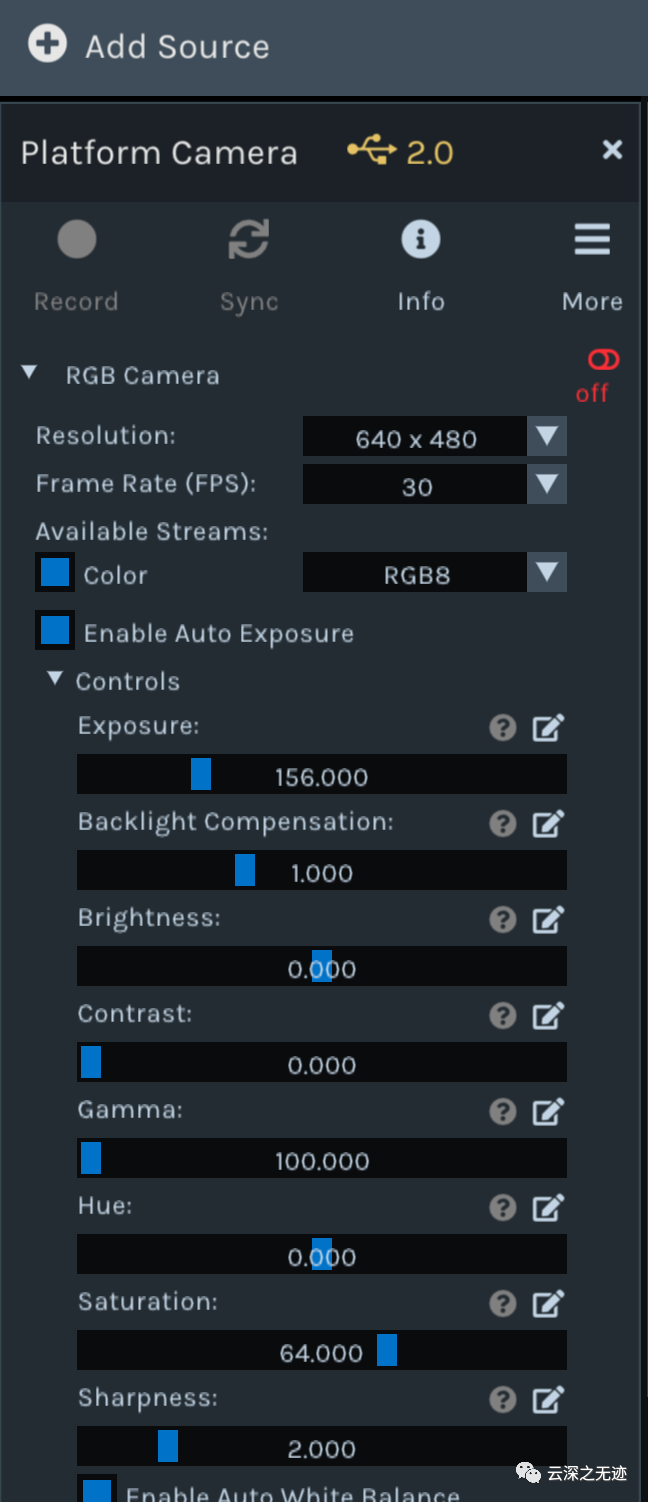

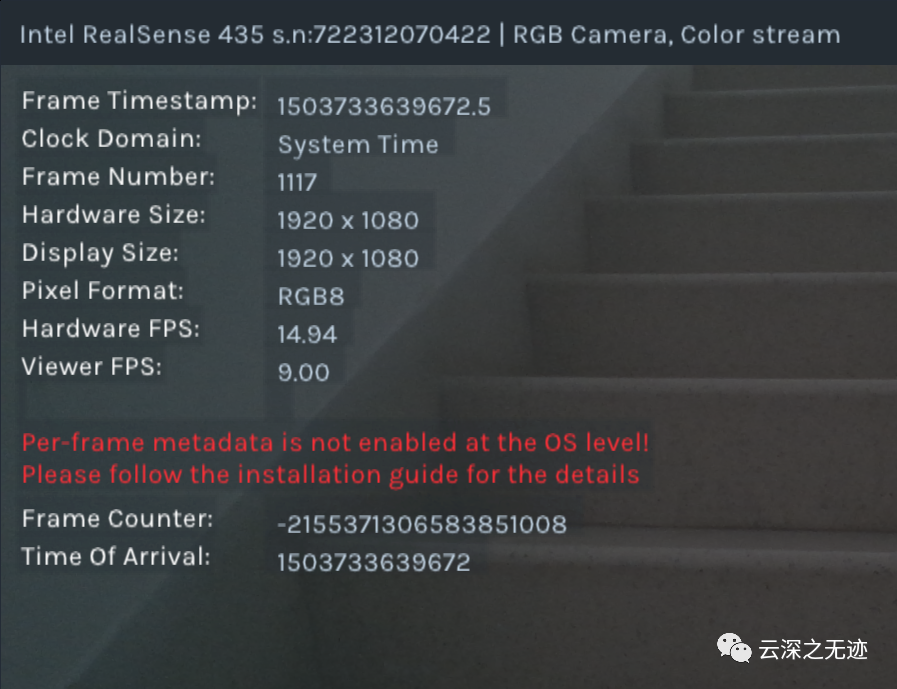

You can also see the stream parameters

Something to explore later

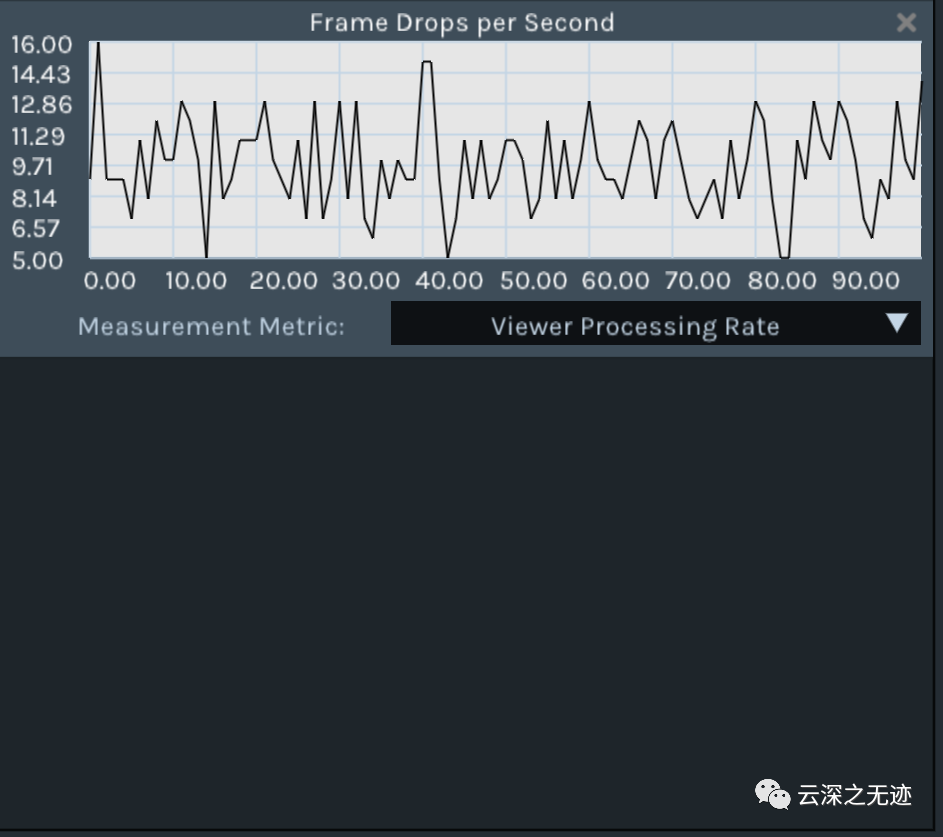

I accidentally changed this setting and don't know what it does

And the parameters

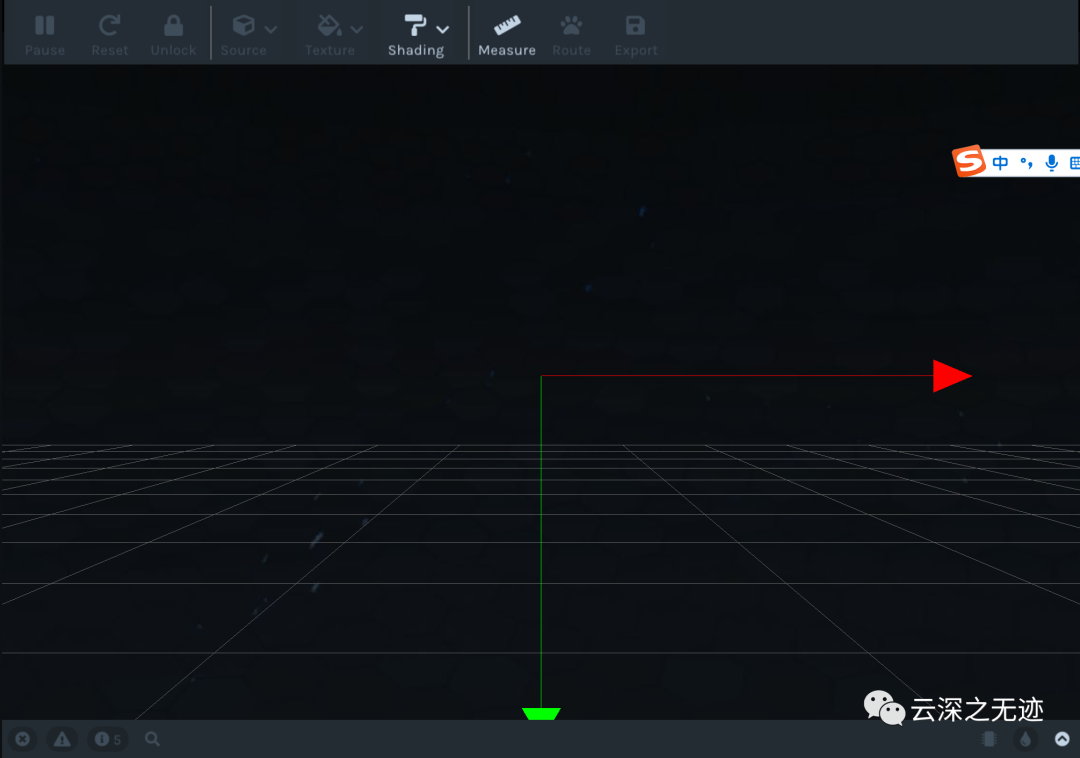

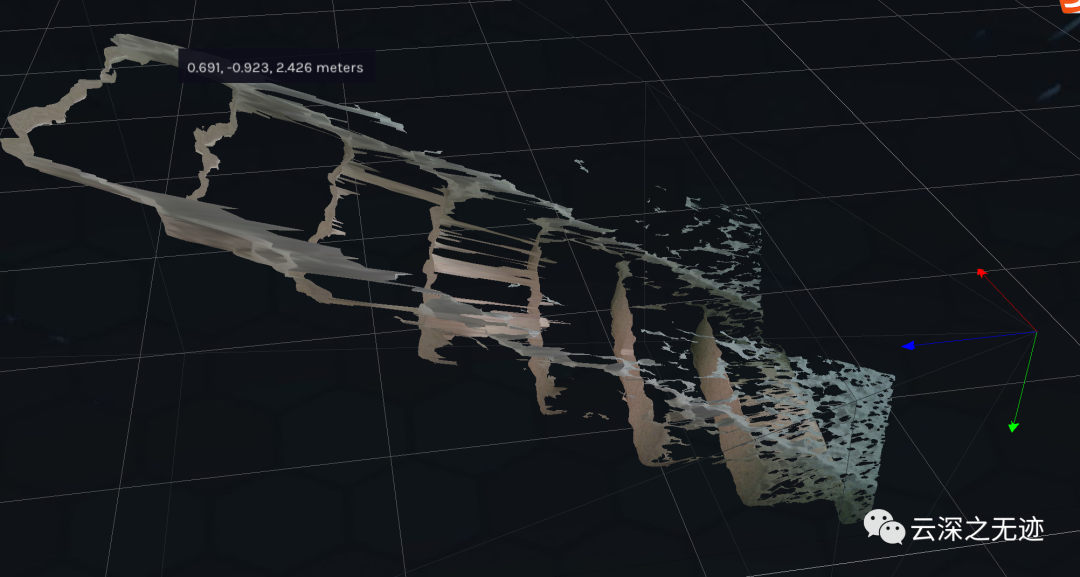

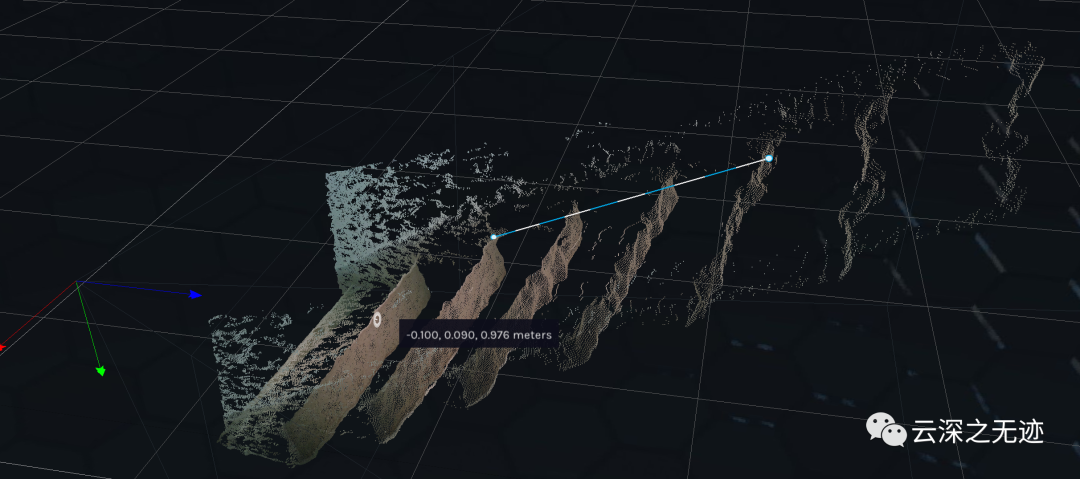

3D point cloud

Measurement view

Many people may not see the point of this thing. I think its value lies in capturing the real world~

Below is processing code in MATLAB and Python:

```matlab
% Make a pipeline object to manage streaming
pipe = realsense.pipeline();
% Define a point cloud object
pcl_obj = realsense.pointcloud();
% Start streaming on an arbitrary camera with default settings
pipe.start();
% Get frames. Discard the first few to give the camera time to settle
for i = 1:5
    frames = pipe.wait_for_frames();
end
% Select the depth frame
depth = frames.get_depth_frame();
% Get point cloud points without color
pnts = pcl_obj.calculate(depth);
vertices = pnts.get_vertices();
% Optional: fill a MATLAB pointCloud object
pCloud = pointCloud(vertices);
% Show the point cloud
pcshow(pCloud);
pipe.stop();
```

This only gets the point cloud (no color).

This code works with these products.

The R200 should be covered too.

In fact, the difference between new and old products is performance; the software stack is universal.
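Conceptually, the `calculate(depth)` step in the MATLAB code turns each depth pixel into a 3D vertex using the camera's intrinsics. Below is a minimal NumPy sketch of that idea, assuming an ideal pinhole camera with no lens distortion; the function name, the intrinsics (`fx`, `fy`, `cx`, `cy`) and the `depth_scale` value are illustrative assumptions, not the SDK's actual implementation.

```python
import numpy as np

def deproject_depth_image(depth, fx, fy, cx, cy, depth_scale):
    """Turn an HxW depth image (raw z16 units) into an Nx3 array of XYZ points in meters.

    Hypothetical helper: assumes an ideal pinhole model with no lens distortion.
    """
    h, w = depth.shape
    # Pixel grid: u runs along columns, v along rows
    u, v = np.meshgrid(np.arange(w), np.arange(h))
    z = depth.astype(np.float64) * depth_scale  # raw units -> meters
    x = (u - cx) * z / fx
    y = (v - cy) * z / fy
    points = np.stack((x, y, z), axis=-1).reshape(-1, 3)
    # Drop pixels with no depth (value 0); they carry no 3D information
    return points[points[:, 2] > 0]

if __name__ == "__main__":
    # Made-up 2x2 depth image and intrinsics, for illustration only
    depth = np.array([[1000, 0], [2000, 1000]], dtype=np.uint16)
    print(deproject_depth_image(depth, fx=500.0, fy=500.0, cx=0.5, cy=0.5, depth_scale=0.001))
```

Dropping zero-depth pixels mirrors the usual convention that 0 means "no measurement"; a real pipeline would also undo lens distortion.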

MATLAB:

```matlab
% Make Pipeline object to manage streaming
pipe = realsense.pipeline();
% define point cloud object
pcl_obj = realsense.pointcloud();
% define colorizer to give point cloud color
colorizer = realsense.colorizer();
% Start streaming on an arbitrary camera with default settings
profile = pipe.start();
% Get frames. We discard the first couple to allow
% the camera time to settle
for i = 1:5
    frames = pipe.wait_for_frames();
end
% Stop streaming
pipe.stop();
% Select depth and color frames
depth = frames.get_depth_frame();
color = frames.get_color_frame();
% map point cloud to color; do this before calculate so the
% texture coordinates refer to this color frame
pcl_obj.map_to(color);
% compute point cloud points (with texture coordinates)
points = pcl_obj.calculate(depth);
% get vertices (nx3)
vertices = points.get_vertices();
% get texture coordinates (nx2)
tex_coords = points.get_texture_coordinates();
```
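The MATLAB code ends with an nx2 array of texture coordinates. A minimal sketch of how such coordinates can be turned into one color per vertex, assuming they are normalized (u, v) values in [0, 1] and using nearest-neighbor lookup; `sample_vertex_colors` is a hypothetical helper, not part of the SDK.

```python
import numpy as np

def sample_vertex_colors(tex_coords, color_image):
    """Look up one color per vertex from Nx2 normalized (u, v) texture coordinates.

    Hypothetical helper: nearest-neighbor sampling; coordinates outside [0, 1)
    are clamped to the image border.
    """
    h, w = color_image.shape[:2]
    cols = np.clip((tex_coords[:, 0] * w).astype(int), 0, w - 1)
    rows = np.clip((tex_coords[:, 1] * h).astype(int), 0, h - 1)
    return color_image[rows, cols]

if __name__ == "__main__":
    # Tiny 2x2 RGB image: red, green / blue, white
    img = np.array([[[255, 0, 0], [0, 255, 0]],
                    [[0, 0, 255], [255, 255, 255]]], dtype=np.uint8)
    uv = np.array([[0.1, 0.1], [0.9, 0.1], [0.1, 0.9], [1.5, -0.2]])
    print(sample_vertex_colors(uv, img))
```

Clamping is the simplest choice; points that fall outside the color image could instead be masked out so they don't pick up border colors.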

```python
import pyrealsense2 as rs
import numpy as np
import cv2

if __name__ == "__main__":
    # Configure depth and color streams
    pipeline = rs.pipeline()
    config = rs.config()
    config.enable_stream(rs.stream.depth, 640, 480, rs.format.z16, 30)
    config.enable_stream(rs.stream.color, 640, 480, rs.format.bgr8, 30)

    # Start streaming
    pipeline.start(config)

    try:
        while True:
            # Wait for a coherent pair of frames: depth and color
            frames = pipeline.wait_for_frames()
            depth_frame = frames.get_depth_frame()
            color_frame = frames.get_color_frame()
            if not depth_frame or not color_frame:
                continue

            # Convert images to numpy arrays
            depth_image = np.asanyarray(depth_frame.get_data())
            color_image = np.asanyarray(color_frame.get_data())

            # Apply colormap on depth image (image must be converted to 8-bit per pixel first)
            depth_colormap = cv2.applyColorMap(cv2.convertScaleAbs(depth_image, alpha=0.03), cv2.COLORMAP_JET)

            # Stack both images horizontally
            images = np.hstack((color_image, depth_colormap))

            # Show images
            cv2.namedWindow('RealSense', cv2.WINDOW_AUTOSIZE)
            cv2.imshow('RealSense', images)
            key = cv2.waitKey(1)

            # Press esc or 'q' to close the image window
            if key & 0xFF == ord('q') or key == 27:
                cv2.destroyAllWindows()
                break
    finally:
        # Stop streaming
        pipeline.stop()
```
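The `cv2.convertScaleAbs(depth_image, alpha=0.03)` call squeezes 16-bit depth into 8 bits before the colormap is applied. A rough NumPy equivalent is sketched below to show what `alpha` does; the 0.001 m-per-unit `depth_scale` is an assumption (check the actual scale reported for your device), and `saturation_distance_m` is a hypothetical helper.

```python
import numpy as np

def depth_to_8bit(depth_image, alpha=0.03):
    """Approximate NumPy equivalent of cv2.convertScaleAbs(depth_image, alpha=alpha)
    with beta=0: scale, take the absolute value, round, and saturate to 0-255."""
    scaled = np.abs(depth_image.astype(np.float64) * alpha)
    return np.clip(np.rint(scaled), 0, 255).astype(np.uint8)

def saturation_distance_m(alpha=0.03, depth_scale=0.001):
    """Depth in meters at which the 8-bit value reaches 255 and the colormap stops changing.
    depth_scale (meters per raw unit) is an assumed value."""
    return 255 / alpha * depth_scale

if __name__ == "__main__":
    raw = np.array([0, 1000, 5000, 10000], dtype=np.uint16)
    print(depth_to_8bit(raw))
    print(saturation_distance_m())
```

With these assumptions everything beyond about 8.5 m gets the same color, so a larger `alpha` gives more contrast at short range and a smaller one spreads the colormap over a longer range.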

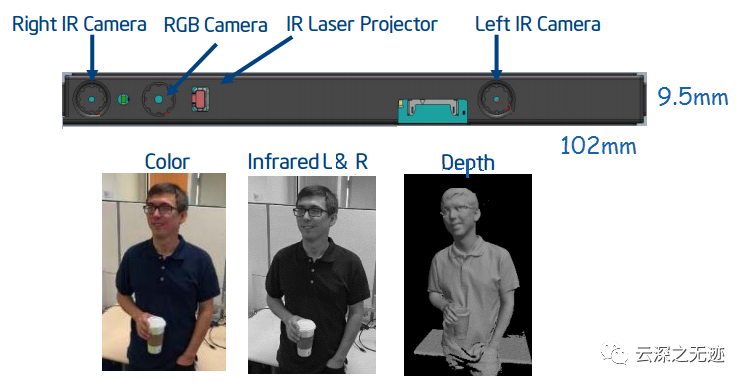

Three cameras

The R200 actually has 3 cameras, providing RGB (color) images plus stereo infrared images used to compute depth. With the help of a laser projector, the camera can perform 3D scanning for scene perception and enhanced photography. The indoor range is about 0.5-3.5 m, and the outdoor range can reach 10 m. Note: the range depends largely on the module and the lighting.

Unlike the Intel® RealSense™ F200 camera, the R200 faces the world, not the user. The RealSense SDK therefore focuses on the following R200 use cases.

In other words, the R200 is suitable for outdoor use. Of course, it can also be used indoors~

- Capture the 3D world, then edit, share, and print 3D objects.

- Enhance your photography. The Intel RealSense R200 includes 3D filters that allow relighting, refocusing, and background segmentation (removing/replacing the background).

- Add virtual content to a 3D capture of the real world. With a feature called "Scene Perception", you can add virtual objects to the captured real-world scene, because the R200 camera understands surfaces, objects, and motion, and can build the scene by estimating the camera's position/orientation within it.

These use cases rely on two functional areas of the R200 camera:

- Tracking/positioning: uses depth, RGB, and IMU data to estimate the camera's position and orientation in real time.

- (Does this mean it really has an IMU sensor?)

- 3D volume/surface reconstruction: builds a real-time digital representation of the 3D scene observed by the camera.

The most exciting thing about the R200 camera is its longer scanning range, enabled by a new method of measuring depth. The R200 includes a stereo camera pair and an RGB camera. Because it does not rely as heavily on infrared, it can be used outdoors. The color camera provides images for people to look at, and the two depth cameras provide data for algorithms. In addition, if an IMU (inertial measurement unit) is built into the system, the SDK can account for gravity when placing objects into the scene. However, the text says "if", so I don't know whether there is an IMU.

With stereo imaging, triangulation can be used to calculate depth/3D from the disparity (pixel offset) between the two separate cameras. Note that depth is measured relative to a plane parallel to the camera, not as an absolute distance from the camera. The SDK contains a utility to help with plane detection. Make sure the camera can see the horizon during initialization; this is crucial for orienting the scan target.
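For a rectified stereo pair, the standard triangulation relation is Z = f * B / d, where f is the focal length in pixels, B the baseline between the two cameras in meters, and d the disparity in pixels. The sketch below shows that relation and the difference between Z depth (distance to the parallel plane) and the straight-line range to the point. The focal length of 600 px and baseline of 0.07 m are placeholder values, not confirmed R200 specifications.

```python
import math

def depth_from_disparity(disparity_px, focal_px, baseline_m):
    """Z = f * B / d for a rectified stereo pair.
    Returns None for zero disparity (no match, or infinitely far away)."""
    if disparity_px <= 0:
        return None
    return focal_px * baseline_m / disparity_px

def range_from_depth(z_m, u, v, fx, fy, cx, cy):
    """Straight-line distance from the camera center to the point whose Z depth
    is z_m at pixel (u, v), under a pinhole model."""
    x = (u - cx) / fx
    y = (v - cy) / fy
    return z_m * math.sqrt(x * x + y * y + 1.0)

if __name__ == "__main__":
    # Placeholder numbers, for illustration only
    f, b = 600.0, 0.07
    for d in (42, 21):
        print(d, "px ->", depth_from_disparity(d, f, b), "m")
    # A point at Z = 1 m seen one focal length off-center is farther than 1 m away
    print(range_from_depth(1.0, 920, 240, 600, 600, 320, 240))
```

With these placeholder numbers, 42 px of disparity gives 1.0 m and 21 px gives 2.0 m: halving the disparity doubles the depth, which is why depth precision drops quickly with distance.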

The R200 can track the camera's movement in 3D space with 6 DOF (degrees of freedom): 3 translational (forward/back, up/down, left/right) and 3 rotational (yaw, pitch, roll).
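A 6-DOF pose is commonly stored as a 4x4 homogeneous transform: a 3x3 rotation built from yaw, pitch, and roll, plus a 3-vector translation. The sketch below assumes the Z-Y-X convention (yaw about Z, pitch about Y, roll about X); that axis convention is an assumption, and the SDK may use a different one.

```python
import numpy as np

def rotation_from_ypr(yaw, pitch, roll):
    """3x3 rotation from yaw (about Z), pitch (about Y), roll (about X), in radians.
    Assumed convention: R = Rz(yaw) @ Ry(pitch) @ Rx(roll)."""
    cy, sy = np.cos(yaw), np.sin(yaw)
    cp, sp = np.cos(pitch), np.sin(pitch)
    cr, sr = np.cos(roll), np.sin(roll)
    rz = np.array([[cy, -sy, 0.0], [sy, cy, 0.0], [0.0, 0.0, 1.0]])
    ry = np.array([[cp, 0.0, sp], [0.0, 1.0, 0.0], [-sp, 0.0, cp]])
    rx = np.array([[1.0, 0.0, 0.0], [0.0, cr, -sr], [0.0, sr, cr]])
    return rz @ ry @ rx

def pose_matrix(tx, ty, tz, yaw, pitch, roll):
    """4x4 homogeneous transform: 3 translational + 3 rotational degrees of freedom."""
    pose = np.eye(4)
    pose[:3, :3] = rotation_from_ypr(yaw, pitch, roll)
    pose[:3, 3] = (tx, ty, tz)
    return pose

def transform_point(pose, p):
    """Map a 3D point from camera coordinates to world coordinates."""
    return (pose @ np.append(np.asarray(p, dtype=float), 1.0))[:3]

if __name__ == "__main__":
    # Camera moved 1 m along X and turned 90 degrees in yaw
    pose = pose_matrix(1.0, 0.0, 0.0, np.pi / 2, 0.0, 0.0)
    print(transform_point(pose, [1.0, 0.0, 0.0]))
```

Keeping rotation and translation in one matrix means chaining camera motions is just matrix multiplication.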

To get the best 3D scan results:

- Use a 2 m (cubic) field of view with at least 5000 pixels (640x480). For correct detection, use this chart of distance vs. minimum rectangular object size:
  - 30 cm: 4.5 cm x 3.5 cm
  - 100 cm: 16 cm x 11 cm
  - 180 cm: 28 cm x 21 cm

- Do not occlude more than 20% of the object.

- Move the camera, but keep the real-world objects in the scene as still as possible.

- Operate at 30 FPS or 60 FPS. A higher frame rate means smaller displacement between frames.

- Avoid plain, featureless surfaces. The infrared emitter projects a random, non-uniform light pattern to add texture to the scene, and the data is run through filters in the infrared band. In addition, RGB input is added to the stereo depth calculation.

- Move the camera at medium to slow speed; remember that shooting at 60 FPS means about 18M depth calculations per second.

- Give the camera time to initialize (progress is shown at the bottom left of the screen), which includes placing the target in the center of the green line.
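One way to read the minimum-size chart is that each listed object covers roughly the same number of depth pixels. The sketch below checks this with a pinhole model, assuming the depth camera's field of view is 59° horizontal by 46° vertical (reading the "70x59x46" figure given for the depth cameras as diagonal x horizontal x vertical) at 640x480. Both that reading and the "pixels covered by the object" interpretation of the 5000-pixel guideline are assumptions.

```python
import math

def object_pixel_area(width_m, height_m, distance_m, hfov_deg=59.0, vfov_deg=46.0,
                      image_w=640, image_h=480):
    """Approximate pixel count covered by a flat, camera-facing rectangle (pinhole model).
    FOV defaults are assumed values for the R200 depth camera."""
    # Size of the visible area at this distance
    view_w = 2 * distance_m * math.tan(math.radians(hfov_deg) / 2)
    view_h = 2 * distance_m * math.tan(math.radians(vfov_deg) / 2)
    return (width_m / view_w * image_w) * (height_m / view_h * image_h)

# Rows of the minimum-size chart: (distance, width, height), all in meters
CHART = [(0.30, 0.045, 0.035), (1.00, 0.16, 0.11), (1.80, 0.28, 0.21)]

if __name__ == "__main__":
    for d, w, h in CHART:
        print(f"{d:.2f} m: {w * 100:.1f} x {h * 100:.1f} cm -> {object_pixel_area(w, h, d):.0f} px")
```

Under these assumptions every row comes out at roughly 5,600 to 5,800 pixels, which is consistent with the "at least 5000 pixels" guideline.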

The color camera can deliver 32-bit RGBA at 1080p @30FPS, with fixed focus and a 16:9 aspect ratio. The RGB camera has a slightly larger FOV than the dual depth cameras, but that doesn't mean it is meant to be used as a standalone camera. Here we can see that the RGB images can be used even without the depth function. USB 3.0, too~

Look at these cameras

The dual depth cameras use fixed focus, a 4:3 aspect ratio, and a 70x59x46 degree field of view. The IR projector is a Class 1 laser in the 850 nm range. Available resolutions:

- @60FPS: depth 320x240; color 640x480
- @60FPS: depth 480x360; color 320x240 or 640x480
- @30FPS: depth 320x240; color 640x480, 1280x720 or 1920x1080
- @30FPS: depth 480x360; color 320x240, 640x480, 1280x720 or 1920x1080
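A quick way to compare these modes is the number of depth values produced per second (width x height x FPS). The sketch below just does that arithmetic for the four depth modes listed above.

```python
# Depth modes from the resolution list: (fps, width, height)
DEPTH_MODES = [(60, 320, 240), (60, 480, 360), (30, 320, 240), (30, 480, 360)]

def depth_points_per_second(fps, width, height):
    """Number of depth values the camera produces each second in a given mode."""
    return fps * width * height

if __name__ == "__main__":
    for fps, w, h in DEPTH_MODES:
        print(f"{w}x{h} @ {fps} FPS: {depth_points_per_second(fps, w, h):,} depth points/s")
```

The highest listed mode, 480x360 @60FPS, works out to 10,368,000 depth values per second (about 10.4 million).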

The RealSense SDK can map (project) depth onto color, and vice versa.
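Mapping depth to color boils down to three steps: deproject the depth pixel to a 3D point, move that point into the color camera's frame with the extrinsics (rotation + translation), then project it into the color image. This is the same idea as the `rs2_deproject_pixel_to_point` / `rs2_transform_point_to_point` / `rs2_project_point_to_pixel` helpers in SDK 2.0's `rsutil.h`. The sketch below ignores lens distortion, and all intrinsics and extrinsics in it are made-up values.

```python
import numpy as np

def deproject(pixel, depth_m, fx, fy, cx, cy):
    """Depth pixel (u, v) plus depth in meters -> 3D point in the depth camera's frame."""
    u, v = pixel
    return np.array([(u - cx) / fx * depth_m, (v - cy) / fy * depth_m, depth_m])

def project(point, fx, fy, cx, cy):
    """3D point in a camera's frame -> pixel (u, v) in that camera's image."""
    x, y, z = point
    return np.array([x / z * fx + cx, y / z * fy + cy])

def depth_pixel_to_color_pixel(pixel, depth_m, depth_intr, color_intr, rotation, translation):
    """Map one depth pixel into the color image: deproject, change frames, project.
    depth_intr / color_intr are (fx, fy, cx, cy); no distortion model (assumption)."""
    p_depth = deproject(pixel, depth_m, *depth_intr)
    p_color = rotation @ p_depth + translation  # extrinsics: depth frame -> color frame
    return project(p_color, *color_intr)

if __name__ == "__main__":
    intr = (600.0, 600.0, 320.0, 240.0)       # hypothetical intrinsics
    offset = np.array([0.025, 0.0, 0.0])      # hypothetical 2.5 cm sideways offset
    print(depth_pixel_to_color_pixel((320, 240), 1.0, intr, intr, np.eye(3), offset))
```

With these numbers, a 2.5 cm offset between the cameras shifts a pixel at 1 m depth by 15 px (0.025 x 600 / 1). The shift shrinks as depth grows, which is why the mapping needs the depth value and can't be a fixed image offset.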

The R200's power consumption ranges from 0-100 mW (idle) to 1.0-1.6 W (active), depending on the module used. It has a number of power-saving features, including the use of USB 3.