1 load balancing algorithm

Load balancing means distributing the load (work tasks) across multiple operating units, such as FTP servers, web servers, enterprise core application servers and other key servers, so that they complete the work together. Because multiple machines are involved, the question is how to distribute tasks among them, and that is the problem a load balancing algorithm solves.

2 polling (RoundRobin)

2.1 general

Polling (round robin) means lining up and taking turns, one by one. The time-slice rotation used in the earlier scheduling algorithm is a typical example of polling, but that implementation used an array and an index.

Here we instead hand-write a doubly linked list to implement request polling over the server list.
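For comparison, the array-and-index form of polling can be sketched roughly as follows. The class name `ArrayRoundRobin` and its methods are hypothetical and not part of the original listings, and the sketch assumes a fixed, non-empty server list.

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.List;
import java.util.concurrent.atomic.AtomicInteger;

// Hypothetical class: polling over a fixed server list by array index.
class ArrayRoundRobin {
    private final List<String> servers;
    // Request counter; AtomicInteger lets several threads call next() safely.
    private final AtomicInteger counter = new AtomicInteger(0);

    ArrayRoundRobin(List<String> servers) {
        this.servers = new ArrayList<>(servers); // defensive copy of the list
    }

    // Returns the server for the next request.
    // floorMod keeps the index non-negative even after the counter overflows.
    String next() {
        int index = Math.floorMod(counter.getAndIncrement(), servers.size());
        return servers.get(index);
    }

    public static void main(String[] args) {
        ArrayRoundRobin rr = new ArrayRoundRobin(Arrays.asList("192.168.0.1", "192.168.0.2"));
        for (int i = 0; i < 4; i++) {
            System.out.println(rr.next()); // alternates between the two addresses
        }
    }
}
```

The index form is compact, but adding or removing a machine means rebuilding or shifting the array, which is the cost the linked-list version avoids.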

2.2 realization

package com.oldlu.balance;

public class RR {

class Server{

Server prev;

Server next;

String name;

public Server(String name){

this.name = name;

}

}

//Current service node

Server current;

//Initialize the polling class. Multiple server IPs are separated by commas

public RR(String serverName){

System.out.println("init server list : "+serverName);

String[] names = serverName.split(",");

for (int i = 0; i < names.length; i++) {

if (current == null){

//If the current server is empty, this is the first machine, so current points to the newly created server

this.current = new Server(names[i]);

//At the same time, the front and back of the server point to itself.

current.prev = current;

current.next = current;

}else {

//Otherwise, it indicates that there is already a machine, and it shall be treated as a new one.

addServer(names[i]);

}

}

}

//Add machine

void addServer(String serverName){

System.out.println("add server : "+serverName);

Server server = new Server(serverName);

Server next = this.current.next;

//Insert a new node after the current node

this.current.next = server;

server.prev = this.current;

//Modify the prev pointer of the next node

server.next = next;

next.prev=server;

}

//Remove the current server and link its previous and next nodes directly to each other

//The removed node will be reclaimed by the garbage collector

void remove(){

System.out.println("remove current = "+current.name);

this.current.prev.next = this.current.next;

this.current.next.prev = this.current.prev;

this.current = current.next;

}

//Request. It can be processed by the current node

//Note: after processing, the current pointer moves back

void request(){

System.out.println(this.current.name);

this.current = current.next;

}

public static void main(String[] args) throws InterruptedException {

//Initialize both machines

RR rr = new RR("192.168.0.1,192.168.0.2");

//Start an extra thread to simulate non-stop requests

new Thread(new Runnable() {

@Override

public void run() {

while (true) {

try {

Thread.sleep(500);

} catch (InterruptedException e) {

e.printStackTrace();

}

rr.request();

}

}

}).start();

//After 3s, machine 3 is added to the list

Thread.sleep(3000);

rr.addServer("192.168.0.3");

//After another 3s, the current service node is removed

Thread.sleep(3000);

rr.remove();

}

}

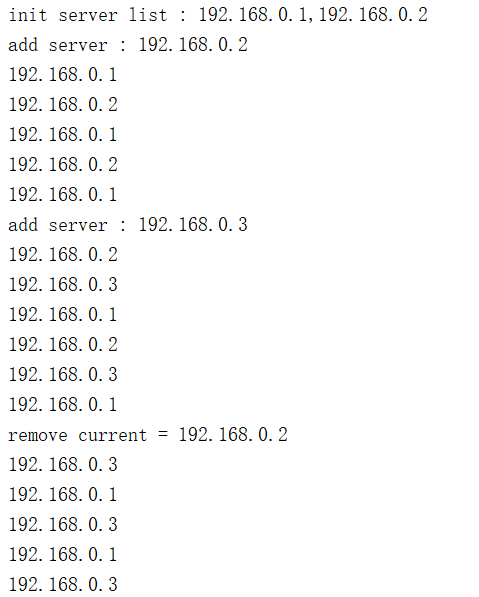

2.3 result analysis

After initialization, only 1 and 2 are polled in turn

After 3 joins, 1, 2 and 3 are polled in turn

After 2 is removed, only 1 and 3 are still polled

2.4 advantages and disadvantages

Advantages: the implementation is simple, machines can be added to or removed from the list freely, and each operation has O(1) time complexity

Disadvantages: nodes cannot be given preferential treatment, so differences in their processing capacity cannot be taken into account

3 Random

3.1 general

Randomly select one entry from the list of available services to handle the request. Because this is a random-access scenario, an array is a good fit: reading by a random index is efficient.

3.2 realization

Define an array and use a random number smaller than its length as the index. It is that simple.

package com.oldlu.balance;

import java.util.ArrayList;

import java.util.Random;

public class Rand {

ArrayList<String> ips ;

public Rand(String nodeNames){

System.out.println("init list : "+nodeNames);

String[] nodes = nodeNames.split(",");

//Initialize the server list, and the length takes the number of machines

ips = new ArrayList<>(nodes.length);

for (String node : nodes) {

ips.add(node);

}

}

//request

void request(){

//Index: a random number; note that the bound is the size of the list

int i = new Random().nextInt(ips.size());

System.out.println(ips.get(i));

}

//Add nodes. Note that adding nodes will cause the internal array to expand

//A certain space can be reserved during initialization according to the actual situation

void addnode(String nodeName){

System.out.println("add node : "+nodeName);

ips.add(nodeName);

}

//remove

void remove(String nodeName){

System.out.println("remove node : "+nodeName);

ips.remove(nodeName);

}

public static void main(String[] args) throws InterruptedException {

Rand rd = new Rand("192.168.0.1,192.168.0.2");

//Start an extra thread to simulate non-stop requests

new Thread(new Runnable() {

@Override

public void run() {

while (true) {

try {

Thread.sleep(500);

} catch (InterruptedException e) {

e.printStackTrace();

}

rd.request();

}

}

}).start();

//After 3s, machine 3 is added to the list

Thread.sleep(3000);

rd.addnode("192.168.0.3");

//After another 3s, node 192.168.0.2 is removed

Thread.sleep(3000);

rd.remove("192.168.0.2");

}

}

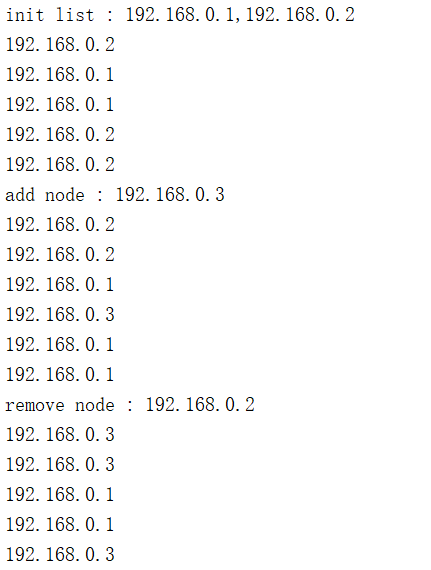

3.3 result analysis

After initialization there are only 1 and 2. They are not polled in order but appear at random

3 then joins the service node list

After 2 is removed, only 1 and 3 are left, still in random order

4 source address Hash (Hash)

4.1 General

Compute a hash of the client's ip address so that the same key is always routed to the same machine. This is common in distributed cluster environments, for routing the requests of logged-in users and for session persistence.

4.2 realization

A HashMap can be used to map a request key to its node, with O(1) lookup time. First fix an algorithm that maps each request to a key. For example, take the source ip of the request modulo the number of machines as the key (the listing below uses that remainder directly as an index into a list):

package com.oldlu.balance;

import java.util.ArrayList;

import java.util.Random;

public class Hash {

ArrayList<String> ips ;

public Hash(String nodeNames){

System.out.println("init list : "+nodeNames);

String[] nodes = nodeNames.split(",");

//Initialize the server list, and the length takes the number of machines

ips = new ArrayList<>(nodes.length);

for (String node : nodes) {

ips.add(node);

}

}

//Add a node. Note that adding a node causes existing requests to be re-hashed to different machines. Think about why!

//This is a problem! Consistent hashing will be discussed in detail

void addnode(String nodeName){

System.out.println("add node : "+nodeName);

ips.add(nodeName);

}

//remove

void remove(String nodeName){

System.out.println("remove node : "+nodeName);

ips.remove(nodeName);

}

//The algorithm mapped to the key takes the remainder as the subscript

private int hash(String ip){

int last = Integer.valueOf(ip.substring(ip.lastIndexOf(".")+1,ip.length()));

return last % ips.size();

}

//request

//Note that this is related to the visiting ip. A parameter is used to represent the current visiting ip

void request(String ip){

//subscript

int i = hash(ip);

System.out.println(ip+"-->"+ips.get(i));

}

public static void main(String[] args) {

Hash hash = new Hash("192.168.0.1,192.168.0.2");

for (int i = 1; i < 10; i++) {

//Simulated source ip of the request

String ip = "192.168.1."+ i;

hash.request(ip);

}

hash.addnode("192.168.0.3");

for (int i = 1; i < 10; i++) {

//Simulated source ip of the request

String ip = "192.168.1."+ i;

hash.request(ip);

}

}

}

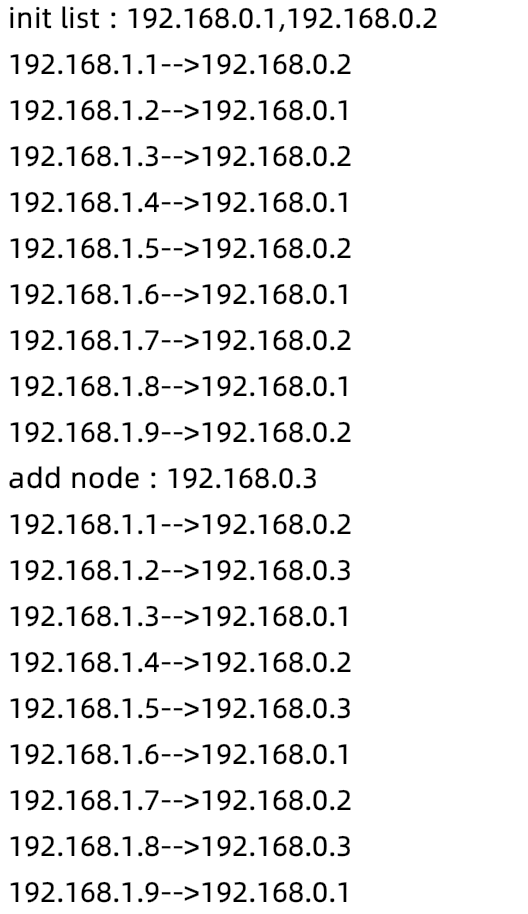

4.3 result analysis

After initialization there are only 1 and 2. The index is the last octet of the source ip modulo the number of machines. Across repeated runs the responding machine stays the same, which achieves session persistence

After 3 is added, requests are re-hashed and the distribution across machines changes

After 2 is removed, requests that originally hashed to 2 are relocated to 3
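The re-hash problem is what consistent hashing is meant to limit. As a rough preview, a hash ring can be sketched with a `TreeMap`. This is only an illustrative sketch: the class `ConsistentHashRing`, the FNV-1a style hash and the use of virtual nodes are assumptions made here, not code from the original article.

```java
import java.util.Map;
import java.util.TreeMap;

// Hypothetical sketch of a consistent-hash ring with virtual nodes.
class ConsistentHashRing {
    private final TreeMap<Integer, String> ring = new TreeMap<>();
    private final int virtualNodes;

    ConsistentHashRing(int virtualNodes) {
        this.virtualNodes = virtualNodes;
    }

    // FNV-1a style hash, masked to be non-negative. Chosen only for illustration.
    private static int hash(String key) {
        int h = 0x811C9DC5;
        for (int i = 0; i < key.length(); i++) {
            h ^= key.charAt(i);
            h *= 16777619;
        }
        return h & 0x7fffffff;
    }

    // Places several points on the ring for one machine.
    void addNode(String node) {
        for (int v = 0; v < virtualNodes; v++) {
            ring.putIfAbsent(hash(node + "#" + v), node);
        }
    }

    // Removes only the points that belong to this machine.
    void removeNode(String node) {
        for (int v = 0; v < virtualNodes; v++) {
            ring.remove(hash(node + "#" + v), node);
        }
    }

    // Routes to the first point at or after the key's hash, wrapping around the ring.
    String route(String clientIp) {
        if (ring.isEmpty()) {
            throw new IllegalStateException("no nodes on the ring");
        }
        Map.Entry<Integer, String> entry = ring.ceilingEntry(hash(clientIp));
        return (entry != null ? entry : ring.firstEntry()).getValue();
    }

    public static void main(String[] args) {
        ConsistentHashRing ring = new ConsistentHashRing(50);
        ring.addNode("192.168.0.1");
        ring.addNode("192.168.0.2");
        for (int i = 1; i < 10; i++) {
            String ip = "192.168.1." + i;
            System.out.println(ip + "-->" + ring.route(ip));
        }
    }
}
```

With this structure, adding a machine only moves the keys that now fall on the new machine's points; every other key keeps its previous machine, unlike the modulo approach above.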

5 weighted polling (WRR)

5.1 general

WeightRoundRobin (WRR): plain polling is just mechanical rotation. Weighted polling makes up for the drawback that all machines are treated equally: on top of polling, each machine is assigned a weight at initialization.

5.2 realization

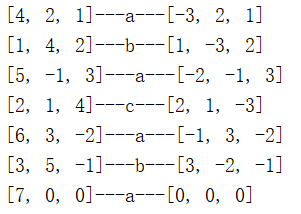

Maintain a linked list in which each machine occupies a number of slots proportional to its weight. Machines with larger weights then naturally get picked more often during polling.

Example: the weights of three machines a, b and c are 4, 2 and 1 respectively. After expansion the list is a, a, a, a, b, b, c. Each request takes the next node from the list in turn, and after the last one it starts again from the beginning. But there is a problem: the distribution is not smooth enough, and requests pile up on the same machine in runs.

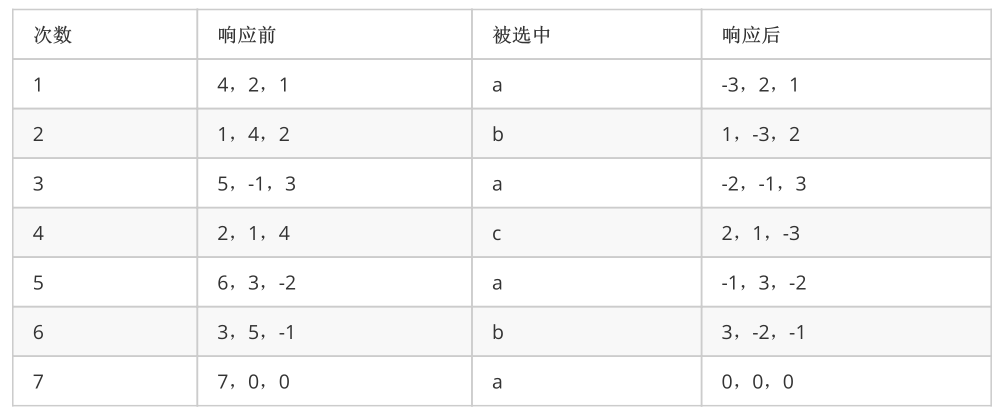
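The expanded-list approach just described can be sketched as follows. The class name `NaiveWeightedRoundRobin` is hypothetical, and the `a#4,b#2,c#1` input format is borrowed from the article's later listing purely for convenience.

```java
import java.util.ArrayList;
import java.util.List;

// Hypothetical class: weighted polling by repeating each node `weight` times.
class NaiveWeightedRoundRobin {
    private final List<String> slots = new ArrayList<>();
    private int index = 0;

    // Assumed input format: name#weight pairs separated by commas, e.g. "a#4,b#2,c#1".
    NaiveWeightedRoundRobin(String nodes) {
        for (String n : nodes.split(",")) {
            String[] parts = n.split("#");
            int weight = Integer.parseInt(parts[1]);
            for (int i = 0; i < weight; i++) {
                slots.add(parts[0]); // one slot per unit of weight
            }
        }
    }

    // Takes the next slot and wraps around at the end of the list.
    String next() {
        String node = slots.get(index);
        index = (index + 1) % slots.size();
        return node;
    }

    public static void main(String[] args) {
        NaiveWeightedRoundRobin wrr = new NaiveWeightedRoundRobin("a#4,b#2,c#1");
        StringBuilder sb = new StringBuilder();
        for (int i = 0; i < 7; i++) {
            sb.append(wrr.next());
        }
        System.out.println(sb); // prints "aaaabbc": four consecutive requests hit a
    }
}
```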

Solution: to smooth out the distribution, the nginx source code uses a smooth weighted polling algorithm. The rules are as follows:

Each node has two weights, weight and currentWeight. weight always holds the configured value, while currentWeight keeps changing. The rule is: before each selection, add weight to currentWeight for every node; select the node with the largest currentWeight to respond; after it responds, subtract total (the sum of all weights) from its currentWeight.

Statistics: with a=4, b=2, c=1 the resulting distribution is smooth and balanced

package com.oldlu.balance;

import java.util.ArrayList;

public class WRR {

class Node{

int weight,currentWeight;

String name;

public Node(String name,int weight){

this.name = name;

this.weight = weight;

this.currentWeight = 0;

}

@Override

public String toString() {

return String.valueOf(currentWeight);

}

}

//List of all nodes

ArrayList<Node> list ;

//Total weight

int total;

//Initialization node list, format: a#4,b#2,c#1

public WRR(String nodes){

String[] ns = nodes.split(",");

list = new ArrayList<>(ns.length);

for (String n : ns) {

String[] n1 = n.split("#");

int weight = Integer.valueOf(n1[1]);

list.add(new Node(n1[0],weight));

total += weight;

}

}

//Get current node

Node getCurrent(){

//Before selection, every node's current weight is increased by its configured weight

for (Node node : list) {

node.currentWeight += node.weight;

}

//Traversal, take the return with the highest weight

Node current = list.get(0);

int i = 0;

for (Node node : list) {

if (node.currentWeight > i){

i = node.currentWeight;

current = node;

}

}

return current;

}

//response

void request(){

//Get current node

Node node = this.getCurrent();

//The first column is the current before execution

System.out.print(list.toString()+"---");

//In the second column, the selected node starts to respond

System.out.print(node.name+"---");

//After response, current subtracts total

node.currentWeight -= total;

//The third column is current after execution

System.out.println(list);

}

public static void main(String[] args) {

WRR wrr = new WRR("a#4,b#2,c#1");

//Execute the request seven times and see the result

for (int i = 0; i < 7; i++) {

wrr.request();

}

}

}

5.3 result analysis

The output matches the smooth distribution described above
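To make the expected sequence concrete: applying the rule by hand to a=4, b=2, c=1 gives the picks a, b, a, c, a, b, a, after which every current weight is back to 0. The compact sketch below reproduces that trace. The class `SmoothWrrTrace` and its array-based signature are hypothetical and exist only for this illustration.

```java
// Hypothetical helper: returns the picks of smooth weighted polling as a string.
class SmoothWrrTrace {

    // names[i] has weight weights[i]; names are assumed to be single characters here.
    static String picks(String[] names, int[] weights, int rounds) {
        int total = 0;
        for (int w : weights) {
            total += w;
        }
        int[] current = new int[weights.length]; // all current weights start at 0
        StringBuilder out = new StringBuilder();
        for (int r = 0; r < rounds; r++) {
            int best = 0;
            for (int i = 0; i < weights.length; i++) {
                current[i] += weights[i];          // step 1: current += weight
                if (current[i] > current[best]) {  // step 2: keep the largest (first wins ties)
                    best = i;
                }
            }
            current[best] -= total;                // step 3: winner's current -= total
            out.append(names[best]);
        }
        return out.toString();
    }

    public static void main(String[] args) {
        String seq = picks(new String[]{"a", "b", "c"}, new int[]{4, 2, 1}, 7);
        System.out.println(seq); // prints "abacaba"
    }
}
```

Compared with the a, a, a, a, b, b, c order of the expanded list, the same 4:2:1 ratio is kept but no machine receives more than one request in a row.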

6 weighted random (WR)

6.1 general

WeightRandom (WR): machines are still picked at random, but each carries a weight, and the probability of being selected differs according to that weight. Seen this way, plain random is the special case where all weights are equal.

6.2 realization

The design idea is the same as before: generate a number of entries per node according to its weight, put them in a list, and pick one at random. The data structure here is mainly read by random index, so an array is preferable. As with plain random, selection is a random index into the array; the difference is that each machine has one entry in plain random and several entries after weighting.

package com.oldlu.balance;

import java.util.ArrayList;

import java.util.Random;

public class WR {

//List of all nodes

ArrayList<String> list ;

//Initialize node list

public WR(String nodes){

String[] ns = nodes.split(",");

list = new ArrayList<>();

for (String n : ns) {

String[] n1 = n.split("#");

int weight = Integer.valueOf(n1[1]);

for (int i = 0; i < weight; i++) {

list.add(n1[0]);

}

}

}

void request(){

//Index: a random number; note that the bound is the size of the list

int i = new Random().nextInt(list.size());

System.out.println(list.get(i));

}

public static void main(String[] args) {

WR wr = new WR("a#2,b#1");

for (int i = 0; i < 9; i++) {

wr.request();

}

}

}

6.3 result analysis

Run 9 times: a and b appear interleaved, with a=6 and b=3, matching the 2:1 ratio

Note: because selection is random, the counts will not match the ratio exactly on every run. As the number of samples tends to infinity, the ratio becomes approximately exact

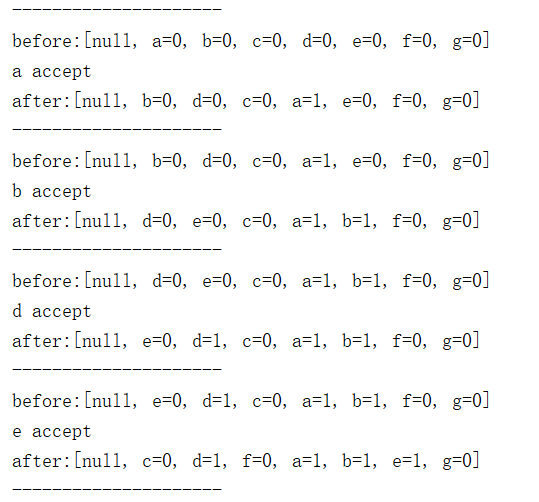
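When weights are large, repeating every node in the list costs memory. A common alternative, which is a different technique from the listing above, is to keep cumulative weights and map one random number onto them. The sketch below assumes positive integer weights; the class `CumulativeWeightedRandom` and its methods are hypothetical names.

```java
import java.util.Random;

// Hypothetical class: weighted random selection using cumulative weights.
class CumulativeWeightedRandom {
    private final String[] names;
    private final int[] cumulative; // cumulative[i] = weights[0] + ... + weights[i]
    private final Random random;

    CumulativeWeightedRandom(String[] names, int[] weights, Random random) {
        this.names = names.clone();
        this.cumulative = new int[weights.length];
        int sum = 0;
        for (int i = 0; i < weights.length; i++) {
            sum += weights[i];
            cumulative[i] = sum;
        }
        this.random = random;
    }

    // Maps a value r in [0, total) to the node whose weight interval contains it.
    String pick(int r) {
        for (int i = 0; i < cumulative.length; i++) {
            if (r < cumulative[i]) {
                return names[i];
            }
        }
        throw new IllegalArgumentException("r must be below the total weight");
    }

    // Draws a random value below the total weight and returns the matching node.
    String next() {
        return pick(random.nextInt(cumulative[cumulative.length - 1]));
    }

    public static void main(String[] args) {
        CumulativeWeightedRandom wr = new CumulativeWeightedRandom(
                new String[]{"a", "b"}, new int[]{2, 1}, new Random());
        for (int i = 0; i < 9; i++) {
            System.out.println(wr.next());
        }
    }
}
```

For a#2,b#1 the values 0 and 1 map to a and the value 2 maps to b, so the selection probabilities are the same as with the expanded list.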

7 minimum number of connections (LC)

7.1 general

LeastConnections (LC) counts the current number of connections on each machine and selects the one with the fewest to respond to a new request. The previous algorithms take the viewpoint of the request, whereas least connections takes the viewpoint of the machine.

7.2 realization

Define a connection table that records each machine's node id and a counter of its connections. A min-heap is used internally for ordering, and the node at the top of the heap, which has the fewest connections, responds.

package com.oldlu.balance;

import java.util.Arrays;

import java.util.Random;

import java.util.concurrent.atomic.AtomicInteger;

public class LC {

//Node list

Node[] nodes;

//Initialize nodes and create heaps

// Since the number of connections of each node is 0 at the beginning, you can directly fill in the array

LC(String ns){

String[] ns1 = ns.split(",");

nodes = new Node[ns1.length+1];

for (int i = 0; i < ns1.length; i++) {

nodes[i+1] = new Node(ns1[i]);

}

}

//When the node sinks, compare it with the left and right child nodes and select the smallest exchange

//The goal is to always keep the vertex element value of the minimum heap to a minimum

//i: Vertex number to sink

void down(int i) {

//Walk down from index i while the node still has a left child (i*2 is in range); time complexity is O(log n)

while ( i << 1 < nodes.length){

//Left child: shift left by 1 bit, i.e. i*2. Recall how binary tree nodes are numbered in an array

int left = i<<1;

//Right child: left + 1

int right = left+1;

//Marker for the smallest of this node and its two children; it starts as i itself

int flag = i;

//Judge whether the left child is smaller than this node

if (nodes[left].get() < nodes[i].get()){

flag = left;

}

//Judge right child

if (right < nodes.length && nodes[flag].get() > nodes[right].get()){

flag = right;

}

//If the smallest of the two is not equal to this node, it will be exchanged

if (flag != i){

Node temp = nodes[i];

nodes[i] = nodes[flag];

nodes[flag] = temp;

i = flag;

}else {

//Otherwise the heap property already holds and the loop can exit

break;

}

}

}

//Request. Very simple. Directly taking the top element of the smallest heap is the machine with the least number of connections

void request(){

System.out.println("---------------------");

//Take the heap top element to respond to the request

Node node = nodes[1];

System.out.println(node.name + " accept");

//Number of connections plus 1

node.inc();

//Heap before re-ordering

System.out.println("before:"+Arrays.toString(nodes));

//Sift the heap top down

down(1);

//Heap after re-ordering

System.out.println("after:"+Arrays.toString(nodes));

}

public static void main(String[] args) {

//Suppose there are seven machines

LC lc = new LC("a,b,c,d,e,f,g");

//Simulate 10 requested connections

for (int i = 0; i < 10; i++) {

lc.request();

}

}

class Node{

//Node identification

String name;

//Counter

AtomicInteger count = new AtomicInteger(0);

public Node(String name){

this.name = name;

}

//Counter increase

public void inc(){

count.getAndIncrement();

}

//Get the number of connections

public int get(){

return count.get();

}

@Override

public String toString() {

return name+"="+count;

}

}

}

7.3 result analysis

After initialization, every heap node has the value 0, that is, each machine has 0 connections

After the node at the top of the heap accepts a connection, it sinks, the heap is re-ordered, and the min-heap property still holds
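The listing above only ever increases the counters. In a running system connections are also closed, so the count has to go down again. A minimal sketch of that, using `java.util.PriorityQueue` instead of the hand-written heap, might look like this. The class `LeastConnections` and the method names `acquire` and `release` are hypothetical, and the sketch assumes at least one node is configured.

```java
import java.util.Comparator;
import java.util.HashMap;
import java.util.Map;
import java.util.PriorityQueue;

// Hypothetical class: least connections with both acquire and release.
class LeastConnections {
    private static final class Node {
        final String name;
        final int order;   // insertion order, used only to break ties deterministically
        int connections;

        Node(String name, int order) {
            this.name = name;
            this.order = order;
        }
    }

    private final Map<String, Node> byName = new HashMap<>();
    private final PriorityQueue<Node> heap = new PriorityQueue<>(
            Comparator.comparingInt((Node n) -> n.connections).thenComparingInt(n -> n.order));

    LeastConnections(String... names) {
        for (int i = 0; i < names.length; i++) {
            Node node = new Node(names[i], i);
            byName.put(names[i], node);
            heap.add(node);
        }
    }

    // Picks the node with the fewest connections and counts the new connection.
    synchronized String acquire() {
        Node node = heap.poll();   // take it out of the heap before changing its key
        node.connections++;
        heap.add(node);
        return node.name;
    }

    // Called when a connection to the named node is closed.
    synchronized void release(String name) {
        Node node = byName.get(name);
        if (node == null || node.connections == 0) {
            return;
        }
        heap.remove(node);         // linear search; acceptable for a small node list
        node.connections--;
        heap.add(node);
    }

    public static void main(String[] args) {
        LeastConnections lc = new LeastConnections("a", "b", "c");
        System.out.println(lc.acquire()); // a
        System.out.println(lc.acquire()); // b
        lc.release("a");
        System.out.println(lc.acquire()); // a again, because it is back to 0 connections
    }
}
```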

8 application cases

8.1 nginx upstream

upstream frontend {

#Source address hash

ip_hash;

server 192.168.0.1:8081;

server 192.168.0.2:8082 weight=1 down;

server 192.168.0.3:8083 weight=2;

server 192.168.0.4:8084 weight=3 backup;

server 192.168.0.5:8085 weight=4 max_fails=3 fail_timeout=30s;

}

ip_hash: use the source address hash algorithm

down: the server temporarily does not take part in load balancing

weight: the weight used by the weighted algorithm. The default is 1; the larger the weight, the larger the share of the load.

backup: a backup machine. Requests go to it only when all non-backup machines are down or busy.

max_fails: the maximum number of failures. The default is 1; here it is 3, that is, up to 3 failed attempts are allowed

fail_timeout: the period within which max_fails failed attempts mark the server as unavailable, and how long it then stays marked. Here it is 30 seconds; the default is 10s.

Note: backup cannot be used together with ip_hash, and weight could not be combined with ip_hash before nginx 1.3.1 / 1.2.2.

8.2 springcloud ribbon IRule

#Set the load balancing policy; eureka-application-service is the name of the invoked service

eureka-application-service.ribbon.NFLoadBalancerRuleClassName=com.netflix.loadbalancer.RandomRule

RoundRobinRule: polling

RandomRule: random

AvailabilityFilteringRule: first filters out services whose circuit breaker has tripped because of repeated access failures, as well as services whose number of concurrent connections exceeds the threshold, and then polls the remaining services

WeightedResponseTimeRule: computes a weight for every service from its average response time; the faster the response, the larger the weight. At startup, when there are not yet enough statistics, the RoundRobinRule policy is used; once the statistics are sufficient, it switches to WeightedResponseTimeRule

RetryRule: first obtains a service using the RoundRobinRule policy; if that fails, it retries within the specified time to obtain an available service

BestAvailableRule: first filters out services whose circuit breaker has tripped because of repeated access failures, and then selects the service with the least concurrency

ZoneAvoidanceRule: the default rule, which judges both the performance of the zone where the server is located and the availability of the server

8.3 dubbo load balancing

Using Service annotations

@Service(loadbalance = "roundrobin",weight = 100)

RandomLoadBalance: random. This is dubbo's default load balancing strategy

RoundRobinLoadBalance: polling

LeastActiveLoadBalance: least active calls. The dubbo framework defines a Filter that counts the number of active calls to each service

ConsistentHashLoadBalance: consistent hashing