The etcd distributed lock is not an API provided by the etcd server itself, but a tool built on top of etcd that combines several of its features (lease, watch, MVCC, and so on).

Within a single process, data races on shared variables are usually avoided by locking and unlocking. When multiple processes operate on the same resource, however, an ordinary in-process lock no longer works. The "lock" then has to live in a shared medium that every process can reach, so that the processes using it can still avoid operating on the resource at the same time through the usual lock and unlock steps.
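As a point of comparison, the in-process case can be sketched with Go's standard sync.Mutex. This is only an illustration of ordinary locking; the counter type and the run helper are names made up for this example.

```go
package main

import (
	"fmt"
	"sync"
)

// counter is a shared variable guarded by an ordinary in-process lock.
type counter struct {
	mu sync.Mutex
	n  int
}

// add increments the counter under the lock so that goroutines do not race.
func (c *counter) add() {
	c.mu.Lock()
	defer c.mu.Unlock()
	c.n++
}

// run starts `workers` goroutines that each call add `perWorker` times
// and returns the final value of the counter.
func run(workers, perWorker int) int {
	var c counter
	var wg sync.WaitGroup
	for i := 0; i < workers; i++ {
		wg.Add(1)
		go func() {
			defer wg.Done()
			for j := 0; j < perWorker; j++ {
				c.add()
			}
		}()
	}
	wg.Wait()
	return c.n
}

func main() {
	fmt.Println(run(8, 1000)) // prints 8000
}
```

The mutex lives in the memory of one process, which is exactly why it cannot protect a resource shared by several processes.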

Issues to consider

Let's walk through the most basic workflow and see what a distributed lock needs to take into account.

Lock

Before operating on a shared resource, a process must first acquire the lock. The process that acquires it returns immediately and can operate on the resource, while a process that fails to acquire it has to wait for the lock to be released. At any moment a given lock can be held by only one process, which is the mutual exclusion property of the lock.

Lock period

Because multiple processes are involved, process crashes have to be considered. If the process holding the lock suddenly goes down, a release mechanism is needed so that the processes waiting behind it are not blocked forever, which would amount to a deadlock. The component that provides the lock should also be highly available and keep serving requests after one of its nodes goes down.

Unlock

Once the operation on the resource is complete, the lock must be released promptly, and a process must never release a lock held by another process. Processes that did not get the lock earlier can then acquire it. If too many processes compete for the lock, a release may cause a thundering herd effect, and the component providing the lock should mitigate this as far as it can.

Implementation approach

Several etcd mechanisms can serve as the basis of a distributed lock. A key-value pair in etcd acts as the lock itself: creating and deleting the lock correspond to creating and deleting the key-value pair. The distributed consistency and high availability of etcd in turn make the lock highly available.

prefix

Because etcd supports prefix queries, a lock key can take the form "lock name" + "unique id". This keeps the lock symmetric, that is, each client only operates on the key it created itself.
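The key layout can be sketched as follows. The helpers lockKey and sameLock are hypothetical names for this illustration, and the "/" separator with a hexadecimal id is an assumption modeled on the format the client library uses later in this article.

```go
package main

import (
	"fmt"
	"strings"
)

// lockKey builds "<lock name>/<unique id in hex>".
func lockKey(name string, id int64) string {
	return fmt.Sprintf("%s/%x", name, id)
}

// sameLock reports whether key belongs to the lock called name.
// The trailing "/" keeps "mutex" from matching "mutex-linugo/...".
func sameLock(name, key string) bool {
	return strings.HasPrefix(key, name+"/")
}

func main() {
	a := lockKey("mutex-linugo", 0x1234)
	b := lockKey("mutex-linugo", 0xabcd)
	fmt.Println(a, b) // mutex-linugo/1234 mutex-linugo/abcd
	fmt.Println(sameLock("mutex-linugo", a), sameLock("mutex", a)) // true false
}
```

Every client competing for the same lock shares the prefix, so one prefix query lists all of them, while the unique suffix keeps each client's key separate.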

lease

The lease mechanism keeps the lock alive: the key is bound to a lease when the lock is created, and the lease is renewed periodically. If the client goes down unexpectedly while holding the lock, the lease expires and the key is deleted automatically, which avoids deadlock.

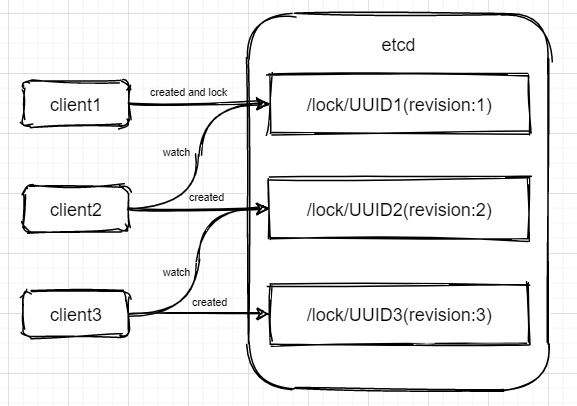
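The lease behavior described above can be illustrated with a small in-memory model. This is a sketch under simplifying assumptions, not etcd code: time is a logical tick counter, and the store type with its grant, put, keepAlive, and advance methods are hypothetical names.

```go
package main

import "fmt"

// lease expires at deadline unless it is renewed first.
type lease struct {
	ttl      int64 // time to live, in ticks
	deadline int64 // tick at which the lease expires
}

// store is a toy key space in which every key is attached to a lease ID.
type store struct {
	now    int64
	leases map[int64]*lease
	keys   map[string]int64 // key -> lease ID
}

func newStore() *store {
	return &store{leases: map[int64]*lease{}, keys: map[string]int64{}}
}

// grant creates a lease that expires ttl ticks from now.
func (s *store) grant(id, ttl int64) {
	s.leases[id] = &lease{ttl: ttl, deadline: s.now + ttl}
}

// put stores a key bound to the given lease.
func (s *store) put(key string, leaseID int64) { s.keys[key] = leaseID }

// keepAlive renews a lease; it reports false if the lease no longer exists.
func (s *store) keepAlive(id int64) bool {
	l, ok := s.leases[id]
	if !ok {
		return false
	}
	l.deadline = s.now + l.ttl
	return true
}

// advance moves the clock forward and removes expired leases with their keys.
func (s *store) advance(ticks int64) {
	s.now += ticks
	for id, l := range s.leases {
		if s.now < l.deadline {
			continue
		}
		delete(s.leases, id)
		for k, lid := range s.keys {
			if lid == id {
				delete(s.keys, k)
			}
		}
	}
}

// has reports whether the key still exists.
func (s *store) has(key string) bool {
	_, ok := s.keys[key]
	return ok
}

func main() {
	s := newStore()
	s.grant(1, 3)
	s.put("mutex-linugo/1", 1)
	s.advance(2)
	s.keepAlive(1) // the client is alive and renews: deadline moves to tick 5
	s.advance(2)
	fmt.Println(s.has("mutex-linugo/1")) // true
	s.advance(3) // no renewal, as if the client had crashed
	fmt.Println(s.has("mutex-linugo/1")) // false
}
```

As long as the holder keeps renewing, the key survives; once renewals stop, the key disappears without any action from the crashed client.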

Revision

etcd maintains a global Revision internally, which increases with every write transaction. Comparing Revision values gives the order in which clients acquire the lock. Because that order is already fixed at lock time, a later release by one client does not produce a thundering herd effect.
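A minimal sketch of revision-based ordering, assuming a toy store with a single counter that stands in for the global Revision. The store, put, and owner names are hypothetical and the starting value of the counter is arbitrary.

```go
package main

import "fmt"

// kv is a toy stand-in for a lock key together with its create revision.
type kv struct {
	key       string
	createRev int64
}

// store is a toy key space with one global, monotonically increasing revision.
type store struct {
	rev int64
	kvs []kv
}

// put creates a key and stamps it with the next global revision.
func (s *store) put(key string) int64 {
	s.rev++
	s.kvs = append(s.kvs, kv{key: key, createRev: s.rev})
	return s.rev
}

// owner returns the key with the smallest create revision, i.e. the holder.
func (s *store) owner() (kv, bool) {
	if len(s.kvs) == 0 {
		return kv{}, false
	}
	first := s.kvs[0]
	for _, e := range s.kvs[1:] {
		if e.createRev < first.createRev {
			first = e
		}
	}
	return first, true
}

func main() {
	s := &store{}
	a := s.put("mutex-linugo/a")
	b := s.put("mutex-linugo/b")
	c := s.put("mutex-linugo/c")
	o, _ := s.owner()
	fmt.Println(a, b, c, o.key) // 1 2 3 mutex-linugo/a
}
```

Each client learns its position in the queue from the revision returned by its own write, so no further negotiation is needed when the lock is released.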

watch

The watch mechanism can be used to listen for the deletion event of a lock key, which is more efficient than busy polling to check whether the lock has been released. A watch can also be combined with Revision: each client only needs to watch the key whose Revision is the closest one below its own in order to learn in real time when it can take the lock.
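Choosing which key to watch can be sketched like this. The waiter type and the predecessor function are hypothetical names; the sketch only shows the selection rule, not the watch itself.

```go
package main

import "fmt"

// waiter is a toy stand-in for a lock key and its create revision.
type waiter struct {
	key       string
	createRev int64
}

// predecessor returns the waiter with the largest create revision that is
// still smaller than myRev. ok is false when nobody is ahead in the queue.
func predecessor(ws []waiter, myRev int64) (prev waiter, ok bool) {
	for _, w := range ws {
		if w.createRev < myRev && (!ok || w.createRev > prev.createRev) {
			prev, ok = w, true
		}
	}
	return prev, ok
}

func main() {
	ws := []waiter{
		{"mutex-linugo/a", 5},
		{"mutex-linugo/b", 7},
		{"mutex-linugo/c", 9},
	}
	for _, w := range ws {
		if p, ok := predecessor(ws, w.createRev); ok {
			fmt.Printf("%s watches %s\n", w.key, p.key)
		} else {
			fmt.Printf("%s holds the lock\n", w.key)
		}
	}
}
```

Since every key is watched by at most one waiter, deleting a key wakes up a single client instead of the whole queue.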

Source code analysis

The etcd v3 client library already ships an implementation of distributed locks in its concurrency package. Let's look at how it works.

Examples

```go
package main

import (
	"context"
	"log"
	"time"

	clientv3 "go.etcd.io/etcd/client/v3"
	"go.etcd.io/etcd/client/v3/concurrency"
)

func main() {
	// Initialize the etcd client.
	cli, err := clientv3.New(clientv3.Config{
		Endpoints:   []string{"127.0.0.1:23790"},
		DialTimeout: time.Second,
	})
	if err != nil {
		log.Fatal("new client err: ", err)
	}
	defer cli.Close()

	// Create a session; choose the TTL (in seconds) according to the business situation.
	s, err := concurrency.NewSession(cli, concurrency.WithTTL(3))
	if err != nil {
		log.Fatal("new session err: ", err)
	}
	defer s.Close()

	// Create a lock instance and lock it.
	mu := concurrency.NewMutex(s, "mutex-linugo")
	if err := mu.Lock(context.TODO()); err != nil {
		log.Fatal("m lock err: ", err)
	}

	// do something

	if err := mu.Unlock(context.TODO()); err != nil {
		log.Fatal("m unlock err: ", err)
	}
}
```

When NewSession is called, it sets up a lease according to the options the caller passes in (for example a specific TTL, or an existing lease to reuse) and then keeps that lease alive asynchronously in the background.

```go
type Mutex struct {
	s *Session // session that holds the lease information

	pfx   string // lock name, used as the key prefix
	myKey string // complete key of this lock
	myRev int64  // create revision of myKey
	hdr   *pb.ResponseHeader
}

func NewMutex(s *Session, pfx string) *Mutex {
	return &Mutex{s, pfx + "/", "", -1, nil}
}
```

NewMutex only creates the lock data structure, which stores information about the lock. The "mutex-linugo" argument is just the key prefix; the complete key, the revision, and the other fields are filled in later when the lock is acquired.

Lock

```go
func (m *Mutex) Lock(ctx context.Context) error {
	// First try to acquire the lock.
	resp, err := m.tryAcquire(ctx)
	if err != nil {
		return err
	}
	// ......
}

func (m *Mutex) tryAcquire(ctx context.Context) (*v3.TxnResponse, error) {
	s := m.s
	client := m.s.Client()

	// The full key is the prefix plus the lease ID. Different processes
	// hold different leases, so each one gets its own key.
	m.myKey = fmt.Sprintf("%s%x", m.pfx, s.Lease())
	// cmp checks whether the key is being created for the first time by
	// comparing its create revision with 0.
	cmp := v3.Compare(v3.CreateRevision(m.myKey), "=", 0)
	// put binds the key to the lease and stores it.
	put := v3.OpPut(m.myKey, "", v3.WithLease(s.Lease()))
	// get reads the existing key.
	get := v3.OpGet(m.myKey)
	// getOwner runs a prefix range query; WithFirstCreate() keeps only the
	// key with the smallest create revision.
	getOwner := v3.OpGet(m.pfx, v3.WithFirstCreate()...)
	resp, err := client.Txn(ctx).If(cmp).Then(put, getOwner).Else(get, getOwner).Commit()
	if err != nil {
		return nil, err
	}
	// Record the revision of this transaction in the myRev field.
	m.myRev = resp.Header.Revision
	if !resp.Succeeded {
		// The key already existed, so use its create revision instead.
		m.myRev = resp.Responses[0].GetResponseRange().Kvs[0].CreateRevision
	}
	return resp, nil
}
```

When acquiring the lock, tryAcquire attempts to take it with a single transaction.

If the key is being created for the first time, the key is bound to the lease and stored; otherwise the existing key is read back. In both branches getOwner queries the prefix for the key with the smallest create revision, which is the current holder of the lock (a holder deletes its key when it releases the lock, so the surviving key with the smallest revision is always the current holder).

!resp.Succeeded means the key already existed, so the first operation in the transaction was get. In that case the create revision of the existing key is assigned to the myRev field of the lock.
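The logic of this transaction can be modeled in memory as follows. This is a toy sketch, not the client library: the store type and its tryAcquire method are hypothetical, a map replaces etcd, and a plain counter replaces the global revision.

```go
package main

import (
	"fmt"
	"strings"
)

// kv is a toy stand-in for a key and its create revision.
type kv struct {
	key       string
	createRev int64
}

// store is a toy key space with a global revision counter.
type store struct {
	rev int64
	kvs map[string]kv
}

// tryAcquire models the If/Then/Else transaction: create the key if it does
// not exist yet, otherwise read it back; in both cases also return the key
// with the smallest create revision under prefix (the current owner).
func (s *store) tryAcquire(prefix, key string) (myRev int64, owner kv) {
	cur, exists := s.kvs[key]
	if !exists {
		// "Then" branch: the create revision is 0, so put the key.
		s.rev++
		cur = kv{key: key, createRev: s.rev}
		s.kvs[key] = cur
	}
	// In the "Else" branch cur is simply the key that already existed.
	myRev = cur.createRev

	// getOwner: smallest create revision among the keys under prefix.
	found := false
	for k, e := range s.kvs {
		if !strings.HasPrefix(k, prefix) {
			continue
		}
		if !found || e.createRev < owner.createRev {
			owner, found = e, true
		}
	}
	return myRev, owner
}

func main() {
	s := &store{kvs: map[string]kv{}}
	revA, owner := s.tryAcquire("mutex-linugo/", "mutex-linugo/a")
	fmt.Println(owner.createRev == revA) // true: a holds the lock
	revB, owner := s.tryAcquire("mutex-linugo/", "mutex-linugo/b")
	fmt.Println(owner.createRev == revB) // false: b has to wait
	again, _ := s.tryAcquire("mutex-linugo/", "mutex-linugo/a")
	fmt.Println(again == revA) // true: a repeated call reuses the same key
}
```

Folding the write and the owner lookup into one transaction lets an uncontended client learn that it holds the lock in a single round trip.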

Back in the Lock method, the lock information is now stored in etcd. Lock next decides whether the client already holds the lock or has to wait by comparing revisions: if myRev equals the create revision of ownerKey, this client is the lock holder.

```go
func (m *Mutex) Lock(ctx context.Context) error {
	resp, err := m.tryAcquire(ctx)
	if err != nil {
		return err
	}
	// ownerKey holds the key-value pair of the current lock holder.
	ownerKey := resp.Responses[1].GetResponseRange().Kvs
	// If ownerKey is empty, or the owner's create revision equals our own,
	// this client holds the lock and can return to operate on the shared resource.
	if len(ownerKey) == 0 || ownerKey[0].CreateRevision == m.myRev {
		m.hdr = resp.Header
		return nil
	}
	// ......
	// Wait for the locks ahead of us to be released.
	client := m.s.Client()
	_, werr := waitDeletes(ctx, client, m.pfx, m.myRev-1)
	if werr != nil {
		m.Unlock(client.Ctx())
		return werr
	}
	// Make sure the session has not expired in the meantime.
	gresp, werr := client.Get(ctx, m.myKey)
	if werr != nil {
		m.Unlock(client.Ctx())
		return werr
	}
	if len(gresp.Kvs) == 0 {
		return ErrSessionExpired
	}
	m.hdr = gresp.Header
	return nil
}
```

waitDeletes

A client that did not get the lock has to wait for the locks ahead of it to be released. This relies mainly on the watch mechanism.

```go
func waitDeletes(ctx context.Context, client *v3.Client, pfx string, maxCreateRev int64) (*pb.ResponseHeader, error) {
	// getOpts combines two options: keep only keys whose create revision is
	// no greater than maxCreateRev, and of those return the one with the
	// largest create revision. This finds the key queued directly ahead of
	// us, whose deletion event we then watch.
	getOpts := append(v3.WithLastCreate(), v3.WithMaxCreateRev(maxCreateRev))
	for {
		// Fetch the key selected by getOpts.
		resp, err := client.Get(ctx, pfx, getOpts...)
		if err != nil {
			return nil, err
		}
		// No key left means every earlier lock has been released,
		// so we can return directly.
		if len(resp.Kvs) == 0 {
			return resp.Header, nil
		}
		lastKey := string(resp.Kvs[0].Key)
		// Otherwise watch for the deletion event of the previous lock.
		if err = waitDelete(ctx, client, lastKey, resp.Header.Revision); err != nil {
			return nil, err
		}
	}
}

func waitDelete(ctx context.Context, client *v3.Client, key string, rev int64) error {
	cctx, cancel := context.WithCancel(ctx)
	defer cancel()

	var wr v3.WatchResponse
	// Watch the key of the previous lock, starting from the given revision.
	wch := client.Watch(cctx, key, v3.WithRev(rev))
	for wr = range wch {
		for _, ev := range wr.Events {
			// Return as soon as a DELETE event arrives.
			if ev.Type == mvccpb.DELETE {
				return nil
			}
		}
	}
	if err := wr.Err(); err != nil {
		return err
	}
	if err := ctx.Err(); err != nil {
		return err
	}
	return fmt.Errorf("lost watcher waiting for delete")
}
```

Once waitDeletes returns successfully, the process holds the lock and can go on to operate on the shared resource.
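The overall effect of waiting on the predecessor can be sketched with an in-process queue lock. This is a simplified model and not how the client library is written: channels stand in for watches, a counter stands in for the revision, waiters never crash (so there is no re-query loop as in waitDeletes), and queueLock and run are hypothetical names.

```go
package main

import (
	"fmt"
	"sync"
)

// queueLock is a toy model of the revision-ordered wait queue.
type queueLock struct {
	mu      sync.Mutex
	rev     int64                   // last revision handed out
	deleted map[int64]chan struct{} // closed when that revision's key is deleted
}

func newQueueLock() *queueLock {
	return &queueLock{deleted: map[int64]chan struct{}{}}
}

// lock enqueues the caller, waits until the key directly ahead of it is
// deleted, and returns the caller's own revision.
func (q *queueLock) lock() int64 {
	q.mu.Lock()
	prev := q.deleted[q.rev] // nil when there is no live predecessor
	q.rev++
	my := q.rev
	q.deleted[my] = make(chan struct{})
	q.mu.Unlock()

	if prev != nil {
		<-prev // plays the role of watching for the DELETE event
	}
	return my
}

// unlock deletes the caller's key, which wakes up only its direct successor.
func (q *queueLock) unlock(rev int64) {
	q.mu.Lock()
	ch := q.deleted[rev]
	delete(q.deleted, rev)
	q.mu.Unlock()
	close(ch)
}

// run increments a counter that is protected only by the queue lock.
func run(workers, perWorker int) int {
	q := newQueueLock()
	total := 0
	var wg sync.WaitGroup
	for i := 0; i < workers; i++ {
		wg.Add(1)
		go func() {
			defer wg.Done()
			for j := 0; j < perWorker; j++ {
				rev := q.lock()
				total++
				q.unlock(rev)
			}
		}()
	}
	wg.Wait()
	return total
}

func main() {
	fmt.Println(run(4, 200)) // prints 800
}
```

Each waiter blocks on exactly one channel, so a release hands the lock to the next revision in line without waking anyone else.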

Unlock

Unlocking simply deletes the corresponding key-value pair, which triggers the next waiter to acquire the lock.

```go
func (m *Mutex) Unlock(ctx context.Context) error {
	client := m.s.Client()
	if _, err := client.Delete(ctx, m.myKey); err != nil {
		return err
	}
	m.myKey = "\x00"
	m.myRev = -1
	return nil
}
```

Summary

The stability of the etcd distributed lock comes from making full use of etcd's own features. In this section we first analyzed the properties a distributed lock must satisfy, then listed the etcd features that support them, and finally walked through how the concurrency package of clientv3 implements a distributed lock.

Reference

- etcd v3.5.1 source code: https://github.com/etcd-io/et...
- Lagou Education: How to implement a distributed lock based on etcd?