Among rate limiting algorithms, the token bucket algorithm can handle short bursts of traffic, which is particularly useful when real-world traffic is uneven. It does not trigger rate limiting frequently and is friendly to callers.

For example, suppose the limit is 10 QPS. Traffic stays under it most of the time, but occasionally spikes to 30 QPS and soon returns to normal. Assuming such spikes do not affect system stability, we can allow this momentary burst to a certain extent and give users a better experience. This is where the token bucket algorithm comes in.

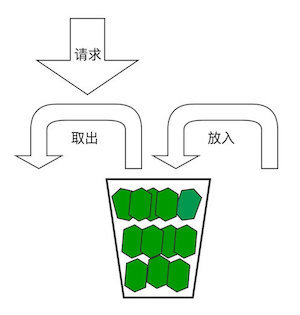

Principle of the token bucket algorithm

As shown in the figure above, the basic principle of the algorithm is as follows: there is a token bucket with a capacity of X, and Z tokens are put into the bucket every Y units of time. Tokens that would push the count beyond X are discarded. To process a request, a token must first be taken from the bucket. If a token is obtained, processing continues; if no token is available, the request is rejected.

It follows that choosing X, Y and Z well is particularly important. Z should be slightly larger than the number of requests per Y units of time under normal load, and the system will stay in this state most of the time. X is the maximum number of instantaneous requests the system allows; the system should not stay in this state for long, otherwise rate limiting will be triggered frequently. When that happens, it indicates that traffic has exceeded expectations, and the cause should be investigated and addressed promptly.
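To make the roles of X, Y and Z concrete, here is a minimal in-memory sketch in Python. It is only an illustration, not code from any library: the names `TickTokenBucket` and `simulate` are hypothetical, the bucket is assumed to start full, and each loop iteration stands for one Y unit of time. The defaults use the numbers from the earlier example (X = 30, Z = 10 per second).

```python
class TickTokenBucket:
    """Token bucket refilled by explicit ticks; capacity is X, per_tick is Z."""

    def __init__(self, capacity: int, per_tick: int) -> None:
        self.capacity = capacity
        self.per_tick = per_tick
        self.tokens = capacity  # assumption: the bucket starts full

    def tick(self) -> None:
        # One Y unit has elapsed: add Z tokens and discard anything above X.
        self.tokens = min(self.capacity, self.tokens + self.per_tick)

    def try_acquire(self, amount: int = 1) -> bool:
        # Take tokens if enough are available, otherwise reject the request.
        if self.tokens >= amount:
            self.tokens -= amount
            return True
        return False


def simulate(requests_per_tick, capacity=30, per_tick=10):
    """Return the number of rejected requests in each Y unit of time."""
    bucket = TickTokenBucket(capacity, per_tick)
    rejected = []
    for count in requests_per_tick:
        passed = sum(bucket.try_acquire() for _ in range(count))
        rejected.append(count - passed)
        bucket.tick()
    return rejected


print(simulate([8, 8, 30, 8, 8]))  # a single burst of 30 is absorbed
print(simulate([30, 30, 30]))      # sustained overload gets limited
```

With these settings, the single burst passes without rejections, while sustained traffic of 30 per tick is cut back to 10 per tick once the bucket has drained.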

Implementing the token bucket algorithm with Redis

I have seen some token bucket implementations in which tokens are put into the bucket by starting a thread that increases the token count every Y units of time, or by running this process periodically in a Timer. I am not satisfied with this approach for two reasons: it wastes thread resources, and the execution time is inaccurate because of scheduling.

Here, the number of tokens in the bucket is determined by calculation instead. When a request arrives, first calculate how much time has passed since tokens were last put into the bucket and whether it has reached the token issuing interval; if it has, calculate how many tokens should be added to the bucket.
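The calculation described above can be sketched as a small pure function. This is a hedged Python illustration rather than the library's code: the name `lazy_refill` and its parameters are hypothetical, and all times are assumed to be integers in the same unit (for example milliseconds).

```python
def lazy_refill(last_time: int, now: int, interval: int, per_interval: int):
    """Return (tokens_to_add, new_last_time) without any background timer."""
    elapsed = now - last_time
    if elapsed < interval:
        # The issuing interval has not been reached yet: nothing to add.
        return 0, last_time
    whole_intervals = elapsed // interval
    # Advance only by whole intervals so the leftover time carries over
    # to the next request instead of being lost.
    new_last_time = last_time + whole_intervals * interval
    return whole_intervals * per_interval, new_last_time


print(lazy_refill(0, 999, 1000, 10))
print(lazy_refill(0, 2500, 1000, 10))
```

The caller is still responsible for capping the resulting token count at the bucket capacity.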

Talk is cheap!

Let's look at how it is implemented in Redis. Because the logic involves multiple interactions with Redis, a Lua script, which Redis supports, is used to improve the throughput of rate limiting and reduce the number of round trips between the program and Redis. Redis executes a Lua script atomically, so there is no need to worry about dirty data.

The code is excerpted from FireflySoft.RateLimit, which supports not only the common master-slave Redis deployment but also Redis Cluster, so throughput can be improved by scaling horizontally. To make it easier to read, some comments have been added here that are not in the actual source.

-- Return value: an array of [whether rate limiting was triggered (1 = limited, 0 = passed), number of tokens currently in the bucket]

local ret={}

ret[1]=0

-- Key wrapped in a Redis Cluster hash tag so that related keys land in the same slot; KEYS[1] is the rate limiting target

local cl_key = '{' .. KEYS[1] .. '}'

-- Check whether a rate limit penalty is in effect. When a penalty is triggered, a KV entry with an expiration time is written

-- If a penalty is in effect, return [1,-1]

local lock_key=cl_key .. '-lock'

local lock_val=redis.call('get',lock_key)

if lock_val == '1' then

ret[1]=1

ret[2]=-1

return ret;

end

-- Part of the code is omitted here

-- Get [the time tokens were last put into the bucket]. If it is not set, the token bucket does not exist. There are two cases:

-- 1. This is the first execution, in which case the token bucket is defined to be full.

-- 2. Rate limit processing has not run for a long time, so the KV holding this time has been released.

-- The expiration time is longer than the time tokens naturally take to fill the bucket, so the token bucket should also be full in this case.

local last_time=redis.call('get',st_key)

if(last_time==false)

then

-- Tokens remaining after this execution = bucket capacity - tokens consumed by this execution

bucket_amount = capacity - amount;

-- Write the token count to the token bucket with an expiration time; if the program does not run for a long time, the token bucket KV will be reclaimed

redis.call('set',KEYS[1],bucket_amount,'PX',key_expire_time)

-- Set [the time tokens were last put into the bucket]; it is used later to calculate how many tokens should be put in

redis.call('set',st_key,start_time,'PX',key_expire_time)

-- Return value: [number of tokens currently in the bucket]

ret[2]=bucket_amount

-- No further processing is required

return ret

end

-- The token bucket exists. Get the current number of tokens in the token bucket

local current_value = redis.call('get',KEYS[1])

current_value = tonumber(current_value)

-- Check whether it is time to put new tokens into the bucket: current time - last put time >= put interval

last_time=tonumber(last_time)

local last_time_changed=0

local past_time=current_time-last_time

if(past_time<inflow_unit)

then

-- Not yet time to put tokens in; take tokens directly from the bucket

bucket_amount=current_value-amount

else

-- Tokens need to be put in: quantity to put = floor(time since last put / put interval) * quantity put per interval

local past_inflow_unit_quantity = past_time/inflow_unit

past_inflow_unit_quantity=math.floor(past_inflow_unit_quantity)

last_time=last_time+past_inflow_unit_quantity*inflow_unit

last_time_changed=1

local past_inflow_quantity=past_inflow_unit_quantity*inflow_quantity_per_unit

bucket_amount=current_value+past_inflow_quantity-amount

end

-- Part of the code is omitted here

ret[2]=bucket_amount

-- If the remaining quantity in the bucket is less than 0, check whether a rate limit penalty is required. If so, write a penalty KV whose expiration time is the penalty duration in seconds

if(bucket_amount<0)

then

if lock_seconds>0 then

redis.call('set',lock_key,'1','EX',lock_seconds,'NX')

end

ret[1]=1

return ret

end

-- Reaching here means the tokens were deducted successfully, so the token bucket KV needs to be updated

if last_time_changed==1 then

redis.call('set',KEYS[1],bucket_amount,'PX',key_expire_time)

-- Tokens were put in; update [last put time] to the time of this put

redis.call('set',st_key,last_time,'PX',key_expire_time)

else

redis.call('set',KEYS[1],bucket_amount,'PX',key_expire_time)

end

return ret

From the above code, we can see that the main processing flow is:

1. Check whether a rate limit penalty is in effect. If so, return immediately; otherwise go to the next step.

2. Check whether the token bucket exists. If it does not, create the token bucket, deduct the tokens and return. If it exists, go to the next step.

3. Check whether tokens need to be put into the bucket. If not, deduct the tokens directly. If so, put the tokens in first and then deduct.

4. Check the number of tokens after deduction. If it is less than 0, return the rate-limited result and set the rate limit penalty if one is configured. If it is greater than or equal to 0, go to the next step.

5. Write the updated number of tokens in the bucket back to Redis.
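To see the five steps without the Redis plumbing, the following Python sketch replays them against in-memory state. It is an illustration under stated assumptions, not the FireflySoft.RateLimit implementation: the class `InMemoryTokenBucket` and its fields are hypothetical, times are integer milliseconds supplied by the caller, key expiration is not modelled, and capping the refilled count at the capacity is an assumption because the excerpt omits the code at that point.

```python
class InMemoryTokenBucket:
    """In-memory sketch of the five steps; all times are in milliseconds."""

    def __init__(self, capacity, inflow_unit, inflow_per_unit, lock_ms=0):
        self.capacity = capacity
        self.inflow_unit = inflow_unit          # interval between refills
        self.inflow_per_unit = inflow_per_unit  # tokens added per interval
        self.lock_ms = lock_ms                  # penalty duration, 0 disables it
        self.tokens = None                      # None: bucket not created yet
        self.last_time = None
        self.locked_until = 0

    def check(self, now, amount=1):
        """Return (limited, tokens_left), mirroring the script's ret array."""
        # Step 1: a penalty in effect rejects the request immediately.
        if now < self.locked_until:
            return 1, -1
        # Step 2: create a full bucket on first use, deduct and return.
        if self.last_time is None:
            self.tokens = self.capacity - amount
            self.last_time = now
            return 0, self.tokens
        # Step 3: refill by whole intervals if enough time has passed.
        remaining = self.tokens
        last_time = self.last_time
        elapsed = now - last_time
        if elapsed >= self.inflow_unit:
            units = elapsed // self.inflow_unit
            last_time += units * self.inflow_unit
            # Assumption: cap at capacity (the excerpt omits this part).
            remaining = min(self.capacity, remaining + units * self.inflow_per_unit)
        remaining -= amount
        # Step 4: a negative balance means the request is rate limited.
        if remaining < 0:
            if self.lock_ms > 0:
                self.locked_until = now + self.lock_ms
            return 1, remaining
        # Step 5: store the new balance and the refill time.
        self.tokens = remaining
        self.last_time = last_time
        return 0, remaining


bucket = InMemoryTokenBucket(capacity=3, inflow_unit=1000, inflow_per_unit=1, lock_ms=5000)
for t in (0, 10, 20, 30, 2000, 6000):
    print(t, bucket.check(t))
```

Unlike the Lua script, this sketch offers no atomicity across processes; it only shows the order of the decisions.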

You can submit and run this Lua script through the Redis client library of any programming language. If you use the .NET platform, you can refer to this article: Using token bucket throttling in ASP.NET Core.

About FireflySoft.RateLimit

FireflySoft.RateLimit is a rate limiting class library based on .NET Standard. Its core is simple and lightweight, and it can flexibly handle rate limiting scenarios with various requirements.

Its main features include:

- Multiple rate limiting algorithms: fixed window, sliding window, leaky bucket and token bucket are built in, and custom extensions are supported.

- Multiple counter stores: memory and Redis are currently supported.

- Distributed friendly: unified counting across distributed programs is supported through Redis storage.

- Flexible rate limiting targets: various data can be extracted from the request to set the rate limiting target.

- Rate limit penalty: after triggering rate limiting, a client can be locked out for a period of time, during which its access is denied.

- Dynamic rule changes: rate limiting rules can be changed while the program is running.

- Custom errors: the error code and error message returned after rate limiting is triggered can be customized.

- Universality: in principle, it can handle any rate limiting scenario.

GitHub open source address: https://github.com/bosima/FireflySoft.RateLimit/blob/master/README.zh-CN.md

For more on architecture, follow the official account FireflySoft. This is original content; please cite the source when reprinting.