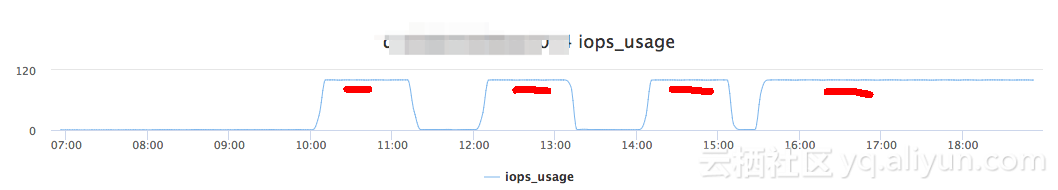

In an online business, IOPS usage of 100% is frequent (4000 IOPS per second), each lasting nearly 1 hour. Log discovery from slow requests takes 1 hour to make a getMore request, resulting in high IOPS; after further investigation, it is finally found that this is an index selection problem.

2017-11-01T15:04:17.498+0800 I COMMAND [conn5735095] command db.mycoll command: getMore { getMore: 215174255789, collection: "mycoll" } cursorid:215174255789 keyUpdates:0 writeConflicts:0 numYields:161127 nreturned:8419 reslen:4194961 locks:{ Global: { acquireCount: { r: 322256 } }, Database: { acquireCount: { r: 161128 } }, Collection: { acquireCount: { r: 161128 } } } protocol:op_command 3651743ms

Question Background

The business starts at each point and synchronizes the data from the past hour to another data source. The queries are sorted by _id. The two main query criteria are as follows: execute the find command first, then iterate through the cursor to read all the documents that meet the criteria.

* created_at: { $gte: "2017-11-01 13:00:00", $lte: "2017-11-01 13:59:59" } * sort: {_id: 1}

Characteristics of business data

- Each data is inserted with a created_at field with the current time stamp and an index of {created_at: -1}

- The _id field is user-defined (not the default ObjectId for mongodb), with random and irregular values

- The whole collection is very large, with over 100 million documents in total

MongoDB find, getMore features

- find command, returns the first batch (default 101 records) that meets the criteria, as well as a cursor

- getMore continues traversing based on the cursor returned by find, each traversal returns no more than 4 MB of data by default

Index Selection

Option 1: Use the created_at index

The entire execution path is

- Quickly locate qualified documents by creating_at index

- Read out all documents that satisfy the created_at query

- Sort all documents by _id field

Here are two typical logs from this index, which you can see

- Documents that meet the created_at criteria are approximately 7w+. After sorting them all, the first 101 are returned, which takes about 600 ms in total.

-

Next, getMore, because the results will be sorted by _id, getMore will have to continue to read out all the matching items and skip the first 101 and return to the next batch to the client.

2017-11-01T14:02:31.861+0800 I COMMAND [conn5737355] command db.mycoll command: find { find: "mycoll", filter: { created_at: { $gte: "2017-11-01 13:00:00", $lte: "2017-11-01 13:59:59" } }, projection: { $sortKey: { $meta: "sortKey" } }, sort: { _id: 1 }, limit: 104000, shardVersion: [ Timestamp 5139000|7, ObjectId('590d9048c628ebe143f76863') ] } planSummary: IXSCAN { created_at: -1.0 } cursorid:215494987197 keysExamined:71017 docsExamined:71017 hasSortStage:1 keyUpdates:0 writeConflicts:0 numYields:557 nreturned:101 reslen:48458 locks:{ Global: { acquireCount: { r: 1116 } }, Database: { acquireCount: { r: 558 } }, Collection: { acquireCount: { r: 558 } } } protocol:op_command 598ms 2017-11-01T14:02:32.036+0800 I COMMAND [conn5737355] command db.mycoll command: getMore { getMore: 215494987197, collection: "mycoll" } cursorid:215494987197 keyUpdates:0 writeConflicts:0 numYields:66 nreturned:8510 reslen:4194703 locks:{ Global: { acquireCount: { r: 134 } }, Database: { acquireCount: { r: 67 } }, Collection: { acquireCount: { r: 67 } } } protocol:op_command 120ms

Option 2: Use _id index

The entire execution path is

- Scan all records according to the _id index (in the order of the _id index, the created_at of the corresponding document is random and irregular)

- Return documents that meet the created_at criteria, the first find, to find 101 eligible documents to return

Here are two typical logs from this index, which you can see

- The first scan took 17w to find 101 eligible records, which took 46s

-

The second time nearly 4 MB of eligible documents (8419) will be returned, more documents will need to be scanned in the full table, which will take an hour. Because full table scanning is not very friendly to cache, it has always been read from disk, resulting in a lot of IO.

2017-11-01T14:03:25.648+0800 I COMMAND [conn5735095] command db.mycoll command: find { find: "mycoll", filter: { created_at: { $gte: "2017-11-01 13:00:00", $lte: "2017-11-01 13:59:59" } }, projection: { $sortKey: { $meta: "sortKey" } }, sort: { _id: 1 }, limit: 75000, shardVersion: [ Timestamp 5139000|7, ObjectId('590d9048c628ebe143f76863') ] } planSummary: IXSCAN { _id: 1 } cursorid:215174255789 keysExamined:173483 docsExamined:173483 fromMultiPlanner:1 replanned:1 keyUpdates:0 writeConflicts:0 numYields:2942 nreturned:101 reslen:50467 locks:{ Global: { acquireCount: { r: 5886 } }, Database: { acquireCount: { r: 2943 } }, Collection: { acquireCount: { r: 2943 } } } protocol:op_command 46232ms 2017-11-01T15:04:17.498+0800 I COMMAND [conn5735095] command db.mycoll command: getMore { getMore: 215174255789, collection: "mycoll" } cursorid:215174255789 keyUpdates:0 writeConflicts:0 numYields:161127 nreturned:8419 reslen:4194961 locks:{ Global: { acquireCount: { r: 322256 } }, Database: { acquireCount: { r: 161128 } }, Collection: { acquireCount: { r: 161128 } } } protocol:op_command 3651743ms

summary

IOPS is high because the selected index is not optimal, so why doesn't MongoDB choose the optimal index to perform this task?

- As you can see from the log, in most cases MongoDB is a walking created_at index

- The case above, which index is better, is actually related to the distribution of the data

- Sorting a large number of documents can also be expensive if there are especially many documents that satisfy the created_at query criteria

- If the created_at field distribution is very discrete, such as the data in this case, it will be more expensive to scan the entire table to find eligible documents

- MongoDB's index is based on the sampling cost model, and one index is better for the sampled dataset, not necessarily the entire dataset.

- When a MongoDB query is executed for the first time, if there are multiple execution plans, the best one is selected from the model and cached to improve efficiency

- When a collection create/delete index occurs in MongoDB, the cache execution plan is emptied and re-selected

- During execution, MongoDB also considers whether the execution plan is optimal and triggers the logic to rebuild the execution plan (the triggered strategy has not been studied in detail and shared later), such as the find query in scenario 2, execution plan.It contains {replanned:1} instructions for rebuilding the execution plan; when it finds that the execution plan actually performs worse, it will eventually reach a better execution plan.

- The data is best understood by the business itself. For case s where the query optimizer is uncertain, you can build an execution plan by adding hint to the query and specifying your own index.