Preface

Code demonstration environment:

- Software environment: Windows 10

- Development tool: Visual Studio Code

- JDK version: OpenJDK 15

Sound effects and music

Basic knowledge of sound effect

When we play games, we hear sound effects all the time but rarely pay conscious attention to them. Yet players expect to hear them, so sound effects are very important in a game. In addition, the music in a game changes dynamically to match the development of the plot. So what is sound? Sound is produced by vibration travelling through a medium. Here the medium is the air: the computer's speakers vibrate, the vibration travels through the air to our ears, and our eardrums capture these signals and send them to the brain, which is how we hear the sound.

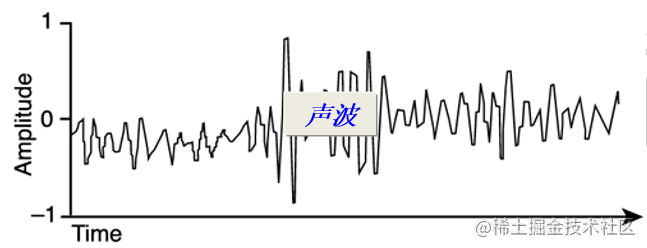

Resonance is produced by the compressed vibration of air. The fast vibration produces high-frequency sound effect, so that we can hear the high pitch. The number of compressions per vibration is expressed using amplitude. High amplitude will make us hear loud; In short, sound waves are just modifying the amplitude for a long time. As shown in the figure below:

Digital sound, such as CD audio and computer sound formats, represents a sound wave as a series of samples. The number of amplitude samples taken per second is called the sample rate. Naturally, a higher sample rate represents the sound more accurately. With 16-bit samples, each sample can take one of 65,536 possible amplitude values. Many sound formats allow multiple channels. For example, a CD has two channels: one for the left speaker and one for the right speaker.
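
To make the idea of 16-bit sampling concrete, here is a minimal sketch that samples a sine wave and quantizes each value to a signed 16-bit number. The class name `SineSampler`, the method `sample` and the chosen frequencies are assumptions made for illustration only; they are not part of the Java Sound API.

```java
/** Illustrative sketch: turns a continuous sine wave into 16-bit samples. */
class SineSampler {

    /**
     * Samples a sine wave of the given frequency (in Hz) at the given
     * sample rate (samples per second) and returns count samples.
     */
    static short[] sample(double frequency, int sampleRate, int count) {
        short[] samples = new short[count];
        for (int i = 0; i < count; i++) {
            double time = (double) i / sampleRate;                        // seconds
            double amplitude = Math.sin(2 * Math.PI * frequency * time); // -1..1
            // Scale to the positive limit of a signed 16-bit value.
            samples[i] = (short) Math.round(amplitude * Short.MAX_VALUE);
        }
        return samples;
    }

    public static void main(String[] args) {
        // One full cycle of a 1000 Hz tone sampled at 8000 Hz takes 8 samples.
        for (short s : sample(1000, 8000, 8)) {
            System.out.println(s);
        }
        // Number of distinct values a 16-bit sample can take.
        System.out.println(Short.MAX_VALUE - Short.MIN_VALUE + 1);
    }
}
```

A higher sample rate simply means more array entries per second of sound, which is why it describes the wave more accurately.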

Java Sound API

Java can play 8-bit and 16-bit samples at sample rates ranging from 8000 Hz to 48000 Hz, in both mono and stereo. Which format to use depends on the needs of the game; one example is 16-bit mono sound at 44100 Hz. Java supports three audio file formats: AIFF, AU and WAV. To load an audio file we use the AudioSystem class, which has several static methods. Generally, we use the getAudioInputStream() method to open an audio file, either from the local file system or from the Internet; it returns an AudioInputStream object. We can then use this object to read the audio samples or query the audio format.

File file = new File("sound.wav");

AudioInputStream stream = AudioSystem.getAudioInputStream(file);

AudioFormat format = stream.getFormat();
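
The three lines above need a real sound.wav on disk. As a sketch that runs without any file, the example below writes one second of silence to an in-memory WAV and opens it with the same AudioSystem.getAudioInputStream() call, this time using the overload that takes an InputStream. The class name `FormatQueryDemo` and its helper methods are hypothetical.

```java
import java.io.ByteArrayInputStream;
import java.io.ByteArrayOutputStream;
import java.io.IOException;
import javax.sound.sampled.AudioFileFormat;
import javax.sound.sampled.AudioFormat;
import javax.sound.sampled.AudioInputStream;
import javax.sound.sampled.AudioSystem;
import javax.sound.sampled.UnsupportedAudioFileException;

class FormatQueryDemo {

    /** Builds one second of silent 16-bit mono 8000 Hz audio as WAV bytes. */
    static byte[] silentWav() throws IOException {
        AudioFormat format = new AudioFormat(8000f, 16, 1, true, false);
        byte[] pcm = new byte[8000 * format.getFrameSize()];
        // The third argument is the stream length in sample frames.
        AudioInputStream raw = new AudioInputStream(
            new ByteArrayInputStream(pcm), format, 8000);
        ByteArrayOutputStream out = new ByteArrayOutputStream();
        AudioSystem.write(raw, AudioFileFormat.Type.WAVE, out);
        return out.toByteArray();
    }

    /** Opens WAV bytes in the same way a file would be opened. */
    static AudioInputStream open(byte[] wav)
            throws IOException, UnsupportedAudioFileException {
        // ByteArrayInputStream supports mark/reset, which the parser needs.
        return AudioSystem.getAudioInputStream(new ByteArrayInputStream(wav));
    }

    public static void main(String[] args) throws Exception {
        AudioInputStream stream = open(silentWav());
        AudioFormat format = stream.getFormat();
        System.out.println("Sample rate: " + format.getSampleRate());
        System.out.println("Bits:        " + format.getSampleSizeInBits());
        System.out.println("Channels:    " + format.getChannels());
        System.out.println("Frames:      " + stream.getFrameLength());
    }
}
```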

The AudioFormat class describes the format of the sound samples, such as the sample rate and the number of channels. It also provides the frame size, which is the number of bytes per frame. For example, 16-bit stereo has a frame size of 4: 2 bytes for each of the two sample values. With this we can easily calculate how much memory a sound occupies. For example, a three-second 16-bit stereo sound sampled at 44100 Hz takes 44100 x 3 x 4 bytes = 529,200 bytes, or about 517 KB. A mono sound takes half as much memory as stereo.
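
The memory calculation can be sketched in a few lines using AudioFormat itself. The class `SoundMemory` and the method `bytesFor` are hypothetical helpers; only AudioFormat and its getters come from the Java Sound API.

```java
import javax.sound.sampled.AudioFormat;

class SoundMemory {

    /** Returns the number of bytes needed to hold the given duration of sound. */
    static long bytesFor(AudioFormat format, double seconds) {
        long frames = Math.round(format.getFrameRate() * seconds);
        return frames * format.getFrameSize();
    }

    public static void main(String[] args) {
        // 44100 Hz, 16-bit, signed, little endian
        AudioFormat stereo = new AudioFormat(44100f, 16, 2, true, false);
        AudioFormat mono = new AudioFormat(44100f, 16, 1, true, false);

        System.out.println(stereo.getFrameSize()); // bytes per stereo frame
        System.out.println(bytesFor(stereo, 3));   // three seconds of stereo
        System.out.println(bytesFor(mono, 3));     // three seconds of mono
    }
}
```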

Once we know the size and format of the audio samples, the next step is to read their content and play it. The Line interface is the API for sending audio to and receiving audio from the system. We can use a Line to send sound samples to the operating system's sound system for playback, or to receive sound from it, such as microphone input. Line has several sub-interfaces. The main one is SourceDataLine, which lets us write sound data to the OS sound system. A Line instance is obtained through the getLine() method of AudioSystem, to which we pass a Line.Info object that specifies the type of line to return. Line.Info has a subclass, DataLine.Info, which can describe not only SourceDataLine but also another kind of line, the Clip interface. A Clip does a lot of work for us: it loads the samples from an AudioInputStream into memory and automatically feeds them to the audio system for playback. The following code plays a sound using a Clip:

//Specify which kind of line to create

DataLine.Info info = new DataLine.Info(Clip.class, format);

//Create the line

Clip clip = (Clip)AudioSystem.getLine(info);

//Load the samples from the stream

clip.open(stream);

//Start playing the audio

clip.start();

The Clip interface is very easy to use and is much like the AudioClip object from JDK 1.0, but it has some drawbacks. First, Java Sound limits the number of lines that can be open at the same time, generally to at most 32, so we can only keep a limited number of Line objects open. Second, although several Clip objects can play at once, each Clip can only play one sound at a time. For example, if we want two or three explosions to overlap, a single explosion Clip cannot do it, because it can only play one instance of the sound at any moment. Because of these drawbacks, we will create our own solution.

Play sound

Let's create a simple sound player. We use the AudioInputStream class to read the audio file into a byte array, and then play it through a Line object. Because a ByteArrayInputStream simply wraps a byte array, we can create several streams over the same samples and play multiple copies of the same sound at the same time. The getSamples(AudioInputStream) method reads the sample data from the AudioInputStream and stores it in a byte array. Finally, the play() method reads data from an InputStream into a buffer and then writes it to a SourceDataLine for playback.
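
The point about sharing one byte array between several streams can be shown without any audio hardware. In this sketch the four bytes stand in for real sample data, and the class `SharedSamplesDemo` with its `newStream` helper is hypothetical.

```java
import java.io.ByteArrayInputStream;
import java.io.IOException;
import java.io.InputStream;

class SharedSamplesDemo {

    /** Returns a fresh stream positioned at the start of the shared samples. */
    static InputStream newStream(byte[] samples) {
        // The stream wraps the array; it does not copy it.
        return new ByteArrayInputStream(samples);
    }

    public static void main(String[] args) throws IOException {
        byte[] samples = {10, 20, 30, 40}; // stands in for real audio data

        InputStream first = newStream(samples);
        InputStream second = newStream(samples);

        first.read(); // advance only the first stream
        first.read();

        System.out.println(first.available());  // bytes left in the first stream
        System.out.println(second.available()); // the second stream is untouched
    }
}
```

Each stream keeps its own read position, so passing a new stream to every call of play() lets the same sound overlap with itself while the samples are stored only once.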

Because of a bug in the Java Sound API, the Java process will not exit by itself. Normally the JVM exits when only daemon threads are left running, but Java Sound starts non-daemon threads in the background, so we must call System.exit(0) to end a Java program that uses sound.

SimpleSoundPlayer class

package com.funfree.arklis.sounds;

import java.io.*;

import javax.sound.sampled.*;

/**

Function: encapsulates a sound that is opened from the file system and then played

*/

public class SimpleSoundPlayer {

private AudioFormat format;

private byte[] samples;//Save sound samples

/**

Opens a sound from a file.

*/

public SimpleSoundPlayer(String filename) {

try {

//Open an audio stream

AudioInputStream stream =

AudioSystem.getAudioInputStream(

new File(filename));

format = stream.getFormat();

//Get sampling

samples = getSamples(stream);

}

catch (UnsupportedAudioFileException ex) {

ex.printStackTrace();

}

catch (IOException ex) {

ex.printStackTrace();

}

}

/**

Gets the samples of this sound as a byte array.

*/

public byte[] getSamples() {

return samples;

}

/**

Reads the samples from an AudioInputStream into a byte array. The array is later wrapped in a ByteArrayInputStream,

so that several copies of the sound can be played at the same time.

*/

private byte[] getSamples(AudioInputStream audioStream) {

//Get the number of bytes to read

int length = (int)(audioStream.getFrameLength() *

format.getFrameSize());

//Read the entire stream

byte[] samples = new byte[length];

DataInputStream is = new DataInputStream(audioStream);

try {

is.readFully(samples);

}

catch (IOException ex) {

ex.printStackTrace();

}

// Return sample

return samples;

}

/**

Plays a stream. This method blocks until the sound has finished playing.

*/

public void play(InputStream source) {

//Use a buffer that holds 100 milliseconds of audio

int bufferSize = format.getFrameSize() *

Math.round(format.getSampleRate() / 10);

byte[] buffer = new byte[bufferSize];

//Create a line object to perform sound playback

SourceDataLine line;

try {

DataLine.Info info =

new DataLine.Info(SourceDataLine.class, format);

line = (SourceDataLine)AudioSystem.getLine(info);

line.open(format, bufferSize);

}catch (LineUnavailableException ex) {

ex.printStackTrace();

return;

}

// Start the line so that written data is played

line.start();

// Copy data to line object

try {

int numBytesRead = 0;

while (numBytesRead != -1) {

numBytesRead =

source.read(buffer, 0, buffer.length);

if (numBytesRead != -1) {

line.write(buffer, 0, numBytesRead);

}

}

}catch (IOException ex) {

ex.printStackTrace();

}

// Wait until all data is played, and then close the line object.

line.drain();

line.close();

}

}

If you need to play a sound in a loop, you can extend the approach above with the following class.

LoopingByteInputStream class

package com.funfree.arklis.engine;

import static java.lang.System.*;

import java.io.*;

/**

Function: extends ByteArrayInputStream so that the audio data is read in an endless loop. To stop looping,

call the close() method

*/

public class LoopingByteInputStream extends ByteArrayInputStream{

private boolean closed;

public LoopingByteInputStream(byte[] buffer){

super(buffer);

closed = false;

}

/**

Reads length bytes into the array. When the end of the data is reached, reading continues from the beginning of the data.

Returns -1 if the stream has been closed

*/

public int read(byte[] buffer, int offset, int length){

if(closed){

return -1;

}

int totalBytesRead = 0;

while(totalBytesRead < length){

int numBytesRead = super.read(buffer,offset + totalBytesRead,

length - totalBytesRead);

if(numBytesRead > 0){

totalBytesRead += numBytesRead;

}else{

reset();

}

}

return totalBytesRead;

}

/**

Closes the stream

*/

public void close()throws IOException{

super.close();

closed = true;

}

}

A sound filter is a simple audio processor applied to existing sound samples. Filters are generally used to process sound in real time. Sound filters are often described as digital signal processing (DSP) effects; they are used to post-process sound, for example to add an echo to a guitar.

Create a real-time sound filtering framework

Sound filters can make a game more dynamic, because they let us match the sound to what is happening in the game. For example, we can add an echo to the sound of a hit, or play a rock-style sound. Below we create an echo filter and a 3D surround-sound filter. Because there are many kinds of sound processors, we first create a filter framework. The framework defines three important methods:

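- filter(): filters the samples

- getRemainingSize(): gets the remaining size, in bytes, that the filter can still produce after the sound has ended

- reset(): resets the filter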

SoundFilter objects can hold state, so a different SoundFilter object should be used for each sound being played. For simplicity, SoundFilter is assumed to work only on 16-bit, signed, little-endian samples. Little endian is a technical term for the byte order of data: little endian stores the least significant byte of each 16-bit sample first, while big endian stores the most significant byte first. Signed means that samples can be positive or negative, ranging from -32768 to 32767. WAV files store samples in little-endian format by default.

Java Sound delivers samples as bytes, so we have to convert the bytes to 16-bit signed values before we can work with them. The SoundFilter class provides this through two static methods, getSample() and setSample(). Now we need an easy way to apply a SoundFilter to the sounds we play. We could filter the sample array directly, but that is not flexible. Instead, just as LoopingByteInputStream wraps the samples for SimpleSoundPlayer, we can wrap the input stream so that the sound is filtered in real time without modifying the source samples.
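
The SoundFilter class itself is not listed in this article. The following is a minimal sketch of what such a class could look like, inferred only from how EchoFilter and Filter3d call it; the method bodies and default behavior are assumptions, not the original source.

```java
/**
 * Sketch of an abstract sound filter for 16-bit, signed,
 * little-endian samples. Inferred from usage, not the original class.
 */
abstract class SoundFilter {

    /** Resets the filter's state. Does nothing by default. */
    public void reset() {
    }

    /**
     * Returns the number of bytes the filter can still produce after
     * the source sound has ended (for example, an echo tail).
     */
    public int getRemainingSize() {
        return 0;
    }

    /** Filters length bytes of samples in place, starting at offset. */
    public abstract void filter(byte[] samples, int offset, int length);

    /** Reads the 16-bit little-endian sample stored at position. */
    public static short getSample(byte[] buffer, int position) {
        return (short) (((buffer[position + 1] & 0xff) << 8)
                       | (buffer[position] & 0xff));
    }

    /** Stores a 16-bit sample at position in little-endian order. */
    public static void setSample(byte[] buffer, int position, short sample) {
        buffer[position] = (byte) (sample & 0xff);            // low byte first
        buffer[position + 1] = (byte) ((sample >> 8) & 0xff); // then high byte
    }

    public static void main(String[] args) {
        byte[] buffer = new byte[2];
        setSample(buffer, 0, (short) 0x1234);
        // Prints the low byte followed by the high byte.
        System.out.println(buffer[0] + " " + buffer[1]);
        System.out.println(getSample(buffer, 0));
    }
}
```

The `& 0xff` masks matter because Java bytes are signed; without them a low byte of 0x80 or above would sign-extend and corrupt the high byte when the two halves are combined.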

As mentioned, one thing a filter can do is add an echo. An echo keeps sounding after the original sound has finished playing. Therefore, in the FilteredSoundStream class, if the SoundFilter still has bytes remaining after the source stream ends, the read() method fills the buffer with silence and passes it through the filter so that the echo can finish. Once that is done, it returns -1 to signal the end of the audio stream.

FilteredSoundStream class

package com.funfree.arklis.sounds;

import java.io.FilterInputStream;

import java.io.InputStream;

import java.io.IOException;

import com.funfree.arklis.engine.*;

/**

Function: a FilterInputStream that applies a SoundFilter to the underlying input stream. See SoundFilter

Note: a FilterInputStream contains another input stream, which it uses as its basic data source, either passing the data through directly

or providing additional functionality.

*/

public class FilteredSoundStream extends FilterInputStream {

private static final int REMAINING_SIZE_UNKNOWN = -1;

private SoundFilter soundFilter;

private int remainingSize;

/**

Use the specified stream and the specified SoundFilter object

*/

public FilteredSoundStream(InputStream in,

SoundFilter soundFilter){

super(in);

this.soundFilter = soundFilter;

remainingSize = REMAINING_SIZE_UNKNOWN;

}

/**

Override the read method to filter the byte stream

*/

public int read(byte[] samples, int offset, int length)throws IOException{

// Read and filter sound samples in the stream

int bytesRead = super.read(samples, offset, length);

if (bytesRead > 0) {

soundFilter.filter(samples, offset, bytesRead);

return bytesRead;

}

// If there are no remaining bytes in the sound stream, check whether the filter has any remaining bytes (as echo)

if (remainingSize == REMAINING_SIZE_UNKNOWN) {

remainingSize = soundFilter.getRemainingSize();

// Round down to a multiple of 4 (the size of a standard frame)

remainingSize = remainingSize / 4 * 4;

}

if (remainingSize > 0) {

length = Math.min(length, remainingSize);

// Clear the buffer (fill it with silence)

for (int i=offset; i<offset+length; i++) {

samples[i] = 0;

}

// Filter the remaining bytes

soundFilter.filter(samples, offset, length);

remainingSize-=length;

// Return length

return length;

}

else {

// End of stream

return -1;

}

}

}

Create a real-time echo filter

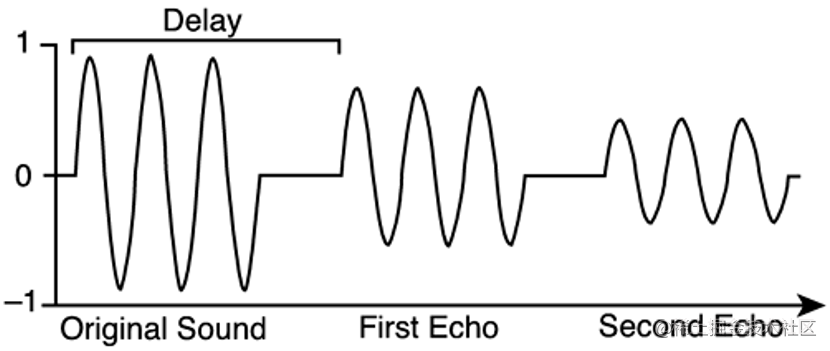

Echo indicates that there is a delay effect after the playback of the source audio file. The graphic representation is as follows:

- Delay -- delay

- Original Sound -- source sound

- First Echo -- First Echo

- Second Echo -- Second Echo

Because SoundFilter is format-agnostic, it does not know whether the audio it filters was sampled at 44100 Hz or 8000 Hz, so we cannot simply give the echo filter a delay time. Instead, we tell the echo filter how many samples to delay. For example, for a one-second delay on 44100 Hz mono audio, tell the echo filter to delay 44100 samples. Note that the delay is counted from the moment the audio starts being processed. The decay is a value from 0 to 1: 0 means no echo at all, and 1 means the echo is as loud as the original audio.

The code to add decay to the echo is as follows:

short newSample = (short)(oldSample + decay * delayBuffer[delayBufferPos]);
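
To see what this formula does, here is a small sketch that applies it to a plain short array instead of audio bytes. The class `EchoSketch` and its methods are hypothetical, and the sketch assumes mono audio and at least one delay sample.

```java
import java.util.Arrays;

class EchoSketch {

    /** Converts a delay in seconds to a number of samples (mono audio). */
    static int delaySamples(float sampleRate, float seconds) {
        return Math.round(sampleRate * seconds);
    }

    /** Applies a feedback echo in place. Requires numDelaySamples >= 1. */
    static void echo(short[] samples, int numDelaySamples, float decay) {
        short[] delayBuffer = new short[numDelaySamples];
        int delayBufferPos = 0;
        for (int i = 0; i < samples.length; i++) {
            short oldSample = samples[i];
            short newSample =
                (short) (oldSample + decay * delayBuffer[delayBufferPos]);
            samples[i] = newSample;
            // Store the echoed result, so each later echo decays further.
            delayBuffer[delayBufferPos] = newSample;
            delayBufferPos = (delayBufferPos + 1) % delayBuffer.length;
        }
    }

    public static void main(String[] args) {
        System.out.println(delaySamples(44100f, 1f)); // samples in one second

        // A single click followed by silence.
        short[] samples = new short[12];
        samples[0] = 1000;
        echo(samples, 4, 0.5f);
        System.out.println(Arrays.toString(samples));
    }
}
```

With a delay of 4 samples and a decay of 0.5, the click of 1000 reappears as 500 four samples later and as 250 after another four. Note that the cast to short does not clip: with loud input and a high decay the sum can overflow, which this sketch does not guard against.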

See the EchoFilter class below

EchoFilter class

package com.funfree.arklis.sounds;

import com.funfree.arklis.engine.*;

/**

Function: a SoundFilter that simulates an echo. See the FilteredSoundStream class

*/

public class EchoFilter extends SoundFilter {

private short[] delayBuffer;

private int delayBufferPos;

private float decay;

/**

Creates an EchoFilter with the specified number of delay samples and the specified decay rate.

<p>The number of delay samples specifies how long it takes before the echo is first heard. For a one-second echo with mono, 44100 Hz sound, use 44100 delay samples.

<p>The decay value is how much the echo has decayed from the source. A decay rate of .5 means the echo is half as loud as the source.

*/

public EchoFilter(int numDelaySamples, float decay) {

delayBuffer = new short[numDelaySamples];

this.decay = decay;

}

/**

Gets the remaining size, in bytes, that this filter can still echo after the source sound has finished playing.

The echo is considered finished once it has decayed to below 1%.

*/

public int getRemainingSize() {

float finalDecay = 0.01f;

// Derived from Math.pow(decay, x) <= finalDecay

int numRemainingBuffers = (int)Math.ceil(

Math.log(finalDecay) / Math.log(decay));

int bufferSize = delayBuffer.length * 2;

return bufferSize * numRemainingBuffers;

}

/**

Clears the internal delay buffer

*/

public void reset() {

for (int i=0; i<delayBuffer.length; i++) {

delayBuffer[i] = 0;

}

delayBufferPos = 0;

}

/**

Filters the sound samples to add an echo. Each sample is added to the delayed sample multiplied by the decay rate, and the result is stored in the delay buffer, so

multiple echoes can be heard.

*/

public void filter(byte[] samples, int offset, int length) {

for (int i=offset; i<offset+length; i+=2) {

// Update the sample

short oldSample = getSample(samples, i);

short newSample = (short)(oldSample + decay *

delayBuffer[delayBufferPos]);

setSample(samples, i, newSample);

// Update the delay buffer

delayBuffer[delayBufferPos] = newSample;

delayBufferPos++;

if (delayBufferPos == delayBuffer.length) {

delayBufferPos = 0;

}

}

}

}
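
The calculation in getRemainingSize() can be checked in isolation. The sketch below repeats the same arithmetic in a hypothetical `EchoTail` class; it assumes a decay strictly between 0 and 1.

```java
class EchoTail {

    /** Number of echo passes until the echo has decayed to finalDecay or less. */
    static int numRemainingBuffers(float decay, float finalDecay) {
        // decay^x <= finalDecay  means  x >= log(finalDecay) / log(decay).
        // The inequality flips because log(decay) is negative for decay < 1.
        return (int) Math.ceil(Math.log(finalDecay) / Math.log(decay));
    }

    /** Size in bytes of the echo tail for 16-bit samples. */
    static int remainingSize(int numDelaySamples, float decay) {
        int bufferSize = numDelaySamples * 2; // 2 bytes per 16-bit sample
        return bufferSize * numRemainingBuffers(decay, 0.01f);
    }

    public static void main(String[] args) {
        System.out.println(numRemainingBuffers(0.6f, 0.01f));
        System.out.println(numRemainingBuffers(0.5f, 0.01f));
        System.out.println(remainingSize(11025, 0.6f));
    }
}
```

For a decay of 0.6, nine passes leave about 1.008% of the original volume and the tenth drops below 1%, so ten delay buffers of silence have to be pushed through the filter after the source ends.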

For an example of how it is used, see the source code of the SimpleSoundPlayer class, where .6f represents a 60% decay. Next, let's look at how to implement 3D sound effects.

Simulate 3D surround effect

3D sound, also called directional hearing, gives the player a three-dimensional sound experience. Common features of 3D sound are as follows:

- Sounds get quieter as the source moves away and louder as it comes closer

- Sounds are panned to the appropriate speaker: a source on the left plays in the left speaker, and a source on the right plays in the right speaker so that our right ear hears it. With four speakers, 3D sound can produce a surround effect

- Room effects such as echoes can be created

- The Doppler effect can be applied

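3D sound effects are not only for 3D games; they can also be used in 2D games. Let's implement a 3D filter. It is based on the Pythagorean theorem: the distance between the sound source and the listener is the square root of dx * dx + dy * dy, where dx and dy are the horizontal and vertical offsets between them.

The class below adjusts the sound according to the position of a moving Sprite object, and produces the 3D effect by controlling the volume of the filtered sound.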
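
As a quick check of the distance-to-volume idea, the sketch below computes the distance between two points and turns it into a volume between 0 and 1. It uses plain coordinates instead of Sprite objects, and the class `DistanceVolume` and its methods are hypothetical.

```java
class DistanceVolume {

    /** Straight-line distance between two points (Pythagorean theorem). */
    static float distance(float x1, float y1, float x2, float y2) {
        float dx = x1 - x2;
        float dy = y1 - y2;
        return (float) Math.sqrt(dx * dx + dy * dy);
    }

    /** Volume from 0 (silent) to 1 (full), falling off linearly with distance. */
    static float volume(float distance, float maxDistance) {
        float v = (maxDistance - distance) / maxDistance;
        return Math.max(0f, v); // beyond maxDistance the sound is silent
    }

    public static void main(String[] args) {
        float d = distance(3, 4, 0, 0);
        System.out.println(d);              // distance from (3, 4) to the origin
        System.out.println(volume(d, 10));  // halfway to the maximum distance
        System.out.println(volume(25, 10)); // out of range
    }
}
```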

Filter3d class

package com.funfree.arklis.sounds;

import com.funfree.arklis.graphic.Sprite;

import com.funfree.arklis.engine.*;

/**

Function: a 3D filter that extends the SoundFilter class and is used to create 3D sound effects.

The filter changes the volume of the sound according to distance. See the FilteredSoundStream class

*/

public class Filter3d extends SoundFilter {

// Number of samples over which a change in volume is spread

private static final int NUM_SHIFTING_SAMPLES = 500;

private Sprite source;//sound source

private Sprite listener;//listener

private int maxDistance;//Maximum distance at which the sound can be heard

private float lastVolume;//volume used by the previous call to filter()

/**

Creates a Filter3d object with the specified sound source and listener Sprites (for example, a monster).

The positions of the Sprites can change while the filter is running.

*/

public Filter3d(Sprite source, Sprite listener,

int maxDistance)

{

this.source = source;

this.listener = listener;

this.maxDistance = maxDistance;

this.lastVolume = 0.0f;

}

/**

Filter sound based on distance

*/

public void filter(byte[] samples, int offset, int length) {

if (source == null || listener == null) {

// Without a source or a listener there is nothing to filter, so do nothing

return;

}

// Otherwise, calculate the distance from the listener to the sound source

float dx = (source.getX() - listener.getX());

float dy = (source.getY() - listener.getY());

float distance = (float)Math.sqrt(dx * dx + dy * dy);

// Set the volume from 0 (no sound) to 1 (full volume)

float newVolume = (maxDistance - distance) / maxDistance;

if (newVolume <= 0) {

newVolume = 0;

}

// Set the volume of each sample

int shift = 0;

for (int i = offset; i < offset + length; i += 2) {

float volume = newVolume;

// Ramp gradually from the last volume to the new volume

if (shift < NUM_SHIFTING_SAMPLES) {

volume = lastVolume + (newVolume - lastVolume) *

shift / NUM_SHIFTING_SAMPLES;

shift++;

}

// Modify the volume of the sample

short oldSample = getSample(samples, i);

short newSample = (short)(oldSample * volume);

setSample(samples, i, newSample);

}

lastVolume = newVolume;

}

}

Note the lazilyExit() method in the GameCore class: it uses multithreading, so we need to be careful when dealing with it. Next, we will write a SoundManager to deal with these system-level problems. This class provides functionality similar to the SimpleSoundPlayer class. SoundManager has an inner class, SoundPlayer, which copies sound data to a Line object. SoundPlayer implements the Runnable interface, so it can be run as a task in the thread pool. The other difference from SimpleSoundPlayer is that while SoundManager is in the paused state, SoundPlayer stops copying data and calls wait() to pause its thread, until SoundManager notifies all waiting threads that it is no longer paused.
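
The pause mechanism described here is the standard wait()/notifyAll() pattern. The sketch below isolates it in a hypothetical `PauseGate` class; the real SoundManager is not shown in this article, so the names and structure are assumptions.

```java
class PauseGate {

    private boolean paused;

    /** Pauses or resumes; resuming wakes every waiting thread. */
    public synchronized void setPaused(boolean paused) {
        this.paused = paused;
        if (!paused) {
            notifyAll();
        }
    }

    public synchronized boolean isPaused() {
        return paused;
    }

    /** Blocks the calling thread for as long as the gate is paused. */
    public synchronized void awaitUnpaused() throws InterruptedException {
        while (paused) { // the loop guards against spurious wakeups
            wait();
        }
    }

    public static void main(String[] args) throws InterruptedException {
        PauseGate gate = new PauseGate();
        gate.setPaused(true);

        Thread player = new Thread(() -> {
            try {
                gate.awaitUnpaused(); // a real player would copy data after this
                System.out.println("resumed");
            } catch (InterruptedException ex) {
                Thread.currentThread().interrupt();
            }
        });
        player.start();

        Thread.sleep(100);     // the player thread is blocked during this time
        gate.setPaused(false); // wake it up
        player.join();
    }
}
```

A player thread would call awaitUnpaused() before each write to the line, so pausing takes effect within one buffer's worth of audio.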

A thread-local variable is a variable that is local to a thread. We want SoundManager to give each thread its own Line object and byte buffer, so that they can be reused instead of being created every time a sound is played. To give every thread in the thread pool its own line and buffer, we use thread-local variables. Just as a local variable is local to a block of code, a thread-local variable has a different value for each thread. In this example, the SoundManager class has two thread-local variables, localLine and localBuffer. Each thread gets its own line and byte buffer, and other threads cannot access them. Thread-local variables are created with the ThreadLocal class. To make them work, we need to modify the ThreadPool class: we need a way to create the thread-local values when a thread starts and to clean them up when the thread dies. To achieve this, ThreadPool needs the following code:

public void run(){

threadStarted(); //Identifies that the thread has started

while(!isInterrupted()){

Runnable task = null; //Get a running task

try{

task = getTask();

}catch(InterruptedException e){ }

//If getTask() returned null or was interrupted, stop this thread

if(task == null){

break; //Jump out of circulation

}

//Otherwise, run the task without throwing an exception

try{

task.run();

}catch(Throwable t){

uncaughtException(this,t);

}

}

threadStopped();//Identifies the end of the thread

}

Local thread variable

In the ThreadPool class, the threadStarted() and threadStopped() methods do nothing, but they are useful in the SoundManager class. The former creates a new Line object and a new buffer and stores them in the thread-local variables; the latter closes and cleans up the Line object. Besides supporting pause, the SoundManager class also provides simple methods for playing sounds.
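
The behavior of thread-local variables can be seen in a few lines. This sketch uses ThreadLocal.withInitial() to create the buffer on first use, which is an assumption for brevity; a SoundManager could equally call set() inside threadStarted(). The class `ThreadLocalDemo` is hypothetical.

```java
class ThreadLocalDemo {

    // Each thread that calls localBuffer.get() receives its own array,
    // created on first use by the supplier below.
    static final ThreadLocal<byte[]> localBuffer =
        ThreadLocal.withInitial(() -> new byte[1024]);

    public static void main(String[] args) throws InterruptedException {
        byte[] mainBuffer = localBuffer.get();
        // A second call on the same thread returns the same array.
        System.out.println(mainBuffer == localBuffer.get());

        byte[][] seenByWorker = new byte[1][];
        Thread worker = new Thread(() -> seenByWorker[0] = localBuffer.get());
        worker.start();
        worker.join();
        // The worker thread received a different array.
        System.out.println(mainBuffer == seenByWorker[0]);

        // Release the value when the thread no longer needs it,
        // much as a threadStopped() hook would.
        localBuffer.remove();
    }
}
```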

Play music

Not every game has background music, but music plays an important role in games: it sets the mood and can signal where the plot is heading. For example, the music becomes more intense when the player fights a boss. Once we have decided what kind of music to use, how do we play it in the game? There are three main ways:

- Play CD audio tracks

- Play compressed music such as MP3 files

- Play MIDI music files

The first way gives good sound quality and is easy to implement. Its drawback is that CD audio takes up a lot of space: 30 MB holds only about three minutes of music, so four three-minute pieces take at least 120 MB. The second way is to play compressed files in MP3 or Ogg format. Its drawback is that decompressing the files takes a lot of CPU time. The solution is to use a dedicated Java decoder; such decoders can be downloaded from the www.javazoom.net website. The third way, MIDI, stores instructions rather than samples, so the music is synthesized during playback. The files are very small; the drawback is that the sound quality can suffer. To address this, we can use the soundbank of the JDK.
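
To illustrate what "instructions rather than samples" means, the sketch below builds a tiny MIDI sequence in memory with the javax.sound.midi package. The class `MidiSketch`, the note and the timing values are arbitrary choices for illustration, and play() only works on a system where a default sequencer is available.

```java
import javax.sound.midi.InvalidMidiDataException;
import javax.sound.midi.MidiEvent;
import javax.sound.midi.MidiSystem;
import javax.sound.midi.MidiUnavailableException;
import javax.sound.midi.Sequence;
import javax.sound.midi.Sequencer;
import javax.sound.midi.ShortMessage;
import javax.sound.midi.Track;

class MidiSketch {

    /** Builds a one-note sequence: middle C held for one quarter note. */
    static Sequence oneNote() throws InvalidMidiDataException {
        // 480 ticks per quarter note
        Sequence sequence = new Sequence(Sequence.PPQ, 480);
        Track track = sequence.createTrack();
        // Instructions, not samples: note on at tick 0, note off at tick 480.
        track.add(new MidiEvent(
            new ShortMessage(ShortMessage.NOTE_ON, 0, 60, 93), 0));
        track.add(new MidiEvent(
            new ShortMessage(ShortMessage.NOTE_OFF, 0, 60, 0), 480));
        return sequence;
    }

    /** Plays a sequence on the default sequencer, if the system has one. */
    static void play(Sequence sequence)
            throws MidiUnavailableException, InvalidMidiDataException,
                   InterruptedException {
        Sequencer sequencer = MidiSystem.getSequencer();
        sequencer.open();
        try {
            sequencer.setSequence(sequence);
            sequencer.start();
            while (sequencer.isRunning()) {
                Thread.sleep(50);
            }
        } finally {
            sequencer.close();
        }
    }

    public static void main(String[] args) throws Exception {
        play(oneNote());
    }
}
```

The whole piece of music here is two short messages, which is why MIDI files are so much smaller than sampled audio.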

Summary

Java encapsulates the underlying hardware thoroughly, so developing games in Java can be a relaxed and pleasant experience.

I hope the explanation of the relevant technical terms helps. If anything is missing or wrong, corrections and additions are welcome.