At the request of developers, we worked overtime to launch the second plug-in supported by RT-AK, which adds support for development boards based on the K210 chip.

At present, RT-AK runs normally on the Canaan Kendryte KD233 and the Yahboom YB-DKA01.

Note 1: We are committed to providing step-by-step, beginner-friendly tutorials.

Note 2: The RT-AK K210 plug-in repository: https://github.com/RT-Thread/RT-AK-plugin-k210

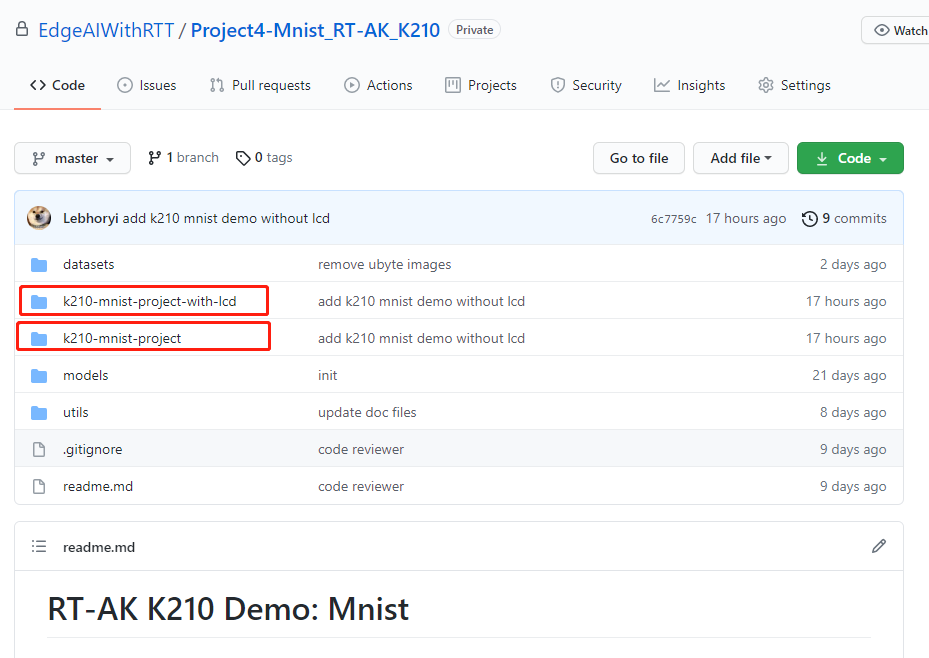

Note 3: The project code used in this article: https://github.com/EdgeAIWithRTT/Project4-Mnist_RT-AK_K210

The example walked through in this article uses neither the camera nor the LCD. The project repository also contains examples that use the LCD.

Clone the repository and it is ready to use. Forks, stars, and watches are welcome~

1. Preparations before running RT-AK

Prepare the following items:

| No. | Item | Example |
|---|---|---|
| 1 | Hardware, BSP, and cross-compilation toolchain | K210 BSP; xpack-riscv-none-embed-gcc-8.3.0-1.2 |
| 2 | RT-AK | RT-AK code cloned to your local machine |
| 3 | Neural network model | ./rt_ai_tools/Models/mnist.tflite |
| 4 | Official K210 tools | NNCase: model conversion tool; K-Flash: firmware download tool |

1.1 BSP

- Hardware

  Canaan Kendryte KD233 or Yahboom YB-DKA01, or another development board based on the K210 chip (you may need a customized BSP; please contact us)

- BSP

  We have prepared a BSP. Download address: http://117.143.63.254:9012/www/RT-AK/sdk-bsp-k210.zip

- Cross-compilation toolchain (Windows)

  xpack-riscv-none-embed-gcc-8.3.0-1.2-win32-x64.zip | Version: v8.3.0-1.2

1.2 RT-AK preparation

Clone RT-AK to your local machine:

    $ git clone https://github.com/RT-Thread/RT-AK.git edge-ai

1.3 Neural network model

The official K210 tool supports only three model formats: TFLite, Caffe, and ONNX.

The Keras neural network model was therefore converted to a TFLite model in advance. It is located at RT-AK/rt_ai_tools/Models/mnist.tflite

If you cannot find the model, update your RT-AK repository.

Alternatively, it is available here:

https://github.com/EdgeAIWithRTT/Project5-Mnist_RT-AK_K210/tree/master/models

1.4 original tools

-

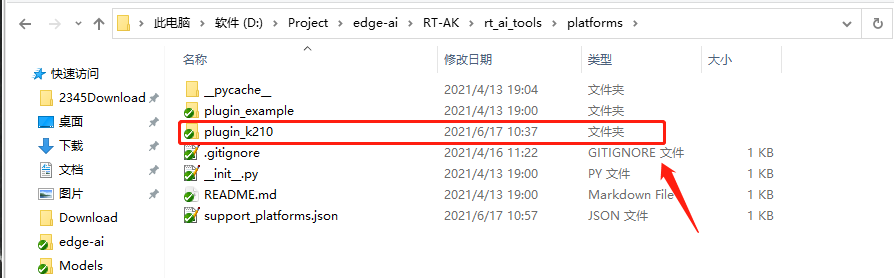

NNCase: it has been downloaded in advance and is located in RT-AK/rt_ai_tools/platforms/plugin_k210/k_tools path

-

K-Flash burning tool, please select K-Flash to download zip

Github download address: https://github.com/kendryte/kendryte-flash-windows/releases

2. Execution steps

RT-AK automatically invokes the NNCase model conversion tool and produces a BSP with the AI model integrated.

For the internal process, see the source code or the readme document in the plugin_k210 repository.

2.1 Basic command

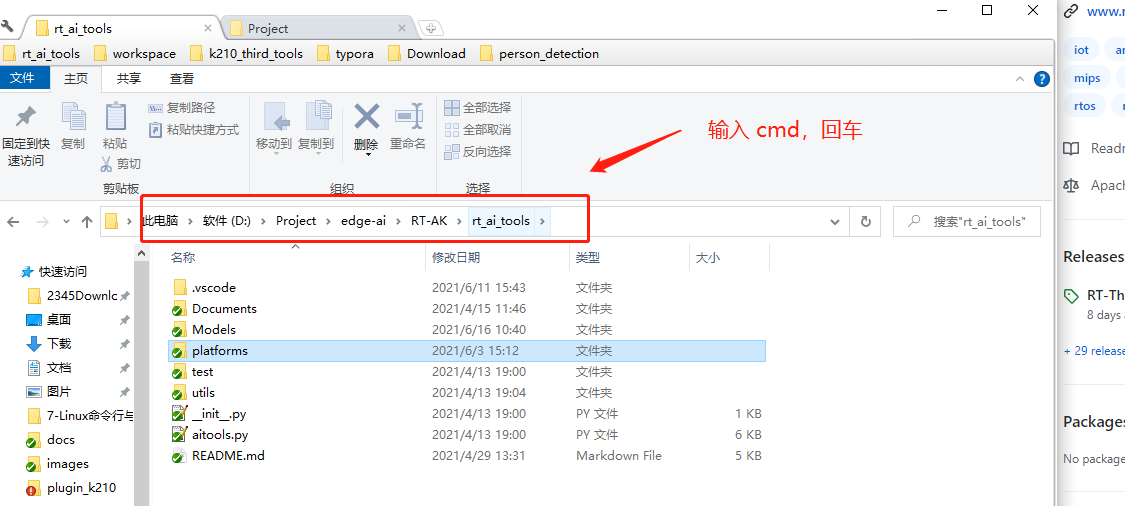

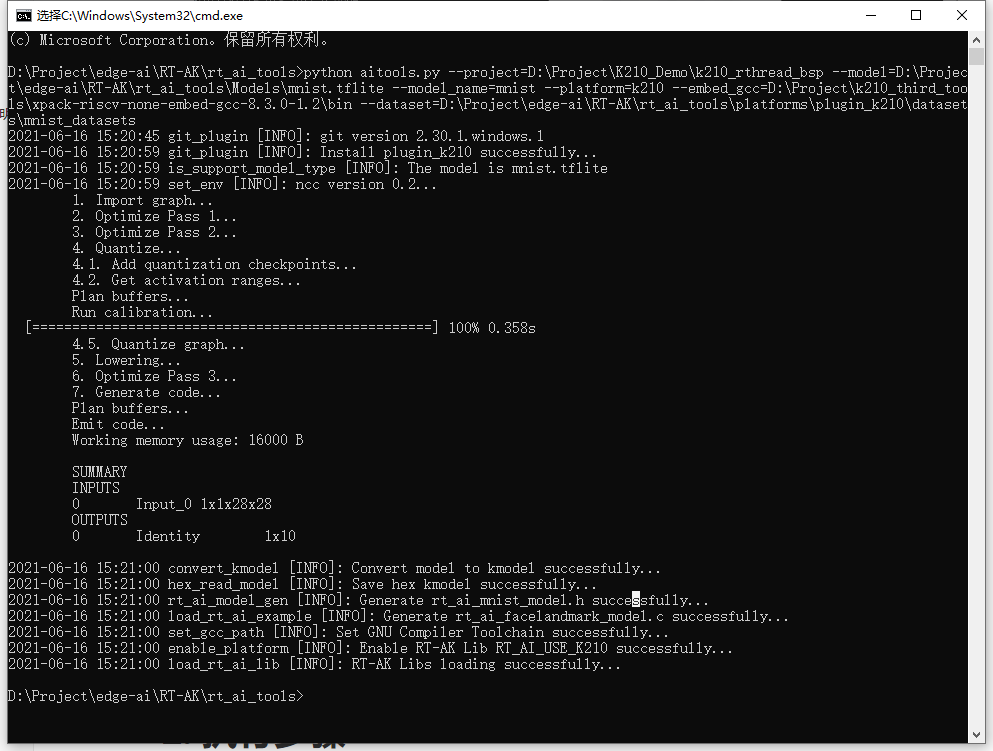

Please run the program in the path of edge AI / rtak / tools.

During RT-AK operation

- The warehouse of K210 plug-in will be automatically pulled to RT-AK/rt_ai_tools/platforms path

- AI models will be integrated on the basis of BSP, excluding application codes such as model reasoning. See the following for application codes

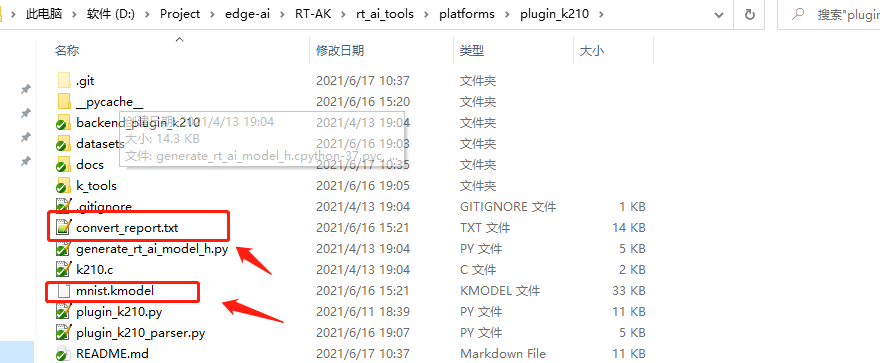

- At rt-ak / RT_ ai_ tools/platforms/plugin_ < model will be generated under k210 path_ name>. Kmodel and convert_report.txt two files

- <model_ name>. Kmodel model after conversion of kmodel AI model

- convert_ report. Txt log of AI model conversion process

    # Basic command
    python aitools.py --project=<your_project_path> --model=<your_model_path> --model_name=<your_model_name> --platform=k210 --clear

    # Example
    $ D:\Project\edge-ai\RT-AK\rt_ai_tools>python aitools.py --project=D:\Project\K210_Demo\k210_rthread_bsp --model=.\Models\mnist.tflite --model_name=mnist --platform=k210 --embed_gcc=D:\Project\k210_third_tools\xpack-riscv-none-embed-gcc-8.3.0-1.2\bin --dataset=.\platforms\plugin_k210\datasets\mnist_datasets

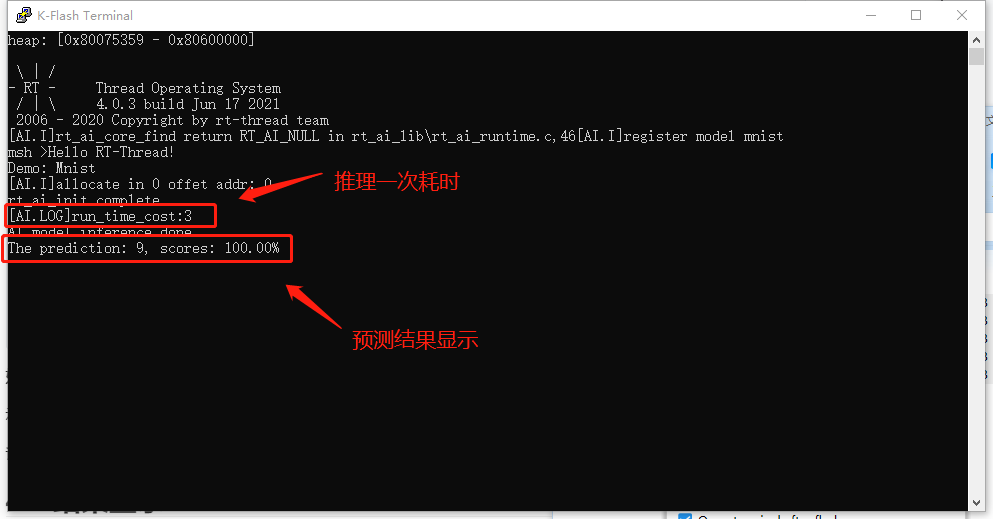

Example Demo of K210 plug-in of RT-AK runs successfully. Interface:

2.2 Additional command examples

    # Non-quantized, without KPU acceleration: --inference_type
    $ python aitools.py --project=<your_project_path> --model=<your_model_path> --platform=k210 --inference_type=float

    # Non-quantized, with the cross-compilation toolchain path specified
    $ python aitools.py --project=<your_project_path> --model=<your_model_path> --platform=k210 --embed_gcc=<your_RISCV-GNU-Compiler_path> --inference_type=float

    # Quantized to uint8, accelerated by the KPU, with images as the quantization dataset
    $ python aitools.py --project=<your_project_path> --model=<your_model_path> --platform=k210 --embed_gcc=<your_RISCV-GNU-Compiler_path> --dataset=<your_val_dataset>

    # Quantized to uint8, accelerated by the KPU, with non-image data such as audio as the quantization dataset: --dataset_format
    $ python aitools.py --project=<your_project_path> --model=<your_model_path> --platform=k210 --embed_gcc=<your_RISCV-GNU-Compiler_path> --dataset=<your_val_dataset> --dataset_format=raw

    # Example (quantized model, image dataset)
    $ python aitools.py --project="D:\Project\k210_val" --model="./Models/facelandmark.tflite" --model_name=facelandmark --platform=k210 --embed_gcc="D:\Project\k210_third_tools\xpack-riscv-none-embed-gcc-8.3.0-1.2\bin" --dataset="./platforms/plugin_k210/datasets/images"

2.3 Parameter description

RT-AK parameters fall into two groups:

- Basic parameters

- K210 plug-in parameters

For basic parameters, see section 0x03 (parameter description) at https://github.com/RT-Thread/RT-AK/tree/main/RT-AK/rt_ai_tools

For plug-in parameters, see section 3 (detailed description of command line parameters) at https://github.com/RT-Thread/RT-AK-plugin-k210/README.md

Parameters used in the example commands above:

| Parameter | Description |
|---|---|
| --project | OS+BSP project folder. Empty by default; must be specified by the user |
| --model | Path to the neural network model file. Default: ./Models/keras_mnist.h5 |
| --model_name | Name of the model after conversion. Default: network |
| --platform | Target platform. stm32 and k210 are currently supported. Default: example. The available target platforms are those registered in platforms/xxx.json |
| --embed_gcc | Path to the cross-compilation toolchain. Optional. If given, the rt_config.py file is modified. If omitted, the toolchain path must be specified at compile time |
| --dataset | Dataset used during model quantization. Required only when --inference_type is set to uint8 |

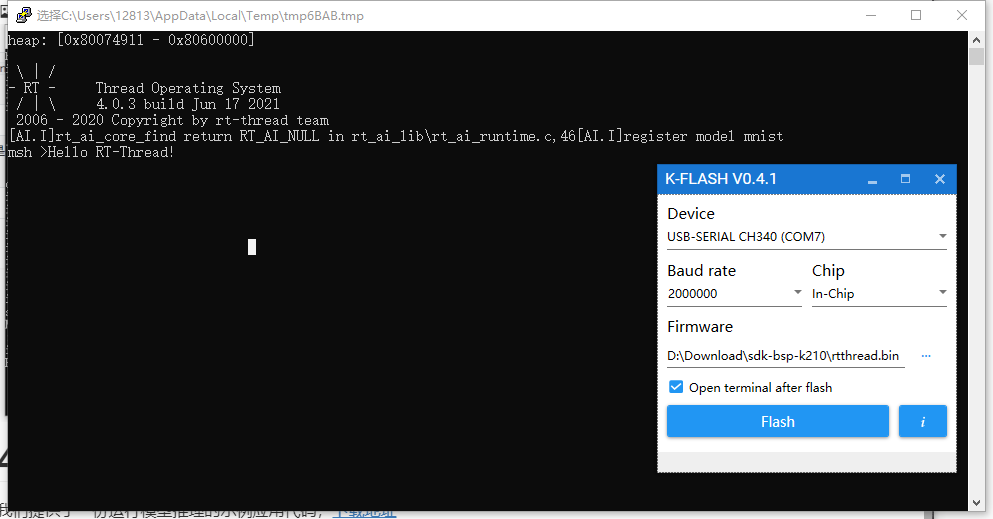

3. Compile & Download

If you passed the --embed_gcc parameter when running RT-AK, you can skip the following step:

- Set up the compilation environment

  - Method 1: set RTT_EXEC_PATH=<your_toolchains>

  - Method 2: edit the rtconfig.py file and add os.environ['RTT_EXEC_PATH'] = r'your_toolchains' at line 22

Compile:

    scons -j 6

If compilation succeeds, the rtthread.elf and rtthread.bin files are generated.

rtthread.bin is the file that must be written to the device.

Download:

Use K-Flash to write rtthread.bin to the board. After downloading, the display interface shows no change.

That is because RT-AK does not provide application code internally. Developers need to write their own code to run the AI model. A sample is provided at the end of this article.

4. Example application code description

We provide sample application code that runs model inference.

Download address: http://117.143.63.254:9012/www/RT-AK/mnist_app_k210.zip

Download it, unzip it, and place it in the <BSP>/applications path.

Then compile and flash.

4.1 Code flow

System initialization:

- System clock initialization

RT-AK Lib model loading and running:

- Register the model (done automatically by the generated code; no modification needed)

- Find the registered model

- Initialize the model, attach the model information, and prepare the runtime environment

- Run the model (inference)

- Get the output results

4.2 Core code

    // main.c

    /* Set CPU clock */
    sysctl_clock_enable(SYSCTL_CLOCK_AI);  // Enable system clock (system clock initialization)
    ...

    // The code that registers the model is at line 31 of rt_ai_mnist_model.c and is executed automatically
    // The model information is in the rt_ai_mnist_model.h file

    /* AI model inference */
    mymodel = rt_ai_find(MY_MODEL_NAME);  // Find the registered model
    if (rt_ai_init(mymodel, (rt_ai_buffer_t *)IMG9_CHW) != 0)  // Initialize the model and set the input data
    ...
    if (rt_ai_run(mymodel, ai_done, NULL) != 0)  // Run model inference once
    ...
    output = (float *)rt_ai_output(mymodel, 0);  // Get the model output

    /* Process the model output. This experiment is Mnist: the output is 10 probability values, and the class with the highest probability is selected */
    for (int i = 0; i < 10; i++)
    {
        // printf("pred: %d, scores: %.2f%%\n", i, output[i]*100);
        if (output[i] > scores && output[i] > 0.2)
        {
            prediction = i;
            scores = output[i];
        }
    }
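The selection loop above can also be factored into a small standalone function, which makes it easier to test off the board. The following is only a sketch: `pick_class` is a hypothetical helper and not part of RT-AK, and it assumes that `scores` in the sample starts at 0, so that a class is accepted only when its probability exceeds the 0.2 threshold.

```c
#include <assert.h>
#include <stddef.h>

/*
 * Hypothetical helper, not part of RT-AK: return the index of the highest
 * score that is strictly greater than `threshold`, or -1 if none qualifies.
 */
int pick_class(const float *scores, size_t count, float threshold)
{
    assert(scores != NULL);

    int best = -1;
    float best_score = threshold;  /* a candidate must beat the threshold first */

    for (size_t i = 0; i < count; i++) {
        if (scores[i] > best_score) {
            best = (int)i;
            best_score = scores[i];
        }
    }
    return best;
}
```

With such a helper, the loop in the sample could be written as `prediction = pick_class(output, 10, 0.2f);`, where a return value of -1 means that no digit was confident enough.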

How to replace the model input data:

The sample data is in the applications folder, and the model does not need to be retrained. Just change lines 18 and 51.
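The name IMG9_CHW suggests that the sample input is stored in channel-first (CHW) order. For a single-channel Mnist image the two layouts coincide, but if you substitute multi-channel image data stored in interleaved (HWC) order, a conversion along the following lines may be needed. This is only a sketch: `hwc_to_chw` is a hypothetical helper, not part of RT-AK, and the `uint8_t` element type is an assumption that should be checked against the input format your model actually expects.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>

/*
 * Hypothetical helper, not part of RT-AK: copy an interleaved (HWC) image
 * into channel-first (CHW) order. The uint8_t element type is an assumption.
 * `src` and `dst` must not overlap and must each hold
 * height * width * channels elements.
 */
void hwc_to_chw(const uint8_t *src, uint8_t *dst,
                size_t height, size_t width, size_t channels)
{
    assert(src != NULL && dst != NULL);

    for (size_t c = 0; c < channels; c++) {
        for (size_t y = 0; y < height; y++) {
            for (size_t x = 0; x < width; x++) {
                /* HWC index: (y * width + x) * channels + c */
                /* CHW index: (c * height + y) * width + x   */
                dst[(c * height + y) * width + x] =
                    src[(y * width + x) * channels + c];
            }
        }
    }
}
```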

The complete Mnist demo project, including training and data processing:

- Github: https://github.com/EdgeAIWithRTT/Project4-Mnist_RT-AK_K210

4.3 Results