Lifelong learning with you. This is Android Programmer.

This article covers Android's Input event pipeline as seen in Systrace. It contains the following parts:

1. Input overview
2. Input in Systrace
3. Key knowledge points and processes
4. Input refresh and Vsync
5. Input debug information

# 1. Input overview

In the article "Detailed explanation of Android rendering mechanism based on Choreographer", I mentioned that the main thread of an Android app is driven by Messages. A Message can be a recurring animation, a timed task, or a wake-up from another thread, but the most common kind is an Input message. Input here follows InputReader's classification: it includes not only touch events (Down, Up and Move) but also key events (Home key, Back key). This article focuses on touch events.

Android adds Trace points along the Input pipeline, and these are fairly complete. Some vendors add their own on top of them, but this article uses only the standard Trace points, so the traces you capture on your own phone should not differ from the ones shown here.

Input plays an important role in Android: when we use our phones, scrolling and navigating in most apps are driven by input events. I will introduce the Input mechanism itself in a later article; here we look at Input from the perspective of Systrace. Before going through the process below, keep the following general Input flow in mind and map what you see onto it:

- The touch screen scans every few milliseconds. When it detects a touch, it reports the event to the corresponding driver.

- InputReader reads the touch event and hands it to InputDispatcher for dispatching.

- InputDispatcher sends the touch event to the app (window) that has registered to receive Input events.

- The app receives the event and dispatches it internally. If its UI changes during dispatching, it requests a Vsync and draws a frame.
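To make the four steps concrete, here is a toy, single-process model in C++. Every type and function name is hypothetical and none of it is AOSP code. In Android the stages are spread across the kernel driver, the InputReader and InputDispatcher threads in SystemServer, and the app process. The rule that "a MOVE which changes position changes the UI" is also an assumption made only for this toy.

```cpp
#include <cassert>

// Toy model of the four steps above; every name here is hypothetical.
enum class Action { Down, Move, Up };

struct TouchSample {  // what the touch screen driver reports
    Action action;
    float x;
    float y;
};

struct App {
    int handledCount = 0;
    bool vsyncRequested = false;
    float lastY = 0.0f;

    // Step 4: the app dispatches the event; a UI change requests a Vsync.
    void onInputEvent(const TouchSample& s) {
        ++handledCount;
        // Toy assumption: only a MOVE that changes position changes the UI.
        bool uiChanged = (s.action == Action::Move && s.y != lastY);
        lastY = s.y;
        if (uiChanged) {
            vsyncRequested = true;  // a new frame will be drawn on the next Vsync
        }
    }
};

struct Dispatcher {
    App* target = nullptr;  // the window registered to receive input

    // Step 3: send the event to the registered app.
    void dispatch(const TouchSample& s) {
        assert(target != nullptr);
        target->onInputEvent(s);
    }
};

struct Reader {
    Dispatcher* dispatcher = nullptr;

    // Steps 1-2: take the sample reported by the driver and hand it to the dispatcher.
    void onDriverEvent(const TouchSample& s) {
        assert(dispatcher != nullptr);
        dispatcher->dispatch(s);
    }
};
```

In this toy model, a DOWN followed by a MOVE to a new position leaves `vsyncRequested` set. The real pipeline crosses threads and processes at each arrow, and that is exactly what Systrace makes visible.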

When reading Systrace, also keep in mind that time flows from left to right. If you draw a vertical line on Systrace, everything to the left of it happened before everything to the right. This is the cornerstone of our source-code analysis. I hope that after following this Systrace-based walkthrough you will carry a visual flow chart in your head and can quickly locate the relevant code.

# 2. Input in Systrace

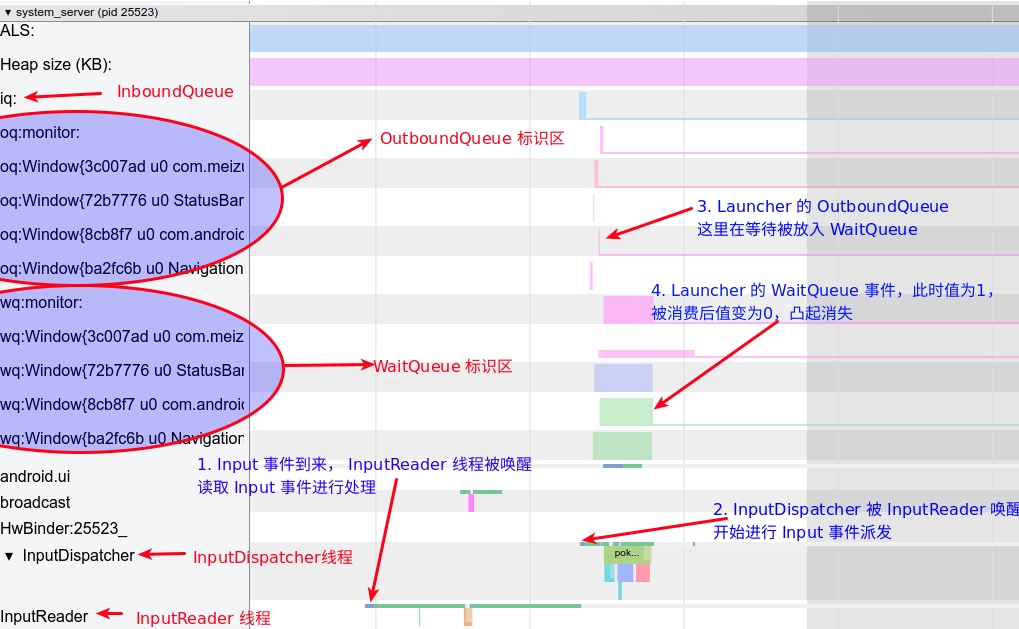

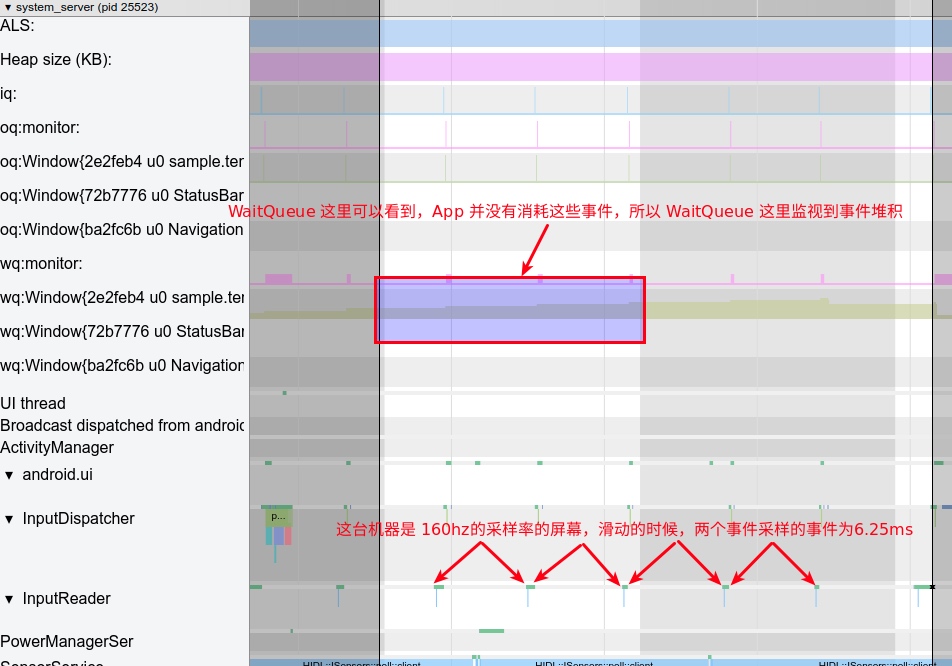

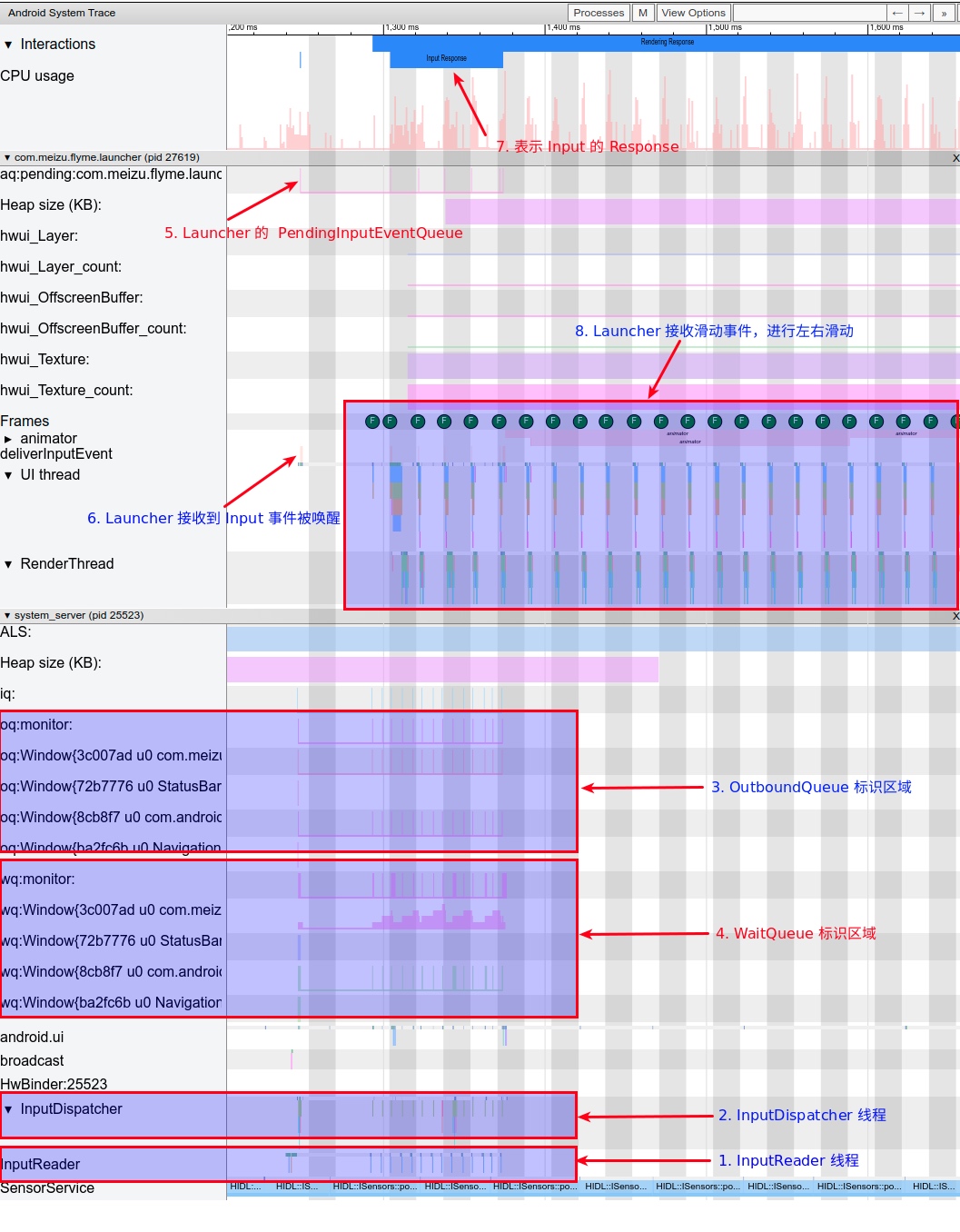

The overview diagram above takes sliding the desktop as an example. A slide consists of one Input_Down event, several Input_Move events and one Input_Up event. These events and their flow all show up in Systrace, which makes them an important entry point for analysis. The main modules involved are SystemServer and the app. Event flow information is marked in blue and auxiliary information in red.

InputReader and InputDispatcher are two native threads running in SystemServer, responsible for reading and dispatching Input events respectively. When analyzing the Input event flow in Systrace, start by finding these two threads. The labels in the figure above are briefly described below.

## 1. Systrace description

- **InputReader**: reads Input events from EventHub and hands them to InputDispatcher for dispatching.

- **InputDispatcher**: wraps the events received from InputReader and dispatches them (that is, sends them to the corresponding app).

- **OutboundQueue**: holds the events about to be dispatched to the corresponding AppConnection.

- **WaitQueue**: records events that have already been sent to an AppConnection but that the app is still processing and has not yet reported as handled.

- **PendingInputEventQueue**: records the Input events the app needs to process. Events appearing here have reached the application process.

- **deliverInputEvent**: marks the app's UI thread being woken up by an Input event.

- **InputResponse**: marks the Input event region, where one Input_Down event, several Input_Move events and one Input_Up event are all counted.

- **App responds to Input events**: this trace shows the familiar slide-and-release gesture on the desktop. The desktop content follows the finger, and after the finger lifts, a fling continues the scroll. Systrace shows the whole process.

Let's start with the first Input_Down event and walk through its processing flow in detail. Move and Up events are processed the same way (there are some differences, but they matter little here).

## 2. Workflow of the Input_Down event in SystemServer

Zoom in on the SystemServer part to see its workflow (marked in blue). The desktop slide consists of Input_Down + several Input_Move + Input_Up. Here we look at the Input_Down event.

## 3. Workflow of the Input_Down event in the App

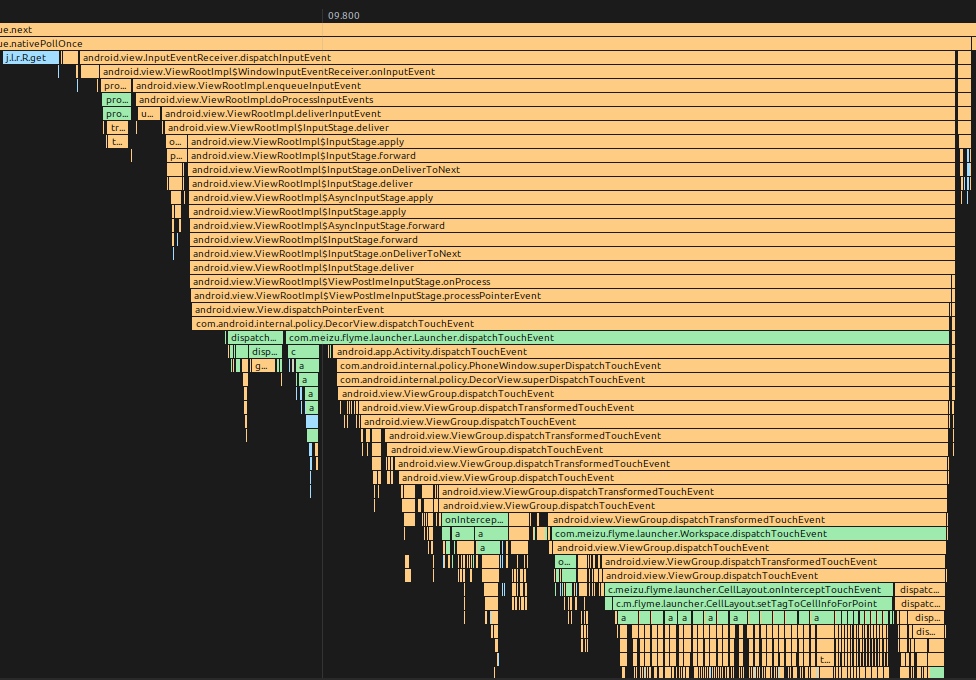

After receiving an Input event, the app sometimes processes it immediately (without waiting for Vsync) and sometimes only after the Vsync signal arrives. The Input_Down event here wakes the main thread directly for processing, so its Systrace is relatively simple: the Input event queue is at the top, and the main thread simply processes the event.

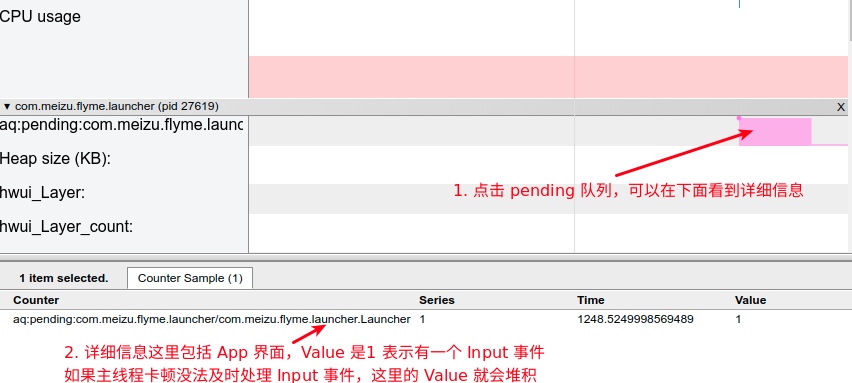
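The difference between "handle immediately" and "handle after Vsync" can be sketched in C++. This is a hypothetical model, not Android's actual app-side input classes. It assumes that Down and Up are handled as soon as they arrive, that Move samples are held until the next Vsync, and that the frame uses only the most recent held position. In real Android, older samples are kept as historical points in the batched MotionEvent rather than thrown away.

```cpp
#include <cassert>
#include <optional>
#include <vector>

// Hypothetical model of app-side input timing (not Android's actual classes):
// DOWN/UP are handled as soon as they arrive, MOVE samples wait for Vsync.
enum class Action { Down, Move, Up };

struct Sample {
    Action action;
    float y;
};

class AppInputQueue {
public:
    // An event arrives from InputDispatcher.
    // Returns true if the main thread handled something immediately.
    bool onEventArrived(const Sample& s) {
        if (s.action == Action::Move) {
            pendingMoves_.push_back(s);  // wait for the next Vsync
            return false;
        }
        flushPendingMoves();    // keep order: earlier MOVEs are handled first
        handled_.push_back(s);  // DOWN/UP: wake the main thread right away
        return true;
    }

    // Vsync arrives: the frame uses only the most recent pending MOVE.
    std::optional<Sample> onVsync() { return flushPendingMoves(); }

    const std::vector<Sample>& handled() const { return handled_; }

private:
    std::optional<Sample> flushPendingMoves() {
        if (pendingMoves_.empty()) {
            return std::nullopt;
        }
        Sample latest = pendingMoves_.back();
        pendingMoves_.clear();
        handled_.push_back(latest);
        return latest;
    }

    std::vector<Sample> pendingMoves_;
    std::vector<Sample> handled_;
};
```

For example, if a DOWN arrives, then two MOVEs at y = 110 and y = 120 before the next Vsync, `onEventArrived` returns true only for the DOWN. The following `onVsync()` yields only the sample at y = 120.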

## 4. App Pending queue

## 5. The main thread handles Input events

Input handling on the main thread is familiar territory. The call stack below shows that Input events are passed to ViewRootImpl, then to DecorView, and from there into the familiar Input event dispatch mechanism.

# 3. Key knowledge points and processes

From the Systrace above, the basic flow of Input events is as follows:

- InputReader reads Input events

- InputReader puts the Input events it reads into the InboundQueue

- InputDispatcher takes each Input event out of the InboundQueue and sends it to the OutboundQueue of each app (connection)

- **At the same time, it records the event in the WaitQueue of each app (connection)**

- The app receives the Input event, records it in its PendingQueue, and then dispatches the event

- After the app finishes processing, it notifies InputManagerService through a callback, which removes the corresponding Input event from that connection's WaitQueue
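The outboundQueue to waitQueue to "finished" lifecycle of a single connection can be modeled in C++ as shown below. The class and method names are hypothetical simplifications that reuse the queue names above. The socket send is omitted, and a sequence number stands in for the "finished" signal that the app sends back.

```cpp
#include <cassert>
#include <cstdint>
#include <deque>

// Hypothetical, simplified model of one app connection inside InputDispatcher.
// Names echo the text (outboundQueue, waitQueue); this is not AOSP code.
struct DispatchEntrySketch {
    std::uint32_t seq;            // sequence number the app echoes back when done
    std::int64_t deliveryTimeMs;  // when the entry was sent to the app
};

class ConnectionSketch {
public:
    // Like enqueueDispatchEntryLocked(): the event joins the outbound queue.
    void enqueue(std::uint32_t seq) { outboundQueue.push_back({seq, 0}); }

    // Like startDispatchCycleLocked(): send each outbound entry to the app
    // (over the InputChannel socket in the real system, omitted here) and
    // move it into the wait queue.
    void startDispatchCycle(std::int64_t nowMs) {
        while (!outboundQueue.empty()) {
            DispatchEntrySketch entry = outboundQueue.front();
            outboundQueue.pop_front();
            entry.deliveryTimeMs = nowMs;
            waitQueue.push_back(entry);
        }
    }

    // The app reported "finished" for seq: remove that entry from the wait queue.
    // Returns false if seq is not waiting (unknown or already finished).
    bool finish(std::uint32_t seq) {
        for (auto it = waitQueue.begin(); it != waitQueue.end(); ++it) {
            if (it->seq == seq) {
                waitQueue.erase(it);
                return true;
            }
        }
        return false;
    }

    std::deque<DispatchEntrySketch> outboundQueue;
    std::deque<DispatchEntrySketch> waitQueue;
};
```

An entry therefore sits in exactly one queue at a time. A growing `waitQueue` means the app is receiving events faster than it reports them as finished.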

Through the above process, an Input event is consumed. (This is only the normal path. There are many exceptions and details that I won't elaborate on here; you can dig into them when studying the relevant code.) The rest of this section picks out some important points from this key flow and explains them. Some processes and diagrams refer to or reuse those from Gityuan's blog; the link is in the section at the bottom.

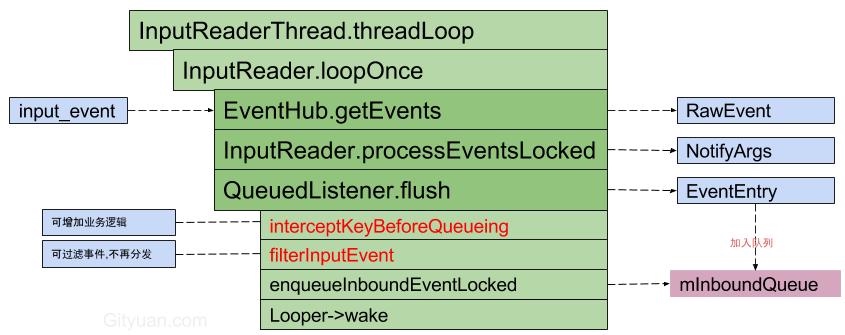

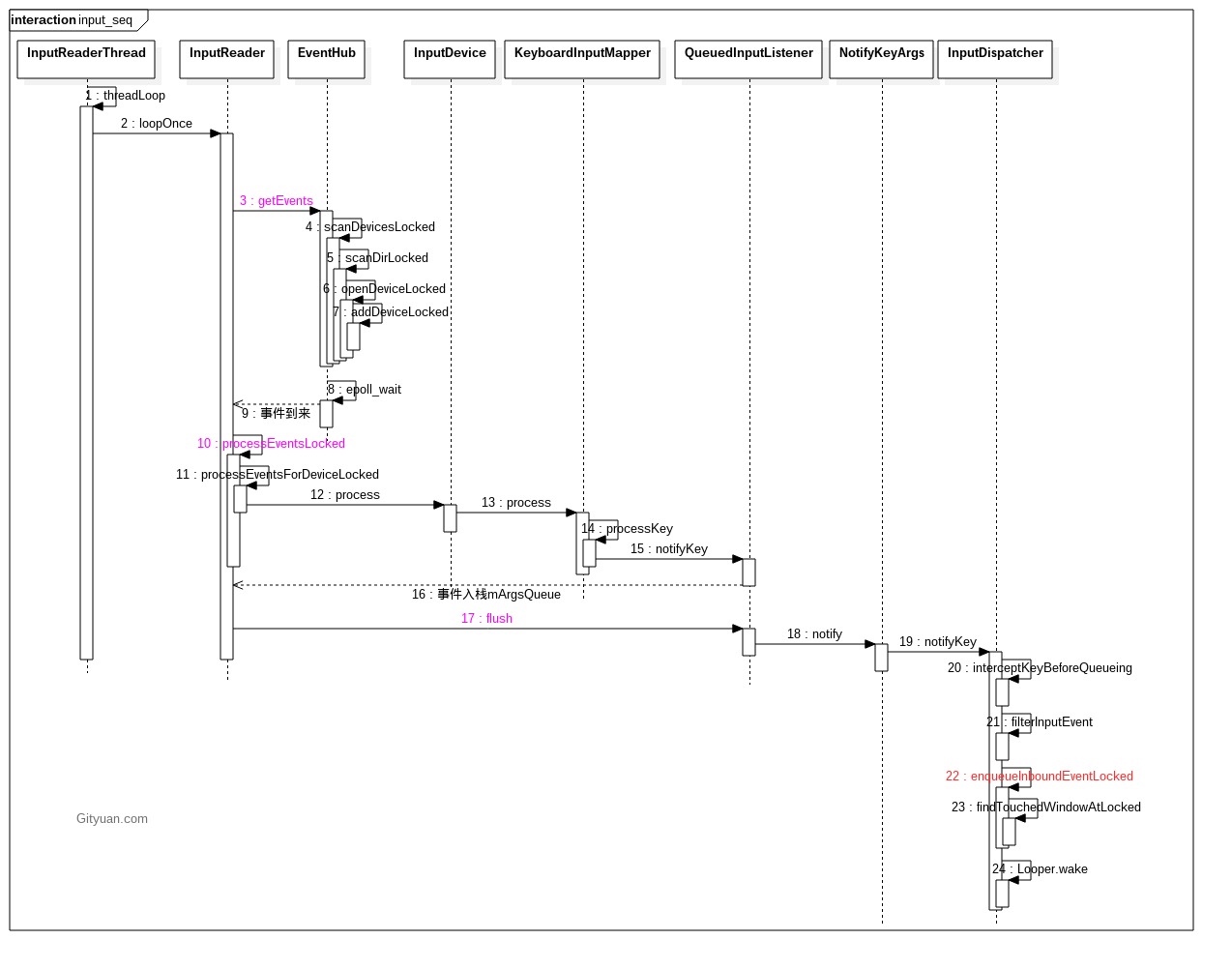

## 1. InputReader

InputReader is a native thread running in the SystemServer process. Its core job is to read events from EventHub, process them, and send the processed events to InputDispatcher.

Each iteration of the InputReader loop works as follows:

- getEvents: reads events through EventHub (which listens on the /dev/input directory) into mEventBuffer, an array of 256 entries, converting each input_event into a RawEvent

- processEventsLocked: processes the events, converting RawEvent -> NotifyKeyArgs (NotifyArgs)

- QueuedListener->flush: sends the events to the InputDispatcher thread, converting NotifyKeyArgs -> KeyEntry (EventEntry)

The logic of InputReader's core loop function, loopOnce, is as follows:


```cpp
void InputReader::loopOnce() {
    int32_t oldGeneration;
    int32_t timeoutMillis;
    bool inputDevicesChanged = false;
    std::vector<InputDeviceInfo> inputDevices;
    { // acquire lock
        // ......
    } // release lock

    // Get input events and device add/remove events; count is the number of events read
    size_t count = mEventHub->getEvents(timeoutMillis, mEventBuffer, EVENT_BUFFER_SIZE);

    { // acquire lock
        // ......
        if (count) { // Handle the events
            processEventsLocked(mEventBuffer, count);
        }
    } // release lock

    // ......

    // Pass the queued events on to InputDispatcher (the listener behind mQueuedListener)
    mQueuedListener->flush();
}
```
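If we strip out locking and device management, one iteration of the loop above can be sketched as follows. All types are hypothetical stand-ins: the real RawEvent, NotifyArgs and queued listener carry much more. Only the 256-entry buffer size comes from the text.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

// Hypothetical sketch of one loopOnce() iteration, without locks or device
// management. The types are simplified stand-ins, not AOSP's definitions.
constexpr std::size_t EVENT_BUFFER_SIZE = 256;  // mEventBuffer holds 256 entries

struct RawEvent   { int type; int code; int value; };
struct NotifyArgs { int code; int value; };

// Stand-in for InputDispatcher: collects what the reader flushes to it.
struct DispatcherSketch {
    std::vector<NotifyArgs> inbound;
    void notify(const NotifyArgs& args) { inbound.push_back(args); }
};

// Queues NotifyArgs while events are processed and hands them all over in flush().
struct QueuedListenerSketch {
    DispatcherSketch* inner;
    std::vector<NotifyArgs> queued;

    void notify(const NotifyArgs& args) { queued.push_back(args); }
    void flush() {
        assert(inner != nullptr);
        for (const NotifyArgs& args : queued) inner->notify(args);
        queued.clear();
    }
};

// Returns how many raw events were consumed; the rest are left for a later iteration.
std::size_t loopOnceSketch(const std::vector<RawEvent>& pending, QueuedListenerSketch& listener) {
    RawEvent buffer[EVENT_BUFFER_SIZE];
    std::size_t count = 0;
    // "getEvents": fill the fixed-size buffer.
    for (const RawEvent& event : pending) {
        if (count == EVENT_BUFFER_SIZE) break;
        buffer[count++] = event;
    }
    // "processEventsLocked": convert each RawEvent into NotifyArgs.
    for (std::size_t i = 0; i < count; ++i) {
        listener.notify({buffer[i].code, buffer[i].value});
    }
    // "mQueuedListener->flush()": deliver everything to the dispatcher at once.
    listener.flush();
    return count;
}
```

The queued listener explains why, in Systrace, InputDispatcher is woken once per loop iteration rather than once per raw event. If 300 raw events are pending, this sketch consumes 256 of them and delivers them in a single flush.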

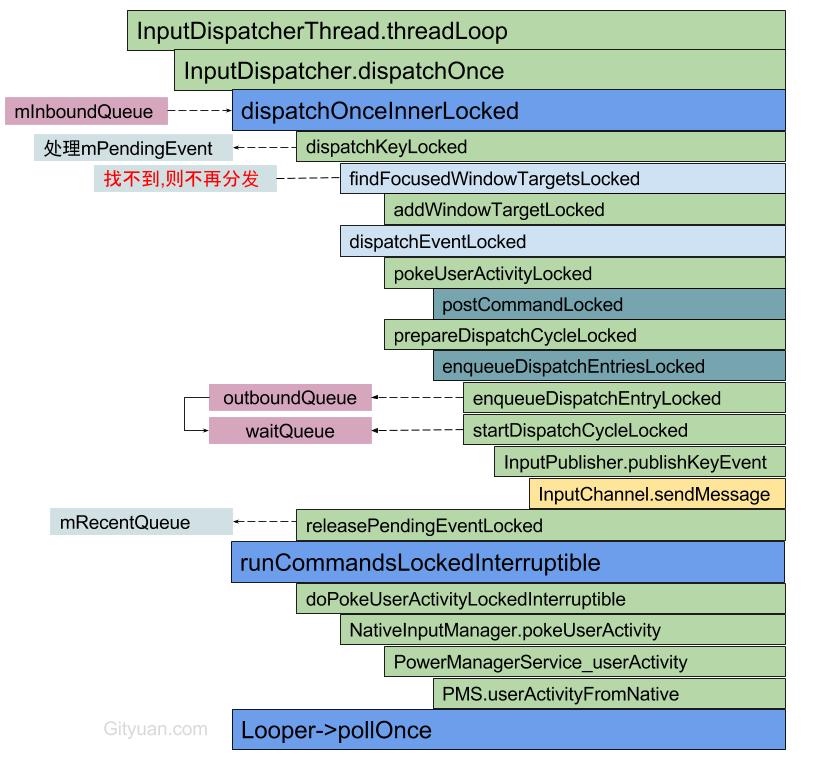

## 2. InputDispatcher

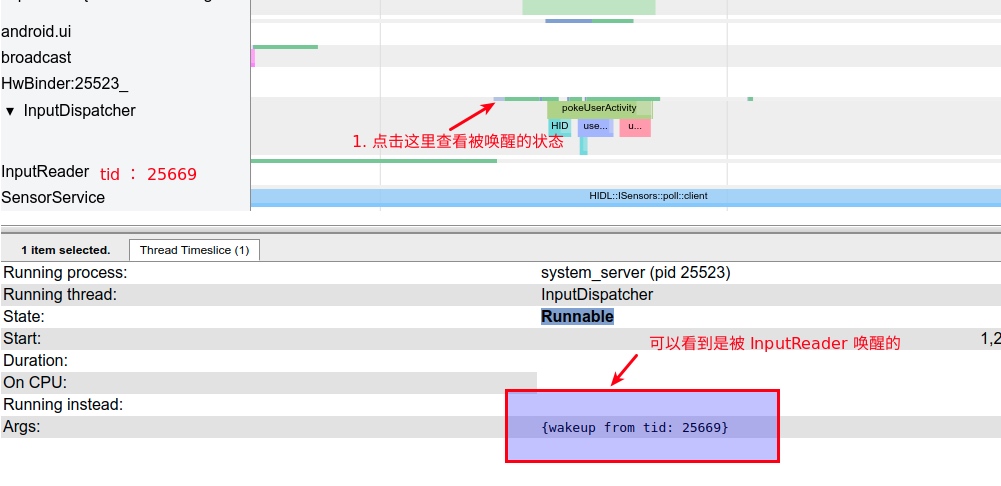

When InputReader calls mQueuedListener->flush(), the Input event is added to InputDispatcher's mInboundQueue and InputDispatcher is woken up. The wake-up information in Systrace also shows that the InputDispatcher thread is woken by InputReader.

The core logic of InputDispatcher is as follows:

- dispatchOnceInnerLocked(): takes an EventEntry from InputDispatcher's mInboundQueue. The time at which this method starts executing is also used as the delivery time of the subsequent DispatchEntry.

- dispatchKeyLocked(): when certain conditions are met, adds the command doInterceptKeyBeforeDispatchingLockedInterruptible.

- enqueueDispatchEntryLocked(): creates a DispatchEntry and adds it to the connection's outboundQueue.

- startDispatchCycleLocked(): takes the DispatchEntry out of the outboundQueue and moves it into the connection's waitQueue.

- InputChannel.sendMessage(): sends the message to the remote (app) process through a socket.

- runCommandsLockedInterruptible(): loops over mCommandQueue and runs every command in turn. Commands are added to mCommandQueue through postCommandLocked().
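The postCommandLocked() / runCommandsLockedInterruptible() pair follows a deferred-command pattern. While the dispatcher lock is held, work is queued as a command, and later the queue is drained in FIFO order. The C++ sketch below is a hypothetical simplification that borrows the two method names; it is not AOSP's implementation, and it leaves out the locking itself.

```cpp
#include <cassert>
#include <deque>
#include <functional>
#include <utility>

// Hypothetical, simplified model of InputDispatcher's command queue:
// work is posted as a command and executed later, in FIFO order. Not AOSP code.
class CommandQueueSketch {
public:
    using Command = std::function<void()>;

    // In the spirit of postCommandLocked(): append a command to mCommandQueue.
    void postCommandLocked(Command cmd) {
        assert(cmd);  // an empty std::function would throw when called
        mCommandQueue.push_back(std::move(cmd));
    }

    // In the spirit of runCommandsLockedInterruptible(): drain the queue in order.
    // Commands may post further commands; those also run in this drain.
    // Returns the number of commands executed.
    int runCommandsLockedInterruptible() {
        int executed = 0;
        while (!mCommandQueue.empty()) {
            Command cmd = std::move(mCommandQueue.front());
            mCommandQueue.pop_front();  // pop before running so cmd may post more
            cmd();
            ++executed;
        }
        return executed;
    }

private:
    std::deque<Command> mCommandQueue;
};
```

Because each command is popped before it runs, a command can safely post a follow-up command. That follow-up runs after everything that was already queued.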

Its core processing logic is in dispatchOnceInnerLocked:

```cpp
void InputDispatcher::dispatchOnceInnerLocked(nsecs_t* nextWakeupTime) {
    nsecs_t currentTime = now();
    // ......

    // Ready to start a new event.
    // If we don't already have a pending event, go grab one.
    if (! mPendingEvent) {
        if (mInboundQueue.isEmpty()) {
            // ......
            // Nothing to do if there is no pending event.
            if (!mPendingEvent) {
                return;
            }
        } else {
            // Inbound queue has at least one entry.
            mPendingEvent = mInboundQueue.dequeueAtHead();
            traceInboundQueueLengthLocked();
        }

        // Poke user activity for this event.
        if (mPendingEvent->policyFlags & POLICY_FLAG_PASS_TO_USER) {
            pokeUserActivityLocked(mPendingEvent);
        }

        // Get ready to dispatch the event.
        resetANRTimeoutsLocked();
    }

    // Now we have an event to dispatch.
    bool done = false;
    DropReason dropReason = DROP_REASON_NOT_DROPPED;
    // ......

    switch (mPendingEvent->type) {
    // ......
    case EventEntry::TYPE_MOTION: {
        MotionEntry* typedEntry = static_cast<MotionEntry*>(mPendingEvent);
        // ......
        done = dispatchMotionLocked(currentTime, typedEntry,
                &dropReason, nextWakeupTime);
        break;
    }
    // ......
    }

    if (done) {
        if (dropReason != DROP_REASON_NOT_DROPPED) {
            dropInboundEventLocked(mPendingEvent, dropReason);
        }
        mLastDropReason = dropReason;

        releasePendingEventLocked();
        *nextWakeupTime = LONG_LONG_MIN; // force next poll to wake up immediately
    }
}
```

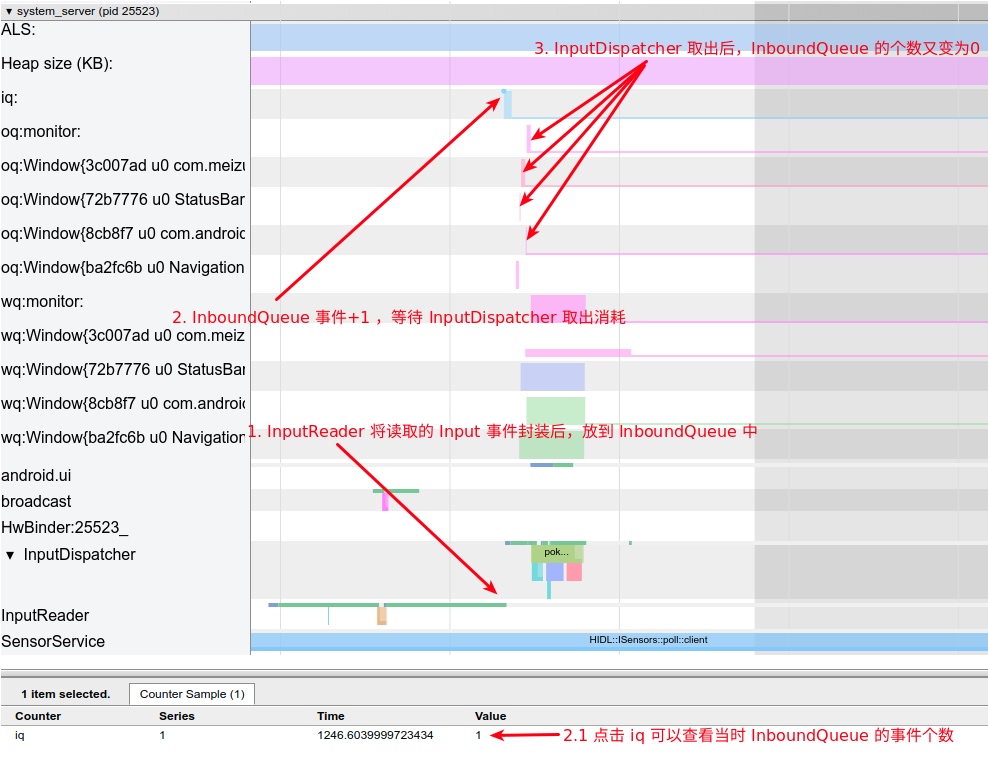

## 3. InboundQueue

When InputDispatcher executes notifyKey (notifyMotion for touch events), it wraps the Input event and puts it into the InboundQueue. In each later dispatch cycle, InputDispatcher takes the event out of the InboundQueue and processes it.
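This hand-off is a producer/consumer pattern. The InputReader thread enqueues into the InboundQueue and wakes the InputDispatcher thread, which then takes entries from the head. The C++ sketch below is hypothetical. AOSP wakes the dispatcher through its Looper, whereas this example uses `std::condition_variable` to stay self-contained. The names `notify` and `dequeueAtHead` only echo the text.

```cpp
#include <cassert>
#include <condition_variable>
#include <deque>
#include <mutex>
#include <string>
#include <utility>

// Hypothetical sketch of the InboundQueue hand-off between the reader thread
// (producer) and the dispatcher thread (consumer). Not AOSP code.
struct EventEntrySketch {
    std::string action;  // e.g. "DOWN", "MOVE", "UP"
};

class InboundQueueSketch {
public:
    // Producer side, in the spirit of notifyMotion(): enqueue, then wake the consumer.
    void notify(EventEntrySketch entry) {
        {
            std::lock_guard<std::mutex> lock(mLock);
            mInboundQueue.push_back(std::move(entry));
        }
        mWake.notify_one();
    }

    // Consumer side: sleep until the queue is non-empty, then take the head entry.
    EventEntrySketch dequeueAtHead() {
        std::unique_lock<std::mutex> lock(mLock);
        mWake.wait(lock, [this] { return !mInboundQueue.empty(); });
        EventEntrySketch entry = std::move(mInboundQueue.front());
        mInboundQueue.pop_front();
        return entry;
    }

private:
    std::mutex mLock;
    std::condition_variable mWake;
    std::deque<EventEntrySketch> mInboundQueue;
};
```

In a two-thread setup, a reader thread would call `notify()` and a dispatcher thread would loop on `dequeueAtHead()`. The wake-up between them is what Systrace shows as InputDispatcher being woken by InputReader.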

## 4. OutboundQueue

The OutboundQueue holds events waiting to be sent to an app. Each app (connection) has its own OutboundQueue. As the diagram in the InboundQueue section shows, an event first enters the InboundQueue and is then moved by InputDispatcher into the OutboundQueue of each target app.

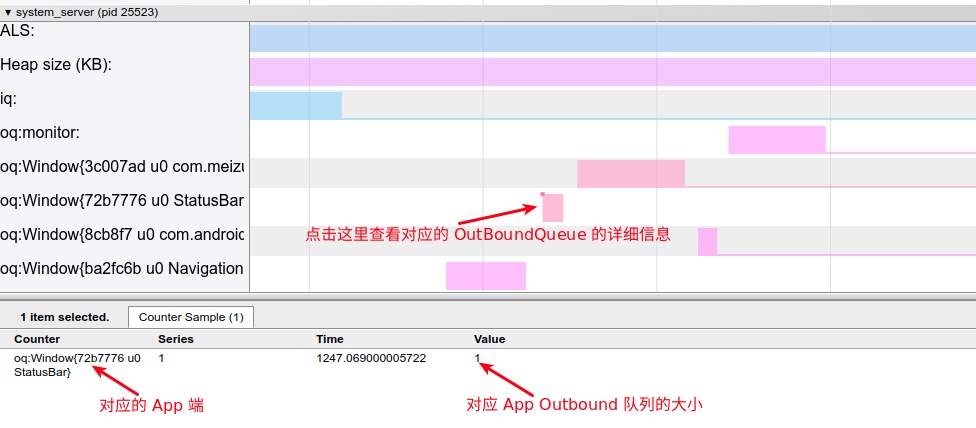

## 5. WaitQueue

After InputDispatcher dispatches an Input event, it moves the DispatchEntry from the outboundQueue into the waitQueue. When the published event has been processed (finished), InputManagerService receives a reply from the app and removes the event from the waitQueue. In Systrace, this shows up as the app's WaitQueue count decreasing.
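When the app does not reply, its entries stay in the WaitQueue and their waiting time keeps growing. The C++ sketch below is a hypothetical check in the spirit of ANR detection: if the oldest entry has waited at least the dispatching timeout, the app is treated as unresponsive. The timeout value is taken from the dumpsys output later in this article, which shows `dispatchingTimeout=5000.000ms`. AOSP's actual ANR logic involves more conditions, so this is only an illustration.

```cpp
#include <cassert>
#include <cstdint>
#include <deque>

// Hypothetical check in the spirit of ANR detection (not AOSP's logic):
// an app is treated as unresponsive when the oldest event in its wait
// queue has waited at least the dispatching timeout.
struct WaitingEvent {
    std::int64_t deliveryTimeMs;  // when the event was sent to the app
};

bool isUnresponsive(const std::deque<WaitingEvent>& waitQueue, std::int64_t nowMs,
                    std::int64_t timeoutMs = 5000) {
    assert(timeoutMs > 0);
    if (waitQueue.empty()) {
        return false;  // nothing outstanding: the app has answered everything
    }
    // Entries are appended in delivery order, so the head is the oldest one.
    std::int64_t waitedMs = nowMs - waitQueue.front().deliveryTimeMs;
    return waitedMs >= timeoutMs;
}
```

Only the head of the queue matters here. A stuck main thread shows up first as the head entry aging, while newer entries pile up behind it.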

If the main thread gets stuck, the Input event is not consumed in time, which will also be reflected in WaitQueue, as shown in the following figure:

## 6. Overall logic

Figure from Gityuan's blog:

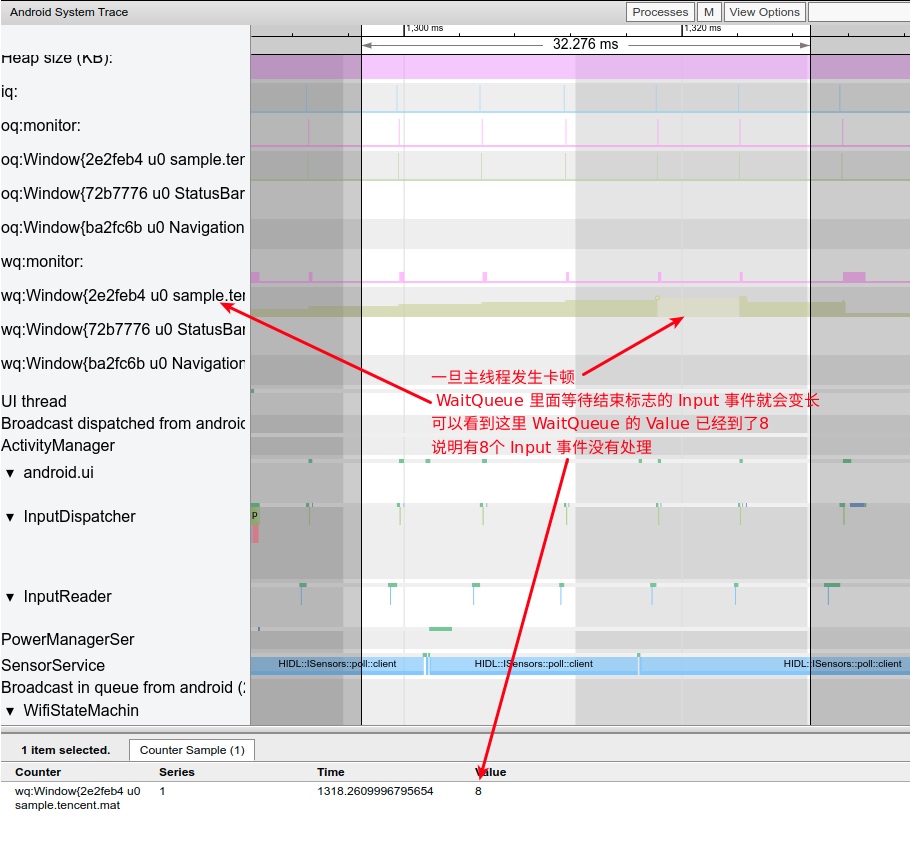

# 4. Input refresh and Vsync

Input refresh depends on the touch screen's sampling rate. At present, most touch screens sample at 120Hz or 160Hz, that is, once every 8.33ms or 6.25ms. Let's look at how this appears in Systrace.

As the figure above shows, InputReader reads a sample every 6.25ms and passes it to InputDispatcher for dispatch to the app. Is a higher sampling rate always better? Not necessarily. In the figure above, even though InputReader delivers a sample to InputDispatcher every 6.25ms, the WaitQueue shows that the app does not consume each Input event as soon as it arrives. Why?

The reason is that the app consumes these (Move) Input events only after the Vsync signal arrives. For a screen with a 60Hz refresh rate, the system usually also runs at 60fps, so the interval between two Vsyncs is about 16.7ms. If two or three Input events arrive during this interval, only the latest one is used to draw the frame, and the other one or two do not get a frame of their own. In other words:

- When the screen refresh rate and system FPS are both 60, blindly increasing the touch sampling rate has little effect. On the contrary, as the figure above shows, some Vsync intervals may receive two Input events and others three. The events become uneven, which causes UI jitter.

- When the screen refresh rate and system FPS are both 60, a touch screen with a 120Hz sampling rate is sufficient.

- When the screen refresh rate and system FPS are both 90, a 120Hz sampling rate is clearly not enough, and a touch screen with a 180Hz sampling rate should be used instead.
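The arithmetic behind these recommendations can be checked with a short C++ sketch that counts how many touch samples fall into each Vsync interval. It assumes perfectly periodic touch sampling and Vsync, both starting at t = 0. Real hardware is neither perfectly periodic nor phase-aligned, so actual traces can differ. The function name `samplesPerFrame` is hypothetical.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

// Count how many touch samples fall into each Vsync interval, assuming
// ideal periodic sampling and Vsync that both start at t = 0.
// Sample k is taken at k / sampleHz seconds; Vsync interval j covers
// [j / refreshHz, (j + 1) / refreshHz). Integer math avoids rounding errors
// at interval boundaries.
std::vector<int> samplesPerFrame(int sampleHz, int refreshHz, int frames) {
    assert(sampleHz > 0 && refreshHz > 0 && frames > 0);
    std::vector<int> counts(frames, 0);
    for (long long k = 0;; ++k) {
        long long frame = k * refreshHz / sampleHz;  // floor(k * refreshHz / sampleHz)
        if (frame >= frames) {
            break;
        }
        ++counts[static_cast<std::size_t>(frame)];
    }
    return counts;
}
```

Under these assumptions, `samplesPerFrame(160, 60, 6)` gives {3, 3, 2, 3, 3, 2}, which is the uneven two-or-three pattern described above. Both 120Hz at 60fps and 180Hz at 90fps give exactly 2 samples in every frame. By contrast, 120Hz at 90fps gives {2, 1, 1, 2, 1, 1}.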

# 5. Input debug information

dumpsys input is mainly used for debugging, and it also exposes some key information worth looking at. When you run into input problems, this is a good place to look. The command is:

```
adb shell dumpsys input
```

The output is long. Below are excerpts of the device information and the InputReader and InputDispatcher sections.

## 1. Device information

This part lists the currently connected input devices. The excerpt below is the touch screen:

```
3: main_touch
    Classes: 0x00000015
    Path: /dev/input/event6
    Enabled: true
    Descriptor: 4055b8a032ccf50ef66dbe2ff99f3b2474e9eab5
    Location: main_touch/input0
    ControllerNumber: 0
    UniqueId:
    Identifier: bus=0x0000, vendor=0xbeef, product=0xdead, version=0x28bb
    KeyLayoutFile: /system/usr/keylayout/main_touch.kl
    KeyCharacterMapFile: /system/usr/keychars/Generic.kcm
    ConfigurationFile:
    HaveKeyboardLayoutOverlay: false
```

## 2. InputReader status

This part shows InputReader's state for each device, including the most recent raw and cooked touch data:

```
Device 3: main_touch
    Generation: 24
    IsExternal: false
    HasMic: false
    Sources: 0x00005103
    KeyboardType: 1
    Motion Ranges:
      X: source=0x00005002, min=0.000, max=1079.000, flat=0.000, fuzz=0.000, resolution=0.000
      Y: source=0x00005002, min=0.000, max=2231.000, flat=0.000, fuzz=0.000, resolution=0.000
      PRESSURE: source=0x00005002, min=0.000, max=1.000, flat=0.000, fuzz=0.000, resolution=0.000
      SIZE: source=0x00005002, min=0.000, max=1.000, flat=0.000, fuzz=0.000, resolution=0.000
      TOUCH_MAJOR: source=0x00005002, min=0.000, max=2479.561, flat=0.000, fuzz=0.000, resolution=0.000
      TOUCH_MINOR: source=0x00005002, min=0.000, max=2479.561, flat=0.000, fuzz=0.000, resolution=0.000
      TOOL_MAJOR: source=0x00005002, min=0.000, max=2479.561, flat=0.000, fuzz=0.000, resolution=0.000
      TOOL_MINOR: source=0x00005002, min=0.000, max=2479.561, flat=0.000, fuzz=0.000, resolution=0.000
    Keyboard Input Mapper:
      Parameters:
        HasAssociatedDisplay: false
        OrientationAware: false
        HandlesKeyRepeat: false
      KeyboardType: 1
      Orientation: 0
      KeyDowns: 0 keys currently down
      MetaState: 0x0
      DownTime: 521271703875000
    Touch Input Mapper (mode - direct):
      Parameters:
        GestureMode: multi-touch
        DeviceType: touchScreen
        AssociatedDisplay: hasAssociatedDisplay=true, isExternal=false, displayId=''
        OrientationAware: true
      Raw Touch Axes:
        X: min=0, max=1080, flat=0, fuzz=0, resolution=0
        Y: min=0, max=2232, flat=0, fuzz=0, resolution=0
        Pressure: min=0, max=127, flat=0, fuzz=0, resolution=0
        TouchMajor: min=0, max=512, flat=0, fuzz=0, resolution=0
        TouchMinor: unknown range
        ToolMajor: unknown range
        ToolMinor: unknown range
        Orientation: unknown range
        Distance: unknown range
        TiltX: unknown range
        TiltY: unknown range
        TrackingId: min=0, max=65535, flat=0, fuzz=0, resolution=0
        Slot: min=0, max=20, flat=0, fuzz=0, resolution=0
      Calibration:
        touch.size.calibration: geometric
        touch.pressure.calibration: physical
        touch.orientation.calibration: none
        touch.distance.calibration: none
        touch.coverage.calibration: none
      Affine Transformation:
        X scale: 1.000
        X ymix: 0.000
        X offset: 0.000
        Y xmix: 0.000
        Y scale: 1.000
        Y offset: 0.000
      Viewport: displayId=0, orientation=0, logicalFrame=[0, 0, 1080, 2232], physicalFrame=[0, 0, 1080, 2232], deviceSize=[1080, 2232]
      SurfaceWidth: 1080px
      SurfaceHeight: 2232px
      SurfaceLeft: 0
      SurfaceTop: 0
      PhysicalWidth: 1080px
      PhysicalHeight: 2232px
      PhysicalLeft: 0
      PhysicalTop: 0
      SurfaceOrientation: 0
      Translation and Scaling Factors:
        XTranslate: 0.000
        YTranslate: 0.000
        XScale: 0.999
        YScale: 1.000
        XPrecision: 1.001
        YPrecision: 1.000
        GeometricScale: 0.999
        PressureScale: 0.008
        SizeScale: 0.002
        OrientationScale: 0.000
        DistanceScale: 0.000
        HaveTilt: false
        TiltXCenter: 0.000
        TiltXScale: 0.000
        TiltYCenter: 0.000
        TiltYScale: 0.000
      Last Raw Button State: 0x00000000
      Last Raw Touch: pointerCount=1
        [0]: id=0, x=660, y=1338, pressure=44, touchMajor=44, touchMinor=44, toolMajor=0, toolMinor=0, orientation=0, tiltX=0, tiltY=0, distance=0, toolType=1, isHovering=false
      Last Cooked Button State: 0x00000000
      Last Cooked Touch: pointerCount=1
        [0]: id=0, x=659.389, y=1337.401, pressure=0.346, touchMajor=43.970, touchMinor=43.970, toolMajor=43.970, toolMinor=43.970, orientation=0.000, tilt=0.000, distance=0.000, toolType=1, isHovering=false
      Stylus Fusion:
        ExternalStylusConnected: false
        External Stylus ID: -1
        External Stylus Data Timeout: 9223372036854775807
      External Stylus State:
        When: 9223372036854775807
        Pressure: 0.000000
        Button State: 0x00000000
        Tool Type: 0
```
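The "Last Raw Touch" and "Last Cooked Touch" values above are related by simple scaling. The C++ sketch below is a reconstruction, not AOSP's touch mapper code, and it rests on three assumptions. First, each raw axis range is inclusive, so X spans 1081 units and Y spans 2233. Second, the pressure scale is 1 / raw pressure max (127). Third, the geometric scale is the average of the X and Y scales. Under these assumptions it reproduces the dumped XScale 0.999, YScale 1.000, PressureScale 0.008 and GeometricScale 0.999. It also maps the raw touch (x=660, y=1338, pressure=44, touchMajor=44) to the cooked (659.389, 1337.401, 0.346, 43.970).

```cpp
#include <cassert>

// Reconstruction (not AOSP code) of the raw -> cooked conversion that
// reproduces the numbers in the dump above. Assumptions: raw axis ranges
// are inclusive, pressure scale = 1 / raw pressure max, and the geometric
// scale is the average of the X and Y scales.
struct RawAxis {
    int min;
    int max;
};

// Pixels per raw unit for one axis; an inclusive range spans max - min + 1 units.
double axisScale(const RawAxis& raw, int surfacePx) {
    assert(raw.max > raw.min && surfacePx > 0);
    return static_cast<double>(surfacePx) / (raw.max - raw.min + 1);
}

struct CookedTouch {
    double x;
    double y;
    double pressure;
    double touchMajor;
};

CookedTouch cookTouch(int rawX, int rawY, int rawPressure, int rawTouchMajor) {
    const RawAxis rawXAxis{0, 1080};  // Raw Touch Axes X: min=0, max=1080
    const RawAxis rawYAxis{0, 2232};  // Raw Touch Axes Y: min=0, max=2232
    const double xScale = axisScale(rawXAxis, 1080);  // SurfaceWidth 1080px  -> XScale 0.999
    const double yScale = axisScale(rawYAxis, 2232);  // SurfaceHeight 2232px -> YScale 1.000
    const double geometricScale = (xScale + yScale) / 2.0;  // GeometricScale 0.999
    const double pressureScale = 1.0 / 127.0;  // raw Pressure max=127 -> PressureScale 0.008
    return CookedTouch{
        (rawX - rawXAxis.min) * xScale,
        (rawY - rawYAxis.min) * yScale,
        rawPressure * pressureScale,
        rawTouchMajor * geometricScale,  // touch.size.calibration: geometric
    };
}
```

Comparing raw and cooked values like this is a quick sanity check when touch coordinates look slightly off on a new panel configuration.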

## 3. InputDispatcher status

The important information in the InputDispatcher state mainly includes:

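- FocusedApplication: the application that currently has focus

- FocusedWindow: the window that currently has focus

- TouchStatesByDisplay: the current touch state of each display, including the windows receiving the touch

- Windows: all windows

- MonitoringChannels: the input monitor channels (here, WindowManager)

- Connections: all connections, each with its OutboundQueue and WaitQueue

- AppSwitch: whether an app switch is pending (here, not pending)

- Configuration: the key-repeat configuration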

```
Input Dispatcher State:
  DispatchEnabled: 1
  DispatchFrozen: 0
  FocusedApplication: name='AppWindowToken{ac6ec28 token=Token{a38a4b ActivityRecord{7230f1a u0 com.meizu.flyme.launcher/.Launcher t13}}}', dispatchingTimeout=5000.000ms
  FocusedWindow: name='Window{3c007ad u0 com.meizu.flyme.launcher/com.meizu.flyme.launcher.Launcher}'
  TouchStatesByDisplay:
    0: down=true, split=true, deviceId=3, source=0x00005002
      Windows:
        0: name='Window{3c007ad u0 com.meizu.flyme.launcher/com.meizu.flyme.launcher.Launcher}', pointerIds=0x80000000, targetFlags=0x105
        1: name='Window{8cb8f7 u0 com.android.systemui.ImageWallpaper}', pointerIds=0x0, targetFlags=0x4102
  Windows:
    2: name='Window{ba2fc6b u0 NavigationBar}', displayId=0, paused=false, hasFocus=false, hasWallpaper=false, visible=true, canReceiveKeys=false, flags=0x21840068, type=0x000007e3, layer=0, frame=[0,2136][1080,2232], scale=1.000000, touchableRegion=[0,2136][1080,2232], inputFeatures=0x00000000, ownerPid=26514, ownerUid=10033, dispatchingTimeout=5000.000ms
    3: name='Window{72b7776 u0 StatusBar}', displayId=0, paused=false, hasFocus=false, hasWallpaper=false, visible=true, canReceiveKeys=false, flags=0x81840048, type=0x000007d0, layer=0, frame=[0,0][1080,84], scale=1.000000, touchableRegion=[0,0][1080,84], inputFeatures=0x00000000, ownerPid=26514, ownerUid=10033, dispatchingTimeout=5000.000ms
    9: name='Window{3c007ad u0 com.meizu.flyme.launcher/com.meizu.flyme.launcher.Launcher}', displayId=0, paused=false, hasFocus=true, hasWallpaper=true, visible=true, canReceiveKeys=true, flags=0x81910120, type=0x00000001, layer=0, frame=[0,0][1080,2232], scale=1.000000, touchableRegion=[0,0][1080,2232], inputFeatures=0x00000000, ownerPid=27619, ownerUid=10021, dispatchingTimeout=5000.000ms
  MonitoringChannels:
    0: 'WindowManager (server)'
  RecentQueue: length=10
    MotionEvent(deviceId=3, source=0x00005002, action=MOVE, actionButton=0x00000000, flags=0x00000000, metaState=0x00000000, buttonState=0x00000000, edgeFlags=0x00000000, xPrecision=1.0, yPrecision=1.0, displayId=0, pointers=[0: (524.5, 1306.4)]), policyFlags=0x62000000, age=61.2ms
    MotionEvent(deviceId=3, source=0x00005002, action=MOVE, actionButton=0x00000000, flags=0x00000000, metaState=0x00000000, buttonState=0x00000000, edgeFlags=0x00000000, xPrecision=1.0, yPrecision=1.0, displayId=0, pointers=[0: (543.5, 1309.4)]), policyFlags=0x62000000, age=54.7ms
  PendingEvent: <none>
  InboundQueue: <empty>
  ReplacedKeys: <empty>
  Connections:
    0: channelName='WindowManager (server)', windowName='monitor', status=NORMAL, monitor=true, inputPublisherBlocked=false
      OutboundQueue: <empty>
      WaitQueue: <empty>
    5: channelName='72b7776 StatusBar (server)', windowName='Window{72b7776 u0 StatusBar}', status=NORMAL, monitor=false, inputPublisherBlocked=false
      OutboundQueue: <empty>
      WaitQueue: <empty>
    6: channelName='ba2fc6b NavigationBar (server)', windowName='Window{ba2fc6b u0 NavigationBar}', status=NORMAL, monitor=false, inputPublisherBlocked=false
      OutboundQueue: <empty>
      WaitQueue: <empty>
    12: channelName='3c007ad com.meizu.flyme.launcher/com.meizu.flyme.launcher.Launcher (server)', windowName='Window{3c007ad u0 com.meizu.flyme.launcher/com.meizu.flyme.launcher.Launcher}', status=NORMAL, monitor=false, inputPublisherBlocked=false
      OutboundQueue: <empty>
      WaitQueue: length=3
        MotionEvent(deviceId=3, source=0x00005002, action=MOVE, actionButton=0x00000000, flags=0x00000000, metaState=0x00000000, buttonState=0x00000000, edgeFlags=0x00000000, xPrecision=1.0, yPrecision=1.0, displayId=0, pointers=[0: (634.4, 1329.4)]), policyFlags=0x62000000, targetFlags=0x00000105, resolvedAction=2, age=17.4ms, wait=16.8ms
        MotionEvent(deviceId=3, source=0x00005002, action=MOVE, actionButton=0x00000000, flags=0x00000000, metaState=0x00000000, buttonState=0x00000000, edgeFlags=0x00000000, xPrecision=1.0, yPrecision=1.0, displayId=0, pointers=[0: (647.4, 1333.4)]), policyFlags=0x62000000, targetFlags=0x00000105, resolvedAction=2, age=11.1ms, wait=10.4ms
        MotionEvent(deviceId=3, source=0x00005002, action=MOVE, actionButton=0x00000000, flags=0x00000000, metaState=0x00000000, buttonState=0x00000000, edgeFlags=0x00000000, xPrecision=1.0, yPrecision=1.0, displayId=0, pointers=[0: (659.4, 1337.4)]), policyFlags=0x62000000, targetFlags=0x00000105, resolvedAction=2, age=5.2ms, wait=4.6ms
  AppSwitch: not pending
  Configuration:
    KeyRepeatDelay: 50.0ms
    KeyRepeatTimeout: 500.0ms
```
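In this dump, the launcher connection's WaitQueue holds three MOVE events with wait times of 16.8ms, 10.4ms and 4.6ms, which means the app has not yet reported them as finished. When scanning a long dump, a small helper can pull these values out. The function below is a hypothetical C++ sketch that relies only on the `wait=<N>ms` text format shown above. Other Android versions may format the dump differently.

```cpp
#include <cassert>
#include <cstddef>
#include <string>
#include <vector>

// Hypothetical helper: extract the "wait=<N>ms" values from WaitQueue lines
// copied out of `adb shell dumpsys input`. Lines without that field are skipped.
std::vector<double> waitTimesMs(const std::vector<std::string>& lines) {
    const std::string key = "wait=";
    std::vector<double> result;
    for (const std::string& line : lines) {
        std::size_t start = line.find(key);
        if (start == std::string::npos) {
            continue;
        }
        start += key.size();
        std::size_t end = line.find("ms", start);
        if (end == std::string::npos) {
            continue;
        }
        // std::stod throws std::invalid_argument if the field is not numeric.
        result.push_back(std::stod(line.substr(start, end - start)));
    }
    return result;
}
```

Run on the three WaitQueue lines above, the helper returns 16.8, 10.4 and 4.6. A largest value that keeps growing across repeated dumps points to a main thread that is not consuming input.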

Original link: https://www.androidperformance.com/2019/11/04/Android-Systrace-Input/

This is the end of the article. It is reprinted from the original link above; please read the original to support the author. If there is any infringement, please contact us and it will be removed. Suggestions and corrections are welcome. Thank you for reading!