Installation and deployment of Flink

First, open a terminal and run the following commands under /home/xxx/ to download and extract the installation package:

wget https://labfile.oss.aliyuncs.com/courses/3423/flink-1.10.0-bin-scala_2.11.tar

tar -xvf flink-1.10.0-bin-scala_2.11.tar

The archive is extracted into the current directory.

If you have studied Spark, what follows will feel familiar. Flink has three deployment modes: Standalone, YARN and Kubernetes. This experiment focuses on Standalone mode; for YARN and Kubernetes, a general understanding is enough.

Standalone

Standalone means the same thing here as it does in Spark: Flink handles resource scheduling itself, without relying on an external resource manager.

The setup steps are as follows:

- Copy the extracted installation package to the /opt/ directory

sudo cp -r ~/flink-1.10.0 /opt/

- Enter the /opt/flink-1.10.0 directory and start the cluster

cd /opt/flink-1.10.0

sudo bin/start-cluster.sh

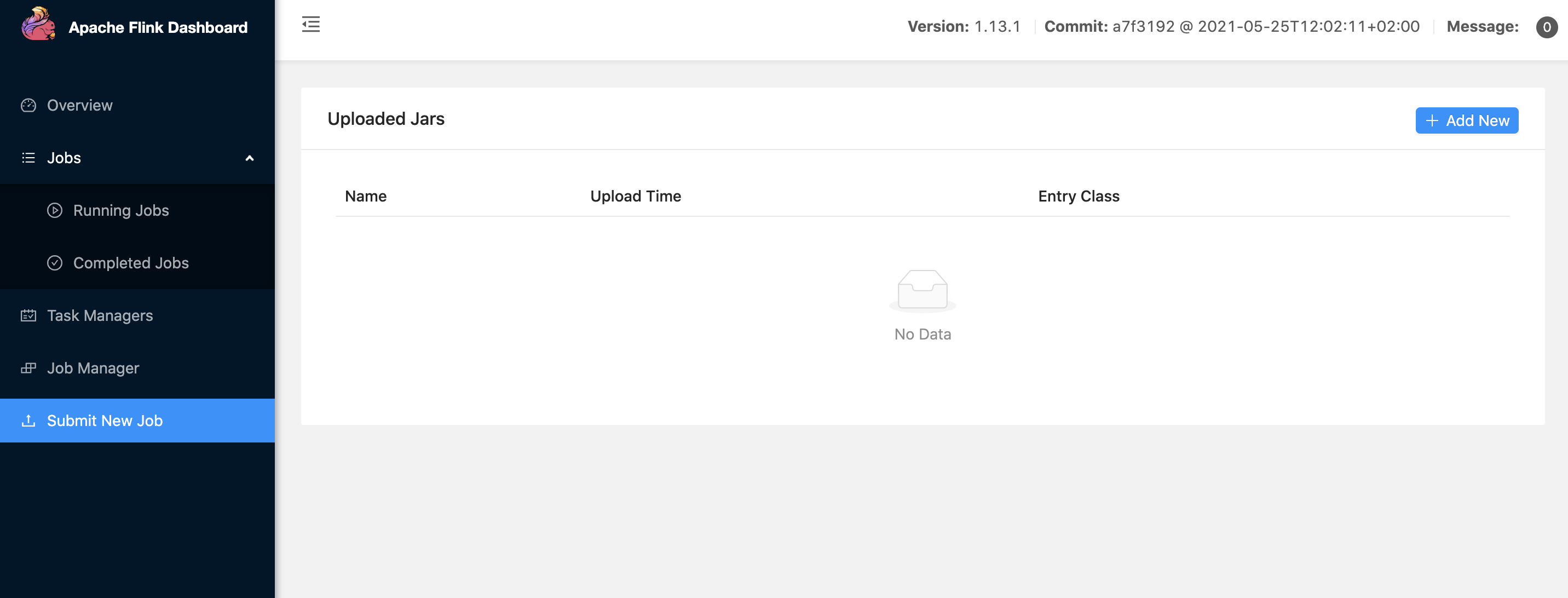

At this point we have built the simplest Standalone cluster: a pseudo-distributed, single-node cluster. Double-click the Firefox browser on the desktop and open localhost:8081 to see the Flink web interface.

Because of the limits of our virtual machine environment, we can only build a pseudo-distributed cluster in this course. Building a Standalone cluster from multiple machines is not difficult; it only takes a little extra configuration on top of the steps above.

Suppose there are three machines with host names bigdata1, bigdata2 and bigdata3. Next, you only need to:

Add the following contents to the conf/flink-conf.yaml file in the Flink installation package of bigdata1:

jobmanager.rpc.address: bigdata1

Add the following content to the conf/slaves file in the Flink installation package on bigdata1:

bigdata2

bigdata3

Copy the Flink installation package on bigdata1 to the same directory on bigdata2 and bigdata3, and then run bin/start-cluster.sh on bigdata1 to start the cluster.

Note: the three steps above assume that the same versions of the JDK and Scala are installed on all three machines, and that passwordless SSH login is configured between them.
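The two configuration edits above can be sketched as a small helper that renders the text to put into each file. This is only an illustration in plain Scala: the object name StandaloneConfig and its methods are hypothetical and not part of Flink, and the host names are the ones assumed in the text.

```scala
// Hypothetical helper (not part of Flink): renders the configuration text
// described above for a multi-machine Standalone cluster.
object StandaloneConfig {

  // Line to add to conf/flink-conf.yaml on the master.
  // YAML needs a space after the colon.
  def jobManagerLine(master: String): String =
    s"jobmanager.rpc.address: $master"

  // Contents of conf/slaves: one worker host name per line.
  def slavesFile(workers: Seq[String]): String =
    workers.mkString("", "\n", "\n")

  def main(args: Array[String]): Unit = {
    println(jobManagerLine("bigdata1"))
    print(slavesFile(Seq("bigdata2", "bigdata3")))
  }
}
```

Running it prints the line for flink-conf.yaml followed by the two worker host names, which can be compared against the files edited by hand.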

YARN mode

Like Spark, Flink can also run its jobs on YARN, but the Hadoop version must be 2.2 or later.

Deployment on YARN comes in two forms: Session-Cluster and Per-Job-Cluster.

Session-Cluster

In Session-Cluster mode, you start a Flink cluster on YARN before submitting any jobs. Once it is running, you submit jobs to that cluster with the flink run command. When a job finishes, the cluster keeps running and waits for the next job. The advantage is a shorter job startup time, which makes this mode suitable for small jobs with short execution times.

Per-Job-Cluster

In Per-Job-Cluster mode, each job gets its own cluster. Every time a job is submitted, it requests resources from YARN according to its own needs. The advantage is that the resources used by each job are isolated, so the failure of one job does not affect the others. This mode is suitable for large, long-running jobs.

Kubernetes mode

Container technology has become more and more popular. You may not have heard of Kubernetes, but you have probably heard of Docker. Kubernetes builds on container technology such as Docker: it is a platform for deploying and managing containers across a cluster. Although Flink supports deployment on Kubernetes, at the time of writing this mode does not appear to be widely used in industry, so a general understanding is enough for now.

Flink's pom.xml

<?xml version="1.0" encoding="UTF-8"?>
<project xmlns="http://maven.apache.org/POM/4.0.0"
         xmlns:xsi="http://www.w3.org/2001/XMLSchema-instance"
         xsi:schemaLocation="http://maven.apache.org/POM/4.0.0 http://maven.apache.org/xsd/maven-4.0.0.xsd">
    <modelVersion>4.0.0</modelVersion>

    <groupId>com.shiyanlou</groupId>
    <artifactId>FlinkLearning</artifactId>
    <version>1.0-SNAPSHOT</version>

    <dependencies>
        <dependency>
            <groupId>org.apache.flink</groupId>
            <artifactId>flink-scala_2.11</artifactId>
            <version>1.7.2</version>
        </dependency>
        <dependency>
            <groupId>org.apache.flink</groupId>
            <artifactId>flink-streaming-scala_2.11</artifactId>
            <version>1.7.2</version>
        </dependency>
    </dependencies>

    <build>
        <plugins>
            <!-- This plugin compiles Scala code into class files -->
            <plugin>
                <groupId>net.alchim31.maven</groupId>
                <artifactId>scala-maven-plugin</artifactId>
                <version>3.4.6</version>
                <executions>
                    <execution>
                        <goals>
                            <!-- compile builds the main sources, testCompile the test sources -->
                            <goal>compile</goal>
                            <goal>testCompile</goal>
                        </goals>
                    </execution>
                </executions>
            </plugin>
            <plugin>
                <groupId>org.apache.maven.plugins</groupId>
                <artifactId>maven-assembly-plugin</artifactId>
                <version>3.0.0</version>
                <configuration>
                    <archive>
                        <manifest>
                            <mainClass></mainClass>
                        </manifest>
                    </archive>
                    <descriptorRefs>
                        <descriptorRef>jar-with-dependencies</descriptorRef>
                    </descriptorRefs>
                </configuration>
                <executions>
                    <execution>
                        <id>make-assembly</id>
                        <phase>package</phase>
                        <goals>
                            <goal>single</goal>
                        </goals>
                    </execution>
                </executions>
            </plugin>
        </plugins>
    </build>
</project>

The code in BatchWordCount.scala is as follows:

package com.shiyanlou.wc

import org.apache.flink.api.scala._

object BatchWordCount {
  def main(args: Array[String]): Unit = {
    // Create the batch execution environment
    val env = ExecutionEnvironment.getExecutionEnvironment

    // Read the input data from a text file
    val inputPath = "/home/shiyanlou/words.txt"
    val inputDS: DataSet[String] = env.readTextFile(inputPath)

    // Split lines into words, pair each word with 1,
    // group by the word (field 0) and sum the counts (field 1)
    val wordCountDS: AggregateDataSet[(String, Int)] = inputDS
      .flatMap(_.split(" "))
      .map((_, 1))
      .groupBy(0)
      .sum(1)

    // Print the result
    wordCountDS.print()
  }
}
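To see what the flatMap, map, groupBy and sum chain computes without starting a Flink runtime, the same logic can be sketched on plain Scala collections. This is an illustration only, not Flink code: the object name LocalWordCount is hypothetical, and the filter for empty strings is an addition that the batch job above does not have.

```scala
// Plain Scala collections version of the word count logic (no Flink needed).
object LocalWordCount {

  def count(lines: Seq[String]): Map[String, Int] =
    lines
      .flatMap(_.split(" "))   // split each line into words
      .filter(_.nonEmpty)      // assumption: drop empty tokens from repeated spaces
      .map((_, 1))             // pair each word with a count of 1
      .groupBy(_._1)           // group the pairs by word
      .map { case (word, pairs) => (word, pairs.map(_._2).sum) } // sum per word

  def main(args: Array[String]): Unit = {
    val sample = Seq("hello flink", "hello scala")
    // Sort by word so the printed order is stable
    count(sample).toSeq.sortBy(_._1).foreach(println)
  }
}
```

For the two sample lines this prints (flink,1), (hello,2) and (scala,1), one per line. The Flink job produces the same kind of (word, count) pairs, but distributes the grouping and summing across the cluster.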

Flink stream processing WordCount

Add the following code to StreamWordCount.scala:

package com.shiyanlou.wc

import org.apache.flink.streaming.api.scala._

object StreamWordCount {
  def main(args: Array[String]): Unit = {
    // Create the streaming execution environment
    val env = StreamExecutionEnvironment.getExecutionEnvironment

    // Listen for text data on a socket
    val textDstream: DataStream[String] = env.socketTextStream("localhost", 9999)

    // Import implicit conversions
    import org.apache.flink.api.scala._

    // Split lines into words, drop empty tokens, pair each word with 1,
    // key by the word (field 0) and keep a running sum of the counts (field 1)
    val dataStream: DataStream[(String, Int)] = textDstream
      .flatMap(_.split(" "))
      .filter(_.nonEmpty)
      .map((_, 1))
      .keyBy(0)
      .sum(1)

    // Print the result with a parallelism of 1
    dataStream.print().setParallelism(1)

    // Start the job
    env.execute("Socket stream word count")
  }
}
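Unlike the batch job, the keyed sum in a streaming job keeps a running total and emits an updated count each time a word arrives. The sketch below imitates that behaviour on an in-memory sequence of lines. It is plain Scala rather than Flink code, the object name RunningWordCount is hypothetical, and it ignores parallelism and the ordering effects a real cluster can introduce.

```scala
// Plain Scala imitation of a keyed running sum (no Flink needed).
object RunningWordCount {

  // Returns one (word, countSoFar) pair per incoming word, in arrival order.
  def updates(lines: Seq[String]): Seq[(String, Int)] = {
    val words = lines.flatMap(_.split(" ")).filter(_.nonEmpty)
    val start = (Map.empty[String, Int], Vector.empty[(String, Int)])
    val (_, emitted) = words.foldLeft(start) {
      case ((counts, out), word) =>
        val next = counts.getOrElse(word, 0) + 1         // update the per-word state
        (counts.updated(word, next), out :+ (word -> next)) // emit the new total
    }
    emitted
  }

  def main(args: Array[String]): Unit =
    updates(Seq("hello flink", "hello scala")).foreach(println)
}
```

For the two sample lines this prints (hello,1), (flink,1), (hello,2) and (scala,1): the second "hello" produces a new record with the updated total instead of a single final count.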