TiUP is a cluster operation and maintenance tool introduced in TiDB 4.0. TiUP cluster is a cluster management component of TiUP, written in Go. With the TiUP cluster component you can perform routine operation and maintenance tasks, including deploying, starting, stopping, and destroying a TiDB cluster, scaling it out and in, upgrading it, and managing its configuration parameters. TiUP currently supports deploying TiDB, TiFlash, TiDB Binlog, TiCDC, and the monitoring system.

Recommended server configuration

Development and test environment

| Component | CPU | Memory | Local storage | Network | Instances (minimum) |
| --- | --- | --- | --- | --- | --- |
| TiDB | 8 cores+ | 16 GB+ | No special requirements | Gigabit Ethernet | 1 (can share a machine with PD) |
| PD | 4 cores+ | 8 GB+ | SAS, 200 GB+ | Gigabit Ethernet | 1 (can share a machine with TiDB) |
| TiKV | 8 cores+ | 32 GB+ | SSD, 200 GB+ | Gigabit Ethernet | 3 |
| TiFlash | 32 cores+ | 64 GB+ | SSD, 200 GB+ | Gigabit Ethernet | 1 |
| TiCDC | 8 cores+ | 16 GB+ | SAS, 200 GB+ | Gigabit Ethernet | 1 |

Production environment

| Component | CPU | Memory | Disk type | Network | Instances |
| --- | --- | --- | --- | --- | --- |
| TiDB | 16 cores+ | 32 GB+ | SAS | 10 Gigabit NIC (2 preferred) | 2 |
| PD | 4 cores+ | 8 GB+ | SSD | 10 Gigabit NIC (2 preferred) | 3 |
| TiKV | 16 cores+ | 32 GB+ | SSD | 10 Gigabit NIC (2 preferred) | 3 |
| TiFlash | 48 cores+ | 128 GB+ | 1 or more SSDs | 10 Gigabit NIC (2 preferred) | 2 |
| TiCDC | 16 cores+ | 64 GB+ | SSD | 10 Gigabit NIC (2 preferred) | 2 |
| Monitoring | 8 cores+ | 16 GB+ | SAS | Gigabit Ethernet | 1 |

Deploy TiUP online

Choose one server as the control machine and perform the following steps on it:

1. Install the TiUP tool

curl --proto '=https' --tlsv1.2 -sSf https://tiup-mirrors.pingcap.com/install.sh | sh

2. Reload the shell profile so that the TiUP environment variables take effect

source ~/.bash_profile

3. Confirm that the TiUP tool is installed

which tiup

4. Install the TiUP cluster component

tiup cluster

5. If the component is already installed, update TiUP and the TiUP cluster component to the latest version

tiup update --self && tiup update cluster

6. Verify the current TiUP cluster version information

tiup --binary cluster
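Steps 2 and 3 can also be checked from a script, for example before the update in step 5. The function below is a minimal sketch, assuming install.sh placed the binary in $HOME/.tiup/bin (or in $TIUP_HOME/bin when that variable is set); ensure_tiup_on_path is a hypothetical name, not part of TiUP. It only changes PATH for the current shell and does not edit the profile.

```bash
# Sketch: make the tiup binary callable in the current shell.
# Assumption: install.sh placed it in $TIUP_HOME/bin, or $HOME/.tiup/bin when TIUP_HOME is unset.
ensure_tiup_on_path() {
  local tiup_bin
  # Already on PATH (for example after sourcing the profile): nothing to do.
  if command -v tiup >/dev/null 2>&1; then
    return 0
  fi
  tiup_bin="${TIUP_HOME:-$HOME/.tiup}/bin"
  if [ -x "$tiup_bin/tiup" ]; then
    # Prepend the directory for this shell session only; the profile is not modified.
    PATH="$tiup_bin:$PATH"
    export PATH
    return 0
  fi
  echo "tiup not found on PATH or in $tiup_bin; run the install.sh step first" >&2
  return 1
}
```

For example: ensure_tiup_on_path && tiup update --self && tiup update cluster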

Set up password-free SSH login

On the control machine, generate a public/private key pair (press Enter at every prompt to accept the defaults):

ssh-keygen

Copy the public key to each of the other servers:

ssh-copy-id -i ~/.ssh/id_rsa.pub root@192.168.1.xxx

Test the login; it should succeed without a password prompt:

ssh root@192.168.1.xxx
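With more than a few servers, the copy and test steps can be run in a loop. A minimal sketch, assuming the key is ~/.ssh/id_rsa.pub as in the command above; distribute_key, PUBKEY, and DRY_RUN are hypothetical names used only here. ssh-copy-id still asks for each server's password once.

```bash
# Sketch: push the control machine's public key to several servers, then check key-based login.
# Assumptions: the key is ~/.ssh/id_rsa.pub as in the text (override with PUBKEY);
# DRY_RUN is a hypothetical switch of this sketch that only prints the ssh-copy-id commands.
distribute_key() {
  local user=$1 pubkey host
  shift
  pubkey="${PUBKEY:-$HOME/.ssh/id_rsa.pub}"
  for host in "$@"; do
    if [ -n "${DRY_RUN:-}" ]; then
      echo "ssh-copy-id -i $pubkey $user@$host"
      continue
    fi
    # Prompts once for the remote user's password.
    ssh-copy-id -i "$pubkey" "$user@$host" || return 1
    # BatchMode=yes makes ssh fail instead of prompting, so success means no password was needed.
    ssh -o BatchMode=yes "$user@$host" true || return 1
  done
}
```

For example: distribute_key root 192.168.1.2 192.168.1.3 192.168.1.4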

Edit the cluster topology file and start the cluster

File name: topology.yaml (the configuration below is for reference only):

global:
  user: "tidb"
  ssh_port: 22
  deploy_dir: "/tidb-deploy"
  data_dir: "/tidb-data"
pd_servers:
  - host: 192.168.1.1
tidb_servers:
  - host: 192.168.1.1
tikv_servers:
  - host: 192.168.1.2
  - host: 192.168.1.3
  - host: 192.168.1.4
tiflash_servers:
  - host: 192.168.1.5
cdc_servers:
  - host: 192.168.1.6
monitoring_servers:
  - host: 192.168.1.1
grafana_servers:
  - host: 192.168.1.1
alertmanager_servers:
  - host: 192.168.1.1
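Both hardware tables call for at least 3 TiKV instances, and the production table recommends 3 PD instances. A rough pre-check of a topology file could look like the sketch below, assuming a simple layout with one "- host:" entry per line. It is a line scan with awk, not a YAML parser, and count_hosts and require_hosts are hypothetical helpers, not tiup commands; tiup cluster check remains the real validation.

```bash
# Sketch: count "- host:" entries under one top-level section of a topology file.
# Assumption: a simple layout with one "- host:" line per instance.
# This is a line scan with awk, not a YAML parser.
count_hosts() {
  local file=$1 section=$2
  awk -v want="${section}:" '
    /^[a-z_]/ { current = $1 }               # a new top-level key such as "tikv_servers:"
    current == want && /^ *- host:/ { n++ }  # a host entry inside the wanted section
    END { print n + 0 }
  ' "$file"
}

# Fail with a message if SECTION lists fewer than MIN hosts.
require_hosts() {
  local file=$1 section=$2 min=$3 n
  n=$(count_hosts "$file" "$section")
  if [ "$n" -lt "$min" ]; then
    echo "$section: $n host(s) in $file, expected at least $min" >&2
    return 1
  fi
}
```

For example: require_hosts topology.yaml tikv_servers 3 && require_hosts topology.yaml pd_servers 1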

Run the deploy command

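Before running the deploy command, use check to detect potential risks in the cluster, and check --apply to try to fix them automatically. The options in square brackets are optional: add -p to log in with a password, or -i to specify a private key file.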

tiup cluster check ./topology.yaml --user root [-p] [-i /home/root/.ssh/gcp_rsa]

tiup cluster check ./topology.yaml --apply --user root [-p] [-i /home/root/.ssh/gcp_rsa]

Then run the deploy command to deploy the TiDB cluster (named tidb-test here, version v5.0.2):

tiup cluster deploy tidb-test v5.0.2 ./topology.yaml --user root [-p] [-i /home/root/.ssh/gcp_rsa]

View the clusters managed by TiUP

tiup cluster list

View the status of the deployed TiDB cluster

tiup cluster display tidb-test

Start the cluster

tiup cluster start tidb-test
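The check, deploy, start, and display steps above can be chained so that the sequence stops at the first failing command. A sketch, assuming root SSH access as in the commands above; deploy_cluster, run, and DRY_RUN are hypothetical names, and any extra arguments such as -p or -i KEY_FILE are passed unchanged to the check and deploy commands.

```bash
# Sketch: run the check, deploy, start and display steps in order, stopping at the first failure.
# DRY_RUN is a hypothetical switch of this sketch: when set, commands are printed, not executed.
run() {
  if [ -n "${DRY_RUN:-}" ]; then
    echo "$*"
  else
    "$@"
  fi
}

# Usage: deploy_cluster NAME VERSION TOPOLOGY [-p | -i KEY_FILE]
deploy_cluster() {
  local name=$1 version=$2 topo=$3
  shift 3
  # Remaining arguments (-p or -i KEY_FILE) go to the commands that log in over SSH.
  run tiup cluster check "$topo" --user root "$@" &&
    run tiup cluster check "$topo" --apply --user root "$@" &&
    run tiup cluster deploy "$name" "$version" "$topo" --user root "$@" &&
    run tiup cluster start "$name" &&
    run tiup cluster display "$name"
}
```

For example, DRY_RUN=1 deploy_cluster tidb-test v5.0.2 ./topology.yaml -i /home/root/.ssh/gcp_rsa prints the five commands; without DRY_RUN=1 they are executed, and tiup still asks for confirmation where it normally would.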

Log directory (for example, of the TiKV instance listening on port 20160):

/tidb-deploy/tikv-20160/log

Change the password

By default, the TiDB root user has an empty password. To change it, log in from a machine that has a MySQL client installed:

mysql -h 192.168.1.1 -P 4000 -u root

ALTER USER 'root' IDENTIFIED BY 'root';

FLUSH PRIVILEGES;

quit
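The same change can be made non-interactively with mysql -e. A sketch, assuming the new password may contain quotes or backslashes, so it is escaped before being placed inside the SQL string literal (backslashes doubled, single quotes doubled, following MySQL string-literal rules); sql_quote and alter_root_password_sql are hypothetical helper names.

```bash
# Sketch: escape a value for a single-quoted MySQL/TiDB string literal.
# Backslashes are doubled first, then single quotes are doubled.
sql_quote() {
  printf '%s' "$1" | sed -e 's/\\/\\\\/g' -e "s/'/''/g"
}

# Build the ALTER USER statement from the steps above for a given new password.
alter_root_password_sql() {
  printf "ALTER USER 'root' IDENTIFIED BY '%s';" "$(sql_quote "$1")"
}
```

For example: mysql -h 192.168.1.1 -P 4000 -u root -e "$(alter_root_password_sql 'new-password')". A password typed on the command line can end up in the shell history.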

Scale out

tiup cluster scale-out tidb-test scale-out.yaml
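The contents of scale-out.yaml are not shown here. It uses the same section names as topology.yaml but lists only the instances being added. A minimal sketch that writes such a file; write_scale_out is a hypothetical helper, and 192.168.1.7 below is an example address, not part of the original topology.

```bash
# Sketch: write a scale-out file listing only the instances to add.
# Usage: write_scale_out FILE SECTION HOST...   (SECTION as in topology.yaml, e.g. tikv_servers)
write_scale_out() {
  local file=$1 section=$2 host
  shift 2
  {
    echo "${section}:"
    for host in "$@"; do
      echo "  - host: ${host}"
    done
  } > "$file"
}
```

For example: write_scale_out scale-out.yaml tikv_servers 192.168.1.7 && tiup cluster scale-out tidb-test scale-out.yaml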

Scale in (specify the node by its ID, host:port, as listed by tiup cluster display)

tiup cluster scale-in tidb-test --node 192.168.1.xxx:<ID>

Taking a node offline takes some time; for TiKV and TiFlash nodes the removal is asynchronous. When the node's status changes to Tombstone, it has been taken offline successfully.
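Instead of rerunning tiup cluster display by hand, the wait can be scripted. A sketch, assuming only that the node ID (host:port) and the word Tombstone appear on the same line of the display output; the function names and the MAX_TRIES and INTERVAL defaults are hypothetical choices of this sketch.

```bash
# Sketch: wait until a scaled-in node reaches the Tombstone state.
# Assumption: in "tiup cluster display" output, the node ID (host:port) and its status
# appear on the same line. MAX_TRIES and INTERVAL are hypothetical knobs of this sketch.

# Succeeds if the display output on stdin shows NODE as Tombstone.
node_is_tombstone() {
  grep -F -- "$1" | grep -q 'Tombstone'
}

wait_for_tombstone() {
  local cluster=$1 node=$2 tries=${MAX_TRIES:-60}
  while [ "$tries" -gt 0 ]; do
    if tiup cluster display "$cluster" | node_is_tombstone "$node"; then
      echo "$node is Tombstone"
      return 0
    fi
    tries=$((tries - 1))
    sleep "${INTERVAL:-30}"
  done
  echo "gave up waiting for $node to become Tombstone" >&2
  return 1
}
```

For example: wait_for_tombstone tidb-test 192.168.1.2:20160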

Destroy the cluster (this stops the cluster and deletes its data and deployment directories)

tiup cluster destroy tidb-test --force

Performance testing with sysbench

Install the EPEL third-party repository

yum install -y epel-release

Install the sysbench tool

yum install -y sysbench

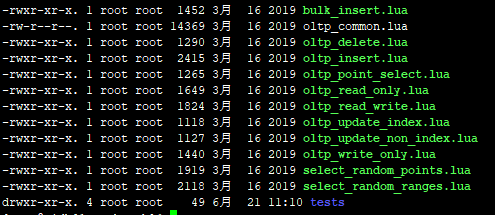

The benchmark Lua scripts are in the /usr/share/sysbench directory.

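Create the test table. The bigdata database must already exist, because sysbench does not create it; for example, run CREATE DATABASE bigdata; in the MySQL client first.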

sudo sysbench --db-driver=mysql --mysql-host=192.168.1.xxx --mysql-port=4000 \
  --mysql-user=root --mysql-password=root --mysql-db=bigdata \
  --range_size=100 --table_size=100000 --threads=2 --events=0 --time=60 \
  --rand-type=uniform /usr/share/sysbench/oltp_read_only.lua prepare

Run the read-only test against the 100,000-row table:

sudo sysbench --db-driver=mysql --mysql-host=192.168.1.xxx --mysql-port=4000 \
  --mysql-user=root --mysql-password=root --mysql-db=bigdata \
  --range_size=100 --table_size=100000 --threads=2 --events=0 --time=60 \
  --rand-type=uniform /usr/share/sysbench/oltp_read_only.lua run
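Because prepare, run, and cleanup take the same options, they can share one function, which also avoids line-continuation mistakes. A sketch using the values from the commands in this article; sysbench_oltp, run, and the SB_* and DRY_RUN variables are hypothetical names, and SB_HOST must be set to a TiDB server address. sudo is left out on the assumption that reading the Lua scripts does not require root.

```bash
# Sketch: one wrapper for the sysbench prepare, run and cleanup commands in this article.
# Assumptions: SB_HOST holds a TiDB server address; the other SB_* variables and DRY_RUN
# are hypothetical knobs whose defaults match the commands in the text.
run() {
  if [ -n "${DRY_RUN:-}" ]; then
    echo "$*"
  else
    "$@"
  fi
}

# Usage: sysbench_oltp prepare|run|cleanup
sysbench_oltp() {
  : "${SB_HOST:?set SB_HOST to the address of a TiDB server}"
  run sysbench --db-driver=mysql \
    --mysql-host="$SB_HOST" --mysql-port="${SB_PORT:-4000}" \
    --mysql-user=root --mysql-password="${SB_PASSWORD:-root}" --mysql-db=bigdata \
    --range_size=100 --table_size=100000 --threads="${SB_THREADS:-2}" --events=0 \
    --time="${SB_TIME:-60}" --rand-type=uniform \
    /usr/share/sysbench/oltp_read_only.lua "$1"
}
```

For example, after setting SB_HOST, run sysbench_oltp prepare, then sysbench_oltp run, and finally sysbench_oltp cleanup.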

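The output is shown below. It appears to come from a different run than the command above: the total time is about 10 s rather than 60 s, and 2287 events at an average of 71.47 events per thread corresponds to 32 threads rather than 2.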

SQL statistics:
    queries performed:                   # statements executed, by type
        read:                            32018  # read statements (SELECT)
        write:                           0      # write statements (INSERT, DELETE, UPDATE)
        other:                           4574   # other statements (such as BEGIN and COMMIT)
        total:                           36592  # total statements executed
    transactions:                        2287   (226.03 per sec.)   # total transactions (TPS in parentheses)
    queries:                             36592  (3616.44 per sec.)  # total queries (QPS in parentheses)
    ignored errors:                      0      (0.00 per sec.)
    reconnects:                          0      (0.00 per sec.)

General statistics:
    total time:                          10.1123s  # total elapsed time
    total number of events:              2287      # total events (one event is one transaction here)

Latency (ms):                                      # latency per event
         min:                                  107.13     # minimum
         avg:                                  140.78     # average
         max:                                  268.09     # maximum
         95th percentile:                      161.51     # 95% of events finished within this time
         sum:                               321960.64     # sum of all event latencies

Threads fairness:                                  # how evenly the load was spread across threads
    events (avg/stddev):           71.4688/0.61    # events per thread
    execution time (avg/stddev):   10.0613/0.04    # seconds of execution per thread

Clean up the test table

sudo sysbench --db-driver=mysql --mysql-host=192.168.1.xxx --mysql-port=4000 \
  --mysql-user=root --mysql-password=root --mysql-db=bigdata \
  --range_size=100 --table_size=100000 --threads=2 --events=0 --time=60 \
  --rand-type=uniform /usr/share/sysbench/oltp_read_only.lua cleanup
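To compare several runs, the TPS, QPS, and 95th-percentile latency can be pulled out of saved sysbench output. A sketch, assuming the English output layout of sysbench 1.0 shown in the sample results; sysbench_rate and sysbench_p95 are hypothetical helper names.

```bash
# Sketch: pull figures out of saved sysbench output (read on stdin).
# Assumption: the English sysbench 1.0 layout, e.g.
#   transactions:   2287   (226.03 per sec.)
#   95th percentile:   161.51

# Per-second rate for "transactions" (TPS) or "queries" (QPS).
sysbench_rate() {
  awk -v want="$1:" '$1 == want { gsub(/[(]/, "", $3); print $3; exit }'
}

# 95th-percentile latency in milliseconds.
sysbench_p95() {
  awk '$1 == "95th" && $2 == "percentile:" { print $3; exit }'
}
```

For example, save the output of the run command with | tee run.log, then use sysbench_rate transactions < run.log. For the sample results in this article, the helpers return 226.03 (TPS), 3616.44 (QPS), and 161.51 ms (95th percentile).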

Reference: https://docs.pingcap.com/zh/tidb/stable/production-deployment-using-tiup