1. Kubernetes Installation

Kubernetes Chinese community | Kubernetes Chinese documentation

- Configure the Alibaba Cloud (Aliyun) yum mirror

vim /etc/yum.repos.d/kubernetes.repo

[kubernetes]

name=Kubernetes

baseurl=http://mirrors.aliyun.com/kubernetes/yum/repos/kubernetes-el7-x86_64/

enabled=1

gpgcheck=0

- Install minikube

curl -LO https://storage.googleapis.com/minikube/releases/latest/minikube-linux-amd64

sudo install minikube-linux-amd64 /usr/local/bin/minikube

- Install kubectl

curl -LO https://dl.k8s.io/release/v1.20.0/bin/linux/amd64/kubectl

sudo install -o root -g root -m 0755 kubectl /usr/local/bin/kubectl

- Install conntrack

yum install conntrack

2. Basic operations

1.Minikube

Prerequisite: Docker must be installed (see the installation notes)

- Start minikube

minikube start --vm-driver=none --image-mirror-country='cn'

- Stop minikube

minikube stop

2.kubectl

- View nodes: kubectl get node(s)

    [root@*********************** ~]# kubectl get nodes
    NAME                      STATUS   ROLES                  AGE    VERSION
    ***********************   Ready    control-plane,master   4d3h   v1.22.3

A node is a machine that runs containers.

Nodes come in two kinds, master and worker; the node listed above has the master role.

k8s also has the concept of a cluster, which consists of master nodes and worker nodes.

- Create a deployment: kubectl create deployment

    [root@*********************** ~]# kubectl create deployment my-nginx --image nginx:latest
    deployment.apps/my-nginx created

- View all deployments: kubectl get deployment(s)

    [root@*********************** ~]# kubectl get deployment
    NAME       READY   UP-TO-DATE   AVAILABLE   AGE
    my-nginx   1/1     1            1           7m46s

- View all pods: kubectl get pod(s)

    [root@*********************** ~]# kubectl get pods
    NAME                       READY   STATUS    RESTARTS   AGE
    my-nginx-b7d7bc74d-qq56l   1/1     Running   0          8m46s

- View all pods together with their IP and node: kubectl get pods -o wide

    [root@*********************** ~]# kubectl get pods -o wide
    NAME                       READY   STATUS    RESTARTS   AGE   IP           NODE                      NOMINATED NODE   READINESS GATES
    my-nginx-b7d7bc74d-qq56l   1/1     Running   0          12m   ---.--.-.-   ***********************   <none>           <none>

- View all services: kubectl get service(s)

    [root@*********************** ~]# kubectl get services
    NAME         TYPE        CLUSTER-IP   EXTERNAL-IP   PORT(S)   AGE
    kubernetes   ClusterIP   10.96.0.1    <none>        443/TCP   4d3h

- View all namespaces: kubectl get namespace(s)

    [root@*********************** ~]# kubectl get namespaces
    NAME              STATUS   AGE
    default           Active   4d3h
    kube-node-lease   Active   4d3h
    kube-public       Active   4d3h
    kube-system       Active   4d3h

1. Resources that do not specify a namespace go into the namespace named default

2. The kube-* namespaces belong to the k8s system itself

- Modify the number of replicas

That is, change the number of pods. If the number of replicas is not specified, the default is 1.

    # Scale to 3 pods
    [root@*********************** ~]# kubectl scale deployments/my-nginx --replicas=3
    deployment.apps/my-nginx scaled
    [root@*********************** ~]# kubectl get deployments
    NAME       READY   UP-TO-DATE   AVAILABLE   AGE
    my-nginx   1/3     3            1           33m
    [root@*********************** ~]# kubectl get pods
    NAME                       READY   STATUS    RESTARTS   AGE
    my-nginx-b7d7bc74d-ht5fx   1/1     Running   0          36s
    my-nginx-b7d7bc74d-mc9p5   1/1     Running   0          36s
    my-nginx-b7d7bc74d-qq56l   1/1     Running   0          33m

    # Scale to 2 pods
    [root@*********************** ~]# kubectl scale deployments/my-nginx --replicas=2
    deployment.apps/my-nginx scaled
    [root@*********************** ~]# kubectl get deployments
    NAME       READY   UP-TO-DATE   AVAILABLE   AGE
    my-nginx   2/2     2            2           38m
    [root@*********************** ~]# kubectl get pods
    NAME                       READY   STATUS    RESTARTS   AGE
    my-nginx-b7d7bc74d-mc9p5   1/1     Running   0          5m49s
    my-nginx-b7d7bc74d-qq56l   1/1     Running   0          38m

3. Basic concepts

1.Cluster

A collection of resources. k8s uses these resources to run a variety of container-based applications.

2.Master

- The brain of the cluster; it issues the commands

- Its main task is scheduling, i.e. deciding where applications run; more than one master can be run

3.Node

- Responsible for running container applications

- Managed by the master; monitors containers and reports their status

4.Pod

The smallest unit of work in k8s; a Pod contains 1 to N containers

- Usage patterns:

  - One Pod runs one container (the most common case)

  - One Pod runs multiple containers (less common). These containers are usually closely related, need to share resources, and are started and stopped together

5.Controller

- Manages Pods

- For different business scenarios, k8s offers a variety of Controllers, including Deployment, ReplicaSet, DaemonSet, StatefulSet, and Job

- Deployment

  1. The most commonly used Controller. It manages multiple replicas of a Pod (e.g. --replicas=3) and ensures that the Pods run as expected

  2. In essence: the Deployment creates a ReplicaSet, and the ReplicaSet creates the Pods

- ReplicaSet

  Also manages multiple replicas of a Pod

- DaemonSet

  Runs at most one Pod replica on each Node

- StatefulSet

  Ensures that replicas are started, updated, and deleted in a fixed order

6.Service

Provides a load-balanced, fixed IP and port for a set of Pods. Because Pods are unstable and their IPs change, clients need a fixed IP and port to reach them.

- Difference between Controller and Service:

  - A Controller is responsible for running containers

  - A Service is responsible for providing access to containers

7.Namespace

Resource isolation

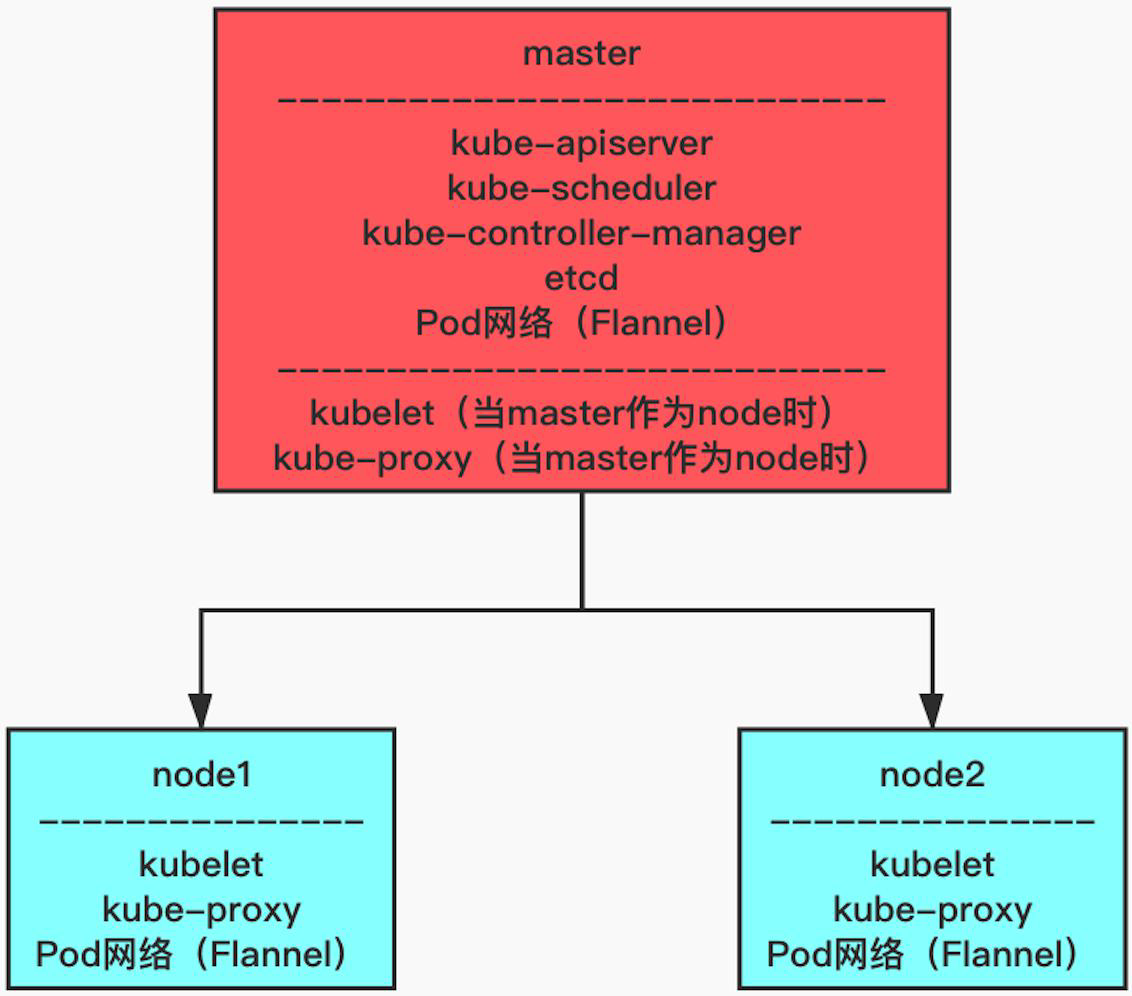

4. A first look at the k8s architecture

1.kubelet is the only Kubernetes component that does not run as a container

2.k8s architecture, mainly composed of Master and Node

1. Execution process

When an application is deployed with two replicas:

- kubectl sends the deployment request to the API Server

- The API Server notifies the Controller Manager to create a Deployment resource

- The Scheduler performs the scheduling task and assigns the two Pod replicas to node1 and node2

- The kubelet on node1 and on node2 creates and runs the Pod on its own node

2.Master

- API Server

  The front-end interface of the cluster; it exposes an HTTP/HTTPS RESTful API through which instructions are submitted

- Scheduler

  Decides which Node each Pod is placed on

- Controller Manager

  Runs the controllers that manage the resources in the cluster

- etcd

  Stores the k8s configuration and the state of all resources. When data changes, etcd quickly notifies the relevant components

3.Node

- kubelet

  Creates and runs containers

- kube-proxy

  Forwards requests to Pods, and load-balances across them when there are multiple replicas

5. Deployment

1. Creation method

- kubectl command

  - kubectl run

    kubectl run nginx-deployment --image nginx:1.7.9 --replicas=2

    [root@*********************** nginx]# kubectl run nginx-deployment --image nginx:1.7.9 --replicas=2
    Flag --replicas has been deprecated, has no effect and will be removed in the future.
    pod/deployments created

    The properties of the resource are specified through command-line parameters. Note that --replicas has been deprecated for kubectl run since k8s v1.18.0 and no longer has any effect, so the command creates a single Pod; creating resources with kubectl apply is recommended instead.

  - kubectl create

    kubectl create deployment nginx-deployment --image nginx:1.7.9 --replicas=2

- Configuration file + kubectl apply

  - Write a yml configuration file (custom names inside the file must not contain uppercase letters)

    For example:

    # API version
    apiVersion: apps/v1
    # Resource type: Pod/ReplicationController/Deployment/Service/Ingress
    kind: Deployment
    metadata:
      # Name of this resource
      name: nginx-deployment
    spec:
      selector:
        matchLabels:
          # Label of the Pods to manage; a Service published later must use a matching selector
          app: nginx
      # Number of replicas to deploy (default: 1)
      replicas: 2
      template:
        # Define at least one label; the key and value can be chosen freely
        metadata:
          labels:
            app: nginx
        # Pod specification: defines the properties of each container in the Pod; name and image are required
        spec:
          # Container configuration; this is a list, so multiple containers can be configured
          containers:
            # Container name (required)
            - name: nginx
              # Container image (required)
              image: nginx:1.7.9
              # Pull policy: 'Always', 'IfNotPresent', or 'Never'
              # IfNotPresent pulls the image only when it is not already present locally
              imagePullPolicy: IfNotPresent
              ports:
                # Port exposed by the container
                - containerPort: 80

  - Run the command

    [root@*********************** nginx]# kubectl apply -f nginx.yaml
    deployment.apps/nginx-deployment created

-

2. Delete command

[root@*********************** ~]# kubectl delete deployment my-nginx

deployment.apps "my-nginx" deleted

Notes

- If the Deployment specifies two Pods and you delete one of them, a replacement is created so that two Pods are running again, as the Deployment configuration requires

- When a Deployment is deleted, its Pods are deleted automatically

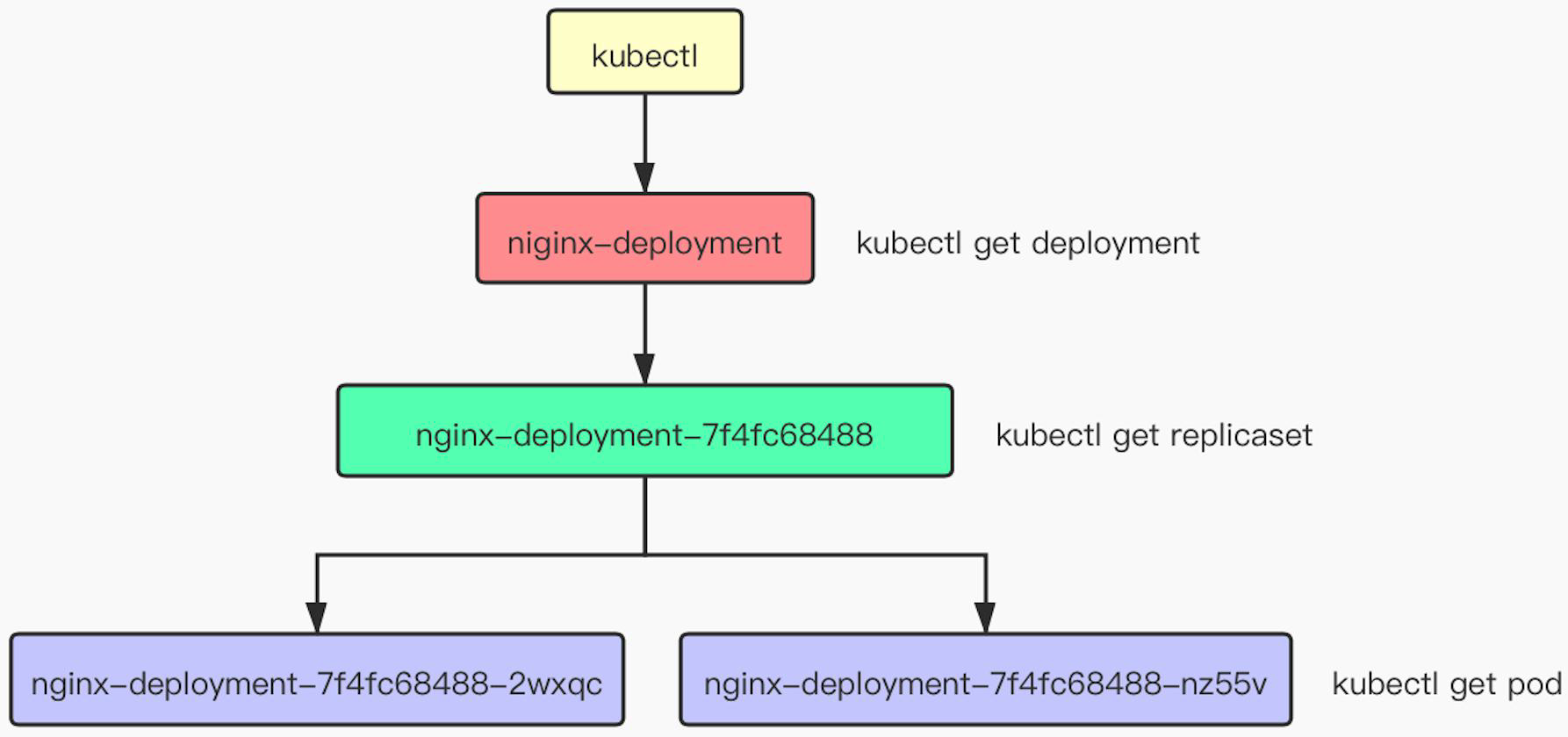
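The first note above can be pictured as a reconciliation loop: the controller compares the desired replica count with the Pods that actually exist, then creates or removes Pods until the two match. The Python sketch below is only a toy model of that idea, not Kubernetes code. The function name reconcile and the generated Pod names are made up for illustration.

```python
import itertools

# Hypothetical name generator; real Pod names get a random suffix instead.
_suffix = itertools.count(1)


def reconcile(desired, pods):
    """Return the Pod list after one reconciliation pass (toy model)."""
    pods = list(pods)
    # Too few Pods: create replacements until the desired count is reached.
    while len(pods) < desired:
        pods.append(f"my-nginx-{next(_suffix)}")
    # Too many Pods: drop the surplus.
    del pods[desired:]
    return pods


pods = reconcile(2, [])      # the Deployment asks for two replicas
pods.pop(0)                  # someone deletes one Pod by hand
pods = reconcile(2, pods)    # the controller notices and adds one back
print(len(pods))             # 2
```

The same loop also explains scaling: changing the desired count from 3 to 2 simply makes the next pass remove one Pod.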

3. Creation process

kubectl -> creates the Deployment -> creates the ReplicaSet -> creates the Pods

- Deployment description

    [root@*********************** nginx]# kubectl describe deployment nginx-deployment
    Name:          nginx-deployment
    Namespace:     default
    . . .
    NewReplicaSet: nginx-deployment-5d59d67564 (2/2 replicas created)
    . . .

- ReplicaSet description

    [root@*********************** nginx]# kubectl describe rs nginx-deployment-5d59d67564
    Name:       nginx-deployment-5d59d67564
    Namespace:  default
    . . .
    . . .
    Events:
      Type    Reason            Age   From                   Message
      ----    ------            ----  ----                   -------
      Normal  SuccessfulCreate  13m   replicaset-controller  Created pod: nginx-deployment-5d59d67564-mcrsm
      Normal  SuccessfulCreate  13m   replicaset-controller  Created pod: nginx-deployment-5d59d67564-qt7rc

- Pod description

    [root@*********************** nginx]# kubectl describe pod nginx-deployment-5d59d67564-mcrsm
    Name:           nginx-deployment-5d59d67564-mcrsm
    Namespace:      default
    . . .
    Controlled By:  ReplicaSet/nginx-deployment-5d59d67564
    . . .
    Events:
      Type    Reason     Age   From               Message
      ----    ------     ----  ----               -------
      Normal  Scheduled  17m   default-scheduler  Successfully assigned default/nginx-deployment-5d59d67564-mcrsm to ***********************
      Normal  Pulled     17m   kubelet            Container image "nginx:1.7.9" already present on machine
      Normal  Created    17m   kubelet            Created container nginx
      Normal  Started    17m   kubelet            Started container nginx

6. Label

By default, the Scheduler may place a Pod on any available Node, but in some cases we want to deploy a Pod to a specific Node by means of a label, for example:

- Pods with heavy disk I/O should be deployed to Nodes equipped with SSDs

- Pods that need a GPU must be deployed to a Node equipped with a GPU

- View the label information of nodes

    [root@*********************** nginx]# kubectl get node
    NAME                      STATUS   ROLES                  AGE     VERSION
    ***********************   Ready    control-plane,master   4d22h   v1.22.3
    [root@*********************** nginx]# kubectl get node --show-labels
    NAME                      STATUS   ROLES                  AGE     VERSION   LABELS
    ***********************   Ready    control-plane,master   4d22h   v1.22.3   beta.kubernetes.io/arch=amd64,beta.kubernetes.io/os=linux,kubernetes.io/arch=amd64,kubernetes.io/hostname=***********************,kubernetes.io/os=linux,minikube.k8s.io/commit=76b94fb3c4e8ac5062daf70d60cf03ddcc0a741b,minikube.k8s.io/name=minikube,minikube.k8s.io/updated_at=2021_11_19T17_01_50_0700,minikube.k8s.io/version=v1.24.0,node-role.kubernetes.io/control-plane=,node-role.kubernetes.io/master=,node.kubernetes.io/exclude-from-external-load-balancers=

- Add/remove labels

    # Add a label
    [root@*********************** nginx]# kubectl label node *********************** disktype=ssd
    node/*********************** labeled
    [root@*********************** nginx]# kubectl get node --show-labels
    NAME                      STATUS   ROLES                  AGE     VERSION   LABELS
    ***********************   Ready    control-plane,master   4d22h   v1.22.3   beta.kubernetes.io/arch=amd64,beta.kubernetes.io/os=linux,disktype=ssd,kubernetes.io/arch=amd64,kubernetes.io/hostname=***********************,kubernetes.io/os=linux,minikube.k8s.io/commit=76b94fb3c4e8ac5062daf70d60cf03ddcc0a741b,minikube.k8s.io/name=minikube,minikube.k8s.io/updated_at=2021_11_19T17_01_50_0700,minikube.k8s.io/version=v1.24.0,node-role.kubernetes.io/control-plane=,node-role.kubernetes.io/master=,node.kubernetes.io/exclude-from-external-load-balancers=

    # Delete a label (append "-" to the key)
    [root@*********************** nginx]# kubectl label node *********************** disktype-
    node/*********************** labeled
    [root@*********************** nginx]# kubectl get node --show-labels
    NAME                      STATUS   ROLES                  AGE     VERSION   LABELS
    ***********************   Ready    control-plane,master   4d22h   v1.22.3   beta.kubernetes.io/arch=amd64,beta.kubernetes.io/os=linux,kubernetes.io/arch=amd64,kubernetes.io/hostname=***********************,kubernetes.io/os=linux,minikube.k8s.io/commit=76b94fb3c4e8ac5062daf70d60cf03ddcc0a741b,minikube.k8s.io/name=minikube,minikube.k8s.io/updated_at=2021_11_19T17_01_50_0700,minikube.k8s.io/version=v1.24.0,node-role.kubernetes.io/control-plane=,node-role.kubernetes.io/master=,node.kubernetes.io/exclude-from-external-load-balancers=

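- Modify the xx.yml configuration file so that the Pod template requests the label (for example, a nodeSelector with disktype: ssd under the Pod spec)

- Redeploy the Deployment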
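Once a node carries a label, placement comes down to a label match: a node is eligible for a Pod that requests disktype=ssd (for example through a nodeSelector in its spec) only if the node has every requested key/value pair. The Python sketch below illustrates that equality-based matching. The node names node-a and node-b and the helper names are hypothetical, and the real scheduler also considers resources, taints, and other constraints.

```python
def matches(selector, labels):
    """True if every key/value pair in the selector is present in the labels."""
    return all(labels.get(key) == value for key, value in selector.items())


def eligible_nodes(node_selector, nodes):
    """Return the names of the nodes whose labels satisfy the selector."""
    return [name for name, labels in nodes.items() if matches(node_selector, labels)]


# Hypothetical two-node cluster; only node-a has been labeled disktype=ssd.
nodes = {
    "node-a": {"kubernetes.io/os": "linux", "disktype": "ssd"},
    "node-b": {"kubernetes.io/os": "linux"},
}

print(eligible_nodes({"disktype": "ssd"}, nodes))  # ['node-a']
print(eligible_nodes({}, nodes))                   # ['node-a', 'node-b']
```

An empty selector matches every node, which corresponds to the default behaviour described at the start of this section. The same kind of match links a Service selector such as run=httpd to the Pods that carry that label.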

7. Job

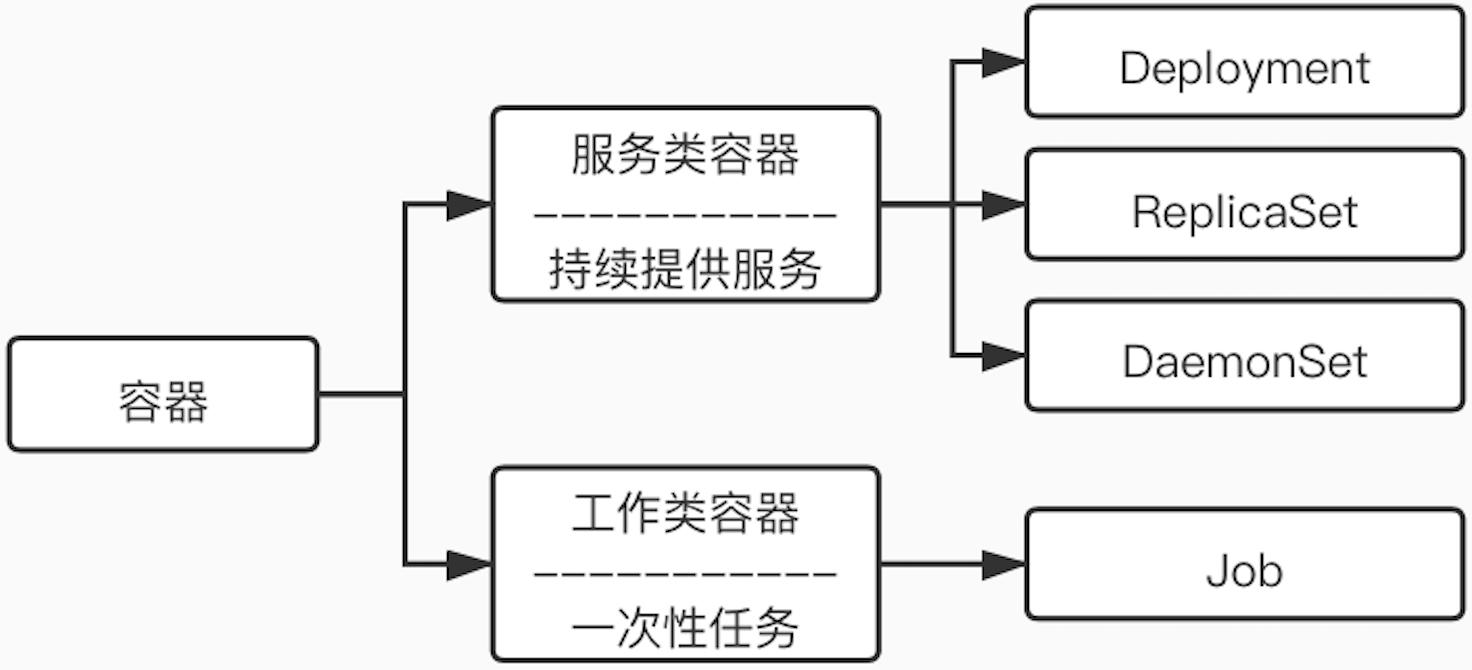

1. Classification of container types

- Containers can be classified by how long they run

  - Service-type containers

    - Provide a service continuously and therefore need to run all the time, e.g. an HTTP server or a daemon

    - Deployment, ReplicaSet, and DaemonSet are all used to manage service-type containers

  - Work-type containers

    - Run a one-off task, such as a batch program, and exit when it finishes

    - Job is used to manage work-type containers

2. Create Jobs

- Normal Job

    apiVersion: batch/v1
    kind: Job
    metadata:
      name: myjob
    spec:
      # Number of completions required
      # completions: 6
      # Number of Pods to run in parallel
      # parallelism: 2
      template:
        metadata:
          name: myjob
        spec:
          containers:
            - name: hello
              image: busybox
              command: ["echo", "hello k8s job!"]
              # command: ["invalid_command", "hello k8s job!"]
          restartPolicy: Never
          # restartPolicy: OnFailure

  - command: ["echo", "hello k8s job!"]

    [root@*********************** deployConfigFile]# kubectl apply -f myJob.yaml
    job.batch/myjob created
    [root@*********************** ~]# kubectl get job
    NAME    COMPLETIONS   DURATION   AGE
    myjob   1/1           22s        16h
    [root@*********************** ~]# kubectl get pods
    NAME             READY   STATUS      RESTARTS   AGE
    myjob--1-jvttw   0/1     Completed   0          16h
    [root@*********************** ~]# kubectl logs myjob--1-jvttw
    hello k8s job!

  - command: ["invalid_command", "hello k8s job!"]

    - restartPolicy: Never (the failed container is not restarted; a new Pod is created instead)

    [root@*********************** deployConfigFile]# kubectl get pods
    NAME             READY   STATUS               RESTARTS   AGE
    myjob--1-2z8dk   0/1     ContainerCreating    0          9s
    myjob--1-rlzmk   0/1     ContainerCannotRun   0          28s

    - restartPolicy: OnFailure (the container is restarted inside the same Pod)

    [root@*********************** deployConfigFile]# kubectl get pods
    NAME             READY   STATUS              RESTARTS     AGE
    myjob--1-xkbrj   0/1     RunContainerError   1 (1s ago)   35s

- Scheduled Job (CronJob)

    # apiVersion: batch/v1
    apiVersion: batch/v2alpha1
    kind: CronJob
    metadata:
      name: hello
    spec:
      schedule: "*/1 * * * *"
      jobTemplate:
        spec:
          template:
            spec:
              containers:
                - name: hello
                  image: busybox
                  command: ["echo", "hello k8s cronJob!"]
              restartPolicy: OnFailure

  - Possible error message

    [root@*********************** deployConfigFile]# kubectl apply -f myCronJob.yaml
    error: unable to recognize "myCronJob.yaml": no matches for kind "CronJob" in version "batch/v2alpha1"

  - View the supported API versions

    [root@*********************** deployConfigFile]# kubectl api-versions
    admissionregistration.k8s.io/v1
    apiextensions.k8s.io/v1
    apiregistration.k8s.io/v1
    # Common
    apps/v1
    authentication.k8s.io/v1
    authorization.k8s.io/v1
    autoscaling/v1
    autoscaling/v2beta1
    autoscaling/v2beta2
    # Common
    batch/v1
    batch/v1beta1
    certificates.k8s.io/v1
    coordination.k8s.io/v1
    discovery.k8s.io/v1
    discovery.k8s.io/v1beta1
    events.k8s.io/v1
    events.k8s.io/v1beta1
    flowcontrol.apiserver.k8s.io/v1beta1
    networking.k8s.io/v1
    node.k8s.io/v1
    node.k8s.io/v1beta1
    policy/v1
    policy/v1beta1
    rbac.authorization.k8s.io/v1
    scheduling.k8s.io/v1
    storage.k8s.io/v1
    storage.k8s.io/v1beta1
    v1

  - Option 1: enable batch/v2alpha1

    [root@*********************** deployConfigFile]# vim /etc/kubernetes/manifests/kube-apiserver.yaml

    spec:
      containers:
      - command:
        - kube-apiserver
        - --runtime-config=batch/v2alpha1=true
        . . .

    - A manual restart may be required: systemctl restart kubelet

    - The API server may fail to restart. The usual advice is to upgrade Kubernetes to 1.5 or later; on a cluster whose kubectl api-versions output lists only batch/v1 and batch/v1beta1, as above, batch/v2alpha1 is not available and batch/v1 should be used instead

  - Option 2: use apiVersion: batch/v1 (see the reference documentation)

    [root@*********************** deployConfigFile]# kubectl apply -f myCronJob.yaml
    cronjob.batch/hello created
    [root@*********************** deployConfigFile]# kubectl get cronJob
    NAME    SCHEDULE      SUSPEND   ACTIVE   LAST SCHEDULE   AGE
    hello   */1 * * * *   False     0        <none>          37s
    [root@*********************** deployConfigFile]# kubectl get pods
    NAME                      READY   STATUS              RESTARTS   AGE
    hello-27296887--1-7nk7q   0/1     Completed           0          2m8s
    hello-27296888--1-dg6nh   0/1     Completed           0          67s
    hello-27296889--1-t5gqx   0/1     ContainerCreating   0          8s

8. Service

1. Role

A Service provides a fixed IP and port in front of a set of Pods. Even if a Pod's IP changes, clients still talk to the fixed IP and port of the Service.

- A Pod is not robust: the containers in a Pod are likely to go down because of various failures

- Controllers such as Deployment ensure overall robustness by dynamically creating and destroying Pods, so the application as a whole stays robust

2. Create

- Create the Deployment (the labels in the Pod template must match spec.selector.matchLabels)

    apiVersion: apps/v1
    kind: Deployment
    metadata:
      name: httpd
    spec:
      selector:
        matchLabels:
          run: httpd
      replicas: 3
      template:
        metadata:
          labels:
            run: httpd
        spec:
          containers:
            - name: httpd
              image: httpd
              ports:
                - containerPort: 80

    [root@*********************** httpd]# kubectl apply -f httpd.yaml
    deployment.apps/httpd created
    [root@*********************** httpd]# kubectl get pods
    NAME                     READY   STATUS    RESTARTS   AGE
    httpd-5cbd65d46c-f7hgm   1/1     Running   0          2m35s
    httpd-5cbd65d46c-qvmqw   1/1     Running   0          2m35s
    httpd-5cbd65d46c-xzbkv   1/1     Running   0          2m35s
    [root@*********************** httpd]# kubectl get pods -o wide
    NAME                     READY   STATUS    RESTARTS   AGE     IP           NODE                      NOMINATED NODE   READINESS GATES
    httpd-5cbd65d46c-f7hgm   1/1     Running   0          3m16s   172.18.0.6   ***********************   <none>           <none>
    httpd-5cbd65d46c-qvmqw   1/1     Running   0          3m16s   172.18.0.4   ***********************   <none>           <none>
    httpd-5cbd65d46c-xzbkv   1/1     Running   0          3m16s   172.18.0.5   ***********************   <none>           <none>
    [root@*********************** httpd]# curl 172.18.0.6
    <html><body><h1>It works!</h1></body></html>
    [root@*********************** httpd]# curl 172.18.0.5
    <html><body><h1>It works!</h1></body></html>
    [root@*********************** httpd]# curl 172.18.0.4
    <html><body><h1>It works!</h1></body></html>

- Create the Service

    apiVersion: v1
    kind: Service
    metadata:
      name: httpd-svc
    spec:
      selector:
        run: httpd
      ports:
        - protocol: TCP
          port: 8080
          targetPort: 80

    [root@*********************** httpd]# kubectl apply -f httpdSvc.yaml
    service/httpd-svc created
    [root@*********************** httpd]# kubectl get service
    NAME        TYPE        CLUSTER-IP     EXTERNAL-IP   PORT(S)    AGE
    httpd-svc   ClusterIP   10.106.23.93   <none>        8080/TCP   5m57s
    [root@*********************** httpd]# curl 10.106.23.93:8080
    <html><body><h1>It works!</h1></body></html>

- How the Service is associated with the Pods

  - kubectl describe service httpd-svc

    [root@*********************** httpd]# kubectl describe service httpd-svc
    Name:              httpd-svc
    Namespace:         default
    Labels:            <none>
    Annotations:       <none>
    Selector:          run=httpd
    Type:              ClusterIP
    IP Family Policy:  SingleStack
    IP Families:       IPv4
    IP:                10.106.23.93
    IPs:               10.106.23.93
    Port:              <unset>  8080/TCP
    TargetPort:        80/TCP
    Endpoints:         172.18.0.4:80,172.18.0.5:80,172.18.0.6:80
    . . .

  - iptables-save

    [root@*********************** httpd]# iptables-save
    . . .
    -A KUBE-SERVICES -d 10.106.23.93/32 -p tcp -m comment --comment "default/httpd-svc cluster IP" -m tcp --dport 8080 -j KUBE-SVC-IYRDZZKXS5EOQ6Q6
    . . .
    -A KUBE-SVC-IYRDZZKXS5EOQ6Q6 ! -s 10.244.0.0/16 -d 10.106.23.93/32 -p tcp -m comment --comment "default/httpd-svc cluster IP" -m tcp --dport 8080 -j KUBE-MARK-MASQ
    -A KUBE-SVC-IYRDZZKXS5EOQ6Q6 -m comment --comment "default/httpd-svc" -m statistic --mode random --probability 0.33333333349 -j KUBE-SEP-CERU7ZF6K2KWGLTG
    -A KUBE-SVC-IYRDZZKXS5EOQ6Q6 -m comment --comment "default/httpd-svc" -m statistic --mode random --probability 0.50000000000 -j KUBE-SEP-FEWNLSQ65IOCPPEH
    -A KUBE-SVC-IYRDZZKXS5EOQ6Q6 -m comment --comment "default/httpd-svc" -j KUBE-SEP-VEOTCWMCCOB2VENI
    -A KUBE-SEP-CERU7ZF6K2KWGLTG -s 172.18.0.4/32 -m comment --comment "default/httpd-svc" -j KUBE-MARK-MASQ
    -A KUBE-SEP-CERU7ZF6K2KWGLTG -p tcp -m comment --comment "default/httpd-svc" -m tcp -j DNAT --to-destination 172.18.0.4:80
    -A KUBE-SEP-FEWNLSQ65IOCPPEH -s 172.18.0.5/32 -m comment --comment "default/httpd-svc" -j KUBE-MARK-MASQ
    -A KUBE-SEP-FEWNLSQ65IOCPPEH -p tcp -m comment --comment "default/httpd-svc" -m tcp -j DNAT --to-destination 172.18.0.5:80
    -A KUBE-SEP-VEOTCWMCCOB2VENI -s 172.18.0.6/32 -m comment --comment "default/httpd-svc" -j KUBE-MARK-MASQ
    -A KUBE-SEP-VEOTCWMCCOB2VENI -p tcp -m comment --comment "default/httpd-svc" -m tcp -j DNAT --to-destination 172.18.0.6:80
    . . .
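The --probability values in the KUBE-SVC rules above look uneven (about 0.333, then 0.5, then no probability at all on the last rule). Because the rules are tried in order, each of the three endpoints still ends up with an equal share of the traffic. The Python sketch below works through that arithmetic with exact fractions. The function names are illustrative only.

```python
from fractions import Fraction


def rule_probabilities(n):
    """Per-rule match probability for n endpoints: 1/n, 1/(n-1), ..., 1."""
    return [Fraction(1, n - i) for i in range(n)]


def endpoint_shares(rule_probs):
    """Overall share of traffic per rule when the rules are tried in order."""
    shares = []
    remaining = Fraction(1)  # fraction of traffic that reaches the current rule
    for p in rule_probs:
        shares.append(remaining * p)
        remaining *= 1 - p   # traffic that falls through to the next rule
    return shares


probs = rule_probabilities(3)
print([str(p) for p in probs])                   # ['1/3', '1/2', '1']
print([str(s) for s in endpoint_shares(probs)])  # ['1/3', '1/3', '1/3']
```

The second rule only sees the two thirds of the traffic that the first rule did not take, so matching half of it yields one third overall; the final rule takes whatever is left.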


9. Rolling Update

A built-in k8s feature

- What is a rolling update

  Only a small number of replicas are updated at a time; once they succeed, more replicas are updated, until eventually all replicas have been updated

- Advantage

  The biggest benefit is zero downtime: some replicas are running throughout the update, which ensures business continuity

- Example

  - Update

    apiVersion: apps/v1
    kind: Deployment
    metadata:
      name: httpd
    spec:
      selector:
        matchLabels:
          run: httpd
      replicas: 3
      template:
        metadata:
          labels:
            run: httpd
        spec:
          containers:
            - name: httpd
              image: httpd:2.2.31
              # image: httpd:2.2.32
              imagePullPolicy: IfNotPresent
              ports:
                - containerPort: 80

    [root@*********************** httpd]# kubectl apply -f httpdRollingUpdate.yaml
    deployment.apps/httpd configured
    [root@*********************** httpd]# vim httpdRollingUpdate.yaml
    [root@*********************** httpd]# kubectl apply -f httpdRollingUpdate.yaml
    deployment.apps/httpd configured
    [root@*********************** httpd]# kubectl get pod
    NAME                     READY   STATUS              RESTARTS   AGE
    httpd-55f56846f-875sg    1/1     Running             0          108s
    httpd-55f56846f-ggqb5    1/1     Running             0          108s
    httpd-55f56846f-tjn66    1/1     Running             0          108s
    httpd-846d8d79dd-d7bzk   0/1     ContainerCreating   0          13s
    [root@*********************** httpd]# kubectl describe deployment httpd
    Name:       httpd
    Namespace:  default
    . . .
    Events:
      Type    Reason             Age    From                   Message
      ----    ------             ----   ----                   -------
      Normal  ScalingReplicaSet  3m12s  deployment-controller  Scaled up replica set httpd-55f56846f to 3
      Normal  ScalingReplicaSet  97s    deployment-controller  Scaled up replica set httpd-846d8d79dd to 1
      Normal  ScalingReplicaSet  58s    deployment-controller  Scaled down replica set httpd-55f56846f to 2
      Normal  ScalingReplicaSet  58s    deployment-controller  Scaled up replica set httpd-846d8d79dd to 2
      Normal  ScalingReplicaSet  53s    deployment-controller  Scaled down replica set httpd-55f56846f to 1
      Normal  ScalingReplicaSet  53s    deployment-controller  Scaled up replica set httpd-846d8d79dd to 3
      Normal  ScalingReplicaSet  48s    deployment-controller  Scaled down replica set httpd-55f56846f to 0
    [root@*********************** httpd]# kubectl get rs
    NAME               DESIRED   CURRENT   READY   AGE
    httpd-55f56846f    0         0         0       3m49s
    httpd-846d8d79dd   3         3         3       2m14s
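The alternating "Scaled up" and "Scaled down" events above can be reproduced with a small simulation. The Python sketch below assumes that at most one extra Pod may exist during the update (max_surge=1) and that no Pod may be missing (max_unavailable=0), which is what the default 25% settings are understood to round to for three replicas. It also assumes that new Pods become ready instantly. The function name and event strings are made up for illustration; this is not the Deployment controller's actual code.

```python
def rolling_update(replicas, max_surge=1, max_unavailable=0):
    """Simulate a rolling update and return the list of scaling events."""
    if max_surge == 0 and max_unavailable == 0:
        raise ValueError("max_surge and max_unavailable cannot both be 0")
    old, new = replicas, 0  # Pods in the old and the new ReplicaSet
    events = []
    while old > 0 or new < replicas:
        if new < replicas and old + new < replicas + max_surge:
            # There is room under the surge limit: start one new Pod.
            new += 1
            events.append(("scale up new", new))
        else:
            # Otherwise retire one old Pod; the limits above guarantee that
            # the total never drops below replicas - max_unavailable.
            old -= 1
            events.append(("scale down old", old))
    return events


for event in rolling_update(3):
    print(event)
# ('scale up new', 1)
# ('scale down old', 2)
# ('scale up new', 2)
# ('scale down old', 1)
# ('scale up new', 3)
# ('scale down old', 0)
```

The printed sequence follows the same order as the ScalingReplicaSet events in the kubectl describe output: the new ReplicaSet grows by one, then the old one shrinks by one, until the old ReplicaSet reaches 0.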

  - Record revisions for rollback (--record)

    httpd.v1.yaml:

    apiVersion: apps/v1
    kind: Deployment
    metadata:
      name: httpd
    spec:
      selector:
        matchLabels:
          run: httpd
      revisionHistoryLimit: 10
      replicas: 3
      template:
        metadata:
          labels:
            run: httpd
        spec:
          containers:
            - name: httpd
              image: httpd:2.4.16
              imagePullPolicy: IfNotPresent
              ports:
                - containerPort: 80

    httpd.v2.yaml:

    apiVersion: apps/v1
    kind: Deployment
    metadata:
      name: httpd
    spec:
      selector:
        matchLabels:
          run: httpd
      revisionHistoryLimit: 10
      replicas: 3
      template:
        metadata:
          labels:
            run: httpd
        spec:
          containers:
            - name: httpd
              image: httpd:2.4.18
              imagePullPolicy: IfNotPresent
              ports:
                - containerPort: 80

    [root@*********************** httpd]# kubectl apply -f httpd.v1.yaml --record
    deployment.apps/httpd created
    [root@*********************** httpd]# kubectl get deploy -o wide
    NAME    READY   UP-TO-DATE   AVAILABLE   AGE   CONTAINERS   IMAGES         SELECTOR
    httpd   3/3     3            3           47s   httpd        httpd:2.4.16   run=httpd
    [root@*********************** httpd]# kubectl apply -f httpd.v2.yaml --record
    deployment.apps/httpd configured
    [root@*********************** httpd]# kubectl get deploy -o wide
    NAME    READY   UP-TO-DATE   AVAILABLE   AGE    CONTAINERS   IMAGES         SELECTOR
    httpd   3/3     3            3           100s   httpd        httpd:2.4.18   run=httpd
    [root@*********************** httpd]# kubectl rollout history deployment httpd
    deployment.apps/httpd
    REVISION  CHANGE-CAUSE
    1         kubectl apply --filename=httpd.v1.yaml --record=true
    2         kubectl apply --filename=httpd.v2.yaml --record=true

  - Rollback

    [root@*********************** httpd]# kubectl rollout undo deployment httpd --to-revision=1
    deployment.apps/httpd rolled back
    [root@*********************** httpd]# kubectl rollout history deployment httpd
    deployment.apps/httpd
    REVISION  CHANGE-CAUSE
    2         kubectl apply --filename=httpd.v2.yaml --record=true
    3         kubectl apply --filename=httpd.v1.yaml --record=true
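In the history above, revision 1 disappears after the rollback and reappears as revision 3. A rollback does not rewind the counter: it rolls the old template out again under the next revision number. The Python sketch below is a toy model of that bookkeeping. It stores the history in a plain dictionary and uses the image tag as a stand-in for the whole Pod template, and both function names are hypothetical.

```python
def rollout(history, template):
    """Record a rollout of `template` under the next revision number."""
    next_revision = max(history, default=0) + 1
    # If this template was rolled out before, its old entry is dropped,
    # because that revision is re-numbered rather than duplicated.
    for revision, recorded in list(history.items()):
        if recorded == template:
            del history[revision]
    history[next_revision] = template
    return history


def undo(history, to_revision):
    """Roll back by rolling out the template stored under `to_revision`."""
    return rollout(history, history[to_revision])


history = {}
rollout(history, "httpd:2.4.16")   # revision 1 (httpd.v1.yaml)
rollout(history, "httpd:2.4.18")   # revision 2 (httpd.v2.yaml)
undo(history, to_revision=1)       # 2.4.16 comes back as revision 3
print(history)                     # {2: 'httpd:2.4.18', 3: 'httpd:2.4.16'}
```

This also shows why a second rollback to "revision 1" would fail in this model: after the first rollback that number no longer exists, and the 2.4.16 template is reachable as revision 3.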
