I. Introduction to the dataset

UCF101 is an action recognition dataset of realistic action videos collected from YouTube. It provides 13,320 videos from 101 action categories. Official website: https://www.crcv.ucf.edu/research/data-sets/ucf101/

- Dataset name: UCF-101 (2012)

- Total Videos: 13,320 Videos

- Total duration: 27 hours

- Video source: YouTube collection

- Number of categories: 101

- The actions fall into five main types: human-object interaction, body motion only, human-human interaction, playing musical instruments, and sports

- Each category (folder) is divided into 25 groups, with 4-7 short videos of varying duration in each group

- Specific categories: Apply Eye Makeup, Apply Lipstick, Archery, Baby Crawling, Balance Beam, Band Marching, Baseball Pitch, Basketball Shooting, Basketball Dunk, Bench Press, Biking, Billiards Shot, Blow Dry Hair, Blowing Candles, Body Weight Squats, Bowling, Boxing Punching Bag, Boxing Speed Bag, Breaststroke, Brushing Teeth, Clean and Jerk, Cliff Diving, Cricket Bowling, Cricket Shot, Cutting In Kitchen, Diving, Drumming, Fencing, Field Hockey Penalty, Floor Gymnastics, Frisbee Catch, Front Crawl, Golf Swing, Haircut, Hammer Throw, Hammering, Handstand Pushups, Handstand Walking, Head Massage, High Jump, Horse Race, Horse Riding, Hula Hoop, Ice Dancing, Javelin Throw, Juggling Balls, Jump Rope, Jumping Jack, Kayaking, Knitting, Long Jump, Lunges, Military Parade, Mixing Batter, Mopping Floor, Nunchucks, Parallel Bars, Pizza Tossing, Playing Guitar, Playing Piano, Playing Tabla, Playing Violin, Playing Cello, Playing Daf, Playing Dhol, Playing Flute, Playing Sitar, Pole Vault, Pommel Horse, Pull Ups, Punch, Push Ups, Rafting, Rock Climbing Indoor, Rope Climbing, Rowing, Salsa Spins, Shaving Beard, Shotput, Skate Boarding, Skiing, Skijet, Sky Diving, Soccer Juggling, Soccer Penalty, Still Rings, Sumo Wrestling, Surfing, Swing, Table Tennis Shot, Tai Chi, Tennis Swing, Throw Discus, Trampoline Jumping, Typing, Uneven Bars, Volleyball Spiking, Walking with a Dog, Wall Pushups, Writing On Board, Yo Yo
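The group structure described above can be read straight from the file names. The following is a minimal sketch that assumes the commonly seen naming pattern `v_<ClassName>_g<group>_c<clip>.avi`; the helper names `parse_ucf101_name` and `clips_per_group` are hypothetical and not part of any official tooling.

```python
import re
from collections import defaultdict

# Assumption: files follow the pattern v_<ClassName>_g<group>_c<clip>.avi
_NAME_RE = re.compile(r"^v_(?P<label>[A-Za-z]+)_g(?P<group>\d+)_c(?P<clip>\d+)\.avi$")


def parse_ucf101_name(filename):
    """Split a file name into (label, group number, clip number)."""
    match = _NAME_RE.match(filename)
    if match is None:
        raise ValueError(f"unexpected file name: {filename}")
    return match["label"], int(match["group"]), int(match["clip"])


def clips_per_group(filenames):
    """Count how many clips belong to each (label, group) pair."""
    counts = defaultdict(int)
    for name in filenames:
        label, group, _ = parse_ucf101_name(name)
        counts[(label, group)] += 1
    return dict(counts)


if __name__ == "__main__":
    names = [
        "v_Archery_g01_c01.avi",
        "v_Archery_g01_c02.avi",
        "v_Archery_g02_c01.avi",
    ]
    print(parse_ucf101_name(names[0]))
    print(clips_per_group(names))
```

Grouping by the group number matters when building custom splits, because clips from the same group usually come from the same source video and should not be spread across training and validation.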

II. Dataset acquisition and decompression

1. Data Download

UCF101 data download address: http://crcv.ucf.edu/data/UCF101/UCF101.rar

Official data division download address: https://www.crcv.ucf.edu/wp-content/uploads/2019/03/UCF101TrainTestSplits-RecognitionTask.zip

Note: the dataset is about 6.46 GB. The official archive provides three alternative train/test splits; use whichever one you need.

2. Dataset decompression

The dataset is a RAR archive. cd to the folder that contains it and extract it with rar:

    rar x UCF101.rar

After extraction, the data follows the standard directory layout for classification datasets: each second-level directory is named after a human action category and contains the videos of that category.

Each short video varies in duration (from near zero to more than a dozen seconds), has a resolution of 320×240, and has a frame rate that is not fixed (25 or 29 fps). Each video contains only one type of human action.

Note: if rar is not installed locally, install it first (see a guide to installing the rar tool and its common commands on Linux). If you do not have permission to install it, contact the administrator. If the server provides Docker, you can instead use the chmod command to change permissions inside the container and install it there.
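After extraction, it can help to confirm that the directory really has the class-per-folder layout before moving any files. The sketch below uses only the standard library; the function name `summarize_dataset` and the path `./UCF-101` are assumptions for illustration.

```python
import os


def summarize_dataset(root, extension=".avi"):
    """Return {category: number of video files} for a class-per-folder layout."""
    summary = {}
    for category in sorted(os.listdir(root)):
        category_dir = os.path.join(root, category)
        if not os.path.isdir(category_dir):
            continue  # ignore stray files next to the category folders
        summary[category] = sum(
            1 for name in os.listdir(category_dir)
            if name.lower().endswith(extension)
        )
    return summary


if __name__ == "__main__":
    root = "./UCF-101"  # assumed extraction path
    if os.path.isdir(root):
        counts = summarize_dataset(root)
        print(len(counts), "categories,", sum(counts.values()), "videos")
```

For a complete, untouched extraction the totals should match the figures listed in the introduction (101 categories, 13,320 videos).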

III. Dataset partitioning

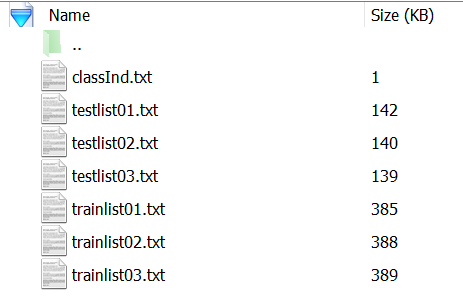

Unzip the downloaded UCF101TrainTestSplits-Recognition Task, as shown in the following figure, in three ways

Choose your own partition method. This paper uses the first partition method to move the validation set to the valfolder and divide the code:

    import os
    import shutil

    txtlist = ['testlist01.txt']  # split file(s) listing the validation videos
    dataset_dir = './UCF-101/'    # data storage path
    copy_path = './val/'          # validation set storage path

    for txtfile in txtlist:
        with open(txtfile, 'r') as f:
            for line in f:
                rel_path = line.strip()
                if not rel_path:
                    continue  # skip blank lines
                o_filename = os.path.join(dataset_dir, rel_path)
                n_filename = os.path.join(copy_path, rel_path)
                # create the category folder under val/ if it does not exist yet
                os.makedirs(os.path.dirname(n_filename), exist_ok=True)
                shutil.move(o_filename, n_filename)
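Before moving files, the split lists themselves can be checked. The sketch below assumes the usual line formats of the official lists: `Class/file.avi` in the test lists and `Class/file.avi <class index>` in the train lists. The helper names `read_split_lines` and `overlapping_paths` are hypothetical.

```python
def read_split_lines(lines):
    """Parse split-list lines into (relative_path, class_name) pairs."""
    entries = []
    for line in lines:
        line = line.strip()
        if not line:
            continue
        rel_path = line.split()[0]           # drop the optional class index
        class_name = rel_path.split("/")[0]  # the folder name is the class
        entries.append((rel_path, class_name))
    return entries


def overlapping_paths(train_entries, test_entries):
    """Return test paths that also appear in the training list."""
    train_paths = {path for path, _ in train_entries}
    return [path for path, _ in test_entries if path in train_paths]


if __name__ == "__main__":
    train = read_split_lines(["Archery/v_Archery_g08_c01.avi 3"])
    test = read_split_lines(["Archery/v_Archery_g01_c01.avi"])
    print(train, test, overlapping_paths(train, test))
```

With real files, the lines can be passed in directly from an open file object, for example `read_split_lines(open('testlist01.txt'))`. An empty overlap list suggests the chosen train and test lists belong to the same split.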

IV. Dataset preprocessing

The data can be loaded in two ways: convert the video files to pkl files first and then process those, or process the video files directly.

1. Generating pkl files

Converting the video files to pkl files speeds up data reading. The code:

    import os
    from pathlib import Path
    import random
    import cv2
    import numpy as np
    import pickle as pk
    from tqdm import tqdm
    from PIL import Image
    import multiprocessing
    import torchvision.transforms as transforms
    from torch.utils.data import Dataset


    class VideoDataset(Dataset):

        def __init__(self, directory, local_rank, num_local_rank, resize_shape=[168, 168], mode='val', clip_len=8, frame_sample_rate=2):
            folder = Path(directory)  # get the directory of the specified split
            print("Load dataset from folder : ", folder)
            self.clip_len = clip_len
            self.resize_shape = resize_shape
            self.frame_sample_rate = frame_sample_rate
            self.mode = mode

            self.fnames, labels = [], []
            for label in sorted(os.listdir(folder))[:200]:
                for fname in os.listdir(os.path.join(folder, label)):
                    if not fname.endswith('.avi'):
                        continue  # skip non-video files such as already generated .pkl files
                    self.fnames.append(os.path.join(folder, label, fname))
                    labels.append(label)

            # optional shuffling (disabled in the original)
            # random_list = list(zip(self.fnames, labels))
            # random.shuffle(random_list)
            # self.fnames[:], labels[:] = zip(*random_list)

            # prepare a mapping between the label names (strings) and indices (ints)
            self.label2index = {label: index for index, label in enumerate(sorted(set(labels)))}
            # convert the list of label names into an array of label indices
            self.label_array = np.array([self.label2index[label] for label in labels], dtype=int)

            # write the label file once instead of from every worker process
            if local_rank == 0:
                label_file = str(len(os.listdir(folder))) + 'class_labels.txt'
                with open(label_file, 'w') as f:
                    for idx, label in enumerate(sorted(self.label2index)):
                        f.write(str(idx + 1) + ' ' + label + '\n')

            # the original test `mode == 'train' or 'val' and ...` did not check the mode as intended
            if mode in ('train', 'val') and num_local_rank > 1:
                # each process converts its own contiguous slice of the file list;
                # ceiling division so that no file is left out
                single_num_ = (len(self.fnames) + num_local_rank - 1) // num_local_rank
                self.fnames = self.fnames[local_rank*single_num_:((local_rank+1)*single_num_)]
                labels = labels[local_rank*single_num_:((local_rank+1)*single_num_)]

            for file in tqdm(self.fnames, ncols=80):
                fname = file.split("/")
                self.directory = '/root/dataset/{}/{}'.format(fname[-3], fname[-2])
                if os.path.exists('{}/{}.pkl'.format(self.directory, fname[-1])):
                    continue  # already converted
                capture = cv2.VideoCapture(file)
                frame_count = int(capture.get(cv2.CAP_PROP_FRAME_COUNT))
                # as in the original, a too-short video is replaced by a randomly
                # chosen one, which is still saved under the original file's name
                while frame_count < self.clip_len:
                    capture.release()
                    index = np.random.randint(self.__len__())
                    capture = cv2.VideoCapture(self.fnames[index])
                    frame_count = int(capture.get(cv2.CAP_PROP_FRAME_COUNT))
                buffer = self.loadvideo(capture, frame_count, file)

        def __getitem__(self, index):
            # loading and preprocessing. TODO move them to transform classes
            return index

        def __len__(self):
            return len(self.fnames)

        def loadvideo(self, capture, frame_count, fname):
            # read frames from an opened VideoCapture object into a numpy array
            self.transform_nor = transforms.Compose([
                transforms.Resize([224, 224]),
            ])
            start_idx = 0
            end_idx = frame_count - 1
            frame_count_sample = frame_count // self.frame_sample_rate - 1
            if frame_count > 300:
                # for long videos, keep only a random window of 301 frames
                end_idx = np.random.randint(300, frame_count)
                start_idx = end_idx - 300
                frame_count_sample = 301 // self.frame_sample_rate - 1
            # create a uint8 buffer for the sampled frames
            buffer_normal = np.empty((frame_count_sample, 224, 224, 3), np.dtype('uint8'))

            count = 0
            retaining = True
            sample_count = 0
            # read in each frame, one at a time into the numpy buffer array
            while count <= end_idx and retaining:
                retaining, frame = capture.read()
                if count < start_idx:
                    count += 1
                    continue
                if retaining is False or count > end_idx:
                    break
                if count % self.frame_sample_rate == (self.frame_sample_rate - 1) and sample_count < frame_count_sample:
                    frame = Image.fromarray(cv2.cvtColor(frame, cv2.COLOR_BGR2RGB))
                    buffer_normal[sample_count] = np.asarray(self.transform_nor(frame))
                    sample_count += 1
                count += 1
            # drop unused slots if the stream ended earlier than the reported frame count
            buffer_normal = buffer_normal[:sample_count]

            fname = fname.split("/")
            self.directory = '/root/dataset/{}/{}'.format(fname[-3], fname[-2])
            os.makedirs(self.directory, exist_ok=True)
            # save the frame array to a .pkl file
            with open('{}/{}.pkl'.format(self.directory, fname[-1]), 'wb') as Normal_writer:
                pk.dump(buffer_normal, Normal_writer)
            capture.release()
            return buffer_normal


    if __name__ == '__main__':
        datapath = '/root/dataset/UCF101'
        process_num = 24
        print('CPU core number:' + str(multiprocessing.cpu_count()))
        processes = []
        for i in range(process_num):
            p = multiprocessing.Process(target=VideoDataset, args=(datapath, i, process_num))
            p.start()
            processes.append(p)
        for p in multiprocessing.active_children():
            print('Subprocess' + p.name + ' id: ' + str(p.pid))
        for p in processes:
            p.join()  # wait for every worker before reporting completion
        print('all done')
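The frame-sampling rule inside `loadvideo` is easier to follow in isolation. The sketch below reproduces only the index arithmetic in pure Python, without OpenCV; the function name `sampled_frame_indices` is hypothetical, and the window bounds are passed in explicitly instead of being drawn at random.

```python
def sampled_frame_indices(frame_count, frame_sample_rate=2, start_idx=0, end_idx=None):
    """Return the frame indices kept by the sampling rule used in loadvideo.

    A frame is kept when count % rate == rate - 1, and at most
    (window length // rate - 1) frames are kept.
    """
    if end_idx is None:
        end_idx = frame_count - 1
    window_len = end_idx - start_idx + 1
    max_samples = window_len // frame_sample_rate - 1
    kept = []
    for count in range(start_idx, end_idx + 1):
        if count % frame_sample_rate == frame_sample_rate - 1 and len(kept) < max_samples:
            kept.append(count)
    return kept


if __name__ == "__main__":
    # a 10-frame video sampled at every second frame
    print(sampled_frame_indices(10, frame_sample_rate=2))
    # a 301-frame window taken from a longer video
    window = sampled_frame_indices(500, frame_sample_rate=2, start_idx=100, end_idx=400)
    print(len(window), window[0], window[-1])
```

With the default sample rate of 2, a window of 301 frames yields 149 stored frames, which is why each pkl file holds at most 149 frames per video.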

Then load and process the pkl files:

    import os
    from pathlib import Path
    import random
    import cv2
    import numpy as np
    import pickle as pk
    from tqdm import tqdm
    from PIL import Image
    import torchvision.transforms as transforms
    from torch.utils.data import DataLoader, Dataset


    class VideoDataset(Dataset):

        def __init__(self, directory_list, local_rank=0, enable_GPUs_num=0, distributed_load=False, resize_shape=[224, 224], mode='train', clip_len=32, crop_size=160):
            self.clip_len, self.crop_size, self.resize_shape = clip_len, crop_size, resize_shape
            self.mode = mode
            self.fnames, labels = [], []
            # get the directory of the specified split
            for directory in directory_list:
                folder = Path(directory)
                print("Load dataset from folder : ", folder)
                for label in sorted(os.listdir(folder)):
                    # keep only .pkl files; outside training, use at most 10 per class
                    files = [f for f in os.listdir(os.path.join(folder, label)) if f.endswith('.pkl')]
                    for fname in (files if mode == "train" else files[:10]):
                        self.fnames.append(os.path.join(folder, label, fname))
                        labels.append(label)

            random_list = list(zip(self.fnames, labels))
            random.shuffle(random_list)
            self.fnames[:], labels[:] = zip(*random_list)
            # self.fnames = self.fnames[:240]

            # per-GPU sharding (disabled in the original)
            # if mode == 'train' and distributed_load:
            #     single_num_ = len(self.fnames)//enable_GPUs_num
            #     self.fnames = self.fnames[local_rank*single_num_:((local_rank+1)*single_num_)]
            #     labels = labels[local_rank*single_num_:((local_rank+1)*single_num_)]

            # prepare a mapping between the label names (strings) and indices (ints)
            self.label2index = {label: index for index, label in enumerate(sorted(set(labels)))}
            # convert the list of label names into an array of label indices
            self.label_array = np.array([self.label2index[label] for label in labels], dtype=int)

            # build the transforms once instead of on every loadvideo call
            self.transform = transforms.Compose([
                transforms.Resize([self.resize_shape[0], self.resize_shape[1]]),
                transforms.ToTensor(),
                transforms.Normalize([0.5, 0.5, 0.5], [0.5, 0.5, 0.5])
            ])
            self.transform_val = transforms.Compose([
                transforms.Resize([self.crop_size, self.crop_size]),
                transforms.ToTensor(),
                transforms.Normalize([0.5, 0.5, 0.5], [0.5, 0.5, 0.5])
            ])

        def __getitem__(self, index):
            # loading and preprocessing. TODO move them to transform classes
            # loadvideo also returns the index it actually used, so the label matches the clip
            buffer, index = self.loadvideo(index)
            if self.mode == 'train':
                # random spatial crop; +1 keeps randint valid when crop_size equals the frame size
                height_index = np.random.randint(buffer.shape[2] - self.crop_size + 1)
                width_index = np.random.randint(buffer.shape[3] - self.crop_size + 1)
                return buffer[:, :, height_index:height_index + self.crop_size, width_index:width_index + self.crop_size], self.label_array[index]
            else:
                return buffer, self.label_array[index]

        def __len__(self):
            return len(self.fnames)

        def loadvideo(self, index):
            # load the frame array of one video from its pkl file
            with open(self.fnames[index], 'rb') as Video_reader:
                video = pk.load(Video_reader)
            # if the video is too short, fall back to another randomly chosen one
            while video.shape[0] < self.clip_len + 2:
                index = np.random.randint(self.__len__())
                with open(self.fnames[index], 'rb') as Video_reader:
                    video = pk.load(Video_reader)

            height, width = video.shape[1], video.shape[2]
            center = (height//2, width//2)
            flip, flipCode = np.random.random() < 0.5, 1  # flipCode 1: horizontal flip
            # rotation, rotationCode = True if np.random.random() < 0.2 else False, random.choice([-270,-180,-90,90,180,270])

            # during training, optionally play the clip at double speed (temporal stride 2)
            speed_rate = np.random.randint(1, 3) if video.shape[0] > self.clip_len*2+2 and self.mode == "train" else 1
            time_index = np.random.randint(video.shape[0] - self.clip_len*speed_rate)
            video = video[time_index:time_index+(self.clip_len*speed_rate):speed_rate, :, :, :]

            if self.mode == 'train':
                # create a float buffer; the DataLoader converts it to a tensor later
                buffer = np.empty((self.clip_len, 3, self.resize_shape[0], self.resize_shape[1]), np.dtype('float16'))
                for idx, frame in enumerate(video):
                    if flip:
                        frame = cv2.flip(frame, flipCode=flipCode)
                    # rotation augmentation (disabled in the original)
                    # if rotation:
                    #     rot_mat = cv2.getRotationMatrix2D(center, rotationCode, 1)
                    #     frame = cv2.warpAffine(frame, rot_mat, (height, width))
                    buffer[idx] = self.transform(Image.fromarray(frame)).numpy()
            else:
                # any non-training mode (e.g. 'validation') resizes straight to crop_size
                buffer = np.empty((self.clip_len, 3, self.crop_size, self.crop_size), np.dtype('float16'))
                for idx, frame in enumerate(video):
                    buffer[idx] = self.transform_val(Image.fromarray(frame)).numpy()
            # (T, C, H, W) -> (C, T, H, W)
            return buffer.transpose((1, 0, 2, 3)), index


    if __name__ == '__main__':
        datapath = ['/root/data2/dataset/UCF-101']
        dataset = VideoDataset(datapath,
                               resize_shape=[224, 224],
                               mode='validation')
        dataloader = DataLoader(dataset, batch_size=16, shuffle=True, num_workers=0)
        bar = tqdm(total=len(dataloader), ncols=80)
        for step, (buffer, labels) in enumerate(dataloader):
            print(buffer.shape)
            print("label: ", labels)
            bar.update(1)
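The temporal sampling in `loadvideo` combines a random start position with an optional stride of 2 (the `speed_rate`). The following sketch isolates that logic in pure Python so it can be checked without any video data. The function name `sample_clip_indices` is hypothetical, and it uses the standard `random` module in place of NumPy.

```python
import random


def sample_clip_indices(num_frames, clip_len, training=True, rng=random):
    """Pick clip_len frame indices with a random start and a stride of 1 or 2."""
    if num_frames < clip_len + 2:
        raise ValueError("video too short for the requested clip length")
    speed_rate = 1
    if training and num_frames > clip_len * 2 + 2:
        speed_rate = rng.randint(1, 2)  # same range as np.random.randint(1, 3)
    start = rng.randrange(num_frames - clip_len * speed_rate)
    return list(range(start, start + clip_len * speed_rate, speed_rate))


if __name__ == "__main__":
    rng = random.Random(0)  # seeded only to make the demo repeatable
    indices = sample_clip_indices(num_frames=100, clip_len=8, rng=rng)
    print(indices)
```

The result always contains exactly `clip_len` indices and never exceeds the last frame, because the start position is drawn from a range that leaves room for the whole strided clip.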

2. Processing video files directly

The overall process is the same as with pkl files, except that the frames are read directly from the video files. The code:

    import os
    from pathlib import Path
    import random
    import numpy as np
    import cv2
    from tqdm import tqdm
    from PIL import Image
    import torchvision.transforms as transforms
    from prefetch_generator import BackgroundGenerator
    from torch.utils.data import DataLoader, Dataset


    class VideoDataset(Dataset):

        def __init__(self, directory_list, local_rank=0, enable_GPUs_num=0, distributed_load=False, resize_shape=[224, 224], mode='train', clip_len=32, crop_size=168):
            self.clip_len, self.crop_size, self.resize_shape = clip_len, crop_size, resize_shape
            self.mode = mode
            self.fnames, labels = [], []
            # get the directory of the specified split
            for directory in directory_list:
                folder = Path(directory)
                print("Load dataset from folder : ", folder)
                for label in sorted(os.listdir(folder)):
                    # keep only video files; outside training, use at most 10 per class
                    files = [f for f in os.listdir(os.path.join(folder, label)) if f.endswith('.avi')]
                    for fname in (files if mode == "train" else files[:10]):
                        self.fnames.append(os.path.join(folder, label, fname))
                        labels.append(label)

            random_list = list(zip(self.fnames, labels))
            random.shuffle(random_list)
            self.fnames[:], labels[:] = zip(*random_list)
            # self.fnames = self.fnames[:240]

            if mode == 'train' and distributed_load:
                # each GPU process keeps its own contiguous slice of the file list
                single_num_ = len(self.fnames)//enable_GPUs_num
                self.fnames = self.fnames[local_rank*single_num_:((local_rank+1)*single_num_)]
                labels = labels[local_rank*single_num_:((local_rank+1)*single_num_)]

            # prepare a mapping between the label names (strings) and indices (ints)
            self.label2index = {label: index for index, label in enumerate(sorted(set(labels)))}
            # convert the list of label names into an array of label indices
            self.label_array = np.array([self.label2index[label] for label in labels], dtype=int)

            # build the transform once instead of on every loadvideo call
            self.transform = transforms.Compose([
                transforms.Resize([self.resize_shape[0], self.resize_shape[1]]),
                transforms.ToTensor(),
                transforms.Normalize([0.5, 0.5, 0.5], [0.5, 0.5, 0.5])
            ])

        def __getitem__(self, index):
            # loading and preprocessing. TODO move them to transform classes
            # loadvideo also returns the index it actually used, so the label matches the clip
            buffer, index = self.loadvideo(index)
            # random spatial crop; +1 keeps randint valid when crop_size equals the frame size
            height_index = np.random.randint(buffer.shape[2] - self.crop_size + 1)
            width_index = np.random.randint(buffer.shape[3] - self.crop_size + 1)
            return buffer[:, :, height_index:height_index + self.crop_size, width_index:width_index + self.crop_size], self.label_array[index]

        def __len__(self):
            return len(self.fnames)

        def loadvideo(self, index):
            # Random flip, training only. The original expression set flip to 1
            # unconditionally, so validation frames were always flipped vertically.
            # flipCode: 1 horizontal, 0 vertical, -1 both
            flip = self.mode == "train" and np.random.random() < 0.5
            flipCode = random.choice([-1, 0, 1])

            # cv2.VideoCapture does not raise for an unreadable file; it reports
            # a frame count of 0, which the loop below treats as too short
            video_stream = cv2.VideoCapture(self.fnames[index])
            frame_count = int(video_stream.get(cv2.CAP_PROP_FRAME_COUNT))
            while frame_count < self.clip_len + 2:
                video_stream.release()
                index = np.random.randint(self.__len__())
                video_stream = cv2.VideoCapture(self.fnames[index])
                frame_count = int(video_stream.get(cv2.CAP_PROP_FRAME_COUNT))

            speed_rate = np.random.randint(1, 3) if frame_count > self.clip_len*2+2 else 1
            time_index = np.random.randint(frame_count - self.clip_len * speed_rate)
            start_idx, end_idx = time_index, time_index + (self.clip_len * speed_rate)
            count, sample_count, retaining = 0, 0, True

            # float buffer, zero-filled so that frames missing from a truncated
            # stream are blank rather than uninitialized memory
            buffer = np.zeros((self.clip_len, 3, self.resize_shape[0], self.resize_shape[1]), np.dtype('float16'))
            while count <= end_idx and retaining:
                retaining, frame = video_stream.read()
                if not retaining:
                    break  # stream ended early
                if count >= start_idx and count % speed_rate == speed_rate - 1 and sample_count < self.clip_len:
                    if flip:
                        frame = cv2.flip(frame, flipCode=flipCode)
                    buffer[sample_count] = self.transform(Image.fromarray(cv2.cvtColor(frame, cv2.COLOR_BGR2RGB))).numpy()
                    sample_count += 1
                count += 1
            video_stream.release()
            # (T, C, H, W) -> (C, T, H, W)
            return buffer.transpose((1, 0, 2, 3)), index


    if __name__ == '__main__':
        datapath = ['/root/data1/datasets/UCF-101']
        dataset = VideoDataset(datapath,
                               resize_shape=[224, 224],
                               mode='validation')
        dataloader = DataLoader(dataset, batch_size=8, shuffle=True, num_workers=24, pin_memory=True)
        bar = tqdm(total=len(dataloader), ncols=80)
        # BackgroundGenerator prefetches batches in a background thread.
        # The original also wrapped it in a DataPrefetcher class whose definition
        # is not shown in this post, so the plain loop variant is used here:
        # prefetcher = DataPrefetcher(BackgroundGenerator(dataloader), 0)
        for step, (buffer, labels) in enumerate(BackgroundGenerator(dataloader)):
            print(buffer.shape)
            print("label: ", labels)
            bar.update(1)
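The `distributed_load` branch above gives each GPU process a contiguous, equally sized slice of the shuffled file list. A minimal sketch of that slicing, using the hypothetical helper name `shard_for_rank`, shows what each rank receives and which items are left out:

```python
def shard_for_rank(items, rank, world_size):
    """Return the contiguous slice of items assigned to one rank.

    Mirrors the floor-division slicing in the dataset class: every rank
    gets len(items) // world_size items and the remainder is dropped.
    """
    if world_size < 1 or not 0 <= rank < world_size:
        raise ValueError("rank must satisfy 0 <= rank < world_size")
    per_rank = len(items) // world_size
    return items[rank * per_rank:(rank + 1) * per_rank]


if __name__ == "__main__":
    files = [f"video_{i}.avi" for i in range(10)]
    for rank in range(3):
        print(rank, shard_for_rank(files, rank, 3))
```

Two caveats follow from this design. Dropping the remainder keeps the number of batches identical on every rank. The slices are only disjoint if every process shuffles the list with the same random seed, which the class above does not enforce. PyTorch's `DistributedSampler` is a commonly used alternative that handles both points.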