1. What is interface testing

As the name suggests, interface testing tests the interfaces between systems or components. It mainly verifies how data is exchanged, transferred and controlled, how processes are managed, and how the two sides logically depend on each other. By protocol, interfaces can be divided into HTTP, WebService, Dubbo, Thrift, Socket and other types. By test type, interface testing mainly covers functional, performance, stability and security testing.

In the "pyramid" model of layered testing, interface testing belongs to the second layer, service integration testing. Compared with automated testing at the UI layer (mainly web or app), automated interface testing pays off more, is easier to implement, costs less to maintain and gives a higher return on investment. This makes it the usual first choice for a company starting out with test automation.

Let's take an HTTP interface as an example and walk through the whole interface automation test process: requirement analysis, use case design, script development, test execution and result analysis, together with a complete use case design and test script.

2. Basic process

The basic process of automated functional interface testing is as follows:

Requirement analysis -> use case design -> script development -> test execution -> result analysis

2.1 Example interface

Interface name: Douban movie search

Interface document address: https://developers.douban.com/wiki/?title=movie_v2#search

Example of interface call:

- Search by cast: https://api.douban.com/v2/movie/search?q=Zhang Yimou

- Search by title: https://api.douban.com/v2/movie/search?q=Westward Journey

- Search by type: https://api.douban.com/v2/movie/search?tag=comedy
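Search terms that contain spaces or non-ASCII characters have to be percent-encoded before they go into a query string. As a minimal sketch (Python 3 standard library only; the helper name `build_search_url` is made up for this illustration), the example URLs above could be built like this:

```python
from urllib.parse import urlencode

BASE_URL = 'https://api.douban.com/v2/movie/search'


def build_search_url(**params):
    """Return the search URL with params encoded into the query string."""
    return '{0}?{1}'.format(BASE_URL, urlencode(params))


# Spaces become "+", non-ASCII characters become %XX sequences
print(build_search_url(q='Zhang Yimou'))
print(build_search_url(tag='comedy'))
```

The requests library used later does this encoding itself when the parameters are passed through `params=`, so the helper is only needed when a URL is assembled by hand.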

3. Requirement analysis

Requirement analysis is based on the requirement documents, design documents and similar material. Besides understanding the requirements, you also need to understand the internal implementation logic, and at this stage you can point out anything unreasonable or missing in the requirements and design.

For example, for the Douban movie search interface, my understanding of the requirement is that it supports searching by film title, cast and tag, and returns the search results in pages.

4. Use case design

Use case design means writing the test case design with mind-mapping software such as MindManager or XMind, based on an understanding of the interface test requirements. It mainly covers parameter verification, function verification, business scenario verification, and security and performance verification. Commonly used design methods include equivalence partitioning, boundary value analysis, scenario analysis, cause-effect graphs and orthogonal arrays.

For the function test of Douban movie search interface, we mainly design test cases from three aspects: parameter verification, function verification and business scenario verification, as follows:
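One way to carry such a design over into code is a small table of cases grouped by the three aspects. The sketch below is illustrative only: the concrete cases, parameter values and expected outcomes are assumptions made up for this example, not the author's actual test design.

```python
from collections import namedtuple

# One row per test case: the aspect it covers, the query parameters,
# and a short note on the expected outcome.
Case = namedtuple('Case', ['aspect', 'params', 'expected'])

CASES = [
    # Parameter verification: missing, empty and boundary values
    Case('parameter', {}, 'error or empty result'),
    Case('parameter', {'q': ''}, 'error or empty result'),
    Case('parameter', {'tag': 'comedy', 'count': 0}, 'no subjects returned'),
    Case('parameter', {'tag': 'comedy', 'count': -10}, 'error or default page size'),
    # Function verification: each search condition on its own
    Case('function', {'q': 'Westward Journey'}, 'first result matches the title'),
    Case('function', {'q': 'Zhang Yimou'}, 'first result matches cast or director'),
    Case('function', {'tag': 'comedy'}, 'first result has the genre comedy'),
    # Business scenario verification: combined conditions and paging
    Case('scenario', {'q': 'Zhang Yimou,Gong Li'}, 'first result matches both names'),
    Case('scenario', {'tag': 'comedy', 'start': 20, 'count': 10},
         'at most 10 results, starting at offset 20'),
]


def cases_for(aspect):
    """Return all cases that belong to one aspect of the design."""
    return [case for case in CASES if case.aspect == aspect]


for aspect in ('parameter', 'function', 'scenario'):
    print(aspect, len(cases_for(aspect)))
```

Keeping the cases as data makes it straightforward to loop over them in a generated test later on.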

5. Script development

Based on the test case design above, we use Python and the nosetests framework to write the automated test scripts. Together they cover the whole cycle: automated interface tests, automatic execution, and sending the test report by email.

5.1 Installing the required libraries

The required libraries are listed below and can be installed with pip:

    pip install nose
    pip install nose-html-reporting
    pip install requests

5.2 Interface call

With the requests library, the interface call is easy to write. For example, to search for q=Andy Lau:

    # coding=utf-8
    import json

    import requests

    url = 'https://api.douban.com/v2/movie/search'
    params = dict(q=u'Andy Lau')
    # requests encodes the parameters into the query string
    r = requests.get(url, params=params)
    print('Search Params:\n' + json.dumps(params, ensure_ascii=False))
    print('Search Response:\n' + json.dumps(r.json(), ensure_ascii=False, indent=4))

When actually writing automated test scripts, we need some encapsulation. In the following code, we wrap the Douban movie search interface in a class. The test_q method only needs the yield-based test generators supported by nosetests to loop over every search term in the qs list:

    # coding=utf-8
    import json
    from functools import partial

    import requests


    class test_doubanSearch(object):

        @staticmethod
        def search(params, expectNum=None):
            # Call the search interface and print the request and response
            url = 'https://api.douban.com/v2/movie/search'
            r = requests.get(url, params=params)
            print('Search Params:\n' + json.dumps(params, ensure_ascii=False))
            print('Search Response:\n' + json.dumps(r.json(), ensure_ascii=False, indent=4))

        def test_q(self):
            # Verify search criteria q
            qs = [u'Chasing the murderer at night', u'Westward Journey', u'Zhou Xingchi', u'Zhang Yimou',
                  u'Zhou Xingchi,Meng Da Wu', u'Zhang Yimou,Gong Li', u'Zhou Xingchi,Westward Journey',
                  u'Chasing the murderer at night,Pan Yueming']
            for q in qs:
                params = dict(q=q)
                # Bind the parameters so that each yielded callable is one test
                f = partial(test_doubanSearch.search, params)
                # nosetests shows this description as the name of the generated test
                f.description = json.dumps(params, ensure_ascii=False).encode('utf-8')
                yield (f,)

Following the test case design, we can write the automated test scripts for the other functions in the same way.
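To make the yield mechanism less magical, here is a rough, self-contained sketch in Python 3 of what a runner does with such a generator: it calls every yielded callable and records the outcome. The names `check_query`, `generate_q_tests` and `run_generated` are invented for this illustration, and the runner is a simplification, not how nosetests is actually implemented. Under nosetests the generator would be a `test_`-prefixed function or method, as in test_q above.

```python
from functools import partial


def check_query(params):
    """Stand-in for the real search call: only validates the parameters."""
    assert params.get('q'), 'q must not be empty'


def generate_q_tests():
    """Yield one (callable,) tuple per search term, like test_q does."""
    for q in ['Westward Journey', 'Zhang Yimou', '']:
        f = partial(check_query, dict(q=q))
        f.description = 'q={0!r}'.format(q)
        yield (f,)


def run_generated(generator_func):
    """Call each yielded test and record whether it passed or failed."""
    results = []
    for item in generator_func():
        func, args = item[0], item[1:]
        try:
            func(*args)
            results.append((func.description, 'ok'))
        except AssertionError as exc:
            results.append((func.description, 'FAIL: {0}'.format(exc)))
    return results


for description, outcome in run_generated(generate_q_tests):
    print(description, '->', outcome)
```

Each search term becomes its own test with its own pass or fail status, so one bad input does not hide the results of the others.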

5.3 Result verification

When testing an interface manually, we judge whether a test passes from the result the interface returns. Automated tests do the same.

For this interface, when we search "q=Andy Lau", we need to check that the returned result has Andy Lau in its cast or its title. When we search "tag=comedy", we need to check that the genre of the returned film is "comedy". When the results are paged, we need to check that the number of returned results is correct, and so on. The complete result verification code is as follows:

    from nose.tools import eq_, ok_


    class check_response():

        @staticmethod
        def check_result(response, params, expectNum=None):
            # Search results are fuzzy-matched, so for simplicity only the
            # first returned result is verified
            if expectNum is not None:
                # When an expected count is given, only the number of
                # returned results is checked
                eq_(expectNum, len(response['subjects']),
                    '{0}!={1}'.format(expectNum, len(response['subjects'])))
            else:
                if not response['subjects']:
                    # Empty result: fail immediately
                    assert False, 'No results returned'
                else:
                    # Non-empty result: verify the first entry
                    subject = response['subjects'][0]
                    # Check the search condition tag first
                    if params.get('tag'):
                        genres = subject['genres']
                        for word in params['tag'].split(','):
                            ok_(word in genres, 'Check {0} failed!'.format(word.encode('utf-8')))
                    # Then check the search condition q
                    elif params.get('q'):
                        # Every search word must appear in the title, a cast
                        # name or a director name
                        title = [subject['title']]
                        casts = [i['name'] for i in subject['casts']]
                        directors = [i['name'] for i in subject['directors']]
                        total = title + casts + directors
                        for word in params['q'].split(','):
                            ok_(any(word.lower() in i.lower() for i in total),
                                'Check {0} failed!'.format(word.encode('utf-8')))

        @staticmethod
        def check_pageSize(response):
            # Check that the number of results on the page is correct
            count = response.get('count')
            start = response.get('start')
            total = response.get('total')
            # Results remaining from the start offset onwards
            diff = total - start
            if diff >= count:
                expectPageSize = count
            elif count > diff > 0:
                expectPageSize = diff
            else:
                expectPageSize = 0
            eq_(expectPageSize, len(response['subjects']),
                '{0}!={1}'.format(expectPageSize, len(response['subjects'])))
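The paging arithmetic in check_pageSize can be tried out without calling the interface. The following is a minimal Python 3 sketch with hand-made responses; the helper names and the numbers are assumptions chosen for illustration. For a non-negative count it gives the same expected size as the branches above; a negative count is clamped to 0 here, which is a simplification of this sketch.

```python
def expected_page_size(total, start, count):
    """Number of subjects a page should hold, given the paging fields."""
    remaining = total - start
    return max(0, min(count, remaining))


def page_size_ok(response):
    """Compare the expected page size with the subjects actually returned."""
    expected = expected_page_size(
        response['total'], response['start'], response['count'])
    return expected == len(response['subjects'])


# Hand-made responses: 189 matches in total, 20 per page
first_page = {'total': 189, 'start': 0, 'count': 20, 'subjects': [{}] * 20}
last_page = {'total': 189, 'start': 180, 'count': 20, 'subjects': [{}] * 9}
past_the_end = {'total': 189, 'start': 200, 'count': 20, 'subjects': []}

for page in (first_page, last_page, past_the_end):
    print(page['start'], page_size_ok(page))
```

A full page, a partial last page and an offset past the end are the three cases that the if/elif/else chain distinguishes.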

5.4 Running the tests

With the test scripts above, we can run the automated tests with the nosetests command and generate a test report in HTML format with the nose-html-reporting plug-in.

The command is as follows:

    nosetests -v test_doubanSearch.py:test_doubanSearch --with-html --html-report=TestReport.html

5.5 Sending the email report

After the tests finish, we can use the smtplib module to send the test report in HTML format. The basic process is: read the test report -> add the mail content and attachment -> connect to the mail server -> send the mail -> quit. The example code is as follows:

    import smtplib
    from email.mime.multipart import MIMEMultipart
    from email.mime.text import MIMEText


    def send_mail():
        # report_file, mail_subjet, mail_user, mail_pwd, mail_to and mail_host
        # must be defined at module level before this function is called

        # Read test report content
        with open(report_file, 'r') as f:
            content = f.read().decode('utf-8')

        msg = MIMEMultipart('mixed')

        # Add message content
        msg_html = MIMEText(content, 'html', 'utf-8')
        msg.attach(msg_html)

        # Add attachment
        msg_attachment = MIMEText(content, 'html', 'utf-8')
        msg_attachment["Content-Disposition"] = 'attachment; filename="{0}"'.format(report_file)
        msg.attach(msg_attachment)

        msg['Subject'] = mail_subjet
        msg['From'] = mail_user
        # Multiple recipients in a To header are separated by commas
        msg['To'] = ', '.join(mail_to)

        try:
            # Connect to the mail server
            s = smtplib.SMTP(mail_host, 25)
            # Log in
            s.login(mail_user, mail_pwd)
            # Send mail
            s.sendmail(mail_user, mail_to, msg.as_string())
            # Close the connection
            s.quit()
        except Exception as e:
            print('Exception: {0}'.format(e))
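For readers on Python 3, the same steps can also be expressed with the newer `email.message.EmailMessage` class from the standard library. This is a hedged sketch rather than the author's script: the function names, addresses and report text are hypothetical, and `send_message` is defined but not called here because it needs a real mail server.

```python
import smtplib
from email.message import EmailMessage


def build_report_message(html, sender, recipients, subject, filename):
    """Build a mail with the report as HTML body and as an attachment."""
    msg = EmailMessage()
    msg['Subject'] = subject
    msg['From'] = sender
    msg['To'] = ', '.join(recipients)
    # HTML body shown directly in the mail client
    msg.set_content(html, subtype='html')
    # The same report again as a downloadable attachment
    msg.add_attachment(html, subtype='html', filename=filename)
    return msg


def send_message(msg, host, user, password, port=25):
    """Log in and send; the connection is closed when the block exits."""
    with smtplib.SMTP(host, port) as server:
        server.login(user, password)
        server.send_message(msg)


# Hypothetical values, for illustration only
message = build_report_message(
    '<html><body><h1>50 passed, 1 failed</h1></body></html>',
    'tester@example.com',
    ['dev1@example.com', 'dev2@example.com'],
    'Interface test report',
    'TestReport.html',
)
print(message['To'])
print(message.get_content_type())
```

Separating message construction from sending makes it possible to inspect the message in a test without touching a mail server.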

6. Result analysis

Open the test report generated by the nosetests run. It shows that 51 test cases were executed in this run: 50 passed and 1 failed.

For the failed case, the parameters passed in were {"count": -10, "tag": "comedy"}. The number of returned results does not match our expectation: when count is negative, the interface should either report an error or fall back to the default value of 20, but it actually returned 189 results. Time to file a bug with Douban.
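The expectation for a negative count can be written down as a small check. The sketch below is an assumption about how such a check might look, not code from the author's script: the function name is invented, and "reported an error" is interpreted here as an HTTP status of 400 or above.

```python
DEFAULT_COUNT = 20  # default page size mentioned in the text


def negative_count_handled(status_code, response):
    """True if the request was rejected or the default page size was applied."""
    if status_code >= 400:
        # The interface reported an error, which is acceptable
        return True
    # Otherwise no more than the default page size may be returned
    return len(response.get('subjects', [])) <= DEFAULT_COUNT


print(negative_count_handled(400, {}))
print(negative_count_handled(200, {'subjects': [{}] * 20}))
print(negative_count_handled(200, {'subjects': [{}] * 189}))
```

The last call models the observed behaviour, 189 results for count=-10, and is the one that fails the check.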

7. Complete script

I have uploaded the complete automated test script for the Douban movie search interface to GitHub. Download address: https://github.com/lovesoo/test_demo/tree/master/test_douban

After downloading it, the following command runs the complete interface automation test and sends the final test report by email:

    python test_doubanSearch.py

The final test email is sent as follows:

8. Resource sharing

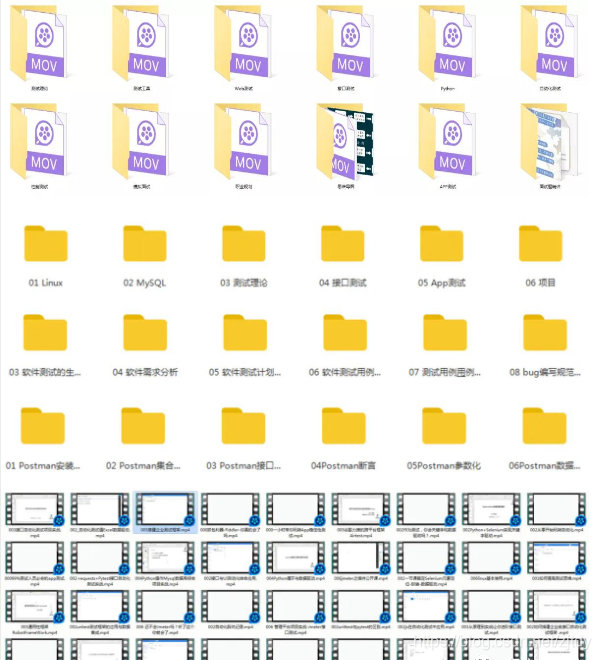

The following are the materials I collected and sorted out. It should be the most complete tutorial warehouse for friends studying [software testing]. This warehouse has also accompanied me through the most difficult journey. I hope it can also help you

Pay attention to [program yuan Muzi] WeChat official account free access to massive resources, technology exchange group (644956177)