background information

TPC-H (Business Intelligence Computing test) is a test set developed by the transaction processing performance Council (TPC) to simulate decision support applications. At present, it is widely used in academia and industry to evaluate the performance of decision support technology. TPC-H is modeled according to the real production and operation environment and simulates the data warehouse of a sales system. It contains 8 tables, and the amount of data can be set from 1g to 3T. The benchmark includes 22 queries, and the main evaluation index is the response time of each query, that is, the time from submitting the query to returning the result. The test results can comprehensively reflect the ability of the system to process queries. Refer to for details TPCH documentation.

Data set introduction

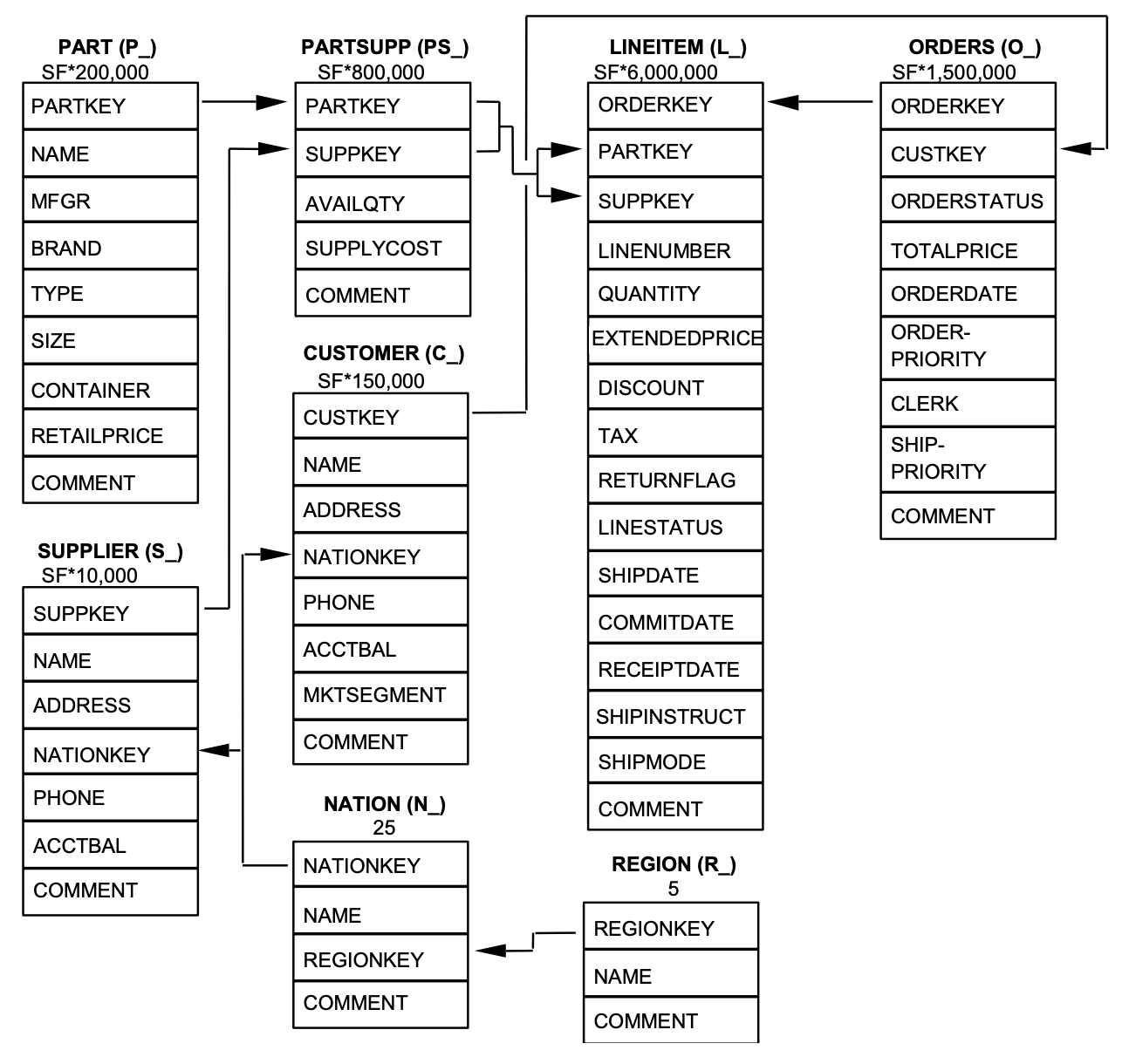

The data set contains the following 8 tables, and the relationship between them is shown in the figure below.

Test details

Description of test data volume

The amount of test data will directly affect the test results. In the generation tool of TPC-H, SF (scale factor) is used to control the amount of data generated. 1 SF corresponds to 1 GB.

Note: the amount of data mentioned above is only The data volume of the original data does not include space occupation such as index, so more space needs to be reserved when preparing the environment.testing environment

The exclusive instance (pay as you go) is used in this test. Since it is only used for test illustration, 8-core 32G is selected for computing resource configuration.

Test scenario

This test scenario mainly includes three parts:

- OLAP query scenario test mainly uses the column save table and directly uses 22 queries in TPCH test;

- The Key/Value point query scenario test mainly uses the row save table. After using the row save table for orders, the point query of primary key filtering is performed;

Basic environmental preparation

- This step is mainly used to prepare the data required for OLAP query scenarios and Key/Value point query scenarios;

Basic environmental preparation

1. Create an ECS instance

Log in to alicloud and create an ECS instance for data generation, data import to Hologres, and client testing. Recommended specifications:

- ecs.g6.4xlarge specification

- CentOS 7.9 system

- ESSD data disk. The specific data capacity is determined according to the amount of data to be tested

- It is recommended that ECS and Hologres instances use the same Region and VPC network

2. Create Hologres instance

- Log in to alicloud, enter the Hologres product console, and click add engine instance

- Select the configuration and fill in the instance name. Please refer to for details Official documents.

3. Create test database

- After creating the instance, you need to log in to the Hologres instance you created and create a database. In this test, the database is named tpch_1sf, please refer to for detailed operation steps Official documents

Generate TPC-H data

1. Prepare data generation tools

- Remote link ECS instance

- Update all libraries

yum update

- Install git

yum install git

- Install gcc

yum install gcc

- Download TPC-H data generation code

git clone https://github.com/gregrahn/tpch-kit.git

- Enter the data generation tool code directory

cd tpch-kit/dbgen

- Compile data generation tool code

make

2. Generate data

- After the compilation is successful, you can use the following code to view the relevant parameters of the code generation tool.

./dbgen --help

- This test only generates 1 GB of data, so run the following code to generate data.

If you need to generate more data, you can adjust the parameters of SF. For example, you can use the following code to generate 1 T data./dbgen -vf -s 1

./dbgen -vf -s 1000

- Generally, 32CU can run TPCH SF10256CU can run TPCH SF50

- After the data is generated, you can use the following code to view the generated file. You can see that the generation tool generates 8 data files, and each data file corresponds to a table in the dataset.

ls | grep '.*.tbl'

OLAP query scenario test

Prepare data

1. Create table

- Since this article mainly uses psql for data import, you need to run the following commands in ECS to install psql

yum install postgresql-server

- After installing psql, you can log in to the Hologres instance using the following command

PGUSER=<AccessID> PGPASSWORD=<AccessKey> psql -p <Port> -h <Endpoint> -d <Database>

- After connecting to Hologres using psql, you can use the following table creation statement to create a database table

DROP TABLE IF EXISTS LINEITEM;

BEGIN;

CREATE TABLE LINEITEM

(

L_ORDERKEY INT NOT NULL,

L_PARTKEY INT NOT NULL,

L_SUPPKEY INT NOT NULL,

L_LINENUMBER INT NOT NULL,

L_QUANTITY DECIMAL(15,2) NOT NULL,

L_EXTENDEDPRICE DECIMAL(15,2) NOT NULL,

L_DISCOUNT DECIMAL(15,2) NOT NULL,

L_TAX DECIMAL(15,2) NOT NULL,

L_RETURNFLAG TEXT NOT NULL,

L_LINESTATUS TEXT NOT NULL,

L_SHIPDATE TIMESTAMPTZ NOT NULL,

L_COMMITDATE TIMESTAMPTZ NOT NULL,

L_RECEIPTDATE TIMESTAMPTZ NOT NULL,

L_SHIPINSTRUCT TEXT NOT NULL,

L_SHIPMODE TEXT NOT NULL,

L_COMMENT TEXT NOT NULL,

PRIMARY KEY (L_ORDERKEY,L_LINENUMBER)

);

CALL set_table_property('LINEITEM', 'clustering_key', 'L_SHIPDATE,L_ORDERKEY');

CALL set_table_property('LINEITEM', 'segment_key', 'L_SHIPDATE');

CALL set_table_property('LINEITEM', 'distribution_key', 'L_ORDERKEY');

CALL set_table_property('LINEITEM', 'bitmap_columns', 'L_ORDERKEY,L_PARTKEY,L_SUPPKEY,L_LINENUMBER,L_RETURNFLAG,L_LINESTATUS,L_SHIPINSTRUCT,L_SHIPMODE,L_COMMENT');

CALL set_table_property('LINEITEM', 'dictionary_encoding_columns', 'L_RETURNFLAG,L_LINESTATUS,L_SHIPINSTRUCT,L_SHIPMODE,L_COMMENT');

CALL set_table_property('LINEITEM', 'time_to_live_in_seconds', '31536000');

COMMIT;

DROP TABLE IF EXISTS ORDERS;

BEGIN;

CREATE TABLE ORDERS

(

O_ORDERKEY INT NOT NULL PRIMARY KEY,

O_CUSTKEY INT NOT NULL,

O_ORDERSTATUS TEXT NOT NULL,

O_TOTALPRICE DECIMAL(15,2) NOT NULL,

O_ORDERDATE timestamptz NOT NULL,

O_ORDERPRIORITY TEXT NOT NULL,

O_CLERK TEXT NOT NULL,

O_SHIPPRIORITY INT NOT NULL,

O_COMMENT TEXT NOT NULL

);

CALL set_table_property('ORDERS', 'segment_key', 'O_ORDERDATE');

CALL set_table_property('ORDERS', 'distribution_key', 'O_ORDERKEY');

CALL set_table_property('ORDERS', 'colocate_with', 'LINEITEM');

CALL set_table_property('ORDERS', 'bitmap_columns', 'O_ORDERKEY,O_CUSTKEY,O_ORDERSTATUS,O_ORDERPRIORITY,O_CLERK,O_SHIPPRIORITY,O_COMMENT');

CALL set_table_property('ORDERS', 'dictionary_encoding_columns', 'O_ORDERSTATUS,O_ORDERPRIORITY,O_CLERK,O_COMMENT');

CALL set_table_property('ORDERS', 'time_to_live_in_seconds', '31536000');

COMMIT;

DROP TABLE IF EXISTS PARTSUPP;

BEGIN;

CREATE TABLE PARTSUPP

(

PS_PARTKEY INT NOT NULL,

PS_SUPPKEY INT NOT NULL,

PS_AVAILQTY INT NOT NULL,

PS_SUPPLYCOST DECIMAL(15,2) NOT NULL,

PS_COMMENT TEXT NOT NULL,

PRIMARY KEY(PS_PARTKEY,PS_SUPPKEY)

);

CALL set_table_property('PARTSUPP', 'distribution_key', 'PS_PARTKEY');

CALL set_table_property('PARTSUPP', 'colocate_with', 'LINEITEM');

CALL set_table_property('PARTSUPP', 'bitmap_columns', 'PS_PARTKEY,PS_SUPPKEY,PS_AVAILQTY,PS_COMMENT');

CALL set_table_property('PARTSUPP', 'dictionary_encoding_columns', 'PS_COMMENT');

CALL set_table_property('PARTSUPP', 'time_to_live_in_seconds', '31536000');

COMMIT;

DROP TABLE IF EXISTS PART;

BEGIN;

CREATE TABLE PART

(

P_PARTKEY INT NOT NULL PRIMARY KEY,

P_NAME TEXT NOT NULL,

P_MFGR TEXT NOT NULL,

P_BRAND TEXT NOT NULL,

P_TYPE TEXT NOT NULL,

P_SIZE INT NOT NULL,

P_CONTAINER TEXT NOT NULL,

P_RETAILPRICE DECIMAL(15,2) NOT NULL,

P_COMMENT TEXT NOT NULL

);

CALL set_table_property('PART', 'distribution_key', 'P_PARTKEY');

CALL set_table_property('PART', 'colocate_with', 'LINEITEM');

CALL set_table_property('PART', 'bitmap_columns', 'P_PARTKEY,P_SIZE,P_NAME,P_MFGR,P_BRAND,P_TYPE,P_CONTAINER,P_COMMENT');

CALL set_table_property('PART', 'dictionary_encoding_columns', 'P_NAME,P_MFGR,P_BRAND,P_TYPE,P_CONTAINER,P_COMMENT');

CALL set_table_property('PART', 'time_to_live_in_seconds', '31536000');

COMMIT;

DROP TABLE IF EXISTS CUSTOMER;

BEGIN;

CREATE TABLE CUSTOMER

(

C_CUSTKEY INT NOT NULL PRIMARY KEY,

C_NAME TEXT NOT NULL,

C_ADDRESS TEXT NOT NULL,

C_NATIONKEY INT NOT NULL,

C_PHONE TEXT NOT NULL,

C_ACCTBAL DECIMAL(15,2) NOT NULL,

C_MKTSEGMENT TEXT NOT NULL,

C_COMMENT TEXT NOT NULL

);

CALL set_table_property('CUSTOMER', 'distribution_key', 'C_CUSTKEY');

CALL set_table_property('CUSTOMER', 'colocate_with', 'LINEITEM');

CALL set_table_property('CUSTOMER', 'bitmap_columns', 'C_CUSTKEY,C_NATIONKEY,C_NAME,C_ADDRESS,C_PHONE,C_MKTSEGMENT,C_COMMENT');

CALL set_table_property('CUSTOMER', 'dictionary_encoding_columns', 'C_NAME,C_ADDRESS,C_PHONE,C_MKTSEGMENT,C_COMMENT');

CALL set_table_property('CUSTOMER', 'time_to_live_in_seconds', '31536000');

COMMIT;

DROP TABLE IF EXISTS SUPPLIER;

BEGIN;

CREATE TABLE SUPPLIER

(

S_SUPPKEY INT NOT NULL PRIMARY KEY,

S_NAME TEXT NOT NULL,

S_ADDRESS TEXT NOT NULL,

S_NATIONKEY INT NOT NULL,

S_PHONE TEXT NOT NULL,

S_ACCTBAL DECIMAL(15,2) NOT NULL,

S_COMMENT TEXT NOT NULL

);

CALL set_table_property('SUPPLIER', 'distribution_key', 'S_SUPPKEY');

CALL set_table_property('SUPPLIER', 'colocate_with', 'LINEITEM');

CALL set_table_property('SUPPLIER', 'bitmap_columns', 'S_SUPPKEY,S_NAME,S_ADDRESS,S_NATIONKEY,S_PHONE,S_COMMENT');

CALL set_table_property('SUPPLIER', 'dictionary_encoding_columns', 'S_NAME,S_ADDRESS,S_PHONE,S_COMMENT');

CALL set_table_property('SUPPLIER', 'time_to_live_in_seconds', '31536000');

COMMIT;

DROP TABLE IF EXISTS NATION;

BEGIN;

CREATE TABLE NATION(

N_NATIONKEY INT NOT NULL PRIMARY KEY,

N_NAME text NOT NULL,

N_REGIONKEY INT NOT NULL,

N_COMMENT text NOT NULL

);

CALL set_table_property('NATION', 'distribution_key', 'N_NATIONKEY');

CALL set_table_property('NATION', 'colocate_with', 'LINEITEM');

CALL set_table_property('NATION', 'bitmap_columns', 'N_NATIONKEY,N_NAME,N_REGIONKEY,N_COMMENT');

CALL set_table_property('NATION', 'dictionary_encoding_columns', 'N_NAME,N_COMMENT');

CALL set_table_property('NATION', 'time_to_live_in_seconds', '31536000');

COMMIT;

DROP TABLE IF EXISTS REGION;

BEGIN;

CREATE TABLE REGION

(

R_REGIONKEY INT NOT NULL PRIMARY KEY,

R_NAME TEXT NOT NULL,

R_COMMENT TEXT

);

CALL set_table_property('REGION', 'distribution_key', 'R_REGIONKEY');

CALL set_table_property('REGION', 'colocate_with', 'LINEITEM');

CALL set_table_property('REGION', 'bitmap_columns', 'R_REGIONKEY,R_NAME,R_COMMENT');

CALL set_table_property('REGION', 'dictionary_encoding_columns', 'R_NAME,R_COMMENT');

CALL set_table_property('REGION', 'time_to_live_in_seconds', '31536000');

COMMIT;- After creation, you can use the following code in psql to check whether the creation is successful

tpch_1sf=# \dt

- If successful, the practical effect is as follows

tpch_1sf=# \dt

List of relations

Schema | Name | Type | Owner

--------+----------+-------+--------------------

public | customer | table | tpch_1sf_developer

public | lineitem | table | tpch_1sf_developer

public | nation | table | tpch_1sf_developer

public | orders | table | tpch_1sf_developer

public | part | table | tpch_1sf_developer

public | partsupp | table | tpch_1sf_developer

public | region | table | tpch_1sf_developer

public | supplier | table | tpch_1sf_developer

(8 rows)2. Import data

- This test scheme mainly uses the COPY FROM STDIN method to import data. For details, please refer to Official documents . Here, the previously generated tbl data file will be imported into the table created in Hologres.

- You can import data by referring to the following shell script in the directory of the data generation tool

for i in `ls *.tbl`; do

echo $i;

name=`echo $i| cut -d'.' -f1`;

PGUSER=<AccessID> PGPASSWORD=<AccessKey> psql -p <Port> -h <Endpoint> -d <Database> -c "COPY $name from stdin with delimiter '|' csv;" < $i;

done- You have now finished importing data

3. Collect statistical information

- In order to better execute the query, you can use the following statements in psql to make Hologres collect the characteristic information of each table.

vacuum region; vacuum nation; vacuum supplier; vacuum customer; vacuum part; vacuum partsupp; vacuum orders; vacuum lineitem; analyze nation; analyze region; analyze lineitem; analyze orders; analyze customer; analyze part; analyze partsupp; analyze supplier;

Execute query

- In order to facilitate the statistics and query of information, you need to use the pgbench tool. You can use the following command to install pgbench (if pgbench already exists on the test machine, please ensure that the version is greater than 9.6, preferably greater than 13, otherwise the following tests will encounter various incompatibilities)

yum install postgresql-contrib

- To facilitate query, you can directly download the required 22 SQL through the following connection

- Then upload to ECS

- Enter the ECS, enter the directory where the files are uploaded, and use the following shell command to extract the files

unzip tpch_data_tpch_query

- Now that you have completed the preparations, you can use pgbench to test. You can use the following command to execute a single query

PGUSER=<AccessID> PGPASSWORD=<AccessKey> pgbench -h <Endpoint> -p <Port> -d <Database> -c <Client_Num> -t <Query_Num> -n -f xxx.sql

- Parameter interpretation

| Configuration item | parameter | explain |

|---|---|---|

| -h | endpoint of Hologres instance | View at Hologres control desk |

| -p | Port address of the Hologres instance | View at Hologres control desk |

| -d | Hologres specifies the database name in the instance | |

| -c | Number of clients (concurrency) | Example: 1. Since the test only tests query performance and does not test concurrency, the concurrency can be set to 1 |

| -t | The number of pressure test queries to be executed by each client | 50 |

| -f | sql for pressure measurement | Example: 6 sql |

- You can also directly execute the following shell} script, directly execute 22 queries in batch, and output the results to the file hologres_tpch_test.out

rm -f hologres_tpch_test.out

echo `date +"%Y-%m-%d %H:%M:%S"` begin >> ./hologres_tpch_test.out

for i in {1..22}

do

PGUSER=<AccessID> PGPASSWORD=<AccessKey> pgbench -h <Endpoint> -p <Port> -d <Database> -c <Client_Num> -t <Query_Num> -n -f ./tpch_data_tpch_query/${i}.sql >> ./hologres_tpch_test.out

doneView hologres_tpch_test.out to get the query results, as shown in the following example

- transaction type: describes the specific SQL file to be executed

- latency average: records the average time of 3 queries of the corresponding SQL file

2021-03-23 03:50:54 begin pghost: hgpostcn-cn-oew21c935002-cn-hangzhou.hologres.aliyuncs.com pgport: 80 nclients: 1 nxacts: 3 dbName: tpch_100 transaction type: ./tpch_data_tpch_query/1.sql scaling factor: 1 query mode: simple number of clients: 1 number of threads: 1 number of transactions per client: 3 number of transactions actually processed: 3/3 latency average = 76.936 ms tps = 12.997850 (including connections establishing) tps = 15.972757 (excluding connections establishing) ...

TPCH 22 query statements

Q1

select

l_returnflag,

l_linestatus,

sum(l_quantity) as sum_qty,

sum(l_extendedprice) as sum_base_price,

sum(l_extendedprice * (1 - l_discount)) as sum_disc_price,

sum(l_extendedprice * (1 - l_discount) * (1 + l_tax)) as sum_charge,

avg(l_quantity) as avg_qty,

avg(l_extendedprice) as avg_price,

avg(l_discount) as avg_disc,

count(*) as count_order

from

lineitem

where

l_shipdate <= date '1998-12-01' - interval '90' day

group by

l_returnflag,

l_linestatus

order by

l_returnflag,

l_linestatus;Q2

select

s_acctbal,

s_name,

n_name,

p_partkey,

p_mfgr,

s_address,

s_phone,

s_comment

from

part,

supplier,

partsupp,

nation,

region

where

p_partkey = ps_partkey

and s_suppkey = ps_suppkey

and p_size = 15

and p_type like '%BRASS'

and s_nationkey = n_nationkey

and n_regionkey = r_regionkey

and r_name = 'EUROPE'

and ps_supplycost = (

select

min(ps_supplycost)

from

partsupp,

supplier,

nation,

region

where

p_partkey = ps_partkey

and s_suppkey = ps_suppkey

and s_nationkey = n_nationkey

and n_regionkey = r_regionkey

and r_name = 'EUROPE'

)

order by

s_acctbal desc,

n_name,

s_name,

p_partkey

limit 100;Q3

select

l_orderkey,

sum(l_extendedprice * (1 - l_discount)) as revenue,

o_orderdate,

o_shippriority

from

customer,

orders,

lineitem

where

c_mktsegment = 'BUILDING'

and c_custkey = o_custkey

and l_orderkey = o_orderkey

and o_orderdate < date '1995-03-15'

and l_shipdate > date '1995-03-15'

group by

l_orderkey,

o_orderdate,

o_shippriority

order by

revenue desc,

o_orderdate

limit 10;Q4

select

o_orderpriority,

count(*) as order_count

from

orders

where

o_orderdate >= date '1993-07-01'

and o_orderdate < date '1993-07-01' + interval '3' month

and exists (

select

*

from

lineitem

where

l_orderkey = o_orderkey

and l_commitdate < l_receiptdate

)

group by

o_orderpriority

order by

o_orderpriority;Q5

select

n_name,

sum(l_extendedprice * (1 - l_discount)) as revenue

from

customer,

orders,

lineitem,

supplier,

nation,

region

where

c_custkey = o_custkey

and l_orderkey = o_orderkey

and l_suppkey = s_suppkey

and c_nationkey = s_nationkey

and s_nationkey = n_nationkey

and n_regionkey = r_regionkey

and r_name = 'ASIA'

and o_orderdate >= date '1994-01-01'

and o_orderdate < date '1994-01-01' + interval '1' year

group by

n_name

order by

revenue desc;Q6

select

sum(l_extendedprice * l_discount) as revenue

from

lineitem

where

l_shipdate >= date '1994-01-01'

and l_shipdate < date '1994-01-01' + interval '1' year

and l_discount between 6 - 1 and 6 + 1

and l_quantity < 2400Q7

set hg_experimental_enable_double_equivalent=on;

select

supp_nation,

cust_nation,

l_year,

sum(volume) as revenue

from

(

select

n1.n_name as supp_nation,

n2.n_name as cust_nation,

extract(year from l_shipdate) as l_year,

l_extendedprice * (1 - l_discount) as volume

from

supplier,

lineitem,

orders,

customer,

nation n1,

nation n2

where

s_suppkey = l_suppkey

and o_orderkey = l_orderkey

and c_custkey = o_custkey

and s_nationkey = n1.n_nationkey

and c_nationkey = n2.n_nationkey

and (

(n1.n_name = 'FRANCE' and n2.n_name = 'GERMANY')

or (n1.n_name = 'GERMANY' and n2.n_name = 'FRANCE')

)

and l_shipdate between date '1995-01-01' and date '1996-12-31'

) as shipping

group by

supp_nation,

cust_nation,

l_year

order by

supp_nation,

cust_nation,

l_year;Q8

set hg_experimental_enable_double_equivalent=on;

select

o_year,

sum(case

when nation = 'BRAZIL' then volume

else 0

end) / sum(volume) as mkt_share

from

(

select

extract(year from o_orderdate) as o_year,

l_extendedprice * (1 - l_discount) as volume,

n2.n_name as nation

from

part,

supplier,

lineitem,

orders,

customer,

nation n1,

nation n2,

region

where

p_partkey = l_partkey

and s_suppkey = l_suppkey

and l_orderkey = o_orderkey

and o_custkey = c_custkey

and c_nationkey = n1.n_nationkey

and n1.n_regionkey = r_regionkey

and r_name = 'AMERICA'

and s_nationkey = n2.n_nationkey

and o_orderdate between date '1995-01-01' and date '1996-12-31'

and p_type = 'STANDARD POLISHED TIN'

) as all_nations

group by

o_year

order by

o_year;Q9

set hg_experimental_enable_double_equivalent=on;

select

nation,

o_year,

sum(amount) as sum_profit

from

(

select

n_name as nation,

extract(year from o_orderdate) as o_year,

l_extendedprice * (1 - l_discount) - ps_supplycost * l_quantity as amount

from

part,

supplier,

lineitem,

partsupp,

orders,

nation

where

s_suppkey = l_suppkey

and ps_suppkey = l_suppkey

and ps_partkey = l_partkey

and p_partkey = l_partkey

and o_orderkey = l_orderkey

and s_nationkey = n_nationkey

and p_name like '%green%'

) as profit

group by

nation,

o_year

order by

nation,

o_year desc;Q10

select

c_custkey,

c_name,

sum(l_extendedprice * (1 - l_discount)) as revenue,

c_acctbal,

n_name,

c_address,

c_phone,

c_comment

from

customer,

orders,

lineitem,

nation

where

c_custkey = o_custkey

and l_orderkey = o_orderkey

and o_orderdate >= date '1993-10-01'

and o_orderdate < date '1993-10-01' + interval '3' month

and l_returnflag = 'R'

and c_nationkey = n_nationkey

group by

c_custkey,

c_name,

c_acctbal,

c_phone,

n_name,

c_address,

c_comment

order by

revenue desc

limit 20;Q11

select

ps_partkey,

sum(ps_supplycost * ps_availqty) as value

from

partsupp,

supplier,

nation

where

ps_suppkey = s_suppkey

and s_nationkey = n_nationkey

and n_name = 'GERMANY'

group by

ps_partkey having

sum(ps_supplycost * ps_availqty) > (

select

sum(ps_supplycost * ps_availqty) * 0.0000010000

from

partsupp,

supplier,

nation

where

ps_suppkey = s_suppkey

and s_nationkey = n_nationkey

and n_name = 'GERMANY'

)

order by

value desc

limit 100;Q12

select

l_shipmode,

sum(case

when o_orderpriority = '1-URGENT'

or o_orderpriority = '2-HIGH'

then 1

else 0

end) as high_line_count,

sum(case

when o_orderpriority <> '1-URGENT'

and o_orderpriority <> '2-HIGH'

then 1

else 0

end) as low_line_count

from

orders,

lineitem

where

o_orderkey = l_orderkey

and l_shipmode in ('MAIL', 'SHIP')

and l_commitdate < l_receiptdate

and l_shipdate < l_commitdate

and l_receiptdate >= date '1994-01-01'

and l_receiptdate < date '1994-01-01' + interval '1' year

group by

l_shipmode

order by

l_shipmode;Q13

select

c_count,

count(*) as custdist

from

(

select

c_custkey,

count(o_orderkey)

from

customer left outer join orders on

c_custkey = o_custkey

and o_comment not like '%special%requests%'

group by

c_custkey

) as c_orders (c_custkey, c_count)

group by

c_count

order by

custdist desc,

c_count desc;Q14

select

100.00 * sum(case

when p_type like 'PROMO%'

then l_extendedprice * (1 - l_discount)

else 0

end) / sum(l_extendedprice * (1 - l_discount)) as promo_revenue

from

lineitem,

part

where

l_partkey = p_partkey

and l_shipdate >= date '1995-09-01'

and l_shipdate < date '1995-09-01' + interval '1' month;Q15

with revenue0(SUPPLIER_NO, TOTAL_REVENUE) as

(

select

l_suppkey,

sum(l_extendedprice * (1 - l_discount))

from

lineitem

where

l_shipdate >= date '1995-12-01'

and l_shipdate < date '1995-12-01' + interval '3' month

group by

l_suppkey

)

select

s_suppkey,

s_name,

s_address,

s_phone,

total_revenue

from

supplier,

revenue0

where

s_suppkey = supplier_no

and total_revenue = (

select

max(total_revenue)

from

revenue0

)

order by

s_suppkey;Q16

select

p_brand,

p_type,

p_size,

count(distinct ps_suppkey) as supplier_cnt

from

partsupp,

part

where

p_partkey = ps_partkey

and p_brand <> 'Brand#45'

and p_type not like 'MEDIUM POLISHED%'

and p_size in (49, 14, 23, 45, 19, 3, 36, 9)

and ps_suppkey not in (

select

s_suppkey

from

supplier

where

s_comment like '%Customer%Complaints%'

)

group by

p_brand,

p_type,

p_size

order by

supplier_cnt desc,

p_brand,

p_type,

p_size;Q17

select

sum(l_extendedprice) / 7.0 as avg_yearly

from

lineitem,

part

where

p_partkey = l_partkey

and p_brand = 'Brand#23'

and p_container = 'MED BOX'

and l_quantity < (

select

0.2 * avg(l_quantity)

from

lineitem

where

l_partkey = p_partkey

and l_partkey in(

select p_partkey

from part

where p_brand = 'Brand#23' and p_container = 'MED BOX')

);Q18

select

c_name,

c_custkey,

o_orderkey,

o_orderdate,

o_totalprice,

sum(l_quantity)

from

customer,

orders,

lineitem

where

o_orderkey in (

select

l_orderkey

from

lineitem

group by

l_orderkey having

sum(l_quantity) > 300

)

and c_custkey = o_custkey

and o_orderkey = l_orderkey

group by

c_name,

c_custkey,

o_orderkey,

o_orderdate,

o_totalprice

order by

o_totalprice desc,

o_orderdate

limit 100;Q19

select

sum(l_extendedprice* (1 - l_discount)) as revenue

from

lineitem,

part

where

(

p_partkey = l_partkey

and p_brand = 'Brand#12'

and p_container in ('SM CASE', 'SM BOX', 'SM PACK', 'SM PKG')

and l_quantity >= 1 and l_quantity <= 1 + 10

and p_size between 1 and 5

and l_shipmode in ('AIR', 'AIR REG')

and l_shipinstruct = 'DELIVER IN PERSON'

)

or

(

p_partkey = l_partkey

and p_brand = 'Brand#23'

and p_container in ('MED BAG', 'MED BOX', 'MED PKG', 'MED PACK')

and l_quantity >= 10 and l_quantity <= 10 + 10

and p_size between 1 and 10

and l_shipmode in ('AIR', 'AIR REG')

and l_shipinstruct = 'DELIVER IN PERSON'

)

or

(

p_partkey = l_partkey

and p_brand = 'Brand#34'

and p_container in ('LG CASE', 'LG BOX', 'LG PACK', 'LG PKG')

and l_quantity >= 20 and l_quantity <= 20 + 10

and p_size between 1 and 15

and l_shipmode in ('AIR', 'AIR REG')

and l_shipinstruct = 'DELIVER IN PERSON'

);Q20

select

s_name,

s_address

from

supplier,

nation

where

s_suppkey in (

select

ps_suppkey

from

partsupp

where

ps_partkey in (

select

p_partkey

from

part

where

p_name like 'forest%'

)

and ps_availqty > (

select

0.5 * sum(l_quantity)

from

lineitem

where

l_partkey = ps_partkey

and l_suppkey = ps_suppkey

and l_shipdate >= date '1994-01-01'

and l_shipdate < date '1994-01-01' + interval '1' year

)

)

and s_nationkey = n_nationkey

and n_name = 'CANADA'

order by

s_name;Q21

select

s_name,

count(*) as numwait

from

supplier,

lineitem l1,

orders,

nation

where

s_suppkey = l1.l_suppkey

and o_orderkey = l1.l_orderkey

and o_orderstatus = 'F'

and l1.l_receiptdate > l1.l_commitdate

and exists (

select

*

from

lineitem l2

where

l2.l_orderkey = l1.l_orderkey

and l2.l_suppkey <> l1.l_suppkey

)

and not exists (

select

*

from

lineitem l3

where

l3.l_orderkey = l1.l_orderkey

and l3.l_suppkey <> l1.l_suppkey

and l3.l_receiptdate > l3.l_commitdate

)

and s_nationkey = n_nationkey

and n_name = 'SAUDI ARABIA'

group by

s_name

order by

numwait desc,

s_name

limit 100;Q22

select

cntrycode,

count(*) as numcust,

sum(c_acctbal) as totacctbal

from

(

select

substring(c_phone from 1 for 2) as cntrycode,

c_acctbal

from

customer

where

substring(c_phone from 1 for 2) in

('13', '31', '23', '29', '30', '18', '17')

and c_acctbal > (

select

avg(c_acctbal)

from

customer

where

c_acctbal > 0.00

and substring(c_phone from 1 for 2) in

('13', '31', '23', '29', '30', '18', '17')

)

and not exists (

select

*

from

orders

where

o_custkey = c_custkey

)

) as custsale

group by

cntrycode

order by

cntrycode;Key/Value check scenario test

Prepare data

1. Create table

- Continue to use the database created in the OLAP query scenario. We will use the orders table in the TPCH dataset for testing. After connecting to Hologres using psql, you can use the following table creation statement to create the database table;

DROP TABLE IF EXISTS orders_row;

BEGIN;

CREATE TABLE public.orders_row (

"o_orderkey" int8 NOT NULL,

"o_custkey" int8,

"o_orderstatus" bpchar(1),

"o_totalprice" numeric(15,2),

"o_orderdate" date,

"o_orderpriority" bpchar(15),

"o_clerk" bpchar(15),

"o_shippriority" int8,

"o_comment" varchar(79),

PRIMARY KEY (o_orderkey)

);

CALL SET_TABLE_PROPERTY('public.orders_row', 'orientation', 'row');

CALL SET_TABLE_PROPERTY('public.orders_row', 'clustering_key', 'o_orderkey');

CALL SET_TABLE_PROPERTY('public.orders_row', 'time_to_live_in_seconds', '3153600000');

CALL SET_TABLE_PROPERTY('public.orders_row', 'distribution_key', 'o_orderkey');

COMMIT;2. Import data in copy mode

- This test scheme mainly uses COPY FROM STDIN to import data. For details, please refer to Official documents . Here, the previously generated tbl data file will be imported into the table created in Hologres.

- You can import data by referring to the following commands in the directory of the data generation tool

PGUSER=<AccessID> PGPASSWORD=<AccessKey> psql -p <Port> -h <Endpoint> -d <Database> -c "COPY public.orders_row from stdin with delimiter '|' csv;" < orders.tbl

3. Import data in insert into mode

- Since you have imported the data of the orders table in the OLAP scenario, you can run the following SQL statement to import the data

INSERT INTO public.orders_row SELECT * FROM public.orders;

query

1. Generate query statement

- The main query statement features of the Key/Value query scenario are as follows

SELECT column_a

,column_b

,...

,column_x

FROM table_x

WHERE pk = value_x

;or

SELECT column_a

,column_b

,...

,column_x

FROM table_x

WHERE pk IN ( value_a, value_b,..., value_x )

;You can use the following script to generate the required sql. This script will generate 2 pieces of sql

- kv_query_single.sql SQL for single value filtering

- kv_query_in.sql SQL for multi value filtering. The script will randomly generate an SQL for 10 values

rm -rf kv_query mkdir kv_query cd kv_query echo '\set column_values random(1,99999999) select O_ORDERKEY,O_CUSTKEY,O_ORDERSTATUS,O_TOTALPRICE,O_ORDERDATE,O_ORDERPRIORITY,O_CLERK,O_SHIPPRIORITY,O_COMMENT from public.orders_row WHERE o_orderkey =:column_values;' >> kv_query_single.sql echo '\set column_values1 random(1,99999999) \set column_values2 random(1,99999999) \set column_values3 random(1,99999999) \set column_values4 random(1,99999999) \set column_values5 random(1,99999999) \set column_values6 random(1,99999999) \set column_values7 random(1,99999999) \set column_values8 random(1,99999999) \set column_values9 random(1,99999999) \set column_values10 random(1,99999999) select O_ORDERKEY,O_CUSTKEY,O_ORDERSTATUS,O_TOTALPRICE,O_ORDERDATE,O_ORDERPRIORITY,O_CLERK,O_SHIPPRIORITY,O_COMMENT from public.orders_row WHERE o_orderkey in(:column_values1,:column_values2,:column_values3,:column_values4,:column_values5,:column_values6,:column_values7,:column_values8,:column_values9,:column_values10);' >> kv_query_in.sql

2. Query

- Pgbench is required for query. You can use the following command to install pgbench

yum install postgresql-contrib

- After that, you can use pgbench to perform pressure measurement for single value filtering scenarios

PGUSER=<AccessID> PGPASSWORD=<AccessKey> pgbench -h <Endpoint> -p <Port> -d <Database> -c <Client_Num> -t <Query_Num> -n -f kv_query_single.sql

- Scenarios for multi value filtering

PGUSER=<AccessID> PGPASSWORD=<AccessKey> pgbench -h <Endpoint> -p <Port> -d <Database> -c <Client_Num> -t <Query_Num> -n -f kv_query_in.sql

- Parameter interpretation

| Configuration item | parameter | explain |

|---|---|---|

| -h | endpoint of Hologres instance | View at Hologres control desk |

| -p | Port address of the Hologres instance | View at Hologres control desk |

| -d | Hologres specifies the database name in the instance | |

| -c | Number of clients (concurrency) | Example: 8 |

| -t | The number of pressure test queries to be executed by each client | 50 |

| -f | sql for pressure measurement | Example: 6 sql |

Test result reference

Amount of test data:

- This test is based on TPCH 100G data set, and the specific data volume is shown in the table below

| Table name | Number of rows |

|---|---|

| LINEITEM | 600,037,902 |

| ORDERS | 150,000,000 |

| PARTSUPP | 80,000,000 |

| PART | 20,000,000 |

| CUSTOMER | 15,000,000 |

| SUPPLIER | 1,000,000 |

| NATION | 25 |

| REGION | 5 |

Cluster specification

| computing resource | storage capacity | Software version | remarks |

|---|---|---|---|

| 64 CU | |||

| (CPU: 64 Core memory: 256 GB) | 100 GB | r0.10.20 | Using the default cluster configuration, the number of shards is 40 |

| 128 CU | |||

| (CPU: 128 Core memory: 512 GB) | 100 GB | r0.10.20 | Using the default configuration of the cluster, the number of shards is 80 |

test result

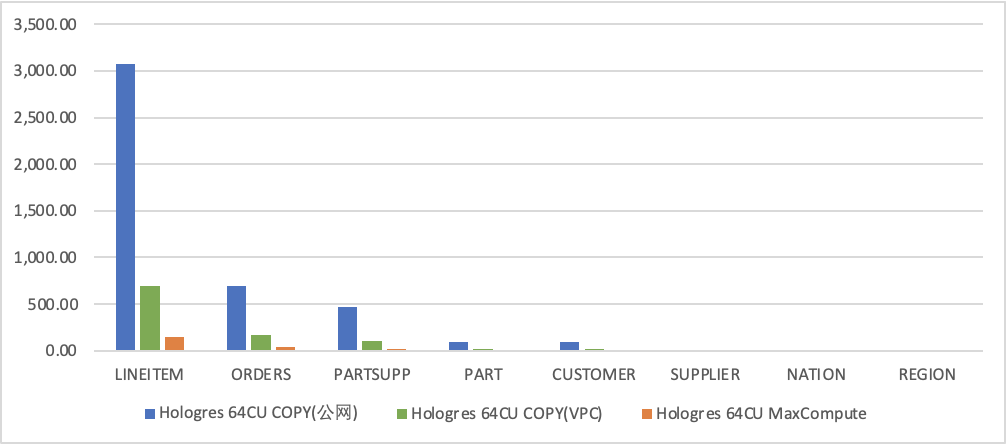

Data import time

- The execution time of data import is in seconds (s).

- Import time refers to importing data into the table in Hologres

- When using the COPY method to import data, a table corresponds to a data file, and the concurrent import method is not used.

- The specific values are shown in the table below

| Table name | Number of rows | Data volume | Hologres 64CU | ||

|---|---|---|---|---|---|

| Import using COPY mode (public network) | Import using COPY mode (VPC network import) | Import using MaxCompute | |||

| LINEITEM | 600,037,902 | 73.6GB | 3,070.453 | 694.364 | 148.165 |

| ORDERS | 150,000,000 | 16.4GB | 691.060 | 172.529 | 37.741 |

| PARTSUPP | 80,000,000 | 2.3GB | 468.560 | 107.092 | 18.488 |

| PART | 20,000,000 | 11.3GB | 96.342 | 24.020 | 8.083 |

| CUSTOMER | 15,000,000 | 2.3GB | 95.190 | 22.937 | 10.363 |

| SUPPLIER | 1,000,000 | 132MB | 5.057 | 1.803 | 1.503 |

| NATION | 25 | 2KB | 0.580 | 0.584 | 0.747 |

| REGION | 5 | 0.375KB | 0.168 | 0.153 | 0.430 |

| Total | 106G | 4427.410 | 1023.482 | 225.52 |

- In the following figure, blue indicates the time of importing data in the public network using COPY mode, green indicates the time of importing data in the VPC network using COPY mode, and gray indicates the time of importing data in the MaxCompute mode

- The lower the ordinate value, the faster the import speed

- Horizontal axis: table name. Vertical axis: data import time (s)

- It can be seen that due to the impact of network bandwidth, when importing local file data in COPY mode, the time of importing data using VPC network is significantly shorter than that using public network; Using MaxCompute to import data is significantly shorter than using COPY to import local file data.

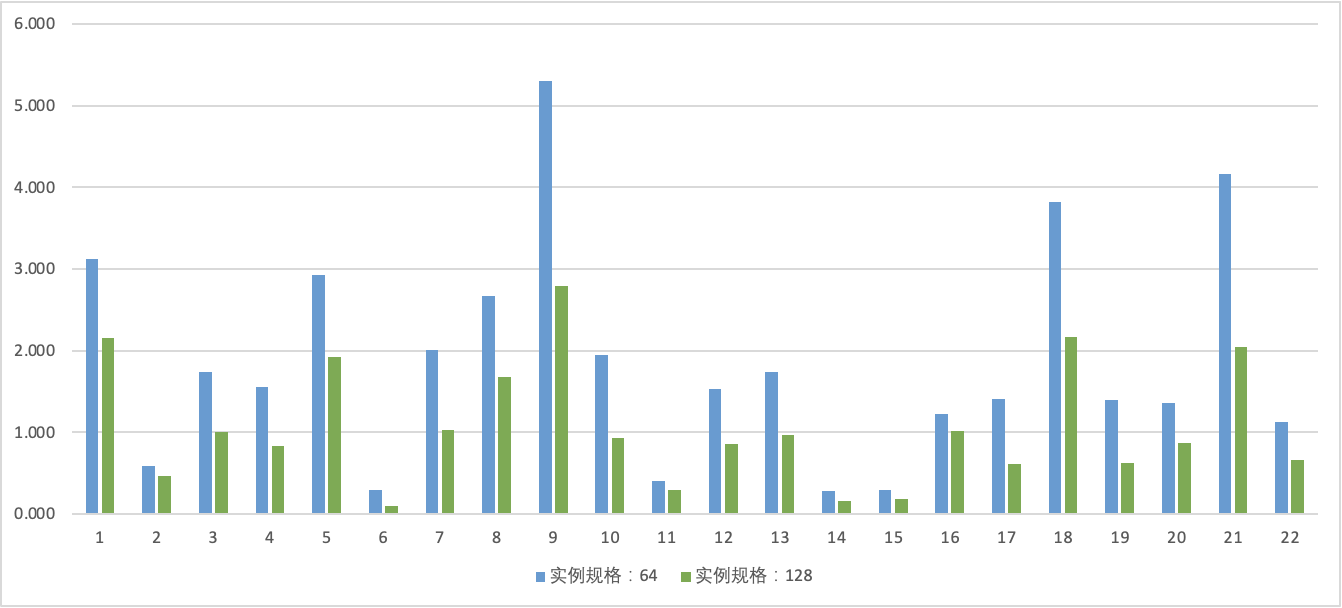

Query time

- Query execution time is in seconds (s).

- The query results are based on the internal table of Hologres

- The specific values are shown in the table below

| TPCH Query number | Hologres 64CU | Hologres 128CU |

|---|---|---|

| 1 | 3.120 | 2.150 |

| 2 | 0.581 | 0.467 |

| 3 | 1.735 | 1.005 |

| 4 | 1.558 | 0.836 |

| 5 | 2.921 | 1.917 |

| 6 | 0.297 | 0.096 |

| 7 | 2.006 | 1.029 |

| 8 | 2.674 | 1.679 |

| 9 | 5.298 | 2.796 |

| 10 | 1.944 | 0.924 |

| 11 | 0.397 | 0.297 |

| 12 | 1.531 | 0.852 |

| 13 | 1.741 | 0.971 |

| 14 | 0.286 | 0.160 |

| 15 | 0.293 | 0.177 |

| 16 | 1.223 | 1.020 |

| 17 | 1.405 | 0.607 |

| 18 | 3.817 | 2.169 |

| 19 | 1.400 | 0.622 |

| 20 | 1.358 | 0.868 |

| 21 | 4.164 | 2.047 |

| 22 | 1.121 | 0.654 |

| Total | 40.870 | 23.343 |

- In the following figure, blue is the query result of 64CU instances and green is the query result of 128CU instances

- The lower the ordinate value, the better the TPC-H performance.

- It can be seen that the query time decreases linearly with the increase of the cost of the instance size

- Horizontal axis: query in file The number in the. Vertical axis: query execution time (s)

Original link: https://developer.aliyun.com/article/785226?

Copyright notice: the content of this article is spontaneously contributed by Alibaba cloud real name registered users. The copyright belongs to the original author. Alibaba cloud developer community does not own its copyright or bear corresponding legal liabilities. Please refer to Alibaba cloud developer community user service agreement and Alibaba cloud developer community intellectual property protection guidelines for specific rules. If you find any content suspected of plagiarism in the community, fill in the infringement complaint form to report. Once verified, the community will immediately delete the content suspected of infringement.