Summary

Longhorn is a cloud-native, lightweight, reliable, and easy-to-use open-source distributed block storage system created by Rancher. Once deployed to a Kubernetes cluster, Longhorn pools the local storage available on all nodes into a storage cluster and uses it to provide distributed, replicated block storage with support for snapshots and backups.

With Longhorn, you can:

- Use Longhorn volumes as persistent storage for distributed stateful applications in a Kubernetes cluster

- Partition block storage into Longhorn volumes

- Replicate block storage across multiple nodes and data centers to improve availability

- Store backup data in external storage, such as NFS or AWS S3

- Restore volumes from backups

- Upgrade Longhorn without interrupting persistent volumes

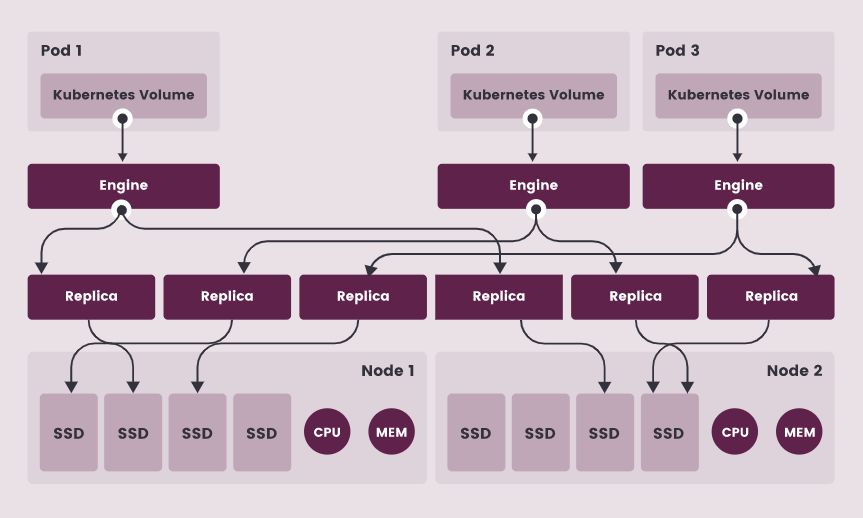

Longhorn is designed with two layers: a data plane and a control plane. Longhorn Engine is a storage controller corresponding to the data plane, and Longhorn Manager corresponds to the control plane.

The Longhorn Manager runs as a Kubernetes DaemonSet, so one Manager pod runs on each node in the Longhorn cluster. It is responsible for creating and managing volumes in the Kubernetes cluster.

When the Longhorn Manager is asked to create a volume, it does two things. It creates a Longhorn Engine instance, which runs as a Linux process, on the node the volume is attached to. It also creates a replica on each node that has been chosen to hold one. Replicas should be placed on separate hosts to ensure maximum availability.

Longhorn is block-based and supports the ext4 and XFS file systems. The Longhorn CSI driver takes the block device, formats it, and mounts it on the node. The kubelet then bind-mounts the device into the Kubernetes pod, so the pod can access the Longhorn volume.
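
The sketch below shows the control-plane side on a running cluster by listing the Longhorn Manager DaemonSet and its pods. It is a minimal example with these assumptions:

- Longhorn is installed in the default longhorn-system namespace.
- The manager pods carry the label app=longhorn-manager, as set by the Helm chart. Please verify this label on your own installation.
- The helper name is hypothetical.

The helper calls kubectl directly, because aliases such as kube are not expanded in scripts.

```shell
# Hypothetical helper: show the Longhorn Manager DaemonSet and where its pods run.
# Assumes the longhorn-system namespace and the label app=longhorn-manager.
show_longhorn_manager() {
    ns="${1:-longhorn-system}"
    # DESIRED/READY should match the number of nodes eligible for the DaemonSet
    kubectl -n "$ns" get daemonset longhorn-manager
    # -o wide adds a NODE column, showing one manager pod per eligible node
    kubectl -n "$ns" get pods -l app=longhorn-manager -o wide
}
```

Running show_longhorn_manager should list one longhorn-manager pod per eligible node.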

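The configured number of replicas should not exceed the number of cluster nodes. By default, Longhorn does not place two replicas of the same volume on one node, so any extra replicas cannot be scheduled.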
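
One way to respect this limit is to compare the desired replica count with the node count before changing numberOfReplicas. This is a hedged sketch. check_replica_count is a hypothetical helper, and it counts every node that kubectl get nodes reports. It does not check whether Longhorn can actually schedule a replica on each of those nodes.

```shell
# Hypothetical helper: return non-zero if the requested replica count
# exceeds the number of nodes reported by kubectl.
check_replica_count() {
    replicas="$1"
    # tr strips the padding some wc implementations add
    nodes=$(kubectl get nodes --no-headers | wc -l | tr -d ' ')
    if [ "$replicas" -gt "$nodes" ]; then
        echo "numberOfReplicas=$replicas exceeds node count $nodes" >&2
        return 1
    fi
    echo "ok: $replicas replica(s) on $nodes node(s)"
}
```

For example, on a two-node cluster like the one used later in this article, check_replica_count 3 would print an error and return a non-zero status.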

In the image above:

- Three Longhorn volumes have been created

- Each volume has a dedicated controller, the Longhorn Engine

- Each Longhorn volume has two replicas, and each replica is a Linux process

- Because each volume has its own Longhorn Engine, the failure of one controller does not affect the other volumes

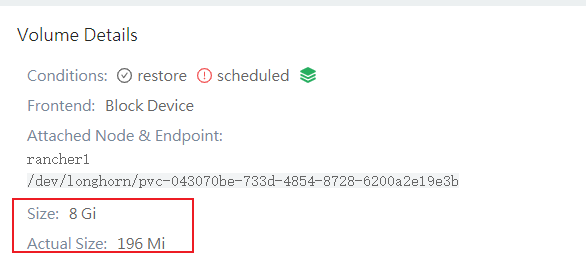

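Longhorn replicas are built on Linux sparse files, which support thin provisioning. Each replica therefore has two sizes:

- The nominal size is the size of the volume as provisioned.
- The actual size is the disk space currently occupied by the data that has been written.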
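
Sparse files are easy to observe with standard tools. This sketch illustrates only the nominal-versus-actual distinction and does not touch Longhorn itself. It assumes:

- GNU coreutils (truncate, stat -c, du, dd with status=none).
- A filesystem that supports sparse files, such as ext4 or XFS.
- A hypothetical function name and file path.

```shell
# Illustrates nominal vs. actual size with a sparse file.
# Assumes GNU coreutils and a filesystem that supports sparse files.
demo_sparse_file() {
    f="$1"
    # Nominal size becomes 1 GiB, but no data blocks are allocated yet
    truncate -s 1G "$f"
    echo "nominal_bytes=$(stat -c %s "$f")"
    echo "actual_kib_before=$(du -k "$f" | cut -f1)"
    # Write 4 MiB at the start without truncating; only roughly
    # those 4 MiB of blocks should now be allocated on disk
    dd if=/dev/zero of="$f" bs=1M count=4 conv=notrunc status=none
    echo "actual_kib_after=$(du -k "$f" | cut -f1)"
}
```

On a typical ext4 host, demo_sparse_file /tmp/replica.img should report a nominal size of 1073741824 bytes. The actual size should be close to zero before the write and around 4096 KiB after it.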

Longhorn UI

Longhorn provides a web UI for management. To make it reachable from outside the cluster, run kube edit svc longhorn-frontend -n longhorn-system (kube is an alias for kubectl). Change the service type to type: NodePort, then save and exit the editor.

$ kube get svc longhorn-frontend -n longhorn-system
NAME                TYPE       CLUSTER-IP     EXTERNAL-IP   PORT(S)        AGE
longhorn-frontend   NodePort   10.43.125.27   <none>        80:32007/TCP   9d

The UI is then reachable on port 32007 at the IP address of any cluster node.
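
The same change can be made non-interactively with kubectl patch. This minimal sketch assumes the default service name longhorn-frontend, and the helper name is hypothetical. Unless you specify a port, Kubernetes picks one from its NodePort range, so your number will usually differ from 32007.

```shell
# Hypothetical helper: switch the Longhorn UI service to NodePort and print the port.
expose_longhorn_ui() {
    ns="${1:-longhorn-system}"
    kubectl -n "$ns" patch svc longhorn-frontend \
        -p '{"spec":{"type":"NodePort"}}'
    # Print the node port that Kubernetes allocated
    kubectl -n "$ns" get svc longhorn-frontend \
        -o jsonpath='{.spec.ports[0].nodePort}'
    echo
}
```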

Deployment

Add the Helm repository

$ helm repo add longhorn https://charts.longhorn.io
"longhorn" has been added to your repositories
$ helm repo update
Hang tight while we grab the latest from your chart repositories...
...Successfully got an update from the "longhorn" chart repository
...

For brevity, the commands in this article use an alias: alias kube='kubectl'

Install Longhorn

$ kube create namespace longhorn-system
namespace/longhorn-system created
$ helm install longhorn longhorn/longhorn --namespace longhorn-system
NAME: longhorn
LAST DEPLOYED: Mon May 24 11:07:24 2021
NAMESPACE: longhorn-system
STATUS: deployed
REVISION: 1
TEST SUITE: None
NOTES:
Longhorn is now installed on the cluster!
Please wait a few minutes for other Longhorn components such as CSI deployments, Engine Images, and Instance Managers to be initialized.

Pod objects such as csi-attacher, csi-provisioner, csi-resizer, engine-image-ei, longhorn-csi-plugin, and longhorn-manager are then deployed in the longhorn-system namespace.

The deployment is complete once all of these pods are in the Running state.

$ kube get po -n longhorn-system
NAME                                        READY   STATUS    RESTARTS   AGE
csi-attacher-5dcdcd5984-6wk4k               1/1     Running   0          13m
csi-attacher-5dcdcd5984-7qtbj               1/1     Running   0          13m
csi-attacher-5dcdcd5984-rhrwt               1/1     Running   0          13m
csi-provisioner-5c9dfb6446-96hjf            1/1     Running   0          13m
csi-provisioner-5c9dfb6446-g6szj            1/1     Running   0          13m
csi-provisioner-5c9dfb6446-kfvzv            1/1     Running   0          13m
csi-resizer-6696d857b6-5s6pt                1/1     Running   0          13m
csi-resizer-6696d857b6-b4r87                1/1     Running   0          13m
csi-resizer-6696d857b6-w2hhr                1/1     Running   0          13m
csi-snapshotter-96bfff7c9-5ghjv             1/1     Running   0          13m
csi-snapshotter-96bfff7c9-ctwpt             1/1     Running   0          13m
csi-snapshotter-96bfff7c9-rzg66             1/1     Running   0          13m
engine-image-ei-611d1496-65hwx              1/1     Running   0          13m
engine-image-ei-611d1496-tlw2r              1/1     Running   0          13m
instance-manager-e-4fe8f5dc                 1/1     Running   0          13m
instance-manager-e-a6b90821                 1/1     Running   0          13m
instance-manager-r-6542f13a                 1/1     Running   0          13m
instance-manager-r-766ea453                 1/1     Running   0          13m
longhorn-csi-plugin-4c4qp                   2/2     Running   0          13m
longhorn-csi-plugin-qktcl                   2/2     Running   0          13m
longhorn-driver-deployer-5d45dcdc5d-jgsr9   1/1     Running   0          14m
longhorn-manager-k5tdr                      1/1     Running   0          14m
longhorn-manager-pl662                      1/1     Running   1          14m
longhorn-ui-5879656c55-jxmkj                1/1     Running   0          14m
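
The installation steps and the wait for Running pods above can be scripted. This is a hedged sketch with hypothetical helper names:

- It uses Helm's --create-namespace flag (Helm 3.2 or later) instead of a separate create namespace step.
- It uses kubectl wait instead of re-running get po by hand.
- Pinning a chart version with --version is left to you.

```shell
# Hypothetical helpers; they call helm/kubectl directly because
# shell aliases such as kube are not expanded in scripts.

# Add the chart repository and install Longhorn.
# --create-namespace (Helm 3.2+) replaces the separate "create namespace" step.
install_longhorn() {
    helm repo add longhorn https://charts.longhorn.io
    helm repo update
    helm install longhorn longhorn/longhorn \
        --namespace longhorn-system --create-namespace
}

# Block until every pod that currently exists in longhorn-system is Ready.
# kubectl wait only sees pods present when it starts, so pods created
# later (e.g. instance managers) may need a second call.
wait_for_longhorn() {
    kubectl -n longhorn-system wait --for=condition=Ready pod --all \
        --timeout="${1:-600s}"
}
```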

Note that Longhorn creates three replicas per volume by default. After deployment, run kube edit cm longhorn-storageclass -n longhorn-system to change numberOfReplicas. Choose a value that does not exceed the number of cluster nodes.
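
Instead of editing the default StorageClass, you can create an additional one with a lower replica count. This is a sketch with these assumptions:

- The Longhorn CSI provisioner is named driver.longhorn.io.
- Longhorn reads the numberOfReplicas parameter from the StorageClass, as its default class does. Please check both against your Longhorn version.
- The helper and the class name longhorn-2r used below are hypothetical.

```shell
# Hypothetical helper: create a Longhorn StorageClass with a given replica count.
# Assumes the provisioner name driver.longhorn.io.
create_longhorn_sc() {
    name="$1"
    replicas="$2"
    kubectl apply -f - <<EOF
apiVersion: storage.k8s.io/v1
kind: StorageClass
metadata:
  name: ${name}
provisioner: driver.longhorn.io
allowVolumeExpansion: true
reclaimPolicy: Delete
parameters:
  numberOfReplicas: "${replicas}"
EOF
}
```

For example, create_longhorn_sc longhorn-2r 2 would create the class. A PVC could then set storageClassName: longhorn-2r to get two replicas per volume.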

Test

Deploy an nginx pod that stores its content on a Longhorn volume.

longhorn-pvc.yaml:

apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: longhorn-pvc-demo
spec:
  accessModes:
    - ReadWriteOnce
  volumeMode: Filesystem
  storageClassName: longhorn
  resources:
    requests:
      storage: 2Gi

This creates a PVC that uses the longhorn StorageClass. Longhorn supports dynamic provisioning, so a matching PV is created automatically.

$ kube apply -f longhorn-pvc.yaml
persistentvolumeclaim/longhorn-pvc-demo created
$ kube get pvc/longhorn-pvc-demo
NAME                STATUS   VOLUME                                     CAPACITY   ACCESS MODES   STORAGECLASS   AGE
longhorn-pvc-demo   Bound    pvc-2202aaea-c129-44bc-9580-55914b6bd7ea   2Gi        RWO            longhorn       20s
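
To confirm that dynamic provisioning produced a PV, you can follow the PVC's spec.volumeName to the PV object. This is a minimal sketch, and the helper name is hypothetical.

```shell
# Hypothetical helper: show the PV that was provisioned for a PVC.
show_bound_pv() {
    pvc="$1"
    # spec.volumeName holds the name of the PV the claim is bound to
    pv=$(kubectl get pvc "$pvc" -o jsonpath='{.spec.volumeName}')
    echo "PVC $pvc is bound to PV $pv"
    kubectl get pv "$pv"
}
```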

Bind PVC

Mount the PVC as a volume in the following pod:

longhorn-pod.yaml:

apiVersion: v1
kind: Pod
metadata:
  name: volume-test
  namespace: default
  labels:
    app: volume-test
spec:
  containers:
    - name: volume-test
      image: nginx:stable-alpine
      imagePullPolicy: IfNotPresent
      volumeMounts:
        - name: volv
          mountPath: /usr/share/nginx/html # nginx default html path
      ports:
        - containerPort: 80
  volumes:
    - name: volv
      persistentVolumeClaim:
        claimName: longhorn-pvc-demo

Wait for the pod to start:

$ kubectl get po volume-test
NAME          READY   STATUS    RESTARTS   AGE
volume-test   1/1     Running   0          98s
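
Rather than re-running get po until the status changes, kubectl wait can block until the pod is Ready. This is a minimal sketch. The helper name and the two-minute default timeout are assumptions.

```shell
# Hypothetical helper: wait until a pod reports the Ready condition.
# Usage: wait_for_pod volume-test [timeout]
wait_for_pod() {
    kubectl wait --for=condition=Ready "pod/$1" --timeout="${2:-120s}"
}
```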

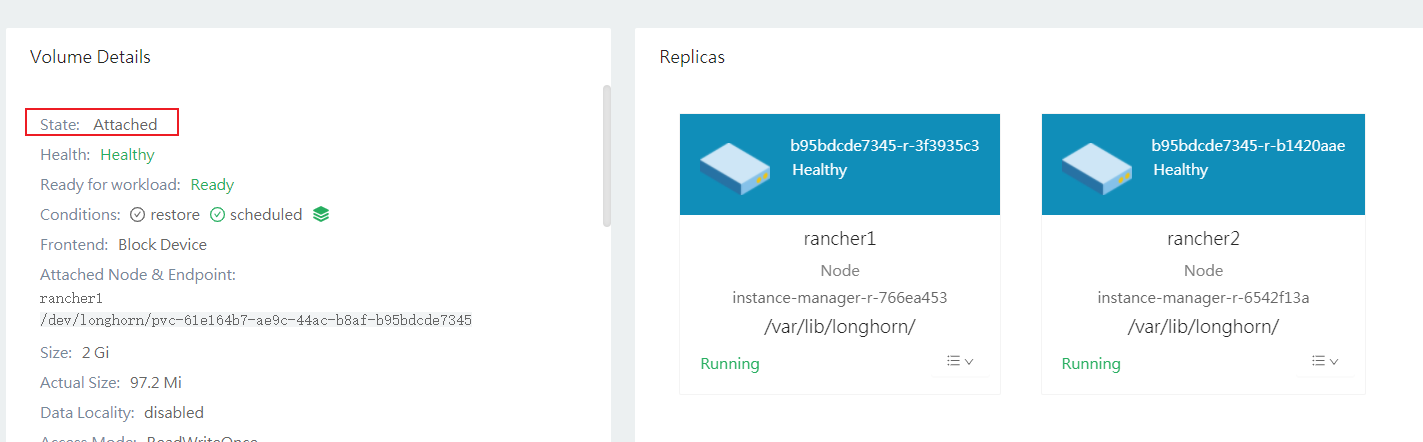

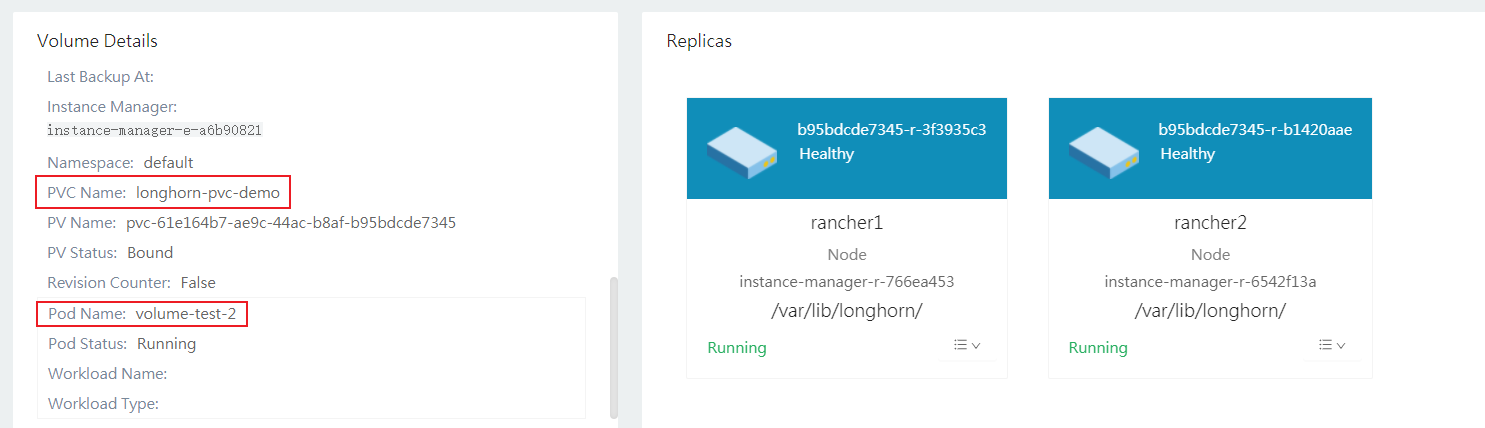

The Longhorn UI shows the volume as Attached. For each volume, Longhorn creates as many replicas as numberOfReplicas specifies, but at most one replica per node. Because the test cluster has two nodes, two replicas are created here. As the screenshots above show, the replicas are placed on the rancher1 and rancher2 nodes for high availability.
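
Replica placement can also be checked from the command line through Longhorn's custom resources. This sketch rests on two assumptions that you should verify for your version:

- The replicas.longhorn.io resource exposes spec.volumeName and spec.nodeID.
- A dynamically provisioned Longhorn volume is named after its PV (pvc-...).

The helper name is hypothetical.

```shell
# Hypothetical helper: list the replicas of one Longhorn volume and their nodes.
# Assumes replicas.longhorn.io has spec.volumeName and spec.nodeID.
list_replicas() {
    volume="$1"   # Longhorn volume name, assumed to equal the PV name
    kubectl -n longhorn-system get replicas.longhorn.io \
        -o custom-columns=NAME:.metadata.name,VOLUME:.spec.volumeName,NODE:.spec.nodeID \
        | awk -v v="$volume" 'NR == 1 || $2 == v'   # keep header + matching rows
}
```

With the volume from this test, list_replicas pvc-2202aaea-c129-44bc-9580-55914b6bd7ea would be expected to show one row for rancher1 and one for rancher2.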

Open a shell in the container, go to the mount path, and create a file there.

$ kube exec -it volume-test -- sh   # open a shell inside the container
/ # cd /usr/share/nginx/html/
/usr/share/nginx/html # ls   # formatting the volume as ext4 creates lost+found
lost+found
/usr/share/nginx/html # vi index.html   # create an index.html
/usr/share/nginx/html # ls
index.html  lost+found
/usr/share/nginx/html # cat index.html
<!DOCTYPE html>
<html>
<head>
<title>Welcome to nginx!</title>
<style>
    body {
        width: 35em;
        margin: 0 auto;
        font-family: Tahoma, Verdana, Arial, sans-serif;
    }
</style>
</head>
<body>
<h1>Hello Longhorn!</h1>
<p></p>
</body>
</html>
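
If you prefer not to use an interactive editor, the page can be written with a single kubectl exec. This is a minimal sketch. The helper is hypothetical, and it writes only the heading line rather than the full page shown above.

```shell
# Hypothetical helper: write a one-line test page into the mounted volume.
write_test_page() {
    pod="$1"
    # The redirect runs inside the container's shell, so the file
    # lands on the Longhorn volume mounted at /usr/share/nginx/html
    kubectl exec "$pod" -- sh -c \
        'echo "<h1>Hello Longhorn!</h1>" > /usr/share/nginx/html/index.html'
}
```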

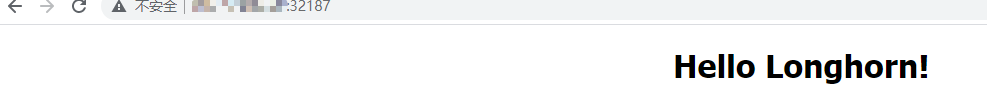

Expose the pod as a service and test access:

$ kube expose pod volume-test --type=NodePort --target-port=80
service/volume-test exposed
$ kube get svc volume-test
NAME          TYPE       CLUSTER-IP      EXTERNAL-IP   PORT(S)        AGE
volume-test   NodePort   10.43.253.209   <none>        80:32187/TCP   7s
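
The access test can also be run from the command line. This sketch assumes that curl is installed and that the first node's InternalIP is reachable from where you run it. The helper name is hypothetical.

```shell
# Hypothetical helper: fetch the page through a NodePort service on the first node.
curl_nodeport() {
    svc="$1"
    port=$(kubectl get svc "$svc" -o jsonpath='{.spec.ports[0].nodePort}')
    ip=$(kubectl get nodes \
        -o jsonpath='{.items[0].status.addresses[?(@.type=="InternalIP")].address}')
    curl -s "http://${ip}:${port}/"
}
```

With the service above, curl_nodeport volume-test should return the index.html written earlier.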

Delete the pod:

$ kube delete pod volume-test
pod "volume-test" deleted

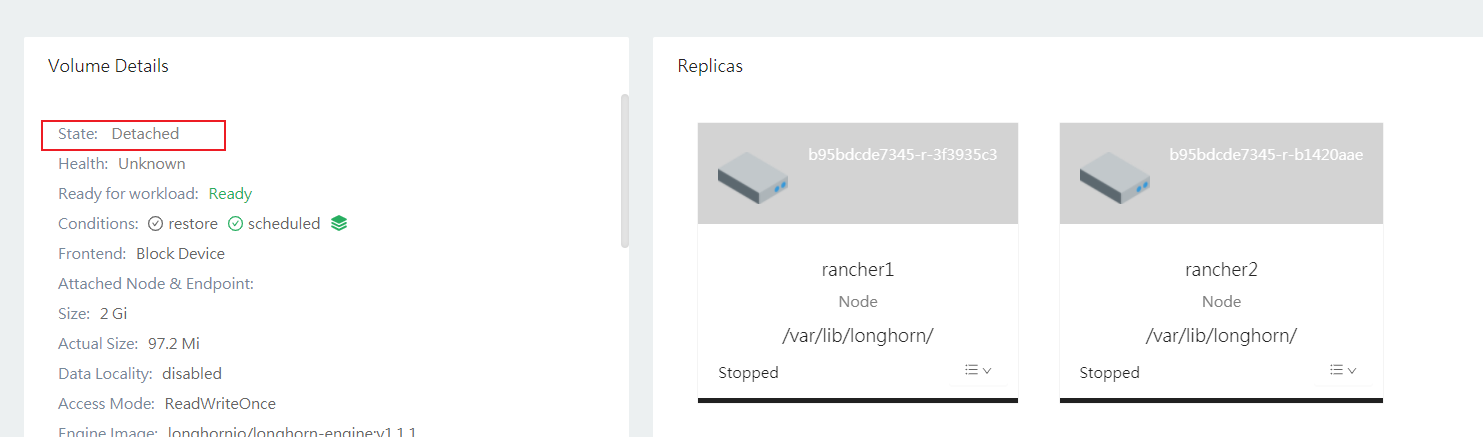

The volume still exists, but it is now in the Detached state.
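
The same state can be read from Longhorn's volumes.longhorn.io custom resource. This sketch rests on two assumptions that you should verify on your installation:

- The Longhorn volume is named after the PV.
- Its state is stored in status.state, with values such as attached and detached.

The helper name is hypothetical.

```shell
# Hypothetical helper: print the Longhorn volume state for a PVC.
# Assumes the Longhorn volume name equals the PV name and that
# volumes.longhorn.io exposes status.state.
volume_state() {
    pvc="$1"
    pv=$(kubectl get pvc "$pvc" -o jsonpath='{.spec.volumeName}')
    kubectl -n longhorn-system get volumes.longhorn.io "$pv" \
        -o jsonpath='{.status.state}'
    echo
}
```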

Remount Volume

Create another pod that uses the same persistentVolumeClaim.claimName.

longhorn-pod-2.yaml:

apiVersion: v1
kind: Pod
metadata:
  name: volume-test-2
  namespace: default
  labels:
    app: volume-test-2
spec:
  containers:
    - name: volume-test-2
      image: nginx:stable-alpine
      imagePullPolicy: IfNotPresent
      volumeMounts:
        - name: volv-2
          mountPath: /usr/share/nginx/html # nginx default html path
      ports:
        - containerPort: 80
  volumes:
    - name: volv-2
      persistentVolumeClaim:
        claimName: longhorn-pvc-demo

Open a shell in the new pod and check the files:

$ kube exec -it volume-test-2 -- sh
/ # ls /usr/share/nginx/html/
index.html  lost+found
/ # cat /usr/share/nginx/html/index.html
<!DOCTYPE html>
<html>
<head>
<title>Welcome to nginx!</title>
<style>
    body {
        width: 35em;
        margin: 0 auto;
        font-family: Tahoma, Verdana, Arial, sans-serif;
    }
</style>
</head>
<body>
<h1>Hello Longhorn!</h1>
<p></p>
</body>
</html>

The Longhorn volume has been mounted again automatically, and the file written from the first pod is still there.
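
The same persistence check can be scripted without an interactive shell. This is a minimal sketch. The helper is hypothetical, and it only looks for the heading text written earlier.

```shell
# Hypothetical check: confirm the test page survived on a new pod.
verify_test_page() {
    pod="$1"
    if kubectl exec "$pod" -- cat /usr/share/nginx/html/index.html \
        | grep -q 'Hello Longhorn!'; then
        echo "data persisted"
    else
        echo "data missing" >&2
        return 1
    fi
}
```

For example, verify_test_page volume-test-2 should print data persisted.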

Clean up test resources:

$ kube delete -f longhorn-pod-2.yaml
$ kube delete svc volume-test
$ kube delete -f longhorn-pvc.yaml