1. Introduction to Maxwell

Maxwell is a MySQL real-time capture software written in Java, which is open source by Zendesk in the United States. Real time reading

MySQL binary log Binlog and generates JSON format messages, which are sent to Kafka, Kinesis

RabbitMQ, Redis, Google Cloud Pub/Sub, file or other platform applications.

Official website address: http://maxwells-daemon.io/

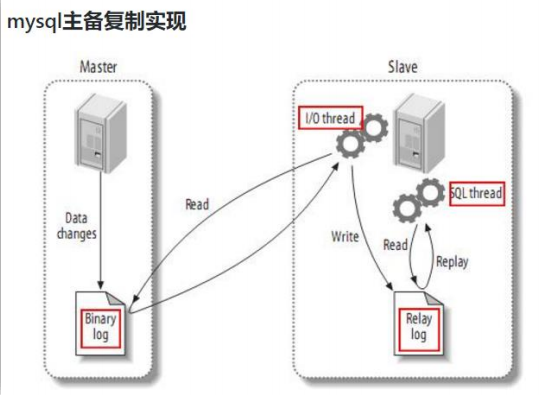

2. MySQL master-slave replication process

-

The Master master database will change the record and write it to the binary log

-

Slave sends dump protocol from the database to mysql master, and the binary log events of master's main database

Copy to its relay log;

- Slave reads and redoes the events in the relay log from the library and synchronizes the changed data to its own database.

3. Working principle of Maxwell

It's very simple to pretend to be a slave and copy data from the master

4. MySQL binlog

4.1 what is binlog

MySQL binary log can be said to be the most important log of MySQL. It records all DDL and

DML (in addition to data query statements) statements are recorded in the form of events, including the time consumed by the execution of the statements, MySQL

Binary logs are transaction safe.

Generally speaking, opening binary logs will have a performance loss of about 1%. Binary has two most important usage scenarios:

-

First, MySQL Replication starts binlog on the Master side, and the Master sends its binary log

Pass it to slaves to achieve the purpose of master slave data consistency.

-

Second: data recovery is natural. Use mysqlbinlog tool to recover data.

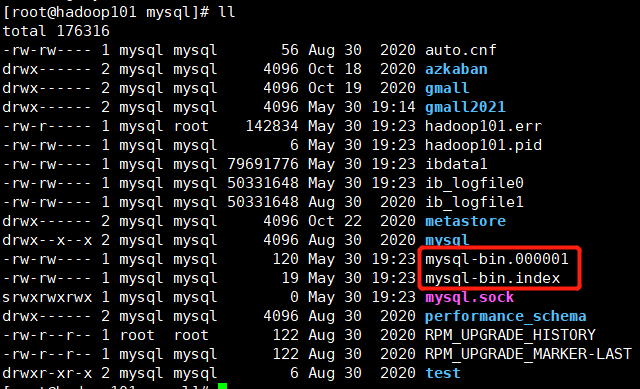

Binary log includes two types of files: binary log index file (file name suffix is. Index), which is used to record all files

Binary file, binary log file (file name suffix is. 00000 *) records all DDL and DML of the database (except

The data query statement event is.

4.2 enable binlog

Find the location of MySQL configuration file: / etc / my cnf

Under the mysql configuration file, modify the configuration

In the [mysqld] block, set / add log bin = MySQL bin

This indicates that the prefix of binlog log is mysql bin, and the log file generated later is mysql bin When the mysql file size reaches the threshold of 1236, the number of each new file is generated in the order of 456.

4.3 binlog classification settings

There are three formats of mysql binlog: state, mixed and row.

In the configuration file, you can select the configuration

binlog_format= statement|mixed|row

-

statement

At the statement level, binlog records the statements that execute write operations every time. Compared with row mode, it saves space, but may produce inconsistencies, such as

update tt set create_date=now() if the binlog log is used for recovery, the data may be different due to different execution time.

Advantages: space saving

Disadvantages: data inconsistency may be caused.

-

row

At the row level, binlog will record the changes recorded in each row after each operation.

Advantages: maintain the absolute consistency of data. Because no matter what the sql is or what function is referenced, it only records the effect after execution.

Disadvantages: it takes up a large space.

-

mixed

The upgraded version of statement solves the inconsistency of statement mode caused by some situations to a certain extent

The default is statement. In some cases, for example, when the function contains UUID(); Include auto_ When the table of the increment field is updated; When the INSERT DELAYED statement is executed; When using UDF; It will be handled in the way of ROW

Advantages: save space while taking into account a certain degree of consistency.

Disadvantages: there are still very few cases that will cause inconsistency. In addition, statement and mixed are inconvenient for the monitoring of binlog.

Based on the above comparison, Maxwell wants to do monitoring analysis, and the row format is more appropriate

5. Preparation of MySQL

5.1 create real-time business database

Database name: gmal2021

url: 192.168.88.71:3306/gmall2021

username: root passwo: 123456

Import data: https://github.com/zhangbaohpu/gmall-mock/blob/master/data/gmall.sql

5.2 start binlog

vim /etc/my.cnf

server-id= 1 log-bin=mysql-bin binlog_format=row #Specify the database to synchronize binlog-do-db=gmall2021

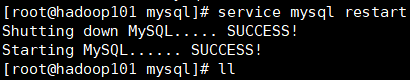

Restart the mysql service and view the initialization file

service mysql restart

5.3 simulation generated data

Configuration file application properties

logging.level.root=info spring.datasource.driver-class-name=com.mysql.jdbc.Driver spring.datasource.url=jdbc:mysql://192.168.88.71:3306/gmall2021?characterEncoding=utf-8&useSSL=false&serverTimezone=GMT%2B8 spring.datasource.username=root spring.datasource.password=123456 logging.pattern.console=%m%n mybatis-plus.global-config.db-config.field-strategy=not_null #Business date mock.date=2021-05-30 #Reset mock.clear=1 #Reset user mock.clear.user=0 #Number of new users generated mock.user.count=1000 #Male ratio mock.user.male-rate=20 #User data change probability mock.user.update-rate:20 #Collection cancellation ratio mock.favor.cancel-rate=10 #Collection quantity mock.favor.count=100 #Number of shopping carts mock.cart.count=300 #Maximum number of purchases per item mock.cart.sku-maxcount-per-cart=3 #Shopping cart source, user query, commodity promotion, intelligent recommendation, promotional activities mock.cart.source-type-rate=60:20:10:10 #Proportion of orders placed by users mock.order.user-rate=95 #Proportion of goods purchased by users from shopping mock.order.sku-rate=70 #Whether to participate in the activity mock.order.join-activity=1 #Whether to use shopping vouchers mock.order.use-coupon=1 #Number of people receiving shopping vouchers mock.coupon.user-count=1000 #Payment proportion mock.payment.rate=70 #Payment method: Alipay: WeChat: UnionPay mock.payment.payment-type=30:60:10 #Evaluation proportion: Good: medium: Poor: automatic mock.comment.appraise-rate=30:10:10:50 #Refund reason proportion: quality problem, the description of the goods is inconsistent with the actual description, the out of stock number is inappropriate, the shooting is wrong, and I don't want to buy other products mock.refund.reason-rate=30:10:20:5:15:5:5

Package mock DB module into jar

https://github.com/zhangbaohpu/gmall-mock.git

Upload the and configuration files to / opt/software/applog directory of Hadoop 101, and run jar

java -jar mock-db-0.0.1-SNAPSHOT.jar

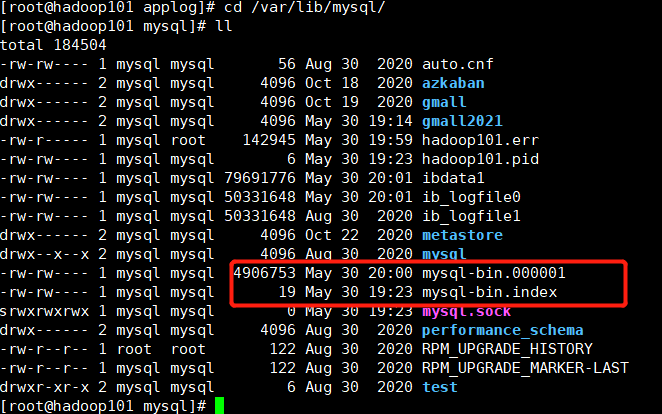

Go to the / var/lib/mysql directory again and check that the size of the index file has increased

6. Maxwell installation

6.1 installation

-

Download and unzip

Download address: http://maxwells-daemon.io/

tar -zxvf maxwell-1.25.0.tar.gz -C /opt/module/

-

Initialize maxwell metadata

-

Create database

Build a Maxwell Library in MySQL to store Maxwell metadata

mysql -uroot -p123456

mysql> create database maxwell;

-

Set security level

If the execution is wrong, see: https://www.cnblogs.com/blog0403/p/14790397.html

mysql> set global validate_password_length=4;

mysql> set global validate_password_policy=0;

-

Assign an account to operate the database

mysql> GRANT ALL ON maxwell.* TO 'maxwell'@'%' IDENTIFIED BY '123456';

Database name: maxwell account number: maxwell password: 123456

-

Assigning this account can monitor the permissions of other databases

mysql> GRANT SELECT ,REPLICATION SLAVE , REPLICATION CLIENT ON *.* TO maxwell@'%';

-

6.2 configuration

Copy and modify configuration files

cd /opt/module/maxwell-1.25.0

cp config.properties.example config.properties

vim config.properties

# tl;dr config log_level=info producer=kafka kafka.bootstrap.servers=hadoop101:9092,hadoop102:9092,hadoop103:9092 #Add content kafka_topic=ods_base_db_m # mysql login info #mysql machine host=hadoop101 #maxwell collection user user=maxwell password=123456 #To synchronize historical data client_id=maxwell_1

Note: by default, it is still output to a Kafka partition of the specified Kafka theme, because multiple partitions may disrupt the order of binlog. If you want to improve the parallelism, first set the number of partitions of Kafka > 1, and then set producer_partition_by attribute, optional value producer_partition_by=database|table|primary_key|random| column

6.3 startup

Create a startup script in / home/zhangbao/bin

vim maxwell.sh

/opt/module/maxwell-1.25.0/bin/maxwell --config /opt/module/maxwell-1.25.0/config.properties >/dev/null 2>&1 &

maxwell started

7. Monitor binglog

maxwell service has been started. At this time, as long as there are data changes in the monitored database, maxwell can monitor the changed data and send it to kafka's topic: ods_base_db_m.

7.1 start kafka consumer

cd /opt/module/kafka/bin

./kafka-console-consumer.sh --zookeeper hadoop101:2181,hadoop102:2181,hadoop103:2181 --topic ods_base_db_m

7.2 start importing data into the database

To import data items into database gmal2021: https://github.com/zhangbaohpu/gmall-mock , module mock DB

There is a jar package after packaging: mock-db-0.0.1-snapshot Jar, configuration file application Properties are as follows:

logging.level.root=info spring.datasource.driver-class-name=com.mysql.jdbc.Driver spring.datasource.url=jdbc:mysql://192.168.88.71:3306/gmall2021?characterEncoding=utf-8&useSSL=false&serverTimezone=GMT%2B8 spring.datasource.username=root spring.datasource.password=123456 logging.pattern.console=%m%n mybatis-plus.global-config.db-config.field-strategy=not_null #Business date mock.date=2021-06-03 #Reset mock.clear=1 #Reset user mock.clear.user=1 #Number of new users generated mock.user.count=1000 #Male ratio mock.user.male-rate=20 #User data change probability mock.user.update-rate:20 #Collection cancellation ratio mock.favor.cancel-rate=10 #Collection quantity mock.favor.count=100 #Number of shopping carts mock.cart.count=300 #Maximum number of purchases per item mock.cart.sku-maxcount-per-cart=3 #Shopping cart source, user query, commodity promotion, intelligent recommendation, promotional activities mock.cart.source-type-rate=60:20:10:10 #Proportion of orders placed by users mock.order.user-rate=95 #Proportion of goods purchased by users from shopping mock.order.sku-rate=70 #Whether to participate in the activity mock.order.join-activity=1 #Whether to use shopping vouchers mock.order.use-coupon=1 #Number of people receiving shopping vouchers mock.coupon.user-count=1000 #Payment proportion mock.payment.rate=70 #Payment method: Alipay: WeChat: UnionPay mock.payment.payment-type=30:60:10 #Evaluation proportion: Good: medium: Poor: automatic mock.comment.appraise-rate=30:10:10:50 #Refund reason proportion: quality problem, the description of the goods is inconsistent with the actual description, the out of stock number is inappropriate, the shooting is wrong, and I don't want to buy other products mock.refund.reason-rate=30:10:20:5:15:5:5

Remember: the jar package and the configuration file are in the same directory, which is convenient for modifying the configuration

Start jar

cd /opt/software/applog

java -jar mock-db-0.0.1-SNAPSHOT.jar

7.3 kafka receiving data

Data received at kafka consumer

{

"database": "gmall2021",

"table": "comment_info",

"type": "insert",

"ts": 1622733351,

"xid": 84599,

"xoffset": 16492,

"data": {

"id": 1400471299563253795,

"user_id": 125,

"nick_name": null,

"head_img": null,

"sku_id": 16,

"spu_id": 4,

"order_id": 27460,

"appraise": "1204",

"comment_txt": "Comment content: 24835156952973466131469453289274897",

"create_time": "2021-06-03 23:15:51",

"operate_time": null

}

}

The ods layer of this data warehouse has been completed, including log data and business data