- OpenCV 3.4.6 installation package (including contrib): https://pan.baidu.com/s/1KBD-fAO63p0s5ANYa5XcEQ Extraction code: p7j0

- Resources: https://pan.baidu.com/s/1nkQ6iVV7IeeP4gTXvM_DyQ Extraction code: ypvt

Chapter 1 Reading images / videos / cameras

Reading an image from a file

| Module | Function |
|---|---|
| imgcodecs | Image file reading and writing |
| imgproc | Image processing |
| highgui | High-level GUI |

- Mat cv::imread(const String &filename, int flags = IMREAD_COLOR)

Loads an image from a file. The function imread loads an image from the specified file and returns it. If the image cannot be read (because of a missing file, improper permissions, or an unsupported or invalid format), the function returns an empty matrix (Mat::data == NULL). For color images, the channels of the decoded image are stored in BGR order.

- void cv::imshow(const String &winname, InputArray mat)

Displays an image in the specified window. This function should be followed by a call to cv::waitKey, which processes GUI events and waits for the specified number of milliseconds; otherwise the image will not be displayed. For example, waitKey(0) waits indefinitely until a key is pressed (suitable for showing a still image), while waitKey(25) waits about 25 milliseconds and then returns. Inside a loop that reads a video, this shows the video frame by frame, with each frame on screen for about 25 ms.

- int cv::waitKey(int delay = 0)

Waits for a pressed key. The function waitKey waits for a key event indefinitely (when delay ≤ 0) or for delay milliseconds when it is positive. Since the operating system has a minimum time between switching threads, the function will not wait exactly delay milliseconds; it waits at least delay milliseconds, depending on what else is running on your computer at that time. It returns the code of the pressed key, or -1 if no key was pressed before the specified time elapsed.
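Because `waitKey` returns the code of the pressed key, or -1 when the delay runs out, a display loop can inspect that value to decide when to stop. The helper below is a hypothetical sketch (`isQuitKey` is not an OpenCV function) that treats Esc (code 27) or `q` as a quit request; masking with `0xFF` is only a defensive habit and is harmless when the value is already a plain character code.

```cpp
// Hypothetical helper (not part of OpenCV): interprets the value returned by cv::waitKey().
bool isQuitKey(int key) {
    if (key == -1) return false;   // -1: the delay elapsed without a key press
    int code = key & 0xFF;         // keep only the low byte, in case extra bits are set
    return code == 27 || code == 'q' || code == 'Q';  // Esc, q or Q
}
```

In a display loop it would be used as `if (isQuitKey(waitKey(1))) break;`.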

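```cpp
#include <opencv2/imgcodecs.hpp>
#include <opencv2/highgui.hpp>
#include <opencv2/imgproc.hpp>
#include <iostream>

using namespace cv;
using namespace std;

int main()
{
    string path = "Resources/test.png";
    Mat img = imread(path);
    if (img.empty()) { // imread returns an empty Mat if the file cannot be read
        cout << "Could not read " << path << endl;
        return -1;
    }
    imshow("Image", img);
    waitKey(0); // Wait for a key press so the window does not just flash by
    return 0;
}
```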

Reading a video from a file

To capture video, you need to create a VideoCapture object. Its argument can be either the name of a video file or a device index.

Constructors and member functions of the VideoCapture class in OpenCV 3.4.6

- cv::VideoCapture::VideoCapture()

- cv::VideoCapture::VideoCapture(const String &filename)

- cv::VideoCapture::VideoCapture(const String &filename, int apiPreference)

- cv::VideoCapture::VideoCapture(int index)

- cv::VideoCapture::VideoCapture(int index, int apiPreference)

Opens a video file, a capture device, or an IP video stream for video capturing.

- virtual bool cv::VideoCapture::isOpened() const

Returns true if video capturing has been initialized already, i.e. if the previous call to the VideoCapture constructor or VideoCapture::open() succeeded.

- virtual bool cv::VideoCapture::read(OutputArray image)

Grabs, decodes and returns the next video frame. Returns false if no frame could be grabbed (for example, at the end of a video file); the output image is then empty.

- virtual double cv::VideoCapture::get(int propId) const

Returns the specified VideoCapture property.

- virtual bool cv::VideoCapture::set(int propId, double value)

Sets a property in the VideoCapture.

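```cpp
#include <opencv2/imgcodecs.hpp>
#include <opencv2/highgui.hpp>
#include <opencv2/imgproc.hpp>
#include <opencv2/videoio.hpp>
#include <iostream>

using namespace cv;
using namespace std;

int main()
{
    string path = "Resources/test_video.mp4";
    VideoCapture cap(path); // Video capture object
    if (!cap.isOpened()) {
        cout << "Could not open " << path << endl;
        return -1;
    }
    Mat img;
    // read() returns false once no more frames can be grabbed (end of the file)
    while (cap.read(img)) {
        imshow("Image", img);
        waitKey(1);
    }
    return 0;
}
```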

Reading from a camera

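```cpp
#include <opencv2/imgcodecs.hpp>
#include <opencv2/highgui.hpp>
#include <opencv2/imgproc.hpp>
#include <opencv2/videoio.hpp>
#include <iostream>

using namespace cv;
using namespace std;

int main()
{
    VideoCapture cap(0); // Device index 0 = default camera
    if (!cap.isOpened()) {
        cout << "Could not open camera 0" << endl;
        return -1;
    }
    Mat img;
    while (cap.read(img)) {
        imshow("Image", img);
        if (waitKey(1) == 27) break; // Press Esc to quit
    }
    return 0;
}
```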

Chapter 2 Basic functions

- void cv::cvtColor(InputArray src, OutputArray dst, int code, int dstCn = 0)

Converts an image from one color space to another. In the case of a transformation to or from the RGB color space, the order of the channels (RGB or BGR) must be specified explicitly. Note that the default color format in OpenCV is often referred to as RGB, but it is actually BGR (the bytes are reversed). So the first byte in a standard (24-bit) color image is an 8-bit blue component, the second byte is green, and the third byte is red. The fourth, fifth, and sixth bytes then form the second pixel (blue, then green, then red), and so on.
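To make the byte layout concrete, here is a minimal plain-C++ sketch (no OpenCV involved; `bgrOffset` and `grayAt` are hypothetical names) that indexes an interleaved BGR buffer and converts one pixel to gray with the weights the cvtColor documentation gives for RGB/BGR to GRAY (Y = 0.299 R + 0.587 G + 0.114 B). OpenCV itself uses a fixed-point version of this formula, so its results can differ from this floating-point sketch by one gray level.

```cpp
#include <cmath>
#include <cstddef>
#include <cstdint>
#include <vector>

// Hypothetical helper: byte offset of channel ch (0 = B, 1 = G, 2 = R) of pixel (row, col)
// in a tightly packed 24-bit BGR image with `cols` pixels per row.
std::size_t bgrOffset(std::size_t row, std::size_t col, std::size_t cols, std::size_t ch) {
    return (row * cols + col) * 3 + ch;
}

// Hypothetical helper: gray value of one pixel using the BT.601 luma weights.
std::uint8_t grayAt(const std::vector<std::uint8_t>& bgr, std::size_t row, std::size_t col,
                    std::size_t cols) {
    double b = bgr[bgrOffset(row, col, cols, 0)];  // first byte: blue
    double g = bgr[bgrOffset(row, col, cols, 1)];  // second byte: green
    double r = bgr[bgrOffset(row, col, cols, 2)];  // third byte: red
    return static_cast<std::uint8_t>(std::lround(0.299 * r + 0.587 * g + 0.114 * b));
}
```

For a 1x2 image whose bytes are `255, 0, 0, 0, 0, 255`, the first pixel is pure blue and the second pure red, even though the bytes read "RGB-like" at first glance.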

- void cv::GaussianBlur(InputArray src, OutputArray dst, Size ksize, double sigmaX, double sigmaY = 0, int borderType = BORDER_DEFAULT)

Blurs an image using a Gaussian filter. The function convolves the source image with the specified Gaussian kernel.

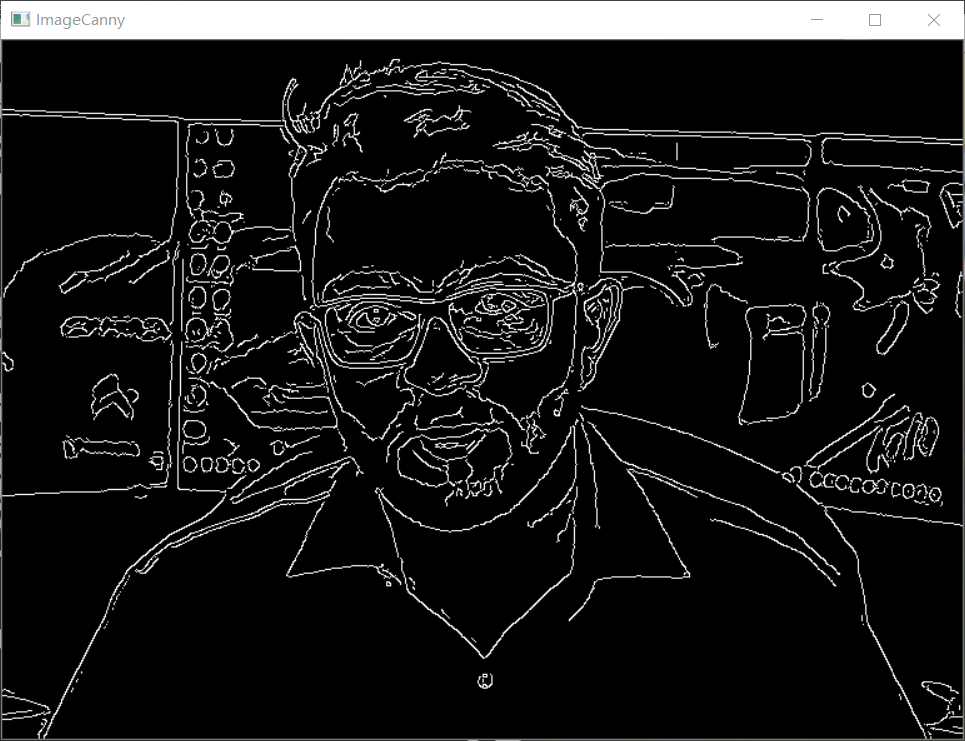
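GaussianBlur is separable: rows are filtered with a 1-D kernel built from sigmaX and columns with one built from sigmaY (sigmaY = 0 means "same as sigmaX"; if both are 0 they are derived from ksize). The sketch below computes such 1-D weights for an odd ksize and sigma > 0 using the formula from the getGaussianKernel documentation; `gaussianWeights` is a hypothetical name, and the case sigma <= 0 is deliberately not handled.

```cpp
#include <cmath>
#include <vector>

// Hypothetical helper: normalised 1-D Gaussian weights for an odd ksize and sigma > 0.
// w_i is proportional to exp(-(i - (ksize - 1) / 2)^2 / (2 * sigma^2)).
std::vector<double> gaussianWeights(int ksize, double sigma) {
    std::vector<double> w(ksize);
    double center = (ksize - 1) / 2.0, sum = 0.0;
    for (int i = 0; i < ksize; ++i) {
        double d = i - center;
        w[i] = std::exp(-(d * d) / (2.0 * sigma * sigma));
        sum += w[i];
    }
    for (double& v : w) v /= sum;  // normalise so the weights add up to 1
    return w;
}
```

For ksize = 3 and sigma = 3 (the values used in the Chapter 2 code) the weights come out at roughly 0.33, 0.35, 0.33, so that blur behaves almost like a 3x3 box filter.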

- void cv::Canny(InputArray image, OutputArray edges, double threshold1, double threshold2, int apertureSize = 3, bool L2gradient = false)

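Finds edges in an image using the Canny algorithm. threshold1 and threshold2 are the two hysteresis thresholds: the smaller one is used for edge linking, and the larger one is used to find the initial segments of strong edges.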
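Canny works in stages: Sobel gradients (of size apertureSize), non-maximum suppression to thin them, then hysteresis with the two thresholds. The plain-C++ sketch below shows only the hysteresis stage on an already thinned magnitude grid; `hysteresis` is a hypothetical helper, not OpenCV's implementation. Pixels above the high threshold seed edges, and pixels above the low threshold survive only if they are 8-connected to a seed.

```cpp
#include <queue>
#include <utility>
#include <vector>

// Hypothetical helper: hysteresis step only. `mag` holds gradient magnitudes, row-major.
// Returns 255 for edge pixels and 0 elsewhere.
std::vector<unsigned char> hysteresis(const std::vector<double>& mag, int rows, int cols,
                                      double low, double high) {
    std::vector<unsigned char> edges(mag.size(), 0);
    std::queue<std::pair<int, int>> frontier;
    for (int r = 0; r < rows; ++r)
        for (int c = 0; c < cols; ++c)
            if (mag[r * cols + c] > high) {         // strong pixel: seed an edge
                edges[r * cols + c] = 255;
                frontier.push(std::make_pair(r, c));
            }
    while (!frontier.empty()) {                     // grow edges into weak neighbours
        std::pair<int, int> p = frontier.front();
        frontier.pop();
        for (int dr = -1; dr <= 1; ++dr)
            for (int dc = -1; dc <= 1; ++dc) {
                int nr = p.first + dr, nc = p.second + dc;
                if (nr < 0 || nr >= rows || nc < 0 || nc >= cols) continue;
                int i = nr * cols + nc;
                if (edges[i] == 0 && mag[i] > low) {  // weak pixel touching an edge
                    edges[i] = 255;
                    frontier.push(std::make_pair(nr, nc));
                }
            }
    }
    return edges;
}
```

With the thresholds 25 and 75 from the Chapter 2 code, a weak value of 30 is kept next to a strong value of 100 but dropped when it stands alone.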

- Mat cv::getStructuringElement(int shape, Size ksize, Point anchor = Point(-1, -1))

Returns a structuring element of the specified size and shape for morphological operations. The function constructs and returns a structuring element that can be passed to erode, dilate or morphologyEx. You can also construct an arbitrary binary mask yourself and use it as the structuring element.

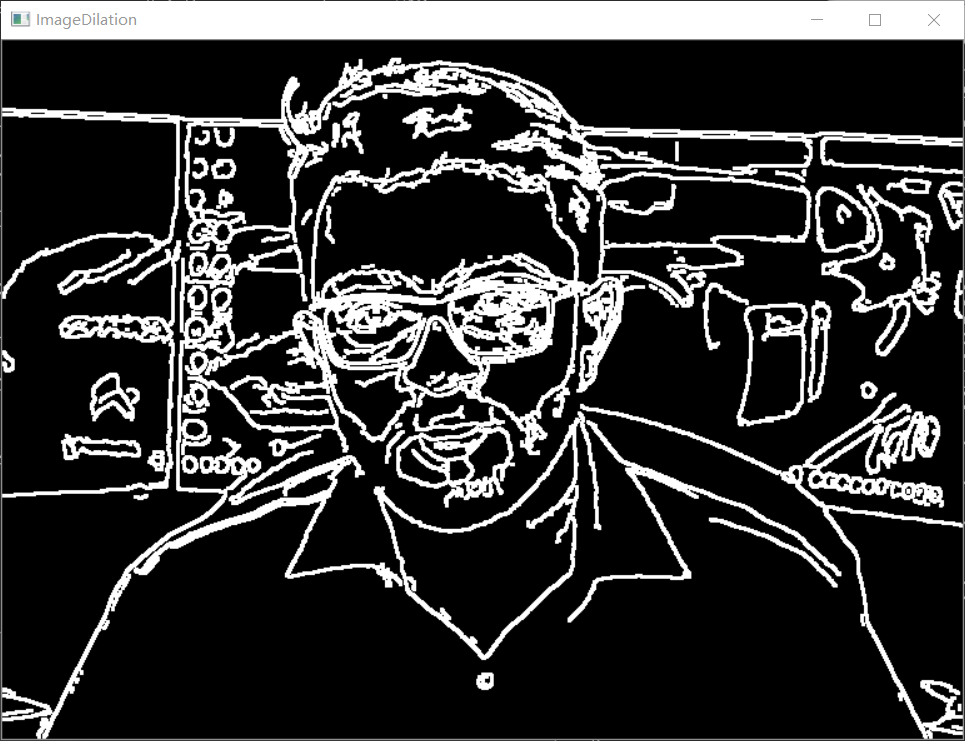

- void cv::dilate(InputArray src, OutputArray dst, InuputArray kernel, Point anchor = Point(-1, -1), int iterations = 1, int borderType = BORDER_CONSTANT, const Scalar &borderValue = morphologyDefaultBorderValue())

Inflate the image with specific structural elements.

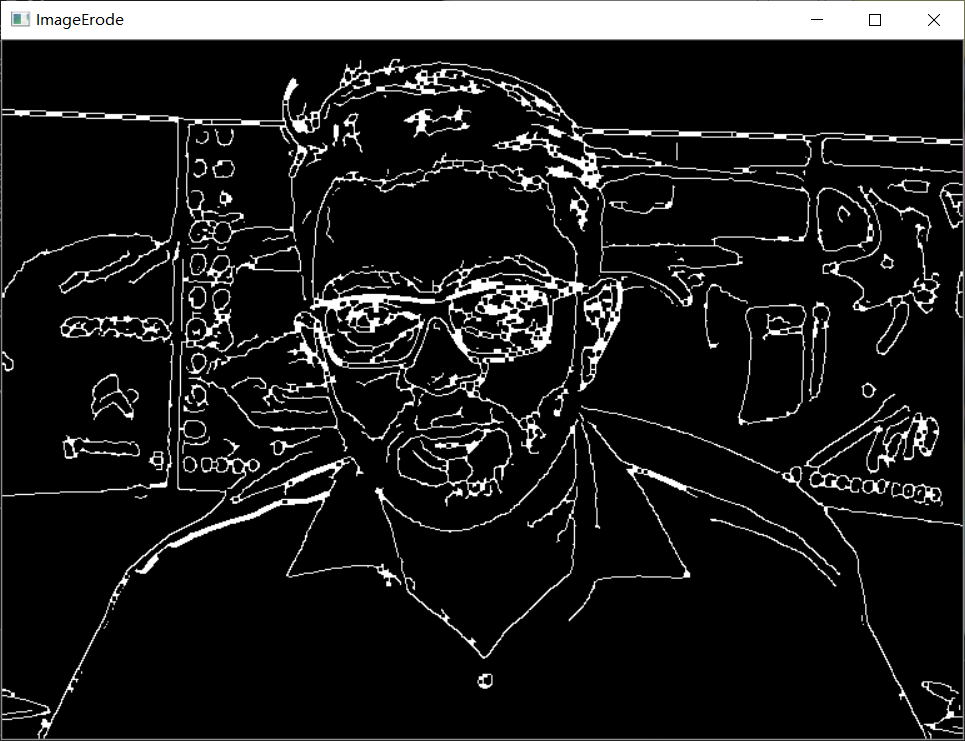

- void cv::erode(InputArray src, OutputArray dst, InuputArray kernel, Point anchor = Point(-1, -1), int iterations = 1, int borderType = BORDER_CONSTANT, const Scalar &borderValue = morphologyDefaultBorderValue())

Corrosion images using specific structural elements.
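For a binary image, dilation with a rectangular structuring element takes the maximum over each pixel's neighbourhood and erosion takes the minimum. The plain-C++ sketch below (hypothetical `morph3x3`, fixed 3x3 rectangle, similar in spirit to `getStructuringElement(MORPH_RECT, Size(3, 3))`) ignores neighbours outside the image, which is the intended effect of OpenCV's special default border value for these two operations.

```cpp
#include <vector>

using Grid = std::vector<std::vector<int>>;

// Hypothetical helper: 3x3 rectangular dilation (max) or erosion (min).
// Neighbours outside the image are ignored.
Grid morph3x3(const Grid& src, bool dilate) {
    int rows = static_cast<int>(src.size()), cols = static_cast<int>(src[0].size());
    Grid dst(rows, std::vector<int>(cols, 0));
    for (int r = 0; r < rows; ++r)
        for (int c = 0; c < cols; ++c) {
            int v = src[r][c];
            for (int dr = -1; dr <= 1; ++dr)
                for (int dc = -1; dc <= 1; ++dc) {
                    int nr = r + dr, nc = c + dc;
                    if (nr < 0 || nr >= rows || nc < 0 || nc >= cols) continue;
                    int n = src[nr][nc];
                    v = dilate ? (n > v ? n : v) : (n < v ? n : v);
                }
            dst[r][c] = v;
        }
    return dst;
}

// Hypothetical helper: number of non-zero cells.
int nonZeroCount(const Grid& g) {
    int n = 0;
    for (const auto& row : g)
        for (int v : row)
            if (v != 0) ++n;
    return n;
}
```

Dilating and then eroding with the same kernel, as the Chapter 2 code does, is a morphological closing: it bridges small gaps in the Canny edges without leaving them permanently thicker.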

```cpp
#include <opencv2/imgcodecs.hpp>
#include <opencv2/highgui.hpp>
#include <opencv2/imgproc.hpp>
#include <iostream>

using namespace cv;
using namespace std;

int main()
{
    string path = "resources/test.png";
    Mat img = imread(path);
    Mat imgGray, imgBlur, imgCanny, imgDil, imgErode;

    cvtColor(img, imgGray, COLOR_BGR2GRAY);       // Grayscale
    GaussianBlur(img, imgBlur, Size(3, 3), 3, 0); // Gaussian blur
    Canny(imgBlur, imgCanny, 25, 75);             // Edge detection

    Mat kernel = getStructuringElement(MORPH_RECT, Size(3, 3));
    dilate(imgCanny, imgDil, kernel);
    erode(imgDil, imgErode, kernel);

    imshow("Image", img);
    imshow("ImageGray", imgGray);
    imshow("ImageBlur", imgBlur);
    imshow("ImageCanny", imgCanny);
    imshow("ImageDilation", imgDil);
    imshow("ImageErode", imgErode);
    waitKey(0);
    return 0;
}
```

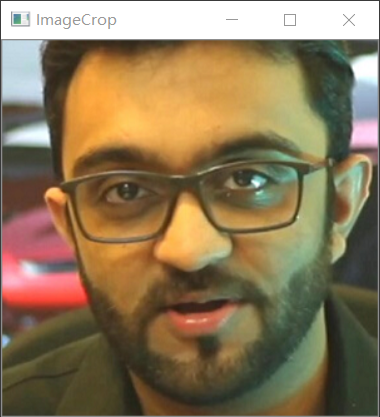

Chapter 3 Resizing and cropping

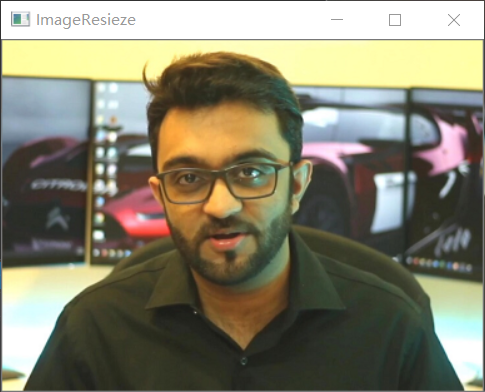

- void cv::resize(InputArray src, OutputArray dst, Size dsize, double fx=0, double fy=0, int interpolation = INTER_LINEAR)

Resizes an image. The function resize resizes the image src down to or up to the specified size. Note that the initial dst type or size is not taken into account; instead, the size and type are derived from src, dsize, fx, and fy.
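The size rule above can be written out as a small plain-C++ function. `Size2` and `resizeOutputSize` are hypothetical stand-ins (not OpenCV types), and the rounding only approximates what OpenCV does; the documentation writes it as `Size(round(fx*src.cols), round(fy*src.rows))`.

```cpp
#include <cmath>
#include <stdexcept>

// Hypothetical stand-in for cv::Size.
struct Size2 { int width, height; };

// Hypothetical helper: a non-empty dsize is used as is; otherwise the output size
// is derived from the scale factors fx and fy and rounded to the nearest integer.
Size2 resizeOutputSize(Size2 src, Size2 dsize, double fx, double fy) {
    if (dsize.width > 0 && dsize.height > 0) return dsize;
    if (fx <= 0 || fy <= 0) throw std::invalid_argument("need a non-empty dsize or positive fx, fy");
    return { static_cast<int>(std::lround(fx * src.width)),
             static_cast<int>(std::lround(fy * src.height)) };
}
```

This is why the Chapter 3 code passes an empty `Size()` together with `0.5, 0.5`: the empty size tells resize to use the scale factors instead.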

```cpp
#include <opencv2/imgcodecs.hpp>
#include <opencv2/highgui.hpp>
#include <opencv2/imgproc.hpp>
#include <iostream>

using namespace cv;
using namespace std;

int main()
{
    string path = "resources/test.png";
    Mat img = imread(path);
    Mat imgResize, imgCrop;

    cout << img.size() << endl;               // Prints [width x height]
    resize(img, imgResize, Size(), 0.5, 0.5); // Scale both axes by 0.5

    Rect roi(200, 100, 300, 300); // x, y, width, height: needs an image of at least 500 x 400
    imgCrop = img(roi);           // The crop shares pixel data with img (no copy)

    imshow("Image", img);
    imshow("ImageResize", imgResize);
    imshow("ImageCrop", imgCrop);
    waitKey(0);
    return 0;
}
```

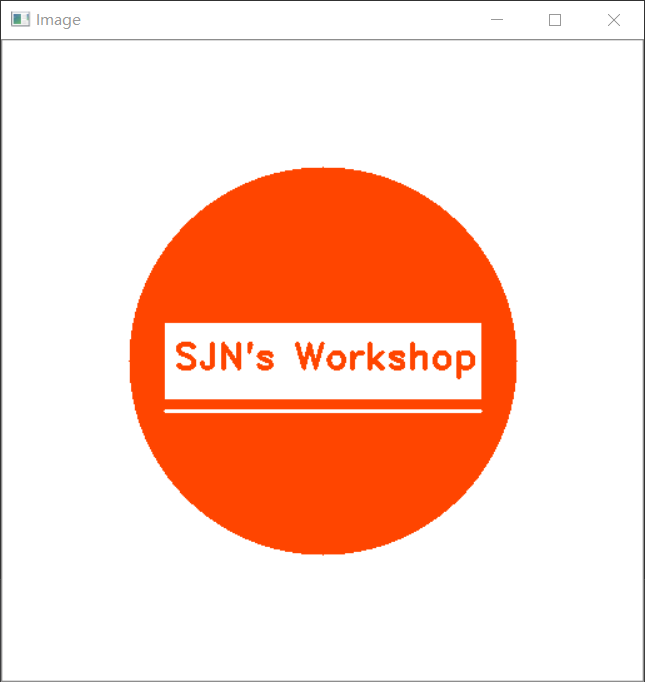

Chapter 4 Drawing shapes and text

- Mat(int rows, int cols, int type, const Scalar &s)

Overloaded constructor: creates a rows x cols matrix of the given type and sets every element to s.

- void cv::circle(InputOutputArray img, Point center, int radius, const Scalar &color, int thickness=1, int lineType=LINE_8, int shift=0)

The function cv::circle draws a simple or filled circle with a given center and radius.

- void cv::rectangle(InputOutputArray img, Point pt1, Point pt2, const Scalar &color, int thickness=1, int lineType=LINE_8, int shift=0)

- void cv::rectangle(Mat &img, Rect rec, const Scalar &color, int thickness=1, int lineType=LINE_8, int shift=0)

Draws a simple, thick, or filled up-right rectangle. The function cv::rectangle draws a rectangle outline or a filled rectangle whose two opposite corners are pt1 and pt2.

- void cv::line (InputOutputArray img, Point pt1, Point pt2, const Scalar &color, int thickness=1, int lineType=LINE_8, int shift=0)

Draw a line segment connecting two points. The line function draws the line segment between pt1 and pt2 points in the image.

- void cv::putText (InputOutputArray img, const String &text, Point org, int fontFace, double fontScale, Scalar color, int thickness=1, int lineType=LINE_8, bool bottomLeftOrigin=false)

Draw a text string. The function cv::putText renders the specified text string in the image. Symbols that cannot be rendered with the specified font will be replaced with question marks.

```cpp
#include <opencv2/imgcodecs.hpp>
#include <opencv2/highgui.hpp>
#include <opencv2/imgproc.hpp>
#include <iostream>

using namespace cv;
using namespace std;

int main()
{
    // Blank Image
    Mat img(512, 512, CV_8UC3, Scalar(255, 255, 255));

    circle(img, Point(256, 256), 155, Scalar(0, 69, 255), FILLED);
    rectangle(img, Point(130, 226), Point(382, 286), Scalar(255, 255, 255), -1);
    line(img, Point(130, 296), Point(382, 296), Scalar(255, 255, 255), 2);
    putText(img, "SJN's Workshop", Point(137, 262), FONT_HERSHEY_DUPLEX, 0.95, Scalar(0, 69, 255), 2);

    imshow("Image", img);
    waitKey(0);
    return 0;
}
```

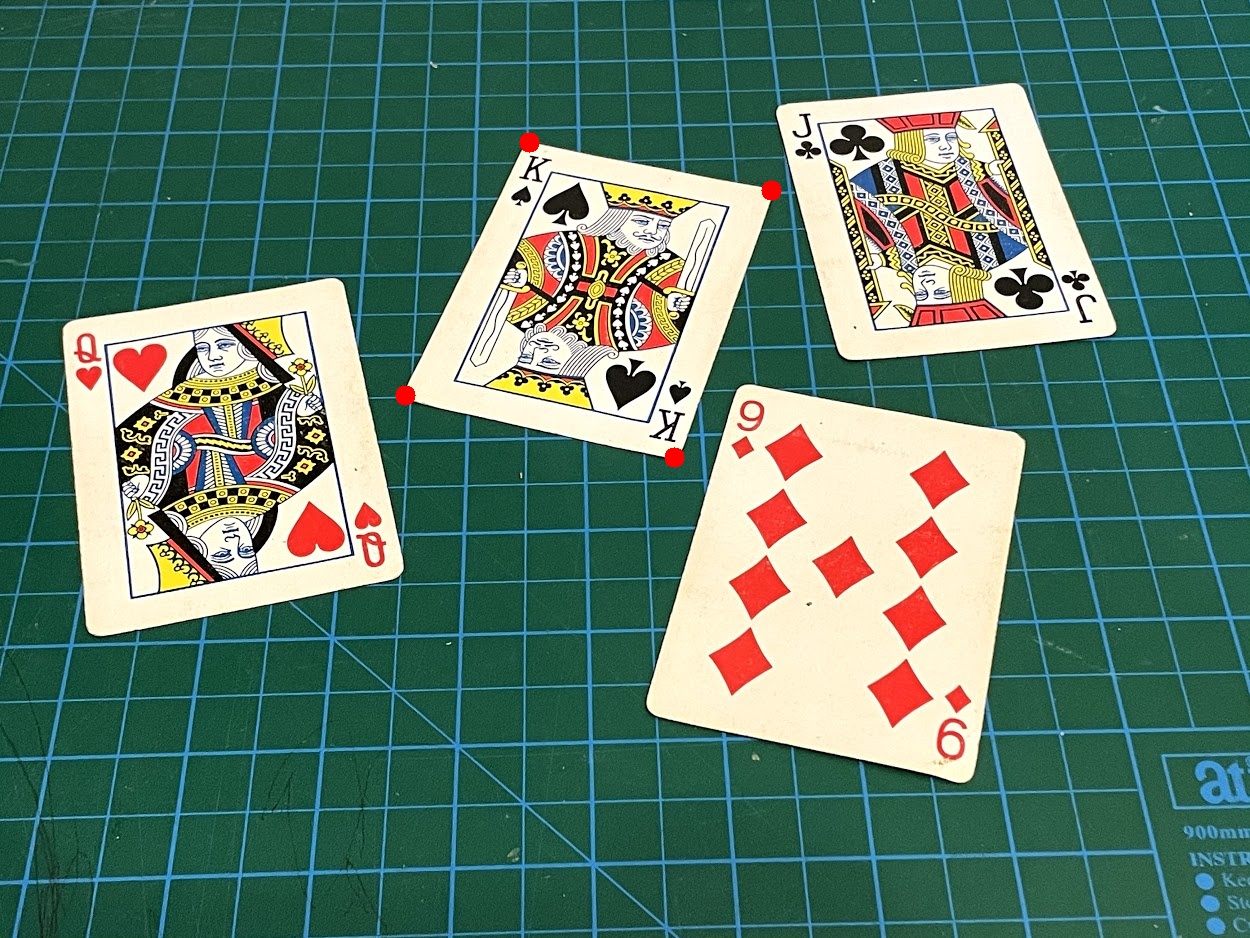

Chapter 5 Perspective transformation

- Mat cv::getPerspectiveTransform(const Point2f src[], const Point2f dst[])

Calculates a perspective transform from four pairs of corresponding points and returns it as a 3x3 matrix.

- void cv::warpPerspective(InputArray src, OutputArray dst, InputArray M, Size dsize, int flags=INTER_LINEAR, int borderMode=BORDER_CONSTANT, const Scalar &borderValue=Scalar())

Applies a perspective transformation to an image.
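The 3x3 matrix works in homogeneous coordinates: a source point (x, y) is multiplied as (x, y, 1) and the result is divided by its third component. The plain-C++ sketch below (hypothetical `applyHomography`, no OpenCV) maps a single point this way. warpPerspective uses the same matrix, but internally it inverts it and, for every destination pixel, looks up the corresponding source location.

```cpp
#include <array>

struct Pt2 { double x, y; };
using Mat3 = std::array<std::array<double, 3>, 3>;

// Hypothetical helper: [xs, ys, w]^T = M * [x, y, 1]^T, result = (xs / w, ys / w).
// Points that map to w == 0 (infinity) are not handled in this sketch.
Pt2 applyHomography(const Mat3& M, Pt2 p) {
    double xs = M[0][0] * p.x + M[0][1] * p.y + M[0][2];
    double ys = M[1][0] * p.x + M[1][1] * p.y + M[1][2];
    double w  = M[2][0] * p.x + M[2][1] * p.y + M[2][2];
    return { xs / w, ys / w };
}
```

A pure scaling matrix leaves the third row as (0, 0, 1); it is the non-zero entries in that bottom row that produce the "perspective" foreshortening.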

```cpp
#include <opencv2/imgcodecs.hpp>
#include <opencv2/highgui.hpp>
#include <opencv2/imgproc.hpp>
#include <iostream>

using namespace cv;
using namespace std;

float w = 250, h = 350;
Mat matrix, imgWarp;

int main()
{
    string path = "Resources/cards.jpg";
    Mat img = imread(path);

    // Card corners in the source image: top-left, top-right, bottom-left, bottom-right
    Point2f src[4] = { {529, 142}, {771, 190}, {405, 395}, {674, 457} };
    // Where those corners should land in the output image
    Point2f dst[4] = { {0.0f, 0.0f}, {w, 0.0f}, {0.0f, h}, {w, h} };

    matrix = getPerspectiveTransform(src, dst);
    warpPerspective(img, imgWarp, matrix, Size(w, h)); // dsize is a Size

    for (int i = 0; i < 4; i++) {
        circle(img, src[i], 10, Scalar(0, 0, 255), FILLED); // Mark the source corners
    }

    imshow("Image", img);
    imshow("ImageWarp", imgWarp);
    waitKey(0);
    return 0;
}
```

Note: this transformation technique is used in document scanning.

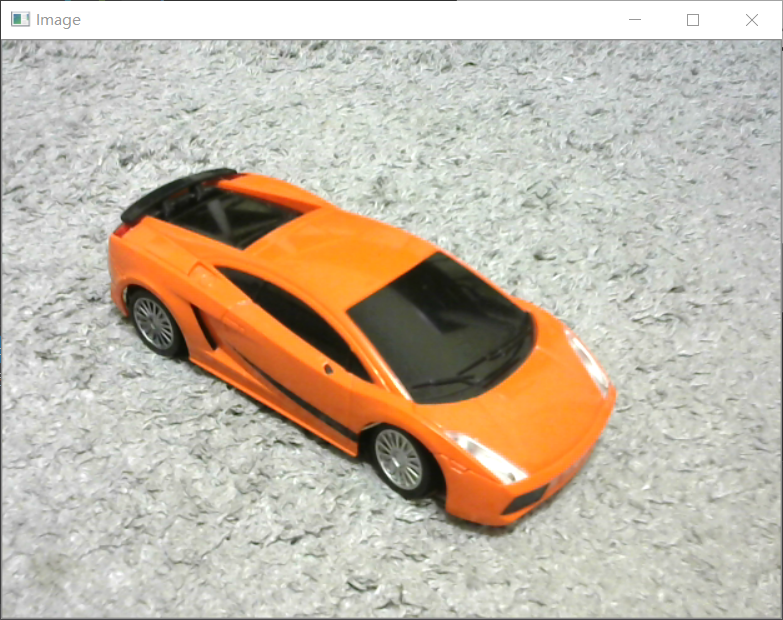

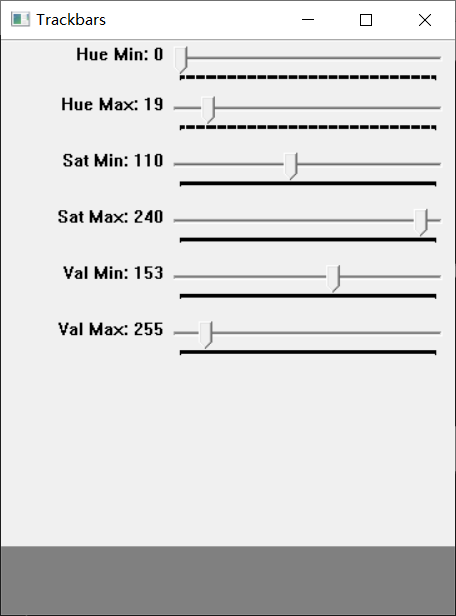

Chapter 6 Color detection

- void cv::inRange(InputArray src, InputArray lowerb, InputArray upperb, OutputArray dst)

Checks whether array elements lie between the elements of two other arrays (the lower and upper bounds, both inclusive).

- void cv::namedWindow(const String &winname, int flags = WINDOW_AUTOSIZE)

Creates a window. The function namedWindow creates a window that can be used as a placeholder for images and trackbars. Created windows are referred to by their names. If a window with the same name already exists, the function does nothing.

- int cv::createTrackbar(const String &trackbarname, const String &winname, int *value, int count, TrackbarCallback onChange = 0, void *userdata = 0)

Creates a trackbar and attaches it to the specified window. The function createTrackbar creates a trackbar (a slider or range control) with the specified name and range, assigns the variable value to be the position synchronized with the trackbar, and specifies the callback function onChange to be called when the trackbar position changes. The created trackbar is displayed in the specified window winname.
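Per pixel, inRange is a simple inclusive range test on every channel. The plain-C++ sketch below (hypothetical `inRangePixel`, not OpenCV code) spells that out for one HSV pixel, using the default thresholds from the Chapter 6 code. Keep in mind that for 8-bit images OpenCV stores hue as 0 to 179, so red, which wraps around 0, usually needs two inRange calls whose masks are combined with bitwise_or.

```cpp
#include <array>

// H in [0, 179], S and V in [0, 255] for 8-bit images.
using HSV = std::array<int, 3>;

// Hypothetical helper: 255 if every channel lies in [lower, upper] (inclusive), else 0.
unsigned char inRangePixel(const HSV& px, const HSV& lower, const HSV& upper) {
    for (int c = 0; c < 3; ++c)
        if (px[c] < lower[c] || px[c] > upper[c]) return 0;  // one channel out of range
    return 255;
}
```

The mask produced by inRange is exactly this value computed for every pixel.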

```cpp
#include <opencv2/imgcodecs.hpp>
#include <opencv2/highgui.hpp>
#include <opencv2/imgproc.hpp>
#include <iostream>

using namespace cv;
using namespace std;

Mat imgHSV, mask;
int hmin = 0, smin = 110, vmin = 153;
int hmax = 19, smax = 240, vmax = 255;

int main()
{
    string path = "resources/lambo.png";
    Mat img = imread(path);
    cvtColor(img, imgHSV, COLOR_BGR2HSV);

    namedWindow("Trackbars", WINDOW_NORMAL); // Resizable window for the sliders
    resizeWindow("Trackbars", 640, 200);
    createTrackbar("Hue Min", "Trackbars", &hmin, 179); // 8-bit hue range is 0-179
    createTrackbar("Hue Max", "Trackbars", &hmax, 179);
    createTrackbar("Sat Min", "Trackbars", &smin, 255);
    createTrackbar("Sat Max", "Trackbars", &smax, 255);
    createTrackbar("Val Min", "Trackbars", &vmin, 255);
    createTrackbar("Val Max", "Trackbars", &vmax, 255);

    while (true) {
        Scalar lower(hmin, smin, vmin);
        Scalar upper(hmax, smax, vmax);
        inRange(imgHSV, lower, upper, mask); // White where the pixel is inside the range

        imshow("Image", img);
        imshow("Image HSV", imgHSV);
        imshow("Image Mask", mask);
        if (waitKey(1) == 27) break; // Press Esc to quit
    }
    return 0;
}
```

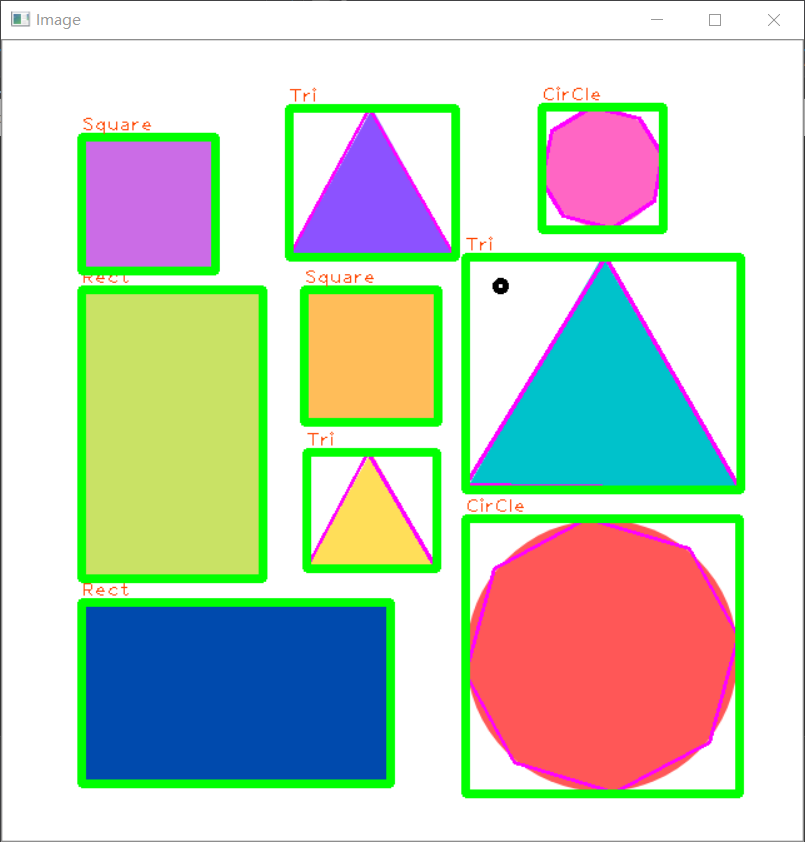

Chapter 7 Shape / contour detection

- void cv::findContours(InputOutputArray image, OutputArrayOfArrays contours, OutputArray hierarchy, int mode, int method, Point offset = Point())

Finds contours in a binary image. Since OpenCV 3.2, the source image is not modified by this function.

| Parameter | Meaning |
|---|---|
| image | Binary input image (8-bit single channel; non-zero pixels are treated as 1) |
| contours | Detected contours; each contour is stored as a vector of points (e.g. `std::vector<std::vector<cv::Point>>`) |
| hierarchy | Optional output vector (e.g. `std::vector<cv::Vec4i>`) containing information about the image topology |
| mode | Contour retrieval mode (e.g. `RETR_EXTERNAL` retrieves only the extreme outer contours) |
| method | Contour approximation method (e.g. `CHAIN_APPROX_SIMPLE` keeps only the end points of horizontal, vertical and diagonal segments) |
| offset | Optional offset by which every contour point is shifted |

- double cv::contourArea(InputArray contour, bool oriented=false)

Calculates a contour area.

- double cv::arcLength(InputArray curve, bool closed)

Calculates a contour perimeter or a curve length.

- void cv::approxPolyDP(InputArray curve, OutputArray approxCurve, double epsilon, bool closed)

The function cv::approxPolyDP approximates a curve or a polygon with another curve/polygon with fewer vertices so that the distance between them is less than or equal to the specified precision epsilon.

- Rect cv::boundingRect(InputArray array)

Calculates and returns the minimal up-right bounding rectangle of a point set or of the non-zero pixels of a grayscale image.

- void cv::drawContours(InputOutputArray image, InputArrayOfArrays contours, int contourIdx, const Scalar &color, int thickness = 1, int lineType = LINE_8, InputArray hierarchy = noArray(), int maxLevel = INT_MAX, Point offset = Point())

Draws contour outlines or filled contours. If thickness ≥ 0, the function draws the contour outlines in the image; if thickness < 0 (for example, FILLED), the areas bounded by the contours are filled.

- Point_< _Tp > tl() const

Top-left corner of a cv::Rect_.

- Point_< _Tp > br() const

Bottom-right corner of a cv::Rect_.
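For a polygon, the Green's-formula area computed by contourArea reduces to the shoelace formula, and arcLength is just the sum of segment lengths. The plain-C++ sketch below (hypothetical `polygonArea` and `polylineLength`, not OpenCV code) shows both. Because the area is computed from the vertices, it can differ from the number of non-zero pixels inside a contour, as the contourArea documentation also warns.

```cpp
#include <cmath>
#include <cstddef>
#include <vector>

struct Vertex { double x, y; };

// Hypothetical helper: shoelace formula for a closed polygon, vertices in order.
// Returns the absolute area, like contourArea(contour, oriented = false).
double polygonArea(const std::vector<Vertex>& pts) {
    double twice = 0.0;
    for (std::size_t i = 0, n = pts.size(); i < n; ++i) {
        const Vertex& a = pts[i];
        const Vertex& b = pts[(i + 1) % n];  // wrap around to close the polygon
        twice += a.x * b.y - b.x * a.y;
    }
    return std::fabs(twice) / 2.0;
}

// Hypothetical helper: sum of segment lengths; `closed` adds the segment from the
// last point back to the first, like arcLength's `closed` flag.
double polylineLength(const std::vector<Vertex>& pts, bool closed) {
    double len = 0.0;
    for (std::size_t i = 1; i < pts.size(); ++i)
        len += std::hypot(pts[i].x - pts[i - 1].x, pts[i].y - pts[i - 1].y);
    if (closed && pts.size() > 1)
        len += std::hypot(pts.front().x - pts.back().x, pts.front().y - pts.back().y);
    return len;
}
```

In the Chapter 7 code the perimeter feeds approxPolyDP's tolerance (`0.02 * peri`, i.e. 2% of the perimeter), so larger shapes are simplified with a proportionally larger epsilon.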

```cpp
// Rect
template<typename _Tp> class cv::Rect_;
typedef Rect_<int> cv::Rect2i;
typedef Rect2i cv::Rect;

// Point
template<typename _Tp> class cv::Point_;
typedef Point_<int> cv::Point2i;
typedef Point2i cv::Point;
```

| `cv::Rect_<_Tp>` member | Meaning |
|---|---|
| height | Rectangle height |
| width | Rectangle width |
| x | x coordinate of the top-left corner |
| y | y coordinate of the top-left corner |
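A `Rect` stores its top-left corner plus a size, so `br()` is one pixel past the last row and column that the rectangle covers. The hypothetical `IRect` below is a minimal plain-C++ stand-in (not the real `cv::Rect`) that mirrors that convention.

```cpp
struct IPoint { int x, y; };

// Hypothetical stand-in for cv::Rect: top-left corner (x, y) plus width and height.
struct IRect {
    int x, y, width, height;
    IPoint tl() const { return { x, y }; }                   // top-left corner
    IPoint br() const { return { x + width, y + height }; }  // one past the last pixel
    bool contains(IPoint p) const {                          // half-open on the right/bottom
        return x <= p.x && p.x < x + width && y <= p.y && p.y < y + height;
    }
};
```

This is also why `Rect roi(200, 100, 300, 300)` in Chapter 3 needs an image at least 500 pixels wide and 400 pixels tall: `img(roi)` raises an assertion error if the rectangle sticks out of the image.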

```cpp
#include <opencv2/imgcodecs.hpp>
#include <opencv2/highgui.hpp>
#include <opencv2/imgproc.hpp>
#include <iostream>

using namespace cv;
using namespace std;

void getContours(Mat imgDil, Mat img) {
    vector<vector<Point>> contours; // Contour data
    vector<Vec4i> hierarchy;

    // Find the outer contours in the preprocessed binary image
    findContours(imgDil, contours, hierarchy, RETR_EXTERNAL, CHAIN_APPROX_SIMPLE);
    //drawContours(img, contours, -1, Scalar(255, 0, 255), 2); // Draw all contours

    vector<vector<Point>> conPoly(contours.size());
    vector<Rect> boundRect(contours.size());

    for (int i = 0; i < (int)contours.size(); i++)
    {
        double area = contourArea(contours[i]); // Area of each contour
        cout << area << endl;

        if (area > 1000) // Filter noise
        {
            // Approximate the contour with a polygon (tolerance: 2% of the perimeter)
            double peri = arcLength(contours[i], true);
            approxPolyDP(contours[i], conPoly[i], 0.02 * peri, true);
            cout << conPoly[i].size() << endl;
            boundRect[i] = boundingRect(conPoly[i]); // Up-right bounding rectangle

            string objectType;
            int objCor = (int)conPoly[i].size(); // Number of corners
            if (objCor == 3) { objectType = "Tri"; }
            else if (objCor == 4) {
                float aspRatio = (float)boundRect[i].width / boundRect[i].height; // Aspect ratio
                cout << aspRatio << endl;
                objectType = (aspRatio > 0.95 && aspRatio < 1.05) ? "Square" : "Rect";
            }
            else if (objCor > 4) { objectType = "Circle"; }

            drawContours(img, conPoly, i, Scalar(255, 0, 255), 2); // Draw the filtered contour
            rectangle(img, boundRect[i].tl(), boundRect[i].br(), Scalar(0, 255, 0), 5); // Bounding box
            putText(img, objectType, { boundRect[i].x, boundRect[i].y - 5 }, FONT_HERSHEY_PLAIN, 1, Scalar(0, 69, 255), 1);
        }
    }
}

int main()
{
    string path = "resources/shapes.png";
    Mat img = imread(path);
    Mat imgGray, imgBlur, imgCanny, imgDil;

    // Preprocessing
    cvtColor(img, imgGray, COLOR_BGR2GRAY);
    GaussianBlur(imgGray, imgBlur, Size(3, 3), 3, 0);
    Canny(imgBlur, imgCanny, 25, 75);
    Mat kernel = getStructuringElement(MORPH_RECT, Size(3, 3));
    dilate(imgCanny, imgDil, kernel);

    getContours(imgDil, img);

    imshow("Image", img);
    /*imshow("Image Gray", imgGray);
    imshow("Image Blur", imgBlur);
    imshow("Image Canny", imgCanny);
    imshow("Image Dil", imgDil);*/
    waitKey(0);
    return 0;
}
```

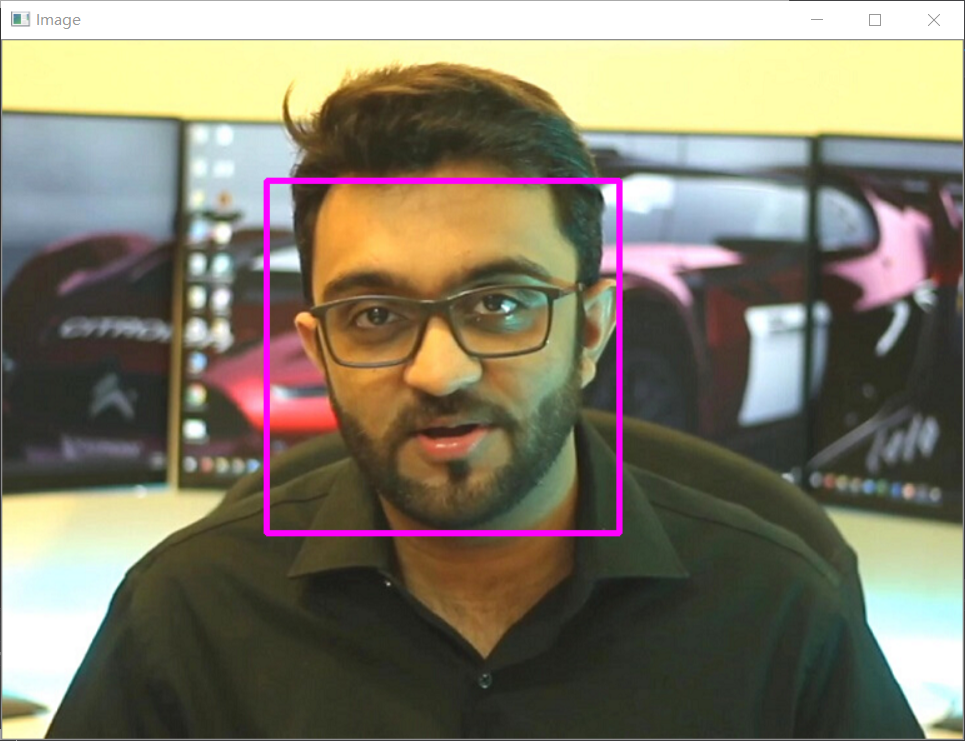
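The shape decision in getContours can be pulled out into a pure function, which makes the thresholds easy to test without an image. `classifyShape` is a hypothetical refactoring of the same vertex-count and aspect-ratio rule, not part of OpenCV.

```cpp
#include <string>

// Hypothetical helper: classify a shape from the vertex count of its approxPolyDP result
// and the size of its bounding box (boxHeight is assumed to be non-zero).
std::string classifyShape(int vertices, int boxWidth, int boxHeight) {
    if (vertices == 3) return "Tri";
    if (vertices == 4) {
        float aspRatio = static_cast<float>(boxWidth) / boxHeight;  // ~1 for a square
        return (aspRatio > 0.95f && aspRatio < 1.05f) ? "Square" : "Rect";
    }
    if (vertices > 4) return "Circle";
    return "";  // fewer than 3 vertices: not classified
}
```

Note that a square rotated by 45 degrees has a square bounding box too, so this rule cannot tell it apart from an upright square; it only checks proportions.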

Chapter 8 Face detection

Module involved: objdetect (Object Detection)

- class cv::CascadeClassifier

Cascade classifier class for object detection.

- bool load(const String &filename)

Loads a classifier from a file.

- bool empty() const

Checks whether the classifier has been loaded.

- void detectMultiScale(InputArray image, std::vector<Rect> &objects, double scaleFactor=1.1, int minNeighbors=3, int flags=0, Size minSize=Size(), Size maxSize=Size())

Detects objects of different sizes in the input image. The detected objects are returned as a list of rectangles.
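`scaleFactor` controls how finely detectMultiScale searches over object sizes. The plain-C++ sketch below (hypothetical `windowSizes`) lists the window sizes such a search would roughly visit, assuming a 24x24 base window (check the width/height stored in your cascade XML). OpenCV actually shrinks the image by each factor instead of growing the window, which is equivalent for this purpose.

```cpp
#include <cmath>
#include <vector>

// Hypothetical helper: window sizes visited by a multi-scale search that starts at
// baseWindow and grows by scaleFactor until the window no longer fits in the image.
std::vector<int> windowSizes(int baseWindow, int imgWidth, int imgHeight, double scaleFactor) {
    std::vector<int> sizes;
    if (scaleFactor <= 1.0) return sizes;  // detectMultiScale requires scaleFactor > 1
    for (double factor = 1.0;; factor *= scaleFactor) {
        int win = static_cast<int>(std::lround(baseWindow * factor));
        if (win > imgWidth || win > imgHeight) break;  // window larger than the image
        sizes.push_back(win);
    }
    return sizes;
}
```

A smaller scaleFactor visits more sizes (slower, but less likely to skip a face). minNeighbors then sets how many overlapping candidate rectangles are required before a detection is kept, so the value 10 used below is fairly strict.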

```cpp
#include <opencv2/imgcodecs.hpp>
#include <opencv2/highgui.hpp>
#include <opencv2/imgproc.hpp>
#include <opencv2/objdetect.hpp>
#include <iostream>

using namespace cv;
using namespace std;

int main()
{
    string path = "Resources/test.png";
    Mat img = imread(path);

    CascadeClassifier faceCascade;
    faceCascade.load("Resources/haarcascade_frontalface_default.xml");
    if (faceCascade.empty()) {
        cout << "XML file not loaded" << endl;
        return -1; // detectMultiScale cannot run without a loaded cascade
    }

    vector<Rect> faces;
    faceCascade.detectMultiScale(img, faces, 1.1, 10); // scaleFactor = 1.1, minNeighbors = 10

    for (size_t i = 0; i < faces.size(); i++)
    {
        rectangle(img, faces[i].tl(), faces[i].br(), Scalar(255, 0, 255), 3);
    }

    imshow("Image", img);
    waitKey(0);
    return 0;
}
```

Project 1 Virtual artist

Color picker: first find the HSV thresholds of each color to be detected.

```cpp
#include <opencv2/imgcodecs.hpp>
#include <opencv2/highgui.hpp>
#include <opencv2/imgproc.hpp>
#include <iostream>

using namespace cv;
using namespace std;

int main()
{
    VideoCapture cap(1); // Camera index 1; use 0 for the default camera
    Mat img;
    Mat imgHSV, mask;
    int hmin = 0, smin = 0, vmin = 0;
    int hmax = 179, smax = 255, vmax = 255;

    namedWindow("Trackbars", WINDOW_NORMAL); // Create a resizable window
    resizeWindow("Trackbars", 640, 200);
    createTrackbar("Hue Min", "Trackbars", &hmin, 179);
    createTrackbar("Hue Max", "Trackbars", &hmax, 179);
    createTrackbar("Sat Min", "Trackbars", &smin, 255);
    createTrackbar("Sat Max", "Trackbars", &smax, 255);
    createTrackbar("Val Min", "Trackbars", &vmin, 255);
    createTrackbar("Val Max", "Trackbars", &vmax, 255);

    while (cap.read(img)) {
        cvtColor(img, imgHSV, COLOR_BGR2HSV);
        Scalar lower(hmin, smin, vmin);
        Scalar upper(hmax, smax, vmax);
        inRange(imgHSV, lower, upper, mask);

        // Print the current thresholds: hmin, smin, vmin, hmax, smax, vmax
        cout << hmin << ", " << smin << ", " << vmin << ", " << hmax << ", " << smax << ", " << vmax << endl;

        imshow("Image", img);
        imshow("Mask", mask);
        if (waitKey(1) == 27) break; // Press Esc to quit
    }
    return 0;
}
```

Virtual painting: draw at the midpoint of the top edge of the bounding rectangle around each detected color.

```cpp
#include <opencv2/imgcodecs.hpp>
#include <opencv2/highgui.hpp>
#include <opencv2/imgproc.hpp>
#include <iostream>

using namespace cv;
using namespace std;

Mat img;
vector<vector<int>> newPoints; // Accumulated {x, y, colorIndex} points from all frames

// HSV ranges found with the color picker: {hmin, smin, vmin, hmax, smax, vmax}
vector<vector<int>> myColors{ {124, 48, 117, 143, 170, 255},  // purple
                              {68, 72, 156, 102, 126, 255} }; // green
// BGR drawing color for each entry in myColors
vector<Scalar> myColorValues{ {255, 0, 255},  // purple
                              {0, 255, 0} };  // green

Point getContours(Mat imgDil) {
    vector<vector<Point>> contours; // Contour data
    vector<Vec4i> hierarchy;
    // Find the outer contours in the binary mask
    findContours(imgDil, contours, hierarchy, RETR_EXTERNAL, CHAIN_APPROX_SIMPLE);

    vector<vector<Point>> conPoly(contours.size());
    vector<Rect> boundRect(contours.size());
    Point myPoint(0, 0);

    for (int i = 0; i < (int)contours.size(); i++)
    {
        double area = contourArea(contours[i]); // Area of each contour
        if (area > 1000) // Filter noise
        {
            // Approximate the contour with a polygon
            double peri = arcLength(contours[i], true);
            approxPolyDP(contours[i], conPoly[i], 0.02 * peri, true);
            boundRect[i] = boundingRect(conPoly[i]); // Up-right bounding rectangle

            // Midpoint of the top edge of the bounding rectangle (the "pen tip")
            myPoint.x = boundRect[i].x + boundRect[i].width / 2;
            myPoint.y = boundRect[i].y;

            //drawContours(img, conPoly, i, Scalar(255, 0, 255), 2); // Draw filtered contours
            //rectangle(img, boundRect[i].tl(), boundRect[i].br(), Scalar(0, 255, 0), 5); // Bounding box
        }
    }
    return myPoint; // (0, 0) if no large enough contour was found
}

// Detect every color in myColors and append this frame's pen-tip points to newPoints
void findColor(Mat img)
{
    Mat imgHSV, mask;
    cvtColor(img, imgHSV, COLOR_BGR2HSV);
    for (int i = 0; i < (int)myColors.size(); i++)
    {
        Scalar lower(myColors[i][0], myColors[i][1], myColors[i][2]);
        Scalar upper(myColors[i][3], myColors[i][4], myColors[i][5]);
        inRange(imgHSV, lower, upper, mask);
        //imshow(to_string(i), mask);
        Point myPoint = getContours(mask);
        if (myPoint.x != 0 && myPoint.y != 0)
        {
            newPoints.push_back({ myPoint.x, myPoint.y, i });
        }
    }
}

// Redraw every stored point so the strokes persist across frames
void drawOnCanvas(const vector<vector<int>>& points, const vector<Scalar>& colorValues)
{
    for (size_t i = 0; i < points.size(); i++)
    {
        circle(img, Point(points[i][0], points[i][1]), 10, colorValues[points[i][2]], FILLED);
    }
}

int main()
{
    VideoCapture cap(0);
    while (cap.read(img))
    {
        findColor(img);
        drawOnCanvas(newPoints, myColorValues);
        imshow("Image", img);
        if (waitKey(1) == 27) break; // Press Esc to quit
    }
    return 0;
}
```

Project 2 Document scanning

```cpp
#include <opencv2/imgcodecs.hpp>
#include <opencv2/highgui.hpp>
#include <opencv2/imgproc.hpp>
#include <algorithm>
#include <iostream>

using namespace cv;
using namespace std;

Mat imgOriginal, imgGray, imgBlur, imgCanny, imgThre, imgDil, imgErode, imgWarp, imgCrop;
vector<Point> initialPoints, docPoints;
float w = 420, h = 596; // Output size, roughly A4 proportions

Mat preProcessing(Mat img)
{
    cvtColor(img, imgGray, COLOR_BGR2GRAY);
    GaussianBlur(imgGray, imgBlur, Size(3, 3), 3, 0);
    Canny(imgBlur, imgCanny, 25, 75);
    Mat kernel = getStructuringElement(MORPH_RECT, Size(3, 3));
    dilate(imgCanny, imgDil, kernel);
    //erode(imgDil, imgErode, kernel);
    return imgDil;
}

// Return the 4 corners of the largest 4-sided contour (empty if there is none)
vector<Point> getContours(Mat imgDil) {
    vector<vector<Point>> contours; // Contour data
    vector<Vec4i> hierarchy;
    findContours(imgDil, contours, hierarchy, RETR_EXTERNAL, CHAIN_APPROX_SIMPLE);

    vector<vector<Point>> conPoly(contours.size());
    vector<Point> biggest;
    double maxArea = 0;

    for (int i = 0; i < (int)contours.size(); i++)
    {
        double area = contourArea(contours[i]); // Area of each contour
        if (area > 1000) // Filter noise
        {
            // Approximate the contour with a polygon
            double peri = arcLength(contours[i], true);
            approxPolyDP(contours[i], conPoly[i], 0.02 * peri, true);
            if (area > maxArea && conPoly[i].size() == 4) {
                //drawContours(imgOriginal, conPoly, i, Scalar(255, 0, 255), 5);
                biggest = { conPoly[i][0], conPoly[i][1], conPoly[i][2], conPoly[i][3] };
                maxArea = area;
            }
        }
    }
    return biggest;
}

void drawPoints(vector<Point> points, Scalar color)
{
    for (int i = 0; i < (int)points.size(); i++)
    {
        circle(imgOriginal, points[i], 10, color, FILLED);
        putText(imgOriginal, to_string(i), points[i], FONT_HERSHEY_PLAIN, 4, color, 4);
    }
}

// Order the corners as top-left, top-right, bottom-left, bottom-right
vector<Point> reorder(vector<Point> points)
{
    vector<Point> newPoints;
    vector<int> sumPoints, subPoints;
    for (int i = 0; i < 4; i++)
    {
        sumPoints.push_back(points[i].x + points[i].y);
        subPoints.push_back(points[i].x - points[i].y);
    }
    newPoints.push_back(points[min_element(sumPoints.begin(), sumPoints.end()) - sumPoints.begin()]); // 0: top-left
    newPoints.push_back(points[max_element(subPoints.begin(), subPoints.end()) - subPoints.begin()]); // 1: top-right
    newPoints.push_back(points[min_element(subPoints.begin(), subPoints.end()) - subPoints.begin()]); // 2: bottom-left
    newPoints.push_back(points[max_element(sumPoints.begin(), sumPoints.end()) - sumPoints.begin()]); // 3: bottom-right
    return newPoints;
}

Mat getWarp(Mat img, vector<Point> points, float w, float h)
{
    Point2f src[4] = { points[0], points[1], points[2], points[3] };
    Point2f dst[4] = { {0.0f, 0.0f}, {w, 0.0f}, {0.0f, h}, {w, h} };
    Mat matrix = getPerspectiveTransform(src, dst);
    warpPerspective(img, imgWarp, matrix, Size(w, h));
    return imgWarp;
}

int main()
{
    string path = "Resources/paper.jpg";
    imgOriginal = imread(path);
    //resize(imgOriginal, imgOriginal, Size(), 0.5, 0.5);

    // Preprocessing
    imgThre = preProcessing(imgOriginal);

    // Get contours - biggest
    initialPoints = getContours(imgThre);
    if (initialPoints.size() != 4) {
        cout << "No document outline found" << endl;
        return -1;
    }
    //drawPoints(initialPoints, Scalar(0, 0, 255));
    docPoints = reorder(initialPoints);
    //drawPoints(docPoints, Scalar(0, 255, 0));

    // Warp
    imgWarp = getWarp(imgOriginal, docPoints, w, h);

    // Crop a few pixels from each side to remove the document border
    int cropValue = 5;
    Rect roi(cropValue, cropValue, w - (2 * cropValue), h - (2 * cropValue));
    imgCrop = imgWarp(roi);

    imshow("Image", imgOriginal);
    imshow("Image Dilation", imgThre);
    imshow("Image Warp", imgWarp);
    imshow("Image Crop", imgCrop);
    waitKey(0);
    return 0;
}
```
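The reorder step relies on a simple observation: in image coordinates (y grows downward) the top-left corner has the smallest x + y, the bottom-right the largest x + y, the top-right the largest x - y, and the bottom-left the smallest x - y. The plain-C++ sketch below (hypothetical `reorderCorners`, not OpenCV code) restates that rule and checks it against the card corners from Chapter 5. It can pick the same point twice when the page is rotated by about 45 degrees, so it assumes a roughly upright document.

```cpp
#include <algorithm>
#include <vector>

struct Corner { int x, y; };

// Hypothetical helper: order four corners as top-left, top-right, bottom-left,
// bottom-right, the same order as the dst[] array passed to getPerspectiveTransform.
std::vector<Corner> reorderCorners(const std::vector<Corner>& p) {
    auto bySum  = [](const Corner& a, const Corner& b) { return a.x + a.y < b.x + b.y; };
    auto byDiff = [](const Corner& a, const Corner& b) { return a.x - a.y < b.x - b.y; };
    return {
        *std::min_element(p.begin(), p.end(), bySum),   // 0: top-left
        *std::max_element(p.begin(), p.end(), byDiff),  // 1: top-right
        *std::min_element(p.begin(), p.end(), byDiff),  // 2: bottom-left
        *std::max_element(p.begin(), p.end(), bySum)    // 3: bottom-right
    };
}
```

Feeding it the Chapter 5 corners in shuffled order returns them in the order used there.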

Project 3 License plate detection

```cpp
#include <opencv2/imgcodecs.hpp>
#include <opencv2/highgui.hpp>
#include <opencv2/imgproc.hpp>
#include <opencv2/objdetect.hpp>
#include <iostream>

using namespace cv;
using namespace std;

int main()
{
    VideoCapture cap(0);
    Mat img;

    CascadeClassifier plateCascade;
    plateCascade.load("Resources/haarcascade_russian_plate_number.xml");
    if (plateCascade.empty()) {
        cout << "XML file not loaded" << endl;
        return -1;
    }

    vector<Rect> plates;
    while (cap.read(img)) {
        plateCascade.detectMultiScale(img, plates, 1.1, 10);
        for (size_t i = 0; i < plates.size(); i++)
        {
            Mat imgCrop = img(plates[i]);
            imshow(to_string(i), imgCrop);
            // Saved before the rectangle is drawn; the same file is overwritten on every detection
            imwrite("D:\\VS2019Projects\\chapter2\\chapter2\\resources\\Plates\\1.png", imgCrop);
            rectangle(img, plates[i].tl(), plates[i].br(), Scalar(255, 0, 255), 3);
        }
        imshow("Image", img);
        if (waitKey(1) == 27) break; // Press Esc to quit
    }
    return 0;
}
```