Today we continue crawling a website: http://www.27270.com/ent/meinvtupian/. This site has anti-crawling measures, so some of the download code is not handled very well. Focus on learning the ideas, and if you have suggestions, tell me in the comments.

With future network request work in mind, this time we wrap some of the request code in a simple class.

First, install a module called retrying.

pip install retrying

For the details of how to use this module, search online.

Here I pick the User-Agent header at random from a list.

    import requests
    from retrying import retry
    import random
    import datetime


    class R:

        def __init__(self, method="get", params=None, headers=None, cookies=None):
            pass  # do something

        def get_headers(self):
            user_agent_list = [
                "Mozilla/5.0 (Windows NT 6.1; WOW64) AppleWebKit/537.1 (KHTML, like Gecko) Chrome/22.0.1207.1 Safari/537.1",
                "Mozilla/5.0 (X11; CrOS i686 2268.111.0) AppleWebKit/536.11 (KHTML, like Gecko) Chrome/20.0.1132.57 Safari/536.11",
                "Mozilla/5.0 (Windows NT 6.1; WOW64) AppleWebKit/536.6 (KHTML, like Gecko) Chrome/20.0.1092.0 Safari/536.6",
                "Mozilla/5.0 (Windows NT 6.2) AppleWebKit/536.6 (KHTML, like Gecko) Chrome/20.0.1090.0 Safari/536.6",
                "Mozilla/5.0 (Windows NT 6.2; WOW64) AppleWebKit/537.1 (KHTML, like Gecko) Chrome/19.77.34.5 Safari/537.1",
                "Mozilla/5.0 (X11; Linux x86_64) AppleWebKit/536.5 (KHTML, like Gecko) Chrome/19.0.1084.9 Safari/536.5",
                "Mozilla/5.0 (Windows NT 6.0) AppleWebKit/536.5 (KHTML, like Gecko) Chrome/19.0.1084.36 Safari/536.5",
                "Mozilla/5.0 (Windows NT 6.1; WOW64) AppleWebKit/536.3 (KHTML, like Gecko) Chrome/19.0.1063.0 Safari/536.3",
                "Mozilla/5.0 (Windows NT 5.1) AppleWebKit/536.3 (KHTML, like Gecko) Chrome/19.0.1063.0 Safari/536.3",
                "Mozilla/5.0 (Macintosh; Intel Mac OS X 10_8_0) AppleWebKit/536.3 (KHTML, like Gecko) Chrome/19.0.1063.0 Safari/536.3",
                "Mozilla/5.0 (Windows NT 6.2) AppleWebKit/536.3 (KHTML, like Gecko) Chrome/19.0.1062.0 Safari/536.3",
                "Mozilla/5.0 (Windows NT 6.1; WOW64) AppleWebKit/536.3 (KHTML, like Gecko) Chrome/19.0.1062.0 Safari/536.3",
                "Mozilla/5.0 (Windows NT 6.2) AppleWebKit/536.3 (KHTML, like Gecko) Chrome/19.0.1061.1 Safari/536.3",
                "Mozilla/5.0 (Windows NT 6.1; WOW64) AppleWebKit/536.3 (KHTML, like Gecko) Chrome/19.0.1061.1 Safari/536.3",
                "Mozilla/5.0 (Windows NT 6.1) AppleWebKit/536.3 (KHTML, like Gecko) Chrome/19.0.1061.1 Safari/536.3",
                "Mozilla/5.0 (Windows NT 6.2) AppleWebKit/536.3 (KHTML, like Gecko) Chrome/19.0.1061.0 Safari/536.3",
                "Mozilla/5.0 (X11; Linux x86_64) AppleWebKit/535.24 (KHTML, like Gecko) Chrome/19.0.1055.1 Safari/535.24",
                "Mozilla/5.0 (Windows NT 6.2; WOW64) AppleWebKit/535.24 (KHTML, like Gecko) Chrome/19.0.1055.1 Safari/535.24"
            ]
            # Pick one User-Agent at random for this R instance
            UserAgent = random.choice(user_agent_list)
            headers = {'User-Agent': UserAgent}
            return headers

        # other code

The easiest way to use retrying is to add the @retry decorator to the method you want to retry.

Here, I want the network request method to try three times and then raise an error.
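To make the "try three times, then raise" behavior concrete, here is a minimal sketch that uses only the standard library. The `retry_sketch` decorator, `flaky_request`, and `always_failing` are hypothetical names written for this illustration; they are a stand-in for the idea behind `@retry(stop_max_attempt_number=3)`, not the retrying module's own implementation.

```python
import functools


def retry_sketch(max_attempts=3):
    """Hypothetical stand-in: call the function up to max_attempts times."""
    def decorator(func):
        @functools.wraps(func)
        def wrapper(*args, **kwargs):
            last_error = None
            for _ in range(max_attempts):
                try:
                    return func(*args, **kwargs)
                except Exception as error:
                    last_error = error  # remember the failure and try again
            raise last_error  # every attempt failed: surface the last error
        return wrapper
    return decorator


calls = []  # records each attempt so the retries are visible


@retry_sketch(max_attempts=3)
def flaky_request():
    # Simulated request that fails twice and succeeds on the third attempt
    calls.append(1)
    if len(calls) < 3:
        raise ConnectionError("simulated network failure")
    return b"ok"


@retry_sketch(max_attempts=3)
def always_failing():
    # Simulated request that never succeeds
    raise TimeoutError("simulated timeout")


result = flaky_request()
```

In this sketch `flaky_request` succeeds on its third attempt, while `always_failing` raises its last error once the three attempts are used up.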

At the same time, we add some necessary parameters to the R class's initialization method; you can look directly at the code below.

The __retrying_requests method is a private method that branches on whether the request mode is get or post.

    import requests
    from retrying import retry
    import random
    import datetime


    class R:

        def __init__(self, method="get", params=None, headers=None, cookies=None):
            pass  # do something

        def get_headers(self):
            pass  # do something

        @retry(stop_max_attempt_number=3)
        def __retrying_requests(self, url):
            if self.__method == "get":
                response = requests.get(url, headers=self.__headers, cookies=self.__cookies, timeout=3)
            else:
                response = requests.post(url, params=self.__params, headers=self.__headers, cookies=self.__cookies, timeout=3)
            return response.content

        # other code

The network request method is now declared, and it returns the response.content byte stream.

Based on this private method, we add one method to get page text and one method to get files, and we complete the class initialization method at the same time. During development I found that the pages to crawl are encoded in gb2312, so we also need to add an encoding parameter to some methods.

    import requests
    from retrying import retry
    import random
    import datetime


    class R:
        # Class initialization method
        def __init__(self, method="get", params=None, headers=None, cookies=None):
            self.__method = method
            myheaders = self.get_headers()
            if headers is not None:
                myheaders.update(headers)  # caller's headers override the defaults
            self.__headers = myheaders
            self.__cookies = cookies
            self.__params = params

        def get_headers(self):
            pass  # do something

        @retry(stop_max_attempt_number=3)
        def __retrying_requests(self, url):
            pass  # do something

        # Get page text, decoded with the given charset; None on failure
        def get_content(self, url, charset="utf-8"):
            try:
                html_str = self.__retrying_requests(url).decode(charset)
            except Exception:
                html_str = None
            return html_str

        # Get raw file bytes; None on failure
        def get_file(self, file_url):
            try:
                file = self.__retrying_requests(file_url)
            except Exception:
                file = None
            return file
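Why the charset parameter matters can be seen without any network access. The sketch below encodes a sample string as gb2312 to stand in for a server response, then decodes it the way `get_content` does; `decode_page` and the sample text are assumptions made up for this illustration.

```python
# Bytes as a gb2312-encoded page would deliver them (sample text is made up)
raw = "美女图片".encode("gb2312")


def decode_page(data, charset="utf-8"):
    """Mirror get_content's decode step: return None when decoding fails."""
    try:
        return data.decode(charset)
    except UnicodeDecodeError:
        return None


as_utf8 = decode_page(raw)               # wrong charset: decoding fails
as_gb2312 = decode_page(raw, "gb2312")   # matching charset: text recovered
as_gbk = decode_page(raw, "gbk")         # gbk is a superset of gb2312
```

With the default utf-8 the result is None, which is exactly what `get_content` would hand back to the caller, so passing the page's real encoding is what separates a usable page from a silent failure.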

At this point the R class is complete. You can piece the full code together from the snippets above, or turn to the end of the article and consult it directly on GitHub.

Next comes the more important part: the crawler code. This time we use simple classes and objects and add simple multithreading.

First, create a class called ImageList. Its first job is to get the total number of pages to crawl.

This step is relatively simple.

- Get the page source

- Match the last-page element with a regular expression

- Extract the number
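The last two steps can be tried on their own against a hard-coded string. The HTML fragment below is a hypothetical pagination bar, and the link text "Last" is an assumption that has to match whatever text the live page uses; `extract_page_count` is a name chosen for this sketch.

```python
import re

# Hypothetical fragment of a list page's pagination bar
sample_html = (
    "<li><a href='list_11_2.html' target='_self'>Next</a></li>"
    "<li><a href='list_11_187.html' target='_self'>Last</a></li>"
)


def extract_page_count(html):
    """Return the page number in the last-page link, or 0 if it is absent."""
    pattern = re.compile(
        r"<li><a href='list_11_(\d+)\.html' target='_self'>Last</a></li>"
    )
    match = pattern.search(html)
    return int(match.group(1)) if match else 0


page_count = extract_page_count(sample_html)
```

Returning 0 when nothing matches gives the caller a simple way to stop early instead of failing on a missing match object.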

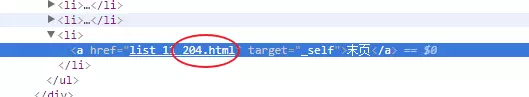

    import http_help as hh  # http_help is the module holding the R class written above
    import re
    import threading
    import time
    import os
    import requests


    # Get the list of all URLs to crawl
    class ImageList():
        def __init__(self):
            self.__start = "http://www.27270.com/ent/meinvtupian/list_11_{}.html"  # URL template
            # request headers
            self.__headers = {"Referer": "http://www.27270.com/ent/meinvtupian/",
                              "Host": "www.27270.com"
                              }
            self.__res = hh.R(headers=self.__headers)  # initialize the request helper

        def run(self):
            page_count = int(self.get_page_count())

            if page_count == 0:
                return []

            # range() excludes its end value, so add 1 to include the last page
            urls = [self.__start.format(i) for i in range(1, page_count + 1)]
            return urls

        # Match the last-page link with a regular expression and read the page number
        def get_page_count(self):
            # Note: the encoding must be passed in here
            content = self.__res.get_content(self.__start.format("1"), "gb2312")
            if content is None:  # the request or the decoding failed
                return 0
            # "Last" is the link text of the last-page anchor; it must match the text on the live page
            pattern = re.compile(r"<li><a href='list_11_(\d+?).html' target='_self'>Last</a></li>")
            search_text = pattern.search(content)
            if search_text is not None:
                count = search_text.group(1)
                return count
            else:
                return 0


    if __name__ == '__main__':
        img = ImageList()
        urls = img.run()

Note the get_page_count method in the code above: it obtains the number of the last page.

Inside the run method, a list comprehension

    urls = [self.__start.format(i) for i in range(1, page_count + 1)]

generates all the links to crawl in one batch. The end value is page_count + 1 because range() excludes its end, and the last page should be included.

27270 pictures: parse the list URLs generated above and capture the detail pages

We use the producer-consumer model: one thread grabs the picture links and another downloads the pictures. This runs on multiple threads, so the following modules need to be imported first.

    import threading
    import time

The complete code is as follows

    import http_help as hh
    import re
    import threading
    import time
    import os
    import requests

    urls_lock = threading.Lock()  # url operation lock
    imgs_lock = threading.Lock()  # picture operation lock

    imgs_start_urls = []


    class Product(threading.Thread):
        # Class initialization method
        def __init__(self, urls):
            threading.Thread.__init__(self)
            self.__urls = urls
            self.__headers = {"Referer": "http://www.27270.com/ent/meinvtupian/",
                              "Host": "www.27270.com"
                              }
            self.__res = hh.R(headers=self.__headers)

        # Put a link back into the urls list after crawling it fails
        def add_fail_url(self, url):
            print("{} failed to crawl".format(url))
            with urls_lock:  # lock; released automatically when the block ends
                self.__urls.insert(0, url)

        # Main thread method
        def run(self):
            print("*" * 100)
            while True:
                with urls_lock:  # check and pop under the same lock
                    if len(self.__urls) == 0:
                        print("All links have been run.")
                        break
                    last_url = self.__urls.pop()  # take the last url and remove it

                print("Operating {}".format(last_url))
                # The page is encoded in gb2312; other encodings raise errors
                content = self.__res.get_content(last_url, "gb2312")
                if content is not None:
                    html = self.get_page_list(content)
                    if len(html) == 0:
                        self.add_fail_url(last_url)
                    else:
                        with imgs_lock:
                            # Put the captured detail pages into the list of pictures to download
                            imgs_start_urls.extend(html)
                        time.sleep(5)
                else:
                    self.add_fail_url(last_url)

        def get_page_list(self, content):
            # Regular expression: captures (href, title) for each picture entry
            pattern = re.compile(r'<li> <a href="(.*?)" title="(.*?)" class="MMPic" target="_blank">.*?</li>')
            list_page = re.findall(pattern, content)
            return list_page

The most important point in the code above is the use of threading.Lock(): when several threads manipulate the same global variables, each one must lock before touching them and release promptly afterwards. The other details are in the code comments. You can work them out as long as you follow the steps, type the code yourself, and add your own understanding.
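The locking idea can be tested in isolation. In this sketch four threads drain one shared list; `worker`, `shared_urls`, `taken`, and the placeholder URLs are hypothetical names for the illustration. The emptiness check and the pop happen under the same lock, so no thread can pop from a list that another thread has just emptied.

```python
import threading

shared_urls = ["url-{}".format(i) for i in range(1000)]  # placeholder work items
urls_lock = threading.Lock()

taken = []                      # everything the workers managed to take
taken_lock = threading.Lock()


def worker():
    while True:
        with urls_lock:         # check and pop as one protected step
            if not shared_urls:
                return
            url = shared_urls.pop()
        with taken_lock:        # a second lock guards the result list
            taken.append(url)


threads = [threading.Thread(target=worker) for _ in range(4)]
for t in threads:
    t.start()
for t in threads:
    t.join()
```

Every item ends up in `taken` exactly once, which is the property the crawler needs so that no list page is fetched twice or skipped.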

So far, we have grabbed all the picture page addresses and stored them in a global variable called imgs_start_urls. Let's continue.

This list contains addresses like http://www.27270.com/ent/meinvtupian/2018/298392.html. When you open such a page, you will find only one picture, with pagination links below it.

Click through the pagination and the pattern becomes clear.

    http://www.27270.com/ent/meinvtupian/2018/298392.html
    http://www.27270.com/ent/meinvtupian/2018/298392_2.html
    http://www.27270.com/ent/meinvtupian/2018/298392_3.html
    http://www.27270.com/ent/meinvtupian/2018/298392_4.html
    ....

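After trying a few of these, you will find that the later links can all be built by splicing a page index onto the first link. What happens when the page does not exist? The code below treats a page on which the picture cannot be matched as the end of the set.

Once you have worked through the above, you should know what to do next.

I will paste all the code directly below, with comments on the most important places.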
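The splicing rule fits in a few lines. `page_url` is a hypothetical helper written for this sketch; it cuts the first link at its last dot and appends the page index.

```python
def page_url(first_url, index):
    """Build the link for page `index` from the first page's link."""
    base = first_url[:first_url.rindex(".")]   # drop the ".html" suffix
    return "{}_{}.html".format(base, index)


first = "http://www.27270.com/ent/meinvtupian/2018/298392.html"
pages = [page_url(first, i) for i in range(2, 5)]
```

Here `pages` holds the `_2`, `_3`, and `_4` links from the list above.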

    class Consumer(threading.Thread):
        # Initialization
        def __init__(self):
            threading.Thread.__init__(self)
            self.__headers = {"Referer": "http://www.27270.com/ent/meinvtupian/",
                              "Host": "www.27270.com"}
            self.__res = hh.R(headers=self.__headers)

        # Picture downloading method
        def download_img(self, filder, img_down_url, filename):
            file_path = "./downs/{}".format(filder)
            # Create the directory (and ./downs itself) if it does not exist yet
            os.makedirs(file_path, exist_ok=True)
            full_path = "{}/{}".format(file_path, filename)
            if os.path.exists(full_path):
                return  # already downloaded
            try:
                # The Host header is a pitfall here: pictures are stored on another server as hotlink protection.
                img = requests.get(img_down_url, headers={"Host": "t2.hddhhn.com"}, timeout=3)
            except Exception as e:
                print(e)
                return  # nothing to write if the request failed
            print("{} writing picture".format(img_down_url))
            try:
                with open(full_path, "wb+") as f:
                    f.write(img.content)
            except Exception as e:
                print(e)

        def run(self):
            while True:
                with imgs_lock:  # check and take item 0 under the same lock
                    page = imgs_start_urls.pop(0) if imgs_start_urls else None
                if page is None:
                    time.sleep(1)  # nothing queued yet; wait instead of spinning
                    continue

                # e.g. http://www.27270.com/ent/meinvtupian/2018/295631_1.html
                first_url = page[0]  # each entry is an (href, title) tuple
                start_index = 1
                base_url = first_url[0:first_url.rindex(".")]  # strings can be sliced like lists

                while True:
                    img_url = "{}_{}.html".format(base_url, start_index)  # url splicing
                    content = self.__res.get_content(img_url, charset="gbk")  # this page is decoded as gbk
                    if content is not None:
                        # Match the picture; if nothing matches, the set is finished.
                        pattern = re.compile(r'<div class="articleV4Body" id="picBody">[\s\S.]*?img alt="(.*?)".*? src="(.*?)" />')
                        img_down_url = pattern.search(content)  # get the picture address
                        if img_down_url is not None:
                            filder = img_down_url.group(1)
                            img_down_url = img_down_url.group(2)
                            filename = img_down_url[img_down_url.rindex("/") + 1:]
                            self.download_img(filder, img_down_url, filename)  # download the picture
                        else:
                            print("-" * 100)
                            print(content)
                            break  # leave the inner loop
                    else:
                        print("{} failed to load".format(img_url))
                        with imgs_lock:
                            # Re-queue the original (href, title) entry; files already saved are skipped on the retry
                            imgs_start_urls.append(page)
                        break

                    start_index += 1  # as described above, the index keeps increasing by 1

That is all the code. I tried to add comments at the key points, so read them carefully. If something is unclear, type the code out a few more times; nothing here is especially complex, and most of it is plain logic.

Finally, we add the entry-point code so that everything runs.

    if __name__ == '__main__':
        img = ImageList()
        urls = img.run()

        for i in range(1, 2):
            p = Product(urls)
            p.start()

        for i in range(1, 2):
            c = Consumer()
            c.start()

Then wait a while and let the pictures come in at their own pace.
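As a possible variation on the global lists and locks used in this article, the standard library's queue.Queue does its own locking and lets the consumer stop cleanly. This is a sketch of that alternative, not the code the article runs: `producer`, `consumer`, the `STOP` sentinel, and the placeholder link names are all assumptions made for the illustration.

```python
import queue
import threading

link_queue = queue.Queue()   # thread-safe: no explicit lock needed
downloaded = []
STOP = object()              # sentinel telling the consumer to finish


def producer(page_numbers):
    for n in page_numbers:
        link_queue.put("picture-link-{}".format(n))  # placeholder links
    link_queue.put(STOP)     # signal that no more links will arrive


def consumer():
    while True:
        link = link_queue.get()   # blocks until an item is available
        if link is STOP:
            break
        downloaded.append(link)   # a real consumer would download here


p = threading.Thread(target=producer, args=(range(1, 6),))
c = threading.Thread(target=consumer)
p.start()
c.start()
p.join()
c.join()
```

Because `get()` blocks while the queue is empty, the consumer neither spins nor needs a sleep, and the sentinel gives it a clear point at which to exit.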