
1. Several IO operating modes

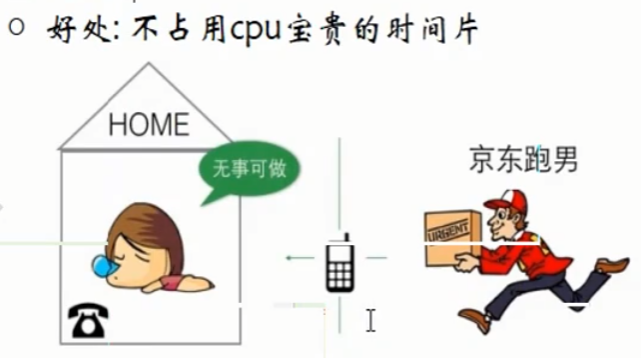

(1) Blocking wait

While blocked, the process uses no CPU time slices (it is in the blocked state). The server is woken up only when a connection request arrives.

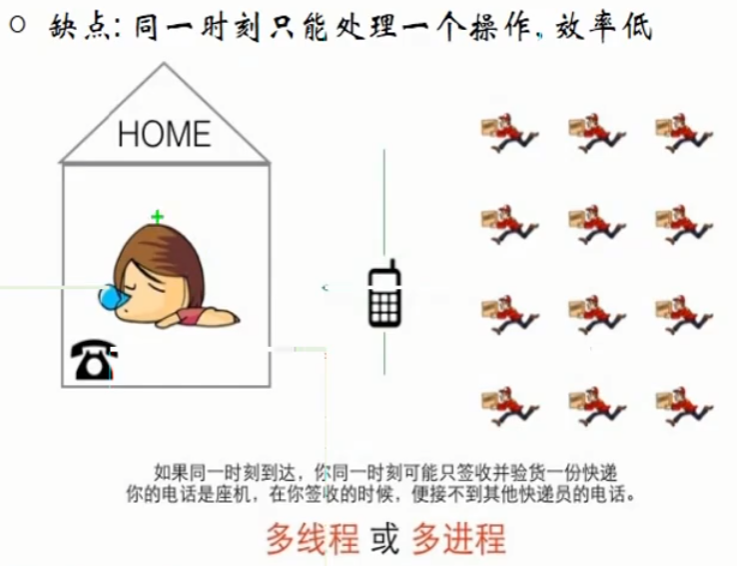

If there is only one connection request, this approach is acceptable. With multiple connection requests, only one can be processed at a time. The solution is for the main process or main thread to do nothing but block and wait; once a connection request arrives, a child process or a new thread is created to handle that connection.

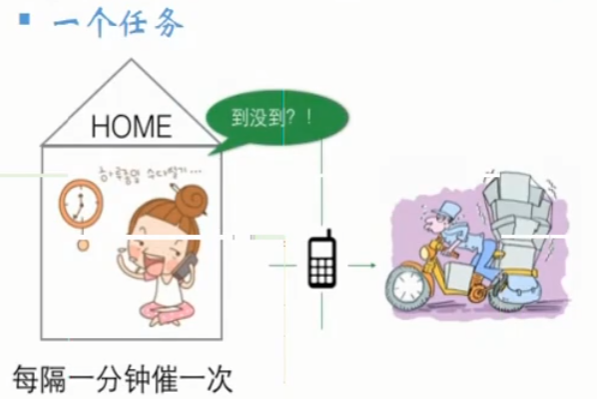

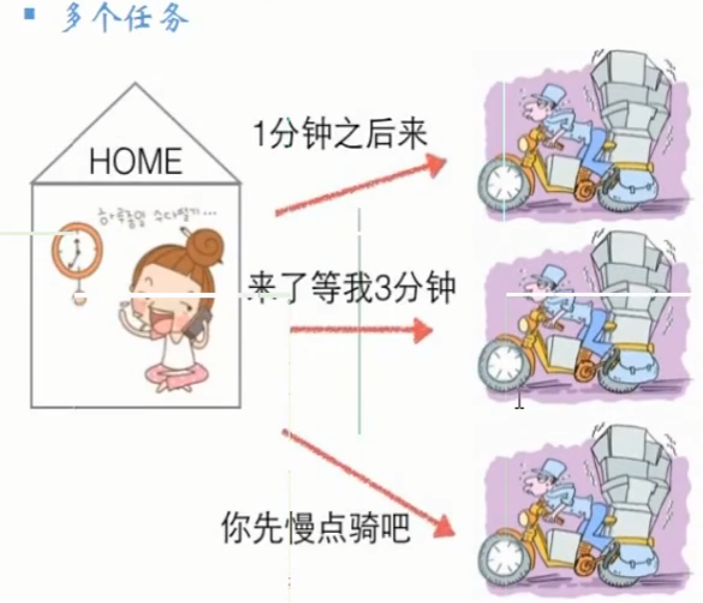

(2) Non-blocking, busy polling

The advantage is better execution efficiency: the program is not suspended while it waits, and, unlike the blocking approach with multiple processes or threads, no time is spent creating them.

The disadvantage is that it consumes more CPU and system resources.

Busy polling is also unsuitable for handling multiple connection requests, because it can only process one connection at a time.
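As a rough illustration of busy polling, the sketch below switches a descriptor to non-blocking mode and keeps calling read() until data arrives. The function name busy_read and the max_spins cap are assumptions made for this example; they are not part of any library.

```c
#include <errno.h>
#include <fcntl.h>
#include <unistd.h>

/*
 * Busy-poll fd: make it non-blocking, then call read() repeatedly until
 * data arrives or max_spins attempts have been used.
 * Returns bytes read, 0 on end-of-file, or -1 with errno set
 * (errno == EAGAIN means every attempt found the buffer empty).
 * *spins receives the number of read() calls made.
 */
ssize_t busy_read(int fd, void *buf, size_t len, long max_spins, long *spins)
{
    *spins = 0;
    int flags = fcntl(fd, F_GETFL);
    if (flags == -1 || fcntl(fd, F_SETFL, flags | O_NONBLOCK) == -1)
        return -1;

    while (*spins < max_spins) {
        ssize_t n = read(fd, buf, len);   /* returns at once, data or not */
        ++*spins;
        if (n >= 0)
            return n;
        if (errno != EAGAIN && errno != EWOULDBLOCK && errno != EINTR)
            return -1;                    /* a real error, stop polling */
    }
    errno = EAGAIN;
    return -1;
}
```

Every pass through the loop is a system call that usually finds nothing to read, which is where the extra CPU usage comes from.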

2 IO multiplexing: select/poll/epoll

IO multiplexing means delegating the detection work to the kernel, which reports back once it has a result.

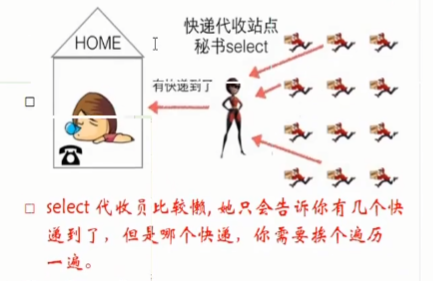

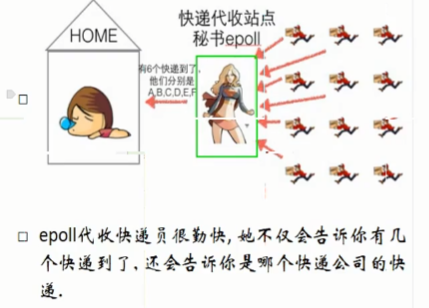

The first type: select/poll

The kernel is asked to detect how many connected clients need to communicate with the server process. In select/poll mode, the kernel tells the server process how many clients are ready, but not which ones, so the server has to traverse all of them to find out. This is a traversal of a linear table.

The second type: epoll

The kernel not only tells the server how many clients want to communicate, but also which ones. A red-black tree is used for this.

Basic practice of IO multiplexing

(1) First, build a table of file descriptors and add to it the descriptors we want the kernel to monitor.

(2) Call a function (select/poll/epoll) that keeps monitoring the descriptors in the table. The function does not return until IO is possible on at least one of them. (For reads, detecting IO means checking the descriptor's read buffer on the server: if there is data in the buffer, the peer wants to communicate. Each file descriptor has its own buffer.)

Note that this is a blocking function, and the detection itself is done by the kernel.

(3) When the function returns, it tells the process how many descriptors (and, with epoll, which ones) are ready for IO.

3 select function

select function definition

int select(int nfds,

fd_set *readfds,

fd_set *writefds,

fd_set *exceptfds,

struct timeval *timeout);

nfds: the largest file descriptor to be monitored, plus 1. Passing 1024 directly also works.

readfds: the read set. The kernel checks the read buffer of every file descriptor in this set to see whether it contains data.

writefds: the write set. NULL is usually passed, because writes are generally initiated by the program itself and do not need to be detected.

exceptfds: the exception set, for descriptors on which an exceptional condition may occur.

timeout:

NULL: block indefinitely and return only when a monitored fd changes state.

Non-NULL: a struct timeval that sets how long to block. If both seconds and microseconds are zero, select does not block.

struct timeval {

long tv_sec; // Seconds

long tv_usec; // Microseconds

};
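To make the three timeout cases concrete, here is a small sketch that waits for a single descriptor with select and then reads from it. The helper name read_with_timeout, its millisecond argument and the -2 return code for a timeout are assumptions of this example.

```c
#include <sys/select.h>
#include <sys/types.h>
#include <unistd.h>

/*
 * Wait for fd to become readable, then read from it.
 * timeout_ms < 0  : pass NULL to select and block indefinitely
 * timeout_ms == 0 : both timeval fields are zero, select does not block
 * timeout_ms > 0  : block for at most that many milliseconds
 * Returns bytes read (0 = end-of-file), -1 on error, -2 on timeout.
 */
ssize_t read_with_timeout(int fd, void *buf, size_t len, long timeout_ms)
{
    fd_set reads;
    struct timeval tv;
    struct timeval *tvp = NULL;

    if (fd < 0 || fd >= FD_SETSIZE)       /* an fd_set cannot hold this fd */
        return -1;
    if (timeout_ms >= 0) {
        tv.tv_sec = timeout_ms / 1000;
        tv.tv_usec = (timeout_ms % 1000) * 1000;
        tvp = &tv;
    }
    FD_ZERO(&reads);
    FD_SET(fd, &reads);

    int ready = select(fd + 1, &reads, NULL, NULL, tvp);
    if (ready < 0)
        return -1;
    if (ready == 0)
        return -2;                        /* nothing arrived in time */
    return read(fd, buf, len);
}
```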

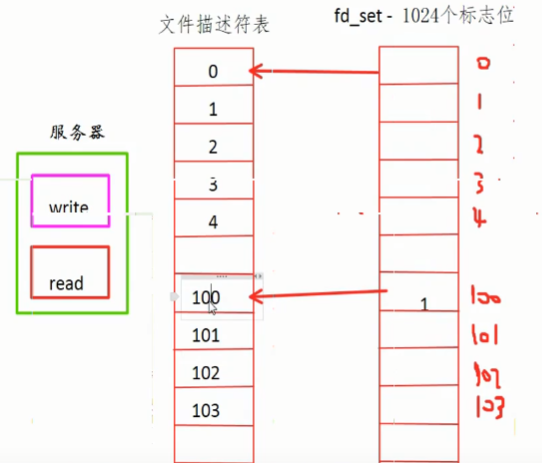

fd_set is a set of file descriptors. It corresponds to a table of 1024 flag bits, numbered 0 to 1023, which map onto the process's file descriptor table. Note that everything stored in an fd_set here is a file descriptor the server uses for communication. Internally fd_set is implemented as an array, and its size is limited to 1024.

fd_set operation functions

Clear the whole set

void FD_ZERO(fd_set *set);

Delete an item from the collection

void FD_CLR(int fd, fd_set *set);

Adds a file descriptor to the collection

void FD_SET(int fd, fd_set *set);

Determine whether a file descriptor is in the collection

int FD_ISSET(int fd, fd_set *set);
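A short sketch of how these macros are typically combined. The helpers fill_set and count_members are hypothetical names introduced only for this example.

```c
#include <sys/select.h>

/*
 * Clear set, then add each descriptor in fds[0..n-1].
 * Returns the largest descriptor added (useful for select's nfds),
 * or -1 if the list is empty or a descriptor does not fit in an fd_set.
 */
int fill_set(fd_set *set, const int *fds, int n)
{
    int maxfd = -1;
    FD_ZERO(set);
    for (int i = 0; i < n; ++i) {
        if (fds[i] < 0 || fds[i] >= FD_SETSIZE)
            return -1;
        FD_SET(fds[i], set);
        if (fds[i] > maxfd)
            maxfd = fds[i];
    }
    return maxfd;
}

/* Count how many descriptors in [0, maxfd] are members of set. */
int count_members(fd_set *set, int maxfd)
{
    int count = 0;
    for (int fd = 0; fd <= maxfd && fd < FD_SETSIZE; ++fd)
        if (FD_ISSET(fd, set))
            ++count;
    return count;
}
```

The range check against FD_SETSIZE matters: calling FD_SET with a descriptor outside the set's range is undefined behaviour.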

select workflow

Clients A, B, C, D, E and F are connected to the server, and the server communicates with them through file descriptors 3, 4, 100, 101, 102 and 103 respectively. Suppose that clients A, B and C send data while the kernel is monitoring.

The first step is to create a table of type fd_set.

fd_set reads;

The second step is to clear reads and add the file descriptors to be monitored to it.

FD_ZERO(&reads);

FD_SET(3, &reads);

FD_SET(4, &reads);

FD_SET(100, &reads);

......

Step 3: call the select function.

select(103+1, &reads, NULL, NULL, NULL);

The call blocks until a change in one of the buffers is detected, then returns.

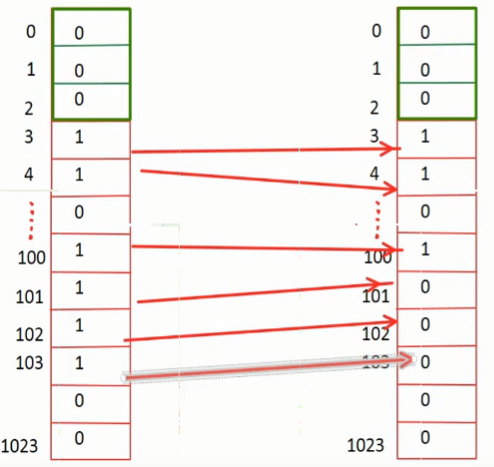

After select is called, the work is handed to the kernel. Our original table reads lives in user space, so the kernel first copies it into kernel space. The kernel then does two things: it takes the initial table, and it modifies the table and returns it. In the figure, the left side is the initial table as received by the kernel. The kernel checks which file descriptors have data in their buffers. In our example only the buffers of clients A, B and C contain data, so the bits for descriptors 3, 4 and 100 stay 1 while the other monitored bits are set to 0, as shown on the right, which is the table after the kernel has modified it. When select returns, the modified table is stored in reads, so reads is effectively an in-out parameter. This is why the original reads must be backed up before the call; otherwise it has been overwritten once select returns.

With the modified reads in hand, we use FD_ISSET to check each bit; every bit that is 1 identifies a client requesting communication.
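One round of this workflow might look like the sketch below. It assumes a master set maintained by the caller; the function name forward_ready and the out_fd sink are hypothetical. It also uses a zero timeout so that it never blocks, which differs from the NULL timeout in the example above.

```c
#include <sys/select.h>
#include <unistd.h>

/*
 * One round of the select workflow. master is the backup table: select()
 * only ever sees a copy, so master changes only when a peer disconnects.
 * Data read from each ready descriptor is forwarded to out_fd.
 * Returns the number of ready descriptors, 0 if none, -1 on error.
 */
int forward_ready(fd_set *master, int maxfd, int out_fd)
{
    fd_set temp = *master;                /* the kernel overwrites temp only */
    struct timeval tv = {0, 0};           /* zero timeout: check and return */
    int ready = select(maxfd + 1, &temp, NULL, NULL, &tv);
    if (ready <= 0)
        return ready;

    for (int fd = 0; fd <= maxfd; ++fd) {
        if (!FD_ISSET(fd, &temp))         /* bit cleared by the kernel */
            continue;
        char buf[256];
        ssize_t len = read(fd, buf, sizeof buf);
        if (len <= 0) {                   /* 0: peer closed, <0: error */
            FD_CLR(fd, master);
            close(fd);
        } else if (write(out_fd, buf, (size_t)len) < 0) {
            return -1;
        }
    }
    return ready;
}
```

Because temp is a fresh copy on every call, the descriptors that were not ready this round are still present in master for the next one.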

select multiplex code

Pseudo code

int main(){

int lfd = socket(); //Create a socket. lfd is the file descriptor for listening

bind(); // Bind port and IP

listen(); // Start listening

// Create the fd_set tables, which act like a file descriptor table. Two are defined because one of them serves as a backup

fd_set reads, temp;

// Initialize the table

FD_ZERO(&reads);

// Add the listening descriptor lfd to the table. When a client requests a connection, the request arrives at the listening socket and makes lfd readable, so select can be used to tell whether a client is asking to connect.

FD_SET(lfd, &reads);

int maxfd = lfd;

// Calling select once is definitely not enough. You need to call select circularly

while(1){

// Delegate kernel detection

temp = reads; // reads is used for backup, and temp is used as the incoming and outgoing parameters

// When select returns, the value of temp has changed

int ret = select(maxfd + 1, &temp, NULL, NULL, NULL);

// According to the returned result, first judge whether a new connection has arrived, that is, whether there is data in the buffer corresponding to the lfd listening descriptor

if(FD_ISSET(lfd, &temp)){

// If new data is detected in the listening descriptor buffer, it indicates that there is a new connection and accepts the new connection

int cfd = accept(); // At this time, accept will not block because the kernel has detected a new connection

// cfd corresponds to the communication descriptor of a new client and adds it to reads to detect whether the client sends data

FD_SET(cfd, &reads);

// After adding a new file descriptor to the read set, the maximum descriptor may change. Update maxfd

maxfd = maxfd < cfd ? cfd : maxfd;

}

// Detect whether the client sends data

for(int i = lfd + 1; i <= maxfd; ++i){

// Traverse the modified temp returned by the select to see which client has data

if(FD_ISSET(i, &temp)){

int len = read();

if(len == 0){ // read() returning 0 means end-of-file: the client has closed its end of the connection

FD_CLR(i, &reads); // Stop monitoring the descriptor of the disconnected client

close(i);

continue; // Nothing left to write to on this descriptor

}

write(); // Communication, write data to the client

}

}

}

}

select advantages and disadvantages

Advantages: cross-platform.

Disadvantages:

Every call to select copies the fd_set from user space to kernel space. With many fds this cost is significant; frequent switching between user space and kernel space is time-consuming, and the copy cost cannot be ignored.

Every call to select makes the kernel traverse all the fds passed in. This linear traversal is also expensive when there are many fds.

The number of file descriptors select supports is too small. The default is 1024, because fd_set is implemented as a fixed-size array.

4 poll function

poll function definition

int poll(struct pollfd *fds, nfds_t nfds, int timeout);

fds: pointer to an array of pollfd. In practice the array name is passed, which decays to a pointer.

nfds: the index of the last used element of the array plus 1, i.e. the number of elements in use. The kernel polls every file descriptor in the fds array.

timeout:

-1: block indefinitely

0: return immediately after checking

>0: how long to wait, in milliseconds

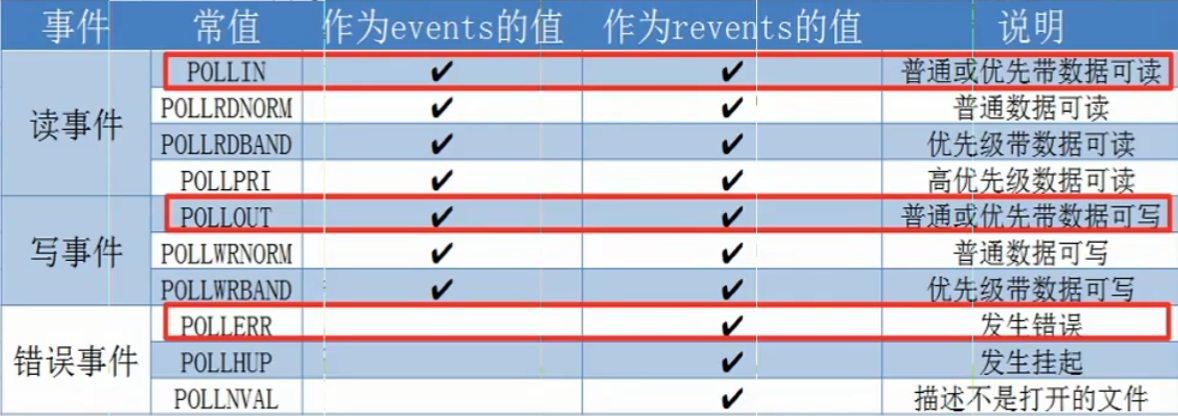

Return value: the number of file descriptors on which IO events occurred.

The pollfd structure is defined as:

struct pollfd {

int fd; // File descriptor

short events; // Events to wait for

short revents; // Events that actually occurred, filled in by the kernel (similar to an in-out parameter)

};

The pollfd structure in effect merges the three sets (read, write and exception) used by select. Each pollfd object holds one fd, and its events member selects whether that fd is monitored for reading, writing or exceptions. All three can be requested together by combining the options with |.
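The sketch below shows one way to use an array of pollfd: poll once, then inspect revents on each entry. The function name poll_and_read is hypothetical, and setting fd to -1 to retire an entry relies on poll ignoring negative descriptors.

```c
#include <poll.h>
#include <unistd.h>

/*
 * Poll n descriptors once and read from every entry that reports POLLIN.
 * An entry that reports only a hangup or error gets fd = -1, which poll()
 * ignores on later calls.
 * Returns the total number of bytes read, or -1 if poll() itself fails.
 */
long poll_and_read(struct pollfd *pfds, nfds_t n, int timeout_ms)
{
    if (poll(pfds, n, timeout_ms) < 0)
        return -1;

    long total = 0;
    for (nfds_t i = 0; i < n; ++i) {
        char buf[256];
        if (pfds[i].revents & POLLIN) {   /* kernel says: data to read */
            ssize_t len = read(pfds[i].fd, buf, sizeof buf);
            if (len > 0)
                total += len;
        } else if (pfds[i].revents & (POLLHUP | POLLERR | POLLNVAL)) {
            pfds[i].fd = -1;              /* stop watching this entry */
        }
    }
    return total;
}
```

Unlike select, the events member is left untouched by the kernel, so the same array can be passed again without rebuilding it.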

Advantages and disadvantages of poll

Advantages:

It is said that poll stores file descriptors internally in a linked list, so it does not have select's limit on the maximum number of connections. The pollfd structure itself has no pointer members, though: what the caller passes is a plain array whose length is given by nfds, so any linked list would be internal to the kernel.

Disadvantages:

The whole fd array is copied between user space and the kernel address space on each call, which is expensive when there are many fds.

Traversing the fds is also expensive.

poll is level-triggered ("horizontal trigger"): if an fd is reported and not handled, it is reported again on the next poll.

Level trigger & edge trigger

Level trigger (also translated as "horizontal trigger"): when a descriptor is detected as ready and the application is notified, the application does not have to handle the event immediately. The event is reported again on the next call.

Edge trigger: when a descriptor is detected as ready and the application is notified, the application must handle the event immediately. If it does not, the event is not reported again on the next call. That is, an edge trigger notifies only once, when the state changes from not ready to ready.

5 epoll function

There are three main functions.

epoll_create function

Creates the underlying red-black tree.

int epoll_create(int size);

size: the planned maximum number of file descriptors to monitor. In practice it does not matter if the value is too small, because the structure grows automatically (on current Linux the value is ignored, but it must be greater than zero).

Return value: a file descriptor.

This function creates a special file descriptor for epoll. Because epoll organizes the monitored file descriptors internally as a red-black tree, calling epoll_create amounts to constructing the root of that tree. In my view, calling epoll_create is equivalent to creating a red-black tree into which nodes can later be inserted and deleted.

epoll_ctl function

Inserts a node to be monitored into the red-black tree, modifies it, or deletes it from the tree.

epoll_ctl controls the events of an epoll file descriptor: it can register, modify and delete them.

int epoll_ctl(int epfd, int op, int fd, struct epoll_event *event);

epfd: the return value of epoll_create

op: one of three macros that control registering, modifying and deleting nodes

EPOLL_CTL_ADD --- register

EPOLL_CTL_MOD --- modify

EPOLL_CTL_DEL --- delete

fd: the file descriptor to insert into, delete from or modify in the red-black tree

event: the actual node stored in epoll's red-black tree is an object of type struct epoll_event

The epoll_event structure is defined as follows:

struct epoll_event{

uint32_t events;

epoll_data_t data;

}

Members of the structure:

events: the events to monitor on the file descriptor. The following macros can be combined with | to monitor several events at once

EPOLLIN --- readable

EPOLLOUT --- writable

EPOLLERR --- an error occurred

EPOLLPRI --- urgent data is available to read on the file descriptor

EPOLLHUP --- the file descriptor was hung up

EPOLLET --- put this descriptor into edge-triggered mode

EPOLLONESHOT --- report only one event. After that event has been reported, the descriptor must be re-armed with epoll_ctl (EPOLL_CTL_MOD) before it is monitored again.

data: a union of type epoll_data_t

typedef union epoll_data{

void *ptr; // If you want to describe more information about a node, use this member

int fd; // This member is usually used

uint32_t u32;

uint64_t u64;

} epoll_data_t;

Every call to epoll_ctl copies the event parameter into the red-black tree as a node. When a change on the file descriptor is detected, the node is copied into the events array passed to epoll_wait, which is how the caller learns which file descriptors have changed.

epoll_wait function

It is the counterpart of select or poll and is used to detect IO events. The epoll_create and epoll_ctl calls above are preparation for this step.

int epoll_wait(

int epfd,

struct epoll_event *events,

int maxevents,

int timeout

);

epfd: the return value of epoll_create, i.e. the root of the red-black tree

events: an array of struct epoll_event. When IO events are detected on some file descriptors, the epoll_event nodes of those descriptors are copied into this array, so the array is filled in by the kernel. It is through this array that epoll reports which file descriptors have changed.

maxevents: the size of the events array, used to prevent overflow when elements are copied into it.

timeout:

-1: block indefinitely

0: return immediately

>0: return after this many milliseconds if no event occurs

Return value: the number of file descriptors whose state has changed

Three working modes of epoll

Level trigger mode LT (default)

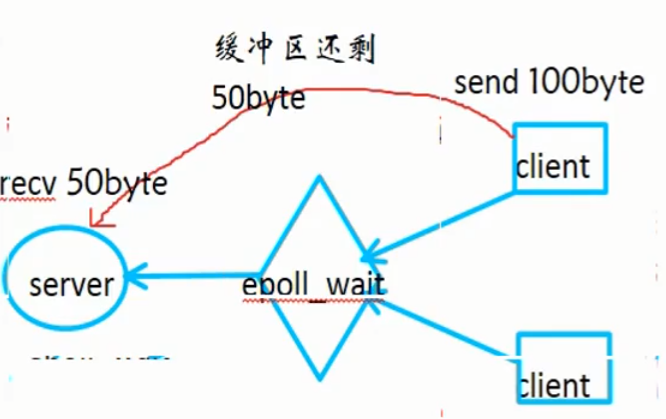

In the figure, the client sends 100 bytes each time, while the server reads only 50 bytes at a time. When the client sends data, epoll_wait detects data in the read buffer and returns, and the server reads 50 bytes. On the next loop iteration, epoll_wait detects that the read buffer of the same file descriptor still contains data (the remaining 50 bytes), so it returns again and the server reads the rest. This is level trigger mode.

Features of level trigger mode:

(1) As long as the buffer of the fd contains data, epoll_wait returns.

(2) The number of returns is not tied to the number of sends. One send may cause several epoll_wait returns.

Edge trigger mode ET

The client sends data to the server:

Each time the client sends, epoll_wait on the server returns once; the number of returns is determined strictly by the number of sends.

What if the client sends more data at once than the server reads in one go? The remaining data stays in the buffer. When the client sends again, that leftover data is read first. In other words, although the client has sent new data, the server may only be reading what was left in the buffer from last time.

So the client sends once and epoll_wait on the server returns once. If the client sends too much each time, can some data end up never being read? Yes, but edge trigger mode does not care whether the data has been read. It exists to improve efficiency: every epoll_wait call has a cost, and edge trigger mode reduces the number of epoll_wait calls.
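The difference between the two modes can be observed with a small Linux-only experiment. In this sketch a pipe stands in for the client connection, 100 bytes are written once, and the reader takes only 50 bytes per wakeup. The function name count_wakeups is an assumption of this example.

```c
#include <stdint.h>
#include <string.h>
#include <sys/epoll.h>
#include <unistd.h>

/*
 * Write 100 bytes into a pipe, then call epoll_wait() repeatedly and read
 * 50 bytes per wakeup. Pass 0 for level trigger or EPOLLET for edge trigger.
 * Returns how many times epoll_wait() reported the descriptor, or -1 if
 * the setup failed.
 */
int count_wakeups(uint32_t extra_flags)
{
    int p[2];
    if (pipe(p) == -1)
        return -1;
    int epfd = epoll_create(1);
    if (epfd == -1) {
        close(p[0]);
        close(p[1]);
        return -1;
    }

    struct epoll_event ev = {0};
    ev.events = EPOLLIN | extra_flags;
    ev.data.fd = p[0];

    char data[100];
    memset(data, 'x', sizeof data);

    int wakeups = -1;
    if (epoll_ctl(epfd, EPOLL_CTL_ADD, p[0], &ev) == 0 &&
        write(p[1], data, sizeof data) == (ssize_t)sizeof data) {
        struct epoll_event out;
        char buf[50];
        wakeups = 0;
        /* timeout 0: stop as soon as epoll has nothing more to report */
        while (epoll_wait(epfd, &out, 1, 0) == 1) {
            ++wakeups;
            if (read(out.data.fd, buf, sizeof buf) <= 0)
                break;
        }
    }
    close(epfd);
    close(p[0]);
    close(p[1]);
    return wakeups;
}
```

With level trigger the descriptor is reported twice, once for each 50-byte read. With EPOLLET it is reported once, and the second 50 bytes stay in the buffer until another write arrives.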

Edge non-blocking trigger

In the edge trigger mode above, the file descriptor is blocking by default.

In that mode, the data could not all be read because the server read too little per wakeup. Could a loop such as while(recv()) read everything? Not with a blocking descriptor: once all the data has been read, the next recv() blocks, so the program cannot go on, and in particular cannot call epoll_wait again to let the kernel monitor the descriptors.

Therefore a third mode is needed, edge non-blocking trigger, in which the file descriptor fd is non-blocking.

Edge non-blocking trigger is the most efficient.

There are two ways to make the file descriptor non-blocking in edge trigger mode.

(1) Through the open() function

Set the flags parameter of open() to O_RDWR | O_NONBLOCK

(2) Through fcntl

Step 1: obtain the current flag: int flag = fcntl(fd, F_GETFL);

Step 2: add non blocking attribute to flag: flag |= O_NONBLOCK;

Step 3: set the flag back: fcntl(fd, F_SETFL,flag);
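Put together, the three fcntl steps and the read loop that edge non-blocking trigger relies on might look like this. The names set_nonblocking and drain, and the 50-byte buffer, are assumptions of this sketch.

```c
#include <errno.h>
#include <fcntl.h>
#include <unistd.h>

/* The three fcntl steps: get the flags, add O_NONBLOCK, set them back. */
int set_nonblocking(int fd)
{
    int flag = fcntl(fd, F_GETFL);
    if (flag == -1)
        return -1;
    flag |= O_NONBLOCK;
    return fcntl(fd, F_SETFL, flag);
}

/*
 * Read from a non-blocking fd until its buffer is empty.
 * Returns the total number of bytes read, or -1 on a real error.
 * *closed is set to 1 if the peer closed the connection, else 0.
 */
long drain(int fd, int *closed)
{
    char buf[50];
    long total = 0;
    *closed = 0;
    for (;;) {
        ssize_t n = read(fd, buf, sizeof buf);
        if (n > 0) {
            total += n;                   /* keep going, more may follow */
        } else if (n == 0) {
            *closed = 1;                  /* end-of-file */
            return total;
        } else if (errno == EAGAIN || errno == EWOULDBLOCK) {
            return total;                 /* buffer empty, back to epoll_wait */
        } else if (errno != EINTR) {
            return -1;
        }
    }
}
```

The EAGAIN branch is what replaces the blocked recv(): instead of hanging on an empty buffer, the loop ends and the program can call epoll_wait again.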

6 file descriptor exceeds 1024 limit

select: the 1024 limit cannot be exceeded because it is implemented with an array. If you want to modify it, you need to change the size of the array and recompile the kernel.

poll: it can break through 1024 because the internal linked list is implemented.

epoll: it can break through 1024 because the internal red black tree is implemented.

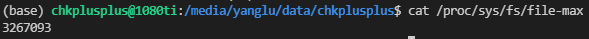

The maximum number of file descriptors that can be supported is also related to the specific machine. You can view a configuration file:

In any case, the upper limit value of the configuration modification file descriptor cannot exceed this number.
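Separately from the machine-wide figure, each process has its own limit on open descriptors, which can be queried from code. The sketch below uses getrlimit with RLIMIT_NOFILE; this per-process limit is an addition for illustration and is not the configuration file the text refers to. The helper name fd_limits is hypothetical.

```c
#include <sys/resource.h>

/*
 * Fetch the per-process limits on open file descriptors.
 * soft: the limit currently enforced; hard: the ceiling the soft limit
 * can be raised to without extra privileges.
 * Returns 0 on success, -1 on error.
 */
int fd_limits(rlim_t *soft, rlim_t *hard)
{
    struct rlimit rl;
    if (getrlimit(RLIMIT_NOFILE, &rl) == -1)
        return -1;
    *soft = rl.rlim_cur;
    *hard = rl.rlim_max;
    return 0;
}
```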