Usage rule summary

Multiple local asynchronous tasks: use a queue group (dispatch_group_async) or dispatch_group_enter / dispatch_group_leave.

Multiple asynchronous network tasks: use dispatch_group_enter / dispatch_group_leave.

Multiple asynchronous network tasks that depend on each other and must run in order: use a semaphore.

Thread-safety lock: use a semaphore.

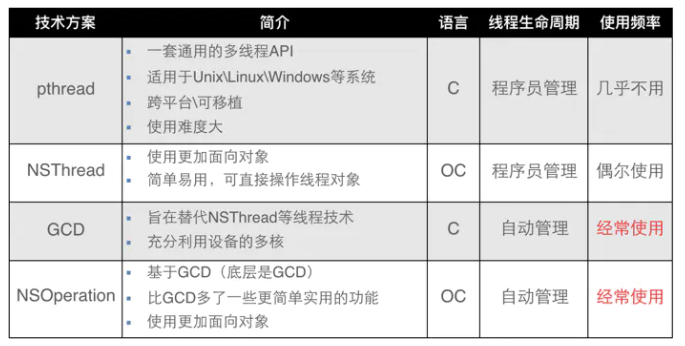

Types of multithreading

Reference: https://www.jianshu.com/p/f536f9a17d90

Multithreading moves network requests and other time-consuming tasks onto background threads so that the main thread handles only the UI, which keeps the interface responsive.

Thread synchronization: when several threads share data, access to that data must be protected, for example with a mutex. Locking the data forces the asynchronous background threads to access it one at a time, which in effect makes them synchronous at that point.

Use of mutex in iOS development

When declaring a property, Objective-C offers two atomicity options: atomic and nonatomic.

atomic: the default. The synthesized getter and setter are synchronized, so a single read or write always sees a complete value. This does not make the object thread safe as a whole: a sequence of operations (read, modify, write back) or mutation of the object the property points to can still race. For that reason, and to improve performance, nonatomic is generally used.

nonatomic: the synthesized accessors perform no synchronization.

Comparison between nonatomic and atomic

atomic: individual accessor calls are safe, at the cost of extra overhead on every access.

nonatomic: not thread safe, but faster, which suits resource-constrained mobile devices.

Recommendations:

Declare properties as nonatomic in most cases. Try to avoid having multiple threads compete for the same resource, and where possible leave locking and resource-contention logic to the server to reduce the load on the mobile client.
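The limits of atomic can be illustrated with a small sketch. The Counter class and its property name are hypothetical, chosen only for this illustration; the point is that each accessor call is atomic while the read-modify-write sequence is not.

```objc
#import <Foundation/Foundation.h>

// Hypothetical class, for illustration only.
@interface Counter : NSObject
@property (atomic, assign) NSInteger atomicCount; // accessors are synchronized
@end

@implementation Counter
@end

int main(void) {
    @autoreleasepool {
        Counter *counter = [Counter new];
        dispatch_group_t group = dispatch_group_create();
        dispatch_queue_t queue = dispatch_get_global_queue(DISPATCH_QUEUE_PRIORITY_DEFAULT, 0);
        for (int i = 0; i < 10000; i++) {
            dispatch_group_async(group, queue, ^{
                // Getter and setter are each atomic, but another thread can
                // run between them, so increments can be lost.
                counter.atomicCount = counter.atomicCount + 1;
            });
        }
        dispatch_group_wait(group, DISPATCH_TIME_FOREVER);
        // The result may be lower than 10000.
        NSLog(@"atomicCount = %ld", (long)counter.atomicCount);
    }
    return 0;
}
```

If the count must be exact, the whole increment needs to be protected by a lock or confined to a serial queue; changing the property attribute alone is not enough.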

Thread overview

Some programs run in a straight line from start to finish; others run in a loop until they are interrupted.

A running program is a process (or task). A process contains at least one thread, and a thread is an execution flow of the program. When a Mac or iOS program starts, a process is created and one thread begins running; this is the main thread. The main thread has a special status: it is the ultimate parent of the other threads, and all interface work, that is, AppKit or UIKit operations, must be performed on it.

Each process in the system has its own independent virtual memory space, while the threads within a process share that process's memory. Every new thread requires some memory (for example, each thread has its own stack) and consumes some CPU time. In addition, when multiple threads compete for the same resource, thread safety must be taken into account.

How multithreading works: a single CPU core can execute only one thread at any instant, but it switches between threads so quickly that they appear to run concurrently. On multi-core devices, threads can also run truly in parallel on different cores.

Use steps of GCD

Using GCD takes only two steps.

Create a queue (serial or concurrent).

Add the task to the queue; the system then executes it according to how it was submitted (synchronously or asynchronously).

Tasks and queues

Task: an operation to perform, in other words the code you execute on a thread. In GCD, a task is placed in a block. A task can be executed in two ways: synchronously (sync) or asynchronously (async). The two differ in whether the caller waits for the task to finish and whether a new thread may be started.

dispatch_async: asynchronous function. It appends the specified block to the specified queue and returns immediately without waiting for the block to run.

dispatch_sync: synchronous function. It does not return until the block it added to the queue has finished, so it blocks the current thread. This is why calling dispatch_sync on the main queue from the main thread deadlocks: the block cannot start until the task currently running on the queue finishes, but that task is the one blocked inside dispatch_sync waiting for the block. The same happens whenever dispatch_sync targets the serial queue that the calling code is already running on.

Code interpretation

-(void)test{

dispatch_queue_t que = dispatch_queue_create("que", DISPATCH_QUEUE_SERIAL);

dispatch_sync(que, ^{

NSLog(@"No blocking");

dispatch_sync(que, ^{

NSLog(@"Never printed: the outer block waits for this block, which cannot start until the outer block finishes (deadlock)");

});

});

}
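One way to avoid this deadlock, sketched here under the assumption that the inner work does not have to finish before the outer block returns, is to submit the inner block with dispatch_async. The queue label and log messages are illustrative.

```objc
#import <Foundation/Foundation.h>

int main(void) {
    @autoreleasepool {
        dispatch_queue_t que = dispatch_queue_create("que", DISPATCH_QUEUE_SERIAL);

        dispatch_sync(que, ^{
            NSLog(@"outer block running");
            // dispatch_async returns immediately, so the outer block can finish
            // and the serial queue is then free to run the inner block.
            dispatch_async(que, ^{
                NSLog(@"inner block running");
            });
        });

        // An empty sync block is queued behind the inner block (FIFO), so this
        // call returns only after the inner block has run.
        dispatch_sync(que, ^{});
    }
    return 0;
}
```

The second dispatch_sync is safe because it is called from outside the queue: the calling thread is not itself a task running on que.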

Synchronous execution (sync):

Adds the task to the specified queue and waits until it has finished before continuing.

The task runs on the current thread; no new thread is started.

Asynchronous execution (async):

Adds the task to the specified queue and continues immediately, without waiting for it to finish.

The task can run on a new thread; asynchronous execution has the ability to start new threads.

Queue

A dispatch queue is a waiting queue of tasks to process. Tasks (blocks) are started in first-in, first-out order, that is, the order in which they were added to the queue.

There are two types of dispatch queue:

Serial Dispatch Queue: starts the next task only after the currently executing task has finished.

Concurrent Dispatch Queue: starts the next task without waiting for the currently executing task to finish.

Creation method / acquisition method of queue

Use dispatch_queue_create to create a queue. It takes two parameters. The first is a label that identifies the queue; it is used for debugging and may be NULL. A reverse-DNS style name, such as the application's bundle ID, is recommended. The second parameter specifies whether the queue is serial or concurrent: DISPATCH_QUEUE_SERIAL creates a serial queue and DISPATCH_QUEUE_CONCURRENT creates a concurrent queue.

// Method for creating serial queue

dispatch_queue_t queue = dispatch_queue_create("net.bujige.testQueue", DISPATCH_QUEUE_SERIAL);

// Method for creating concurrent queue

dispatch_queue_t queue = dispatch_queue_create("net.bujige.testQueue", DISPATCH_QUEUE_CONCURRENT);

Serial queue

To append a task to a serial queue:

- (void)serialQueue

{

dispatch_queue_t queue = dispatch_queue_create("serial queue", NULL);

for (NSInteger index = 0; index < 6; index ++) {

dispatch_async(queue, ^{

NSLog(@"task index %ld in serial queue", (long)index);

});

}

}

Code output:

gcd_demo[33484:2481120] task index 0 in serial queue

gcd_demo[33484:2481120] task index 1 in serial queue

gcd_demo[33484:2481120] task index 2 in serial queue

gcd_demo[33484:2481120] task index 3 in serial queue

gcd_demo[33484:2481120] task index 4 in serial queue

gcd_demo[33484:2481120] task index 5 in serial queue

The dispatch_queue_create function creates a queue. The first parameter is the queue's label; when the second parameter is NULL or DISPATCH_QUEUE_SERIAL, the returned queue is a serial queue.

To avoid duplicating code, a for loop appends the tasks to the queue.

Note that the tasks are executed in order: each task must wait for the previous one to finish before it can start. In other words, a Serial Dispatch Queue executes only one task (block) at a time, and here the system uses a single thread to service the queue, as the identical thread ID in the output shows.

However, if we add six tasks to six separate serial dispatch queues, the system can process them at the same time, because the queues are independent of one another and each can be serviced by a different thread:

- (void)multiSerialQueue

{

for (NSInteger index = 0; index < 6; index ++) {

//Create a new serial queue

dispatch_queue_t queue = dispatch_queue_create("different serial queue", NULL);

dispatch_async(queue, ^{

NSLog(@"serial queue index : %ld", (long)index);

});

}

}

Code output result:

gcd_demo[33576:2485282] serial queue index : 1

gcd_demo[33576:2485264] serial queue index : 0

gcd_demo[33576:2485267] serial queue index : 2

gcd_demo[33576:2485265] serial queue index : 3

gcd_demo[33576:2485291] serial queue index : 4

gcd_demo[33576:2485265] serial queue index : 5

The output shows that the six tasks are not executed in order and that they run on several different threads.

Note that every additional serial queue may cause the system to bring up another thread. Create only the serial queues you really need, to avoid wasting resources.

Concurrent queue

To append a task to a concurrent queue:

- (void)concurrentQueue

{

dispatch_queue_t queue = dispatch_queue_create("concurrent queue", DISPATCH_QUEUE_CONCURRENT);

for (NSInteger index = 0; index < 6; index ++) {

dispatch_async(queue, ^{

NSLog(@"task index %ld in concurrent queue", (long)index);

});

}

}

Code output result:

gcd_demo[33550:2484160] task index 1 in concurrent queue

gcd_demo[33550:2484159] task index 0 in concurrent queue

gcd_demo[33550:2484162] task index 2 in concurrent queue

gcd_demo[33550:2484182] task index 3 in concurrent queue

gcd_demo[33550:2484183] task index 4 in concurrent queue

gcd_demo[33550:2484160] task index 5 in concurrent queue

As you can see, the second parameter passed to dispatch_queue_create is DISPATCH_QUEUE_CONCURRENT.

Note that the six tasks added to the concurrent queue do not complete in order, which matches the definition of a concurrent queue above.

Extended knowledge: iOS and macOS decide how many tasks in a Concurrent Dispatch Queue run at once based on the current system state, such as the number of tasks in the queue, the number of CPU cores, and the CPU load.

System provided queue

For serial queues, GCD provides a special serial queue: Main Dispatch Queue.

All tasks placed in the main queue will be executed in the main thread.

You can get the main queue with dispatch_get_main_queue().

//Acquisition method of main queue

dispatch_queue_t queue = dispatch_get_main_queue();

For concurrent queues, GCD provides Global Dispatch Queue by default.

You can get it with dispatch_get_global_queue, which takes two parameters. The first is the priority of the queue, usually DISPATCH_QUEUE_PRIORITY_DEFAULT. The second is reserved for future use; pass 0.

//Method for obtaining global concurrent queue

dispatch_queue_t queue = dispatch_get_global_queue(DISPATCH_QUEUE_PRIORITY_DEFAULT, 0);

Task creation method

GCD provides dispatch_sync for creating synchronously executed tasks and dispatch_async for creating asynchronously executed tasks.

// Synchronous execution task creation method

dispatch_sync(queue, ^{

// Here is the code of synchronous execution task

});

// Asynchronous execution task creation method

dispatch_async(queue, ^{

// Here is the code for asynchronous execution of tasks

});

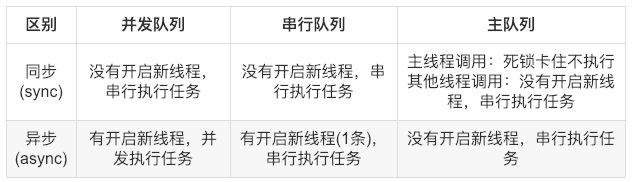

Combination of tasks and queues

1. Synchronous execution + concurrent queue: tasks run on the current thread and no new thread is started. Each task runs only after the previous one has finished.

2. Asynchronous execution + concurrent queue: multiple threads can be started and tasks can run at the same time.

3. Synchronous execution + serial queue: no new thread is started and tasks run on the current thread. Tasks are serial: each runs only after the previous one has finished.

4. Asynchronous execution + serial queue: a new thread can be started, but because the queue is serial, tasks still run one at a time, each after the previous one has finished. Multiple asynchronous tasks on the same serial queue do not run in parallel.

5. Synchronous execution + main queue:

5.1: Called from the main thread: the caller and the task wait for each other, so this deadlocks.

5.2: Called from another thread: no new thread is started; tasks run on the main thread, each after the previous one has finished.

6. Asynchronous execution + main queue: tasks run only on the main thread, each after the previous one has finished.
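The difference between combinations 1 and 2 can be observed with a short command-line sketch. The queue label and messages are illustrative, and the dispatch group is used only so the program waits for the asynchronous blocks before exiting.

```objc
#import <Foundation/Foundation.h>

int main(void) {
    @autoreleasepool {
        dispatch_queue_t queue = dispatch_queue_create("demo.concurrent", DISPATCH_QUEUE_CONCURRENT);

        // Combination 1: sync + concurrent queue.
        // Each call blocks until its block has finished, so the blocks run
        // one after another (typically on the calling thread).
        for (int i = 0; i < 3; i++) {
            dispatch_sync(queue, ^{
                NSLog(@"sync %d on %@", i, [NSThread currentThread]);
            });
        }

        // Combination 2: async + concurrent queue.
        // The calls return immediately; the blocks may run on several threads
        // and finish in any order.
        dispatch_group_t group = dispatch_group_create();
        for (int i = 0; i < 3; i++) {
            dispatch_group_async(group, queue, ^{
                NSLog(@"async %d on %@", i, [NSThread currentThread]);
            });
        }
        dispatch_group_wait(group, DISPATCH_TIME_FOREVER);
    }
    return 0;
}
```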

Communication between GCD threads

In iOS development, the main thread handles the UI, including events such as taps, scrolling, and dragging. Time-consuming operations such as image downloads and file uploads are usually placed on other threads. When such an operation finishes and we need to return to the main thread to refresh the UI, communication between threads is required.

For example, load an image on a background queue and refresh the UI on the main thread once it has loaded:

//Get the global concurrent queue for time-consuming operations

dispatch_async(dispatch_get_global_queue(DISPATCH_QUEUE_PRIORITY_DEFAULT, 0), ^{

//Load picture

NSData *dataFromURL = [NSData dataWithContentsOfURL:imageURL];

UIImage *imageFromData = [UIImage imageWithData:dataFromURL];

dispatch_async(dispatch_get_main_queue(), ^{

//Get the main queue and update UIImageView after the picture is loaded

UIImageView *imageView = [[UIImageView alloc] initWithImage:imageFromData];

});

});

Other methods of GCD

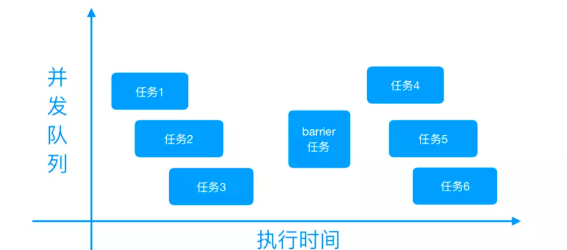

GCD fence method: dispatch_barrier_async

On avoiding data races: reads can run concurrently, but a write must not run concurrently with other reads or writes, as with a database.

Sometimes we need to execute two groups of operations asynchronously, where the second group may start only after the first group has finished. This calls for something like a fence separating the two groups; each group can contain one or more tasks. The dispatch_barrier_async function forms that fence between the two groups.

dispatch_barrier_async waits until all tasks previously added to the concurrent queue have finished, and then executes the barrier task on its own. After the barrier task finishes, the queue returns to normal concurrent behavior and the tasks added after the barrier begin executing. The global concurrent queue cannot be used. See the following figure for details:

One point to note, also mentioned in the official documentation: if a barrier block is submitted to a global queue with dispatch_barrier_async, it behaves the same as a block submitted with dispatch_async(). A barrier block behaves as expected only when it is submitted to a concurrent queue created with the DISPATCH_QUEUE_CONCURRENT attribute.

/**

* Fence method dispatch_barrier_async

*/

- (void)barrier {

dispatch_queue_t queue = dispatch_queue_create("net.bujige.testQueue", DISPATCH_QUEUE_CONCURRENT);

dispatch_async(queue, ^{

// Add task 1

for (int i = 0; i < 2; ++i) {

[NSThread sleepForTimeInterval:2]; // Simulate time-consuming operations

NSLog(@"1---%@",[NSThread currentThread]); // Print current thread

}

});

dispatch_async(queue, ^{

// Additional task 2

for (int i = 0; i < 2; ++i) {

[NSThread sleepForTimeInterval:2]; // Simulate time-consuming operations

NSLog(@"2---%@",[NSThread currentThread]); // Print current thread

}

});

dispatch_barrier_async(queue, ^{

// Add task barrier

for (int i = 0; i < 2; ++i) {

[NSThread sleepForTimeInterval:2]; // Simulate time-consuming operations

NSLog(@"barrier---%@",[NSThread currentThread]);// Print current thread

}

});

dispatch_async(queue, ^{

// Additional task 3

for (int i = 0; i < 2; ++i) {

[NSThread sleepForTimeInterval:2]; // Simulate time-consuming operations

NSLog(@"3---%@",[NSThread currentThread]); // Print current thread

}

});

dispatch_async(queue, ^{

// Additional task 4

for (int i = 0; i < 2; ++i) {

[NSThread sleepForTimeInterval:2]; // Simulate time-consuming operations

NSLog(@"4---%@",[NSThread currentThread]); // Print current thread

}

});

}
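The "concurrent reads, exclusive writes" idea mentioned above is often implemented with a barrier. The following is a minimal sketch of that pattern; the SafeCache class, its method names, and the queue label are hypothetical and not taken from the original article.

```objc
#import <Foundation/Foundation.h>

// Hypothetical cache class, for illustration only.
@interface SafeCache : NSObject
- (id)objectForKey:(NSString *)key;
- (void)setObject:(id)object forKey:(NSString *)key;
@end

@implementation SafeCache {
    NSMutableDictionary *_storage;
    dispatch_queue_t _queue;
}

- (instancetype)init {
    if ((self = [super init])) {
        _storage = [NSMutableDictionary dictionary];
        // Barriers only take effect on a queue created with DISPATCH_QUEUE_CONCURRENT.
        _queue = dispatch_queue_create("demo.safecache", DISPATCH_QUEUE_CONCURRENT);
    }
    return self;
}

- (id)objectForKey:(NSString *)key {
    __block id result = nil;
    // Reads are ordinary blocks, so several reads may run at the same time.
    dispatch_sync(_queue, ^{
        result = self->_storage[key];
    });
    return result;
}

- (void)setObject:(id)object forKey:(NSString *)key {
    // The barrier block runs alone: after earlier blocks finish and
    // before any later block starts.
    dispatch_barrier_async(_queue, ^{
        self->_storage[key] = object;
    });
}
@end
```

A read issued after a write on the same SafeCache instance sees the new value, because the read block is queued behind the barrier block.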

GCD delay execution method: dispatch_after

To perform a task after a specified delay (for example, 3 seconds), use GCD's dispatch_after function.

Note: dispatch_after does not start executing the task at the specified time; it appends the task to the specified queue (the main queue in the code that follows) at that time. Strictly speaking, the delay is therefore not perfectly accurate, but dispatch_after works well when an approximate delay is enough.

/**

* Delay execution method dispatch_after

*/

- (void)after {

NSLog(@"currentThread---%@",[NSThread currentThread]); // Print current thread

NSLog(@"asyncMain---begin");

dispatch_after(dispatch_time(DISPATCH_TIME_NOW, (int64_t)(2.0 * NSEC_PER_SEC)), dispatch_get_main_queue(), ^{

// After 2.0 seconds, asynchronously append the task code to the main queue and start execution

NSLog(@"after---%@",[NSThread currentThread]); // Print current thread

});

}

GCD one-time code (only executed once): dispatch_once

When creating a singleton, or when some code must run only once during the lifetime of the program, use GCD's dispatch_once function. dispatch_once guarantees that the block is executed exactly once while the program runs, and it remains thread safe even when called from multiple threads.

/**

* One time code (execute only once) dispatch_once

*/

- (void)once {

static dispatch_once_t onceToken;

dispatch_once(&onceToken, ^{

// Code executed only once (thread safe by default)

});

}
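For the singleton case mentioned above, a common shape looks like the sketch below. The Settings class and the sharedSettings method name are hypothetical.

```objc
#import <Foundation/Foundation.h>

// Hypothetical singleton class, for illustration only.
@interface Settings : NSObject
+ (instancetype)sharedSettings;
@end

@implementation Settings

+ (instancetype)sharedSettings {
    static Settings *instance = nil;
    static dispatch_once_t onceToken;
    // The block runs exactly once, even if several threads call
    // sharedSettings at the same time.
    dispatch_once(&onceToken, ^{
        instance = [[Settings alloc] init];
    });
    return instance;
}

@end
```

Both the token and the instance are static, so they survive across calls; every call after the first skips the block and returns the same object.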

GCD fast iterative method: dispatch_apply

dispatch_apply appends the specified task (block) to the specified queue the specified number of times, and waits for all iterations to finish.

If you use dispatch_apply with a serial queue, the iterations run one after another in order, just like a for loop, which defeats the purpose of fast iteration.

With a concurrent queue, the iterations can run in parallel. For example, when traversing the six numbers 0 to 5, a for loop processes one element at a time, whereas dispatch_apply can process several numbers simultaneously on multiple threads.

In either case, whether the queue is serial or concurrent, dispatch_apply waits for all iterations to finish before returning. In this respect it resembles a synchronous operation, or dispatch_group_wait on a queue group.

Because the iterations run concurrently on the concurrent queue, the execution time of each one is uncertain, and so is the order in which they finish. But apply---end is always printed last, because dispatch_apply waits for all iterations before returning. Functionally it is equivalent to a for loop, but it uses more threads and more CPU resources, so for small or cheap iterations an ordinary for loop is usually still the better choice.

/**

* Fast iterative method dispatch_apply

*/

- (void)apply {

dispatch_queue_t queue = dispatch_get_global_queue(DISPATCH_QUEUE_PRIORITY_DEFAULT, 0);

NSLog(@"apply---begin");

dispatch_apply(6, queue, ^(size_t index) {

NSLog(@"%zu---%@",index, [NSThread currentThread]);

});

NSLog(@"apply---end");

}

GCD queue group: dispatch_group

Sometimes we need to execute two time-consuming tasks asynchronously and then return to the main thread once both have finished. GCD's queue group handles this.

**For local time-consuming operations, tasks can be added directly to the group with dispatch_group_async.** For network operations, using dispatch_group_async alone may not synchronize correctly, because the block submitted to the group returns as soon as the request is started, before the response arrives.

Asynchronous network requests that must complete one after another need a GCD semaphore to achieve synchronization.

For local time-consuming operations the two approaches have the same effect, but for asynchronous network operations you need the dispatch_group_enter / dispatch_group_leave pair; otherwise the group may be considered finished too early. Reference article: https://www.jianshu.com/p/3622f8b251e1
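A minimal sketch of the dispatch_group_enter / dispatch_group_leave pairing follows. To stay self-contained it does not make real network calls: fakeRequest is a hypothetical stand-in that returns immediately and calls its completion handler about one second later, which is the behavior that matters here.

```objc
#import <Foundation/Foundation.h>

// Hypothetical stand-in for an asynchronous network call: it returns at once
// and invokes the completion handler later on a background queue.
static void fakeRequest(NSString *name, void (^completion)(NSString *response)) {
    dispatch_after(dispatch_time(DISPATCH_TIME_NOW, (int64_t)(1 * NSEC_PER_SEC)),
                   dispatch_get_global_queue(DISPATCH_QUEUE_PRIORITY_DEFAULT, 0), ^{
        completion([name stringByAppendingString:@" done"]);
    });
}

int main(void) {
    @autoreleasepool {
        dispatch_group_t group = dispatch_group_create();

        for (NSString *name in @[@"request A", @"request B"]) {
            dispatch_group_enter(group);          // one enter per pending request
            fakeRequest(name, ^(NSString *response) {
                NSLog(@"%@", response);
                dispatch_group_leave(group);      // matching leave when the callback fires
            });
        }

        // This command-line sketch blocks here. In an app, dispatch_group_notify
        // with the main queue would be used so the main thread is not blocked.
        dispatch_group_wait(group, DISPATCH_TIME_FOREVER);
        NSLog(@"all requests finished");
    }
    return 0;
}
```

Every dispatch_group_enter must be balanced by exactly one dispatch_group_leave, including on error paths of a real request; otherwise the group never completes.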

dispatch_group_notify

Monitors the completion of the tasks in the group. When all of them have finished, the notify block is submitted to the specified queue and executed. It does not block the current thread (usually the main thread).

/**

* Queue group dispatch_group_notify

*/

- (void)groupNotify {

NSLog(@"currentThread---%@",[NSThread currentThread]); // Print current thread

NSLog(@"group---begin");

dispatch_group_t group = dispatch_group_create();

dispatch_group_async(group, dispatch_get_global_queue(DISPATCH_QUEUE_PRIORITY_DEFAULT, 0), ^{

// Add task 1

for (int i = 0; i < 2; ++i) {

[NSThread sleepForTimeInterval:2]; // Simulate time-consuming operations

NSLog(@"1---%@",[NSThread currentThread]); // Print current thread

}

});

dispatch_group_async(group, dispatch_get_global_queue(DISPATCH_QUEUE_PRIORITY_DEFAULT, 0), ^{

// Additional task 2

for (int i = 0; i < 2; ++i) {

[NSThread sleepForTimeInterval:2]; // Simulate time-consuming operations

NSLog(@"2---%@",[NSThread currentThread]); // Print current thread

}

});

dispatch_group_notify(group, dispatch_get_main_queue(), ^{

// After the previous asynchronous tasks 1 and 2 are executed, return to the main thread to execute the next task

for (int i = 0; i < 2; ++i) {

[NSThread sleepForTimeInterval:2]; // Simulate time-consuming operations

NSLog(@"3---%@",[NSThread currentThread]); // Print current thread

}

NSLog(@"group---end");

});

}

dispatch_group_wait

Blocks the current thread (usually the main thread) until all tasks in the specified group have finished or the timeout expires, whichever comes first, and then continues. The return value is 0 if the tasks finished in time and nonzero if the wait timed out.

/**

* Queue group dispatch_group_wait

*/

- (void)groupWait {

NSLog(@"currentThread---%@",[NSThread currentThread]); // Print current thread

NSLog(@"group---begin");

dispatch_group_t group = dispatch_group_create();

dispatch_group_async(group, dispatch_get_global_queue(DISPATCH_QUEUE_PRIORITY_DEFAULT, 0), ^{

// Add task 1

for (int i = 0; i < 2; ++i) {

[NSThread sleepForTimeInterval:2]; // Simulate time-consuming operations

NSLog(@"1---%@",[NSThread currentThread]); // Print current thread

}

});

dispatch_group_async(group, dispatch_get_global_queue(DISPATCH_QUEUE_PRIORITY_DEFAULT, 0), ^{

// Additional task 2

for (int i = 0; i < 2; ++i) {

[NSThread sleepForTimeInterval:5]; // Simulate time-consuming operations

NSLog(@"2---%@",[NSThread currentThread]); // Print current thread

}

});

// Block the current thread until the tasks above finish or 2 seconds elapse

long result = dispatch_group_wait(group, dispatch_time(DISPATCH_TIME_NOW, (int64_t)(2 * NSEC_PER_SEC)));

NSLog(@"group---end");

if (result == 0) {

NSLog(@"All tasks in the group have finished");

} else {

NSLog(@"Timed out: the group still has unfinished tasks");

}

}

GCD semaphore: dispatch_semaphore

In actual development, Dispatch Semaphore is mainly used to:

Keep threads synchronized by turning an asynchronous task into a synchronous one, which solves problems such as crashes caused by asynchronous tasks racing on data.

Ensure thread safety by acting as a lock.

Before using a semaphore, work out which thread needs to wait (block) and which thread should continue executing.

The semaphore in GCD is the Dispatch Semaphore, a signal that holds a count.

Dispatch Semaphore provides three functions.

dispatch_semaphore_create: creates a semaphore and initializes its count (the number of threads allowed to proceed at the same time, usually 1 when used as a lock).

dispatch_semaphore_wait: decrements the count by 1. If the resulting value is less than 0, the calling thread blocks until a signal arrives or the timeout expires; otherwise it continues normally. Place it before the code to be protected.

dispatch_semaphore_signal: increments the count by 1 and wakes a waiting thread if there is one. Call it after the protected code so that other threads can continue.
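The initial count controls how many threads may be inside the guarded region at once. As a sketch of that idea, the following limits six tasks to two at a time; the task count, the one-second sleep, and the variable names are arbitrary choices for illustration.

```objc
#import <Foundation/Foundation.h>

int main(void) {
    @autoreleasepool {
        dispatch_queue_t queue = dispatch_get_global_queue(DISPATCH_QUEUE_PRIORITY_DEFAULT, 0);
        dispatch_group_t group = dispatch_group_create();
        // Initial count 2: at most two blocks are inside the guarded region at once.
        dispatch_semaphore_t slots = dispatch_semaphore_create(2);

        for (int i = 0; i < 6; i++) {
            dispatch_group_async(group, queue, ^{
                dispatch_semaphore_wait(slots, DISPATCH_TIME_FOREVER); // take a slot
                NSLog(@"task %d start", i);
                [NSThread sleepForTimeInterval:1];                     // simulate work
                NSLog(@"task %d end", i);
                dispatch_semaphore_signal(slots);                      // give the slot back
            });
        }

        // Keep the process alive until every task has finished.
        dispatch_group_wait(group, DISPATCH_TIME_FOREVER);
    }
    return 0;
}
```

With an initial count of 1 the same code behaves like a lock, which is the usage shown in the thread-safety section.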

Dispatch Semaphore thread synchronization

In development we encounter requirements like this: a time-consuming task runs asynchronously, and its result is a precondition for other asynchronous work, for example when asynchronous network requests depend on each other's data. In other words, we need to turn an asynchronous task into a synchronous one. An example is the tasksForKeyPath: method in AFURLSessionManager.m of AFNetworking: it uses a semaphore to wait for the result of the asynchronous call, obtains the tasks, and then returns them.

- (NSArray *)tasksForKeyPath:(NSString *)keyPath {

__block NSArray *tasks = nil;

dispatch_semaphore_t semaphore = dispatch_semaphore_create(0);

[self.session getTasksWithCompletionHandler:^(NSArray *dataTasks, NSArray *uploadTasks, NSArray *downloadTasks) {

if ([keyPath isEqualToString:NSStringFromSelector(@selector(dataTasks))]) {

tasks = dataTasks;

} else if ([keyPath isEqualToString:NSStringFromSelector(@selector(uploadTasks))]) {

tasks = uploadTasks;

} else if ([keyPath isEqualToString:NSStringFromSelector(@selector(downloadTasks))]) {

tasks = downloadTasks;

} else if ([keyPath isEqualToString:NSStringFromSelector(@selector(tasks))]) {

tasks = [@[dataTasks, uploadTasks, downloadTasks] valueForKeyPath:@"@unionOfArrays.self"];

}

dispatch_semaphore_signal(semaphore);

}];

dispatch_semaphore_wait(semaphore, DISPATCH_TIME_FOREVER);

return tasks;

}

Analysis: consider an example in which an asynchronous task assigns number = 100 and then calls dispatch_semaphore_signal, while the calling thread waits on the semaphore and then prints semaphore---end along with number. The line semaphore---end is printed only after number = 100 has executed, and the printed number is 100.

This is because asynchronous execution does not wait. After task 1 is added to the queue asynchronously, the calling thread goes straight on to dispatch_semaphore_wait. At this point the semaphore count is 0, so the current thread enters the waiting state. Then asynchronous task 1 begins to execute. When task 1 reaches dispatch_semaphore_signal, the count is incremented, the pending dispatch_semaphore_wait completes, and the blocked thread (the main thread) resumes execution. Finally it prints semaphore---end,number = 100. In this way thread synchronization is achieved, and an asynchronous task is turned into a synchronous one.
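The analysis describes an example that is not shown in this text. A minimal reconstruction of what such an example could look like is given below; the two-second sleep and the exact log strings are assumptions made for this sketch.

```objc
#import <Foundation/Foundation.h>

int main(void) {
    @autoreleasepool {
        NSLog(@"semaphore---begin");

        dispatch_queue_t queue = dispatch_get_global_queue(DISPATCH_QUEUE_PRIORITY_DEFAULT, 0);
        // Count starts at 0, so the first wait blocks until a signal arrives.
        dispatch_semaphore_t semaphore = dispatch_semaphore_create(0);
        __block int number = 0;

        dispatch_async(queue, ^{
            [NSThread sleepForTimeInterval:2];    // simulate a time-consuming task
            number = 100;
            dispatch_semaphore_signal(semaphore); // wake the waiting thread
        });

        // Blocks the calling thread until the asynchronous task signals.
        dispatch_semaphore_wait(semaphore, DISPATCH_TIME_FOREVER);
        NSLog(@"semaphore---end,number = %d", number);
    }
    return 0;
}
```

Blocking like this is acceptable on a background thread; on the main thread of an app it would freeze the UI for the duration of the task.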

Dispatch Semaphore thread safety and thread synchronization (locking threads)

Thread safety: suppose several threads in your process run the same code and may access the same data at the same time. If every run produces the same result as a single-threaded run, and all other variables hold their expected values, the code is thread safe.

If every thread only reads a global or static variable and none writes to it, that variable is generally thread safe. If multiple threads write to it (change its value) at the same time, thread synchronization is generally required; otherwise thread safety may be compromised.

Thread synchronization: think of thread A and thread B cooperating. When A reaches a point where it depends on a result from B, it stops and signals B to run; B does its work and hands the result to A; A then continues.

Thread safety (locking with semaphore)

Ensure that only one thread accesses shared data at a time.

UIKit classes should only be used on the main thread, so no locking is needed for them, and their properties are declared nonatomic.

1. @synchronized is a mutex (a recursive one). While one thread executes the locked code, other threads that reach the same lock go to sleep and must be woken before they can continue. Its performance is relatively low.

-(void)test3{

dispatch_async(dispatch_get_global_queue(0, 0), ^{

@synchronized (self) {//Locking ensures that the execution in the block is completed before other operations are executed

NSLog(@"1 start");

sleep(2);

NSLog(@"1 end");

}

});

dispatch_async(dispatch_get_global_queue(0, 0), ^{

@synchronized (self) {

NSLog(@"2 start");

sleep(2);

NSLog(@"2 end");

}

});

}

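2. A semaphore with an initial count of 1 can be used as a lock. It is not a spin lock: while one thread executes the locked code, other threads block in dispatch_semaphore_wait (they sleep rather than busy-wait), and one of them is woken as soon as the lock is released with dispatch_semaphore_signal. Its overhead is generally low.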

-(void)test3{

dispatch_semaphore_t semalook = dispatch_semaphore_create(1);

dispatch_async(dispatch_get_global_queue(0, 0), ^{

dispatch_semaphore_wait(semalook, DISPATCH_TIME_FOREVER);

NSLog(@"1 start");

sleep(2);

NSLog(@"1 end");

dispatch_semaphore_signal(semalook);

});

dispatch_async(dispatch_get_global_queue(0, 0), ^{

dispatch_semaphore_wait(semalook, DISPATCH_TIME_FOREVER);

NSLog(@"2 start");

sleep(2);

NSLog(@"2 end");

dispatch_semaphore_signal(semalook);

});

}

3. NSLock

-(void)test3{

NSLock *lock = [[NSLock alloc]init];

dispatch_async(dispatch_get_global_queue(0, 0), ^{

[lock lock];

NSLog(@"1 start");

sleep(2);

NSLog(@"1 end");

[lock unlock];

});

dispatch_async(dispatch_get_global_queue(0, 0), ^{

[lock lock];

NSLog(@"2 start");

sleep(2);

NSLog(@"2 end");

[lock unlock];

});

}

dispatch_set_target_queue

This function has two functions:

1. Change the priority of the queue.

2. Prevent concurrent execution of multiple serial queues.

Change the priority of the queue

Serial and concurrent queues created with dispatch_queue_create have the same priority as the default-priority global dispatch queue.

To change the priority of a queue, use the dispatch_set_target_queue function.

For example, create a Serial Dispatch Queue that runs at background priority:

//Requirement: generate a background serial queue

- (void)changePriority

{

dispatch_queue_t queue = dispatch_queue_create("queue", NULL);

dispatch_queue_t bgQueue = dispatch_get_global_queue(DISPATCH_QUEUE_PRIORITY_BACKGROUND, 0);

//The first parameter: the queue whose priority needs to be changed;

//Second parameter: target queue

dispatch_set_target_queue(queue, bgQueue);

}

Prevent concurrent execution of multiple serial queues

If you add tasks to five separate serial queues, the tasks can execute concurrently, because each serial queue can be serviced by its own thread.

If you use dispatch_set_target_queue to give multiple serial queues the same serial target queue, their tasks execute one at a time on the target queue and no longer run in parallel.

Once the serial queues share a target, their tasks execute in the order in which they reach the target queue.

+(void)testTargetQueue {

dispatch_queue_t targetQueue = dispatch_queue_create("test.target.queue", DISPATCH_QUEUE_SERIAL);

dispatch_queue_t queue1 = dispatch_queue_create("test.1", DISPATCH_QUEUE_SERIAL);

dispatch_queue_t queue2 = dispatch_queue_create("test.2", DISPATCH_QUEUE_SERIAL);

dispatch_queue_t queue3 = dispatch_queue_create("test.3", DISPATCH_QUEUE_SERIAL);

dispatch_set_target_queue(queue1, targetQueue);

dispatch_set_target_queue(queue2, targetQueue);

dispatch_set_target_queue(queue3, targetQueue);

dispatch_async(queue1, ^{

NSLog(@"1 in");

[NSThread sleepForTimeInterval:3.f];

NSLog(@"1 out");

});

dispatch_async(queue2, ^{

NSLog(@"2 in");

[NSThread sleepForTimeInterval:2.f];

NSLog(@"2 out");

});

dispatch_async(queue3, ^{

NSLog(@"3 in");

[NSThread sleepForTimeInterval:1.f];

NSLog(@"3 out");

});

}

dispatch_suspend/dispatch_resume

dispatch_suspend does not interrupt a block that is already running; it prevents blocks that have not yet started from executing until the queue is resumed. Every call to dispatch_suspend must be balanced by a call to dispatch_resume.

//Suspend the specified queue

dispatch_suspend(queue);

//Restore the specified queue

dispatch_resume(queue);

dispatch_queue_t queue = dispatch_queue_create("com.test.gcd", DISPATCH_QUEUE_SERIAL);

//Submit the first block and print after 5 seconds.

dispatch_async(queue, ^{

sleep(5);

NSLog(@"After 5 seconds...");

});

//Submitting the second block also delays printing for 5 seconds

dispatch_async(queue, ^{

sleep(5);

NSLog(@"After 5 seconds again...");

});

//One second delay

NSLog(@"sleep 1 second...");

sleep(1);

//Suspend queue

NSLog(@"suspend...");

dispatch_suspend(queue);

//Delay 10 seconds

NSLog(@"sleep 10 second...");

sleep(10);

//Recovery queue

NSLog(@"resume...");

dispatch_resume(queue);