Many people still associate screen sharing with presenting slides from a PC, but it has long since spread to other scenarios. A familiar example is live game streaming: the streamer shows the game to the audience through screen sharing, which places high demands on latency and smoothness.

Many mobile game streamers relay the phone's screen through a PC in order to broadcast it. In fact, by calling the RongCloud screen sharing SDK, you can share the screen in real time directly from the mobile device.

This article focuses on screen sharing on iOS: how the ReplayKit framework evolved, what each release added, and the code and approach for implementing the corresponding features with the RongCloud screen sharing SDK.

01 ReplayKit development history

ReplayKit, the iOS screen recording framework, first appeared in iOS 9.

iOS 9

Apple introduced the ReplayKit framework at WWDC15. Initially it was used mainly to record video and save it to the photo library.

The two iOS 9 APIs for starting and stopping recording are quite limited:

Only the MP4 file generated by the system is available, and not directly: it must first be saved to the photo library and then read back from there;

The raw data, i.e. PCM audio and YUV video, cannot be obtained;

Developers get few permissions: only the current app's screen can be recorded, other apps cannot, and recording stops when the app goes to the background.

What the developer can control:

When recording stops, a video preview window can be presented in which the user saves, discards, or shares the video file;

After recording, the video can be viewed, edited, or shared in a specified way.

The API for starting recording is shown below.

/*!

Deprecated. Use startRecordingWithHandler: instead.

@abstract Starts app recording with a completion handler. Note that before recording actually starts, the user may be prompted with UI to confirm recording.

@param microphoneEnabled Determines whether the microphone input should be included in the recorded movie audio.

@discussion handler Called after user interactions are complete. Will be passed an optional NSError in the RPRecordingErrorDomain domain if there was an issue starting the recording.

*/

[[RPScreenRecorder sharedRecorder] startRecordingWithMicrophoneEnabled:YES handler:^(NSError * _Nullable error) {

if (error) {

//TODO.....

}

}];

When recording is started, the system shows a prompt, and recording begins only after the user confirms.

The API for stopping recording is shown below.

/*! @abstract Stops app recording with a completion handler.

@discussion handler Called when the movie is ready. Will return an instance of RPPreviewViewController on success which should be presented using [UIViewController presentViewController:animated:completion:]. Will be passed an optional NSError in the RPRecordingErrorDomain domain if there was an issue stopping the recording.

*/

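[[RPScreenRecorder sharedRecorder] stopRecordingWithHandler:^(RPPreviewViewController * _Nullable previewViewController, NSError * _Nullable error) {

// The preview controller is nil when stopping failed, so check before presenting.

if (error || !previewViewController) {

NSLog(@"stopRecording: %@", error.localizedDescription);

return;

}

[self presentViewController:previewViewController animated:YES completion:^{

//TODO.....

}];

}];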

iOS 10

At WWDC16, Apple upgraded ReplayKit, opened access to the raw data, and added two extension targets. In detail:

Added the Broadcast UI and Broadcast Upload extension targets;

Expanded developer permissions: users can sign in to a service, and the developer can set up a live broadcast and operate on the raw data;

The screen can only be recorded through the extension. Besides your own app, other apps can be recorded as well;

Only app screens can be recorded, not the iOS system screen.

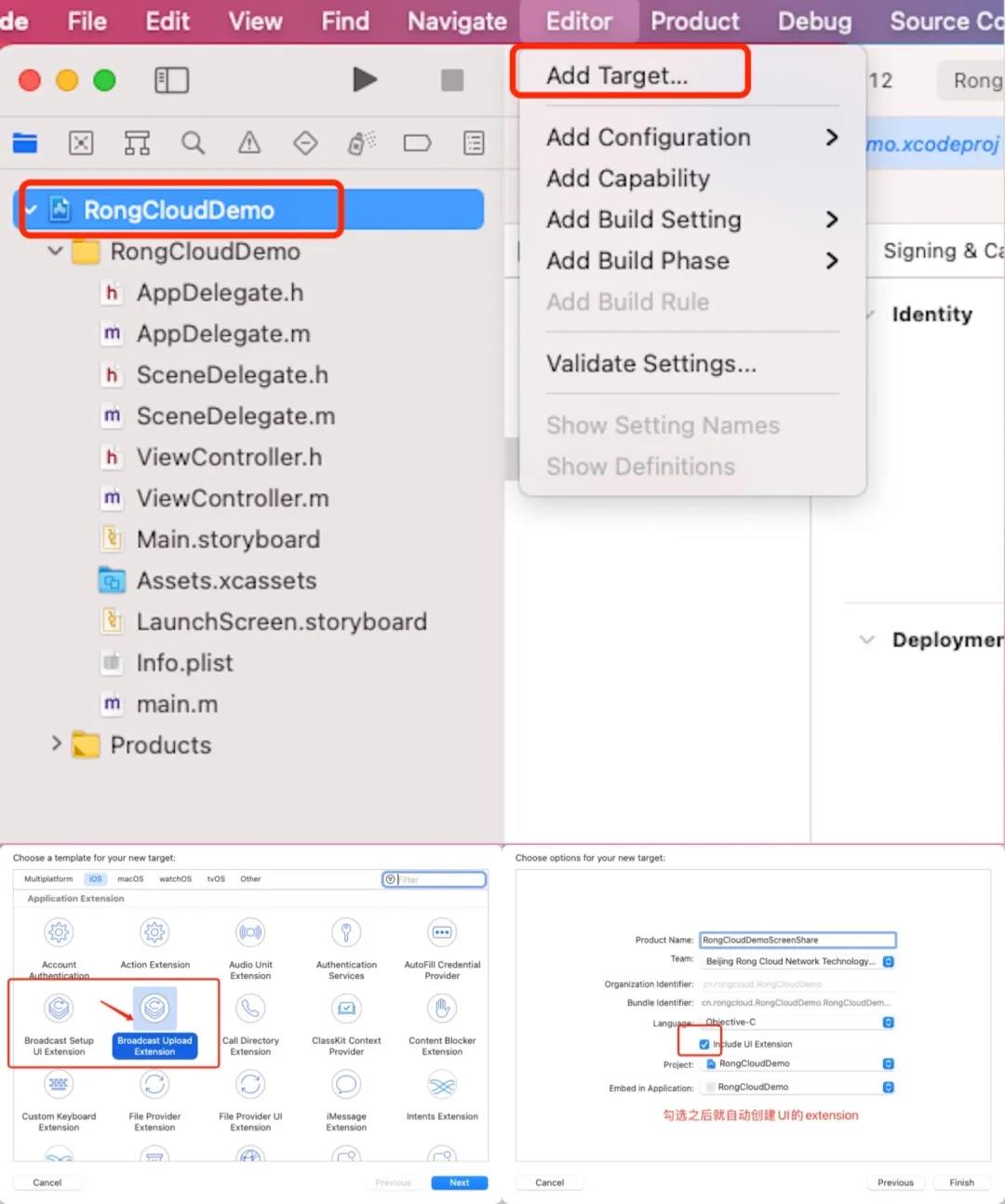

The figure shows how to create an extension.

UI Extension

/*

These two APIs can be thought of as callbacks triggered by the setup pop-up;

*/

- (void)userDidFinishSetup {

//Trigger RPBroadcastActivityViewControllerDelegate of Host App

}

- (void)userDidCancelSetup {

//Trigger RPBroadcastActivityViewControllerDelegate of Host App

}

Upload Extension

- (void)broadcastStartedWithSetupInfo:(NSDictionary<NSString *,NSObject *> *)setupInfo {

// User has requested to start the broadcast. Setup info from the UI extension can be supplied but optional.

// Mainly used for initialization

}

- (void)broadcastPaused {

// User has requested to pause the broadcast. Samples will stop being delivered.

//Receive system pause signal

}

- (void)broadcastResumed {

// User has requested to resume the broadcast. Samples delivery will resume.

// The system signals that the broadcast has resumed

}

- (void)broadcastFinished {

// User has requested to finish the broadcast.

//Receiving system completion signal

}

// The highlight of this update: the raw data is now available, delivered in three types: video frames, in-app audio, and microphone audio

- (void)processSampleBuffer:(CMSampleBufferRef)sampleBuffer withType:(RPSampleBufferType)sampleBufferType {

switch (sampleBufferType) {

case RPSampleBufferTypeVideo:

// Handle video sample buffer

break;

case RPSampleBufferTypeAudioApp:

// Handle audio sample buffer for app audio

break;

case RPSampleBufferTypeAudioMic:

// Handle audio sample buffer for mic audio

break;

default:

break;

}

}

Host APP

RPBroadcastControllerDelegate

//start

if (![RPScreenRecorder sharedRecorder].isRecording) {

[RPBroadcastActivityViewController loadBroadcastActivityViewControllerWithHandler:^(RPBroadcastActivityViewController * _Nullable broadcastActivityViewController, NSError * _Nullable error) {

if (error || !broadcastActivityViewController) {

NSLog(@"RPBroadcast err %@", [error localizedDescription]);

return; // nothing to present when loading failed

}

broadcastActivityViewController.delegate = self; /*RPBroadcastActivityViewControllerDelegate*/

[self presentViewController:broadcastActivityViewController animated:YES completion:nil];

}];

}

#pragma mark- RPBroadcastActivityViewControllerDelegate

- (void)broadcastActivityViewController:(RPBroadcastActivityViewController *)broadcastActivityViewController didFinishWithBroadcastController:(RPBroadcastController *)broadcastController error:(NSError *)error {

if (error) {

//TODO:

NSLog(@"broadcastActivityViewController:%@",error.localizedDescription);

return;

}

broadcastController.delegate = self; /*RPBroadcastControllerDelegate*/

[broadcastController startBroadcastWithHandler:^(NSError * _Nullable error) {

if (!error) {

NSLog(@"success");

} else {

NSLog(@"startBroadcast:%@",error.localizedDescription);

}

}];

}

#pragma mark- RPBroadcastControllerDelegate

- (void)broadcastController:(RPBroadcastController *)broadcastController didFinishWithError:(nullable NSError *)error{

NSLog(@"didFinishWithError: %@", error);

}

- (void)broadcastController:(RPBroadcastController *)broadcastController didUpdateServiceInfo:(NSDictionary <NSString *, NSObject <NSCoding> *> *)serviceInfo {

NSLog(@"didUpdateServiceInfo: %@", serviceInfo);

}

iOS 11

At WWDC17, Apple released ReplayKit 2, which adds capture of content outside the app and lets the host app obtain the captured data directly. Specifically:

The recorded app screen data can be processed directly in the host app;

The iOS system screen can be recorded, but recording has to be started manually from Control Center.

Start app screen recording

[[RPScreenRecorder sharedRecorder] startCaptureWithHandler:^(CMSampleBufferRef _Nonnull sampleBuffer, RPSampleBufferType bufferType, NSError * _Nullable error) {

if (error || bufferType != RPSampleBufferTypeVideo) return; // forward video frames only

[self.videoOutputStream write:sampleBuffer error:nil];

} completionHandler:^(NSError * _Nullable error) {

if (error) {

NSLog(@"startCaptureWithHandler:%@",error.localizedDescription);

}

}];

Stop app screen recording

[[RPScreenRecorder sharedRecorder] stopCaptureWithHandler:^(NSError * _Nullable error) {

[self.assetWriter finishWritingWithCompletionHandler:^{

//TODO

}];

}];

iOS 12

At WWDC18, Apple added RPSystemBroadcastPickerView to ReplayKit. It lets an app start system-wide recording from within the app, which greatly simplifies the screen recording flow.

if (@available(iOS 12.0, *)) {

self.systemBroadcastPickerView = [[RPSystemBroadcastPickerView alloc] initWithFrame:CGRectMake(0, 0, 50, 80)];

self.systemBroadcastPickerView.preferredExtension = ScreenShareBuildID; // bundle identifier of the Broadcast Upload Extension

self.systemBroadcastPickerView.showsMicrophoneButton = NO;

self.navigationItem.rightBarButtonItem = [[UIBarButtonItem alloc] initWithCustomView:self.systemBroadcastPickerView];

} else {

// Fallback on earlier versions

}

02 RongCloud RongRTCReplayKitExt

To reduce the integration burden on developers, RongCloud provides the RongRTCReplayKitExt library specifically for screen sharing.

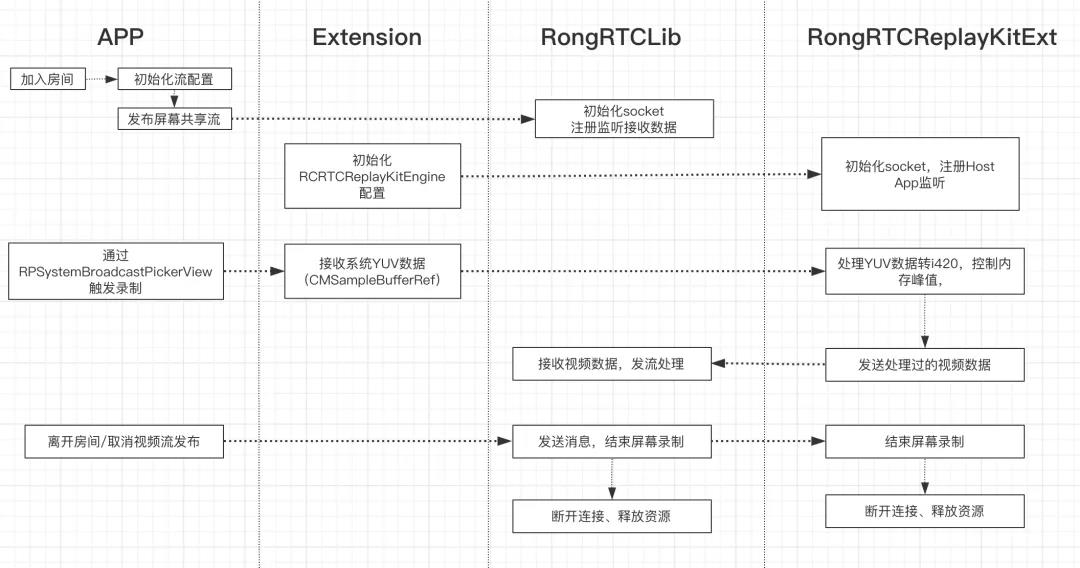

Design ideas

Upload Extension

SampleHandler receives the data and initializes RCRTCReplayKitEngine;

RCRTCReplayKitEngine sets up socket communication, converts the YUV data to I420, and keeps peak memory under control.
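
The YUV-to-I420 step can be illustrated in plain C, which compiles unchanged inside an Objective-C extension target. This is a minimal sketch and not the actual RongRTCReplayKitExt implementation: it assumes the frame arrives as NV12 (a Y plane plus one plane of interleaved Cb/Cr pairs) with even width and height, and the function name `nv12_to_i420` is hypothetical.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>
#include <string.h>

/* Convert one NV12 frame to I420 (hypothetical helper, for illustration).
 * NV12: Y plane, then one plane of interleaved Cb/Cr pairs.
 * I420: Y plane, then a Cb plane, then a Cr plane, tightly packed.
 * Strides are in bytes and may be larger than the visible row width.
 * dst must hold width * height * 3 / 2 bytes. */
void nv12_to_i420(const uint8_t *src_y, size_t src_y_stride,
                  const uint8_t *src_uv, size_t src_uv_stride,
                  uint8_t *dst, int width, int height)
{
    assert(width > 0 && height > 0 && width % 2 == 0 && height % 2 == 0);

    size_t w = (size_t)width, h = (size_t)height;
    size_t cw = w / 2, ch = h / 2;      /* chroma plane dimensions */
    uint8_t *dst_y = dst;
    uint8_t *dst_u = dst_y + w * h;
    uint8_t *dst_v = dst_u + cw * ch;

    /* Luma: copy row by row so any source row padding is dropped. */
    for (size_t row = 0; row < h; row++) {
        memcpy(dst_y + row * w, src_y + row * src_y_stride, w);
    }

    /* Chroma: split the interleaved Cb/Cr pairs into two planes. */
    for (size_t row = 0; row < ch; row++) {
        const uint8_t *uv = src_uv + row * src_uv_stride;
        for (size_t col = 0; col < cw; col++) {
            dst_u[row * cw + col] = uv[2 * col];
            dst_v[row * cw + col] = uv[2 * col + 1];
        }
    }
}
```

In an extension, the plane pointers and strides would typically come from the locked CVPixelBuffer via `CVPixelBufferGetBaseAddressOfPlane` and `CVPixelBufferGetBytesPerRowOfPlane`.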

App

The original publishing flow:

Connect to IM, join the room, publish the resource (RCRTCScreenShareOutputStream);

The socket is initialized internally, and the protocol for receiving the processed data is implemented there so that the stream can be pushed.
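
The article does not describe the wire format used between the extension and the app, so the following is only a sketch of one common way to delimit frames on a stream socket: a fixed-size header with a length prefix. The 16-byte layout, the magic value, and all names here are assumptions made for illustration, not part of the RongCloud SDK.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>

#define FRAME_MAGIC 0x52435346u   /* arbitrary marker chosen for this sketch */
#define FRAME_HEADER_SIZE 16

/* Hypothetical per-frame header sent before each I420 payload. */
typedef struct {
    uint32_t width;
    uint32_t height;
    uint32_t payload_len;   /* number of payload bytes that follow */
} frame_header;

static void put_u32_be(uint8_t *p, uint32_t v)
{
    p[0] = (uint8_t)(v >> 24);
    p[1] = (uint8_t)(v >> 16);
    p[2] = (uint8_t)(v >> 8);
    p[3] = (uint8_t)v;
}

static uint32_t get_u32_be(const uint8_t *p)
{
    return ((uint32_t)p[0] << 24) | ((uint32_t)p[1] << 16) |
           ((uint32_t)p[2] << 8) | (uint32_t)p[3];
}

/* Serialize the header in big-endian byte order. */
void frame_header_encode(const frame_header *h, uint8_t out[FRAME_HEADER_SIZE])
{
    assert(h != NULL && out != NULL);
    put_u32_be(out, FRAME_MAGIC);
    put_u32_be(out + 4, h->width);
    put_u32_be(out + 8, h->height);
    put_u32_be(out + 12, h->payload_len);
}

/* Returns 1 on success, 0 if more bytes are needed, -1 on a bad magic value. */
int frame_header_decode(const uint8_t *in, size_t in_len, frame_header *h)
{
    assert(in != NULL && h != NULL);
    if (in_len < FRAME_HEADER_SIZE) {
        return 0;
    }
    if (get_u32_be(in) != FRAME_MAGIC) {
        return -1;
    }
    h->width = get_u32_be(in + 4);
    h->height = get_u32_be(in + 8);
    h->payload_len = get_u32_be(in + 12);
    return 1;
}
```

The receiver reads until a full header decodes, then reads exactly `payload_len` bytes before looking for the next header, so partial reads on the socket never split a frame.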

Code example

Upload extension

#import "SampleHandler.h"

#import <RongRTCReplayKitExt/RongRTCReplayKitExt.h>

static NSString *const ScreenShareGroupID = @"group.cn.rongcloud.rtcquickdemo.screenshare";

@interface SampleHandler ()<RongRTCReplayKitExtDelegate>

@end

@implementation SampleHandler

- (void)broadcastStartedWithSetupInfo:(NSDictionary<NSString *, NSObject *> *)setupInfo {

// User has requested to start the broadcast. Setup info from the UI extension can be supplied but optional.

[[RCRTCReplayKitEngine sharedInstance] setupWithAppGroup:ScreenShareGroupID delegate:self];

}

- (void)broadcastPaused {

// User has requested to pause the broadcast. Samples will stop being delivered.

}

- (void)broadcastResumed {

// User has requested to resume the broadcast. Samples delivery will resume.

}

- (void)broadcastFinished {

[[RCRTCReplayKitEngine sharedInstance] broadcastFinished];

}

- (void)processSampleBuffer:(CMSampleBufferRef)sampleBuffer withType:(RPSampleBufferType)sampleBufferType API_AVAILABLE(ios(10.0)) {

switch (sampleBufferType) {

case RPSampleBufferTypeVideo:

[[RCRTCReplayKitEngine sharedInstance] sendSampleBuffer:sampleBuffer withType:RPSampleBufferTypeVideo];

break;

case RPSampleBufferTypeAudioApp:

// Handle audio sample buffer for app audio

break;

case RPSampleBufferTypeAudioMic:

// Handle audio sample buffer for mic audio

break;

default:

break;

}

}

#pragma mark - RongRTCReplayKitExtDelegate

-(void)broadcastFinished:(RCRTCReplayKitEngine *)broadcast reason:(RongRTCReplayKitExtReason)reason {

NSString *tip = @"";

switch (reason) {

case RongRTCReplayKitExtReasonRequestedByMain:

tip = @"Screen sharing has ended";

break;

case RongRTCReplayKitExtReasonDisconnected:

tip = @"The app disconnected";

break;

case RongRTCReplayKitExtReasonVersionMismatch:

tip = @"Integration error (SDK version mismatch)";

break;

}

NSError *error = [NSError errorWithDomain:NSStringFromClass(self.class)

code:0

userInfo:@{

NSLocalizedFailureReasonErrorKey:tip

}];

[self finishBroadcastWithError:error];

}

Host App

- (void)joinRoom {

RCRTCVideoStreamConfig *videoConfig = [[RCRTCVideoStreamConfig alloc] init];

videoConfig.videoSizePreset = RCRTCVideoSizePreset720x480;

videoConfig.videoFps = RCRTCVideoFPS30;

[[RCRTCEngine sharedInstance].defaultVideoStream setVideoConfig:videoConfig];

RCRTCRoomConfig *config = [[RCRTCRoomConfig alloc] init];

config.roomType = RCRTCRoomTypeNormal;

[self.engine enableSpeaker:YES];

__weak typeof(self) weakSelf = self;

[self.engine joinRoom:self.roomId

config:config

completion:^(RCRTCRoom *_Nullable room, RCRTCCode code) {

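__strong typeof(weakSelf) strongSelf = weakSelf;

if (!strongSelf) return;

// Use strongSelf inside the block; referring to self here would retain it and defeat weakSelf.

if (code == RCRTCCodeSuccess) {

strongSelf.room = room;

room.delegate = strongSelf;

[strongSelf publishScreenStream];

} else {

[UIAlertController alertWithString:@"Failed to join the room" inCurrentViewController:strongSelf];

}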

}];

}

- (void)publishScreenStream {

self.videoOutputStream = [[RCRTCScreenShareOutputStream alloc] initWithAppGroup:ScreenShareGroupID];

RCRTCVideoStreamConfig *videoConfig = self.videoOutputStream.videoConfig;

videoConfig.videoSizePreset = RCRTCVideoSizePreset1280x720;

videoConfig.videoFps = RCRTCVideoFPS24;

[self.videoOutputStream setVideoConfig:videoConfig];

[self.room.localUser publishStream:self.videoOutputStream

completion:^(BOOL isSuccess, RCRTCCode desc) {

if (isSuccess) {

NSLog(@"Publish custom stream succeeded");

} else {

NSLog(@"Failed to publish custom stream: %ld", (long) desc);

}

}];

}

03 Some precautions

First, a ReplayKit 2 extension may not use more than 50 MB of memory. Once the peak exceeds this limit, the system forcibly reclaims the process. When processing data in the extension, pay special attention to releasing memory.
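
One way to keep the peak bounded is to allocate frame buffers once and drop frames when none is free, instead of allocating per frame. The pool below is a minimal sketch under that assumption; the names and the slot count are hypothetical, and it is not thread-safe, so real sample-handler code would need a lock around acquire and release.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>
#include <stdlib.h>

#define POOL_SLOTS 3   /* assumed slot count for this sketch */

/* Fixed set of preallocated frame buffers (hypothetical helper). */
typedef struct {
    uint8_t *slots[POOL_SLOTS];
    int in_use[POOL_SLOTS];
    size_t slot_size;
} frame_pool;

/* Free every buffer; safe to call on a partially initialized pool. */
void frame_pool_destroy(frame_pool *p)
{
    assert(p != NULL);
    for (int i = 0; i < POOL_SLOTS; i++) {
        free(p->slots[i]);
        p->slots[i] = NULL;
        p->in_use[i] = 0;
    }
}

/* Allocate all buffers up front. Returns 0 on success, -1 on failure. */
int frame_pool_init(frame_pool *p, size_t slot_size)
{
    assert(p != NULL && slot_size > 0);
    p->slot_size = slot_size;
    for (int i = 0; i < POOL_SLOTS; i++) {
        p->slots[i] = NULL;
        p->in_use[i] = 0;
    }
    for (int i = 0; i < POOL_SLOTS; i++) {
        p->slots[i] = malloc(slot_size);
        if (p->slots[i] == NULL) {
            frame_pool_destroy(p);
            return -1;
        }
    }
    return 0;
}

/* Returns a free buffer, or NULL when all are busy (caller drops the frame). */
uint8_t *frame_pool_acquire(frame_pool *p)
{
    assert(p != NULL);
    for (int i = 0; i < POOL_SLOTS; i++) {
        if (!p->in_use[i]) {
            p->in_use[i] = 1;
            return p->slots[i];
        }
    }
    return NULL;
}

/* Hand a buffer back once the frame has been sent. */
void frame_pool_release(frame_pool *p, uint8_t *buf)
{
    assert(p != NULL);
    for (int i = 0; i < POOL_SLOTS; i++) {
        if (p->slots[i] == buf) {
            p->in_use[i] = 0;
            return;
        }
    }
}
```

For scale: one 1280x720 I420 frame is 1280 × 720 × 1.5 = 1,382,400 bytes, so three slots of that size take about 4 MB, and the pool's memory use never grows beyond what was allocated at startup.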

Second, inter-process communication. CFNotificationCenter (the Darwin notify center) cannot carry parameters; it can only send notifications. To pass parameters, you have to cache them in a local file. One pitfall to watch for: the data can be printed when running in debug mode, but the local file data cannot be read in release builds. For the specific implementation, refer to the description on GitHub.
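
The file-based handoff can be sketched in plain C as follows. This is an illustration under assumptions, not the SDK's implementation: the function names are hypothetical, and the path is whatever location both processes can reach (on iOS that would normally be inside the App Group container). Writing to a temporary file and renaming it keeps the reader from ever seeing a half-written payload.

```c
#include <assert.h>
#include <stddef.h>
#include <stdio.h>

/* Write the payload to "<path>.tmp", then rename it over path.
 * On POSIX systems rename() replaces the destination atomically.
 * Returns 0 on success, -1 on failure. */
int write_payload_atomic(const char *path, const void *data, size_t len)
{
    assert(path != NULL && data != NULL);

    char tmp[1024];
    int n = snprintf(tmp, sizeof tmp, "%s.tmp", path);
    if (n < 0 || (size_t)n >= sizeof tmp) {
        return -1;   /* path too long for the temporary name */
    }

    FILE *f = fopen(tmp, "wb");
    if (f == NULL) {
        return -1;
    }
    size_t written = fwrite(data, 1, len, f);
    int close_failed = fclose(f);
    if (written != len || close_failed != 0) {
        remove(tmp);
        return -1;
    }
    if (rename(tmp, path) != 0) {
        remove(tmp);
        return -1;
    }
    return 0;
}

/* Read up to cap bytes from path. Returns the byte count, or -1 on failure. */
long read_payload(const char *path, void *buf, size_t cap)
{
    assert(path != NULL && buf != NULL);

    FILE *f = fopen(path, "rb");
    if (f == NULL) {
        return -1;
    }
    size_t n = fread(buf, 1, cap, f);
    int failed = ferror(f);
    fclose(f);
    return failed ? -1 : (long)n;
}
```

The writer would store the parameters first and then post the parameterless notification; the receiver reads the file when the notification arrives.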

Finally, a small gripe: when recording ends abnormally, the system shows a pop-up that cannot be dismissed, and the only way out is to restart the device. This is one of the more annoying bugs in iOS.