1, Concept description

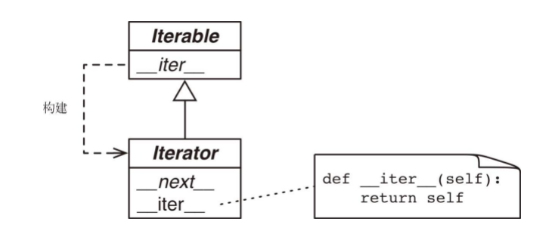

An iterable is an object that can be iterated over. Its iterator is obtained with the built-in iter function. To support this, the iterable implements an __iter__ method that returns an associated iterator.

An iterator is responsible for traversing the data one element at a time: each call to its __next__ method returns the next element. It also implements __iter__, so an iterator can be used wherever an iterable is expected.

A generator is a kind of iterator that produces its data lazily, that is, each element is generated only when it is requested.
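
As a minimal sketch of these three roles, the following uses a plain list and a small generator expression. Both are chosen here only for illustration and are not part of the original example. It shows how iter and next connect an iterable to its iterator, and how the abstract base classes in collections.abc tell the roles apart.

```python
from collections.abc import Iterable, Iterator

numbers = [10, 20, 30]            # a list is an iterable, but not an iterator
it = iter(numbers)                # iter() asks the list for an iterator

print(isinstance(numbers, Iterable), isinstance(numbers, Iterator))  # True False
print(isinstance(it, Iterable), isinstance(it, Iterator))            # True True
print(iter(it) is it)             # True: an iterator's __iter__ returns itself

first = next(it)                  # next() calls the iterator's __next__
rest = list(it)                   # consumes whatever is left
print(first, rest)                # 10 [20, 30]

lazy = (n * n for n in numbers)   # a generator is an iterator as well
print(isinstance(lazy, Iterator)) # True
```

Note that the list itself has no __next__ method; only the object returned by iter does.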

2, Iterability of sequences

Python's built-in sequences can be iterated with a for loop. The interpreter calls iter to obtain an iterator for the sequence. For compatibility with the sequence protocol, iter also accepts objects that define only __getitem__: when no __iter__ is found, it automatically creates an iterator that fetches items by index, starting from 0, until IndexError is raised.

Iterator

    import re
    from dis import dis


    class WordAnalyzer:
        reg_word = re.compile(r'\w+')  # raw string, so \w reaches the regex engine

        def __init__(self, text):
            self.words = self.__class__.reg_word.findall(text)

        def __getitem__(self, index):  # no __iter__: iter() falls back to indexing
            return self.words[index]

    def iter_word_analyzer():
        wa = WordAnalyzer('this is mango word analyzer')
        print('start for wa')
        for w in wa:
            print(w)

        print('start while wa_iter')
        wa_iter = iter(wa)
        while True:
            try:
                print(next(wa_iter))
            except StopIteration as e:  # e is unused; it appears in the bytecode
                break


    iter_word_analyzer()
    dis(iter_word_analyzer)

    # start for wa
    # this
    # is
    # mango
    # word
    # analyzer
    # start while wa_iter
    # this
    # is
    # mango
    # word
    # analyzer
    #  15           0 LOAD_GLOBAL              0 (WordAnalyzer)
    #               2 LOAD_CONST               1 ('this is mango word analyzer')
    #               4 CALL_FUNCTION            1
    #               6 STORE_FAST               0 (wa)
    #
    #  16           8 LOAD_GLOBAL              1 (print)
    #              10 LOAD_CONST               2 ('start for wa')
    #              12 CALL_FUNCTION            1
    #              14 POP_TOP
    #
    #  17          16 LOAD_FAST                0 (wa)
    #              18 GET_ITER
    #         >>   20 FOR_ITER                12 (to 34)
    #              22 STORE_FAST               1 (w)
    #
    #  18          24 LOAD_GLOBAL              1 (print)
    #              26 LOAD_FAST                1 (w)
    #              28 CALL_FUNCTION            1
    #              30 POP_TOP
    #              32 JUMP_ABSOLUTE           20
    #
    #  20     >>   34 LOAD_GLOBAL              1 (print)
    #              36 LOAD_CONST               3 ('start while wa_iter')
    #              38 CALL_FUNCTION            1
    #              40 POP_TOP
    #
    #  21          42 LOAD_GLOBAL              2 (iter)
    #              44 LOAD_FAST                0 (wa)
    #              46 CALL_FUNCTION            1
    #              48 STORE_FAST               2 (wa_iter)
    #
    #  23     >>   50 SETUP_FINALLY           16 (to 68)
    #
    #  24          52 LOAD_GLOBAL              1 (print)
    #              54 LOAD_GLOBAL              3 (next)
    #              56 LOAD_FAST                2 (wa_iter)
    #              58 CALL_FUNCTION            1
    #              60 CALL_FUNCTION            1
    #              62 POP_TOP
    #              64 POP_BLOCK
    #              66 JUMP_ABSOLUTE           50
    #
    #  25     >>   68 DUP_TOP
    #              70 LOAD_GLOBAL              4 (StopIteration)
    #              72 JUMP_IF_NOT_EXC_MATCH   114
    #              74 POP_TOP
    #              76 STORE_FAST               3 (e)
    #              78 POP_TOP
    #              80 SETUP_FINALLY           24 (to 106)
    #
    #  26          82 POP_BLOCK
    #              84 POP_EXCEPT
    #              86 LOAD_CONST               0 (None)
    #              88 STORE_FAST               3 (e)
    #              90 DELETE_FAST              3 (e)
    #              92 JUMP_ABSOLUTE          118
    #              94 POP_BLOCK
    #              96 POP_EXCEPT
    #              98 LOAD_CONST               0 (None)
    #             100 STORE_FAST               3 (e)
    #             102 DELETE_FAST              3 (e)
    #             104 JUMP_ABSOLUTE           50
    #         >>  106 LOAD_CONST               0 (None)
    #             108 STORE_FAST               3 (e)
    #             110 DELETE_FAST              3 (e)
    #             112 RERAISE
    #         >>  114 RERAISE
    #             116 JUMP_ABSOLUTE           50
    #         >>  118 LOAD_CONST               0 (None)
    #             120 RETURN_VALUE
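
The __getitem__ fallback can also be checked in isolation. The sketch below uses a hypothetical Countdown class (not part of the original example) that defines only __getitem__. It assumes nothing beyond the standard library and shows that iter still works on such an object, even though collections.abc.Iterable does not recognize it, because that check only looks for __iter__.

```python
from collections.abc import Iterable


class Countdown:
    """Hypothetical sequence-like class that defines only __getitem__."""

    def __init__(self, start):
        self.start = start

    def __getitem__(self, index):
        if index >= self.start:
            raise IndexError(index)   # signals the fallback iterator to stop
        return self.start - index


c = Countdown(3)
items = list(c)                       # iter() builds an iterator from __getitem__
print(items)                          # [3, 2, 1]
print(isinstance(c, Iterable))        # False: the ABC only checks for __iter__
```

For this reason, calling iter on an object is a more reliable test of iterability than an isinstance check.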

3, Classic iterator pattern

A standard iterator implements two methods: __next__, which returns the next element, and __iter__, which simply returns self.

When an iterator has no more elements, it raises a StopIteration exception. Python's built-in constructs such as for loops, list comprehensions, and tuple unpacking handle this exception automatically.
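
The difference between handling StopIteration by hand and letting the language do it can be sketched as follows. The letters list is an assumption made only for this illustration.

```python
letters = ['a', 'b']

it = iter(letters)
print(next(it), next(it))        # a b

try:
    next(it)                     # nothing left, so StopIteration is raised
    raised = False
except StopIteration:
    raised = True
print(raised)                    # True

# The constructs below catch StopIteration internally and simply stop.
collected = [x for x in iter(letters)]
first, second = iter(letters)
print(collected, first, second)  # ['a', 'b'] a b

# next() also accepts a default that is returned instead of raising.
fallback = next(iter([]), 'empty')
print(fallback)                  # empty
```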

Implementing __iter__ on the iterator is mainly a convenience: it lets the iterator itself be used anywhere an iterable is expected, for example directly in a for loop.

An iterator can be traversed only once. To iterate again, call iter on the iterable again to obtain a new iterator. Each iterator therefore has to maintain its own internal state, which means that an object that must support repeated traversal should not also act as its own iterator.

In terms of the classic object-oriented Iterator design pattern, an iterable can create a new associated iterator at any time, and each iterator is responsible for stepping through the elements.
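
The single-use nature of iterators can be seen with a built-in list, used here as a stand-in for any iterable. This is a small sketch rather than part of the original example.

```python
words = ['this', 'is', 'mango']

it = iter(words)
first_pass = list(it)        # consumes the iterator
second_pass = list(it)       # the same iterator is now exhausted
print(first_pass)            # ['this', 'is', 'mango']
print(second_pass)           # []

fresh = list(iter(words))    # asking the iterable again gives a new iterator
print(fresh)                 # ['this', 'is', 'mango']

# Two iterators over the same iterable keep independent positions.
a, b = iter(words), iter(words)
next(a)
next(a)
print(next(a), next(b))      # mango this
```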

    import re


    class WordAnalyzer:
        reg_word = re.compile(r'\w+')

        def __init__(self, text):
            self.words = self.__class__.reg_word.findall(text)

        def __iter__(self):
            # Every call hands out a fresh iterator with its own position.
            return WordAnalyzerIterator(self.words)


    class WordAnalyzerIterator:
        def __init__(self, words):
            self.words = words
            self.index = 0

        def __iter__(self):
            return self

        def __next__(self):
            try:
                word = self.words[self.index]
            except IndexError:
                raise StopIteration()
            self.index += 1
            return word


    def iter_word_analyzer():
        wa = WordAnalyzer('this is mango word analyzer')
        print('start for wa')
        for w in wa:
            print(w)

        print('start while wa_iter')
        wa_iter = iter(wa)
        while True:
            try:
                print(next(wa_iter))
            except StopIteration:
                break


    iter_word_analyzer()

    # start for wa
    # this
    # is
    # mango
    # word
    # analyzer
    # start while wa_iter
    # this
    # is
    # mango
    # word
    # analyzer

4, Generators are also iterators

A generator is created by calling a generator function, which is a factory function whose body contains yield.

A generator is itself an iterator: it can be advanced with the next function, and it raises a StopIteration exception once it is exhausted.

When a generator runs, it pauses at each yield statement and returns the value of the expression to the right of yield. Execution resumes from that point on the following call to next.

    def gen_func():
        print('first yield')
        yield 'first'
        print('second yield')
        yield 'second'


    print(gen_func)
    g = gen_func()
    print(g)
    for val in g:
        print(val)

    g = gen_func()
    print(next(g))
    print(next(g))
    print(next(g))  # the generator is exhausted, so this call raises StopIteration

    # <function gen_func at 0x7f1198175040>
    # <generator object gen_func at 0x7f1197fb6cf0>
    # first yield
    # first
    # second yield
    # second
    # first yield
    # first
    # second yield
    # second
    # StopIteration
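
That a generator follows the iterator protocol, and that it pauses between values, can be observed with inspect.getgeneratorstate from the standard library. The countdown function below is a hypothetical example introduced only for this sketch.

```python
import inspect


def countdown(n):
    """Hypothetical generator function used only for this illustration."""
    while n > 0:
        yield n
        n -= 1


g = countdown(2)
print(iter(g) is g)                   # True: __iter__ returns the generator itself
print(inspect.getgeneratorstate(g))   # GEN_CREATED: the body has not started yet
print(next(g))                        # 2
print(inspect.getgeneratorstate(g))   # GEN_SUSPENDED: paused at the yield
remaining = list(g)                   # runs the generator to completion
print(remaining)                      # [1]
print(inspect.getgeneratorstate(g))   # GEN_CLOSED: exhausted
```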

We can implement __iter__ as a generator function:

    import re


    class WordAnalyzer:
        reg_word = re.compile(r'\w+')

        def __init__(self, text):
            self.words = self.__class__.reg_word.findall(text)

        def __iter__(self):
            # The yield makes __iter__ a generator function, so each call
            # returns a new generator; no separate iterator class is needed.
            for word in self.words:
                yield word


    def iter_word_analyzer():
        wa = WordAnalyzer('this is mango word analyzer')
        print('start for wa')
        for w in wa:
            print(w)

        print('start while wa_iter')
        wa_iter = iter(wa)
        while True:
            try:
                print(next(wa_iter))
            except StopIteration:
                break


    iter_word_analyzer()

    # start for wa
    # this
    # is
    # mango
    # word
    # analyzer
    # start while wa_iter
    # this
    # is
    # mango
    # word
    # analyzer
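
One practical consequence, sketched here with a hypothetical Words class that mirrors the structure above, is that the same object can be iterated in nested loops. Each loop calls __iter__ and receives its own generator.

```python
import re


class Words:
    """Hypothetical minimal iterable whose __iter__ is a generator function."""

    def __init__(self, text):
        self.words = re.findall(r'\w+', text)

    def __iter__(self):
        for word in self.words:
            yield word


ws = Words('a b c')

# The inner loop restarts from the beginning for every outer element.
pairs = [(x, y) for x in ws for y in ws if x < y]
print(pairs)                  # [('a', 'b'), ('a', 'c'), ('b', 'c')]

g1, g2 = iter(ws), iter(ws)
print(g1 is g2)               # False: two separate generator objects
```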

5, Implement lazy iterators

One of the strengths of iterators is that __next__ produces elements one at a time, which makes it possible to traverse very large data containers without loading everything at once.

Our previous implementations call findall during initialization and so build the complete list of words up front, which is not ideal. We can use finditer from the re module instead to fetch matches only as the traversal proceeds.

    import re


    class WordAnalyzer:
        reg_word = re.compile(r'\w+')

        def __init__(self, text):
            # self.words = self.__class__.reg_word.findall(text)
            # Keep only the text; the words are extracted lazily in __iter__.
            self.text = text

        def __iter__(self):
            # finditer returns an iterator that produces match objects on demand.
            g = self.__class__.reg_word.finditer(self.text)
            print(g)
            for match in g:
                yield match.group()


    def iter_word_analyzer():
        wa = WordAnalyzer('this is mango word analyzer')
        print('start for wa')
        for w in wa:
            print(w)

        print('start while wa_iter')
        wa_iter = iter(wa)
        # Creating a generator does not run its body, so this prints nothing.
        wa_iter1 = iter(wa)
        while True:
            try:
                print(next(wa_iter))
            except StopIteration:
                break


    iter_word_analyzer()

    # start for wa
    # <callable_iterator object at 0x7feed103e040>
    # this
    # is
    # mango
    # word
    # analyzer
    # start while wa_iter
    # <callable_iterator object at 0x7feed103e040>
    # this
    # is
    # mango
    # word
    # analyzer
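
The benefit of the lazy version shows up when only part of the data is needed. The sketch below assumes a hypothetical helper iter_words and an artificially repeated text. It uses itertools.islice to take the first three words, so scanning stops after the third match instead of collecting every word first.

```python
import re
from itertools import islice

WORD = re.compile(r'\w+')


def iter_words(text):
    """Hypothetical helper: yield words one by one using finditer."""
    for match in WORD.finditer(text):
        yield match.group()


big_text = 'mango ' * 100_000           # stand-in for a large document

# Only three matches are ever produced; the rest of the text is not scanned.
first_three = list(islice(iter_words(big_text), 3))
print(first_three)                      # ['mango', 'mango', 'mango']
```

With findall, the same request would first build a list of all 100,000 words.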

6, Simplifying lazy iterators with generator expressions

A generator expression is a declarative way to define a generator. Its syntax resembles a list comprehension, but its elements are produced lazily.

    def gen_func():
        print('first yield')
        yield 'first'
        print('second yield')
        yield 'second'


    # The list comprehension runs gen_func to completion before the loop starts.
    l = [x for x in gen_func()]
    for x in l:
        print(x)

    print()

    # The generator expression advances gen_func only as the loop asks for values.
    ge = (x for x in gen_func())
    print(ge)
    for x in ge:
        print(x)

    # first yield
    # second yield
    # first
    # second
    #
    # <generator object <genexpr> at 0x7f78ff5dfd60>
    # first yield
    # first
    # second yield
    # second
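
The same contrast can be made measurable by recording when a function is actually called. The square function and the calls list below are assumptions introduced only for this sketch.

```python
calls = []


def square(n):
    """Hypothetical helper that records each call before returning n * n."""
    calls.append(n)
    return n * n


eager = [square(n) for n in range(3)]   # list comprehension: runs immediately
print(calls)                            # [0, 1, 2]

calls.clear()
lazy = (square(n) for n in range(3))    # generator expression: nothing runs yet
print(calls)                            # []

print(next(lazy))                       # 0
print(calls)                            # [0]: only one element has been computed

total = sum(lazy)                       # consumes the remaining elements: 1 + 4
print(total)                            # 5
```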

Implementing the word analyzer with a generator expression:

    import re


    class WordAnalyzer:
        reg_word = re.compile(r'\w+')

        def __init__(self, text):
            # self.words = self.__class__.reg_word.findall(text)
            self.text = text

        def __iter__(self):
            # g = self.__class__.reg_word.finditer(self.text)
            # print(g)
            # for match in g:
            #     yield match.group()
            # __iter__ is now a plain method that returns a generator object.
            ge = (match.group() for match in self.__class__.reg_word.finditer(self.text))
            print(ge)
            return ge


    def iter_word_analyzer():
        wa = WordAnalyzer('this is mango word analyzer')
        print('start for wa')
        for w in wa:
            print(w)

        print('start while wa_iter')
        wa_iter = iter(wa)
        while True:
            try:
                print(next(wa_iter))
            except StopIteration:
                break


    iter_word_analyzer()

    # start for wa
    # <generator object WordAnalyzer.__iter__.<locals>.<genexpr> at 0x7f4178189200>
    # this
    # is
    # mango
    # word
    # analyzer
    # start while wa_iter
    # <generator object WordAnalyzer.__iter__.<locals>.<genexpr> at 0x7f4178189200>
    # this
    # is
    # mango
    # word
    # analyzer