I am 🌟 Liao Zhiwei 🌟, a 🌕 Java development engineer 🌕, 📝 a high-quality creator in the Java field 📝, 🎉 a CSDN blog expert 🎉, and 🌹 founder of the "Big man behind the scenes" community 🌹. I have many years of front-line R&D experience, have studied the underlying source code of many common frameworks and middleware, and have hands-on architecture experience with large-scale distributed systems, microservices, and "three-high" architectures (high performance, high concurrency, and high availability).

🍊 Blogger: java_wxid

🍊 Blogger: Liao Zhiwei

🍊 community: Big man behind the scenes

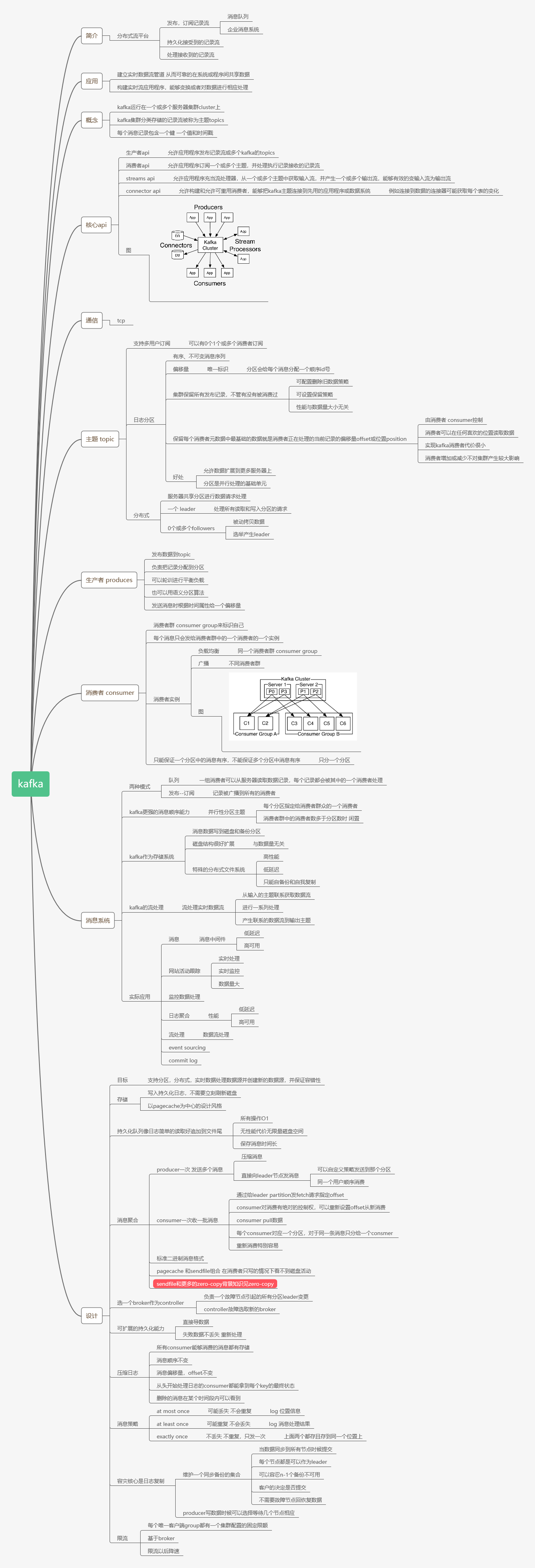

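Contents of this article:

Accessing Kafka from a Java client

Adding the Maven dependency

<dependency> <groupId>org.apache.kafka</groupId> <artifactId>kafka-clients</artifactId> <version>2.4.1</version> </dependency>

The producer below also imports com.alibaba.fastjson.JSON, so the fastjson library (com.alibaba:fastjson) must be on the classpath as well.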

Message sender code

package com.sky.kafka.kafkaDemo;

import com.alibaba.fastjson.JSON;

import org.apache.kafka.clients.producer.*;

import org.apache.kafka.common.serialization.StringSerializer;

import java.util.Properties;

import java.util.concurrent.CountDownLatch;

import java.util.concurrent.ExecutionException;

import java.util.concurrent.TimeUnit;

public class MsgProducer {

private final static String TOPIC_NAME = "my-replicated-topic";

public static void main(String[] args) throws InterruptedException, ExecutionException {

Properties props = new Properties();

props.put(ProducerConfig.BOOTSTRAP_SERVERS_CONFIG, "192.168.65.60:9092,192.168.65.60:9093,192.168.65.60:9094");

/*

Message persistence (acknowledgement) settings:

(1) acks=0: the producer does not wait for any broker acknowledgement before sending the next message. Highest performance, but messages are the most likely to be lost.

(2) acks=1: the producer waits until the leader has written the message to its local log, but does not wait for the followers, before sending the next message.

In this case, if the leader fails before the followers have replicated the message, the message is lost.

(3) acks=-1 or all: the producer waits until all in-sync replicas have written the message to their logs. The broker/topic setting min.insync.replicas (default 1; 2 or more is recommended) is the minimum number of in-sync replicas required for the write to be accepted.

This guarantees that no data is lost as long as one in-sync replica survives. It is the strongest guarantee, and is generally used only in financial or other money-handling scenarios.

*/

/*props.put(ProducerConfig.ACKS_CONFIG, "1");

*//*

Retry when a send fails. The default retry interval is 100 ms. Retries improve delivery reliability, but they can also produce duplicate messages (for example after network jitter), so

the consumer must process messages idempotently

*//*

props.put(ProducerConfig.RETRIES_CONFIG, 3);

//Retry interval setting

props.put(ProducerConfig.RETRY_BACKOFF_MS_CONFIG, 300);

//Size of the local send buffer. Messages are written to this buffer first, which improves send throughput. The default is 33554432 bytes (32 MB)

props.put(ProducerConfig.BUFFER_MEMORY_CONFIG, 33554432);

*//*

A local Kafka sender thread takes messages from the buffer and sends them to the broker in batches.

Batch size: the default is 16384 bytes (16 KB), meaning a batch is sent as soon as it holds 16 KB

*//*

props.put(ProducerConfig.BATCH_SIZE_CONFIG, 16384);

*//*

The default is 0, meaning each message is sent immediately, which hurts throughput.

It is typically set to about 10 ms: a message first enters a local batch, and if the batch reaches 16 KB within 10 ms it is sent at once;

if the batch is still not full after 10 ms it is sent anyway, so that the send delay does not grow too long

*//*

props.put(ProducerConfig.LINGER_MS_CONFIG, 10);*/

//Serialize the sent key from the string into a byte array

props.put(ProducerConfig.KEY_SERIALIZER_CLASS_CONFIG, StringSerializer.class.getName());

//Serialize the sent message value from a string into a byte array

props.put(ProducerConfig.VALUE_SERIALIZER_CLASS_CONFIG, StringSerializer.class.getName());

Producer<String, String> producer = new KafkaProducer<String, String>(props);

int msgNum = 5;

final CountDownLatch countDownLatch = new CountDownLatch(msgNum);

for (int i = 1; i <= msgNum; i++) {

Order order = new Order(i, 100 + i, 1, 1000.00);

//Specify send partition

/*ProducerRecord<String, String> producerRecord = new ProducerRecord<String, String>(TOPIC_NAME

, 0, order.getOrderId().toString(), JSON.toJSONString(order));*/

//No partition is specified, so the partition is computed from the key as hash(key) % partitionNum

ProducerRecord<String, String> producerRecord = new ProducerRecord<String, String>(TOPIC_NAME

, order.getOrderId().toString(), JSON.toJSONString(order));

//Synchronous send: block until the send has completed

/*RecordMetadata metadata = producer.send(producerRecord).get();

System.out.println("Synchronous send result: " + "topic-" + metadata.topic() + "|partition-"

+ metadata.partition() + "|offset-" + metadata.offset());*/

//Send message in asynchronous callback mode

producer.send(producerRecord, new Callback() {

public void onCompletion(RecordMetadata metadata, Exception exception) {

if (exception != null) {

System.err.println("Failed to send message: " + exception);

}

if (metadata != null) {

System.out.println("Send message results asynchronously:" + "topic-" + metadata.topic() + "|partition-"

+ metadata.partition() + "|offset-" + metadata.offset());

}

countDownLatch.countDown();

}

});

//TODO: award points

}

countDownLatch.await(5, TimeUnit.SECONDS);

producer.close();

}

}
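
The producer above references an `Order` class that the article does not show. The following is a minimal sketch that would let the code compile, assuming four fields that match the call `new Order(i, 100 + i, 1, 1000.00)`. Only `getOrderId()` and the four-argument constructor are implied by the producer; the other field names are guesses. The class is declared without `public` so the snippet can live in any file; in a real project it would normally be a public class in its own `Order.java`, in the same package as `MsgProducer`.

```java
// Hypothetical Order bean. Field names other than orderId are assumptions.
class Order {
    private Integer orderId;
    private Integer productId;
    private Integer productNum;
    private Double orderAmount;

    // No-argument constructor, convenient for JSON libraries that build beans reflectively
    public Order() {
    }

    public Order(Integer orderId, Integer productId, Integer productNum, Double orderAmount) {
        this.orderId = orderId;
        this.productId = productId;
        this.productNum = productNum;
        this.orderAmount = orderAmount;
    }

    // Getters and setters: JSON serializers typically read bean properties through these
    public Integer getOrderId() { return orderId; }
    public void setOrderId(Integer orderId) { this.orderId = orderId; }

    public Integer getProductId() { return productId; }
    public void setProductId(Integer productId) { this.productId = productId; }

    public Integer getProductNum() { return productNum; }
    public void setProductNum(Integer productNum) { this.productNum = productNum; }

    public Double getOrderAmount() { return orderAmount; }
    public void setOrderAmount(Double orderAmount) { this.orderAmount = orderAmount; }
}
```

Because `getOrderId()` returns a boxed `Integer`, the call `order.getOrderId().toString()` in the producer works as written.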

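The producer comment gives the partition formula as hash(key) % partitionNum. The sketch below illustrates only that idea. Note the substitution: kafka-clients hashes the serialized key bytes with murmur2, while this sketch uses `String.hashCode()` as a stand-in, so the partition numbers it computes will not match the ones Kafka actually picks. `PartitionSketch` and `partitionFor` are hypothetical names.

```java
class PartitionSketch {
    // Maps a key to a partition index in the range [0, partitionNum).
    // String.hashCode() is a stand-in for Kafka's murmur2 hash (assumption for illustration).
    static int partitionFor(String key, int partitionNum) {
        if (partitionNum <= 0) {
            throw new IllegalArgumentException("partitionNum must be positive");
        }
        // Clear the sign bit so the remainder is never negative
        return (key.hashCode() & 0x7fffffff) % partitionNum;
    }

    public static void main(String[] args) {
        int partitionNum = 3; // assumed partition count of the topic
        for (int orderId = 1; orderId <= 5; orderId++) {
            String key = Integer.toString(orderId);
            System.out.println("key=" + key + " -> partition " + partitionFor(key, partitionNum));
        }
    }
}
```

The property that matters for ordering is that the same key always maps to the same partition, as long as the partition count does not change.
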
Message receiver code

package com.sky.kafka.kafkaDemo;

import org.apache.kafka.clients.consumer.ConsumerConfig;

import org.apache.kafka.clients.consumer.ConsumerRecord;

import org.apache.kafka.clients.consumer.ConsumerRecords;

import org.apache.kafka.clients.consumer.KafkaConsumer;

import org.apache.kafka.common.serialization.StringDeserializer;

import java.time.Duration;

import java.util.Arrays;

import java.util.Properties;

public class MsgConsumer {

private final static String TOPIC_NAME = "my-replicated-topic";

private final static String CONSUMER_GROUP_NAME = "testGroup";

public static void main(String[] args) {

Properties props = new Properties();

props.put(ConsumerConfig.BOOTSTRAP_SERVERS_CONFIG, "192.168.65.60:9092,192.168.65.60:9093,192.168.65.60:9094");

// Consumer group name

props.put(ConsumerConfig.GROUP_ID_CONFIG, CONSUMER_GROUP_NAME);

// Whether to commit offsets automatically. The default is true

props.put(ConsumerConfig.ENABLE_AUTO_COMMIT_CONFIG, "true");

// Interval between automatic offset commits

props.put(ConsumerConfig.AUTO_COMMIT_INTERVAL_MS_CONFIG, "1000");

//props.put(ConsumerConfig.ENABLE_AUTO_COMMIT_CONFIG, "false");

/*

What to do when the consumer group is new to the topic, or when the requested offset does not exist (for example, it has already been deleted):

latest (default): consume only messages sent to the topic after the consumer starts

earliest: consume from the beginning the first time, then continue from the committed offset. This differs from consumer.seekToBeginning, which consumes from the beginning every time

*/

//props.put(ConsumerConfig.AUTO_OFFSET_RESET_CONFIG, "earliest");

/*

Interval at which the consumer sends heartbeats to the broker. If a rebalance is under way, the broker tells the consumer about it

through the heartbeat response, so this interval can be kept fairly short

*/

props.put(ConsumerConfig.HEARTBEAT_INTERVAL_MS_CONFIG, 1000);

/*

How long the broker waits without receiving a heartbeat before it considers the consumer failed and removes it from the consumer group;

its partitions are then reassigned to other consumers. The default is 10 seconds

*/

props.put(ConsumerConfig.SESSION_TIMEOUT_MS_CONFIG, 10 * 1000);

//The maximum number of messages pulled by a poll. If the consumer processes quickly, it can be set to be larger. If the processing speed is average, it can be set to be smaller

props.put(ConsumerConfig.MAX_POLL_RECORDS_CONFIG, 500);

/*

If the interval between two poll calls exceeds this time, the consumer is considered too slow at processing:

it is removed from the consumer group and its partitions are reassigned to other consumers

*/

props.put(ConsumerConfig.MAX_POLL_INTERVAL_MS_CONFIG, 30 * 1000);

props.put(ConsumerConfig.KEY_DESERIALIZER_CLASS_CONFIG, StringDeserializer.class.getName());

props.put(ConsumerConfig.VALUE_DESERIALIZER_CLASS_CONFIG, StringDeserializer.class.getName());

KafkaConsumer<String, String> consumer = new KafkaConsumer<String, String>(props);

consumer.subscribe(Arrays.asList(TOPIC_NAME));

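// Consume a specific partition (assign() and subscribe() are mutually exclusive, so remove the subscribe() call above when using assign())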

//consumer.assign(Arrays.asList(new TopicPartition(TOPIC_NAME, 0)));

//Replay: consume the partition from the beginning

/*consumer.assign(Arrays.asList(new TopicPartition(TOPIC_NAME, 0)));

consumer.seekToBeginning(Arrays.asList(new TopicPartition(TOPIC_NAME, 0)));*/

//Consume from a specified offset

/*consumer.assign(Arrays.asList(new TopicPartition(TOPIC_NAME, 0)));

consumer.seek(new TopicPartition(TOPIC_NAME, 0), 10);*/

//Start consuming from a specified point in time

/*List<PartitionInfo> topicPartitions = consumer.partitionsFor(TOPIC_NAME);

//Start consuming from 1 hour ago

long fetchDataTime = System.currentTimeMillis() - 1000 * 60 * 60;

Map<TopicPartition, Long> map = new HashMap<>();

for (PartitionInfo par : topicPartitions) {

map.put(new TopicPartition(TOPIC_NAME, par.partition()), fetchDataTime);

}

Map<TopicPartition, OffsetAndTimestamp> parMap = consumer.offsetsForTimes(map);

//assign() replaces any previous assignment, so assign all partitions once before seeking

consumer.assign(parMap.keySet());

for (Map.Entry<TopicPartition, OffsetAndTimestamp> entry : parMap.entrySet()) {

TopicPartition key = entry.getKey();

OffsetAndTimestamp value = entry.getValue();

//value is null when the partition has no message at or after the timestamp

if (key == null || value == null) continue;

Long offset = value.offset();

System.out.println("partition-" + key.partition() + "|offset-" + offset);

//Seek to the offset that corresponds to the timestamp

consumer.seek(key, offset);

}*/

while (true) {

/*

* poll() is a long-polling call that pulls messages

*/

ConsumerRecords<String, String> records = consumer.poll(Duration.ofMillis(1000));

for (ConsumerRecord<String, String> record : records) {

System.out.printf("Message received: partition = %d,offset = %d, key = %s, value = %s%n", record.partition(),

record.offset(), record.key(), record.value());

}

/*if (records.count() > 0) {

// Commit the offset manually and synchronously: the current thread blocks until the commit succeeds

// Synchronous commit is the usual choice, because there is generally no further logic to run after the commit

consumer.commitSync();

// Commit the offset manually and asynchronously: the current thread is not blocked and can continue with the logic that follows

consumer.commitAsync(new OffsetCommitCallback() {

@Override

public void onComplete(Map<TopicPartition, OffsetAndMetadata> offsets, Exception exception) {

if (exception != null) {

System.err.println("Commit failed for " + offsets);

System.err.println("Commit failed exception: " + exception);

}

}

});

}*/

}

}

}
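
The producer comments note that retries can deliver the same message more than once, so the receiver has to process messages idempotently. One common way to do that is to remember which business keys have already been handled and skip repeats. The sketch below assumes the message key (the order ID in this article) uniquely identifies a message; `IdempotentHandler` is a hypothetical helper, and the in-memory set stands in for a durable store such as a database unique constraint, which a real service would need in order to survive restarts.

```java
import java.util.HashSet;
import java.util.Set;

class IdempotentHandler {
    // Keys that have already been processed (in-memory only; an assumption for illustration)
    private final Set<String> processedKeys = new HashSet<>();
    // Counts how many times the business logic actually ran
    private int sideEffects = 0;

    // Returns true if the message was processed, false if it was a duplicate and skipped
    boolean handle(String key, String value) {
        if (!processedKeys.add(key)) {
            return false; // add() returns false when the key was already present
        }
        sideEffects++; // stand-in for the real business logic
        return true;
    }

    int sideEffectCount() {
        return sideEffects;
    }

    public static void main(String[] args) {
        IdempotentHandler handler = new IdempotentHandler();
        System.out.println(handler.handle("1", "{\"orderId\":1}")); // first delivery
        System.out.println(handler.handle("1", "{\"orderId\":1}")); // redelivery of the same key
        System.out.println("business logic ran " + handler.sideEffectCount() + " time(s)");
    }
}
```

Inside the poll loop of `MsgConsumer`, the call would look like `handler.handle(record.key(), record.value())`.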

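The commented-out "start from a point in time" code relies on `consumer.offsetsForTimes`, which returns, for each partition, the earliest offset whose timestamp is greater than or equal to the requested timestamp, or null when there is none. The sketch below imitates that lookup on a plain array so the rule can be tried without a broker. It assumes that offsets start at 0 and that timestamps never decrease along the log; `OffsetForTimeSketch` is a hypothetical name, and -1 plays the role of the null result.

```java
class OffsetForTimeSketch {
    // timestamps[i] is the timestamp of the record at offset i (assumed non-decreasing).
    // Returns the earliest offset whose timestamp is >= target, or -1 if no such record exists.
    static long offsetForTime(long[] timestamps, long target) {
        int lo = 0;
        int hi = timestamps.length; // search the half-open range [lo, hi)
        while (lo < hi) {
            int mid = (lo + hi) >>> 1;
            if (timestamps[mid] < target) {
                lo = mid + 1; // record is too old, look to the right
            } else {
                hi = mid;     // candidate found, keep looking for an earlier one
            }
        }
        return lo == timestamps.length ? -1 : lo;
    }

    public static void main(String[] args) {
        long[] log = {100L, 200L, 200L, 300L}; // made-up timestamps for offsets 0..3
        System.out.println(offsetForTime(log, 200L));
        System.out.println(offsetForTime(log, 250L));
        System.out.println(offsetForTime(log, 301L));
    }
}
```

This is why the consumer code skips entries whose value is null: a partition with no record at or after the requested time has nothing to seek to.
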
Summary

That is all for today. Readers are welcome to join the discussion in the comments and to offer opinions or suggestions on the article 📝. I will incorporate reasonable suggestions, update the post, and share it again.

🍊 The official account of WeChat: North and South dust

🍊 Home address: java_wxid

🍊 Community address: Big man behind the scenes

A few words for readers

I am a very ordinary programmer. In a crowd, apart from my natural 🌹 good looks 🌹, the only thing that stands out is my height of 180. I have been quietly writing blog posts for many years. As the old saying goes, 🌕 before you succeed, persistence just looks foolish 🌕. I hope that a large body of work, accumulated time, personal charm, luck, and opportunity will let me build my own 🌟 technical influence 🌟. I also hope to become a well-rounded engineer who 🎄 understands technology 🎄, 🎄 understands business 🎄, and 🎄 understands management 🎄: the chief designer of a project's architecture, the 🌕 brain of the team 🌕 who sees the whole picture, and the 🍊 absolute core 🍊 of the technical team. That is my goal for the next few years.

Tip: the following resources are shared with readers.

Interview materials

Click: Interview materials

Extraction code: 2021

200 sets of PPT templates

Click: 200 sets of PPT templates

Extraction code: 2021

Wisdom of questioning

Click: Wisdom of questioning

Extraction code: 2021

Java development learning route

| Name | Link |
|---|---|
| JavaSE | Click: JavaSE |
| MySQL column | Click: MySQL column |
| JDBC column | Click: JDBC column |
| MyBatis column | Click: MyBatis column |
| Web column | Click: Web column |
| Spring column | Click: Spring column |
| Spring MVC column | Click: Spring MVC column |
| SpringBoot column | Click: SpringBoot column |
| Springcourse column | Click: Springcourse column |
| Redis column | Click: Redis column |
| Linux column | Click: Linux column |
| Maven 3 column | Click: Maven 3 column |
| Spring Security5 column | Click: Spring Security5 column |
| More columns | For more columns, see the java_wxid home page |

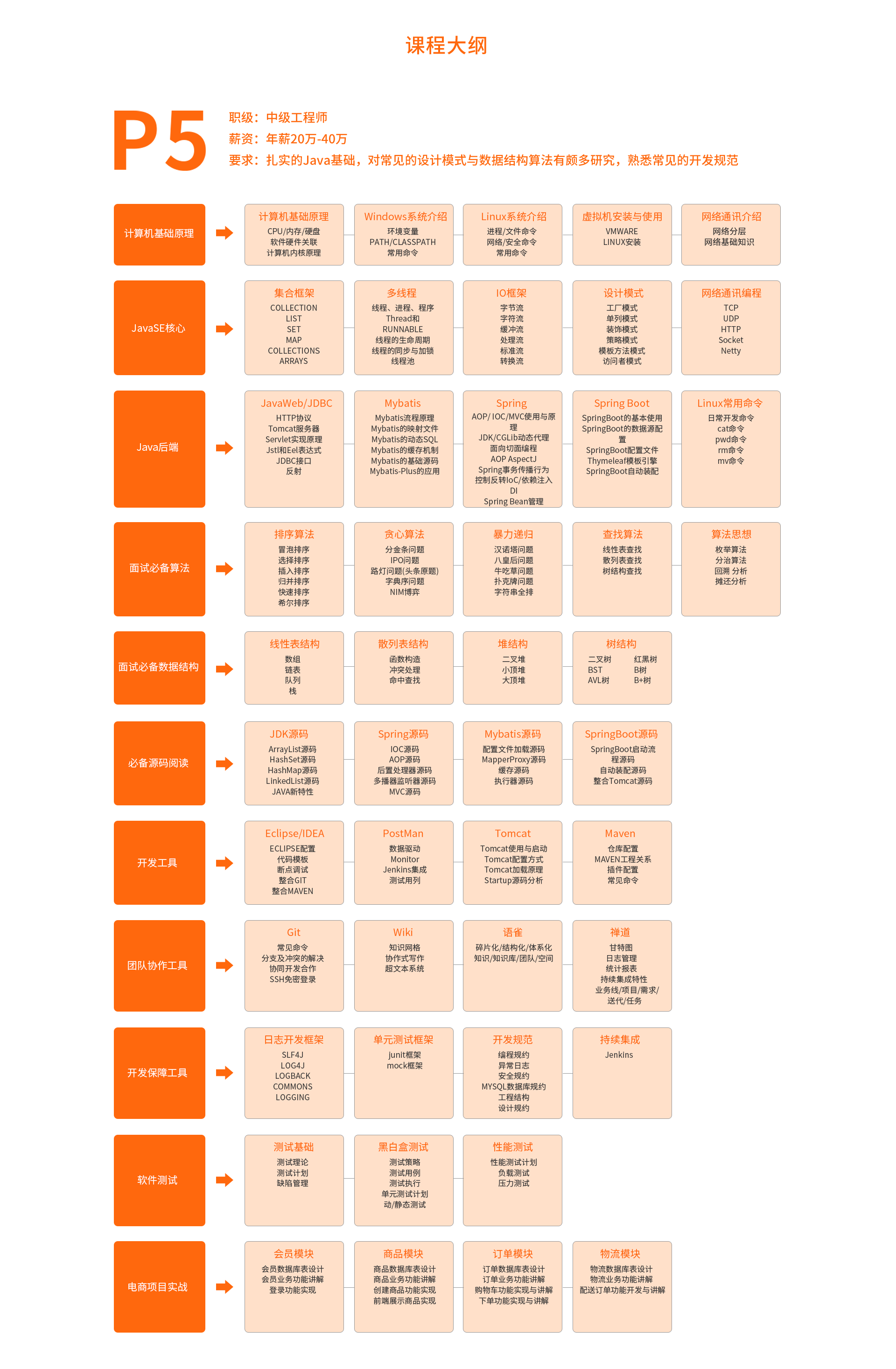

P5 learning Roadmap

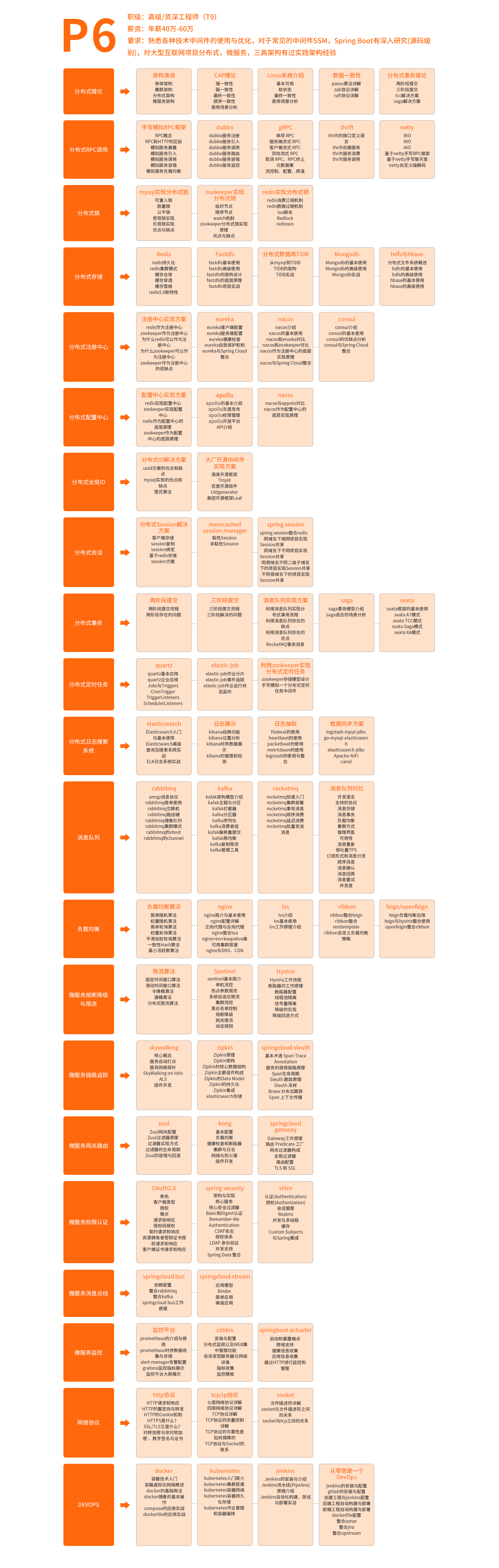

P6 learning Roadmap

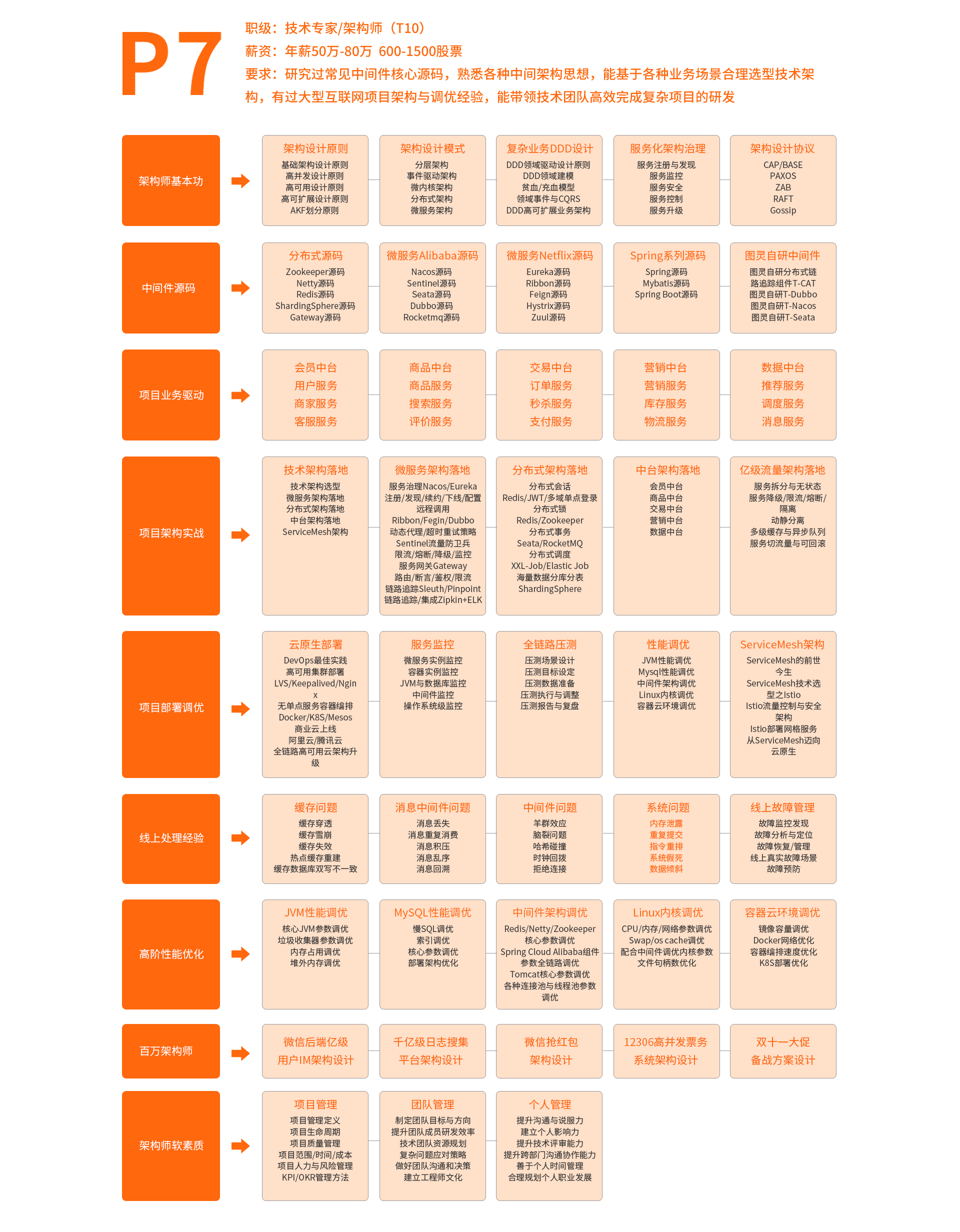

P7 learning Roadmap

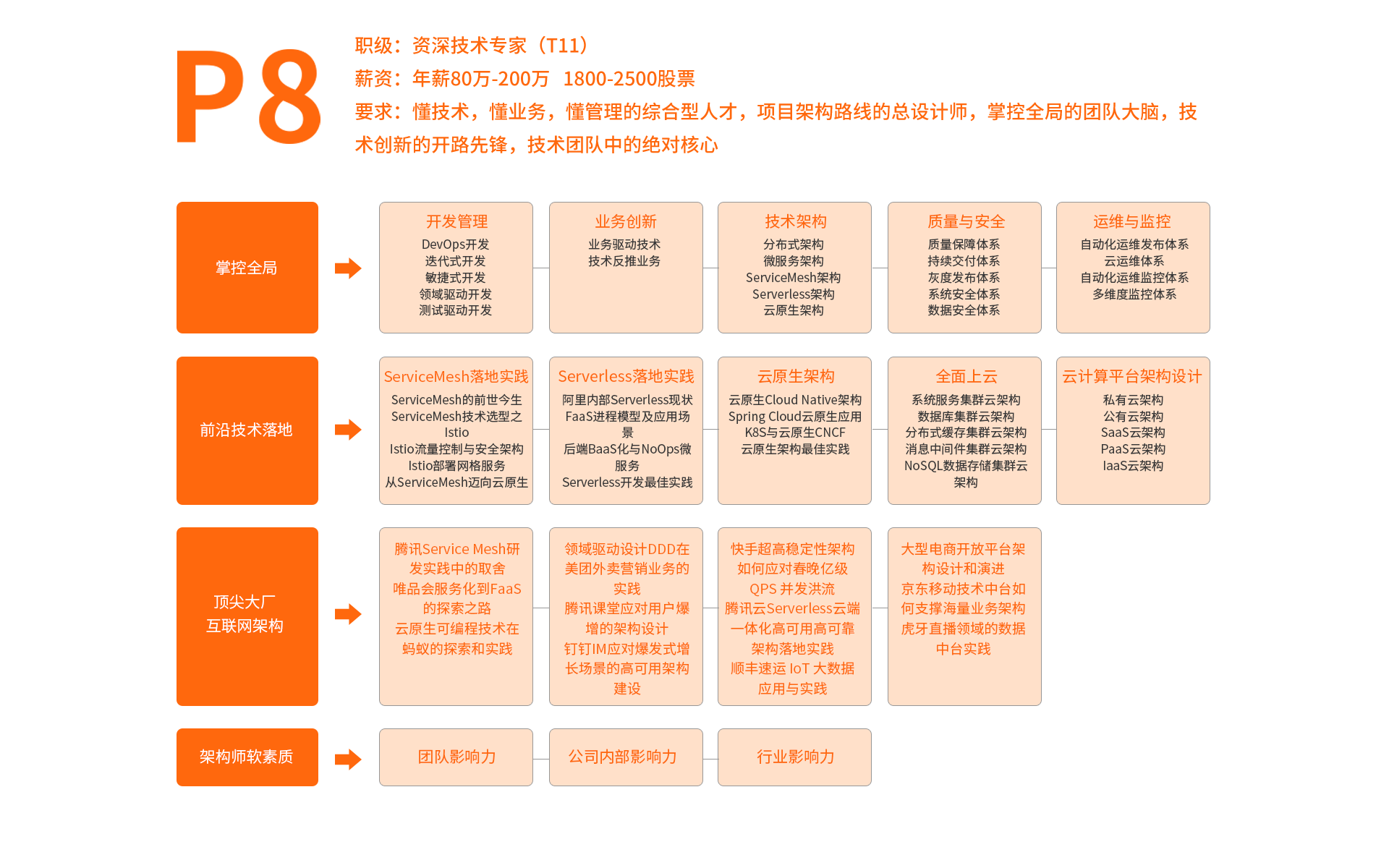

P8 learning Roadmap

The four figures above describe in detail the knowledge and skills a Java developer needs. Readers who have not yet mastered them are welcome to message me privately.
