The previous articles on Java concurrency mainly focused on guaranteeing the atomicity of code in a critical section when it accesses shared variables. In this chapter, we go further and study the visibility of shared variables across threads and the ordering of instructions.

1. Java Memory Model (JMM)

JMM stands for Java Memory Model. It defines the abstract concepts of main memory and working memory at the Java level. Underneath, these correspond to CPU registers, caches, physical memory, CPU instruction optimizations, and so on. The JMM shows up in the following aspects:

- Atomicity: ensures that instructions are not affected by thread context switches (explained earlier)
- Visibility: ensures that instructions are not affected by CPU caches
- Ordering: ensures that instructions are not affected by the CPU's instruction-level parallel optimizations

Visibility and ordering are explained below.

2. Visibility

First, look at the following code and try running it:

```java
public class CantGoOut {

    static boolean run = true;

    public static void main(String[] args) throws InterruptedException {
        Thread t = new Thread(() -> {
            while (run) {
                // ....
                // System.out.println("add me and you can stop"); // with this line added, the thread stops
            }
        });
        t.start();

        Thread.sleep(1000);
        System.out.println("Try to stop the thread");
        run = false; // thread t does not stop as expected
    }
}
```

Why? Analysis:

-

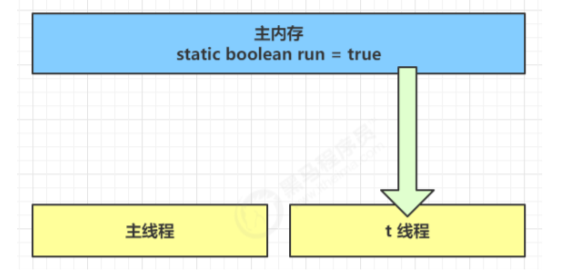

In the initial state, the t thread just started to read the value of run from the main memory to the working memory.

-

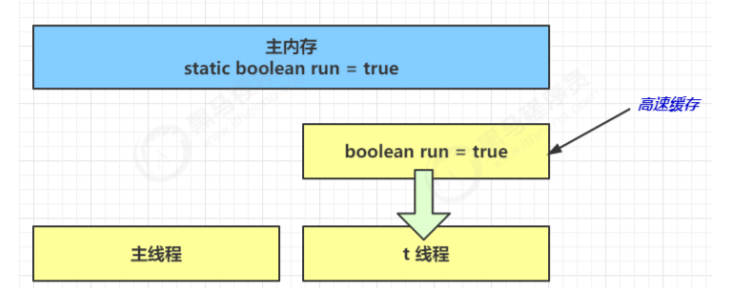

Because t1 thread frequently reads the value of run from main memory, jit real-time compiler will cache the value of run into the cache in its own working memory to reduce the access to run in main memory to improve efficiency

-

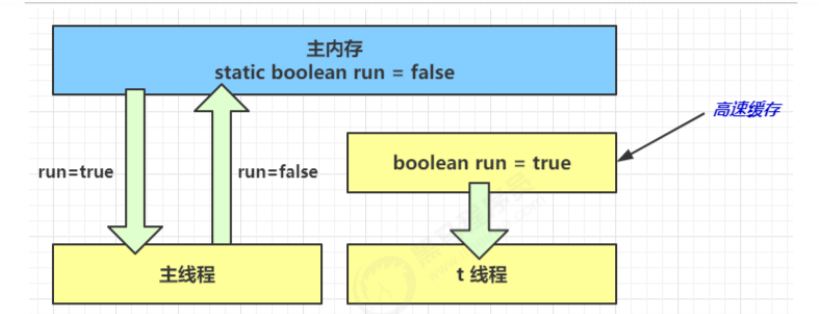

One second later, the main thread modifies the value of run and synchronizes it to main memory, while t reads the value of this variable from the cache in its working memory, and the result is always the old value.

Solution

The volatile keyword can be applied to instance fields and static fields. It prevents a thread from looking up the variable's value in its own working cache and forces it to read the value from main memory. Conceptually, threads operate on volatile variables directly in main memory.
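Below is a minimal sketch of that fix applied to the CantGoOut example; the only essential change is declaring run volatile. The class name VolatileStop and the helper runAndStop are hypothetical. They were added so the result can be checked, and the thread is made a daemon so that a failed run cannot keep the JVM alive.

```java
class VolatileStop {

    // volatile: every read of run in the loop sees the latest value written by main
    static volatile boolean run = true;

    // Hypothetical helper: starts the loop, clears the flag, reports whether t exited
    static boolean runAndStop() {
        run = true;
        Thread t = new Thread(() -> {
            while (run) {
                // busy loop, as in CantGoOut
            }
        });
        t.setDaemon(true); // a failed run cannot keep the JVM alive
        t.start();
        try {
            Thread.sleep(1000);
            System.out.println("Try to stop the thread");
            run = false;  // this write is now visible to t
            t.join(5000); // give t up to 5 seconds to leave the loop
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
            throw new IllegalStateException(e);
        }
        return !t.isAlive();
    }

    public static void main(String[] args) {
        System.out.println("stopped: " + runAndStop());
    }
}
```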

The synchronized keyword has the same effect. In the Java memory model, a thread that acquires a synchronized lock goes through these steps:

1. It clears its working memory.
2. It copies the latest values of the variables from main memory into working memory.
3. It executes the code.
4. It flushes the changed values of shared variables back to main memory.
5. It releases the mutex.
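For comparison, here is a sketch of the same loop fixed with synchronized instead of volatile. The class name SyncStop, the lock object LOCK and the helper runAndStop are hypothetical. Both the read in the loop and the write from main go through the same lock, so each lock acquisition sees the latest value. Thread.onSpinWait requires Java 9 or later.

```java
class SyncStop {

    // Plain (non-volatile) field: visibility comes from locking on LOCK
    static boolean run = true;
    static final Object LOCK = new Object();

    // Hypothetical helper: same experiment as CantGoOut, but every access to run is locked
    static boolean runAndStop() {
        synchronized (LOCK) {
            run = true;
        }
        Thread t = new Thread(() -> {
            while (true) {
                synchronized (LOCK) {
                    // Acquiring LOCK guarantees we see the last value written under LOCK
                    if (!run) {
                        break;
                    }
                }
                Thread.onSpinWait(); // spin-wait hint between lock acquisitions
            }
        });
        t.setDaemon(true); // a failed run cannot keep the JVM alive
        t.start();
        try {
            Thread.sleep(1000);
            System.out.println("Try to stop the thread");
            synchronized (LOCK) {
                run = false; // published when LOCK is released
            }
            t.join(5000);
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
            throw new IllegalStateException(e);
        }
        return !t.isAlive();
    }

    public static void main(String[] args) {
        System.out.println("stopped: " + runAndStop());
    }
}
```

The volatile version is lighter for a single flag. The lock-based version matters when the flag has to be checked together with other shared state.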

2.1 Synchronization pattern: two-phase termination

The two-phase termination pattern can be implemented with a volatile flag:

```java
public class TwoStepStop {

    public static void main(String[] args) throws InterruptedException {
        Monitor monitor = new Monitor();
        monitor.start();
        Thread.sleep(3500);
        monitor.stop();
    }
}

class Monitor {

    Thread monitor;

    // Flag indicating whether termination has been requested
    private volatile boolean stop = false;

    /**
     * Start the monitor thread
     */
    public void start() {
        // Create the thread that does the monitoring
        monitor = new Thread() {
            @Override
            public void run() {
                // Keep monitoring until asked to stop
                while (true) {
                    // Phase II termination: the flag is set, so clean up and exit
                    if (stop) {
                        System.out.println("Processing subsequent tasks");
                        break;
                    }
                    System.out.println("Monitor running...");
                    try {
                        // Thread sleep
                        Thread.sleep(1000);
                    } catch (InterruptedException e) {
                        // Phase I termination: woken early by interrupt(); the loop re-checks the flag
                        System.out.println("Interrupted");
                    }
                }
            }
        };
        monitor.start();
    }

    /**
     * Used to stop the monitor thread
     */
    public void stop() {
        // Set the flag first, so the interrupted thread sees stop == true when it wakes up
        stop = true;
        // Interrupt the thread so it does not wait out the rest of its sleep
        monitor.interrupt();
    }
}
```

2.2 Synchronization pattern: balking

The balking pattern applies when a thread finds that another thread (or the thread itself) has already done a certain thing. In that case the thread does not need to do it again and simply returns. It is somewhat similar to a singleton.
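Here is a minimal sketch of only the balking check. It makes the same decision without a lock by using AtomicBoolean.compareAndSet from java.util.concurrent.atomic. This is a swapped-in technique; the example that follows uses synchronized. The class BalkingStarter, its counter and the helper startFromThreads are hypothetical: several threads call start(), and only the first one gets past the check.

```java
import java.util.concurrent.atomic.AtomicBoolean;
import java.util.concurrent.atomic.AtomicInteger;

class BalkingStarter {

    private final AtomicBoolean started = new AtomicBoolean(false);
    // Counts how many callers actually got past the balking check
    private final AtomicInteger startCount = new AtomicInteger();

    void start() {
        // Atomically flip false -> true; every caller that loses the race balks
        if (!started.compareAndSet(false, true)) {
            return;
        }
        startCount.incrementAndGet(); // the real start-up work would go here
    }

    // Hypothetical helper: n threads call start() on one instance; returns how many did the work
    static int startFromThreads(int n) {
        BalkingStarter starter = new BalkingStarter();
        Thread[] threads = new Thread[n];
        for (int i = 0; i < n; i++) {
            threads[i] = new Thread(starter::start);
            threads[i].start();
        }
        for (Thread t : threads) {
            try {
                t.join();
            } catch (InterruptedException e) {
                Thread.currentThread().interrupt();
                throw new IllegalStateException(e);
            }
        }
        return starter.startCount.get();
    }

    public static void main(String[] args) {
        System.out.println("start work ran " + startFromThreads(8) + " time(s)"); // start work ran 1 time(s)
    }
}
```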

For example:

```java
public class BalkModel {

    public static void main(String[] args) throws InterruptedException {
        Monitor1 monitor = new Monitor1();
        monitor.start();
        monitor.start(); // the second call balks and returns immediately
        Thread.sleep(3500);
        monitor.stop();
    }
}

class Monitor1 {

    Thread monitor;

    // Flag indicating whether termination has been requested
    private volatile boolean stop = false;

    // Flag indicating whether the monitor has already been started
    private boolean starting = false;

    /**
     * Start the monitor thread
     */
    public void start() {
        // Lock to avoid thread-safety problems when several threads call start()
        synchronized (this) {
            if (starting) {
                // Already started: return directly (balk)
                return;
            }
            // Start the monitor and change the flag
            starting = true;
        }
        // Create the thread that does the monitoring
        monitor = new Thread() {
            @Override
            public void run() {
                // Keep monitoring until asked to stop
                while (true) {
                    if (stop) {
                        System.out.println("Processing subsequent tasks");
                        break;
                    }
                    System.out.println("Monitor running...");
                    try {
                        // Thread sleep
                        Thread.sleep(1000);
                    } catch (InterruptedException e) {
                        System.out.println("Interrupted");
                    }
                }
            }
        };
        monitor.start();
    }

    /**
     * Used to stop the monitor thread
     */
    public void stop() {
        // Set the flag first, so the interrupted thread sees stop == true when it wakes up
        stop = true;
        // Interrupt the thread so it does not wait out the rest of its sleep
        monitor.interrupt();
    }
}
```

3 Ordering

Run the following code:

```java
public class TestProblem {

    int num = 0;

    // Declaring ready volatile (volatile boolean ready = false;) forbids moving
    // the writes before it (such as num = 2) to after it
    boolean ready = false;

    // Thread 1 executes this method
    public void actor1(IResult r) {
        if (ready) {
            r.r1 = num + num;
        } else {
            r.r1 = 1;
        }
    }

    // Thread 2 executes this method
    public void actor2(IResult r) {
        num = 2;
        ready = true;
    }

    public static void main(String[] args) throws InterruptedException {
        for (int i = 0; i < 100; i++) {
            // Fresh state for every run; otherwise ready stays true after the first run
            TestProblem testProblem = new TestProblem();
            IResult r = new IResult();
            Thread t1 = new Thread(() -> testProblem.actor1(r));
            Thread t2 = new Thread(() -> testProblem.actor2(r));
            t1.start();
            t2.start();
            // Wait for both actors; otherwise r.r1 may be printed before it is written
            t1.join();
            t2.join();
            System.out.println("Run " + i + " result: " + r.r1);
        }
    }
}

class IResult {
    int r1;
}
```

Result:

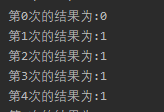

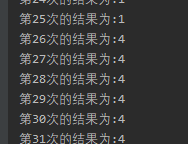

You may see three results. The results 1 and 4 are easy to explain, but where does 0 come from?

This phenomenon is called instruction reordering. It is an optimization performed at run time by the JIT compiler (and by the CPU). It occurs rarely and can only be reproduced through a large number of runs.

Reordering must still follow certain rules:

- Reordering never reorders operations that have a data dependency. For example, in a = 1; b = a; the second operation depends on the first, so neither the compiler nor the processor reorders them.
- Reordering exists to improve performance, but however instructions are reordered, the result of a single-threaded program must not change. Take the three operations a = 1; b = 2; c = a + b;. The first (a = 1) and the second (b = 2) may be reordered because there is no data dependency between them. However, c = a + b is not moved before them, because the result must be c = a + b = 3.

In other words, within a single thread, if swapping two instructions does not affect the result, their execution order may be exchanged.

Within a single thread, reordering always preserves the correctness of the final result, but in a multithreaded environment problems arise. Solution: declaring the variable volatile forbids this instruction reordering.
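Below is a sketch of that fix applied to the TestProblem example. Because ready is declared volatile, the write num = 2 cannot be moved after ready = true, and a reader that sees ready == true also sees num == 2. The class VolatileOrdering and the helper runOnce are hypothetical. Each run uses fresh state and joins both threads before reading the result.

```java
class VolatileOrdering {

    int num = 0;
    // volatile forbids moving num = 2 below ready = true
    volatile boolean ready = false;
    int r1;

    void actor1() {
        if (ready) {
            r1 = num + num; // whenever ready is true, num is already 2
        } else {
            r1 = 1;
        }
    }

    void actor2() {
        num = 2;
        ready = true;
    }

    // Hypothetical helper: runs both actors once on fresh state and returns r1
    static int runOnce() {
        VolatileOrdering v = new VolatileOrdering();
        Thread t1 = new Thread(v::actor1);
        Thread t2 = new Thread(v::actor2);
        t1.start();
        t2.start();
        try {
            t1.join();
            t2.join();
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
            throw new IllegalStateException(e);
        }
        return v.r1; // join makes r1 visible to this thread
    }

    public static void main(String[] args) {
        for (int i = 0; i < 100; i++) {
            System.out.println("Run " + i + " result: " + runOnce()); // 1 or 4, never 0
        }
    }
}
```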

Note: synchronized does not prevent reordering inside the block. However, if a variable is fully protected by a synchronized block, multiple threads never operate on it at the same time, so ordering does not need to be considered; in that case the reordering problem is effectively solved. Compare the code in the double-checked locking problem below. In the first fragment, every access to INSTANCE is inside the synchronized block. In the second fragment, some accesses are outside it, which is why that version has a problem.
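To illustrate the note, here is a sketch of the synchronized approach applied to the same two actors. Both methods lock the same object, so their bodies never interleave. Even if num = 2 and ready = true are reordered inside actor2, actor1 cannot observe the state between them. The class SyncOrdering and the helper runOnce are hypothetical.

```java
class SyncOrdering {

    int num = 0;
    boolean ready = false; // plain field, protected by the lock on this
    int r1;

    synchronized void actor1() {
        if (ready) {
            r1 = num + num;
        } else {
            r1 = 1;
        }
    }

    synchronized void actor2() {
        // These two writes may still be reordered, but no other thread holding
        // the lock can observe the state between them
        num = 2;
        ready = true;
    }

    // Hypothetical helper: runs both actors once on fresh state and returns r1
    static int runOnce() {
        SyncOrdering s = new SyncOrdering();
        Thread t1 = new Thread(s::actor1);
        Thread t2 = new Thread(s::actor2);
        t1.start();
        t2.start();
        try {
            t1.join();
            t2.join();
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
            throw new IllegalStateException(e);
        }
        return s.r1;
    }

    public static void main(String[] args) {
        for (int i = 0; i < 100; i++) {
            System.out.println("Run " + i + " result: " + runOnce()); // 1 or 4
        }
    }
}
```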

3.1 How volatile works

The underlying implementation of volatile is the memory barrier (memory fence):

- A write barrier is added after each write to a volatile variable
- A read barrier is added before each read of a volatile variable

What are read and write barriers, and what do they do?

The write barrier (sfence) ensures that changes to shared variables made before the barrier are synchronized to main memory.

The read barrier (lfence) ensures that reads of shared variables after the barrier load the latest data from main memory.

The write barrier ensures that, when instructions are reordered, code before the write barrier is not moved after it.

The read barrier ensures that, when instructions are reordered, code after the read barrier is not moved before it.

3.2 How volatile ensures visibility and ordering

1. The write barrier (sfence) ensures that changes to shared variables made before the barrier are synchronized to main memory:

```java
volatile boolean ready;

public void actor2(IResult r) {
    num = 2;
    ready = true; // ready is volatile, so this assignment is followed by a write barrier
    // write barrier
}
```

2. The read barrier (lfence) ensures that reads of shared variables after the barrier load the latest data from main memory:

```java
public void actor1(IResult r) {
    // read barrier
    // ready is volatile, so reading it is preceded by a read barrier
    if (ready) {
        r.r1 = num + num;
    } else {
        r.r1 = 1;
    }
}
```

Note:

- The write barrier only guarantees that later reads see the latest result. It cannot prevent another thread's read from running ahead of the write.
- The ordering guarantee only ensures that the relevant code within the current thread is not reordered.
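A counter shows the first note in action. Declaring the field volatile makes every update visible, but count++ is still a read followed by a write, and another thread's read can slip in between the two, so updates can be lost. The class VolatileCounter and its helper are hypothetical. The final count is at most 2 * n and, depending on timing, may be smaller.

```java
class VolatileCounter {

    static volatile int count = 0;

    // Hypothetical helper: two threads each increment count n times; returns the final value
    static int incrementConcurrently(int n) {
        count = 0;
        Runnable task = () -> {
            for (int i = 0; i < n; i++) {
                count++; // read, add, write: not atomic even though count is volatile
            }
        };
        Thread t1 = new Thread(task);
        Thread t2 = new Thread(task);
        t1.start();
        t2.start();
        try {
            t1.join();
            t2.join();
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
            throw new IllegalStateException(e);
        }
        return count;
    }

    public static void main(String[] args) {
        int n = 100_000;
        System.out.println("expected " + (2 * n) + ", got " + incrementConcurrently(n));
    }
}
```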

The double-checked locking singleton is the most common use of volatile, so let's take it as an example.

1. Initial singleton:

```java
public final class Singleton {

    private Singleton() { }

    private static Singleton INSTANCE = null;

    public static Singleton getInstance() {
        // Every call, even after the instance exists, has to enter synchronized
        synchronized (Singleton.class) {
            if (INSTANCE == null) { // t1
                INSTANCE = new Singleton();
            }
        }
        return INSTANCE;
    }
}
```

Locking on Singleton.class effectively solves the problem of creating the singleton from multiple threads. However, once the singleton exists, every later access still has to acquire the lock, which hurts efficiency.

2. Double-checked locking singleton:

```java
public final class Singleton {

    private Singleton() { }

    private static Singleton INSTANCE = null;

    public static Singleton getInstance() {
        if (INSTANCE == null) { // t2
            // The first access is synchronized; later accesses are not
            synchronized (Singleton.class) {
                if (INSTANCE == null) { // t1
                    INSTANCE = new Singleton();
                }
            }
        }
        return INSTANCE;
    }
}
```

This removes the locking overhead once the singleton has been created.

However, this introduces a new problem. Recall what we covered earlier about synchronized and about how objects are created:

Problem 1, the effect of synchronized: the first if (INSTANCE == null) is outside the synchronized block. It is therefore not subject to the monitor's guarantees and reads INSTANCE directly.

Problem 2, the effect of object creation: INSTANCE = new Singleton() takes several steps (allocating memory, running the constructor, assigning the reference to INSTANCE), and these steps may be reordered. If the reference is assigned before the constructor has finished, INSTANCE is no longer null even though the object does not yet hold its initialized data. It is an incomplete object, and a thread that reads and uses it at that moment runs into problems.

These two problems show that INSTANCE needs both visibility and ordering guarantees:

```java
public final class Singleton {

    private Singleton() { }

    private static volatile Singleton INSTANCE = null;

    public static Singleton getInstance() {
        // Only enter the synchronized block if the instance has not been created yet
        if (INSTANCE == null) {
            synchronized (Singleton.class) { // t2
                // Another thread may have created the instance in the meantime, so check again
                if (INSTANCE == null) { // t1
                    INSTANCE = new Singleton();
                }
            }
        }
        return INSTANCE;
    }
}
```

How does this guarantee that a second thread never reads an uninitialized instance?

volatile adds barriers around reads and writes of the variable. The write barrier after the assignment to INSTANCE keeps the constructor's writes from being moved after that assignment. The read barrier on the reading side ensures that thread 2 loads the latest data. So when thread 2 reads INSTANCE, it sees either null (and enters the synchronized block) or a reference to an object that has completed the whole creation process.
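The following sketch exercises the volatile double-checked locking version. It calls getInstance() from many threads at once and checks that every thread received the same object. The class names DclCheck and DclSingleton and the helper distinctInstances are hypothetical; the singleton body is the same as above. A run like this can show that only one instance is created. It cannot prove that no reordering bug exists, which is exactly why volatile is needed.

```java
import java.util.Set;
import java.util.concurrent.ConcurrentHashMap;
import java.util.concurrent.CountDownLatch;

class DclCheck {

    // Hypothetical helper: returns how many distinct instances the threads observed
    static int distinctInstances(int threads) {
        Set<DclSingleton> seen = ConcurrentHashMap.newKeySet();
        CountDownLatch go = new CountDownLatch(1);
        Thread[] ts = new Thread[threads];
        for (int i = 0; i < threads; i++) {
            ts[i] = new Thread(() -> {
                try {
                    go.await(); // line everyone up so the first calls race
                } catch (InterruptedException e) {
                    Thread.currentThread().interrupt();
                    return;
                }
                seen.add(DclSingleton.getInstance());
            });
            ts[i].start();
        }
        go.countDown();
        for (Thread t : ts) {
            try {
                t.join();
            } catch (InterruptedException e) {
                Thread.currentThread().interrupt();
                throw new IllegalStateException(e);
            }
        }
        return seen.size();
    }

    public static void main(String[] args) {
        System.out.println("distinct instances: " + distinctInstances(32)); // distinct instances: 1
    }
}

final class DclSingleton {

    private DclSingleton() { }

    private static volatile DclSingleton INSTANCE = null;

    public static DclSingleton getInstance() {
        if (INSTANCE == null) {
            synchronized (DclSingleton.class) {
                if (INSTANCE == null) {
                    INSTANCE = new DclSingleton();
                }
            }
        }
        return INSTANCE;
    }
}
```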