1. Context switching

Even a single-core processor can run multithreaded code. The CPU does this by giving each thread a time slice, which is the amount of CPU time allocated to that thread. Because time slices are very short, the CPU keeps switching between threads, which makes it appear that multiple threads run at the same time. A time slice is typically tens of milliseconds (ms).

The CPU cycles through tasks using a time-slice allocation algorithm. After the current task has run for one time slice, the CPU switches to the next task. Before switching, however, it saves the state of the current task, so that this state can be reloaded the next time the CPU switches back to it. The process of saving a task's state and later reloading it is called a context switch.

1.1 Is multithreading always faster?

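```java
// Compares running two loops concurrently (two threads) with running them serially (one thread)
public class ConcurrencyTest {

    // Number of iterations per loop; change it to compare different workload sizes
    private static final long count = 10000L;

    public static void main(String[] args) throws InterruptedException {
        concurrency();
        serial();
    }

    // Runs the "a += 5" loop in a new thread while the main thread runs the "b--" loop
    private static void concurrency() throws InterruptedException {
        long start = System.currentTimeMillis();
        Thread thread = new Thread(new Runnable() {
            @Override
            public void run() {
                int a = 0;
                for (long i = 0; i < count; i++) {
                    a += 5;
                }
            }
        });
        thread.start();
        int b = 0;
        for (long i = 0; i < count; i++) {
            b--;
        }
        // Wait for the worker thread before stopping the clock, so both loops are timed
        thread.join();
        long time = System.currentTimeMillis() - start;
        System.out.println("concurrency : " + time + "ms, b=" + b);
    }

    // Runs the same two loops one after the other in the main thread
    private static void serial() {
        long start = System.currentTimeMillis();
        int a = 0;
        for (long i = 0; i < count; i++) {
            a += 5;
        }
        int b = 0;
        for (long i = 0; i < count; i++) {
            b--;
        }
        long time = System.currentTimeMillis() - start;
        System.out.println("serial : " + time + "ms, b=" + b + ", a=" + a);
    }
}
```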

In this test, when each loop runs fewer than about one million iterations, the concurrent version is slower than the serial one. Why is concurrent execution slower here? Because creating a thread and switching contexts both cost time, and for a small workload that overhead outweighs the benefit of running the two loops in parallel.
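To get a rough feel for the thread-creation overhead mentioned above, the sketch below times starting and joining threads that do nothing, next to the same decrement loop used in ConcurrencyTest. The class and method names (ThreadCostDemo, averageThreadCostNanos, loopNanos) are hypothetical, and the printed numbers depend entirely on the machine and JVM, so treat them as an illustration rather than a benchmark.

```java
// Hypothetical demo class, not part of the original test
class ThreadCostDemo {

    // Written by loopNanos so the JIT is less likely to remove the loop as dead code
    static volatile int sink;

    // Creates, starts and joins `n` threads whose task is empty, so the measured time is
    // almost entirely thread creation, scheduling and teardown. Returns the average in ns.
    static long averageThreadCostNanos(int n) {
        long start = System.nanoTime();
        int completed = 0;
        for (int i = 0; i < n; i++) {
            Thread t = new Thread(() -> { });
            t.start();
            try {
                t.join();
            } catch (InterruptedException e) {
                Thread.currentThread().interrupt();  // stop early but keep the interrupt status
                break;
            }
            completed++;
        }
        long elapsed = System.nanoTime() - start;
        return completed == 0 ? 0 : elapsed / completed;
    }

    // Times `count` iterations of the same "b--" loop used in ConcurrencyTest
    static long loopNanos(long count) {
        long start = System.nanoTime();
        int b = 0;
        for (long i = 0; i < count; i++) {
            b--;
        }
        sink = b;
        return System.nanoTime() - start;
    }

    public static void main(String[] args) {
        System.out.println("average cost of one empty thread: " + averageThreadCostNanos(1000) + " ns");
        System.out.println("10000 iterations of b--:          " + loopNanos(10000L) + " ns");
    }
}
```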

1.2 Reducing context switching

(1) Use the jstack command to dump thread information and see what the threads in the process with PID 10622 are doing:

```shell
jstack 10622 > /home/rmc/log/dump10
```

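(2) Count how many threads are in each state, for example with `grep java.lang.Thread.State /home/rmc/log/dump10 | awk '{print $2$3$4$5}' | sort | uniq -c`. The result shows two threads in the WAITING (on object monitor) state: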

```
6 RUNNABLE
1 TIMED_WAITING(onobjectmonitor)
2 TIMED_WAITING(sleeping)
2 WAITING(onobjectmonitor)
64 WAITING(parking)
```
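A similar tally can be taken from inside a running JVM. The sketch below, with the hypothetical class name ThreadStateCounter, groups the live threads returned by Thread.getAllStackTraces() by Thread.getState(). Note that Thread.State only has the coarse values (RUNNABLE, WAITING, TIMED_WAITING and so on); details such as "on object monitor" or "parking" are not part of Thread.State and come from jstack's text output.

```java
import java.util.Map;
import java.util.TreeMap;

// Hypothetical helper: counts the threads of the current JVM by state
class ThreadStateCounter {

    // Groups all live threads in this JVM by Thread.State and counts each group
    static Map<Thread.State, Integer> countStates() {
        Map<Thread.State, Integer> counts = new TreeMap<>();  // enum keys sort in declaration order
        for (Thread t : Thread.getAllStackTraces().keySet()) {
            counts.merge(t.getState(), 1, Integer::sum);
        }
        return counts;
    }

    public static void main(String[] args) {
        // Prints lines such as "1 RUNNABLE", mirroring the "count state" format above
        countStates().forEach((state, n) -> System.out.println(n + " " + state));
    }
}
```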

Measuring the number and duration of context switches

(1) lmbench3 can measure how long a context switch takes.

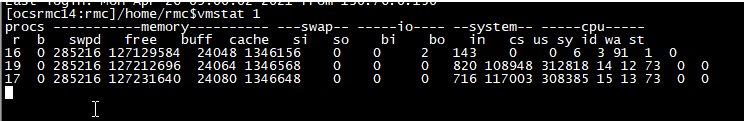

(2) Running `vmstat 1` reports the number of context switches, sampled once per second.

The cs (context switch) column shows the number of context switches per second. The test results above show more than 1,000 context switches per second.
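On Linux, the cs value that vmstat reports comes from the cumulative ctxt counter in /proc/stat (total context switches since boot), so sampling that counter twice, one second apart, gives a comparable per-second figure. The sketch below assumes a Linux system; the class and method names are hypothetical, and parseCtxt is kept separate so it can be tried on sample text without /proc.

```java
import java.io.IOException;
import java.nio.file.Files;
import java.nio.file.Path;
import java.nio.file.Paths;
import java.util.List;

// Hypothetical, Linux-only sketch of what vmstat's cs column measures
class ContextSwitchRate {

    // Returns the value of the "ctxt" line (context switches since boot), or -1 if absent
    static long parseCtxt(List<String> procStatLines) {
        for (String line : procStatLines) {
            if (line.startsWith("ctxt ")) {
                return Long.parseLong(line.substring(5).trim());
            }
        }
        return -1;
    }

    public static void main(String[] args) throws IOException, InterruptedException {
        Path stat = Paths.get("/proc/stat");  // assumption: running on Linux
        long before = parseCtxt(Files.readAllLines(stat));
        Thread.sleep(1000);
        long after = parseCtxt(Files.readAllLines(stat));
        System.out.println("context switches in the last second: " + (after - before));
    }
}
```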

1.3 How to reduce context switching

Common approaches are lock-free concurrent programming, the CAS algorithm, using as few threads as possible, and coroutines.

(1) Lock-free concurrent programming. When multiple threads compete for a lock, the threads that fail to get it block, which causes context switches. Multithreaded data processing can often avoid locks, for example by splitting the data into segments by the hash of each record's ID and having each thread process a different segment.
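A minimal sketch of the hash-segmenting idea, assuming a hypothetical workload of summing values keyed by long IDs: each worker handles only the IDs whose hash falls into its segment and accumulates into a private local variable, so no lock is taken while processing. The class name HashPartitionSum and its signature are illustrative. For simplicity every worker scans the whole array and skips IDs outside its segment; a real implementation would usually split the data up front.

```java
import java.util.ArrayList;
import java.util.List;

// Hypothetical example: sum values[i] over all records, split across threads by hash of ids[i]
class HashPartitionSum {

    static long sum(long[] ids, long[] values, int nThreads) {
        long[] partial = new long[nThreads];  // one result slot per worker; no slot is shared
        List<Thread> workers = new ArrayList<>();
        for (int w = 0; w < nThreads; w++) {
            final int segment = w;
            Thread t = new Thread(() -> {
                long local = 0;
                for (int i = 0; i < ids.length; i++) {
                    // floorMod keeps the segment index non-negative even for negative hash codes
                    if (Math.floorMod(Long.hashCode(ids[i]), nThreads) == segment) {
                        local += values[i];
                    }
                }
                partial[segment] = local;  // each worker writes only its own slot
            });
            workers.add(t);
            t.start();
        }
        long total = 0;
        for (int w = 0; w < nThreads; w++) {
            try {
                workers.get(w).join();  // join() also makes the worker's write to partial[w] visible
            } catch (InterruptedException e) {
                Thread.currentThread().interrupt();
                throw new IllegalStateException(e);
            }
            total += partial[w];
        }
        return total;
    }

    public static void main(String[] args) {
        long[] ids = new long[1000];
        long[] values = new long[1000];
        for (int i = 0; i < ids.length; i++) {
            ids[i] = i;
            values[i] = i;
        }
        System.out.println(sum(ids, values, 4));  // 499500, same as a serial sum
    }
}
```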

(2) The CAS algorithm. The classes in Java's java.util.concurrent.atomic package use CAS (compare-and-swap) to update data without locking.
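The CAS retry loop can be sketched with java.util.concurrent.atomic.AtomicInteger: read the current value, compute the new one, and publish it with compareAndSet only if no other thread has changed it in the meantime; otherwise retry. AtomicInteger already provides an atomic incrementAndGet, so the explicit loop in the hypothetical CasCounter class is only for illustration.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.concurrent.atomic.AtomicInteger;

// Hypothetical counter that updates a shared value with CAS instead of a lock
class CasCounter {
    private final AtomicInteger value = new AtomicInteger();

    // CAS retry loop: the update succeeds only if the value is still `current`
    int increment() {
        while (true) {
            int current = value.get();
            int next = current + 1;
            if (value.compareAndSet(current, next)) {
                return next;
            }
            // Another thread won the race; loop and try again with the fresh value
        }
    }

    int get() {
        return value.get();
    }

    // Starts `threads` threads that each call increment() `perThread` times, then returns the total
    static int run(int threads, int perThread) {
        CasCounter counter = new CasCounter();
        List<Thread> workers = new ArrayList<>();
        for (int t = 0; t < threads; t++) {
            Thread w = new Thread(() -> {
                for (int i = 0; i < perThread; i++) {
                    counter.increment();
                }
            });
            workers.add(w);
            w.start();
        }
        for (Thread w : workers) {
            try {
                w.join();
            } catch (InterruptedException e) {
                Thread.currentThread().interrupt();
                throw new IllegalStateException(e);
            }
        }
        return counter.get();
    }

    public static void main(String[] args) {
        System.out.println(run(4, 100_000));  // 400000: no increments are lost
    }
}
```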

(3) Use as few threads as possible. Avoid creating threads you do not need: if there are only a few tasks but many threads are created to process them, most of those threads will sit in a waiting state.
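One way to keep the thread count small is to submit work to a fixed-size pool instead of creating a thread per task. The sketch below sizes the pool to Runtime.getRuntime().availableProcessors(), a common starting point for CPU-bound work rather than a rule from the original text; the class BoundedPool and its sum-of-squares task are hypothetical.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.concurrent.ExecutionException;
import java.util.concurrent.ExecutorService;
import java.util.concurrent.Executors;
import java.util.concurrent.Future;

// Hypothetical example: many small tasks, few threads
class BoundedPool {

    // Submits `tasks` tiny tasks (square a number) to a pool with one thread per CPU,
    // instead of creating one thread per task, and sums the results.
    static long sumOfSquares(int tasks) {
        int nThreads = Runtime.getRuntime().availableProcessors();
        ExecutorService pool = Executors.newFixedThreadPool(nThreads);
        try {
            List<Future<Long>> results = new ArrayList<>();
            for (int i = 1; i <= tasks; i++) {
                final long n = i;
                results.add(pool.submit(() -> n * n));  // Callable<Long>, runs on a pooled thread
            }
            long total = 0;
            for (Future<Long> f : results) {
                total += f.get();  // waits for each task to finish
            }
            return total;
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
            throw new IllegalStateException(e);
        } catch (ExecutionException e) {
            throw new IllegalStateException(e.getCause());
        } finally {
            pool.shutdown();  // let the pool threads exit once the work is done
        }
    }

    public static void main(String[] args) {
        System.out.println(sumOfSquares(100));  // 338350
    }
}
```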

(4) Coroutines. Schedule multiple tasks within a single thread and switch between them inside that thread.
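The coroutine idea can be approximated with a single-threaded cooperative scheduler. In the sketch below (all names hypothetical), each task keeps its progress in its own fields, does one unit of work per step() call and then returns, which plays the role of a yield; the scheduler cycles through the tasks on the calling thread, so switching between tasks is just a method call rather than an OS-level thread context switch. This is a hand-rolled illustration, not a real coroutine library.

```java
import java.util.ArrayDeque;
import java.util.ArrayList;
import java.util.Deque;
import java.util.List;

// Hypothetical single-threaded cooperative scheduler
class CooperativeScheduler {

    // A task stores its own progress, so resuming it only means calling step() again
    static final class CountingTask {
        final String name;
        final int steps;  // assumed to be at least 1
        int done = 0;

        CountingTask(String name, int steps) {
            this.name = name;
            this.steps = steps;
        }

        // Does one unit of work, then yields; returns false once the task has finished
        boolean step(List<String> log) {
            log.add(name + ":" + done);
            done++;
            return done < steps;
        }
    }

    // Round-robin over the tasks on the calling thread until all of them finish
    static List<String> runAll(List<CountingTask> tasks) {
        List<String> log = new ArrayList<>();
        Deque<CountingTask> ready = new ArrayDeque<>(tasks);
        while (!ready.isEmpty()) {
            CountingTask t = ready.pollFirst();
            if (t.step(log)) {
                ready.addLast(t);  // not finished: requeue it behind the other tasks
            }
        }
        return log;
    }

    public static void main(String[] args) {
        List<CountingTask> tasks = new ArrayList<>();
        tasks.add(new CountingTask("A", 3));
        tasks.add(new CountingTask("B", 2));
        System.out.println(runAll(tasks));  // [A:0, B:0, A:1, B:1, A:2]
    }
}
```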

Several common ways to avoid deadlock:

(1) Avoid having one thread acquire multiple locks at the same time.

(2) Avoid having one thread hold multiple resources inside a lock; try to make each lock guard only one resource.

(3) Use timed locks, that is `lock.tryLock(timeout)`, instead of the intrinsic lock mechanism (`synchronized`).

(4) For database locks, locking and unlocking must happen on the same database connection; otherwise the unlock will fail.
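Point (3) can be sketched with Lock.tryLock(long, TimeUnit): a thread that cannot get both locks within the timeout releases what it holds and reports failure instead of blocking forever. The helper withBothLocks and the class name TimedLockTransfer are hypothetical. In main, the two threads request the locks in opposite orders, a classic deadlock setup with plain lock() or synchronized; here each thread backs off and retries.

```java
import java.util.concurrent.TimeUnit;
import java.util.concurrent.locks.Lock;
import java.util.concurrent.locks.ReentrantLock;

// Hypothetical helper showing timed lock acquisition
class TimedLockTransfer {

    // Tries to take `first` and then `second`, waiting at most `timeoutMs` for each.
    // If either lock cannot be obtained in time, any lock already held is released and
    // false is returned instead of blocking forever. Returns true if `action` ran.
    static boolean withBothLocks(Lock first, Lock second, long timeoutMs, Runnable action) {
        try {
            if (!first.tryLock(timeoutMs, TimeUnit.MILLISECONDS)) {
                return false;
            }
            try {
                if (!second.tryLock(timeoutMs, TimeUnit.MILLISECONDS)) {
                    return false;  // back off; `first` is released by the finally below
                }
                try {
                    action.run();
                    return true;
                } finally {
                    second.unlock();
                }
            } finally {
                first.unlock();
            }
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();  // keep the interrupt status for the caller
            return false;
        }
    }

    public static void main(String[] args) throws InterruptedException {
        Lock a = new ReentrantLock();
        Lock b = new ReentrantLock();
        // Opposite acquisition orders: with timed tryLock each thread retries instead of deadlocking
        Thread t1 = new Thread(() -> {
            while (!withBothLocks(a, b, 10, () -> System.out.println("t1 got a and b"))) {
                Thread.yield();
            }
        });
        Thread t2 = new Thread(() -> {
            while (!withBothLocks(b, a, 10, () -> System.out.println("t2 got b and a"))) {
                Thread.yield();
            }
        });
        t1.start();
        t2.start();
        t1.join();
        t2.join();
    }
}
```

Because both threads may time out and retry in step, timed locks alone do not rule out repeated retries (livelock); adding a small random delay before retrying is a common refinement.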

Resource constraints:

(1) Resource limits mean that, in concurrent programming, a program's execution speed is bounded by the computer's hardware resources (such as bandwidth, disk I/O or CPU) or software resources (such as the number of database or socket connections).

(2) Problems caused by resource limits: concurrent programming speeds code up by turning parts that run serially into parts that run concurrently. However, if a section of serial code is made concurrent but, because of resource limits, still effectively runs serially, the program will not get faster. It will get slower, because context switching and resource scheduling add extra time.

(3) Dealing with resource limits: for hardware limits, consider running the program in parallel on a cluster.
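Related to point (2), a program can at least avoid running more concurrent work against a resource than the resource can serve. A minimal sketch, assuming a hypothetical resource that supports only `permits` simultaneous users (for example a small connection limit), uses java.util.concurrent.Semaphore to cap concurrent use regardless of the number of threads. It is a swapped-in illustration of matching concurrency to a resource limit, not the cluster approach described in (3).

```java
import java.util.concurrent.ExecutorService;
import java.util.concurrent.Executors;
import java.util.concurrent.Semaphore;
import java.util.concurrent.TimeUnit;
import java.util.concurrent.atomic.AtomicInteger;

// Hypothetical scenario: a resource that only `permits` callers may use at the same time
class ResourceLimitedWork {

    // Runs `tasks` tasks on `threads` threads, but lets at most `permits` of them use the
    // resource at once. Returns the highest number of simultaneous users observed.
    static int run(int tasks, int threads, int permits) {
        Semaphore resource = new Semaphore(permits);
        AtomicInteger inUse = new AtomicInteger();
        AtomicInteger peak = new AtomicInteger();
        ExecutorService pool = Executors.newFixedThreadPool(threads);
        for (int i = 0; i < tasks; i++) {
            pool.execute(() -> {
                try {
                    resource.acquire();  // blocks while all permits are taken
                } catch (InterruptedException e) {
                    Thread.currentThread().interrupt();
                    return;
                }
                try {
                    peak.accumulateAndGet(inUse.incrementAndGet(), Math::max);
                    Thread.sleep(5);  // stand-in for actually using the limited resource
                } catch (InterruptedException e) {
                    Thread.currentThread().interrupt();
                } finally {
                    inUse.decrementAndGet();
                    resource.release();
                }
            });
        }
        pool.shutdown();
        try {
            pool.awaitTermination(1, TimeUnit.MINUTES);
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
        }
        return peak.get();
    }

    public static void main(String[] args) {
        // 10 threads compete, but never more than 3 use the resource at once
        System.out.println("peak concurrent users: " + run(40, 10, 3));
    }
}
```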

For Java engineers, it is strongly recommended to solve concurrency problems with the concurrent containers and utility classes provided by the JDK's concurrency package (java.util.concurrent), because these classes have been thoroughly tested and optimized.
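As a small example of relying on the JDK's concurrency classes instead of hand-written locking, the sketch below counts words from several lines in parallel with ConcurrentHashMap.merge, whose whole invocation is performed atomically, together with an ExecutorService. The class WordCount and its inputs are hypothetical.

```java
import java.util.Arrays;
import java.util.List;
import java.util.Map;
import java.util.concurrent.ConcurrentHashMap;
import java.util.concurrent.ExecutorService;
import java.util.concurrent.Executors;
import java.util.concurrent.TimeUnit;

// Hypothetical example: counting words from many lines on several threads
class WordCount {

    static Map<String, Integer> count(List<String> lines, int threads) {
        ConcurrentHashMap<String, Integer> counts = new ConcurrentHashMap<>();
        ExecutorService pool = Executors.newFixedThreadPool(threads);
        for (String line : lines) {
            pool.execute(() -> {
                for (String word : line.trim().split("\\s+")) {
                    if (!word.isEmpty()) {
                        // merge() updates the entry for this key atomically; no explicit lock needed
                        counts.merge(word, 1, Integer::sum);
                    }
                }
            });
        }
        pool.shutdown();  // accept no new tasks; already submitted ones still run
        try {
            pool.awaitTermination(1, TimeUnit.MINUTES);
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
        }
        return counts;
    }

    public static void main(String[] args) {
        List<String> lines = Arrays.asList("to be or not to be", "to be is to do");
        System.out.println(count(lines, 2));
    }
}
```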