Author | Ma Chao

Produced by | CSDN (ID: CSDNnews)

Traffic in the Internet era rises and falls unpredictably, and even technology giants have been knocked over by traffic spikes: headlines such as "XXX is down" keep topping the trending searches. Thanks to automatic elasticity and rapid scale-out, Serverless can absorb such spikes calmly, which has put this new technology in the spotlight.

Amid the buzz around Serverless, Rust seems to hold center stage. In fact, high concurrency offers many patterns and routines worth summarizing, and specialized programming frameworks such as Tokio and RxJava are a great help to programmers writing high-performance programs. To discuss high concurrency in depth, this article focuses on several mainstream back-end languages: Java, C, Go and Rust. Each of these languages has its own niche in high-concurrency scenarios. Below, the author shares some experience with them.

Before the formal discussion, the author should explain that the topic of this article is high concurrency rather than high parallelism. Concurrency and parallelism are two different things. Parallelism means that each core is responsible for one task, and it rests on a multi-core execution architecture. Concurrency means that multiple tasks execute alternately. High concurrency aims to push the system to its limit: it uses the idle windows spent waiting for IO to return to keep the CPU fully loaded, so that a single core approaches the effect of multiple cores.
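To make the distinction concrete, here is a minimal sketch in Go. It assumes that IO waits can be simulated with time.Sleep, and the helper name runConcurrently is hypothetical. Only one thread is allowed to execute Go code at a time, yet many waiting tasks overlap, so the total time stays close to a single wait rather than the sum of all waits.

```go
package main

import (
	"fmt"
	"runtime"
	"sync"
	"time"
)

// runConcurrently starts n tasks that each "wait for IO" (simulated with
// time.Sleep) and returns how many finished and how long everything took.
func runConcurrently(n int, waitMs int) (int, time.Duration) {
	var wg sync.WaitGroup
	var mu sync.Mutex
	done := 0
	start := time.Now()
	for i := 0; i < n; i++ {
		wg.Add(1)
		go func() {
			defer wg.Done()
			// While this task waits, the scheduler runs other tasks.
			time.Sleep(time.Duration(waitMs) * time.Millisecond)
			mu.Lock()
			done++
			mu.Unlock()
		}()
	}
	wg.Wait()
	return done, time.Since(start)
}

func main() {
	// Let only one thread execute Go code at a time: concurrency, not parallelism.
	runtime.GOMAXPROCS(1)
	done, elapsed := runConcurrently(100, 50)
	fmt.Printf("%d tasks finished in %v\n", done, elapsed)
}
```

On a typical machine the elapsed time should be far below 100 x 50 ms, although the exact figure depends on the scheduler and the system load.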

Go language - the swordsman of the one-sword school (Itto-ryu)

Unlike Java, Rust and other languages, Go has a style of its own. It does not need any high-concurrency framework, because the language itself is a very powerful high-concurrency framework. The first impression Go gives is one of extremes. It has very strict requirements for code simplicity: the compiler rejects packages that are imported but not used, and it rejects local variables that are declared but not used.

There are many excellent Go projects, such as Docker, K8s, TiDB and BFE. Even without looking at these successful open source projects, following the official examples alone is enough to make a few simple Go statements deliver impressive performance. For a given small number of lines of code, Go probably delivers the best performance of all the frameworks discussed here.

Go makes it easy for programmers to build an application with strong performance. It is precisely this ease of use that leads many developers to mistake the efficiency of their programs for proof of their own coding strength. In fact, a deeper understanding of Go shows that many details are hidden behind the goroutine, its high-concurrency workhorse. Here are two examples.

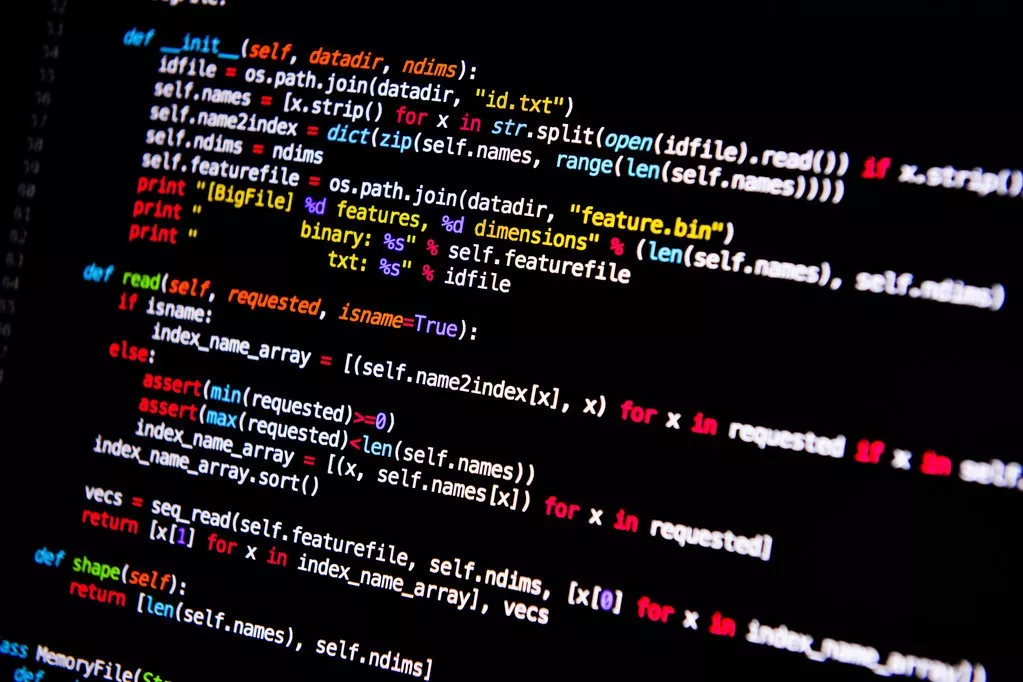

1, The memory barrier causes the variable value not to be refreshed: in the following code, we start a goroutine that runs y++ in an infinite loop, continuously adding 1 to the variable y.

    package main

    import (
        "fmt"
        "time"
    )

    func main() {
        var y int32
        // Background goroutine increments y forever without any synchronization.
        go func() {
            for {
                y++
            }
        }()
        time.Sleep(time.Second)
        fmt.Println("y=", y)
    }

But no matter how long the main goroutine waits, in the author's tests the output is always y= 0.

This looks like a barrier problem between cache and memory: the increments to y never become visible to the main goroutine, so when it prints y it only sees the initial value, 0. More precisely, the program contains a data race. Without synchronization, the Go memory model does not guarantee that one goroutine's writes are ever observed by another, and the compiler is free to keep y in a register or to drop the increment altogether.

The workaround is almost absurdly simple: add to the body of the goroutine an if check whose branch can never be executed.

    package main

    import (
        "fmt"
        "time"
    )

    func main() {
        var y int32
        z := 0
        go func() {
            for {
                if z > 0 {
                    fmt.Println("z is", z) // this line is never executed
                }
                y++
            }
        }()
        time.Sleep(time.Second)
        fmt.Println("y=", y)
    }

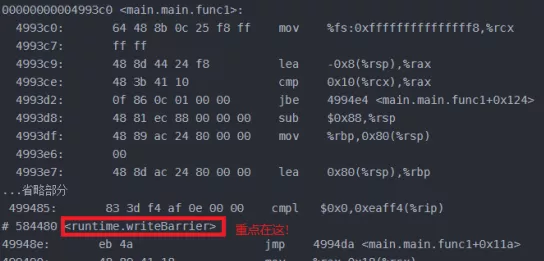

Viewing the assembly code with a disassembler shows that the if branch brings in a reference to writebarrier, that is, a write barrier operation. (In the Go runtime this symbol belongs to the garbage collector's write barrier rather than to a CPU memory fence, so the visible effect is best read as a side effect of how the loop is now compiled, not as a guarantee.)

Although the if branch is never executed, its mere presence changes the code generated for the goroutine, and the updated value of y now reaches main memory where the main goroutine can read it. Behavior that depends on such incidental details is likely to hide bugs that are very difficult to track down.
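Rather than relying on an unreachable if branch, the usual way to make the counter visible is explicit synchronization. The following is a minimal sketch using the standard sync/atomic package; the helper name countFor and the stop flag are assumptions added for illustration and are not part of the original example.

```go
package main

import (
	"fmt"
	"sync/atomic"
	"time"
)

// countFor increments a shared counter from a background goroutine for
// roughly ms milliseconds and returns the value seen by the caller.
func countFor(ms int) int32 {
	var y int32
	var stop int32
	go func() {
		// Atomic operations make every increment visible to other goroutines.
		for atomic.LoadInt32(&stop) == 0 {
			atomic.AddInt32(&y, 1)
		}
	}()
	time.Sleep(time.Duration(ms) * time.Millisecond)
	got := atomic.LoadInt32(&y)
	atomic.StoreInt32(&stop, 1) // ask the background goroutine to exit
	return got
}

func main() {
	fmt.Println("y=", countFor(100))
}
```

With atomic loads and stores the printed value is greater than zero, and the race detector (go run -race) no longer reports the access to y.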

2, Capturing the loop variable by reference in a closure yields the wrong slice elements: in daily work, if the elements of a slice or array are independent of each other, we are very likely to create closures with goroutines and hand each element to its own goroutine for processing. However, handwritten code that ignores best practice may contain hidden dangers, such as:

    package main

    import (
        "fmt"
        "time"
    )

    func main() {
        testSlice := []int{1, 2, 3, 4, 5}
        for _, v := range testSlice {
            // The closure captures the variable v itself, not its current value.
            go func() {
                fmt.Println(v)
            }()
        }
        time.Sleep(time.Millisecond)
    }

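In Go versions before 1.22, where the loop variable is shared by all iterations, the above code generally prints only one element repeatedly, such as five consecutive 3's or five 5's. (Since Go 1.22 each iteration has its own copy of v, so the problem no longer appears.)

To solve this problem in older versions, pass the value explicitly as an argument, as follows: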

    go func(v int) {
        fmt.Println(v)
    }(v)
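Putting the fix into a complete program, the following sketch also replaces time.Sleep with sync.WaitGroup, so that the main goroutine waits exactly until every worker has finished. The helper name collect is hypothetical, and the results are sorted only to make the output deterministic.

```go
package main

import (
	"fmt"
	"sort"
	"sync"
)

// collect hands every element to its own goroutine by value and gathers
// the values the goroutines actually saw.
func collect(values []int) []int {
	var wg sync.WaitGroup
	var mu sync.Mutex
	out := make([]int, 0, len(values))
	for _, v := range values {
		wg.Add(1)
		go func(v int) { // v is copied into the goroutine's own parameter
			defer wg.Done()
			mu.Lock()
			out = append(out, v)
			mu.Unlock()
		}(v)
	}
	wg.Wait()
	sort.Ints(out)
	return out
}

func main() {
	fmt.Println(collect([]int{1, 2, 3, 4, 5})) // prints [1 2 3 4 5]
}
```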

The finer difficulties of Go are not our focus today. The point the author wants to make is that Go is easy to pick up but hard to use with precision and at its limit. I personally think Go is very much like the one-sword school (Itto-ryu) of Eastern swordsmanship: it is simple to get started and quick to take shape, but the road to becoming a top expert is just as long as anywhere else.

What Poll, Epoll and Future mean in high concurrency

Having covered Go, the odd one out, we return to several important concepts in high concurrency. Future is not the mainstream implementation in every language we look at today, but the concepts of Future and Poll are so important that they must come first. Compared with Java, Rust's Future implementation is relatively complete and well supported, so the following code uses Rust as the example.

In short, a future is not a value itself but a value type whose value only becomes available at some point in the future. A future object must implement the std::future::Future trait from the Rust standard library. The Output of a future is the value that is produced once the future completes. In Rust, an executor drives a future forward by calling Future::poll. A future is essentially a state machine, and futures can be nested. In the following example, the main function instantiates MainFuture and calls .await on it. Besides moving between several states, MainFuture also polls a Delay future, which shows how futures nest.

MainFuture starts in state State0. When the scheduler calls the poll method, MainFuture tries to advance its state as far as possible. If the future has completed, it returns Poll::Ready. If it has not completed, because the Delay future it is waiting for has not reached the Ready state, it returns Pending. After receiving Pending, the scheduler puts MainFuture back into the queue of tasks to be scheduled and later calls poll again to drive the future forward. The details are as follows:

    use std::future::Future;
    use std::pin::Pin;
    use std::task::{Context, Poll};
    use std::time::{Duration, Instant};

    struct Delay {
        when: Instant,
    }

    impl Future for Delay {
        type Output = &'static str;

        fn poll(self: Pin<&mut Self>, cx: &mut Context<'_>) -> Poll<&'static str> {
            if Instant::now() >= self.when {
                println!("Hello world");
                Poll::Ready("done")
            } else {
                // Ask to be polled again immediately (this busy-polls).
                cx.waker().wake_by_ref();
                Poll::Pending
            }
        }
    }

    enum MainFuture {
        State0,
        State1(Delay),
        Terminated,
    }

    impl Future for MainFuture {
        type Output = ();

        fn poll(mut self: Pin<&mut Self>, cx: &mut Context<'_>) -> Poll<()> {
            use MainFuture::*;
            loop {
                match *self {
                    State0 => {
                        let when = Instant::now() + Duration::from_millis(1);
                        let future = Delay { when };
                        println!("init status");
                        *self = State1(future);
                    }
                    State1(ref mut my_future) => {
                        // Poll the nested Delay future.
                        match Pin::new(my_future).poll(cx) {
                            Poll::Ready(out) => {
                                assert_eq!(out, "done");
                                println!("delay finished this future is ready");
                                *self = Terminated;
                                return Poll::Ready(());
                            }
                            Poll::Pending => {
                                println!("not ready");
                                return Poll::Pending;
                            }
                        }
                    }
                    Terminated => {
                        panic!("future polled after completion")
                    }
                }
            }
        }
    }

    #[tokio::main]
    async fn main() {
        let main_future = MainFuture::State0;
        main_future.await;
    }

Of course, this implementation of Future has an obvious problem. The output shows that the executor calls poll many times while it is only supposed to be waiting. Ideally, poll should be called only when the future has made progress. A poll that keeps spinning like this degenerates into an inefficient busy loop. Epoll is explained later in this section, so we won't go into it here.

The solution lies in the Context parameter of the poll function. The Context carries the future's waker(). Calling wake on the waker signals the executor that the task should be polled again. Notifying the executor with wake once the future's state can advance is the proper approach, so the code of Delay needs to change:

    // Inside Delay::poll, replacing the wake_by_ref() call; requires `use std::thread;`.
    let waker = cx.waker().clone();
    let when = self.when;
    // Spawn a timer thread.
    thread::spawn(move || {
        let now = Instant::now();
        if now < when {
            thread::sleep(when - now);
        }
        waker.wake();
    });

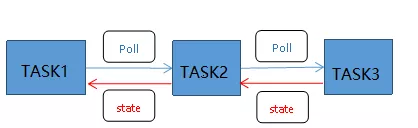

Whatever the high-concurrency framework, it is essentially a scheduler built on this Task/Poll mechanism. The essential job of poll is to monitor the execution status of the preceding tasks in the chain.

By making good use of the Poll mechanism, we avoid the scheduling approach described above, in which the event loop periodically traverses the whole event queue. The essence of Poll is to notify the corresponding handler when an event becomes ready. When developing applications on a Poll-based framework such as tokio, programmers do not have to care about the message passing underneath: methods such as and_then and spawn are enough to build the task chain and get the system running. The famous epoll multiplexing mechanism in Linux is a high-concurrency mechanism built on the same readiness-notification idea. With it, a single thread can monitor the status of many tasks. Once the descriptor of a task becomes ready, the corresponding handler is notified to carry out the follow-up work.

In short, a Future is a value type whose value only becomes available in the future, Poll is the method that drives a Future's state transitions, and Epoll is a multiplexing mechanism that uses a single thread to monitor the state of many Futures/Tasks.
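The relationship between a future, poll and the waker can also be sketched outside Rust. The following Go program is a hand-rolled illustration, not how Go's runtime or Tokio is implemented: the Future interface, the delay type, newDelay and blockOn are all hypothetical names. The executor parks after a Pending result and polls again only when the waker fires, so the number of polls stays small.

```go
package main

import (
	"fmt"
	"time"
)

// Future is a hypothetical rendering of the poll contract: Poll either
// returns a ready value, or arranges for wake to be called and reports false.
type Future interface {
	Poll(wake func()) (string, bool)
}

// delay becomes ready once its deadline has passed.
type delay struct {
	when time.Time
}

func newDelay(ms int) *delay {
	return &delay{when: time.Now().Add(time.Duration(ms) * time.Millisecond)}
}

func (d *delay) Poll(wake func()) (string, bool) {
	if !time.Now().Before(d.when) {
		return "done", true
	}
	// Not ready: arm a timer that wakes the executor at the deadline.
	time.AfterFunc(time.Until(d.when), wake)
	return "", false
}

// blockOn is a minimal single-future executor. It returns the result and
// the number of times Poll was called.
func blockOn(f Future) (string, int) {
	woken := make(chan struct{}, 1)
	wake := func() {
		select {
		case woken <- struct{}{}:
		default: // a wake-up is already pending
		}
	}
	polls := 0
	for {
		polls++
		if out, ready := f.Poll(wake); ready {
			return out, polls
		}
		<-woken // park until the future signals progress
	}
}

func main() {
	out, polls := blockOn(newDelay(10))
	fmt.Println(out, "after", polls, "polls")
}
```

Compared with a loop that re-polls immediately, this executor does no work while the timer is pending, which is the same idea the waker-based Delay relies on.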

C language - the ever-famous Shaolin school

There are countless high-concurrency products written in C. Classic operating systems and databases, from Linux to Redis, are basically developed in C. Even Epoll, the Linux high-concurrency workhorse we just mentioned, is essentially a C program. The philosophy of C is to trust the programmer's ability completely. The language offers neither syntactic sugar nor strict compile-time checks, so if you cannot handle C skillfully, it will give you hardly any productivity.

However, the upper limit of C is the highest among all the languages we discuss today. C has neither a virtual machine nor a garbage collector, and its only limit is the physical performance of the computer. A previous article, "The veteran who created a GitHub chart-topping project deserves to be called a 10x programmer", described how the performance of taosTimer not only far exceeds the native timer but is even better than a timerfd/epoll timer built on multiplexing. C is therefore very much like the Shaolin school, which opens its doors wide and welcomes all comers. Whether a top master like the Sweeping Monk or a seemingly ordinary kitchen-fire monk, they are all Shaolin disciples.

C is the mother tongue of programmers in the programming world. Here we take the cache of TDengine as an example. TaosCache works as follows:

1. Cache initialization (taosOpenConnCache): first initialize the cache object SConnCache, then initialize the hash table connHashList, and call taosTmrReset to reset the timer.

2. Adding a link to the cache (taosAddConnIntoCache): first calculate the hash value (hash) from the ip, port and username, then add the link (connInfo) to the pNode node stored at connHashList[hash]. pNode is itself a doubly linked list: connInfo entries with the same hash value are placed into it in order of insertion time. Note that pNode is both a node of the hash table connHashList and a linked list. The code is as follows:

    void *taosAddConnIntoCache(void *handle, void *data, uint32_t ip, short port, char *user) {
      int         hash;
      SConnHash  *pNode;
      SConnCache *pObj;
      uint64_t    time = taosGetTimestampMs();

      pObj = (SConnCache *)handle;
      if (pObj == NULL || pObj->maxSessions == 0) return NULL;
      if (data == NULL) {
        tscTrace("data:%p ip:%p:%d not valid, not added in cache", data, ip, port);
        return NULL;
      }

      hash = taosHashConn(pObj, ip, port, user);  // calculate the hash value from ip, port and user
      pNode = (SConnHash *)taosMemPoolMalloc(pObj->connHashMemPool);
      pNode->ip = ip;
      pNode->port = port;
      pNode->data = data;
      pNode->prev = NULL;
      pNode->time = time;

      pthread_mutex_lock(&pObj->mutex);
      // insert the link information at the head of the pNode linked list
      pNode->next = pObj->connHashList[hash];
      if (pObj->connHashList[hash] != NULL) (pObj->connHashList[hash])->prev = pNode;
      pObj->connHashList[hash] = pNode;
      pObj->total++;
      pObj->count[hash]++;
      taosRemoveExpiredNodes(pObj, pNode->next, hash, time);
      pthread_mutex_unlock(&pObj->mutex);

      tscTrace("%p ip:0x%x:%d:%d:%p added, connections in cache:%d", data, ip, port, hash, pNode, pObj->count[hash]);
      return pObj;
    }

3. Taking a link from the cache (taosGetConnFromCache): calculate the hash value (hash) from the ip, port and username, fetch the pNode node stored at connHashList[hash], and then take from pNode the element whose ip and port match the request.
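The three steps above can be sketched compactly in Go. This is an illustration of the data structure only, not TDengine's implementation: connCache, connNode, newConnCache and the FNV hash are assumptions chosen for the example, and expiry is checked lazily in Get instead of through a timer.

```go
package main

import (
	"fmt"
	"hash/fnv"
	"sync"
	"time"
)

// connNode is one cached connection inside a bucket's doubly linked list.
type connNode struct {
	ip         uint32
	port       uint16
	data       string
	added      time.Time
	prev, next *connNode
}

// connCache is a hypothetical stand-in for the cache object described above.
type connCache struct {
	mu      sync.Mutex
	buckets []*connNode
	keep    time.Duration
}

func newConnCache(size, keepMs int) *connCache {
	return &connCache{
		buckets: make([]*connNode, size),
		keep:    time.Duration(keepMs) * time.Millisecond,
	}
}

// bucket hashes ip, port and user name to a bucket index.
func (c *connCache) bucket(ip uint32, port uint16, user string) int {
	h := fnv.New32a()
	fmt.Fprintf(h, "%d:%d:%s", ip, port, user)
	return int(h.Sum32() % uint32(len(c.buckets)))
}

// Add pushes a connection onto the head of its bucket, so every list stays
// ordered from newest to oldest.
func (c *connCache) Add(ip uint32, port uint16, user, data string) {
	idx := c.bucket(ip, port, user)
	n := &connNode{ip: ip, port: port, data: data, added: time.Now()}
	c.mu.Lock()
	defer c.mu.Unlock()
	n.next = c.buckets[idx]
	if n.next != nil {
		n.next.prev = n
	}
	c.buckets[idx] = n
}

// Get unlinks and returns the first unexpired connection with matching ip and port.
func (c *connCache) Get(ip uint32, port uint16, user string) (string, bool) {
	idx := c.bucket(ip, port, user)
	c.mu.Lock()
	defer c.mu.Unlock()
	for n := c.buckets[idx]; n != nil; n = n.next {
		if time.Since(n.added) > c.keep {
			// Newest-first order: this node and everything after it has expired.
			if n.prev != nil {
				n.prev.next = nil
			} else {
				c.buckets[idx] = nil
			}
			break
		}
		if n.ip == ip && n.port == port {
			if n.prev != nil {
				n.prev.next = n.next
			} else {
				c.buckets[idx] = n.next
			}
			if n.next != nil {
				n.next.prev = n.prev
			}
			return n.data, true
		}
	}
	return "", false
}

func main() {
	cache := newConnCache(16, 1000)
	cache.Add(0x7f000001, 6030, "root", "conn-1")
	data, ok := cache.Get(0x7f000001, 6030, "root")
	fmt.Println(data, ok) // prints: conn-1 true
}
```

Head insertion keeps each bucket sorted by age without any extra work, which is why expired entries can be dropped by cutting the tail of the list.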

RxJava of Java - the most balanced Tai Chi Sword

High-concurrency products written in Java are no fewer than those written in C: classics such as Kafka and RocketMQ are Java masterpieces. Compared with Go and C, getting started with Java is not too difficult. Thanks to the garbage collector (GC), the pointer headaches and most memory leaks of C largely disappear in the Java world.

Backed by the JVM, the lower limit of Java is usually relatively high. Even junior programmers can reach fairly high productivity with Java, sometimes higher than intermediate programmers using C. But the JVM is also the limitation: the upper limit of Java is not as high as that of C and Rust. Still, it cannot be denied that Java is the most balanced language in terms of learning difficulty, productivity, performance, memory consumption and so on. In this it resembles the Tai Chi Sword of the Wudang school, which has almost no flaws or weaknesses and pursues the beauty of balance and harmony.

At present, RxJava is the most popular high-concurrency framework for Java. Because Java is so popular and online explanations are plentiful, no code is listed here. At the end of this article, Java is used as the example language to discuss problems that may arise in high concurrency.

Rust's Tokio - the Carefree School with no rookies

Rust has risen alongside Serverless in recent years. On the surface it looks like C: it has neither a JVM nor a GC. But it is not C. Rust distinctly distrusts programmers and tries to have the compiler eliminate errors at build time, before an executable is ever generated. Since there is no GC, Rust has created its own system of variable lifetimes and borrowing. Developers must constantly check whether the lifetimes of their variables are correct.

Moreover, Rust can feel as hard to read as Martian. A multi-producer channel sender has to be cloned before use, and a hash map behind a lock has to be locked and unwrapped before use. Many usages are completely different from Java and Go. However, precisely because of these strict restrictions, the goroutine problems in Go that we just discussed do not appear in Rust: those Go usages all fail Rust's lifetime checks and cannot be compiled.

So Rust is very much like the Carefree School. It is very hard to get started, but once you can graduate and write programs that compile, you are certain to be an expert. It is an extreme language with a high lower limit and a high upper limit.

At present, Tokio is the most representative high-concurrency programming framework for Rust. The Future example earlier in this article is based on Tokio, so it is not repeated here.

According to the official documentation, each Tokio task needs only 64 bytes, which is several orders of magnitude more efficient than handling every network request by forking a thread. With the help of a high-concurrency framework, developers can squeeze the performance of the hardware to the limit.

Pitfalls to watch for in high concurrency

Whether it is RxJava, Tokio or goroutines, and however powerful the framework, some common problems need special attention on the road to extreme performance. Here are a few examples.

1, Pay attention to branch prediction: modern CPUs execute instructions in a pipeline, which means the CPU places code that may be executed soon onto the pipeline in advance for decoding and other processing. When the code branches, the CPU has to predict which of the following instructions will be executed.

A typical example of branch prediction can be seen in the following code:

    public class Main {
        public static void main(String[] args) {
            long timeNow = System.currentTimeMillis();
            int max = 100, min = 0;
            long a = 0, b = 0, c = 0;
            for (int j = 0; j < 10000000; j++) {
                // Random integer in [min, max).
                int ran = (int) (Math.random() * (max - min) + min);
                switch (ran) {
                    case 0:
                        a++;
                        break;
                    case 1:
                        b++;
                        break;
                    default:
                        c++;
                }
            }
            long timeDiff = System.currentTimeMillis() - timeNow;
            System.out.println("a is " + a + " b is " + b + " c is " + c);
            System.out.println("Total time " + timeDiff);
        }
    }

In the above code, you only need to change the range of the random number, that is, change max=100 to max=5, and in the author's tests the execution time increases by at least 30%. This is because when max is 5, the variable ran takes values from 0 to 4. The branches are then taken with relatively balanced probabilities and no branch dominates, so branch prediction is likely to fail. This reduces CPU execution efficiency and deserves close attention in high-concurrency programming.
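The same experiment can be sketched in Go. The helper name countBranches and the fixed seed are assumptions for illustration. Whether and by how much the timing differs depends on the CPU and on how the compiler translates the switch, so the sketch prints elapsed times without promising any particular ratio.

```go
package main

import (
	"fmt"
	"math/rand"
	"time"
)

// countBranches draws n random numbers in [0, max) and counts how often
// each switch branch is taken.
func countBranches(n, max int, seed int64) (a, b, c int) {
	r := rand.New(rand.NewSource(seed))
	for i := 0; i < n; i++ {
		switch r.Intn(max) {
		case 0:
			a++
		case 1:
			b++
		default:
			c++
		}
	}
	return a, b, c
}

func main() {
	// max=100: the default branch dominates; max=5: the branches are more balanced.
	for _, max := range []int{100, 5} {
		start := time.Now()
		a, b, c := countBranches(10_000_000, max, 1)
		fmt.Printf("max=%d a=%d b=%d c=%d elapsed=%v\n", max, a, b, c, time.Since(start))
	}
}
```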

2, Align variables to cache lines: today's mainstream high-concurrency frameworks are based on multiplexing, and programmers rarely need to think about how tasks are scheduled onto each CPU core. In multi-core scenarios, however, programmers should align variables to the cache line size as far as possible. When two variables written by different cores sit on the same cache line, each write invalidates the other core's cached copy of that line (false sharing). In the following example, two threads operate on elements [0] and [1] of the array arr respectively.

    public class Main {
        public static void main(String[] args) {
            final MyData data = new MyData();
            Thread t0 = new Thread(new Runnable() {
                public void run() {
                    data.add(0);
                }
            });
            Thread t1 = new Thread(new Runnable() {
                public void run() {
                    data.add(1);
                }
            });
            // Daemon threads let the JVM exit although add() loops forever.
            t0.setDaemon(true);
            t1.setDaemon(true);
            t0.start();
            t1.start();
            try {
                Thread.sleep(100);
            } catch (InterruptedException e) {
                e.printStackTrace();
            }
            long[] arr = data.Getitem();
            System.out.println("arr0 is " + arr[0] + " arr1 is " + arr[1]);
        }
    }

    class MyData {
        // Both counters are adjacent and very likely share one cache line.
        private long[] arr = {0, 0};

        public long[] Getitem() {
            return arr;
        }

        public void add(int j) {
            for (; true; ) {
                arr[j]++;
            }
        }
    }

However, once arr is changed into a two-dimensional array and the variable being operated on changes from arr[j] to arr[j][0], the program can run considerably faster, provided the two rows end up on different cache lines.
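A more explicit way to keep the two counters apart is padding. The following Go sketch assumes 64-byte cache lines, which is common but not universal; the names paddedCounter and run are hypothetical. Each counter is followed by padding so that the two values are 64 bytes apart and therefore cannot share a 64-byte line.

```go
package main

import (
	"fmt"
	"sync"
)

// paddedCounter pads each counter to 64 bytes (assumed cache line size).
type paddedCounter struct {
	value int64
	_     [56]byte // 8 bytes of value + 56 bytes of padding = 64 bytes
}

// run lets two goroutines increment their own counter the given number of times.
func run(iterations int) [2]int64 {
	var counters [2]paddedCounter
	var wg sync.WaitGroup
	for j := 0; j < 2; j++ {
		wg.Add(1)
		go func(j int) {
			defer wg.Done()
			for i := 0; i < iterations; i++ {
				counters[j].value++ // each goroutine touches only its own slot
			}
		}(j)
	}
	wg.Wait()
	return [2]int64{counters[0].value, counters[1].value}
}

func main() {
	fmt.Println(run(1_000_000)) // prints [1000000 1000000]
}
```

Removing the padding field leaves the result unchanged but puts both values on the same line again, which is the layout the Java example above starts from.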

Performance and efficiency are the eternal pursuit of programmers. C, Java, Rust and Go each have their own niche. For those who want something short, simple and fast, Go is the obvious choice. For stability and balance in all respects, Java of the Wudang school is still the first recommendation. Development teams pursuing extreme performance are advised to try Rust. C suits individual talents with a taste for personal heroism. As long as developers choose the framework that fits them, strictly follow best practices, and pay attention to branch prediction and variable alignment, they can achieve very good performance.

Author: Ma Chao, CSDN blog expert, Alibaba cloud MVP, Huawei cloud MVP, Huawei 2020 technology community developer star.
