In JDK 1.6 and JDK 1.7, HashMap is implemented as an array of buckets plus linked lists: collisions are resolved by chaining, so entries whose keys map to the same bucket are stored in one linked list. When a bucket holds many entries, that is, when many keys collide, looking up a key by walking the list node by node is slow. In JDK 1.8, HashMap is implemented as an array of buckets plus linked lists plus red-black trees. When a linked list grows beyond the threshold (8), it may be converted to a red-black tree, which reduces the worst-case lookup time in that bucket from O(n) to O(log n).

In brief, HashMap works as follows:

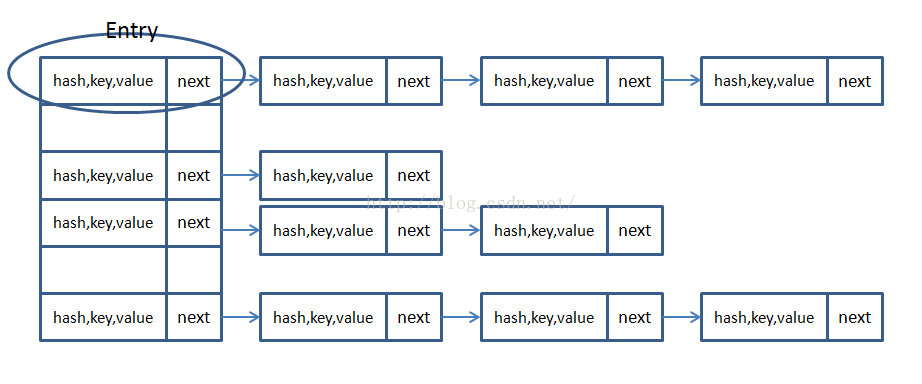

First, there is a table array in which each element is the head of a linked list of nodes. When a key-value pair is added, the hash of the key is computed first, and an index is derived from that hash and the length of the table; the index determines the slot in the array. Another entry may already occupy that slot. In that case, the new node is appended to the tail of the list in that slot. Entries in the same list share the same array index, though not necessarily the same hash value, so each array slot effectively stores a linked list. When a list grows beyond 8 nodes, it may be converted to a red-black tree, which greatly improves lookup efficiency.
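To make the index step concrete, here is a minimal sketch of how a bucket index can be derived from a hash and a power-of-two table length. The class name `BucketIndexDemo` and the helper `indexFor` are hypothetical names chosen for illustration; only the expression `(n - 1) & hash` comes from the HashMap source discussed in this article.

```java
// Sketch only: shows the masking step, not the full HashMap logic.
class BucketIndexDemo {
    // Hypothetical helper wrapping the expression used in putVal() and getNode().
    static int indexFor(int hash, int n) {
        return (n - 1) & hash; // n is assumed to be a power of two
    }

    public static void main(String[] args) {
        int n = 16;
        for (int hash : new int[] {5, 21, 37, -3}) {
            System.out.println(hash + " -> bucket " + indexFor(hash, n)
                    + " (floorMod gives " + Math.floorMod(hash, n) + ")");
        }
    }
}
```

For a power-of-two n, the mask yields the same result as `Math.floorMod(hash, n)`, including for negative hashes, while avoiding a division. The hashes 5, 21 and 37 all land in bucket 5, which is exactly the situation in which a bucket's list grows.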

Storage structure

static class Node<K,V> implements Map.Entry<K,V> {

final int hash;

final K key;

V value;

Node<K,V> next; // next node in the same bucket, which makes Node a singly linked list

Node(int hash, K key, V value, Node<K,V> next) {

this.hash = hash;

this.key = key;

this.value = value;

this.next = next;

}

// ...

}

transient Node<K,V>[] table;

- HashMap contains an array, table, of type Node; Node implements Map.Entry.

- Node stores a key-value pair and has four fields: hash, key, value, and next. The next field shows that nodes form a linked list.

- Each slot in the table array can be treated as a bucket, and a bucket holds a linked list.

- HashMap resolves collisions by separate chaining (the "zipper" method): nodes whose hashes map to the same index are linked together in the same bucket.
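The points above can be illustrated with a deliberately small separate-chaining map. This is a sketch under stated assumptions, not the JDK implementation: `TinyChainedMap` is a hypothetical class, the table has a fixed length of 16 and never resizes, there is no treeification, and new nodes are inserted at the head of the list for brevity (the JDK 1.8 code appends at the tail).

```java
import java.util.Objects;

// Hypothetical teaching class: fixed-size table, linked-list buckets only.
class TinyChainedMap<K, V> {
    static final class Node<K, V> {
        final int hash;
        final K key;
        V value;
        Node<K, V> next; // link to the next node in the same bucket

        Node(int hash, K key, V value, Node<K, V> next) {
            this.hash = hash;
            this.key = key;
            this.value = value;
            this.next = next;
        }
    }

    @SuppressWarnings("unchecked")
    private final Node<K, V>[] table = (Node<K, V>[]) new Node[16];

    private static int hash(Object key) {
        int h;
        return (key == null) ? 0 : (h = key.hashCode()) ^ (h >>> 16);
    }

    // Returns the previous value for the key, or null if there was none.
    V put(K key, V value) {
        int h = hash(key);
        int i = (table.length - 1) & h;
        for (Node<K, V> e = table[i]; e != null; e = e.next) {
            if (e.hash == h && Objects.equals(e.key, key)) {
                V old = e.value;
                e.value = value;
                return old;
            }
        }
        table[i] = new Node<>(h, key, value, table[i]); // head insertion (simplification)
        return null;
    }

    V get(Object key) {
        int h = hash(key);
        for (Node<K, V> e = table[(table.length - 1) & h]; e != null; e = e.next) {
            if (e.hash == h && Objects.equals(e.key, key)) {
                return e.value;
            }
        }
        return null;
    }

    public static void main(String[] args) {
        TinyChainedMap<String, Integer> m = new TinyChainedMap<>();
        m.put("a", 1);
        m.put("b", 2);
        System.out.println(m.put("a", 3)); // previous value for "a"
        System.out.println(m.get("a"));    // current value for "a"
    }
}
```

Comparing the stored hash before calling equals() is a cheap filter: two keys in the same bucket usually have different full hashes, so most mismatches are rejected without an equals() call.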

Fields

// Serialization version ID

private static final long serialVersionUID = 362498820763181265L;

// Default initial capacity: 16 buckets

static final int DEFAULT_INITIAL_CAPACITY = 1 << 4; // aka 16

// Maximum capacity: 1073741824, just over 1 billion

static final int MAXIMUM_CAPACITY = 1 << 30;

// Default load factor. With the defaults, a resize is triggered once the number of key-value pairs exceeds 16 * 0.75 = 12.

static final float DEFAULT_LOAD_FACTOR = 0.75f;

// When put() adds an element to a bucket whose linked list already holds at least 8 nodes, the list may be converted to a red-black tree.

static final int TREEIFY_THRESHOLD = 8;

// During a resize, a tree bin left with 6 or fewer nodes is converted back to a linked list.

static final int UNTREEIFY_THRESHOLD = 6;

// A bin is converted to a red-black tree only if the table capacity is at least 64; otherwise the table is resized instead. This avoids unnecessary conversions when several key-value pairs land in the same bucket while the HashMap is still small.

static final int MIN_TREEIFY_CAPACITY = 64;

// The bucket array that stores the elements

transient Node<K,V>[] table;

// Number of key-value pairs stored

transient int size;

// Number of structural modifications, used by iterators for fail-fast behavior

transient int modCount;

// Resize threshold (capacity * load factor): when size exceeds it, the table is expanded

int threshold;

// Load factor

final float loadFactor;
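As a small worked example of the threshold arithmetic mentioned in the comments above, the following sketch computes capacity * load factor for a few capacities. `ThresholdDemo` and `thresholdFor` are hypothetical names; the real field is private to HashMap and cannot be read directly.

```java
// Sketch of the threshold arithmetic only; not part of the JDK.
class ThresholdDemo {
    static int thresholdFor(int capacity, float loadFactor) {
        return (int) (capacity * loadFactor);
    }

    public static void main(String[] args) {
        // Default load factor 0.75f, capacities doubling from the default 16
        for (int capacity = 16; capacity <= 64; capacity <<= 1) {
            System.out.println("capacity " + capacity
                    + " -> threshold " + thresholdFor(capacity, 0.75f));
        }
    }
}
```

With the default load factor this gives thresholds of 12, 24 and 48, so the 13th entry is the first to trigger a resize of a default-sized map.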

Constructor

public HashMap() {

this.loadFactor = DEFAULT_LOAD_FACTOR; // all other fields defaulted

}

public HashMap(int initialCapacity) {

this(initialCapacity, DEFAULT_LOAD_FACTOR);

}

public HashMap(int initialCapacity, float loadFactor) {

if (initialCapacity < 0)

throw new IllegalArgumentException("Illegal initial capacity: " +

initialCapacity);

if (initialCapacity > MAXIMUM_CAPACITY)

initialCapacity = MAXIMUM_CAPACITY;

if (loadFactor <= 0 || Float.isNaN(loadFactor))

throw new IllegalArgumentException("Illegal load factor: " +

loadFactor);

this.loadFactor = loadFactor;

// tableSizeFor(initialCapacity) returns the smallest power of two (2^n)

// that is greater than or equal to initialCapacity; it is kept in threshold until the table is allocated.

this.threshold = tableSizeFor(initialCapacity);

}

public HashMap(Map<? extends K, ? extends V> m) {

this.loadFactor = DEFAULT_LOAD_FACTOR;

putMapEntries(m, false);

}

The HashMap constructor accepts a capacity that is not a power of 2, because tableSizeFor() automatically rounds the requested capacity up to the nearest power of 2.
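The rounding can be sketched as follows. This follows the bit-smearing style used by JDK 8; the exact code differs between JDK versions, and the class name `TableSizeDemo` is a hypothetical wrapper added for illustration.

```java
// Sketch of rounding a requested capacity up to a power of two.
class TableSizeDemo {
    static final int MAXIMUM_CAPACITY = 1 << 30;

    static int tableSizeFor(int cap) {
        int n = cap - 1;  // so that an exact power of two maps to itself
        n |= n >>> 1;     // copy the highest set bit into every lower position
        n |= n >>> 2;
        n |= n >>> 4;
        n |= n >>> 8;
        n |= n >>> 16;
        return (n < 0) ? 1 : (n >= MAXIMUM_CAPACITY) ? MAXIMUM_CAPACITY : n + 1;
    }

    public static void main(String[] args) {
        for (int cap : new int[] {0, 1, 10, 16, 17, 1000}) {
            System.out.println(cap + " -> " + tableSizeFor(cap));
        }
    }
}
```

For example, a requested capacity of 10 becomes 16, 16 stays 16, and 17 becomes 32, so `new HashMap<>(17)` ends up with 32 buckets once the table is allocated.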

put() operation source code analysis

public V put(K key, V value) {

return putVal(hash(key), key, value, false, true);

}

static final int hash(Object key) {

int h;

// Perturbation ("disturbance") function: mixes the high 16 bits of hashCode() into the low 16 bits. See https://www.cnblogs.com/zhengwang/p/8136164.html

return (key == null) ? 0 : (h = key.hashCode()) ^ (h >>> 16);

}

final V putVal(int hash, K key, V value, boolean onlyIfAbsent,

boolean evict) {

HashMap.Node<K,V>[] tab; HashMap.Node<K,V> p; int n, i;

// Initialize table if not initialized

if ((tab = table) == null || (n = tab.length) == 0)

n = (tab = resize()).length;

// AND the hash with (table length - 1) to obtain an index,

// then check whether a node already exists at that index of the table.

if ((p = tab[i = (n - 1) & hash]) == null)

// If the table array index is empty, a new node will be created and placed there.

tab[i] = newNode(hash, key, value, null);

else {

// Running to this point indicates that there is already a node at the table array index, that is, a collision has occurred

HashMap.Node<K,V> e; K k;

// Check whether the key of this node is the same as the inserted key.

if (p.hash == hash &&

((k = p.key) == key || (key != null && key.equals(k))))

e = p;

// Check whether the node is already a red black tree

else if (p instanceof TreeNode)

// If the bin is already a red-black tree, insert the node into the tree

e = ((HashMap.TreeNode<K,V>)p).putTreeVal(this, tab, hash, key, value);

else {

for (int binCount = 0; ; ++binCount) {

// Walk the linked list in this bucket

// and check whether the end of the list has been reached

if ((e = p.next) == null) {

// At the end of the list, append the new node at the tail. Before JDK 1.8, new nodes were inserted at the head.

p.next = newNode(hash, key, value, null);

if (binCount >= TREEIFY_THRESHOLD - 1) // -1 for 1st

// binCount has reached TREEIFY_THRESHOLD - 1, so the bin held at least 8 nodes before this insertion and may be converted to a red-black tree

treeifyBin(tab, hash);

break;

}

// Check whether the key of this node is the same as the inserted key.

if (e.hash == hash &&

((k = e.key) == key || (key != null && key.equals(k))))

break;

p = e;

}

}

// The key already exists: update the value and return the old value

if (e != null) { // existing mapping for key

V oldValue = e.value;

if (!onlyIfAbsent || oldValue == null)

e.value = value;

afterNodeAccess(e);

return oldValue;

}

}

// Increment the structural modification count

++modCount;

// If the new size exceeds the threshold (capacity * load factor, 16 * 0.75 = 12 by default), expand the table

if (++size > threshold)

resize();

afterNodeInsertion(evict);

return null;

}

The put() process is as follows:

- Check whether the table array is null or empty; if so, call resize() to allocate it at the initial capacity.

- Compute the array index from the key's hash and the table length. If tab[index] == null, create a new node for the key-value pair and place it there. Otherwise, continue with the next step.

- Check whether collisions in that bucket are handled by a linked list or a red-black tree (by inspecting the type of the first node), and handle each case accordingly.

- Why was head insertion replaced by tail insertion? In JDK 1.7, head insertion may have been chosen with hot data in mind (newly inserted data is likely to be accessed sooner); in addition, finding the tail of a linked list takes O(n) time or requires extra memory for a tail pointer, so head insertion saves insertion time. However, during a resize, head insertion reverses the original order of the elements in the list, which can create a cycle in the list under concurrent modification.

- From the putVal() source code, we can see that HashMap does not reject a null key: its hash is set to 0, so the null-key entry goes into bucket 0 like any other entry that hashes there. Before JDK 1.8, HashMap likewise stored the null-key entry in bucket 0.

- Determining the node index: the index is derived from the table length and the key's hash.

- Before a bin is converted to a red-black tree, the table capacity is checked: the conversion happens only if the table length is at least 64; otherwise the table is resized instead. This avoids unnecessary conversions when several key-value pairs land in the same bucket while the HashMap is still small.
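The index step relies on the perturbation applied in hash(). The following sketch shows why it matters: with a small table, only the low bits of the hash select the bucket, so hash codes that differ only in their high bits would otherwise always collide. `HashSpreadDemo` and `spread` are hypothetical names; the expression `h ^ (h >>> 16)` is the one from the source above.

```java
// Sketch: effect of XOR-ing the high 16 bits into the low 16 bits.
class HashSpreadDemo {
    static int spread(int h) {
        return h ^ (h >>> 16);
    }

    public static void main(String[] args) {
        int n = 16;                    // table length
        int a = 0x10000, b = 0x20000;  // hash codes that differ only in the high bits
        System.out.println("raw:    " + ((n - 1) & a) + " and " + ((n - 1) & b));
        System.out.println("spread: " + ((n - 1) & spread(a)) + " and " + ((n - 1) & spread(b)));
    }
}
```

Without spreading, both hash codes fall into bucket 0; after spreading they fall into buckets 1 and 2. The XOR is cheap, which is presumably why it was preferred over a heavier mixing function.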

get() operation source code analysis

public V get(Object key) {

HashMap.Node<K,V> e;

return (e = getNode(hash(key), key)) == null ? null : e.value;

}

final HashMap.Node<K,V> getNode(int hash, Object key) {

HashMap.Node<K,V>[] tab; HashMap.Node<K,V> first, e; int n; K k;

// table is not empty

if ((tab = table) != null && (n = tab.length) > 0 &&

// AND the hash with (table length - 1) to obtain an index, and check that

// the slot at that index is not empty, i.e. the bucket holds at least one node

(first = tab[(n - 1) & hash]) != null) {

// Check the first node in the bucket first

if (first.hash == hash && // always check first node

// Compare keys by reference first, then with equals() if the requested key is not null

((k = first.key) == key || (key != null && key.equals(k))))

// If the key is equal, the first one will be returned

return first;

// The key of the first node is different and the node list contains more than one node

if ((e = first.next) != null) {

// Check whether the bin has been converted to a red-black tree.

if (first instanceof HashMap.TreeNode)

// Then search in the red black tree.

return ((HashMap.TreeNode<K,V>)first).getTreeNode(hash, key);

do {

// Loop through the nodes in the list and check whether the keys are equal

if (e.hash == hash &&

((k = e.key) == key || (key != null && key.equals(k))))

return e;

} while ((e = e.next) != null);

}

}

// If the key does not exist in the table, null will be returned.

return null;

}

The get(key) process is as follows:

- First, compute the hash of the key.

- Compute hash & (table.length - 1) to get the index in the bucket array.

- Check whether the key of the first node in the bucket equals the requested key.

- If it does not, check whether the bin has been converted to a red-black tree; if so, search the tree.

- If the bin is still a linked list, traverse the rest of the list to find a node with an equal key and return its value.
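One consequence of the lookup above is that get() returns null both when the key is absent and when the key is mapped to null. The sketch below uses the real java.util.HashMap to show the difference; the class `GetNullDemo` and its `sample()` helper are hypothetical names for this illustration.

```java
import java.util.HashMap;
import java.util.Map;

// Sketch: a null result from get() does not prove the key is absent.
class GetNullDemo {
    static Map<String, String> sample() {
        Map<String, String> m = new HashMap<>();
        m.put("present", null);   // key mapped to a null value
        m.put(null, "null-key");  // null key is allowed
        return m;
    }

    public static void main(String[] args) {
        Map<String, String> m = sample();
        System.out.println(m.get("present") + " / " + m.containsKey("present"));
        System.out.println(m.get("absent") + " / " + m.containsKey("absent"));
        System.out.println(m.get(null));
    }
}
```

When null values are possible, containsKey() is the reliable way to tell the two cases apart.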

resize() operation source code analysis

// Element adjustment after initialization or expansion

final HashMap.Node<K,V>[] resize() {

// Get old table

HashMap.Node<K,V>[] oldTab = table;

// Old table capacity

int oldCap = (oldTab == null) ? 0 : oldTab.length;

// Resize threshold of the old table

int oldThr = threshold;

// Define new table capacity and threshold

int newCap, newThr = 0;

// If the original table is not empty

if (oldCap > 0) {

// If the table capacity has reached the maximum, set the threshold to Integer.MAX_VALUE

// MAXIMUM_CAPACITY = 1 << 30;

// Integer.MAX_VALUE = 2^31 - 1 (2147483647);

if (oldCap >= MAXIMUM_CAPACITY) {

// The map is already at maximum capacity, so the threshold is set to the largest int value

// and the table is never resized again.

threshold = Integer.MAX_VALUE;

// Return the old table unchanged

return oldTab;

}

// Here is the expansion operation (2x)

else if ((newCap = oldCap << 1) < MAXIMUM_CAPACITY &&

oldCap >= DEFAULT_INITIAL_CAPACITY)

// The critical value also doubles

newThr = oldThr << 1; // double threshold

}

else if (oldThr > 0) // initial capacity was placed in threshold

/*

* Reaching this branch means the HashMap was created with a parameterized constructor: public HashMap(int initialCapacity)

* or public HashMap(int initialCapacity, float loadFactor).

* Note: these constructors pass the requested capacity through

* tableSizeFor(initialCapacity), which rounds it up to

* the nearest power of two (2^n) and stores the result in threshold.

* Therefore, the actual capacity may differ from the requested initial capacity.

*/

newCap = oldThr;

else { // zero initial threshold signifies using defaults

// Reaching this branch means the map was created with the no-argument constructor,

// so initialize newCap (new capacity) and newThr (new resize threshold) from the defaults

newCap = DEFAULT_INITIAL_CAPACITY;

newThr = (int)(DEFAULT_LOAD_FACTOR * DEFAULT_INITIAL_CAPACITY);

}

if (newThr == 0) {

// There are two ways to get here:

// 1. The old table capacity is greater than 0 but less than 16 (or doubling it reached the maximum capacity).

// 2. The HashMap was created with a parameterized constructor, so the threshold must be computed from loadFactor.

float ft = (float)newCap * loadFactor;

newThr = (newCap < MAXIMUM_CAPACITY && ft < (float)MAXIMUM_CAPACITY ?

(int)ft : Integer.MAX_VALUE);

}

// Modify threshold

threshold = newThr;

@SuppressWarnings({"rawtypes","unchecked"})

// Generate new table based on new capacity

HashMap.Node<K,V>[] newTab = (HashMap.Node<K,V>[])new HashMap.Node[newCap];

// Replace with a new table

table = newTab;

// If oldTab is not null, this is an expansion and the existing nodes must be moved; otherwise newTab is returned as is

if (oldTab != null) {

/* Traverse the original table */

for (int j = 0; j < oldCap; ++j) {

HashMap.Node<K,V> e;

if ((e = oldTab[j]) != null) {

oldTab[j] = null;

// The bucket holds a single node

if (e.next == null)

// Recalculate the position index in the table according to the new capacity, and assign the current element to it.

newTab[e.hash & (newCap - 1)] = e;

// Check whether the bin has been converted to a red-black tree

else if (e instanceof HashMap.TreeNode)

// split() divides the tree between the two new buckets; a resulting half with UNTREEIFY_THRESHOLD (6) or fewer nodes

// is converted back to a linked list

((HashMap.TreeNode<K,V>)e).split(this, newTab, j, oldCap);

else { // preserve order

// Reaching this point means the bucket holds a linked list with multiple nodes, which is split into a low list and a high list

HashMap.Node<K,V> loHead = null, loTail = null;

HashMap.Node<K,V> hiHead = null, hiTail = null;

HashMap.Node<K,V> next;

do {

// Traverse the bucket: nodes with (hash & oldCap) == 0 stay at index j, the others move to index j + oldCap

next = e.next;

if ((e.hash & oldCap) == 0) {

if (loTail == null)

loHead = e;

else

loTail.next = e;

loTail = e;

}

else {

if (hiTail == null)

hiHead = e;

else

hiTail.next = e;

hiTail = e;

}

} while ((e = next) != null);

if (loTail != null) {

loTail.next = null;

newTab[j] = loHead;

}

if (hiTail != null) {

hiTail.next = null;

newTab[j + oldCap] = hiHead;

}

}

}

}

}

return newTab;

}

- Data can still be put after the maximum capacity is reached; the table simply stops growing.

- When a map is created with a constructor that takes a capacity, the actual capacity may differ from the requested one: tableSizeFor() automatically rounds the requested capacity up to a power of 2.

- Red-black trees can degenerate back into linked lists.

- Note that expansion has to move every key-value pair from the old table into the new table, so this step is time-consuming.
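The low/high split in resize() works because the capacity doubles: the new index differs from the old one only in the bit whose value is oldCap. The sketch below checks that rule against a direct recomputation; `ResizeSplitDemo` and `newIndex` are hypothetical names, and only the test `(hash & oldCap) == 0` is taken from the source above.

```java
// Sketch: where a node lands after the table doubles from oldCap to 2 * oldCap.
class ResizeSplitDemo {
    static int newIndex(int hash, int oldIndex, int oldCap) {
        // Bit oldCap clear: node stays put. Bit set: node moves up by oldCap.
        return (hash & oldCap) == 0 ? oldIndex : oldIndex + oldCap;
    }

    public static void main(String[] args) {
        int oldCap = 16;
        for (int hash : new int[] {5, 21, 37, 53}) {
            int oldIndex = hash & (oldCap - 1);
            int direct = hash & (2 * oldCap - 1); // full recomputation with the new mask
            System.out.println("hash " + hash + ": old " + oldIndex
                    + ", split rule " + newIndex(hash, oldIndex, oldCap)
                    + ", direct " + direct);
        }
    }
}
```

All four hashes share old index 5; after doubling, 5 and 37 stay at index 5 while 21 and 53 move to index 21. Since only one bit is tested per node, no hash has to be recomputed during the move, although every node is still visited.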