1, Why Nginx can handle requests asynchronously and without blocking

Consider the full life cycle of a request: the request arrives, a connection is established, data is received, and then the response data is sent.

At the lowest level, all of this comes down to read and write events. When a read or write event is not ready, the operation cannot proceed. With a blocking call, the process has to wait: it enters the kernel and sleeps, and the CPU is handed to other processes until the event is ready. That is clearly unsuitable for a single-threaded worker. As the number of network events grows, every request ends up waiting while the CPU sits idle, so CPU utilization stays low and high concurrency is out of reach. You could add more processes, but then how would that differ from Apache's thread model? It would only add unnecessary context switching. This is why blocking system calls are taboo in Nginx.

The alternative is a non-blocking call. If the event is not ready, the call returns EAGAIN immediately, which means "not ready yet, come back later". You can do other work in the meantime and check the event again later, until it becomes ready. Although the call no longer blocks, you have to keep checking the event status from time to time. You get more done, but the cost of checking is not small.
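The non-blocking behaviour described above can be sketched in a few lines of Python. This is only an illustration, not Nginx code: the helper name `try_read` and the use of `socket.socketpair()` to stand in for a client and a worker are assumptions made for the example. In Python, an EAGAIN/EWOULDBLOCK result from the operating system surfaces as `BlockingIOError`.

```python
import select
import socket


def try_read(sock, size=1024):
    """Return received bytes, or None if the read would block (EAGAIN)."""
    try:
        return sock.recv(size)
    except BlockingIOError:
        # The event is not ready: return at once instead of sleeping in the kernel.
        return None


# A connected socket pair stands in for a client and a worker (illustrative only).
client, worker = socket.socketpair()
worker.setblocking(False)  # switch the worker side to non-blocking mode

first = try_read(worker)   # nothing has been sent yet, so this is None
client.sendall(b"ping")

# "Come back later": wait until the socket is reported readable, then retry.
select.select([worker], [], [], 1.0)
second = try_read(worker)  # now the data is available

client.close()
worker.close()
```

The first call returns immediately with nothing, leaving the worker free to do other work; only the second call, made after the read event is ready, yields data.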

2, Advantages of Nginx

[1] Faster: this shows in two ways. Under normal conditions, a single request gets a faster response; and during peak periods (for example, tens of thousands of concurrent requests), Nginx responds to requests faster than other Web servers.

[2] High scalability, cross platform: Nginx is designed to be highly extensible. It is composed entirely of modules with different functions, levels and types, with very low coupling between them. As a result, when fixing a bug or upgrading a module, you can focus on that module alone without worrying about the rest. In addition, the HTTP module includes a chain of HTTP filter modules: after a normal HTTP module has processed a request, a series of HTTP filter modules reprocess the result. So when developing a new HTTP module, you can use modules of different levels and types, such as the HTTP core module, the events module and the log module, and also reuse a large number of existing HTTP filter modules unchanged. This low-coupling design has given rise to a huge ecosystem of third-party Nginx modules, and open third-party modules are as easy to use as officially released ones.

Nginx modules, both official and third-party, are compiled into the binary and executed there. This gives third-party modules excellent performance and lets them take full advantage of the high-concurrency characteristics of Nginx. For this reason, many high-traffic websites develop custom modules tailored to their own business needs.

[3] High reliability: when used as a reverse proxy, the probability of downtime is very small. High reliability is the most basic requirement when choosing Nginx, and its reliability is widely recognized: many high-traffic websites use Nginx at scale on their core servers. This reliability comes from the excellent design of the core framework code and the simplicity of the module design. In addition, the commonly used official modules are very stable, and each worker process is relatively independent. When a worker process fails, the master process can quickly start a new worker process to keep serving requests.

[4] Low memory consumption: typically, 10,000 inactive HTTP keep-alive connections consume only 2.5 MB of memory in Nginx, which is the basis for supporting a high number of concurrent connections. Running 10 Nginx processes takes only about 150 MB of memory.

[5] A single machine supports more than 100,000 concurrent connections: this is a very important feature. With the rapid growth of the Internet and of the number of users, major companies and websites have to handle huge numbers of concurrent requests, so a server that can withstand more than 100,000 concurrent requests at peak is naturally attractive. In theory, the upper limit on concurrent connections in Nginx depends on memory, and 100,000 is far from the ceiling. Of course, the ability to handle more concurrent requests in a timely manner also depends heavily on the characteristics of the workload.

[6] Hot deployment: the separation of the master management process from the worker processes lets Nginx support hot deployment, that is, the Nginx executable can be upgraded while the service keeps running 7×24 without interruption. It also supports updating configuration items and rotating log files without stopping the service.

[7] A highly permissive BSD license: this has been a strong driving force behind the rapid development of Nginx. The BSD license not only allows users to use Nginx free of charge, but also allows them to use or modify the Nginx source code directly in their own projects and then publish the result. This has attracted countless developers to keep contributing to Nginx.

Of course, these seven features are not all that Nginx offers. With its many official and third-party modules, Nginx covers most application scenarios, and these modules can be combined to achieve more powerful and complex functionality. Some modules also integrate Nginx with scripting languages such as Perl and Lua, which greatly improves development efficiency. These features lead users to consider Nginx first when looking for a Web server. The core reason for choosing Nginx is that it can sustain high concurrency while still serving requests efficiently.

[8] High performance: it handles 20,000 to 30,000 concurrent connections, and according to official measurements it can support 50,000 concurrent connections.

3, Tell me about the configuration parameters you used in Nginx

After Nginx is installed, there is a corresponding installation directory, and nginx.conf is the main configuration file. It is divided into four parts: main (global configuration), server (host configuration), upstream (load-balancing server settings) and location (settings for URLs matching a specific location). The relationship between these four parts is: server inherits from main, location inherits from server, and upstream neither inherits other settings nor is inherited.

[1] Global block: directives that affect Nginx as a whole. It typically contains the user and group that run the Nginx server, the path where the Nginx pid is stored, the log path, the inclusion of other configuration files, the number of worker processes allowed, and so on.

[2] events block: settings that affect the Nginx server or its network connections with users. It includes the maximum number of connections per process, which event-driven model is used to process connection requests, whether a process may accept multiple network connections at the same time, and whether to serialize the accepting of network connections.

[3] http block: can nest multiple server blocks and configures most features, such as proxying, caching, log definitions and third-party modules. Examples include file inclusion, MIME type definitions, custom log formats, whether to use sendfile to transfer files, connection timeouts, and the number of requests per connection.

[4] server block: configures the parameters of a virtual host. One http block can contain multiple server blocks.

[5] location block: configures request routing and the handling of individual pages.

4, Differences between Nginx and Apache

[1] Nginx is an event based Web server (select and epoll functions, etc.), and Apache is a process based server;

[2] In Nginx, a single thread processes all requests; in Apache, each thread processes a single request;

[3] Nginx performs well in load balancing. Apache will reject new connections when the traffic reaches the limit of the process;

[4] Nginx's scalability and performance do not depend on hardware, while Apache relies on CPU, memory and other hardware components;

[5] Nginx is better in memory consumption and connection, while Apache has not improved in memory consumption and connection;

[6] Nginx focuses on speed, while Apache focuses on power;

[7] Nginx avoids the concept of child processes, while Apache is based on child processes;

[8] Nginx handles requests asynchronously and non blocking, while Apache is blocking. Under high concurrency, nginx can maintain low resource consumption and high performance;

5, What are the Nginx load balancing algorithms

At present, the upstream module of Nginx supports the following allocation methods:

[1] Round robin (default): requests are allocated to the back-end servers one by one in chronological order. If a back-end server goes down, it is removed automatically;

[2] weight: specifies the round-robin probability. The weight is proportional to the share of requests a server receives. It is used when back-end servers have uneven performance;

[3] ip_hash: each request is allocated according to the hash of the client IP, so that each visitor always reaches the same back-end server. This solves the session problem;

[4] fair (third party): requests are allocated according to the response time of the back-end servers, and servers with shorter response times are served first;

[5] url_hash (third party): requests are allocated according to the hash of the URL;
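To make the weight and ip_hash ideas concrete, here is a small Python sketch. It is an illustration under stated assumptions rather than Nginx source code: the function names and server names are made up, the weighted picker uses a "smooth" weighted round-robin scheme (one common way to spread weighted picks evenly), and CRC32 is used as a stand-in hash for the client IP.

```python
import zlib


def smooth_weighted_round_robin(weights, n):
    """Return n picks; weights maps server name -> positive integer weight."""
    current = {name: 0 for name in weights}
    total = sum(weights.values())
    picks = []
    for _ in range(n):
        # Every server gains its own weight on each round.
        for name, weight in weights.items():
            current[name] += weight
        # The server with the largest running value wins this round
        # (ties go to the server listed first).
        chosen = max(current, key=current.get)
        # The winner is pushed back by the total weight.
        current[chosen] -= total
        picks.append(chosen)
    return picks


def pick_by_ip_hash(client_ip, servers):
    """Map a client IP to one server; CRC32 is an assumed stand-in hash."""
    return servers[zlib.crc32(client_ip.encode()) % len(servers)]


# Hypothetical back ends: "a" has weight 5, "b" and "c" have weight 1.
picks = smooth_weighted_round_robin({"a": 5, "b": 1, "c": 1}, 7)
same_server = pick_by_ip_hash("203.0.113.7", ["a", "b", "c"])
```

Over seven picks, "a" is chosen five times and "b" and "c" once each, and the picks for "a" are interleaved rather than bunched together. The hash-based picker always returns the same server for the same IP, which is what keeps a visitor's session on one back end.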

6, Common status codes

499: the processing time of the server is too long, and the client actively closes the connection.

[reference blog]: https://www.cnblogs.com/kevingrace/p/7205623.html

7, Static resource configuration

Nginx can serve as a cache server for static resources. For example, in a project where the front end and back end are separated, we can speed up front-end page responses by placing front-end resources such as html, js, css or images in a directory specified in Nginx. They can then be accessed efficiently simply by appending a path to the IP address. The following describes how to use Nginx as a static resource server under Windows.

[1] Modify the nginx.conf configuration file;

[2] The main configuration parameters are as follows, with some irrelevant parameters removed. Note that multiple location blocks can be configured, so that paths can be specified according to business needs, which simplifies later operation, maintenance and management:

8, Reverse proxy configuration

The reverse proxy server can hide the existence and characteristics of the source server. It acts as an intermediate layer between Internet cloud and web server. This is good for security, especially when you use web hosted services.

[reverse proxy]: https://blog.csdn.net/zhengzhaoyang122/article/details/94303198

9, Nginx process model

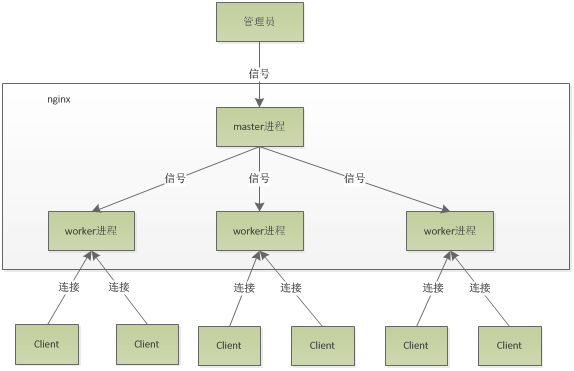

By default, Nginx works in multi-process mode. After startup, it runs one master process and multiple worker processes. The master acts as the interface between the whole process group and the user, and it also monitors and manages the worker processes to provide functions such as restarting the service, smooth upgrades, log file rotation, and applying configuration changes on the fly. Workers handle the basic network events. All workers are equal and compete with one another to handle client requests. The process model of Nginx is shown in the figure above.

The master uses signals to control the worker processes.

10, Benefits of one master and multiple workers

[1] nginx -s reload can be used for hot deployment;

[2] Each worker is an independent process. If one worker has a problem, the other workers are unaffected and keep competing to handle requests, so the service is not interrupted;

12, How many workers are appropriate

The most suitable number of workers is equal to the number of CPUs in the server;

13, How many worker connections one request occupies

When accessing static resources, two: one for the request and one for returning the static resource;

When accessing non-static resources, four: in addition to the two above, Nginx sends the request to a node in the Tomcat cluster for processing, and Tomcat returns the result after processing;

14, Nginx has one master and four workers. Each worker supports a maximum of 1024 connections. What is the maximum number of concurrent connections supported

For ordinary static access, the maximum concurrency is worker_connections * worker_processes / 2. If Nginx is used as an HTTP reverse proxy, the maximum concurrency is worker_connections * worker_processes / 4.
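Applied to the numbers in the question (four workers, 1024 connections each), the two formulas work out as follows. The helper name `max_concurrency` is just for illustration.

```python
def max_concurrency(worker_processes, worker_connections, connections_per_request):
    """Upper bound on concurrent requests given how many connections each one uses."""
    return worker_processes * worker_connections // connections_per_request


# Static access: each request occupies 2 connections.
static_max = max_concurrency(4, 1024, 2)  # 4 * 1024 / 2 = 2048

# Reverse proxy: each request occupies 4 connections.
proxy_max = max_concurrency(4, 1024, 4)   # 4 * 1024 / 4 = 1024
```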

15, How Nginx works

Nginx consists of a core and modules. The core itself does very little work: when it receives an HTTP request, it simply maps the request to a location block by consulting the configuration file, and each directive configured in that location invokes a different module to do the work. The modules are therefore the real workers of Nginx. Typically, the directives in a location involve one handler module and multiple filter modules (and multiple locations can reuse the same module). The handler module processes the request and generates the response content, while the filter modules process the response content. Modules that users develop for their own needs are third-party modules. It is the support of so many modules that makes Nginx so powerful. Structurally, Nginx modules are divided into core modules, basic modules and third-party modules:

[1] Core modules: HTTP module, EVENT module and MAIL module;

[2] Basic modules: HTTP Access module, HTTP FastCGI module, HTTP Proxy module and HTTP Rewrite module;

[3] Third-party modules: HTTP Upstream Request Hash module, Notice module and HTTP Access Key module.

The modules of Nginx are divided into the following three categories in terms of function:

[1] Handlers. This kind of module directly processes the request, and performs operations such as outputting content and modifying headers information. Generally, there can only be one handler module;

[2] Filters. This kind of module mainly modifies the content output by the handler modules, which Nginx then sends out;

[3] Proxies (proxy modules). These are modules such as the HTTP Upstream module of Nginx. They mainly interact with back-end services, such as FastCGI, to provide service proxying and load balancing.

16, Load balancing configuration

[blog link]: https://blog.csdn.net/zhengzhaoyang122/article/details/94287448

17, Virtual host configuration

[blog link]: https://blog.csdn.net/zhengzhaoyang122/article/details/93793377

18, Nginx common commands

[1] Start nginx:

[root@LinuxServer sbin]# /usr/local/nginx/sbin/nginx -c /usr/local/nginx/conf/nginx.conf

[2] Stop nginx: nginx -s stop or nginx -s quit;

[3] Reload the configuration: ./sbin/nginx -s reload or service nginx reload;

[4] Load a specified configuration file: -c uses the specified configuration file instead of nginx.conf in the conf directory;

[root@LinuxServer sbin]# /usr/local/nginx/sbin/nginx -c /usr/local/nginx/conf/nginx.conf

[5] View the nginx version: nginx -v;

[6] Check whether the configuration file is correct: nginx -t;

[7] Display help information: nginx -h;

19, Please explain what the C10K problem is

The C10K problem refers to the inability to handle the network sockets of a large number of clients (10,000) at the same time.

20, How does Nginx handle Http requests

[1] First, when Nginx starts, it parses the configuration file to obtain the ports and IP addresses to listen on, and then initializes the listening socket in the master process (create the socket, set options such as address reuse, bind it to the specified IP address and port, and then listen).

[2] Then it calls fork (an existing process can call the fork function to create a new process, which is called a child process) to create multiple child processes.

[3] After that, the child processes compete to accept new connections. At this point, clients can initiate connections to Nginx. When a client completes the three-way handshake and establishes a connection with Nginx, one child process accepts successfully, obtains the socket of the established connection, and creates Nginx's wrapper for the connection, the ngx_connection_t structure.

[4] Next, it sets the read and write event handlers and adds the read and write events in order to exchange data with the client. The rest of this logic is covered under the question "How does Nginx achieve high concurrency?".

[5] Finally, Nginx or the client actively closes the connection. At that point, the connection's life ends.

21, The difference between FastCGI and CGI

FastCGI is a scalable, high-speed interface for communication between an HTTP server and a dynamic scripting language. Most popular HTTP servers support FastCGI, including Apache, Nginx and lighttpd. FastCGI is also supported by many scripting languages, including PHP.

FastCGI was developed as an improvement on CGI. The main drawback of the traditional CGI interface is poor performance: every time the HTTP server encounters a dynamic program, it has to start the script parser afresh to do the parsing and then return the result to the HTTP server. This is almost unusable under highly concurrent access. In addition, the traditional CGI interface has poor security and is rarely used today.

The FastCGI interface uses a C/S architecture, which separates the HTTP server from the script parsing server and runs one or more script parsing daemons on the script parsing server. Whenever the HTTP server encounters a dynamic program, it hands it directly to a FastCGI process for execution and then returns the result to the browser. This lets the HTTP server concentrate on static requests or on relaying the results of the dynamic script server to the client, which greatly improves the performance of the whole application.

[1] CGI: depending on the requested content, the web server forks a new process to run an external C program (or Perl script...). This process returns the processed data to the web server, the web server sends the content to the user, and the forked process exits. If the user requests that dynamic script again, the web server forks a new process again, over and over.

[2] FastCGI: when the web server receives a request, it does not fork a new process (the process was started when the web server started and does not exit). The web server passes the content directly to this process (via inter-process communication, although FastCGI can also use other methods such as TCP). The process handles the request, returns the result to the web server, and then waits for the next request instead of exiting.

To sum up, the difference lies in whether a process is forked anew for each request.

22, How does Nginx achieve high concurrency

Nginx runs one master process and multiple worker processes, and each worker process can handle many requests. Every incoming request is handled by a worker process, but not from start to finish in one go. The worker processes it up to a point where blocking could occur, such as forwarding the request to the upstream (back-end) server and waiting for the reply. Instead of waiting, the worker goes on to process other requests. When the upstream server responds, an event is triggered, and the worker picks the request up again and continues. Given the nature of a web server's work, most of a request's lifetime is spent in network transmission, and relatively little time is actually spent on the server machine. That is the secret of achieving high concurrency with just a few processes. As @skoo puts it, a web server is a network-IO-intensive application, not a compute-intensive one.

23, Why not use multithreading

Apache: creates multiple processes or threads, and CPU and memory are allocated to each of them (threads are much lighter than processes, so the worker mode supports higher concurrency than prefork). Heavy concurrency drains server resources.

Nginx: handles requests asynchronously and without blocking in a single thread, using epoll (the administrator can configure the number of worker processes started by the master process). CPU and memory are not allocated per request, which saves a great deal of resources and avoids a great deal of CPU context switching. That is why Nginx supports higher concurrency.

24, Why is Nginx performance so high

Thanks to its event processing mechanism: an asynchronous, non-blocking event model based on epoll, which provides a queue so that ready events are processed in turn.

25, Design of memory pool

To avoid memory fragmentation, reduce the number of memory allocation requests made to the operating system and lower the development complexity of each module, Nginx uses a simple memory pool (memory is requested in bulk and released in bulk). For example, a memory pool is allocated for each HTTP request, and the entire pool is destroyed when the request ends.
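The idea of requesting memory in bulk and releasing it all at once can be illustrated with a toy pool in Python. This is a conceptual sketch only: the class name `RequestPool`, the 4096-byte block size and the rule that oversized requests are rejected are assumptions for the example, and Python's own memory management means this does not reproduce the performance characteristics of a C memory pool.

```python
class RequestPool:
    """Toy pool: take large blocks up front, hand out slices, free everything together."""

    def __init__(self, block_size=4096):
        self.block_size = block_size
        self.blocks = [bytearray(block_size)]  # memory obtained in large chunks
        self.offset = 0                        # next free byte in the newest block
        self.block_requests = 1                # how many large blocks were requested

    def alloc(self, size):
        if size > self.block_size:
            raise ValueError("request larger than one pool block")
        if self.offset + size > self.block_size:
            # The newest block is full: request one more large block.
            self.blocks.append(bytearray(self.block_size))
            self.offset = 0
            self.block_requests += 1
        start = self.offset
        self.offset += size
        return memoryview(self.blocks[-1])[start:start + size]

    def destroy(self):
        # No per-allocation free: the whole pool is dropped at once.
        self.blocks.clear()
        self.offset = 0


pool = RequestPool(block_size=4096)            # one pool per (hypothetical) request
chunks = [pool.alloc(100) for _ in range(50)]  # 50 small allocations
chunks[0][:5] = b"hello"
blocks_requested = pool.block_requests         # only 2 large blocks were needed
pool.destroy()                                 # end of request: release everything
```

Fifty small allocations cost only two large block requests, and modules using the pool never have to free individual allocations, which is the simplification the paragraph above describes.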

26, Platform independent code implementation

The core code is written independently of the operating system, while OS-related system calls are implemented separately for each operating system. This is what makes Nginx portable.

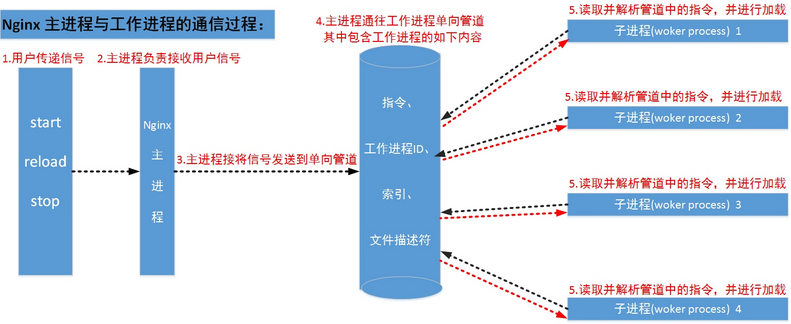

27, Communication between Nginx processes

Worker processes are created by the master process. After the master process starts, it builds a global table that holds all worker processes that have not exited. When the master creates a new worker process, it adds the worker to this table, establishes a one-way pipe and passes it to the worker. This pipe differs from an ordinary pipe: it is a one-way channel from the master process to the worker process, carrying the instruction the master wants to send, the worker process ID, the worker's index in the worker process table and any necessary file descriptor.

The master process communicates with the outside world through signals. When it receives a signal that needs handling, it sends the appropriate instruction to the relevant worker processes through the pipe. Every worker process is able to catch readable events on the pipe: when one occurs, the worker reads and parses the instruction from the pipe and then takes the corresponding action. This completes the interaction between the master process and the worker processes.
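The channel described above can be sketched as a small message passed from a "master" end to a "worker" end. Everything specific here is an assumption for illustration: the four-integer message layout, the command code `CMD_QUIT`, the function names, and the use of a socket pair within a single process (written to in one direction only) instead of real forked processes.

```python
import os
import select
import socket
import struct

# Hypothetical message layout: command, worker pid, slot in the worker table, fd.
MESSAGE = struct.Struct("iiii")
CMD_QUIT = 2  # hypothetical command code


def master_send(channel, command, pid, slot, fd=-1):
    """Master side: write one instruction into the channel."""
    channel.sendall(MESSAGE.pack(command, pid, slot, fd))


def worker_poll(channel, timeout=1.0):
    """Worker side: return the next instruction, or None if nothing is readable."""
    readable, _, _ = select.select([channel], [], [], timeout)
    if not readable:
        return None
    command, pid, slot, fd = MESSAGE.unpack(channel.recv(MESSAGE.size))
    return {"command": command, "pid": pid, "slot": slot, "fd": fd}


master_end, worker_end = socket.socketpair()

idle = worker_poll(worker_end, timeout=0)       # no readable event yet
master_send(master_end, CMD_QUIT, os.getpid(), slot=0)
instruction = worker_poll(worker_end)           # readable event: parse the instruction

master_end.close()
worker_end.close()
```

The worker only acts when the channel becomes readable, so waiting for instructions from the master fits into the same event loop that handles client connections.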

28, Event driven framework

Nginx event-driven framework: in an event-driven architecture, put simply, event sources generate events, one or more event collectors (epoll, etc.) collect and distribute them, and event handlers register the events they are interested in and "consume" them. In Nginx, event consumers are not processes or threads; they can only be modules, which the current process calls.

In traditional web servers (such as Apache), the so-called events are limited to establishing and closing TCP connections; the reads and writes in between are not event driven. Processing then degenerates into a batch mode where each operation is executed in sequence, so every request holds on to system resources from the moment the connection is established until it is closed, which wastes a great deal of memory, CPU and other resources. Such servers also treat a process or thread as the event consumer. This is an important difference between traditional web servers and Nginx: in the former, each event consumer monopolizes a process, while in the latter a consumer is only called briefly by the event distributor process.
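The collector/distributor/consumer pattern can be sketched with Python's standard `selectors` module, which picks epoll, kqueue or another mechanism depending on the platform. This is an illustrative sketch, not Nginx's implementation: the handler name `on_readable`, the connection labels and the use of socket pairs as stand-in client connections are all assumptions.

```python
import selectors
import socket

selector = selectors.DefaultSelector()  # the event collector
responses = []


def on_readable(conn, label):
    """Event consumer: called briefly by the dispatcher when data is ready."""
    responses.append((label, conn.recv(1024)))
    selector.unregister(conn)


# Three stand-in client connections, all served by this single thread.
pairs = [socket.socketpair() for _ in range(3)]
for i, (client, server_side) in enumerate(pairs):
    server_side.setblocking(False)
    # Register interest in read events and attach the consumer to call.
    selector.register(server_side, selectors.EVENT_READ,
                      data=(on_readable, f"conn-{i}"))

# Clients send in an arbitrary order.
for i in (2, 0, 1):
    pairs[i][0].sendall(f"hello from {i}".encode())

# Event loop: collect ready events and distribute them to their consumers.
for _ in range(10):  # bounded so the sketch always terminates
    if len(responses) == len(pairs):
        break
    for key, _mask in selector.select(timeout=1.0):
        handler, label = key.data
        handler(key.fileobj, label)

for client, server_side in pairs:
    client.close()
    server_side.close()
selector.close()
```

No connection owns a thread or process here. Each consumer runs only for the short time it takes to handle a ready event, which is the contrast with the one-process-per-connection model drawn above.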

29, Multistage asynchronous processing of requests

Multi-stage asynchronous processing of requests is only possible on top of the event-driven framework. The handling of a request is divided into multiple stages according to how events are triggered, and each stage can be triggered by the event collector and distributor (epoll, etc.). For example, an HTTP request can be divided into seven stages.
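One way to picture a request split into stages is a Python generator that pauses at each point where it must wait for an event. This is a simplified sketch: the stage and event names are hypothetical, only four stages are shown rather than the seven mentioned above, and a plain list stands in for the events delivered by the collector.

```python
def handle_request(log):
    """One request in stages; each yield names the event the next stage waits for."""
    log.append("connection accepted")
    yield "headers_readable"
    log.append("headers parsed")
    yield "upstream_readable"
    log.append("upstream response received")
    yield "client_writable"
    log.append("response sent")


def run(events, log):
    """Resume the request only when the event it is waiting for arrives."""
    request = handle_request(log)
    waiting_for = next(request)  # run the first stage until it needs an event
    for event in events:
        if event != waiting_for:
            continue  # unrelated event: the worker would serve other requests
        try:
            waiting_for = next(request)  # run the next stage
        except StopIteration:
            return True  # every stage has completed
    return False


log = []
finished = run(
    ["other_event", "headers_readable", "upstream_readable",
     "other_event", "client_writable"],
    log,
)
```

Between stages the request holds no thread of execution; it simply waits until the dispatcher delivers the event that triggers its next stage.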

30, In Nginx, please explain the difference between break and last in the Rewrite module

The official documentation defines them as follows:

[1] last: stops processing the current set of ngx_http_rewrite_module directives, and then searches for a new location matching the changed URI;

[2] break: stops processing the current set of ngx_http_rewrite_module directives;

[For example]: suppose a rewrite rule changes the requested URI to /test2.txt.

With break, rewrite processing stops and no further location matching takes place, so Nginx directly serves the request www.xxx.com/test2.txt. Because the file test2.txt does not exist, 404 is returned.

With last, Nginx goes on to search for a location that matches the rewritten /test2.txt request. This search can be repeated up to ten times; if the limit is reached without a result, 500 is returned. In this example, /test2.txt matches a later location block, so the code inside its curly brackets {} is executed, and 500 is returned here.

31, Please list the best uses of Nginx server

The best use of an Nginx server is to deploy dynamic HTTP content on the network, using SCGI and WSGI application servers and FastCGI handlers for scripts. It can also be used as a load balancer.

32, Optimization of Nginx in the nginx.conf configuration file

33, Parameter optimization of Nginx kernel

34, Optimize the performance of Nginx with TCMalloc

35, Compilation and installation process optimization

36, Event model supported by Nginx

Nginx supports the following connection processing methods (I/O multiplexing methods), which can be selected with the use directive.

[1] select: standard method. If there is no more efficient method on the current platform, it is the default at compile time. You can use the configure parameters --with-select_module and --without-select_module to enable or disable this module.

[2] poll: standard method. If there is no more efficient method on the current platform, it is the default at compile time. You can use the configure parameters --with-poll_module and --without-poll_module to enable or disable this module.

[3] kqueue: an efficient method for FreeBSD 4.1+, OpenBSD 2.9+, NetBSD 2.0 and MacOS X. Using kqueue on a dual-processor MacOS X system may cause a kernel crash.

[4] epoll: an efficient method for Linux kernel 2.6 and later. Some distributions, such as SuSE 8.2, have patches that add epoll support to the 2.4 kernel.

[5] rtsig: real-time signals, available on Linux kernel 2.2.19 and later. By default, no more than 1024 POSIX real-time (queued) signals can be pending in the whole system, which is inefficient for heavily loaded servers, so the queue size has to be increased through the kernel parameter /proc/sys/kernel/rtsig-max. However, since Linux kernel 2.6.6-mm2 this parameter is no longer used, and each process has its own signal queue, whose size can be adjusted with the RLIMIT_SIGPENDING parameter. When the queue overflows, nginx discards it and falls back to the poll method to process connections until things return to normal.

[6] /dev/poll: an efficient method for Solaris 7 11/99+, HP/UX 11.22+ (eventport), IRIX 6.5.15+ and Tru64 UNIX 5.1A+.

[7] eventport: an efficient method for Solaris 10. To prevent kernel crashes, a security patch needs to be installed.

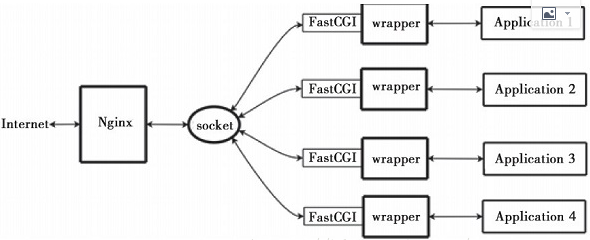

37, Operating principle of Nginx + FastCGI

Nginx does not support calling or parsing external programs directly. All external programs (including PHP) must be called through the FastCGI interface. Under Linux, the FastCGI interface is a socket (either a file socket or an IP socket).

Wrapper: to call a CGI program, a FastCGI wrapper is also needed (a wrapper can be understood as a program used to start another program). The wrapper is bound to a fixed socket, such as a port or a file socket. When Nginx sends a CGI request to this socket, the wrapper receives the request through the FastCGI interface and then forks (spawns) a new thread, which calls the interpreter or external program to process the script and read the returned data. The wrapper then passes the returned data back to Nginx over the fixed socket through the FastCGI interface, and finally Nginx sends the returned data (an html page or an image) to the client. This is the whole operation process of Nginx + FastCGI, as shown in the figure below.

38, Nginx multi process event model: asynchronous non blocking

Although Nginx uses multiple workers to process requests, each worker has only one main thread. One might ask: doesn't that severely limit concurrency, with only as many concurrent requests as there are workers? Where does the high concurrency come from? This is exactly where Nginx is clever. Nginx processes requests asynchronously and without blocking, so it can handle thousands of requests at the same time. The number of requests a worker process can handle concurrently is limited only by memory. Moreover, by design there is almost no synchronization-lock contention between worker processes when handling concurrent requests, and worker processes usually do not go to sleep. Therefore, when the number of Nginx processes equals the number of CPU cores (ideally with each worker process bound to a specific core), the cost of switching between processes is minimal.

Compare this with the common working mode of Apache (Apache also has an asynchronous, non-blocking version, but it is rarely used because it conflicts with some built-in modules). Each process handles only one request at a time, so when concurrency reaches the thousands, thousands of processes are handling requests simultaneously. That is a serious challenge for the operating system: the memory occupied by the processes is very large, and the CPU overhead of context switching between them is very high, so performance cannot scale, and all of this overhead is pure waste.