1, Please talk about your understanding of volatile

1. volatile is a lightweight synchronization mechanism provided by the Java virtual machine

It guarantees visibility, does not guarantee atomicity, and prohibits instruction reordering.

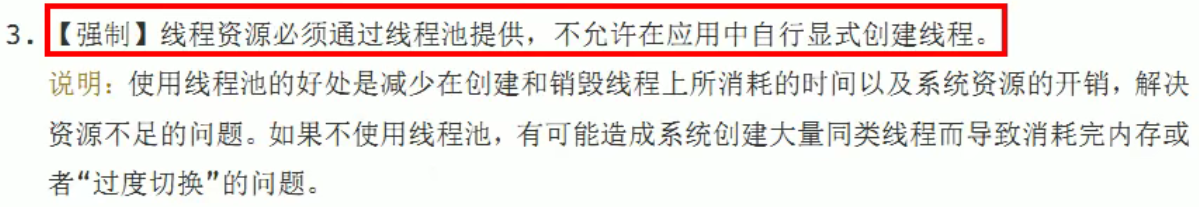

2. Talk about JMM (Java memory model)

The Java Memory Model (JMM) is an abstract concept and does not physically exist. It is a set of rules, or a specification, that defines how the variables of a program (instance fields, static fields, and the elements of array objects) are accessed.

JMM rules on synchronization:

(1) Before a thread releases a lock, it must flush the value of the shared variable back to main memory.

(2) Before a thread acquires a lock, it must read the latest value from main memory into its own working memory.

(3) Locking and unlocking must use the same lock.

In the JVM, the entity that runs a program is the thread. When each thread is created, the JVM creates a working memory for it (sometimes called stack space), which is that thread's private data area. The JMM specifies that all variables are stored in main memory, a shared area that all threads can access. However, a thread's operations on variables (reading, assigning, and so on) must be carried out in its working memory: the thread first copies the variable from main memory into its own working memory, operates on the copy, and writes it back to main memory when the operation completes. A thread cannot operate on variables in main memory directly. Each thread's working memory holds copies of variables from main memory, and different threads cannot access each other's working memory, so communication (passing values) between threads must go through main memory. The brief access process is shown in the following figure:

2.1 visibility

From the JMM description above we know that each thread copies a shared variable from main memory into its own working memory, operates on it there, and then writes it back to main memory. Suppose thread AAA has modified shared variable X but has not yet written it back to main memory, while thread BBB operates on the same variable X in main memory. At that moment, the value of X in AAA's working memory is not visible to BBB. This synchronization delay between working memory and main memory is what causes the visibility problem.

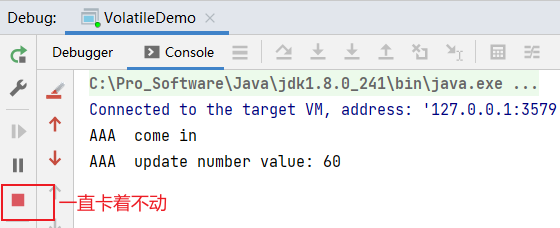

The following code does not use volatile:

```java
package com.jujuxiaer.interview.thread;

import java.util.concurrent.TimeUnit;

/**
 * @author Jujuxiaer
 */
class MyData {
    int number = 0;

    public void addTo60() {
        this.number = 60;
    }
}

public class VolatileDemo {
    public static void main(String[] args) {
        // Resource class
        MyData myData = new MyData();

        new Thread(() -> {
            System.out.println(Thread.currentThread().getName() + "\t come in");
            // Pause for a moment
            try {
                TimeUnit.SECONDS.sleep(3);
            } catch (InterruptedException e) {
                e.printStackTrace();
            }
            myData.addTo60();
            System.out.println(Thread.currentThread().getName() + "\t update number value: " + myData.number);
        }, "AAA").start();

        // The main thread stays in this loop until it sees that number is no longer 0
        while (myData.number == 0) {
        }

        System.out.println(Thread.currentThread().getName() + "\t mission is over");
    }
}
```

The program above stays stuck. Without volatile on the shared variable number, the workflow is as follows: thread AAA reads number=0 from main memory into its working memory, so its working copy holds number=0. After three seconds it changes the copy to 60, but because number is not volatile, nothing guarantees that number=60 is written back to main memory in time, or that other threads re-read it. The main thread then reaches while (number == 0) and keeps reading number as 0, so the while condition stays true forever and the program hangs.

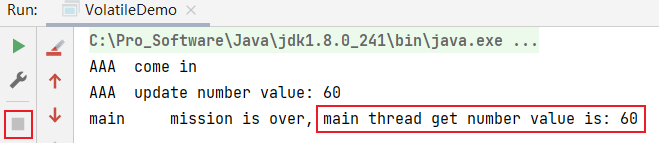

With the volatile keyword added to number:

```java
package com.jujuxiaer.interview.thread;

import java.util.concurrent.TimeUnit;

/**
 * @author Jujuxiaer
 */
class MyData {
    volatile int number = 0;

    public void addTo60() {
        this.number = 60;
    }
}

public class VolatileDemo {
    public static void main(String[] args) {
        // Resource class
        MyData myData = new MyData();

        new Thread(() -> {
            System.out.println(Thread.currentThread().getName() + "\t come in");
            // Pause for a moment
            try {
                TimeUnit.SECONDS.sleep(3);
            } catch (InterruptedException e) {
                e.printStackTrace();
            }
            myData.addTo60();
            System.out.println(Thread.currentThread().getName() + "\t update number value: " + myData.number);
        }, "AAA").start();

        // The main thread stays in this loop until it sees that number is no longer 0
        while (myData.number == 0) {
        }

        System.out.println(Thread.currentThread().getName() + "\t mission is over, main thread get number value is: " + myData.number);
    }
}
```

As the screenshot above shows, once volatile is added, the main thread's while loop notices that number has changed. The actual process is: thread AAA reads number=0 from main memory into its working memory and changes it to 60. Because number is volatile, AAA synchronizes number=60 back to main memory, so the value in main memory becomes 60. When the main thread's while loop reads number again, it reads 60 from main memory into its own working memory and leaves the loop.

2.2 atomicity

Even with the volatile keyword, atomicity is not guaranteed:

```java
package com.jujuxiaer.interview.thread;

import java.util.ArrayList;
import java.util.List;

class MyData2 {
    volatile int number = 0;

    public void addPlusPlus() {
        this.number++;
    }
}

public class VolatileDemo2 {
    public static void main(String[] args) throws InterruptedException {
        // Resource class
        MyData2 myData = new MyData2();
        List<Thread> threads = new ArrayList<>();

        for (int i = 0; i < 20; i++) {
            Thread t = new Thread(() -> {
                for (int j = 0; j < 1000; j++) {
                    myData.addPlusPlus();
                }
            }, String.valueOf(i));
            threads.add(t);
            t.start();
        }

        // Wait until all 20 threads above have finished. Polling Thread.activeCount() > 2
        // is fragile (the number of background threads depends on how the JVM is launched),
        // so join() is used instead.
        for (Thread t : threads) {
            t.join();
        }

        System.out.println(Thread.currentThread().getName() + "\t finally number value: " + myData.number);
    }
}
```

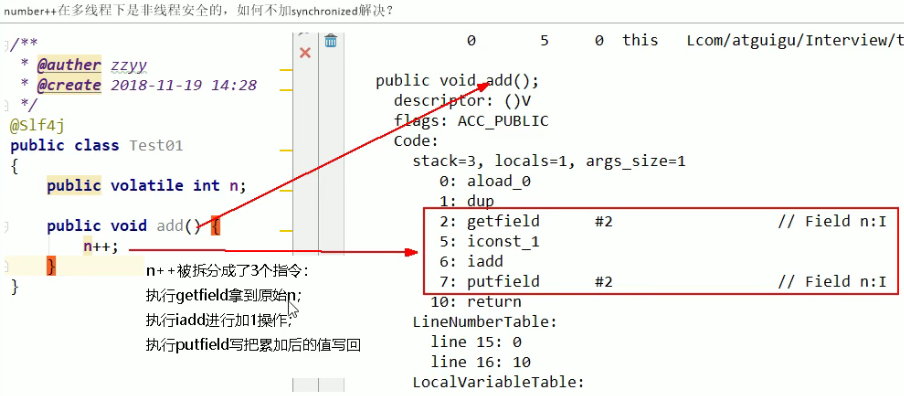

The result of the code above is usually less than 20000, which shows that this.number++ is not atomic: when several threads update number in main memory at the same time, some writes are lost. number++ is therefore not thread-safe under multithreading. The number++ operation actually consists of three steps, as shown in the figure below: read number into working memory, add 1, and write the result back to main memory (in bytecode: getfield, iadd, putfield).
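To make the lost write concrete, the sketch below writes the three steps of number++ out by hand and replays one bad interleaving of two threads in a single thread, so the outcome is deterministic. The class LostUpdateSimulation is a hypothetical teaching aid, not part of the original demo; the local variables copyA and copyB stand in for each thread's working-memory copy.

```java
// Replays one interleaving of two threads doing number++ with the steps written out.
// Runs in a single thread so that the lost update happens every time.
class LostUpdateSimulation {
    static int number = 0; // stands in for the shared field in main memory

    static int simulate() {
        number = 0;
        int copyA = number; // thread A, step 1: read 0 into its working copy
        int copyB = number; // thread B, step 1: also reads 0
        copyA = copyA + 1;  // thread A, step 2: add 1, copy is now 1
        copyB = copyB + 1;  // thread B, step 2: add 1, copy is now 1
        number = copyA;     // thread A, step 3: write 1 back
        number = copyB;     // thread B, step 3: write 1 back, overwriting A's write
        return number;      // two increments ran, but the result is 1
    }

    public static void main(String[] args) {
        System.out.println("two increments produced: " + simulate()); // prints 1
    }
}
```

volatile makes each individual read and write visible, but it cannot stop another thread from slipping in between step 1 and step 3.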

2.3 VolatileDemo code demonstrating visibility + atomicity

```java
package com.jujuxiaer.interview.thread;

import java.util.ArrayList;
import java.util.List;
import java.util.concurrent.atomic.AtomicInteger;

class MyData2 {
    volatile int number = 0;

    public void addPlusPlus() {
        this.number++;
    }

    AtomicInteger atomicInteger = new AtomicInteger();

    public void addMyAtomic() {
        this.atomicInteger.getAndIncrement();
    }
}

public class VolatileDemo2 {
    public static void main(String[] args) throws InterruptedException {
        // Resource class
        MyData2 myData = new MyData2();
        List<Thread> threads = new ArrayList<>();

        for (int i = 0; i < 20; i++) {
            Thread t = new Thread(() -> {
                for (int j = 0; j < 1000; j++) {
                    myData.addPlusPlus();
                    myData.addMyAtomic();
                }
            }, String.valueOf(i));
            threads.add(t);
            t.start();
        }

        // Wait until all 20 threads above have finished (join() instead of polling Thread.activeCount())
        for (Thread t : threads) {
            t.join();
        }

        System.out.println(Thread.currentThread().getName() + "\t int type, finally number value: " + myData.number);
        System.out.println(Thread.currentThread().getName() + "\t AtomicInteger type, finally number value: " + myData.atomicInteger);
    }
}
```

In the output of the code above, myData.number is usually less than 20000, while myData.atomicInteger is exactly 20000, because AtomicInteger.getAndIncrement() performs the ++ operation atomically.

2.4 ordering

When a computer executes a program, the compiler and processor often reorder instructions to improve performance. Reordering generally happens at three levels: compiler optimization reordering, instruction-level parallelism reordering, and memory-system reordering. The following points apply:

(1) In a single-threaded environment, the final result of the program must be the same as the result of executing the code in order.

(2) The processor must respect the data dependencies between instructions when reordering.

(3) In a multithreaded environment, threads execute alternately. Because of compiler reordering, it is uncertain whether the variables used by two threads stay consistent, and the result cannot be predicted.

Reordering example 1:

```java
public void mySort() {
    int x = 11; // Statement 1
    int y = 12; // Statement 2
    x = x + 5;  // Statement 3
    y = x * x;  // Statement 4
}
```

Possible statement orders after reordering include:

- 1234
- 2134
- 1324

Question: after reordering, can statement 4 become the first statement executed?

No. Reordering must respect the data dependencies between instructions. Statement 4 uses x, which is declared by statement 1 and updated by statement 3, so statement 4 cannot be moved ahead of them.

Reordering example 2:

Initially a = b = x = y = 0.

| Thread 1 | Thread 2 |
|---|---|
| x = a; | y = b; |
| b = 1; | a = 2; |

Executed in program order, one possible result is x = 0, y = 0.

If the compiler reorders this program, the following execution order may occur:

| Thread 1 | Thread 2 |
|---|---|
| b = 1; | a = 2; |
| x = a; | y = b; |

Result: x = 2, y = 1, which is impossible without reordering.
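The sketch below is a small litmus test for example 2. It runs the two-thread program many times and records which (x, y) pairs appear. The class ReorderLitmus and the trial counts are illustrative assumptions. Because the fields are plain (no volatile, no lock), the JMM allows the reordered result x=2, y=1. Whether you actually observe it depends on the JIT and the CPU. On many machines it may never appear in a short run, and its absence does not prove it cannot happen.

```java
import java.util.HashSet;
import java.util.Set;

class ReorderLitmus {
    static int a, b, x, y; // plain fields: no volatile, no locking

    // Runs example 2 `trials` times and returns every distinct "x=?, y=?" outcome seen.
    static Set<String> run(int trials) {
        Set<String> seen = new HashSet<>();
        for (int i = 0; i < trials; i++) {
            a = 0; b = 0; x = 0; y = 0;                      // reset before the threads start
            Thread t1 = new Thread(() -> { x = a; b = 1; }); // Thread 1 from the table
            Thread t2 = new Thread(() -> { y = b; a = 2; }); // Thread 2 from the table
            t1.start();
            t2.start();
            try {
                t1.join(); // join() makes the threads' writes visible to this thread
                t2.join();
            } catch (InterruptedException e) {
                Thread.currentThread().interrupt();
                throw new IllegalStateException(e);
            }
            seen.add("x=" + x + ", y=" + y);
        }
        return seen;
    }

    public static void main(String[] args) {
        // "x=2, y=1" can only appear if the instructions were reordered
        System.out.println(run(10_000));
    }
}
```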

Summary of how volatile prohibits instruction reordering:

volatile prohibits instruction-reordering optimizations, which prevents out-of-order execution from breaking programs in a multithreaded environment.

First, a concept: a memory barrier (also called a memory fence) is a CPU instruction with two functions:

(1) It guarantees the execution order of specific operations.

(2) It guarantees the memory visibility of certain variables (volatile uses this property to achieve memory visibility).

Both the compiler and the processor can reorder instructions. Inserting a memory barrier between instructions tells the compiler and the CPU that no instruction may be reordered with the barrier instruction. In other words, a memory barrier prohibits reordering between the instructions before it and the instructions after it. The other function of a memory barrier is to force the CPU caches to flush their data, so that a thread on any CPU can read the latest version of that data.

When a volatile variable is written, a store barrier instruction is added after the write. This flushes the shared variable from working memory back to main memory.

When a volatile variable is read, a load barrier instruction is added before the read, so that the shared variable is read from main memory.
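Here is a minimal sketch of these two rules working together. It assumes a hypothetical class VolatilePublication in which a plain field data is published through a volatile flag ready. In JMM terms, the volatile write of ready happens-before any read that sees true. A reader that observes ready == true is therefore guaranteed to also see data = 42, even though data itself is not volatile.

```java
// Publishes a plain field through a volatile flag.
class VolatilePublication {
    private int data = 0;                   // plain field, no volatile
    private volatile boolean ready = false; // the only volatile variable

    void writer() {
        data = 42;    // ordinary write
        ready = true; // volatile write: the write to data cannot be moved after it
    }

    int reader() {
        while (!ready) {
            // volatile read: re-reads ready from main memory on every iteration
        }
        return data;  // sees 42 once ready has been observed as true
    }

    static int demo() {
        VolatilePublication p = new VolatilePublication();
        int[] result = new int[1];
        Thread reader = new Thread(() -> result[0] = p.reader());
        reader.start();
        p.writer();
        try {
            reader.join(); // join() makes result[0] visible to this thread
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
            throw new IllegalStateException(e);
        }
        return result[0];
    }

    public static void main(String[] args) {
        System.out.println("reader saw data = " + demo()); // prints 42
    }
}
```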

How thread safety is guaranteed:

The visibility problem caused by the synchronization delay between working memory and main memory can be solved with either the synchronized or the volatile keyword. Both make a variable modified by one thread immediately visible to other threads.
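As a sketch of the synchronized option, the hypothetical class SyncData below rewrites the stuck loop from 2.1. number stays non-volatile, but every access goes through synchronized methods on the same object. By the JMM synchronization rules in section 2, releasing the lock flushes number to main memory, and acquiring the same lock reads the latest value. The main thread's loop therefore terminates.

```java
import java.util.concurrent.TimeUnit;

class SyncData {
    private int number = 0; // deliberately NOT volatile

    synchronized void addTo60() { number = 60; }    // unlock flushes number to main memory
    synchronized int getNumber() { return number; } // lock re-reads number from main memory

    static int demo() {
        SyncData data = new SyncData();
        new Thread(() -> {
            try {
                TimeUnit.MILLISECONDS.sleep(100); // shorter pause than the original 3 seconds
            } catch (InterruptedException e) {
                Thread.currentThread().interrupt();
            }
            data.addTo60();
        }, "AAA").start();

        while (data.getNumber() == 0) {
            // each call acquires the same lock, so AAA's update eventually becomes visible
        }
        return data.getNumber();
    }

    public static void main(String[] args) {
        System.out.println("main thread saw number = " + demo()); // prints 60
    }
}
```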

The visibility and ordering problems caused by instruction reordering can be solved with the volatile keyword, because the other function of volatile is to prohibit instruction-reordering optimizations.

3. Where have you used volatile?

3.1 DCL (double-checked locking) code for the singleton pattern

```java
public class SingletonDemo {
    private static SingletonDemo instance = null;

    // private, so that other classes must go through getInstance()
    private SingletonDemo() {
        System.out.println(Thread.currentThread().getName() + "\t Construction method SingletonDemo() executed");
    }

    public static SingletonDemo getInstance() {
        if (instance == null) {
            synchronized (SingletonDemo.class) {
                if (instance == null) {
                    instance = new SingletonDemo();
                }
            }
        }
        return instance;
    }

    public static void main(String[] args) {
        for (int i = 0; i < 1000; i++) {
            new Thread(() -> {
                SingletonDemo.getInstance();
            }, String.valueOf(i)).start();
        }
    }
}
```

3.2 volatile analysis for the singleton pattern

```java
private static volatile SingletonDemo instance = null;
```

The DCL (double-checked locking) mechanism in the code above does not by itself guarantee thread safety, because instructions can be reordered. The instance field of SingletonDemo therefore needs the volatile keyword to prohibit that reordering.

Consider the DCL process: when a thread performs the first check and reads a non-null instance, the object that instance refers to may not have finished initialization yet.

This is because instance = new SingletonDemo(); can be split into the following steps (pseudocode):

```
memory = allocate();  // 1. Allocate memory for the object
instance(memory);     // 2. Initialize the object
instance = memory;    // 3. Point instance at the allocated memory; instance is now non-null
```

Steps 2 and 3 have no data dependency on each other, and in a single thread the result is the same whether or not they are reordered, so the order 1, 3, 2 may occur after reordering:

```
memory = allocate();  // 1. Allocate memory for the object
instance = memory;    // 3. Point instance at the allocated memory; instance is non-null, but the object is not initialized yet
instance(memory);     // 2. Initialize the object
```

A thread that performs the first check between step 3 and step 2 sees a non-null instance and returns an object whose initialization has not finished.
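Combining 3.1 and 3.2, the sketch below is a complete DCL singleton with the volatile field. The class name SafeSingleton, the CONSTRUCTIONS counter, and the distinctInstancesSeen helper are hypothetical, added only so the result can be checked. Under the JMM, the writes made by the constructor cannot be reordered after the volatile write to instance, so no thread can observe a half-built object.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.Set;
import java.util.concurrent.ConcurrentHashMap;
import java.util.concurrent.atomic.AtomicInteger;

class SafeSingleton {
    static final AtomicInteger CONSTRUCTIONS = new AtomicInteger(); // demo only: counts constructor runs

    // volatile forbids publishing the reference before the constructor has finished
    private static volatile SafeSingleton instance = null;

    private SafeSingleton() {
        CONSTRUCTIONS.incrementAndGet();
    }

    static SafeSingleton getInstance() {
        if (instance == null) {                     // first check, without locking
            synchronized (SafeSingleton.class) {
                if (instance == null) {             // second check, under the lock
                    instance = new SafeSingleton(); // volatile write of a fully built object
                }
            }
        }
        return instance;
    }

    // Calls getInstance() from n threads and returns how many distinct objects they saw.
    static int distinctInstancesSeen(int n) {
        Set<SafeSingleton> seen = ConcurrentHashMap.newKeySet();
        List<Thread> threads = new ArrayList<>();
        for (int i = 0; i < n; i++) {
            Thread t = new Thread(() -> seen.add(getInstance()), String.valueOf(i));
            threads.add(t);
            t.start();
        }
        for (Thread t : threads) {
            try {
                t.join();
            } catch (InterruptedException e) {
                Thread.currentThread().interrupt();
                throw new IllegalStateException(e);
            }
        }
        return seen.size();
    }

    public static void main(String[] args) {
        System.out.println("distinct instances: " + distinctInstancesSeen(1000)); // prints 1
    }
}
```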

2, Do you know CAS?

1. Compare and swap

```java
import java.util.concurrent.atomic.AtomicInteger;

public class CASDemo {
    public static void main(String[] args) {
        AtomicInteger atomicInteger = new AtomicInteger(5);
        System.out.println(atomicInteger.compareAndSet(5, 2019) + "\t current data: " + atomicInteger.get());
        System.out.println(atomicInteger.compareAndSet(5, 2024) + "\t current data: " + atomicInteger.get());
    }
}
```

The output is:

```
true current data: 2019
false current data: 2019
```

Explanation: after new AtomicInteger(5), the value in main memory is 5. atomicInteger.compareAndSet(5, 2019) expects the value in main memory to be 5. Because it is 5, the value is changed to 2019 and written to main memory, so main memory now holds 2019. atomicInteger.compareAndSet(5, 2024) also expects 5 and compares that with the actual value in main memory, 2019. The two are not equal, so the update to 2024 fails, and the final value remains 2019.

2. What is the underlying principle of CAS? If you know it, talk about your understanding of Unsafe

2.1 atomicInteger.getAndIncrement()

Source code of atomicInteger.getAndIncrement() (AtomicInteger, JDK 8):

```java
/**
 * Atomically increments by one the current value.
 *
 * @return the previous value
 */
public final int getAndIncrement() {
    // valueOffset: the memory offset of the value field within the AtomicInteger object
    return unsafe.getAndAddInt(this, valueOffset, 1);
}
```

2.2 Unsafe

(1) The Unsafe class is the core class behind CAS. Java methods cannot access the underlying system directly and must go through native methods. Unsafe acts as a back door through which the data at specific memory locations can be manipulated directly. Unsafe lives in the sun.misc package, and its methods can operate on memory directly, much like C pointers. CAS operations in Java rely on the methods of the Unsafe class.

Note that the key methods of Unsafe, such as compareAndSwapInt and getIntVolatile, are native: they call directly into the underlying system to perform the operation. (Some helpers, such as getAndAddInt in JDK 8, are ordinary Java loops built on top of those native methods.)

(2) The variable valueOffset is the offset of the value field in memory, relative to the start of the object. Unsafe reads and writes data by this memory offset.


(3) The field value is declared volatile, which guarantees memory visibility across threads.

2.3 what is CAS

The full name of CAS is compare-and-swap, a CPU concurrency primitive. It checks whether the value at a memory location equals an expected value and, if so, changes it to a new value. The whole process is atomic. In Java, the CAS primitive is exposed through the methods of the sun.misc.Unsafe class. When you call a CAS method of Unsafe, the JVM emits the corresponding CAS assembly instruction. This feature depends entirely on the hardware, and it is how atomic operations are implemented. To repeat: CAS is a system primitive, a term from the operating-system domain. It consists of several instructions that together perform one function, and a primitive must execute continuously without being interrupted. In other words, CAS is an atomic CPU instruction and does not cause the so-called data-inconsistency problem.

2.3.1 unsafe.getAndAddInt()

- var1: the AtomicInteger object itself
- var2: the memory offset of the value field in that object (valueOffset)
- var4: the amount to add
- var5: the real value in main memory, read through var1 and var2

The method compares the object's current value in memory with var5. If they are the same, it updates the value to var5 + var4 and the CAS returns true. If they differ, it reads the value again and compares again, until the update succeeds. getAndAddInt then returns var5, the value before the update.
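sun.misc.Unsafe is not meant for application code. In JDK 8, Unsafe.getUnsafe() throws a SecurityException when called from ordinary application classes. The sketch below therefore swaps in the public VarHandle API (Java 9+) to reproduce the same getAndAddInt loop on a field of its own. The class MyAtomicInt is hypothetical, and each line is mapped to var1 through var5 in the comments.

```java
import java.lang.invoke.MethodHandles;
import java.lang.invoke.VarHandle;

class MyAtomicInt {
    private volatile int value; // like AtomicInteger.value: volatile for visibility

    // Plays the role of (var1, var2): a handle to the "value" field of MyAtomicInt
    private static final VarHandle VALUE;

    static {
        try {
            VALUE = MethodHandles.lookup().findVarHandle(MyAtomicInt.class, "value", int.class);
        } catch (ReflectiveOperationException e) {
            throw new ExceptionInInitializerError(e);
        }
    }

    MyAtomicInt(int initial) {
        value = initial;
    }

    int get() {
        return value;
    }

    // Same shape as Unsafe.getAndAddInt(var1, var2, var4); delta plays the role of var4
    int getAndAdd(int delta) {
        int current; // plays the role of var5
        do {
            current = (int) VALUE.getVolatile(this);                    // like getIntVolatile(var1, var2)
        } while (!VALUE.compareAndSet(this, current, current + delta)); // like compareAndSwapInt(...)
        return current; // the value before the update
    }

    int getAndIncrement() {
        return getAndAdd(1);
    }
}
```

If another thread changes value between the read and the compareAndSet, the CAS fails and the loop simply reads again. This is exactly the retry that the thread A / thread B example below walks through.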

Example:

Suppose that thread A and thread B execute getAndAddInt at the same time (each running on a different CPU).

- The original value of value in AtomicInteger is 5, that is, main memory holds 5. According to the JMM, threads A and B each hold a copy of value, equal to 5, in their working memory.

- Thread A reads 5 through getIntVolatile(var1, var2), and is then suspended.

- Thread B also reads 5 through getIntVolatile(var1, var2). Thread B is not suspended: it executes compareAndSwapInt, finds that the memory value is also 5, successfully changes it to 6, and finishes.

- Thread A then resumes and executes compareAndSwapInt. It finds that the value it holds (5) differs from the value in main memory (6), which means another thread modified it first. Thread A's update fails, and it has to read the value again.

- Thread A reads the value again. Because value is volatile, thread A always sees modifications made by other threads. Thread A then runs compareAndSwapInt again, repeating the compare-and-swap until it succeeds.

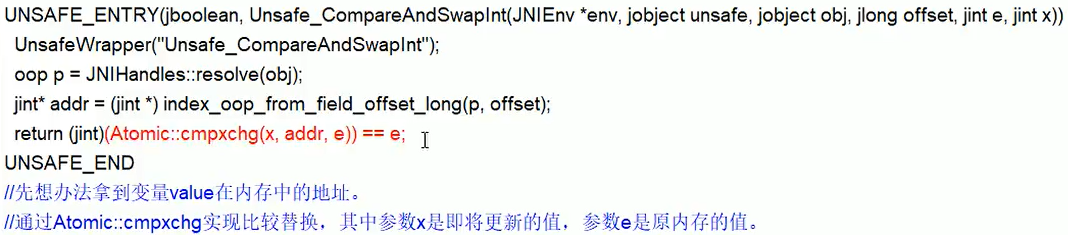

2.3.2 underlying assembly

compareAndSwapInt in the Unsafe class is a native method; in HotSpot its implementation is in unsafe.cpp.

2.3.3 brief summary

CAS: compare the value in the current working memory with the value in main memory. If they are the same, perform the specified operation; otherwise, keep comparing until the values in main memory and working memory are consistent.

CAS application: CAS has three operands: the memory value V, the old expected value A, and the new value B. If and only if the expected value A equals the memory value V, V is changed to B. Otherwise nothing is done, and the retry loop tries again.
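The three operands V, A, and B can be modeled in a few lines. The class SimulatedCas below is a hypothetical teaching model: it uses synchronized only to stand in for the atomicity that real hardware CAS provides with a single CPU instruction. The model is lock-based, whereas real CAS is lock-free. incrementAndGet shows the retry loop ("continue the cycle") built on top of it.

```java
// A model of CAS semantics: memory value V, expected value A, new value B.
// synchronized stands in for hardware atomicity; a real CAS is one CPU instruction, not a lock.
class SimulatedCas {
    private int value; // V

    SimulatedCas(int initial) {
        value = initial;
    }

    synchronized int get() {
        return value;
    }

    // Writes B and returns true only if V == A; otherwise leaves V unchanged and returns false.
    synchronized boolean compareAndSwap(int expectedA, int newB) {
        if (value == expectedA) {
            value = newB;
            return true;
        }
        return false;
    }

    // The spin loop: read, try to swap, and retry until the CAS succeeds.
    int incrementAndGet() {
        while (true) {
            int a = get();
            if (compareAndSwap(a, a + 1)) {
                return a + 1;
            }
        }
    }
}
```

Replaying the CASDemo from section 1 against this model gives the same results: compareAndSwap(5, 2019) returns true, compareAndSwap(5, 2024) then returns false, and get() returns 2019.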

3. Disadvantages of CAS

(1) Long spin times can be very costly.

As the getAndAddInt method in the figure above shows, there is a do-while loop: if the CAS fails, it keeps retrying. If the CAS fails for a long time, this can put a heavy load on the CPU.

(2) Only operations on a single shared variable can be made atomic.

For a single shared variable, a CAS loop can guarantee atomicity. For an operation on several shared variables, a CAS loop cannot guarantee atomicity, and a lock can be used instead.

(3) The ABA problem.

3, Talk about the ABA problem of the atomic class AtomicInteger. Do you know about atomic reference updates?

1. How the ABA problem arises

The CAS algorithm reads data from memory at one moment and compares and swaps it at a later moment. Within that time gap, the data may change.

For example, thread 1 and thread 2 both read value A from memory location V. Thread 2 performs some operations that change the value to B, and then changes the data at V back to A. Thread 1 now performs its CAS, finds that memory still holds A, and succeeds.

Although thread 1's CAS succeeds, that does not mean the process was free of problems.
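The interleaving just described can be replayed deterministically in a single thread. The sketch below is hypothetical (class AbaReplay). It runs the same steps first against a plain AtomicReference, where thread 1's CAS succeeds, and then against an AtomicStampedReference, where the version number exposes the change and the CAS fails. Both classes compare references with ==. The sketch uses string literals, which are interned, so "A" is always the same object. The ABADemo below relies on the same property for Integer: 100 and 101 lie inside the Integer cache (-128 to 127), so autoboxing returns the same object each time.

```java
import java.util.concurrent.atomic.AtomicReference;
import java.util.concurrent.atomic.AtomicStampedReference;

class AbaReplay {
    // Plain AtomicReference: thread 1's CAS cannot tell that A -> B -> A happened.
    static boolean plainCasSucceeds() {
        AtomicReference<String> v = new AtomicReference<>("A");
        String seenByThread1 = v.get();             // thread 1 reads A, then is delayed
        v.compareAndSet("A", "B");                  // thread 2: A -> B
        v.compareAndSet("B", "A");                  // thread 2: B -> A
        return v.compareAndSet(seenByThread1, "C"); // thread 1: succeeds, ABA goes unnoticed
    }

    // With a stamp (version number), the same interleaving makes thread 1's CAS fail.
    static boolean stampedCasSucceeds() {
        AtomicStampedReference<String> v = new AtomicStampedReference<>("A", 1);
        String seenRef = v.getReference();
        int seenStamp = v.getStamp();                                   // thread 1 remembers version 1
        v.compareAndSet("A", "B", 1, 2);                                // thread 2: A -> B, version 2
        v.compareAndSet("B", "A", 2, 3);                                // thread 2: B -> A, version 3
        return v.compareAndSet(seenRef, "C", seenStamp, seenStamp + 1); // version 1 != 3, so it fails
    }

    public static void main(String[] args) {
        System.out.println("plain CAS succeeded:   " + plainCasSucceeds());   // true
        System.out.println("stamped CAS succeeded: " + stampedCasSucceeds()); // false
    }
}
```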

2. Atomic reference

```java
import java.util.concurrent.atomic.AtomicReference;

class User {
    String userName;
    int age;

    public User(String userName, int age) {
        this.userName = userName;
        this.age = age;
    }

    public String getUserName() {
        return userName;
    }

    public void setUserName(String userName) {
        this.userName = userName;
    }

    public int getAge() {
        return age;
    }

    public void setAge(int age) {
        this.age = age;
    }

    @Override
    public String toString() {
        return "User{" +
                "userName='" + userName + '\'' +
                ", age=" + age +
                '}';
    }
}

public class AtomicReferenceDemo {
    public static void main(String[] args) {
        User zhangsan = new User("zhangsan", 22);
        User lisi = new User("lisi", 24);
        AtomicReference<User> atomicReference = new AtomicReference<>();
        atomicReference.set(zhangsan);
        System.out.println(atomicReference.compareAndSet(zhangsan, lisi) + "\t " + atomicReference.get().toString());
        System.out.println(atomicReference.compareAndSet(zhangsan, lisi) + "\t " + atomicReference.get().toString());
    }
}
```

The output is:

```
true User{userName='lisi', age=24}
false User{userName='lisi', age=24}
```

3. Timestamp (version-stamped) atomic reference: AtomicStampedReference

```java
import java.util.concurrent.TimeUnit;
import java.util.concurrent.atomic.AtomicReference;
import java.util.concurrent.atomic.AtomicStampedReference;

/**
 * @author Jujuxiaer
 */
public class ABADemo {
    // 100 and 101 are inside the Integer cache (-128..127), so autoboxing yields the same
    // objects that compareAndSet compares with ==
    static AtomicReference<Integer> atomicReference = new AtomicReference<>(100);
    static AtomicStampedReference<Integer> atomicStampedReference = new AtomicStampedReference<>(100, 1);

    public static void main(String[] args) {
        System.out.println("==============Here is ABA Problem generation==============");
        new Thread(() -> {
            atomicReference.compareAndSet(100, 101);
            atomicReference.compareAndSet(101, 100);
        }, "t1").start();

        new Thread(() -> {
            try {
                TimeUnit.SECONDS.sleep(1);
            } catch (InterruptedException e) {
                e.printStackTrace();
            }
            System.out.println(atomicReference.compareAndSet(100, 2019) + "\t" + atomicReference.get());
        }, "t2").start();

        try {
            TimeUnit.SECONDS.sleep(3);
        } catch (InterruptedException e) {
            e.printStackTrace();
        }

        System.out.println("==============Here is ABA Problem solving==============");
        new Thread(() -> {
            int stamp = atomicStampedReference.getStamp();
            System.out.println(Thread.currentThread().getName() + "\t The version number obtained for the first time is" + stamp);
            // Pause for 1 second so that the t4 thread below also reads version number 1
            try {
                TimeUnit.SECONDS.sleep(1);
            } catch (InterruptedException e) {
                e.printStackTrace();
            }
            atomicStampedReference.compareAndSet(100, 101, stamp, stamp + 1);
            stamp = atomicStampedReference.getStamp();
            System.out.println(Thread.currentThread().getName() + "\t The version number obtained for the second time is" + stamp);
            atomicStampedReference.compareAndSet(101, 100, stamp, stamp + 1);
            System.out.println(Thread.currentThread().getName() + "\t The version number obtained for the third time is" + atomicStampedReference.getStamp());
        }, "t3").start();

        new Thread(() -> {
            int stamp2 = atomicStampedReference.getStamp();
            System.out.println(Thread.currentThread().getName() + "\t The version number obtained for the first time is" + stamp2);
            // Pause for 3 seconds so that the t3 thread above completes one ABA round
            try {
                TimeUnit.SECONDS.sleep(3);
            } catch (InterruptedException e) {
                e.printStackTrace();
            }
            boolean isSuccess = atomicStampedReference.compareAndSet(100, 2019, stamp2, stamp2 + 1);
            stamp2 = atomicStampedReference.getStamp();
            System.out.println(Thread.currentThread().getName() + "\t Modified successfully:" + isSuccess + " Current latest version number:" + stamp2);
            System.out.println(Thread.currentThread().getName() + "\t Current actual latest value:" + atomicStampedReference.getReference());
        }, "t4").start();
    }
}
```

4, We know that ArrayList is not thread-safe. Please write an unsafe example and give a solution

```java
import java.util.ArrayList;
import java.util.List;
import java.util.UUID;

public class ContainerNotSafeDemo {
    public static void main(String[] args) {
        List<String> list = new ArrayList<>();
        for (int i = 0; i < 30; i++) {
            new Thread(() -> {
                list.add(UUID.randomUUID().toString().substring(0, 8));
                System.out.println(list);
            }, String.valueOf(i)).start();
        }
    }
}
```

The code above may throw java.util.ConcurrentModificationException.

Cause:

Concurrent, contending modification. Think of a sign-in sheet: while one person is writing on it, another person grabs it, so the data becomes inconsistent and a concurrent modification exception is thrown. Technically, println(list) iterates over the list while other threads are adding to it, and ArrayList's fail-fast iterator throws the exception when it detects the modification.

Solutions:

1. Replace it with new Vector<>();

2. Replace it with Collections.synchronizedList(new ArrayList<>());

3. Replace it with new CopyOnWriteArrayList<>();
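A sketch of the list demo with solution 3 applied. The class ContainerSafeDemo and its run method are hypothetical names. The threads are joined instead of being left running, so the final size can be checked: every add() is kept, and println iterates over a snapshot of the array, so no ConcurrentModificationException is thrown.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.UUID;
import java.util.concurrent.CopyOnWriteArrayList;

class ContainerSafeDemo {
    static int run(int threads) {
        List<String> list = new CopyOnWriteArrayList<>(); // solution 3
        List<Thread> started = new ArrayList<>();
        for (int i = 0; i < threads; i++) {
            Thread t = new Thread(() -> {
                list.add(UUID.randomUUID().toString().substring(0, 8));
                System.out.println(list); // iterates over a snapshot, never throws CME
            }, String.valueOf(i));
            started.add(t);
            t.start();
        }
        for (Thread t : started) {
            try {
                t.join();
            } catch (InterruptedException e) {
                Thread.currentThread().interrupt();
                throw new IllegalStateException(e);
            }
        }
        return list.size();
    }

    public static void main(String[] args) {
        System.out.println("final size: " + run(30)); // 30: no add() is lost
    }
}
```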

CopyOnWriteArrayList source code (add method, JDK 8):

```java
/**
 * Appends the specified element to the end of this list.
 *
 * @param e element to be appended to this list
 * @return {@code true} (as specified by {@link Collection#add})
 */
public boolean add(E e) {
    final ReentrantLock lock = this.lock;
    lock.lock();
    try {
        Object[] elements = getArray();
        int len = elements.length;
        Object[] newElements = Arrays.copyOf(elements, len + 1);
        newElements[len] = e;
        setArray(newElements);
        return true;
    } finally {
        lock.unlock();
    }
}
```

A CopyOnWrite container is a copy-on-write container. When an element is added, it is not added directly to the current container's Object[]. Instead, the current Object[] is first copied into a new Object[] newElements, the element is added to newElements, and then the container's reference is pointed at the new array with setArray(newElements). The advantage is that a CopyOnWrite container can be read concurrently without locking, because no element is ever added to the array that readers are currently using. CopyOnWrite therefore also embodies the idea of separating reads from writes: reads and writes work on different containers.
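To make the idea concrete, here is a stripped-down copy-on-write list. MiniCopyOnWriteList is hypothetical and supports only add, get, and size. It follows the add() source shown above. Writers take a ReentrantLock and publish a new array through a volatile field. Readers take no lock and simply read whichever array is current. This is a sketch, not the JDK class.

```java
import java.util.Arrays;
import java.util.concurrent.locks.ReentrantLock;

class MiniCopyOnWriteList<E> {
    private final ReentrantLock lock = new ReentrantLock(); // serializes writers
    private volatile Object[] array = new Object[0];        // readers always see the latest published array

    public boolean add(E e) {
        lock.lock();
        try {
            Object[] elements = array;
            Object[] newElements = Arrays.copyOf(elements, elements.length + 1); // copy
            newElements[elements.length] = e;                                    // write into the copy
            array = newElements;                                                 // publish, like setArray
            return true;
        } finally {
            lock.unlock();
        }
    }

    @SuppressWarnings("unchecked")
    public E get(int index) {
        return (E) array[index]; // no lock: reads the current snapshot
    }

    public int size() {
        return array.length;
    }

    // Returns the current array; later add() calls never modify it.
    Object[] snapshot() {
        return array;
    }
}
```

The trade-off follows directly from the code: every add() copies the whole array, so this design suits read-mostly data and becomes expensive for frequent writes to large lists.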

```java
import java.util.HashSet;
import java.util.Set;
import java.util.UUID;

public class ContainerNotSafeDemo {
    public static void main(String[] args) {
        Set<String> set = new HashSet<>();
        for (int i = 0; i < 50; i++) {
            new Thread(() -> {
                set.add(UUID.randomUUID().toString().substring(0, 8));
                System.out.println(set);
            }, String.valueOf(i)).start();
        }
    }
}
```

The code above may throw java.util.ConcurrentModificationException.

Cause:

The same as for ArrayList: concurrent, contending modification while another thread iterates over the set to print it.

Solutions:

1. Replace it with Set<String> set = Collections.synchronizedSet(new HashSet<>());

2. Replace it with CopyOnWriteArraySet<String> set = new CopyOnWriteArraySet<>(new HashSet<>());

```java
import java.util.HashMap;
import java.util.Map;
import java.util.UUID;

public class ContainerNotSafeDemo {
    public static void main(String[] args) {
        Map<String, String> map = new HashMap<>();
        for (int i = 0; i < 50; i++) {
            new Thread(() -> {
                map.put(Thread.currentThread().getName(), UUID.randomUUID().toString().substring(0, 8));
                System.out.println(map);
            }, String.valueOf(i)).start();
        }
    }
}
```

The code above may throw java.util.ConcurrentModificationException.

Cause:

The same as for ArrayList: concurrent, contending modification while another thread iterates over the map to print it.

Solutions:

1. Replace it with Map<String, String> map = Collections.synchronizedMap(new HashMap<>());

2. Replace it with Map<String, String> map = new ConcurrentHashMap<>();
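A sketch of the Set and Map demos with the concurrent replacements (CopyOnWriteArraySet and ConcurrentHashMap), joined so the sizes can be checked. SetMapSafeDemo and its helper methods are hypothetical names. Both containers iterate without throwing ConcurrentModificationException: CopyOnWriteArraySet iterates over a snapshot, and ConcurrentHashMap's iterators are weakly consistent.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.Map;
import java.util.Set;
import java.util.UUID;
import java.util.concurrent.ConcurrentHashMap;
import java.util.concurrent.CopyOnWriteArraySet;

class SetMapSafeDemo {
    // Starts n threads (named "0".."n-1") running the task and waits for all of them.
    static void runThreads(int n, Runnable task) {
        List<Thread> threads = new ArrayList<>();
        for (int i = 0; i < n; i++) {
            Thread t = new Thread(task, String.valueOf(i));
            threads.add(t);
            t.start();
        }
        for (Thread t : threads) {
            try {
                t.join();
            } catch (InterruptedException e) {
                Thread.currentThread().interrupt();
                throw new IllegalStateException(e);
            }
        }
    }

    static int setSize(int n) {
        Set<String> set = new CopyOnWriteArraySet<>();
        runThreads(n, () -> {
            set.add(UUID.randomUUID().toString().substring(0, 8));
            System.out.println(set); // snapshot iteration
        });
        return set.size();
    }

    static int mapSize(int n) {
        Map<String, String> map = new ConcurrentHashMap<>();
        runThreads(n, () -> {
            map.put(Thread.currentThread().getName(), UUID.randomUUID().toString().substring(0, 8));
            System.out.println(map); // weakly consistent iteration
        });
        return map.size(); // one entry per thread name
    }

    public static void main(String[] args) {
        System.out.println("set size: " + setSize(50) + ", map size: " + mapSize(50));
    }
}
```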

5, Fair lock / unfair lock / reentrant lock / recursive lock / spin lock: what is your understanding? Please write a spin lock

1. Fair lock and unfair lock

1.1 what is it

Fair lock: multiple threads acquire the lock in the order in which they requested it, like queuing for a meal: first come, first served.

Unfair lock: threads do not necessarily acquire the lock in the order in which they requested it. A thread that requests later may acquire the lock before a thread that requested earlier. Under high concurrency, this can cause priority inversion or starvation.

1.2 difference between the two

When you create a ReentrantLock from the java.util.concurrent (JUC) package, you can pass a boolean to the constructor to get a fair or an unfair lock. The default is unfair.

About the difference between the two:

Fair lock: fair, as the name says. In a concurrent environment, a thread that wants the lock first checks the wait queue maintained by the lock. If the queue is empty, or the current thread is first in the queue, it takes the lock. Otherwise it joins the wait queue and is later taken from it in FIFO order.

Unfair lock: blunt. A thread tries to grab the lock directly as soon as it arrives. If that attempt fails, it falls back to a fair-lock-like approach (queuing).

1.3 digression

For Java's ReentrantLock, the constructor specifies whether the lock is fair, and the default is unfair. The advantage of an unfair lock is that its throughput is higher than that of a fair lock. synchronized is also an unfair lock.
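A short sketch of choosing fairness through the constructor. FairnessDemo is a hypothetical class. isFair() is the ReentrantLock method that reports which mode a lock was created with. The lock/try/finally shape at the end is the usual way to use either kind of lock.

```java
import java.util.concurrent.locks.ReentrantLock;

class FairnessDemo {
    public static void main(String[] args) {
        ReentrantLock defaultLock = new ReentrantLock();  // no-arg constructor: unfair
        ReentrantLock fairLock = new ReentrantLock(true); // true requests a fair lock
        System.out.println("default lock fair? " + defaultLock.isFair()); // false
        System.out.println("fair lock fair?    " + fairLock.isFair());    // true

        fairLock.lock(); // under contention, a fair lock favors the longest-waiting thread
        try {
            System.out.println(Thread.currentThread().getName() + " holds the fair lock");
        } finally {
            fairLock.unlock(); // always release in finally
        }
    }
}
```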

2. Reentrant lock (also known as recursive lock)

2.1 what is it

It means that after a thread acquires a lock in an outer function, inner (for example, recursive) functions called by the same thread can still acquire that lock. In other words, when a thread that holds the lock in an outer method enters an inner method that needs the same lock, it acquires the lock automatically.

2.2 ReentrantLock and synchronized are typical reentrant locks

2.3 The main purpose of a reentrant lock is to avoid deadlock (otherwise a thread would block forever waiting for a lock it already holds)

2.4 ReentrantLockDemo

```java
class Phone {
    public synchronized void sendSMS() {
        System.out.println(Thread.currentThread().getName() + "\t invoked sendSMS");
        sendEmail();
    }

    public synchronized void sendEmail() {
        System.out.println(Thread.currentThread().getName() + "\t invoked sendEmail");
    }
}

public class ReentrantLockDemo {
    public static void main(String[] args) {
        Phone phone = new Phone();

        new Thread(() -> {
            phone.sendSMS();
        }, "t1").start();

        new Thread(() -> {
            phone.sendSMS();
        }, "t2").start();
    }
}
```

Output of the code above (t1 and t2 may run in either order, but each thread's two lines always stay together):

```
t1 invoked sendSMS
t1 invoked sendEmail
t2 invoked sendSMS
t2 invoked sendEmail
```

Explanation:

When thread t1 acquires the lock in sendSMS() and then enters the inner method sendEmail(), it acquires the same lock for that inner method automatically.

```java
package com.jujuxiaer.interview.thread;

import java.util.concurrent.locks.Lock;
import java.util.concurrent.locks.ReentrantLock;

class Phone implements Runnable {
    Lock lock = new ReentrantLock();

    @Override
    public void run() {
        sendSMS();
    }

    public void sendSMS() {
        lock.lock();
        try {
            System.out.println(Thread.currentThread().getName() + "\t invoked sendSMS");
            sendEmail();
        } finally {
            lock.unlock();
        }
    }

    public void sendEmail() {
        lock.lock();
        try {
            System.out.println(Thread.currentThread().getName() + "\t invoked sendEmail");
        } finally {
            lock.unlock();
        }
    }
}

public class ReentrantLockDemo {
    public static void main(String[] args) {
        Phone phone = new Phone();
        Thread t1 = new Thread(phone, "t1");
        Thread t2 = new Thread(phone, "t2");
        t1.start();
        t2.start();
    }
}
```

Output of the code above (again, t1 and t2 may run in either order):

```
t1 invoked sendSMS
t1 invoked sendEmail
t2 invoked sendSMS
t2 invoked sendEmail
```

Explanation:

public void sendSMS() {

lock.lock();

try {

// A thread can enter any code block guarded by a lock it already owns; here that inner block is sendEmail()

System.out.println(Thread.currentThread().getName() + "\t invoked sendSMS");

sendEmail();

} finally {

lock.unlock();

}

}

Note: every lock.lock() call must be matched by exactly one lock.unlock() call. Locking twice is therefore also allowed, as long as the lock is released twice:

public void sendEmail() {

lock.lock();

lock.lock();

try {

System.out.println(Thread.currentThread().getName() + "\t invoked sendEmail");

} finally {

lock.unlock();

lock.unlock();

}

}
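The matching rule can be checked directly with ReentrantLock.getHoldCount(), which reports how many times the current thread has acquired the lock. Below is a minimal sketch; the class HoldCountDemo and its run() method are illustrative names, not part of the original demo. It shows that each lock() increments the hold count, and that while one unlock() is still missing, another thread's tryLock() fails.

```java
import java.util.concurrent.locks.ReentrantLock;

public class HoldCountDemo {
    // Returns {hold count after 2 lock(), after 1 unlock(), other thread acquired (1/0), after 2 unlock()}
    static int[] run() {
        ReentrantLock lock = new ReentrantLock();
        int[] result = new int[4];
        lock.lock();
        lock.lock();                     // re-entry by the same thread succeeds
        result[0] = lock.getHoldCount(); // 2
        lock.unlock();
        result[1] = lock.getHoldCount(); // 1: the lock is still held

        // While the hold count is still 1, another thread cannot acquire the lock
        boolean[] acquired = new boolean[1];
        Thread other = new Thread(() -> {
            acquired[0] = lock.tryLock();
            if (acquired[0]) {
                lock.unlock();
            }
        });
        other.start();
        try {
            other.join();
        } catch (InterruptedException e) {
            throw new IllegalStateException(e);
        }
        result[2] = acquired[0] ? 1 : 0;

        lock.unlock();
        result[3] = lock.getHoldCount(); // 0: now fully released
        return result;
    }

    public static void main(String[] args) {
        int[] r = run();
        System.out.println("after lock() x2:   " + r[0]);
        System.out.println("after unlock() x1: " + r[1]);
        System.out.println("other thread got the lock while held: " + (r[2] == 1));
        System.out.println("after unlock() x2: " + r[3]);
    }
}
```

If the second unlock() were forgotten, the hold count would stay at 1 and every other thread would block on lock() forever, which is why the counts must match.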

3. Spin lock

It means that a thread trying to acquire the lock does not block immediately, but keeps trying to acquire it in a loop. The advantage is that this avoids the cost of thread context switching; the disadvantage is that the loop keeps consuming CPU.

package com.jujuxiaer.interview.thread;

import java.util.concurrent.TimeUnit;

import java.util.concurrent.atomic.AtomicReference;

/**

* @author Jujuxiaer

*/

public class SpinLockDemo {

// Holds the thread that currently owns the lock (null means unlocked)

AtomicReference<Thread> atomicReference = new AtomicReference<>();

public void myLock() {

Thread thread = Thread.currentThread();

System.out.println(thread.getName() + "\t come in");

while (!atomicReference.compareAndSet(null, thread)) {

// Spin: keep retrying the CAS until the owner slot is free (null)

}

}

public void myUnLock() {

Thread thread = Thread.currentThread();

atomicReference.compareAndSet(thread,null);

System.out.println(thread.getName() + "\t invoked myUnLock()");

}

public static void main(String[] args) {

SpinLockDemo spinLockDemo = new SpinLockDemo();

new Thread(() -> {

spinLockDemo.myLock();

try {

TimeUnit.SECONDS.sleep(5);

} catch (InterruptedException e) {

e.printStackTrace();

}

spinLockDemo.myUnLock();

}, "AA").start();

try {

TimeUnit.SECONDS.sleep(1);

} catch (InterruptedException e) {

e.printStackTrace();

}

new Thread(() -> {

spinLockDemo.myLock();

try {

TimeUnit.SECONDS.sleep(1);

} catch (InterruptedException e) {

e.printStackTrace();

}

spinLockDemo.myUnLock();

}, "BB").start();

}

}

Execution results:

AA come in

BB come in

AA invoked myUnLock()

BB invoked myUnLock()
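The output above only shows the order of the messages; it doesn't prove that the spin lock actually excludes other threads. Here is a minimal sketch under a few assumptions: the class name SpinLock and the counter check are illustrative, Thread.onSpinWait() requires Java 9 or later, and unlock() throws if called by a thread that doesn't own the lock (the original myUnLock() silently does nothing in that case). Several threads increment a plain int under the lock; if mutual exclusion holds, no increments are lost.

```java
import java.util.concurrent.atomic.AtomicReference;

public class SpinLock {
    // Thread that currently owns the lock, or null when the lock is free
    private final AtomicReference<Thread> owner = new AtomicReference<>();

    public void lock() {
        Thread current = Thread.currentThread();
        // Busy-wait (spin) instead of blocking until the CAS from null succeeds
        while (!owner.compareAndSet(null, current)) {
            Thread.onSpinWait(); // Java 9+: hint to the CPU that this is a spin loop
        }
    }

    public void unlock() {
        // Only the owning thread may release the lock
        if (!owner.compareAndSet(Thread.currentThread(), null)) {
            throw new IllegalMonitorStateException("current thread does not hold the lock");
        }
    }

    private static int counter = 0; // deliberately not atomic

    // Starts `threads` threads that each increment `counter` `perThread` times under the spin lock
    static int countWithLock(int threads, int perThread) {
        SpinLock lock = new SpinLock();
        counter = 0;
        Thread[] workers = new Thread[threads];
        for (int i = 0; i < threads; i++) {
            workers[i] = new Thread(() -> {
                for (int j = 0; j < perThread; j++) {
                    lock.lock();
                    try {
                        counter++;
                    } finally {
                        lock.unlock();
                    }
                }
            });
            workers[i].start();
        }
        for (Thread w : workers) {
            try {
                w.join();
            } catch (InterruptedException e) {
                throw new IllegalStateException(e);
            }
        }
        return counter;
    }

    public static void main(String[] args) {
        System.out.println(countWithLock(4, 10_000)); // 40000: no increment is lost
    }
}
```

Because the waiting threads burn CPU while spinning, this kind of lock only pays off when the critical section is very short, which matches the trade-off described above.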

4. Exclusive lock / shared lock

Exclusive lock: a lock that can only be held by one thread at a time. Both ReentrantLock and synchronized are exclusive locks.

Shared lock: a lock that can be held by multiple threads at the same time.

For ReentrantReadWriteLock, the read lock is a shared lock and the write lock is an exclusive lock. Because the read lock is shared, concurrent reads are very efficient, while read-write, write-read and write-write are mutually exclusive.

package com.jujuxiaer.interview.thread;

import java.util.HashMap;

import java.util.Map;

import java.util.concurrent.TimeUnit;

/**

* @author Jujuxiaer

*/

public class ReadWriteLockDemo {

public static void main(String[] args) {

MyCache myCache = new MyCache();

for(int i = 0; i < 5; i++) {

int finalI = i;

new Thread(() -> {

myCache.put(String.valueOf(finalI), finalI);

}, String.valueOf(i)).start();

}

for(int i = 0; i < 5; i++) {

int finalI1 = i;

new Thread(() -> {

myCache.get(String.valueOf(finalI1));

}, String.valueOf(i)).start();

}

}

}

class MyCache {

private volatile Map<String, Object> map = new HashMap<>();

public void put(String key, Object value) {

System.out.println(Thread.currentThread().getName() + "\t Writing:" + key);

try {

TimeUnit.SECONDS.sleep(1);

} catch (InterruptedException e) {

e.printStackTrace();

}

map.put(key, value);

System.out.println(Thread.currentThread().getName() + "\t Write complete");

}

public void get(String key) {

System.out.println(Thread.currentThread().getName() + "\t Reading:" + key);

try {

TimeUnit.SECONDS.sleep(1);

} catch (InterruptedException e) {

e.printStackTrace();

}

Object result = map.get(key);

System.out.println(Thread.currentThread().getName() + "\t Read complete: " + result);

}

}

Execution results:

0 Writing: 0

3 Writing: 3

4 Writing: 4

2 Writing: 2

1 Writing: 1

0 Reading: 0

1 Reading: 1

2 Reading: 2

3 Reading: 3

4 Reading: 4

0 Write complete

0 Read complete: 0

4 Read complete: null

2 Read complete: null

3 Read complete: null

1 Read complete: null

2 Write complete

3 Write complete

1 Write complete

4 Write complete

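The results show that write operations are interrupted midway: thread 0 starts writing, but threads 3, 4, 2 and 1 start writing, and all five threads start reading, before thread 0's write completes, so most of the reads return null.

After adding a read-write lock, the improved code is as follows: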

package com.jujuxiaer.interview.thread;

import java.util.HashMap;

import java.util.Map;

import java.util.concurrent.TimeUnit;

import java.util.concurrent.locks.ReentrantLock;

import java.util.concurrent.locks.ReentrantReadWriteLock;

/**

* @author Jujuxiaer

* Multiple threads can read a resource class at the same time without problems, so to maximize concurrency, reads of a shared resource should be allowed to run simultaneously.

* However, if a thread wants to write to the shared resource, no other thread should be able to read or write the resource at the same time.

* Summary:

* Read + read: can coexist

* Read + write: cannot coexist

* Write + write: cannot coexist

* <p>

* Write operation: atomic + exclusive. The whole operation must complete as one unit and cannot be split or interrupted midway

*/

public class ReadWriteLockDemo {

public static void main(String[] args) {

MyCache myCache = new MyCache();

for(int i = 0; i < 5; i++) {

int finalI = i;

new Thread(() -> {

myCache.put(String.valueOf(finalI), finalI);

}, String.valueOf(i)).start();

}

for(int i = 0; i < 5; i++) {

int finalI1 = i;

new Thread(() -> {

myCache.get(String.valueOf(finalI1));

}, String.valueOf(i)).start();

}

}

}

class MyCache {

private volatile Map<String, Object> map = new HashMap<>();

private ReentrantReadWriteLock rwLock = new ReentrantReadWriteLock();

public void put(String key, Object value) {

ReentrantReadWriteLock.WriteLock writeLock = rwLock.writeLock();

writeLock.lock();

try {

System.out.println(Thread.currentThread().getName() + "\t Writing:" + key);

try {

TimeUnit.SECONDS.sleep(1);

} catch (InterruptedException e) {

e.printStackTrace();

}

map.put(key, value);

System.out.println(Thread.currentThread().getName() + "\t Write complete");

}catch (Exception e){

e.printStackTrace();

}finally {

writeLock.unlock();

}

}

public void get(String key) {

ReentrantReadWriteLock.ReadLock readLock = rwLock.readLock();

readLock.lock();

try {

System.out.println(Thread.currentThread().getName() + "\t Reading");

try {

TimeUnit.SECONDS.sleep(1);

} catch (InterruptedException e) {

e.printStackTrace();

}

Object result = map.get(key);

System.out.println(Thread.currentThread().getName() + "\t Read complete: " + result);

}catch (Exception e){

e.printStackTrace();

}finally {

readLock.unlock();

}

}

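public void clearCacheMap() {

// Clearing modifies the map, so it needs the exclusive write lock

rwLock.writeLock().lock();

try {

map.clear();

} finally {

rwLock.writeLock().unlock();

}

}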

}

The results are as follows:

1 Writing: 1

1 Write complete

0 Writing: 0

0 Write complete

2 Writing: 2

2 Write complete

3 Writing: 3

3 Write complete

4 Writing: 4

4 Write complete

0 Reading

1 Reading

2 Reading

3 Reading

4 Reading

0 Read complete: 0

1 Read complete: 1

2 Read complete: 2

4 Read complete: 4

3 Read complete: 3

It can be observed that each write operation now runs to completion without interruption, while read operations still run concurrently.
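The three rules in the Javadoc above (read + read coexist, read + write and write + write do not) can also be checked without sleeps, using tryLock(). This is a minimal sketch; ReadWriteLockProbe and probe() are hypothetical names. The calling thread holds the read lock while a second thread tries to take the read lock and then the write lock.

```java
import java.util.concurrent.locks.ReentrantReadWriteLock;

public class ReadWriteLockProbe {
    // Returns {second reader could enter, writer could enter} while the caller holds the read lock
    static boolean[] probe() {
        ReentrantReadWriteLock rwLock = new ReentrantReadWriteLock();
        boolean[] result = new boolean[2];
        rwLock.readLock().lock(); // the calling thread holds the read lock
        try {
            Thread other = new Thread(() -> {
                // Read + read: a second reader may enter at the same time
                if (rwLock.readLock().tryLock()) {
                    result[0] = true;
                    rwLock.readLock().unlock();
                }
                // Read + write: a writer may not enter while any reader holds the lock
                if (rwLock.writeLock().tryLock()) {
                    result[1] = true;
                    rwLock.writeLock().unlock();
                }
            });
            other.start();
            other.join();
        } catch (InterruptedException e) {
            throw new IllegalStateException(e);
        } finally {
            rwLock.readLock().unlock();
        }
        return result;
    }

    public static void main(String[] args) {
        boolean[] r = probe();
        System.out.println("read + read allowed:  " + r[0]); // true
        System.out.println("read + write allowed: " + r[1]); // false
    }
}
```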

6, Have you used CountDownLatch/CyclicBarrier/Semaphore?

1. CountDownLatch

public class CountDownLatchDemo {

public static void main(String[] args) {

for (int i = 1; i <= 6; i++) {

new Thread(() -> {

System.out.println(Thread.currentThread().getName() + "\t finished evening self-study and left the classroom");

}, String.valueOf(i)).start();

}

System.out.println(Thread.currentThread().getName() + " The monitor finally closed the door and left");

}

}

The results are as follows:

1 finished evening self-study and left the classroom

5 finished evening self-study and left the classroom

4 finished evening self-study and left the classroom

main The monitor finally closed the door and left

3 finished evening self-study and left the classroom

2 finished evening self-study and left the classroom

6 finished evening self-study and left the classroom

The output shows that, without any coordination, there is no guarantee that the monitor (the main thread) closes the door only after all the students have left.

The improved code, using CountDownLatch, is as follows:

import java.util.concurrent.CountDownLatch;

/**

* @author Jujuxiaer

*/

public class CountDownLatchDemo {

public static void main(String[] args) throws InterruptedException {

CountDownLatch countDownLatch = new CountDownLatch(6);

for (int i = 1; i <= 6; i++) {

new Thread(() -> {

System.out.println(Thread.currentThread().getName() + "\t finished evening self-study and left the classroom");

countDownLatch.countDown();

}, String.valueOf(i)).start();

}

countDownLatch.await();

System.out.println(Thread.currentThread().getName() + " The monitor finally closed the door and left");

}

}

The results are as follows:

1 finished evening self-study and left the classroom

6 finished evening self-study and left the classroom

4 finished evening self-study and left the classroom

5 finished evening self-study and left the classroom

3 finished evening self-study and left the classroom

2 finished evening self-study and left the classroom

main The monitor finally closed the door and left

The output shows that the monitor now closes the door only after all the students have left: await() blocks the main thread until countDown() has been called six times and the count reaches zero.

import java.util.concurrent.CountDownLatch;

public class CountDownLatchDemo2 {

public static void main(String[] args) throws InterruptedException {

CountDownLatch countDownLatch = new CountDownLatch(6);

for (int i = 1; i <= 6; i++) {

new Thread(() -> {

System.out.println(Thread.currentThread().getName() + "\t state was destroyed");

countDownLatch.countDown();

}, CountryEnum.forEachCountryEnum(i).getRetMessage()).start();

}

countDownLatch.await();

System.out.println(Thread.currentThread().getName() + " Qin unified the world");

}

}

public enum CountryEnum {

ONE(1, "Qi"),

TWO(2, "Chu"),

THREE(3, "Yan"),

FOUR(4, "Han"),

FIVE(5, "Zhao"),

SIX(6, "Wei");

private Integer retCode;

private String retMessage;

CountryEnum(Integer retCode, String retMessage) {

this.retCode = retCode;

this.retMessage = retMessage;

}

public Integer getRetCode() {

return retCode;

}

public void setRetCode(Integer retCode) {

this.retCode = retCode;

}

public String getRetMessage() {

return retMessage;

}

public void setRetMessage(String retMessage) {

this.retMessage = retMessage;

}

public static CountryEnum forEachCountryEnum(int index) {

CountryEnum[] values = CountryEnum.values();

for (CountryEnum value : values) {

if (index == value.getRetCode()) {

return value;

}

}

return null;

}

}

The results are as follows:

Qi state was destroyed

Wei state was destroyed

Yan state was destroyed

Chu state was destroyed

Han state was destroyed

Zhao state was destroyed

main Qin unified the world
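Two more CountDownLatch details are worth knowing: await(long, TimeUnit) gives up and returns false if the count has not reached zero in time, and the count cannot be reset once it reaches zero (a reusable barrier is what CyclicBarrier, covered next, provides). The minimal sketch below illustrates the timed await; CountDownLatchTimeoutDemo and awaitWithTimeout() are illustrative names, and the 1-second timeout is arbitrary.

```java
import java.util.concurrent.CountDownLatch;
import java.util.concurrent.TimeUnit;

public class CountDownLatchTimeoutDemo {
    // Starts `workers` threads that each call countDown() once on a latch of `count`,
    // then waits at most 1 second for the count to reach zero
    static boolean awaitWithTimeout(int workers, int count) {
        CountDownLatch latch = new CountDownLatch(count);
        for (int i = 0; i < workers; i++) {
            new Thread(latch::countDown).start();
        }
        try {
            return latch.await(1, TimeUnit.SECONDS); // false if the count is still > 0 after 1 s
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
            return false;
        }
    }

    public static void main(String[] args) {
        System.out.println(awaitWithTimeout(6, 6)); // all six students left in time
        System.out.println(awaitWithTimeout(5, 6)); // one student never left: times out
    }
}
```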

2. CyclicBarrier

CyclicBarrier literally means a barrier that can be used cyclically (reused). It blocks a group of threads when they reach the barrier (also called a synchronization point); the barrier opens only when the last thread arrives, and then all the threads blocked at the barrier continue working. Threads wait at the barrier by calling CyclicBarrier's await() method.

import java.util.concurrent.BrokenBarrierException;

import java.util.concurrent.CyclicBarrier;

/**

* @author Jujuxiaer

*/

public class CyclicBarrierDemo {

public static void main(String[] args) {

CyclicBarrier cyclicBarrier = new CyclicBarrier(7, () -> {

System.out.println("=====Summon the Dragon======");

});

for (int i = 1; i <= 7; i++) {

int finalI = i;

new Thread(() -> {

System.out.println(Thread.currentThread().getName() + "\t collected dragon ball #" + finalI);

try {

cyclicBarrier.await();

} catch (InterruptedException e) {

e.printStackTrace();

} catch (BrokenBarrierException e) {

e.printStackTrace();

}

}, String.valueOf(i)).start();

}

}

}

The results are as follows:

1 collected dragon ball #1

7 collected dragon ball #7

5 collected dragon ball #5

4 collected dragon ball #4

3 collected dragon ball #3

2 collected dragon ball #2

6 collected dragon ball #6

=====Summon the Dragon======
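The dragon-ball demo trips the barrier only once, so it doesn't show the "cyclic" part of the name. In the minimal sketch below (CyclicBarrierReuseDemo and countTrips() are illustrative names), the same barrier is reused for several rounds. The barrier action, passed as the second constructor argument just like the "Summon the Dragon" lambda, runs once each time all parties arrive, and the barrier then resets itself automatically.

```java
import java.util.concurrent.BrokenBarrierException;
import java.util.concurrent.CyclicBarrier;
import java.util.concurrent.atomic.AtomicInteger;

public class CyclicBarrierReuseDemo {
    // `parties` threads each wait at the same barrier `rounds` times;
    // returns how many times the barrier action ran (once per trip)
    static int countTrips(int parties, int rounds) {
        AtomicInteger trips = new AtomicInteger();
        CyclicBarrier barrier = new CyclicBarrier(parties, trips::incrementAndGet);
        Thread[] threads = new Thread[parties];
        for (int i = 0; i < parties; i++) {
            threads[i] = new Thread(() -> {
                try {
                    for (int r = 0; r < rounds; r++) {
                        barrier.await(); // the barrier resets automatically after each trip
                    }
                } catch (InterruptedException | BrokenBarrierException e) {
                    throw new IllegalStateException(e);
                }
            });
            threads[i].start();
        }
        for (Thread t : threads) {
            try {
                t.join();
            } catch (InterruptedException e) {
                throw new IllegalStateException(e);
            }
        }
        return trips.get();
    }

    public static void main(String[] args) {
        System.out.println(countTrips(7, 3)); // 3: the same barrier served three rounds
    }
}
```

This is the key difference from CountDownLatch, whose count cannot be reset.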

3. Semaphore

Semaphores are mainly used for two purposes: mutually exclusive use of multiple shared resources, and controlling the number of concurrent threads.

import java.util.concurrent.Semaphore;

import java.util.concurrent.TimeUnit;

/**

* @author Jujuxiaer

*/

public class SemaphoreDemo {

public static void main(String[] args) {

// Simulate 3 parking spaces

Semaphore semaphore = new Semaphore(3);

for (int i = 1; i <= 6; i++) {

new Thread(() -> {

try {

semaphore.acquire();

} catch (InterruptedException e) {

// No permit was acquired, so there is nothing to release

e.printStackTrace();

return;

}

try {

System.out.println(Thread.currentThread().getName() +"\t Grab a parking space");

// Stay parked for 3 seconds

TimeUnit.SECONDS.sleep(3);

System.out.println(Thread.currentThread().getName() +"\t Leave the parking space after parking for 3 seconds");

} catch (InterruptedException e) {

e.printStackTrace();

} finally {

// Release only the permit this thread actually acquired

semaphore.release();

}

}, String.valueOf(i)).start();

}

}

}

The results are as follows:

1 Grab a parking space

2 Grab a parking space

3 Grab a parking space

2 Leave the parking space after parking for 3 seconds

3 Leave the parking space after parking for 3 seconds

4 Grab a parking space

5 Grab a parking space

1 Leave the parking space after parking for 3 seconds

6 Grab a parking space

4 Leave the parking space after parking for 3 seconds

6 Leave the parking space after parking for 3 seconds

5 Leave the parking space after parking for 3 seconds
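The second purpose mentioned above, limiting the number of concurrent threads, can be checked by counting how many threads are inside the guarded section at the same time. This is a minimal sketch; SemaphoreLimitDemo and maxConcurrent() are illustrative names, and the 50 ms sleep is an arbitrary stand-in for the 3-second parking time. acquire() is called before the try/finally that calls release(), so a thread interrupted while waiting never returns a permit it did not get.

```java
import java.util.concurrent.Semaphore;
import java.util.concurrent.TimeUnit;
import java.util.concurrent.atomic.AtomicInteger;

public class SemaphoreLimitDemo {
    // Runs `cars` threads competing for `spaces` permits and returns the largest
    // number of threads that were ever inside the guarded section at once
    static int maxConcurrent(int spaces, int cars) {
        Semaphore semaphore = new Semaphore(spaces);
        AtomicInteger inside = new AtomicInteger();
        AtomicInteger max = new AtomicInteger();
        Thread[] threads = new Thread[cars];
        for (int i = 0; i < cars; i++) {
            threads[i] = new Thread(() -> {
                try {
                    semaphore.acquire();
                } catch (InterruptedException e) {
                    Thread.currentThread().interrupt();
                    return; // no permit taken, nothing to release
                }
                try {
                    int now = inside.incrementAndGet();
                    max.accumulateAndGet(now, Math::max);
                    TimeUnit.MILLISECONDS.sleep(50); // "park" for a moment
                } catch (InterruptedException e) {
                    Thread.currentThread().interrupt();
                } finally {
                    inside.decrementAndGet();
                    semaphore.release();
                }
            });
            threads[i].start();
        }
        for (Thread t : threads) {
            try {
                t.join();
            } catch (InterruptedException e) {
                throw new IllegalStateException(e);
            }
        }
        return max.get();
    }

    public static void main(String[] args) {
        System.out.println("max cars parked at once: " + maxConcurrent(3, 6)); // never more than 3
    }
}
```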

7, Do you know blocking queues?

1. Queue + blocking queue

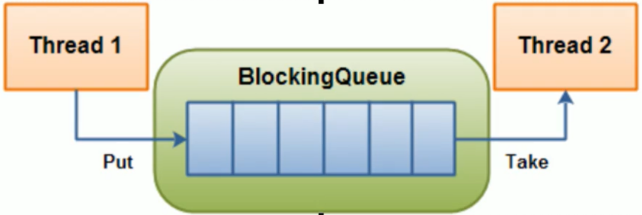

A blocking queue, as its name implies, is first of all a queue. Its role in a data structure is shown in the figure above:

Thread 1 adds elements to the blocking queue, while thread 2 removes elements from the blocking queue.

- When the blocking queue is empty, the operation of getting elements from the queue will be blocked.

- When the blocking queue is full, the operation of adding elements to the queue will be blocked.

- A thread trying to take elements from an empty blocking queue will be blocked until another thread inserts new elements into the queue. Similarly, a thread trying to add a new element to a full blocking queue will be blocked until another thread removes one or more elements from the queue or empties it completely.

2. Why? What are the benefits?

In multithreading, blocking means that in certain situations a thread is suspended; once the condition is met, the suspended thread is woken up automatically.

Why use BlockingQueue? The advantage is that we don't need to worry about when to block a thread or when to wake it up, because BlockingQueue takes care of all of this.

Before the java.util.concurrent package was released, every programmer had to control these details themselves in a multithreaded environment, while also balancing efficiency and thread safety, which added a great deal of complexity.

3. Core methods of BlockingQueue

| Method type | Throws exception | Special value | Blocks | Times out |
|---|---|---|---|---|
| Insert | add(e) | offer(e) | put(e) | offer(e, time, unit) |
| Remove | remove() | poll() | take() | poll(time, unit) |
| Inspect | element() | peek() | Not available | Not available |

Throws exception:

- When the blocking queue is full, adding an element with add(e) throws IllegalStateException: Queue full

- When the blocking queue is empty, removing an element with remove() throws NoSuchElementException

Special value:

- Insert: offer(e) returns true on success and false on failure (queue full).

- Remove: poll() returns the element taken from the queue, or null if there are no elements in the queue.

Blocks:

- When the blocking queue is full and a producer thread calls put(e), the queue blocks the producer until it can insert the element or the thread exits in response to an interrupt.

- When the blocking queue is empty and a consumer thread calls take(), the queue blocks the consumer until an element becomes available.

Times out:

- When the blocking queue is full, offer(e, time, unit) blocks the producer for at most the given time; if the element still cannot be inserted, it returns false and the producer moves on. Likewise, poll(time, unit) on an empty queue returns null after the timeout.
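The four columns of the table can be seen side by side on a queue of capacity 1. This is a minimal sketch; BlockingQueueApiDemo and run() are illustrative names, and the 100 ms timeouts are arbitrary.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.NoSuchElementException;
import java.util.concurrent.ArrayBlockingQueue;
import java.util.concurrent.BlockingQueue;
import java.util.concurrent.TimeUnit;

public class BlockingQueueApiDemo {
    // Exercises each column of the table on a queue of capacity 1 and records the outcomes
    static List<String> run() {
        BlockingQueue<String> q = new ArrayBlockingQueue<>(1);
        List<String> log = new ArrayList<>();

        // Throws exception (queue full)
        q.add("a");
        try {
            q.add("b");
        } catch (IllegalStateException e) {
            log.add("add on full: IllegalStateException");
        }

        // Special value
        log.add("offer on full: " + q.offer("b")); // false
        log.add("peek: " + q.peek());              // a (not removed)
        log.add("poll: " + q.poll());              // a (removed)
        log.add("poll on empty: " + q.poll());     // null

        // Throws exception (queue empty)
        try {
            q.remove();
        } catch (NoSuchElementException e) {
            log.add("remove on empty: NoSuchElementException");
        }
        try {
            q.element();
        } catch (NoSuchElementException e) {
            log.add("element on empty: NoSuchElementException");
        }

        // Blocks / times out
        try {
            q.put("c"); // there is room, so put() returns immediately
            log.add("timed offer on full: " + q.offer("d", 100, TimeUnit.MILLISECONDS)); // false
            log.add("take: " + q.take());                                               // c
            log.add("timed poll on empty: " + q.poll(100, TimeUnit.MILLISECONDS));      // null
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
        }
        return log;
    }

    public static void main(String[] args) {
        run().forEach(System.out::println);
    }
}
```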

4. Architecture overview and categories

4.1 Categories

- ArrayBlockingQueue: a bounded blocking queue backed by an array.

- LinkedBlockingQueue: a bounded blocking queue backed by a linked list (but its default capacity is Integer.MAX_VALUE).

- PriorityBlockingQueue: an unbounded blocking queue that supports priority ordering.

- DelayQueue: an unbounded blocking queue of delayed elements, implemented using a priority queue.

- SynchronousQueue: a blocking queue that does not store elements; each insert hands a single element directly to a consumer.

- LinkedTransferQueue: an unbounded blocking queue backed by a linked list.

- LinkedBlockingDeque: a double-ended blocking queue backed by a linked list.

SynchronousQueue has no capacity. Unlike other BlockingQueues, SynchronousQueue does not store elements: each put operation must wait for a corresponding take operation, otherwise no further elements can be added, and vice versa.
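The bounded and unbounded distinctions in the list above can be checked with remainingCapacity(). A minimal sketch (QueueCapacityDemo is an illustrative name) printing the remaining capacity of freshly created, empty queues:

```java
import java.util.concurrent.ArrayBlockingQueue;
import java.util.concurrent.BlockingQueue;
import java.util.concurrent.LinkedBlockingQueue;
import java.util.concurrent.PriorityBlockingQueue;
import java.util.concurrent.SynchronousQueue;

public class QueueCapacityDemo {
    // remainingCapacity() of an empty queue equals its total capacity
    static int capacityOf(BlockingQueue<Integer> queue) {
        return queue.remainingCapacity();
    }

    public static void main(String[] args) {
        System.out.println(capacityOf(new ArrayBlockingQueue<>(10)));  // 10: bounded, fixed size
        System.out.println(capacityOf(new LinkedBlockingQueue<>()));   // 2147483647: default Integer.MAX_VALUE
        System.out.println(capacityOf(new PriorityBlockingQueue<>())); // 2147483647: not capacity-constrained
        System.out.println(capacityOf(new SynchronousQueue<>()));      // 0: stores no elements
    }
}
```

The LinkedBlockingQueue line is the reason it is described as "bounded, but defaulting to Integer.MAX_VALUE": unless a capacity is passed, it is effectively unbounded.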

import java.util.concurrent.BlockingQueue;

import java.util.concurrent.SynchronousQueue;

import java.util.concurrent.TimeUnit;

/**

* @author Jujuxiaer

*/

public class SynchronousQueueDemo {

public static void main(String[] args) {

BlockingQueue<String> blockingQueue = new SynchronousQueue<>();

new Thread(() -> {

try {

System.out.println(Thread.currentThread().getName() + "\t put 1");

blockingQueue.put("1");

System.out.println(Thread.currentThread().getName() + "\t put 2");

blockingQueue.put("2");

System.out.println(Thread.currentThread().getName() + "\t put 3");

blockingQueue.put("3");

} catch (InterruptedException e) {

e.printStackTrace();

}

}, "AAA").start();

new Thread(() -> {

try {

try {

TimeUnit.SECONDS.sleep(2);

} catch (InterruptedException e) {

e.printStackTrace();

}

System.out.println(Thread.currentThread().getName() + "\t take " + blockingQueue.take());

try {

TimeUnit.SECONDS.sleep(2);

} catch (InterruptedException e) {

e.printStackTrace();

}

System.out.println(Thread.currentThread().getName() + "\t take " + blockingQueue.take());

try {

TimeUnit.SECONDS.sleep(2);

} catch (InterruptedException e) {

e.printStackTrace();

}

System.out.println(Thread.currentThread().getName() + "\t take " + blockingQueue.take());

} catch (InterruptedException e) {

e.printStackTrace();

}

}, "BBB").start();

}

}

The results are as follows:

AAA put 1

BBB take 1

AAA put 2

BBB take 2

AAA put 3

BBB take 3
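Because a SynchronousQueue stores nothing, a non-blocking offer() only succeeds if a consumer is already waiting in take(); otherwise it returns false at once. The minimal sketch below shows both cases; SynchronousQueueHandOffDemo and its methods are illustrative names, and the consumer is a daemon thread so a failed hand-off cannot keep the JVM alive.

```java
import java.util.concurrent.SynchronousQueue;
import java.util.concurrent.TimeUnit;

public class SynchronousQueueHandOffDemo {
    // With no consumer waiting, a non-blocking offer() has nowhere to put the element
    static boolean offerWithoutConsumer() {
        return new SynchronousQueue<String>().offer("x");
    }

    // With a consumer blocked in take(), a timed offer() hands the element over directly
    static String handOff() {
        SynchronousQueue<String> queue = new SynchronousQueue<>();
        String[] received = new String[1];
        Thread consumer = new Thread(() -> {
            try {
                received[0] = queue.take();
            } catch (InterruptedException e) {
                Thread.currentThread().interrupt();
            }
        });
        consumer.setDaemon(true); // never keeps the JVM alive if the hand-off fails
        consumer.start();
        try {
            // Waits up to 1 second for the consumer to arrive at take()
            if (!queue.offer("x", 1, TimeUnit.SECONDS)) {
                return null;
            }
            consumer.join();
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
            return null;
        }
        return received[0];
    }

    public static void main(String[] args) {
        System.out.println(offerWithoutConsumer());                // false
        System.out.println(handOff());                             // x
        System.out.println(new SynchronousQueue<String>().size()); // 0: it never holds elements
    }
}
```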

5. Where is it used?

5.1 Producer-consumer model

Traditional approach (Lock + Condition):

import java.util.concurrent.locks.Condition;

import java.util.concurrent.locks.Lock;

import java.util.concurrent.locks.ReentrantLock;

/**

* @author Jujuxiaer

* @date 2021-09-11 16:35

* Problem: a variable has an initial value of 0; two threads operate on it alternately, one adding 1 and the other subtracting 1, for 5 rounds

* 1. Threads operate on a resource class

* 2. Judge --> work --> notify

* 3. To prevent spurious wakeups, use while instead of if

*/

public class ProdConsumerTraditionDemo {

public static void main(String[] args) {

ShareData shareData = new ShareData();

new Thread(() -> {

for (int i = 1; i <= 5; i++) {

try {

shareData.increment();

} catch (InterruptedException e) {

e.printStackTrace();

}

}

}, "AAA").start();

new Thread(() -> {

for (int i = 1; i <= 5; i++) {

try {

shareData.decrement();

} catch (InterruptedException e) {

e.printStackTrace();

}

}

}, "BBB").start();

}

}

/**

* Resource class

*/

class ShareData {

private int number = 0;

private Lock lock = new ReentrantLock();

private Condition condition = lock.newCondition();

public void increment() throws InterruptedException {

lock.lock();

try {

// 1. Judgment

while (number != 0) {

// Waiting, unable to produce

condition.await();

}

// 2. Work

number++;

System.out.println(Thread.currentThread().getName() + "\t " + number);

// 3. Notification wake-up

condition.signalAll();

} catch (Exception e) {

e.printStackTrace();

} finally {

lock.unlock();

}

}

public void decrement() throws InterruptedException {

lock.lock();

try {

// 1. Judgment

while (number == 0) {

// Waiting, unable to consume

condition.await();

}

// 2. Work

number--;

System.out.println(Thread.currentThread().getName() + "\t " + number);

// 3. Notification wake-up

condition.signalAll();

} catch (Exception e) {

e.printStackTrace();

} finally {

lock.unlock();

}

}

}

Execution result of the above code:

AAA 1

BBB 0

AAA 1

BBB 0

AAA 1

BBB 0

AAA 1

BBB 0

AAA 1

BBB 0

5.1.1 What is the difference between synchronized and Lock? What are the benefits of using the new Lock? Give an example

1. Underlying implementation

synchronized is a keyword at the JVM level. It is compiled into monitorenter/monitorexit instructions and is implemented underneath with a monitor object (wait/notify and similar methods also depend on the monitor object, which is why wait/notify can only be called inside a synchronized block or method). Lock is an API-level interface in java.util.concurrent.locks, implemented by classes such as ReentrantLock.

2. Usage

synchronized does not require the user to release the lock manually: when the synchronized code finishes executing, the system automatically makes the thread release the lock. ReentrantLock requires the user to release the lock manually; if it is not released, this may lead to deadlock. lock() and unlock() should therefore be used together with a try/finally block.

3. Is waiting interruptible

Waiting for synchronized cannot be interrupted: a waiting thread keeps waiting until the holder either throws an exception or completes normally. Waiting for a ReentrantLock can be interrupted in two ways: first, by setting a timeout with tryLock(long timeout, TimeUnit unit); second, by acquiring the lock with lockInterruptibly(), in which case calling interrupt() on the waiting thread interrupts the wait.

4. Is locking fair

synchronized is a non-fair lock. ReentrantLock can be either: it is non-fair by default, and its constructor accepts a boolean, where true creates a fair lock and false a non-fair lock.

5. Lock binding multiple conditions

synchronized has no such capability. ReentrantLock can create several Condition objects from one lock and use them to wake up exactly the threads that need to be woken (grouped wake-up), rather than waking up one random thread or all threads as synchronized does with notify()/notifyAll().
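Points 3 and 4 above can be checked in a few lines. This is a minimal sketch (ReentrantLockFeaturesDemo and its methods are illustrative names): isFair() reports the fairness policy, tryLock(timeout, unit) gives up after the timeout, and a thread blocked in lockInterruptibly() leaves the wait with an InterruptedException when interrupt() is called on it. The 100 ms timeout is arbitrary.

```java
import java.util.concurrent.TimeUnit;
import java.util.concurrent.locks.ReentrantLock;

public class ReentrantLockFeaturesDemo {
    // Point 4: the no-argument constructor creates a non-fair lock; ReentrantLock(true) a fair one
    static boolean[] fairness() {
        return new boolean[] {new ReentrantLock().isFair(), new ReentrantLock(true).isFair()};
    }

    // Point 3: while the calling thread holds the lock, another thread
    // [0] gives up after a tryLock timeout, and [1] is interrupted inside lockInterruptibly()
    static boolean[] interruptibleWaits() {
        ReentrantLock lock = new ReentrantLock();
        boolean[] result = new boolean[2];
        lock.lock();
        try {
            Thread timed = new Thread(() -> {
                try {
                    if (lock.tryLock(100, TimeUnit.MILLISECONDS)) {
                        lock.unlock(); // not expected: the caller still holds the lock
                    } else {
                        result[0] = true; // timed out instead of waiting forever
                    }
                } catch (InterruptedException e) {
                    Thread.currentThread().interrupt();
                }
            });
            timed.start();
            timed.join();

            Thread waiting = new Thread(() -> {
                try {
                    lock.lockInterruptibly();
                    lock.unlock(); // not expected: the caller still holds the lock
                } catch (InterruptedException e) {
                    result[1] = true; // the wait was cut short by interrupt()
                }
            });
            waiting.start();
            waiting.interrupt();
            waiting.join();
        } catch (InterruptedException e) {
            throw new IllegalStateException(e);
        } finally {
            lock.unlock();
        }
        return result;
    }

    public static void main(String[] args) {
        boolean[] f = fairness();
        System.out.println("default fair: " + f[0] + ", ReentrantLock(true) fair: " + f[1]);
        boolean[] w = interruptibleWaits();
        System.out.println("tryLock timed out: " + w[0] + ", lockInterruptibly interrupted: " + w[1]);
    }
}
```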

Title:

Calling threads in a fixed order: start three threads A -> B -> C with the following requirements:

A prints 5 times, B prints 10 times and C prints 15 times; then A prints 5 times, B prints 10 times, C prints 15 times again... for 10 rounds.

import java.util.concurrent.locks.Condition;

import java.util.concurrent.locks.Lock;

import java.util.concurrent.locks.ReentrantLock;

/**

* @author Jujuxiaer

*/

public class SyncAndReentrantLockDemo {

public static void main(String[] args) {

ShareResource shareResource = new ShareResource();

new Thread(() -> {

for (int i = 0; i < 10; i++) {

shareResource.print5();

}

}, "A").start();

new Thread(() -> {

for (int i = 0; i < 10; i++) {

shareResource.print10();

}

}, "B").start();

new Thread(() -> {

for (int i = 0; i < 10; i++) {

shareResource.print15();

}

}, "C").start();

}

}

class ShareResource {

/**

* Flag bit, number is 1, indicating thread A; Number is 2, indicating thread B; Number is 3, indicating C thread;

*/

private int number = 1;

private Lock lock = new ReentrantLock();

private Condition conditionA = lock.newCondition();

private Condition conditionB = lock.newCondition();

private Condition conditionC = lock.newCondition();

// 1. Judgment

public void print5() {

lock.lock();

try {

while (number != 1) {

conditionA.await();

}

// 2. Work

for (int i = 1; i <= 5; i++) {

System.out.println(Thread.currentThread().getName()+"\t " + i);

}

// 3. Notice

number = 2;

conditionB.signal();

} catch (Exception e) {

e.printStackTrace();

} finally {

lock.unlock();

}

}

// 1. Judgment

public void print10() {

lock.lock();

try {

while (number != 2) {

conditionB.await();

}

// 2. Work

for (int i = 1; i <= 10; i++) {

System.out.println(Thread.currentThread().getName()+"\t " + i);

}

// 3. Notice

number = 3;

conditionC.signal();

} catch (Exception e) {

e.printStackTrace();

} finally {

lock.unlock();

}

}

// 1. Judgment

public void print15() {

lock.lock();

try {

while (number != 3) {

conditionC.await();

}

// 2. Work

for (int i = 1; i <= 15; i++) {

System.out.println(Thread.currentThread().getName()+"\t " + i);

}

// 3. Notice

number = 1;

conditionA.signal();

} catch (Exception e) {

e.printStackTrace();

} finally {

lock.unlock();

}

}

}

5.2 Thread pool

5.3 Message-oriented middleware

Producer-consumer model, blocking-queue version:

import java.util.concurrent.ArrayBlockingQueue;

import java.util.concurrent.BlockingQueue;

import java.util.concurrent.TimeUnit;

import java.util.concurrent.atomic.AtomicInteger;

/**

* @author Jujuxiaer

*/

public class ProdConsumerBlockingQueueDemo {

public static void main(String[] args) {

MyResource myResource = new MyResource(new ArrayBlockingQueue<>(10));

new Thread(() -> {

System.out.println(Thread.currentThread().getName() + "\t Production thread start");

try {

myResource.myProducer();

} catch (InterruptedException e) {

e.printStackTrace();

}

}, "Producer").start();

new Thread(() -> {

System.out.println(Thread.currentThread().getName() + "\t Production thread start");

try {

myResource.myConsumer();

} catch (InterruptedException e) {

e.printStackTrace();

}

}, "Consumer").start();

try {

TimeUnit.SECONDS.sleep(5);

} catch (InterruptedException e) {

e.printStackTrace();

}

System.out.println("5 seconds are up: the boss (main thread) stops and the activity ends");

myResource.stop();

}

}

class MyResource {

// Production and consumption are enabled by default

private volatile boolean flag = true;

private AtomicInteger atomicInteger = new AtomicInteger();

private BlockingQueue<Object> blockingQueue;

public MyResource(BlockingQueue<Object> blockingQueue) {

this.blockingQueue = blockingQueue;

System.out.println(blockingQueue.getClass().getName());

}

public void myProducer() throws InterruptedException {

String data;

boolean isOffered;

while (flag) {

data = String.valueOf(atomicInteger.incrementAndGet());

isOffered = blockingQueue.offer(data, 2L, TimeUnit.SECONDS);

if (isOffered) {

System.out.println(Thread.currentThread().getName() + "\t Insert queue data:" + data + "success");

} else {

System.out.println(Thread.currentThread().getName() + "\t Insert queue data:" + data + "fail");

}

TimeUnit.SECONDS.sleep(1);

}

System.out.println();

System.out.println(Thread.currentThread().getName() + "\t flag==false: the boss has stopped, so production is over.");

}

public void myConsumer() throws InterruptedException {

String result;

while (flag) {

result = (String) blockingQueue.poll(2L, TimeUnit.SECONDS);

if (result == null || "".equalsIgnoreCase(result)) {

flag = false;

System.out.println(Thread.currentThread().getName() + "\t If you don't get data for more than 2 seconds, quit consumption");

return;

}

System.out.println(Thread.currentThread().getName() + "\t Consumption queue succeeded. Data consumed:" + result);

}

}

public void stop() {

this.flag = false;

}

}

The above implementation results are as follows:

java.util.concurrent.ArrayBlockingQueue

Producer production thread started

Consumer production thread started

Producer inserted queue data: 1 succeeded

Consumer consumption queue succeeded. Data consumed: 1

Consumer consumption queue succeeded. Data consumed: 2

Producer inserted queue data: 2 succeeded

Consumer consumption queue succeeded. Data consumed: 3

Producer insert queue data: 3 successful

Producer insert queue data: 4 successful

Consumer consumption queue succeeded. Data consumed: 4

Producer insert queue data: 5 successful

Consumer consumption queue succeeded. Data consumed: 5

After 5 seconds, the main thread of the big boss stops and the activity ends

Producer's flag==false indicates that the big boss has stopped and the production action is over.

If the Consumer does not get data for more than 2 seconds, it will quit consumption

8, Have you used thread pools? Talk about your understanding of ThreadPoolExecutor

1. Why use a thread pool? Advantages

The main job of a thread pool is to control the number of running threads. Submitted tasks are placed in a queue and executed once threads are available. If the number of tasks exceeds the maximum number of threads, the excess tasks wait in the queue until other threads finish, and the freed threads then take tasks from the queue and execute them. Its main features are: thread reuse, control of the maximum concurrency, and thread management.

- Reduce resource consumption. Reduce the consumption caused by thread creation and destruction by reusing the created threads.

- Improve response speed. When the task arrives, the task can be executed immediately without waiting for the thread to be created.

- Improve thread manageability. Threads are scarce resources; creating them without limit not only consumes system resources but also reduces system stability. A thread pool allows threads to be allocated, tuned and monitored in a unified way.

2. How to use a thread pool

2.1 Architecture description

Java thread pools are implemented through the Executor framework, which involves Executor, Executors, ExecutorService and ThreadPoolExecutor.

2.2 coding implementation

Good to know:

- Executors.newScheduledThreadPool()

- Executors.newWorkStealingPool(int), new in Java 8; its no-argument variant newWorkStealingPool() uses the number of processors available on the current machine as its parallelism level.

Key contents:

-

Executors.newFixedThreadPool(int)

Implement long-term tasks, and the performance is much better

public static ExecutorService newFixedThreadPool(int nThreads) { return new ThreadPoolExecutor(nThreads, nThreads, 0L, TimeUnit.MILLISECONDS, new LinkedBlockingQueue<Runnable>()); }The main features are as follows:

-

Collect a fixed length thread pool to control the maximum concurrent number of threads. The exceeded threads will wait in the queue.

-

The values of corePoolSize and maximumPoolSize of the thread pool created by newFixedThreadPool are equal, and it is LinkedBlockingQueue

-

-

Executors.newSingleThreadExecutor()

One task, one task execution scenario

public static ExecutorService newSingleThreadExecutor() { return new FinalizableDelegatedExecutorService (new ThreadPoolExecutor(1, 1, 0L, TimeUnit.MILLISECONDS, new LinkedBlockingQueue<Runnable>())); }The main features are as follows:

- Create a singleton thread pool. It will only use a unique worker thread to execute tasks to ensure that all tasks are executed in the specified order.

- The newSingleThreadExecutor sets both corePoolSize and maximumPoolSize to 1 and uses the LinkedBlockingQueue

-

Executors.newCacheThreadPool()

Used for: executing many short-term asynchronous applets or servers with light load

public static ExecutorService newCachedThreadPool() { return new ThreadPoolExecutor(0, Integer.MAX_VALUE, 60L, TimeUnit.SECONDS, new SynchronousQueue<Runnable>()); }The main features are as follows:

- Creates a cacheable thread pool. If the pool has more threads than processing requires, idle threads are reclaimed flexibly; if no idle thread is available for reuse, a new thread is created.

- newCachedThreadPool sets corePoolSize to 0 and maximumPoolSize to Integer.MAX_VALUE and uses a SynchronousQueue: when a task arrives, it is handed to an idle thread or a new thread is created to run it, and a thread that stays idle for more than 60 seconds is destroyed.
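A sketch that reads back this configuration and observes idle-thread reclamation, assuming JDK 8 or later. To avoid waiting 60 seconds, the demo shortens the idle timeout with `setKeepAliveTime`; that shortening (100 ms) and the 1 second wait are assumptions made only for this example.

```java
import java.util.concurrent.Executors;
import java.util.concurrent.SynchronousQueue;
import java.util.concurrent.ThreadPoolExecutor;
import java.util.concurrent.TimeUnit;

class CachedPoolDemo {

    // Describes the configuration chosen by Executors.newCachedThreadPool().
    static String configuration() {
        ThreadPoolExecutor pool = (ThreadPoolExecutor) Executors.newCachedThreadPool();
        try {
            return "core=" + pool.getCorePoolSize()
                    + ", maxIsIntMax=" + (pool.getMaximumPoolSize() == Integer.MAX_VALUE)
                    + ", keepAlive=" + pool.getKeepAliveTime(TimeUnit.SECONDS) + "s"
                    + ", synchronousQueue=" + (pool.getQueue() instanceof SynchronousQueue);
        } finally {
            pool.shutdown();
        }
    }

    // Runs one task, then shortens keepAliveTime (demo only) so the idle worker is destroyed quickly.
    static int poolSizeAfterIdle() {
        ThreadPoolExecutor pool = (ThreadPoolExecutor) Executors.newCachedThreadPool();
        try {
            pool.submit(() -> { }).get();                      // creates one worker thread
            pool.setKeepAliveTime(100, TimeUnit.MILLISECONDS); // instead of the default 60 s
            Thread.sleep(1000);                                // wait well past the idle timeout
            return pool.getPoolSize();                         // idle worker has been destroyed
        } catch (Exception e) {
            throw new IllegalStateException(e);
        } finally {
            pool.shutdown();
        }
    }

    public static void main(String[] args) {
        System.out.println(configuration());     // core=0, maxIsIntMax=true, keepAlive=60s, synchronousQueue=true
        System.out.println(poolSizeAfterIdle()); // 0
    }
}
```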

import java.util.concurrent.ExecutorService;
import java.util.concurrent.Executors;

/**
 * @author Jujuxiaer
 */
public class MyThreadPoolDemo {
    public static void main(String[] args) {
        ExecutorService threadPool = Executors.newFixedThreadPool(5);
        try {
            // Simulate 10 users handling business; each user is an external request thread
            for (int i = 0; i < 10; i++) {
                threadPool.execute(() -> {
                    System.out.println(Thread.currentThread().getName() + "\t Handle the business");
                });
            }
        } catch (Exception e) {
            e.printStackTrace();
        } finally {
            threadPool.shutdown();
        }
    }
}

3. Introduction to the important parameters of the thread pool

The 7 major parameters, as they appear in the ThreadPoolExecutor constructor:

public ThreadPoolExecutor(int corePoolSize,
                          int maximumPoolSize,
                          long keepAliveTime,
                          TimeUnit unit,
                          BlockingQueue<Runnable> workQueue,
                          ThreadFactory threadFactory,
                          RejectedExecutionHandler handler) {
    if (corePoolSize < 0 ||
        maximumPoolSize <= 0 ||
        maximumPoolSize < corePoolSize ||
        keepAliveTime < 0)
        throw new IllegalArgumentException();
    if (workQueue == null || threadFactory == null || handler == null)
        throw new NullPointerException();
    this.acc = System.getSecurityManager() == null ?
            null :
            AccessController.getContext();
    this.corePoolSize = corePoolSize;
    this.maximumPoolSize = maximumPoolSize;
    this.workQueue = workQueue;
    this.keepAliveTime = unit.toNanos(keepAliveTime);
    this.threadFactory = threadFactory;
    this.handler = handler;
}

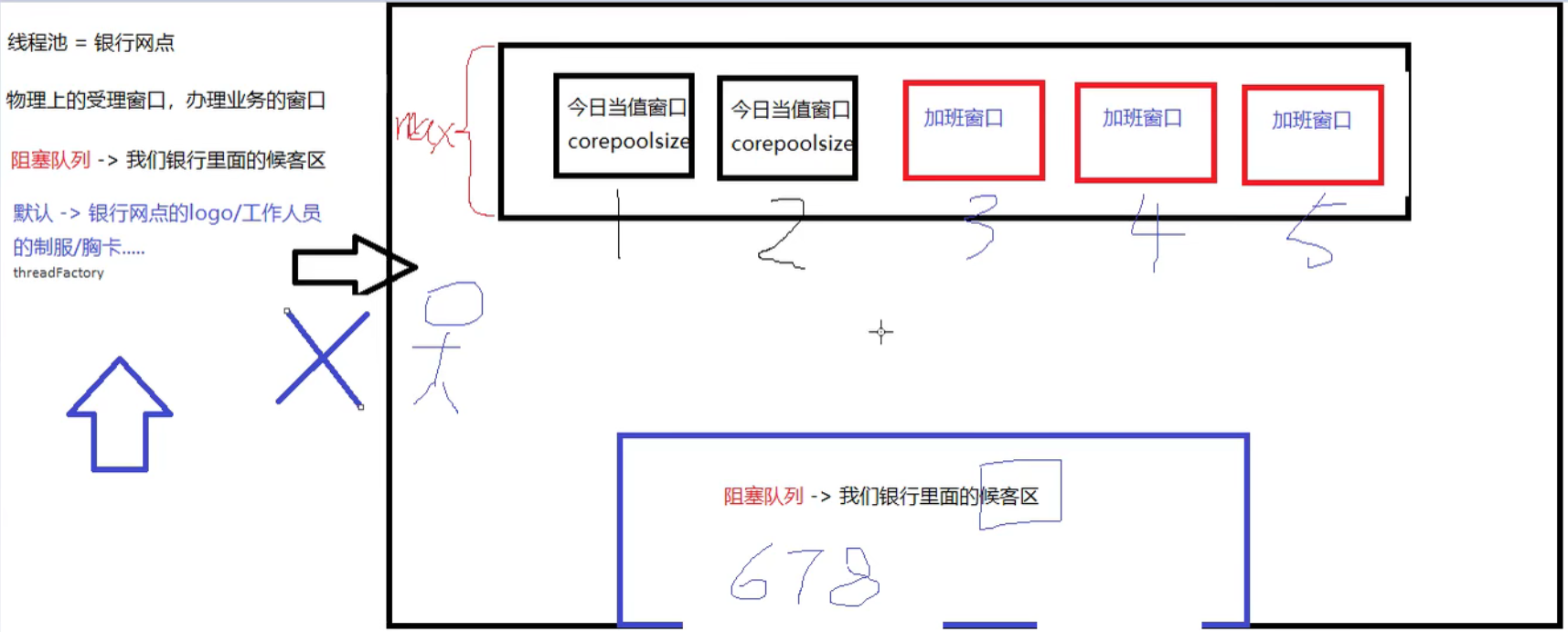

- corePoolSize: the number of resident core threads in the thread pool

- After the thread pool is created, when a task request arrives, a thread in the pool is assigned to execute it; roughly, these are the staff on duty that day.

- When the number of threads in the pool reaches corePoolSize, newly arriving tasks are placed in the work queue.

- maximumPoolSize: the maximum number of threads the pool can hold at the same time. This value must be at least 1 and not less than corePoolSize.

- keepAliveTime: how long surplus idle threads survive. When the pool has more than corePoolSize threads and a thread has been idle for keepAliveTime, that surplus thread is destroyed, until only corePoolSize threads remain.

- By default, keepAliveTime applies only while the pool has more than corePoolSize threads, and only until the thread count drops back to corePoolSize.

- unit: the time unit of keepAliveTime

- workQueue: the task queue, holding tasks that have been submitted but not yet executed.

- threadFactory: the thread factory used to create the pool's worker threads. The default is usually sufficient.

- handler: the rejection policy, i.e. how to handle a new task when the queue is full and the number of worker threads has reached maximumPoolSize.
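A minimal sketch that passes all seven parameters explicitly, assuming JDK 8 or later. The values (2, 5, 30 seconds, a bounded `ArrayBlockingQueue` of 10) and the thread-naming factory with the prefix `order-worker-` are hypothetical choices for illustration, not recommendations from the text.

```java
import java.util.concurrent.ArrayBlockingQueue;
import java.util.concurrent.ThreadFactory;
import java.util.concurrent.ThreadPoolExecutor;
import java.util.concurrent.TimeUnit;
import java.util.concurrent.atomic.AtomicInteger;

class SevenParamsDemo {

    // Hypothetical ThreadFactory that gives worker threads recognisable names.
    static ThreadFactory namedFactory(String prefix) {
        AtomicInteger counter = new AtomicInteger(1);
        return r -> new Thread(r, prefix + counter.getAndIncrement());
    }

    static ThreadPoolExecutor newPool() {
        return new ThreadPoolExecutor(
                2,                                   // corePoolSize: resident threads
                5,                                   // maximumPoolSize: upper bound on threads
                30L,                                 // keepAliveTime for threads above corePoolSize
                TimeUnit.SECONDS,                    // unit of keepAliveTime
                new ArrayBlockingQueue<>(10),        // workQueue: submitted but not yet executed tasks
                namedFactory("order-worker-"),       // threadFactory (hypothetical naming scheme)
                new ThreadPoolExecutor.AbortPolicy() // handler: rejection policy
        );
    }

    // Runs one task and returns the name of the thread that executed it.
    static String workerName() {
        ThreadPoolExecutor pool = newPool();
        try {
            return pool.submit(() -> Thread.currentThread().getName()).get();
        } catch (Exception e) {
            throw new IllegalStateException(e);
        } finally {
            pool.shutdown();
        }
    }

    public static void main(String[] args) {
        System.out.println(workerName()); // order-worker-1
    }
}
```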

The seven parameters can be understood through the analogy of the service windows at a bank branch:

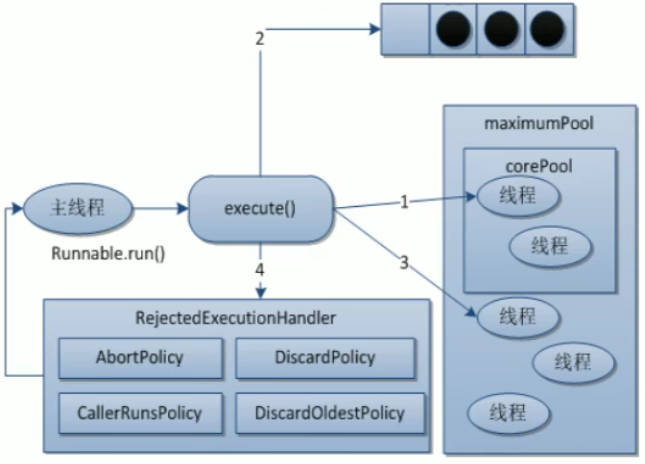

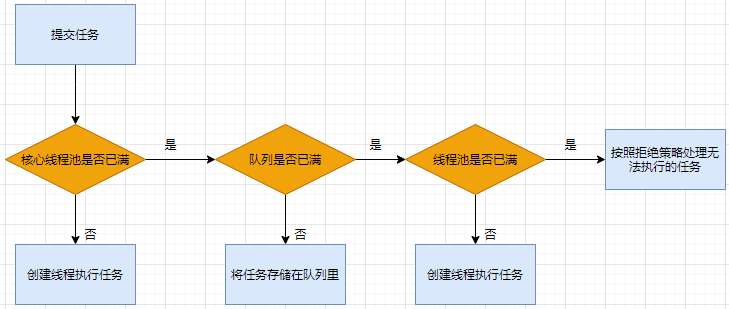

4. Talk about the underlying working principle of the thread pool

The main processing flow chart of thread pool is as follows:

- After the thread pool is created, it waits for task requests to be submitted.

- When the execute() method is called to submit a task, the thread pool makes the following decisions:

  - If the number of running threads is less than corePoolSize, create a thread to run the task immediately.

  - If the number of running threads is greater than or equal to corePoolSize, put the task into the queue.

  - If the queue is full and the number of running threads is less than maximumPoolSize, create a non-core thread to run this task immediately.

  - If the queue is full and the number of running threads is greater than or equal to maximumPoolSize, the thread pool applies its saturation (rejection) policy.

- When a thread finishes a task, it takes the next task from the queue and executes it.

- When a thread has been idle for longer than keepAliveTime, the thread pool checks:

  - If the number of currently running threads is greater than corePoolSize, the thread is stopped. Therefore, after all tasks have completed, the pool eventually shrinks to corePoolSize threads.
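A sketch that traces this decision sequence, assuming JDK 8 or later. Every task blocks on a latch so no thread frees up early; the pool (core 2, max 4, queue 2, keepAlive 200 ms) and the `"poolSize/queueSize"` snapshot format are illustrative assumptions. Note that tasks 5 and 6 get new non-core threads and start before queued tasks 3 and 4.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.concurrent.ArrayBlockingQueue;
import java.util.concurrent.CountDownLatch;
import java.util.concurrent.RejectedExecutionException;
import java.util.concurrent.ThreadPoolExecutor;
import java.util.concurrent.TimeUnit;

class PoolFlowDemo {

    // Submits 7 blocking tasks and records "poolSize/queueSize" after each one (or "rejected"),
    // then releases them and records the pool size once idle threads have timed out.
    static List<String> trace() {
        CountDownLatch release = new CountDownLatch(1);
        ThreadPoolExecutor pool = new ThreadPoolExecutor(2, 4, 200, TimeUnit.MILLISECONDS,
                new ArrayBlockingQueue<>(2)); // default handler is AbortPolicy
        List<String> snapshots = new ArrayList<>();
        for (int i = 1; i <= 7; i++) {
            try {
                pool.execute(() -> {
                    try {
                        release.await(); // keep every worker busy until released
                    } catch (InterruptedException e) {
                        Thread.currentThread().interrupt();
                    }
                });
                snapshots.add(pool.getPoolSize() + "/" + pool.getQueue().size());
            } catch (RejectedExecutionException e) {
                snapshots.add("rejected"); // queue full and maximumPoolSize reached
            }
        }
        release.countDown();
        try {
            Thread.sleep(1000); // well past keepAliveTime, so non-core threads time out
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
        }
        snapshots.add("idle:" + pool.getPoolSize()); // shrinks back to corePoolSize
        pool.shutdown();
        return snapshots;
    }

    public static void main(String[] args) {
        // [1/0, 2/0, 2/1, 2/2, 3/2, 4/2, rejected, idle:2]
        System.out.println(trace());
    }
}
```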

9, Have you used thread pools? How do you set reasonable parameters in production?

1. Talk about the rejection policies of the thread pool

1.1 what is it

When the waiting queue is full and can take no more tasks, and the number of threads in the pool has already reached maximumPoolSize, the pool cannot serve new tasks. A rejection policy is then needed to handle them sensibly.

1.2 JDK built-in rejection policies (all of the following implement the RejectedExecutionHandler interface)

- AbortPolicy (default): throws a RejectedExecutionException directly, interrupting the normal flow of the submitting code.

- CallerRunsPolicy: a "caller runs" throttling mechanism that neither discards tasks nor throws an exception; instead it pushes the task back to the caller, which runs it in its own thread, thereby slowing the flow of new tasks.

- DiscardOldestPolicy: discards the task that has waited longest in the queue, then tries to submit the current task again (which places it in the queue).

- DiscardPolicy: silently discards the task without processing it or throwing an exception. If losing tasks is acceptable, this is the best option.
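A sketch comparing the four built-in policies on the same saturated pool, assuming JDK 8 or later. The pool has one thread and a queue of one; task 1 blocks the only thread, task 2 fills the queue, and task 3 hits the policy. The `taskN@pool` / `taskN@caller` labels are a hypothetical logging format, sorted so the result does not depend on timing.

```java
import java.util.ArrayList;
import java.util.Collections;
import java.util.List;
import java.util.concurrent.ArrayBlockingQueue;
import java.util.concurrent.CountDownLatch;
import java.util.concurrent.RejectedExecutionException;
import java.util.concurrent.RejectedExecutionHandler;
import java.util.concurrent.ThreadPoolExecutor;
import java.util.concurrent.TimeUnit;

class RejectionPolicyDemo {

    // Submits 3 tasks to a pool that can hold only 2 and reports, sorted, which tasks ran and where.
    static List<String> outcome(RejectedExecutionHandler handler) {
        Thread caller = Thread.currentThread();
        List<String> log = Collections.synchronizedList(new ArrayList<>());
        CountDownLatch gate = new CountDownLatch(1);
        ThreadPoolExecutor pool = new ThreadPoolExecutor(1, 1, 0L, TimeUnit.MILLISECONDS,
                new ArrayBlockingQueue<>(1), handler);
        try {
            for (int i = 1; i <= 3; i++) {
                final int id = i;
                pool.execute(() -> {
                    if (id == 1) {
                        try {
                            gate.await(); // task 1 holds the only worker thread
                        } catch (InterruptedException e) {
                            Thread.currentThread().interrupt();
                        }
                    }
                    log.add("task" + id + (Thread.currentThread() == caller ? "@caller" : "@pool"));
                });
            }
        } catch (RejectedExecutionException e) {
            log.add("rejected"); // AbortPolicy throws back to the submitter
        }
        gate.countDown();
        pool.shutdown();
        try {
            pool.awaitTermination(5, TimeUnit.SECONDS);
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
        }
        List<String> sorted = new ArrayList<>(log);
        Collections.sort(sorted);
        return sorted;
    }

    public static void main(String[] args) {
        System.out.println(outcome(new ThreadPoolExecutor.AbortPolicy()));         // [rejected, task1@pool, task2@pool]
        System.out.println(outcome(new ThreadPoolExecutor.CallerRunsPolicy()));    // [task1@pool, task2@pool, task3@caller]
        System.out.println(outcome(new ThreadPoolExecutor.DiscardOldestPolicy())); // [task1@pool, task3@pool]
        System.out.println(outcome(new ThreadPoolExecutor.DiscardPolicy()));       // [task1@pool, task2@pool]
    }
}
```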

2. Which of the three ways of creating a thread pool (single / fixed / cached) do you use most at work? This is a trick question.

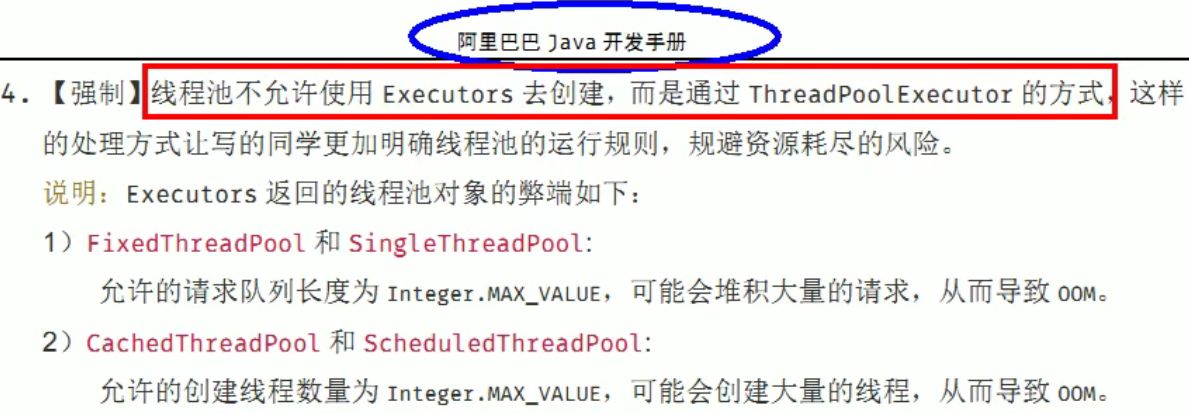

The answer is none.

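Only custom thread pools should be used in production. The JDK's Executors class already provides these factory methods, so why not use them? Because newFixedThreadPool and newSingleThreadExecutor use a LinkedBlockingQueue whose capacity is Integer.MAX_VALUE, so queued requests can pile up and cause an OutOfMemoryError, while newCachedThreadPool allows up to Integer.MAX_VALUE threads, so too many threads can be created, which can also lead to an OutOfMemoryError.

3. How do you use thread pools at work? Have you customized a thread pool?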
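A short sketch that makes these unbounded limits visible, assuming JDK 8 or later: it reads the remaining queue capacity of a fixed pool and the maximum thread count of a cached pool. The class and method names are hypothetical.

```java
import java.util.concurrent.Executors;
import java.util.concurrent.ThreadPoolExecutor;

class ExecutorsLimitsDemo {

    // Remaining capacity of the work queue behind newFixedThreadPool (a default LinkedBlockingQueue).
    static int fixedPoolQueueCapacity() {
        ThreadPoolExecutor pool = (ThreadPoolExecutor) Executors.newFixedThreadPool(2);
        try {
            return pool.getQueue().remainingCapacity();
        } finally {
            pool.shutdown();
        }
    }

    // Maximum number of threads that newCachedThreadPool may create.
    static int cachedPoolMaxThreads() {
        ThreadPoolExecutor pool = (ThreadPoolExecutor) Executors.newCachedThreadPool();
        try {
            return pool.getMaximumPoolSize();
        } finally {
            pool.shutdown();
        }
    }

    public static void main(String[] args) {
        System.out.println(fixedPoolQueueCapacity() == Integer.MAX_VALUE); // true: tasks can pile up without bound
        System.out.println(cachedPoolMaxThreads() == Integer.MAX_VALUE);   // true: threads can be created without bound
    }
}
```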

import java.util.concurrent.ExecutorService;
import java.util.concurrent.Executors;
import java.util.concurrent.LinkedBlockingQueue;
import java.util.concurrent.ThreadPoolExecutor;
import java.util.concurrent.TimeUnit;

/**
 * @author Jujuxiaer
 */
public class MyThreadPoolDemo2 {
    public static void main(String[] args) {
        // core 2, max 5, keepAlive 1 s, bounded queue of 3, default thread factory, AbortPolicy
        ExecutorService threadPool = new ThreadPoolExecutor(2, 5, 1L, TimeUnit.SECONDS,
                new LinkedBlockingQueue<>(3), Executors.defaultThreadFactory(), new ThreadPoolExecutor.AbortPolicy());
        try {
            // Simulate 9 users handling business; each user is an external request thread.
            // At most 5 + 3 = 8 tasks fit at once, so the 9th may be rejected with a
            // RejectedExecutionException if no thread has finished yet.
            for (int i = 0; i < 9; i++) {
                threadPool.execute(() -> {
                    System.out.println(Thread.currentThread().getName() + "\t Handle the business");
                });
            }
        } catch (Exception e) {
            e.printStackTrace();
        } finally {
            threadPool.shutdown();
        }
    }
}
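Whether the demo above actually rejects a task depends on timing, because its tasks finish quickly. A deterministic variant, assuming JDK 8 or later, makes every task block on a latch so the pool's capacity (maximumPoolSize + queue capacity = 5 + 3 = 8) is the only factor. `CapacityDemo` and `submitBlocking` are hypothetical names.

```java
import java.util.concurrent.CountDownLatch;
import java.util.concurrent.Executors;
import java.util.concurrent.LinkedBlockingQueue;
import java.util.concurrent.RejectedExecutionException;
import java.util.concurrent.ThreadPoolExecutor;
import java.util.concurrent.TimeUnit;

class CapacityDemo {

    // Returns {accepted, rejected} for `tasks` submissions of blocking tasks to the
    // same configuration as MyThreadPoolDemo2.
    static int[] submitBlocking(int tasks) {
        CountDownLatch hold = new CountDownLatch(1);
        ThreadPoolExecutor pool = new ThreadPoolExecutor(2, 5, 1L, TimeUnit.SECONDS,
                new LinkedBlockingQueue<>(3), Executors.defaultThreadFactory(),
                new ThreadPoolExecutor.AbortPolicy());
        int accepted = 0;
        int rejected = 0;
        for (int i = 0; i < tasks; i++) {
            try {
                pool.execute(() -> {
                    try {
                        hold.await(); // no thread frees up until the latch is released
                    } catch (InterruptedException e) {
                        Thread.currentThread().interrupt();
                    }
                });
                accepted++;
            } catch (RejectedExecutionException e) {
                rejected++; // AbortPolicy: pool (5 threads) and queue (3 slots) are both full
            }
        }
        hold.countDown();
        pool.shutdown();
        return new int[] {accepted, rejected};
    }

    public static void main(String[] args) {
        int[] result = submitBlocking(9);
        System.out.println("accepted=" + result[0] + ", rejected=" + result[1]); // accepted=8, rejected=1
    }
}
```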

4. How do you configure a thread pool reasonably?

4.1 CPU intensive

Get the number of CPU cores via Runtime.getRuntime().availableProcessors()

CPU-intensive means the task needs a lot of computation without blocking, so the CPU runs at full speed all the time. CPU-intensive tasks should be configured with as few threads as possible:

General formula: a thread pool with (number of CPU cores + 1) threads
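A minimal sketch of applying this rule of thumb, assuming JDK 8 or later. The bounded queue of 100 and the `CallerRunsPolicy` are illustrative assumptions added to keep the pool bounded; they are not part of the formula itself.

```java
import java.util.concurrent.ArrayBlockingQueue;
import java.util.concurrent.ThreadPoolExecutor;
import java.util.concurrent.TimeUnit;

class CpuBoundSizing {

    // Rule of thumb from the text: number of CPU cores + 1.
    static int cpuBoundThreads(int cores) {
        return cores + 1;
    }

    // Builds a fixed-size pool for CPU-bound work; queue size and policy are assumptions.
    static ThreadPoolExecutor newCpuBoundPool() {
        int threads = cpuBoundThreads(Runtime.getRuntime().availableProcessors());
        return new ThreadPoolExecutor(threads, threads, 0L, TimeUnit.MILLISECONDS,
                new ArrayBlockingQueue<>(100), new ThreadPoolExecutor.CallerRunsPolicy());
    }

    public static void main(String[] args) {
        ThreadPoolExecutor pool = newCpuBoundPool();
        System.out.println("cores=" + Runtime.getRuntime().availableProcessors()
                + ", threads=" + pool.getMaximumPoolSize());
        pool.shutdown();
    }
}
```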

4.2 IO intensive

Since threads running IO-intensive tasks are not executing all the time, configure as many threads as possible, for example number of CPU cores * 2.

IO-intensive means the task performs a lot of IO and therefore blocks a lot. Running IO-intensive tasks on a single thread wastes a great deal of CPU power on waiting, so using multiple threads for IO-intensive tasks can greatly speed up the program. Even on a single-core CPU, this speedup comes mainly from making use of the otherwise wasted blocking time.
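A sketch of why more threads help here, assuming JDK 8 or later: `Thread.sleep` stands in for blocking IO (an assumption; real IO behaves similarly only in that the thread waits), and the same blocking tasks are timed with 1 thread and with more threads. The task count of 8 and the 100 ms block are arbitrary demo values.

```java
import java.util.concurrent.ArrayBlockingQueue;
import java.util.concurrent.ThreadPoolExecutor;
import java.util.concurrent.TimeUnit;

class IoBoundSizing {

    // Rule of thumb from the text: number of CPU cores * 2.
    static int ioBoundThreads(int cores) {
        return cores * 2;
    }

    // Runs `tasks` tasks that each block for `blockMillis` on `threads` threads
    // and returns the elapsed wall-clock time in milliseconds.
    static long elapsedMillis(int threads, int tasks, long blockMillis) {
        ThreadPoolExecutor pool = new ThreadPoolExecutor(threads, threads, 0L, TimeUnit.MILLISECONDS,
                new ArrayBlockingQueue<>(Math.max(1, tasks))); // bounded queue large enough for the demo
        long start = System.nanoTime();
        for (int i = 0; i < tasks; i++) {
            pool.execute(() -> {
                try {
                    Thread.sleep(blockMillis); // the thread waits while the CPU is idle
                } catch (InterruptedException e) {
                    Thread.currentThread().interrupt();
                }
            });
        }
        pool.shutdown();
        try {
            pool.awaitTermination(1, TimeUnit.MINUTES);
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
        }
        return TimeUnit.NANOSECONDS.toMillis(System.nanoTime() - start);
    }

    public static void main(String[] args) {
        int threads = ioBoundThreads(Runtime.getRuntime().availableProcessors());
        System.out.println("1 thread: " + elapsedMillis(1, 8, 100) + " ms");
        System.out.println(threads + " threads: " + elapsedMillis(threads, 8, 100) + " ms");
    }
}
```

With one thread the eight waits happen back to back; with several threads they overlap, which is the "wasted blocking time" the text refers to.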