[JavaSE learning notes] JUC toolkit for concurrent programming

1, What is JUC

Java 5.0 introduced the java.util.concurrent (JUC) package, which adds utility classes commonly used in concurrent programming. It defines custom thread-like subsystems, including thread pools, asynchronous IO and a lightweight task framework. It also provides collection implementations designed for multithreaded contexts.

2, Threads and processes

Process: one execution of a program, that is, a running program. It is dynamic, and it is the unit of resource allocation.

Thread: an execution path within a process. As the smallest scheduling and execution unit, each thread has an independent running stack and program counter (pc).

3, Several states of threads

Note that these states are not the same thing as the thread life cycle. They are the values of the Thread.State enum:

public enum State {

/**

* new: created but not yet started

*/

NEW,

/**

* runnable: running, or ready to run

*/

RUNNABLE,

/**

* blocked: waiting to acquire a monitor lock

*/

BLOCKED,

/**

* waiting indefinitely for another thread's action

*/

WAITING,

/**

* Timeout wait

*/

TIMED_WAITING,

/**

* termination

*/

TERMINATED;

}
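The states above can be observed with Thread.getState(). The following is a minimal sketch, not part of the original notes: the class name ThreadStateDemo and the helper observe() are made up for illustration, and a CountDownLatch is used only to keep the worker parked long enough to see the WAITING state.

```java
import java.util.concurrent.CountDownLatch;

class ThreadStateDemo {
    // Returns the states seen before start(), while parked on a latch,
    // and after the thread has finished.
    static Thread.State[] observe() {
        CountDownLatch release = new CountDownLatch(1);
        Thread worker = new Thread(() -> {
            try {
                release.await();                    // parks the worker
            } catch (InterruptedException e) {
                Thread.currentThread().interrupt();
            }
        });
        try {
            Thread.State beforeStart = worker.getState();
            worker.start();
            // Poll until the worker has actually parked inside await().
            while (worker.getState() != Thread.State.WAITING) {
                Thread.sleep(1);
            }
            Thread.State parked = worker.getState();
            release.countDown();                    // let the worker finish
            worker.join();
            return new Thread.State[] { beforeStart, parked, worker.getState() };
        } catch (InterruptedException e) {
            throw new IllegalStateException(e);
        }
    }

    public static void main(String[] args) {
        for (Thread.State state : observe()) {
            System.out.println(state);              // NEW, WAITING, TERMINATED
        }
    }
}
```

BLOCKED and TIMED_WAITING can be seen the same way, by having the worker contend for a synchronized block or call Thread.sleep().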

4, Thread synchronization

1. The synchronized keyword, which marks a synchronized block or method.

2. Lock interface implementation class.

ReentrantLock, an implementation of the Lock interface, is in the java.util.concurrent.locks package. synchronized is implemented by the JVM, while ReentrantLock is implemented at the API level.

Compared with synchronized, ReentrantLock adds some advanced features. There are three main points:

- Waiting can be interrupted: ReentrantLock lets a thread that is waiting for the lock be interrupted, through lock.lockInterruptibly(). That is, the waiting thread can give up waiting and do something else instead.

- Fair locking is available: ReentrantLock can be created as a fair or a non-fair lock, whereas synchronized is always non-fair. With a fair lock, the thread that has waited longest obtains the lock first. ReentrantLock is non-fair by default; pass true to the ReentrantLock(boolean fair) constructor to make it fair.

- Selective notification can be realized (locks can bind multiple conditions): the synchronized keyword can be combined with wait() and notify()/notifyAll() methods to realize the wait / notification mechanism. Of course, the ReentrantLock class can also be implemented, but it needs the help of the Condition interface and the newCondition() method.
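The first two points can be sketched in a few lines. This is only an illustration under stated assumptions: the class InterruptibleLockDemo and its method waiterGivesUp() are hypothetical names, and the polling loop with hasQueuedThread() is there just to make sure the waiter is really blocked before it is interrupted.

```java
import java.util.concurrent.locks.ReentrantLock;

class InterruptibleLockDemo {
    // Returns true if a thread blocked in lockInterruptibly() stopped
    // waiting when it was interrupted.
    static boolean waiterGivesUp() {
        ReentrantLock lock = new ReentrantLock(true);   // true requests a fair lock
        boolean[] gaveUp = new boolean[1];
        Thread waiter = new Thread(() -> {
            try {
                lock.lockInterruptibly();               // blocks: the caller holds the lock
                lock.unlock();                          // not reached in this demo
            } catch (InterruptedException e) {
                gaveUp[0] = true;                       // gave up waiting for the lock
            }
        });
        lock.lock();
        try {
            waiter.start();
            // Wait until the waiter is queued on the lock, then interrupt it.
            while (!lock.hasQueuedThread(waiter)) {
                Thread.sleep(1);
            }
            waiter.interrupt();
            waiter.join();
        } catch (InterruptedException e) {
            throw new IllegalStateException(e);
        } finally {
            lock.unlock();
        }
        return gaveUp[0];
    }

    public static void main(String[] args) {
        System.out.println("waiter gave up: " + waiterGivesUp());
    }
}
```

A thread blocked on a synchronized block cannot be released this way; it stays blocked until the monitor becomes available.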

Test code:

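import java.util.Random;

import java.util.concurrent.locks.Lock;

import java.util.concurrent.locks.ReentrantLock;

public class TestJava20211228{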

public static void main(String[] args){

TicketSalerReentrantLock saler1 = new TicketSalerReentrantLock();

TicketSalerReentrantLock saler2 = new TicketSalerReentrantLock();

TicketSalerReentrantLock saler3 = new TicketSalerReentrantLock();

saler1.start();

saler2.start();

saler3.start();

}

}

class TicketSalerReentrantLock extends Thread{

private static final Lock lock = new ReentrantLock();

private static final Random rand = new Random();

private static int ticket = 100;

public void run(){

while(true){

lock.lock(); // acquire before try, so finally never unlocks a lock that was not acquired

try{

if(ticket <= 0)

break;

Thread.sleep(rand.nextInt(100));

ticket--;

System.out.println("TICKET IS " + ticket + ".");

}catch(Exception e){

e.printStackTrace();

}finally{

lock.unlock();

}

}

}

}

5, Condition variables

A condition variable is a mechanism for waiting-based synchronization and for cooperative communication between threads. It blocks a thread until another thread signals that a particular condition holds. Condition variables are normally used together with a lock.

Condition variables provided in Java:

1. synchronized + the monitor methods of Object – [wait(), notify(), notifyAll()]

2. ReentrantLock + a Condition object – [await(), signal(), signalAll()]

Use condition variables to print odd and even numbers alternately:

Test code (synchronized + Object object):

public class TestJava20211228{

public int num = 10;

public static void main(String[] args){

TestJava20211228 test = new TestJava20211228();

PrinterOne one = new PrinterOne(test);

PrinterTwo two = new PrinterTwo(test);

one.start();

two.start();

}

}

class PrinterOne extends Thread{

private TestJava20211228 test;

public PrinterOne(TestJava20211228 test){

this.test = test;

}

public void run(){

try{

while(test.num > 0){

synchronized(test){

if(test.num%2 == 0){

test.wait();

}else{

System.out.println(Thread.currentThread().getName() + " -> " + test.num--);

test.notify();

}

}

}

}catch(Exception e){

e.printStackTrace();

}

}

}

class PrinterTwo extends Thread{

private TestJava20211228 test;

public PrinterTwo(TestJava20211228 test){

this.test = test;

}

public void run(){

try{

while(test.num > 0){

synchronized(test){

if(test.num%2 != 0){

test.wait();

}else if(test.num > 0){ // re-check under the lock: num may have reached 0 since the loop test

System.out.println(Thread.currentThread().getName() + " -> " + test.num--);

test.notify();

}

}

}

}catch(Exception e){

e.printStackTrace();

}

}

}

Test code (ReentrantLock + Condition object):

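import java.util.concurrent.locks.Condition;

import java.util.concurrent.locks.Lock;

import java.util.concurrent.locks.ReentrantLock;

public class TestJava20211228{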

public int num = 10;

public static void main(String[] args){

TestJava20211228 test = new TestJava20211228();

Lock lock = new ReentrantLock();

Condition condition = lock.newCondition();

PrinterOne one = new PrinterOne(test, lock, condition);

PrinterTwo two = new PrinterTwo(test, lock, condition);

one.start();

two.start();

}

}

class PrinterOne extends Thread{

private Lock lock;

private TestJava20211228 test;

private Condition condition;

public PrinterOne(TestJava20211228 test, Lock lock, Condition condition){

this.test = test;

this.lock = lock;

this.condition = condition;

}

public void run(){

while(test.num > 0){

lock.lock();

try{

if(test.num%2 == 0){

condition.await();

}else{

System.out.println(Thread.currentThread().getName() + " -> " + test.num--);

condition.signal();

}

}catch(Exception e){

e.printStackTrace();

}finally{

lock.unlock();

}

}

}

}

class PrinterTwo extends Thread{

private Lock lock;

private TestJava20211228 test;

private Condition condition;

public PrinterTwo(TestJava20211228 test, Lock lock, Condition condition){

this.test = test;

this.lock = lock;

this.condition = condition;

}

public void run(){

while(test.num > 0){

lock.lock();

try{

if(test.num%2 != 0){

condition.await();

}else if(test.num > 0){ // re-check under the lock: num may have reached 0 since the loop test

System.out.println(Thread.currentThread().getName() + " -> " + test.num--);

condition.signal();

}

}catch(Exception e){

e.printStackTrace();

}finally{

lock.unlock();

}

}

}

}

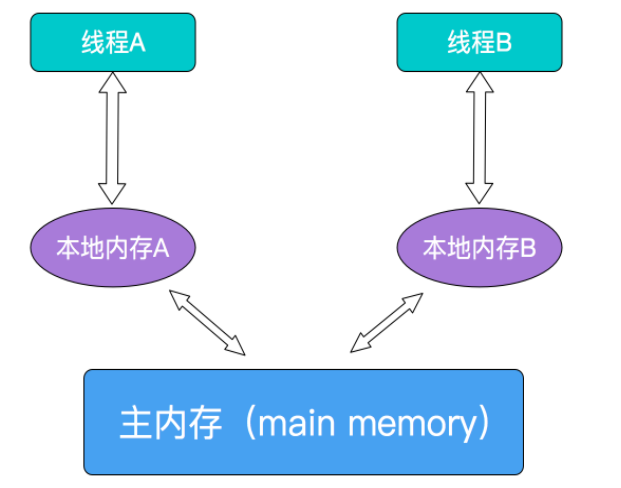
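One lock can carry several Condition objects, which is what makes selective notification possible. The following is a rough sketch of that idea, not taken from the original notes: BoundedBuffer, its two conditions notFull and notEmpty, and the helper transferSum() are illustrative names. Producers wait on one condition and consumers on the other, so a signal only wakes the kind of thread that can make progress.

```java
import java.util.ArrayDeque;
import java.util.Deque;
import java.util.concurrent.locks.Condition;
import java.util.concurrent.locks.Lock;
import java.util.concurrent.locks.ReentrantLock;

class BoundedBuffer {
    private final Lock lock = new ReentrantLock();
    private final Condition notFull = lock.newCondition();   // producers wait here
    private final Condition notEmpty = lock.newCondition();  // consumers wait here
    private final Deque<Integer> items = new ArrayDeque<>();
    private final int capacity;

    BoundedBuffer(int capacity) {
        this.capacity = capacity;
    }

    void put(int value) throws InterruptedException {
        lock.lock();
        try {
            while (items.size() == capacity) {   // loop guards against spurious wakeups
                notFull.await();
            }
            items.addLast(value);
            notEmpty.signal();                   // wake one consumer only
        } finally {
            lock.unlock();
        }
    }

    int take() throws InterruptedException {
        lock.lock();
        try {
            while (items.isEmpty()) {
                notEmpty.await();
            }
            int value = items.removeFirst();
            notFull.signal();                    // wake one producer only
            return value;
        } finally {
            lock.unlock();
        }
    }

    // Pushes 1..count through a buffer of capacity 2 and returns the sum received.
    static int transferSum(int count) {
        BoundedBuffer buffer = new BoundedBuffer(2);
        Thread producer = new Thread(() -> {
            try {
                for (int i = 1; i <= count; i++) {
                    buffer.put(i);
                }
            } catch (InterruptedException e) {
                Thread.currentThread().interrupt();
            }
        });
        producer.start();
        try {
            int sum = 0;
            for (int i = 0; i < count; i++) {
                sum += buffer.take();
            }
            producer.join();
            return sum;
        } catch (InterruptedException e) {
            throw new IllegalStateException(e);
        }
    }
}
```

With wait()/notify() on a single monitor, producers and consumers share one wait set, so notifyAll() is usually needed to be safe.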

6, volatile keyword

Effects:

1. Ensure variable visibility:

Before JDK 1.2, Java's memory model implementation always read variables from main memory (i.e. shared memory), so no special care was needed. Under the current Java memory model, a thread may keep variables in local memory (such as machine registers) rather than reading and writing main memory directly. As a result, one thread may modify the value of a variable in main memory while another thread keeps using the stale copy held in its registers, which leaves the data inconsistent. To solve this problem, declare the variable volatile. This tells the JVM that the variable is shared and may change at any time, so it is read from main memory on every use.

2. Prevent instruction reordering by the JVM:

Reordering is a technique by which the compiler and the processor rearrange the instruction sequence to improve performance. Reordering follows certain rules: (1) Operations with a data dependency are never reordered. For example, in a=1; b=a; the second operation depends on the first, so the two are not reordered either at compile time or by the processor at run time.

(2) Reordering exists to improve performance, but however the instructions are reordered, the result of single-threaded execution must not change. For example, in a=1; b=2; c=a+b; the first step (a=1) and the second step (b=2) may be reordered because they have no data dependency, but c=a+b is not moved ahead of them because the final result must be c=a+b=3.
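The visibility guarantee can be illustrated with a stop flag. This is a minimal sketch with made-up names (VolatileFlagDemo, stopsAfterFlagCleared()): the worker spins on a volatile field and stops once another thread clears it. Without volatile, the JIT compiler would be allowed to hoist the read out of the loop, and the worker might never stop.

```java
class VolatileFlagDemo {
    // volatile: every read of the flag sees the most recent write.
    private static volatile boolean running;

    // Returns true if the worker noticed the flag change and terminated.
    static boolean stopsAfterFlagCleared() {
        running = true;
        Thread worker = new Thread(() -> {
            long spins = 0;
            while (running) {       // re-reads the volatile field on each pass
                spins++;
            }
        });
        worker.setDaemon(true);     // never keeps the JVM alive
        worker.start();
        try {
            Thread.sleep(50);
            running = false;        // write becomes visible to the worker
            worker.join(5000);
        } catch (InterruptedException e) {
            throw new IllegalStateException(e);
        }
        return !worker.isAlive();
    }

    public static void main(String[] args) {
        System.out.println("worker stopped: " + stopsAfterFlagCleared());
    }
}
```

Note that volatile does not make compound operations such as count++ atomic; those still need a lock or an atomic class.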

7, ThreadLocal

1. What is ThreadLocal

ThreadLocal represents a thread-local variable: a value stored in a ThreadLocal belongs to the current thread and is isolated from all other threads. ThreadLocal creates a copy of the variable for each thread, so each thread accesses only its own copy.

2. How to use ThreadLocal

import java.util.Random;

public class TestJava20211228{

public int num = 10;

public static void main(String[] args){

MyThread th1 = new MyThread();

MyThread th2 = new MyThread();

th1.start();

th2.start();

}

}

class MyThread extends Thread{

private static ThreadLocal<Integer> threadLocal = new ThreadLocal<>();

public void run(){

int value = new Random().nextInt();

threadLocal.set(value);

System.out.println(Thread.currentThread().getName() + " save value is " + value);

System.out.println(Thread.currentThread().getName() + " value is " + threadLocal.get());

}

}

The ThreadLocal class provides the set(value), get() and remove() methods. When a value is set, each thread gets its own separate slot to store it. Underneath, each thread holds a hash table (a ThreadLocalMap) keyed by the ThreadLocal instance.
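The three methods can be seen together in a short sketch. The class ThreadLocalDemo and its run() helper are illustrative names, and ThreadLocal.withInitial() (available since Java 8) is used here so that get() has a defined value after remove().

```java
class ThreadLocalDemo {
    // Each thread starts from its own copy with the initial value 0.
    private static final ThreadLocal<Integer> VALUE = ThreadLocal.withInitial(() -> 0);

    // Returns { value the other thread set, its value after remove(), caller's value }.
    static int[] run() {
        int[] seen = new int[3];
        VALUE.set(7);                       // the calling thread's copy
        Thread other = new Thread(() -> {
            VALUE.set(42);                  // only changes this thread's copy
            seen[0] = VALUE.get();
            VALUE.remove();                 // drops this thread's copy
            seen[1] = VALUE.get();          // re-initialized to 0
        });
        other.start();
        try {
            other.join();
        } catch (InterruptedException e) {
            throw new IllegalStateException(e);
        }
        seen[2] = VALUE.get();              // still 7: untouched by the other thread
        VALUE.remove();                     // clean up, important with pooled threads
        return seen;
    }

    public static void main(String[] args) {
        int[] seen = run();
        System.out.println(seen[0] + " " + seen[1] + " " + seen[2]);  // 42 0 7
    }
}
```

Calling remove() when a task is done matters most in thread pools, where a reused thread would otherwise still see the previous task's value.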

8, Blocking queue

1. Seven blocking queues

ArrayBlockingQueue: a bounded blocking queue backed by an array. It orders elements first in, first out (FIFO). By default it does not guarantee fair access for waiting threads. Fair access means that blocked producer and consumer threads get to use the queue in the order in which they blocked: the producer that blocked first inserts first, and the consumer that blocked first takes first. Ensuring fairness generally reduces throughput. Taking and adding elements use the same lock object.

LinkedBlockingQueue: a blocking queue backed by a linked list. It is bounded, but its default (and maximum) capacity is Integer.MAX_VALUE. Because the queue is maintained as a linked list, node objects are created and discarded as elements are added and removed, which puts heavy pressure on GC under high concurrency and large data volumes. Taking and adding elements use different lock objects.

PriorityBlockingQueue: an unbounded blocking queue that supports priorities. By default, elements are ordered in natural ascending order. To specify the ordering, have the element class implement the Comparable interface and its compareTo() method, or pass a Comparator to the constructor when creating the PriorityBlockingQueue. Note that the relative order of elements with the same priority is not guaranteed.

DelayQueue: an unbounded blocking queue that supports delayed retrieval of elements. It is implemented on top of PriorityQueue. Elements in the queue must implement the Delayed interface, and each element specifies, when it is created, how long it must wait before it can be taken from the queue. An element can only be taken once its delay has expired.

SynchronousQueue: a blocking queue that stores no elements. Each put must wait for a matching take; until then no further element can be added. It supports fair access, but by default threads access the queue under a non-fair policy. Passing true to the constructor creates a fair SynchronousQueue, in which waiting threads access the queue in first-in, first-out order.

LinkedTransferQueue: an unbounded blocking TransferQueue backed by a linked list. Compared with other blocking queues, LinkedTransferQueue adds the tryTransfer and transfer methods.

transfer method: if a consumer is currently waiting to receive an element (in take() or in a timed poll()), transfer hands the producer's element to that consumer immediately. If no consumer is waiting, transfer stores the element at the tail of the queue and does not return until a consumer has consumed it.

tryTransfer method is used to test whether the elements passed in by the producer can be directly passed to the consumer. If there is no consumer waiting to receive the element, false is returned. The difference between the tryTransfer method and the transfer method is that the tryTransfer method returns immediately regardless of whether the consumer receives it or not, while the transfer method returns only after the consumer consumes it.

LinkedBlockingDeque: a double-ended blocking queue backed by a linked list. Elements can be inserted and removed at both ends of the queue. Because there is one more entry to the two-way queue, when multiple threads join the queue at the same time, the competition is reduced by half. Compared with other blocking queues, LinkedBlockingDeque adds methods such as addFirst, addLast, offerFirst, offerLast, peekFirst and peekLast. Methods ending in First insert, get, or remove the first element of the deque; methods ending in Last do the same for the last element. The unsuffixed methods follow queue (FIFO) semantics: add is equivalent to addLast, while remove is equivalent to removeFirst and take to takeFirst. This asymmetry is by design rather than a JDK bug, but the methods with First and Last suffixes make the intent clearer. When creating a LinkedBlockingDeque you can set a capacity to keep it from growing without limit. Double-ended blocking queues can also be used for work stealing.
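The difference between the two LinkedTransferQueue hand-off cases described above (no consumer waiting versus a consumer waiting) can be sketched as follows. TransferDemo and run() are hypothetical names; hasWaitingConsumer() is used only to make the demo deterministic.

```java
import java.util.concurrent.LinkedTransferQueue;

class TransferDemo {
    // Returns "<first tryTransfer> <queue empty afterwards> <second tryTransfer> <received>".
    static String run() {
        LinkedTransferQueue<String> queue = new LinkedTransferQueue<>();

        // No consumer is waiting: tryTransfer fails and does not enqueue the element.
        boolean first = queue.tryTransfer("a");
        boolean stillEmpty = queue.isEmpty();

        String[] received = new String[1];
        Thread consumer = new Thread(() -> {
            try {
                received[0] = queue.take();      // blocks until an element is handed over
            } catch (InterruptedException e) {
                Thread.currentThread().interrupt();
            }
        });
        consumer.start();
        try {
            while (!queue.hasWaitingConsumer()) {
                Thread.sleep(1);                 // wait until the consumer is parked in take()
            }
            // A consumer is waiting: the element is handed to it directly.
            boolean second = queue.tryTransfer("b");
            consumer.join();
            return first + " " + stillEmpty + " " + second + " " + received[0];
        } catch (InterruptedException e) {
            throw new IllegalStateException(e);
        }
    }

    public static void main(String[] args) {
        System.out.println(run());               // false true true b
    }
}
```

Replacing tryTransfer with transfer in the first call would block the caller until some consumer took the element.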

2. Blocking queue common operations

/**

* add / remove / element (throw exceptions)

* add: Add an element. null is not allowed. Adding to a full queue throws an exception.

* remove: Remove the head element. Throws an exception if the queue is empty.

* element: Return the head element without removing it. Throws an exception if the queue is empty.

*

* offer / poll / peek (return special values)

* offer: Add an element. null is not allowed. Returns true if the element was added, false otherwise.

* poll: Take an element from the queue. Returns null if the queue is empty.

* peek: Return the head element without removing it. Returns null if the queue is empty.

*

* put / take (block)

* put: Insert an element. If the queue is full, the inserting thread blocks.

* take: Take an element from the queue. If the queue is empty, the taking thread blocks.

*/

Test code:

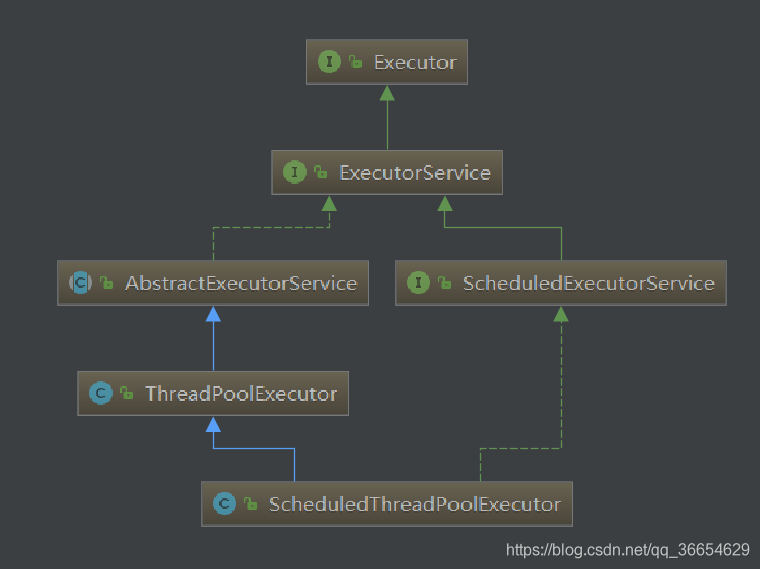
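As a text companion to the operations listed above, here is a small sketch on an ArrayBlockingQueue of capacity 2. The class QueueOpsDemo and its run() method are illustrative names; only the non-blocking method groups are exercised so the example runs on a single thread.

```java
import java.util.concurrent.ArrayBlockingQueue;
import java.util.concurrent.BlockingQueue;

class QueueOpsDemo {
    // Runs the non-blocking operations and returns their results as one line.
    static String run() {
        BlockingQueue<String> queue = new ArrayBlockingQueue<>(2);
        StringBuilder log = new StringBuilder();

        log.append(queue.offer("a")).append(' ');   // true: there is room
        log.append(queue.add("b")).append(' ');     // true: there is room
        log.append(queue.offer("c")).append(' ');   // false: queue is full
        try {
            queue.add("c");                          // full queue: add throws
        } catch (IllegalStateException e) {
            log.append("full ");
        }
        log.append(queue.peek()).append(' ');       // a: head, not removed
        log.append(queue.poll()).append(' ');       // a: head, removed
        log.append(queue.remove()).append(' ');     // b: head, removed
        log.append(queue.poll());                   // null: queue is empty
        return log.toString();
    }

    public static void main(String[] args) {
        System.out.println(run());   // true true false full a a b null
    }
}
```

put and take behave like offer and poll here, except that they would block the calling thread instead of returning false or null.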

9, Executor interface and thread pool

1. Introduction

A thread pool is a collection of threads created while a multithreaded application initializes. Before tasks arrive, the thread pool creates a certain number of threads and puts them into the idle queue. These threads are dormant, that is, they are not running, so they consume no CPU and occupy only a small amount of memory. When a request arrives, the thread pool assigns it to an idle thread, which then processes it.

When all pre-created threads are busy, the thread pool can create a certain number of new threads to handle more task requests. If the pool has reached its maximum number of threads, an exception is thrown and the request is rejected. When the system is relatively idle, threads that have gone inactive can be removed. Each thread in the pool may be assigned many tasks over time. Once a task is completed, the thread returns to the pool and waits for the next task.

2. Why use thread pools

If there are a large number of thread tasks with short-time tasks in the program, because creating and destroying threads need to interact with the underlying operating system, a lot of time is spent on creating and destroying threads, which is a waste of time and the system efficiency is very low. Moreover, the creation and destruction of threads consume more resources than ordinary objects. The introduction of thread pool technology is to solve this problem.

After the end of each thread task in the thread pool, it will not die, but return to the thread pool again to become idle and wait for the next object to use. Therefore, the execution efficiency of the program can be improved with the help of thread pool.

3. Thread pool related classes

// Used to receive a specific thread pool object created

java.util.concurrent.ExecutorService

// Thread pool factory class

java.util.concurrent.Executors

4. Common thread pools

newSingleThreadExecutor

Create a single threaded thread pool. This thread pool has only one thread working, which is equivalent to a single thread executing all tasks in series. If the only thread ends abnormally, a new thread will replace it. This thread pool ensures that all tasks are executed in the order they are submitted.

newFixedThreadPool

Create a fixed size thread pool. Each time a task is submitted, a thread is created until the thread reaches the maximum size of the thread pool. Once the size of the thread pool reaches the maximum, it will remain unchanged. If a thread ends due to execution exception, the thread pool will supplement a new thread.

newCachedThreadPool

Create a cacheable thread pool. If the size of the thread pool exceeds the threads required for processing tasks, some idle threads (not executing tasks for 60 seconds) will be recycled. When the number of tasks increases, this thread pool can intelligently add new threads to process tasks. This thread pool does not limit the size of the thread pool. The size of the thread pool completely depends on the maximum thread size that the operating system (or JVM) can create.

newScheduledThreadPool

Create a thread pool with no upper limit on its size. This thread pool supports delayed and periodic task execution.
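A factory-created pool is used the same way whichever factory method produced it. The sketch below is an illustration with assumed names (FixedPoolDemo, sumOfSquares()): it submits Callable tasks to a pool from Executors.newFixedThreadPool and collects the results through Future objects.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.concurrent.ExecutionException;
import java.util.concurrent.ExecutorService;
import java.util.concurrent.Executors;
import java.util.concurrent.Future;

class FixedPoolDemo {
    // Computes 1*1 + 2*2 + ... + n*n, one task per term, on three pool threads.
    static int sumOfSquares(int n) {
        ExecutorService pool = Executors.newFixedThreadPool(3);
        try {
            List<Future<Integer>> futures = new ArrayList<>();
            for (int i = 1; i <= n; i++) {
                final int x = i;
                futures.add(pool.submit(() -> x * x));   // submitted as a Callable<Integer>
            }
            int sum = 0;
            for (Future<Integer> future : futures) {
                sum += future.get();                     // blocks until that task is done
            }
            return sum;
        } catch (InterruptedException | ExecutionException e) {
            throw new IllegalStateException(e);
        } finally {
            pool.shutdown();                             // lets the pool threads exit
        }
    }

    public static void main(String[] args) {
        System.out.println(sumOfSquares(4));             // 30
    }
}
```

Swapping in Executors.newSingleThreadExecutor() or Executors.newCachedThreadPool() changes only how many threads run the tasks, not the calling code.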

5. Reasons why the built-in thread pool is not recommended

1) FixedThreadPool and SingleThreadExecutor allow a request queue of length Integer.MAX_VALUE, which may accumulate a large number of requests and cause an OOM.

2) CachedThreadPool and ScheduledThreadPool allow up to Integer.MAX_VALUE threads to be created, which may create a large number of threads and cause an OOM.

ThreadPoolExecutor class related properties:

/**

* Thread pool properties

* corePoolSize: Minimum number of worker threads in the thread pool

* maximumPoolSize: Maximum number of threads in thread pool

* keepAliveTime: How long an idle thread waits for a new task before it is reclaimed (in the unit given by the next parameter)

* unit: Time unit of keepAliveTime

* workQueue: The task cache queue is used to store tasks waiting to be executed

* ThreadFactory: Thread factory

* handler: Task rejection policy

*/

ExecutorService threadPool = new ThreadPoolExecutor(

2, // Size of core pool

Runtime.getRuntime().availableProcessors(), // Maximum number of threads: the number of CPUs (must not be smaller than the core size, or the constructor throws IllegalArgumentException)

2L, // Retention time of idle threads

TimeUnit.SECONDS, // Time unit of the retention time

new LinkedBlockingDeque<>(3), // Set the queue size according to the business. The queue size must be set

Executors.defaultThreadFactory(), // Don't change

new ThreadPoolExecutor.AbortPolicy() //Reject policy

);

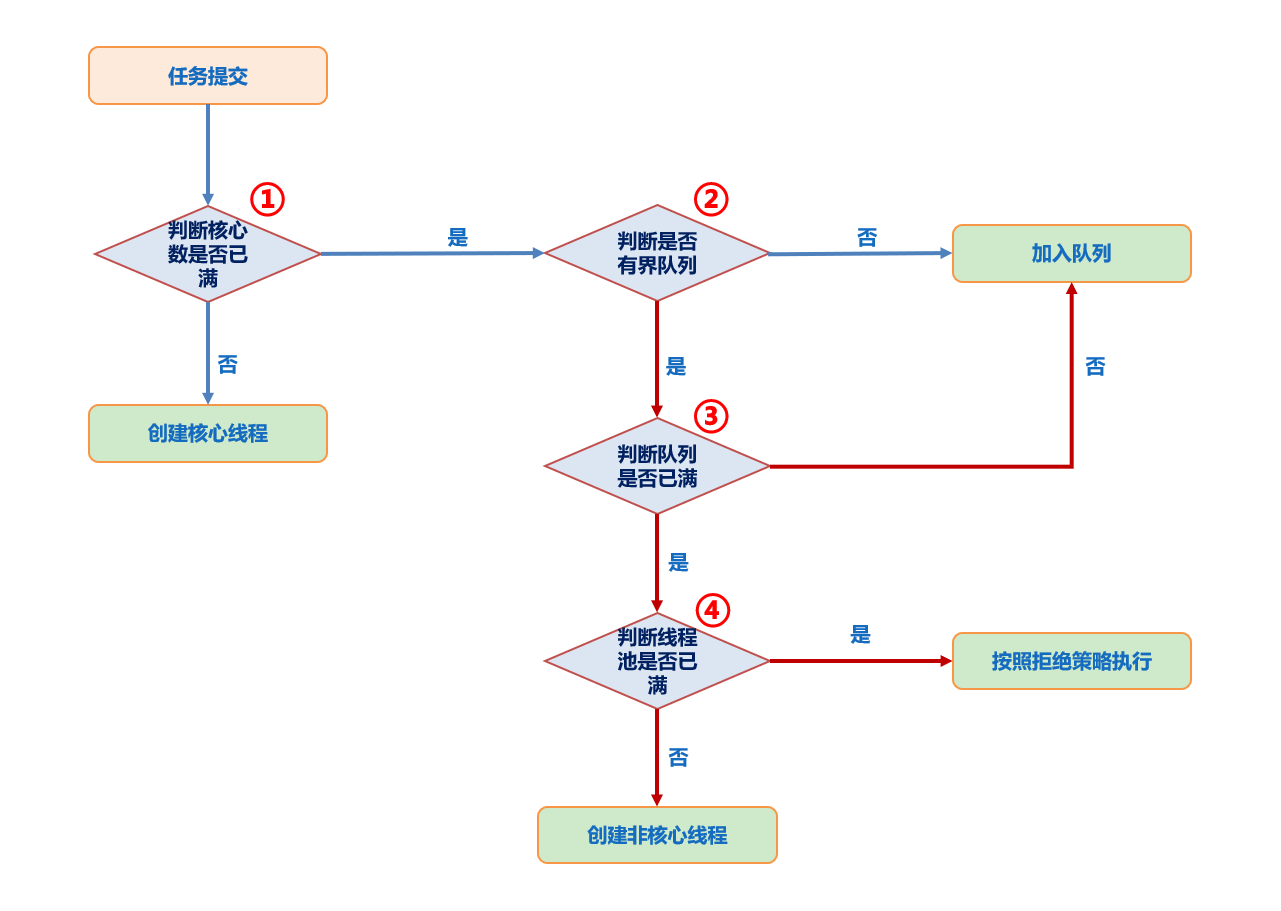

6. How thread pool works

1) create a thread pool. When no task is submitted, there are no threads in the thread pool by default. Of course, you can also call the prestartCoreThread method to pre create a core thread.

2) When the pool has no threads, or fewer surviving threads than corePoolSize, it creates a new thread for each newly submitted task. While the number of surviving threads is less than or equal to corePoolSize, those threads stay alive: even if they are idle for longer than keepAliveTime they are not destroyed, but block waiting for tasks from the task queue.

3) when the number of surviving threads in the thread pool is equal to corePoolSize, a newly submitted task will be put into the task queue and queued for execution. The previously created thread will not be destroyed, but will continue to get the tasks in the blocking queue. When the task queue is empty, the thread will block until a task is put into the task queue. After getting the task, the thread will continue to execute. After execution, it will continue to get the task. This is why thread pool queues use blocking queues.

4) When the number of surviving threads equals corePoolSize and the task queue is full, assume maximumPoolSize > corePoolSize (if the two are equal, the task is rejected directly). For each new task the pool now creates a new thread, until the number of threads reaches maximumPoolSize. When such a thread finishes its current task it is not destroyed immediately: if the task queue holds tasks, it takes them out and executes them. Once the number of threads exceeds corePoolSize, a thread that finishes a task checks whether it should be destroyed. If it can obtain a task from the queue, it keeps executing. If it blocks on the queue (meaning the queue is empty), the poll returns null after keepAliveTime and the thread is destroyed. Threads are destroyed in this way until the number of threads in the pool drops back to corePoolSize.

5) If the number of threads has reached maximumPoolSize, the task queue is full, and a new task arrives, the task is passed to the rejection handler. The default handler throws a RejectedExecutionException. You can specify another handler, or write your own to implement the rejection logic (for example, storing the rejected tasks). The JDK provides four rejection policy classes:

All four are static nested classes of ThreadPoolExecutor:

AbortPolicy (throw an exception, default)

DiscardPolicy (discard task directly)

DiscardOldestPolicy (discard the oldest task in the queue and submit the current task to the thread pool again)

CallerRunsPolicy (run the task in the thread that submitted it to the thread pool)
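The path from step 2) to step 5) can be made visible with a deliberately tiny pool. This is a sketch under assumed parameters, not the configuration used elsewhere in these notes: RejectionDemo and thirdTaskRejected() are illustrative names, and the pool has one thread and a queue of capacity one so that the third task has nowhere to go.

```java
import java.util.concurrent.ArrayBlockingQueue;
import java.util.concurrent.CountDownLatch;
import java.util.concurrent.RejectedExecutionException;
import java.util.concurrent.ThreadPoolExecutor;
import java.util.concurrent.TimeUnit;

class RejectionDemo {
    // Returns true if the third task is rejected by AbortPolicy.
    static boolean thirdTaskRejected() {
        ThreadPoolExecutor pool = new ThreadPoolExecutor(
                1, 1,                                   // core size = maximum size = 1
                0L, TimeUnit.SECONDS,
                new ArrayBlockingQueue<>(1),            // room for one waiting task
                new ThreadPoolExecutor.AbortPolicy());
        CountDownLatch release = new CountDownLatch(1);
        Runnable blocker = () -> {
            try {
                release.await();                        // keeps the single thread busy
            } catch (InterruptedException e) {
                Thread.currentThread().interrupt();
            }
        };
        try {
            pool.execute(blocker);                      // starts the only thread
            pool.execute(blocker);                      // waits in the queue
            try {
                pool.execute(blocker);                  // thread busy, queue full
                return false;
            } catch (RejectedExecutionException e) {
                return true;
            }
        } finally {
            release.countDown();                        // unblock the running tasks
            pool.shutdown();
        }
    }

    public static void main(String[] args) {
        System.out.println("third task rejected: " + thirdTaskRejected());
    }
}
```

With CallerRunsPolicy in place of AbortPolicy, the third execute call would instead run the task on the calling thread and block there until the latch is released.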

Test code:

import java.util.concurrent.*;

public class ThreadPoolExecutorTest{

public static void main(String[] args){

ExecutorService executor = new ThreadPoolExecutor(

2,

Runtime.getRuntime().availableProcessors(),

2L,

TimeUnit.SECONDS,

new LinkedBlockingQueue<>(3),

Executors.defaultThreadFactory(),

new ThreadPoolExecutor.AbortPolicy()

);

Future<Integer> result = executor.submit(new MyTask2());

try{

System.out.println("result is " + result.get());

}catch(Exception e){

e.printStackTrace();

}

executor.execute(new MyTask());

executor.shutdown(); // let the JVM exit once the submitted tasks have finished

}

}

class MyTask extends Thread{

@Override

public void run(){

System.out.println("MyTask executed");

}

}

class MyTask2 implements Callable<Integer>{

@Override

public Integer call() throws Exception{

System.out.println("MyTask2 executed");

return 1;

}

}

10, Concurrent security collection

List:

java.util package

List<String> list = new Vector<>();

List<String> list = Collections.synchronizedList(new ArrayList<>());

java.util.concurrent package

List<String> list = new CopyOnWriteArrayList<>();

Set:

java.util package

Set<String> set = Collections.synchronizedSet(new HashSet<>());

java.util.concurrent package

Set<String> set = new CopyOnWriteArraySet<>();

Map:

java.util package

Map<String,String> map = new Hashtable<>();

Map<String,String> map = Collections.synchronizedMap(new HashMap<>());

java.util.concurrent package

Map<String,String> map = new ConcurrentHashMap<>();
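To show why these collections matter, the sketch below counts from several threads into a ConcurrentHashMap. The names ConcurrentCountDemo and count() are illustrative. merge() performs the read-modify-write as one atomic step, so no increments are lost; a plain HashMap in the same code could lose updates or corrupt itself.

```java
import java.util.Map;
import java.util.concurrent.ConcurrentHashMap;

class ConcurrentCountDemo {
    // Each of `threads` workers increments the same key `perThread` times.
    static int count(int threads, int perThread) {
        Map<String, Integer> hits = new ConcurrentHashMap<>();
        Thread[] workers = new Thread[threads];
        for (int t = 0; t < threads; t++) {
            workers[t] = new Thread(() -> {
                for (int i = 0; i < perThread; i++) {
                    hits.merge("hits", 1, Integer::sum);   // atomic on ConcurrentHashMap
                }
            });
            workers[t].start();
        }
        try {
            for (Thread worker : workers) {
                worker.join();
            }
        } catch (InterruptedException e) {
            throw new IllegalStateException(e);
        }
        return hits.get("hits");
    }

    public static void main(String[] args) {
        System.out.println(count(4, 1000));   // 4000
    }
}
```

Separate get() and put() calls would not be safe here even on a ConcurrentHashMap, because another thread could update the key between the two calls.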

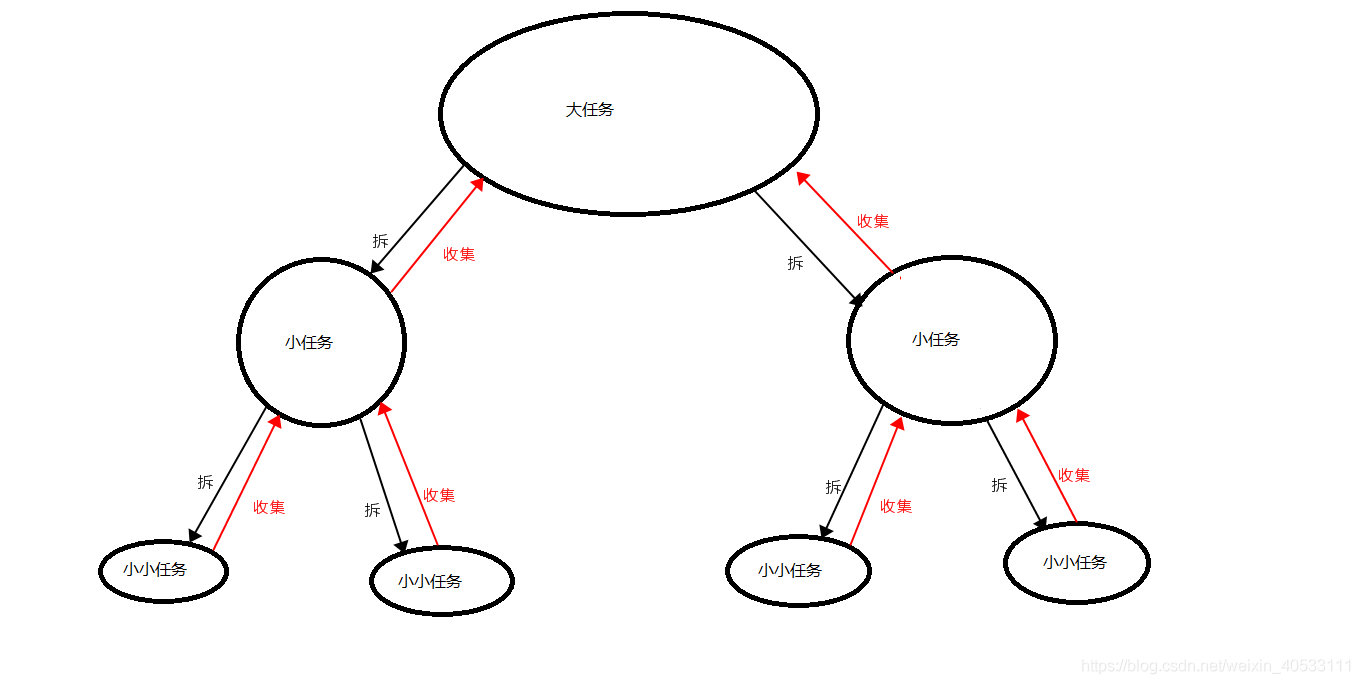

11, ForkJoin framework

1. Introduction

The Fork/Join framework, introduced in Java 7, executes tasks in parallel: it divides a large task into several small tasks and then combines the results of the small tasks to obtain the result of the large task. As shown in the figure:

2. Work-stealing algorithm

The strength of Fork/Join lies in this algorithm. To do a large task, we can divide it into several independent subtasks, put these subtasks into different queues, and create a separate thread for each queue to execute the tasks in it. Threads and queues correspond one to one; for example, thread A is responsible for the tasks in queue A. Some threads finish the tasks in their own queue first, while tasks are still waiting in the queues of other threads. Rather than wait, a thread that has finished its work helps the others by stealing a task from another thread's queue. At that point two threads access the same queue, so to reduce contention between the stealing thread and the thread being stolen from, a double-ended queue is usually used: the thread that owns the queue always takes tasks from the head, and the stealing thread always takes tasks from the tail.

3. Mode of use

ForkJoinTask class: it is the task itself. It is used to create tasks and provides core methods such as fork(), join(), compute().

Two implementation subclasses are provided:

RecursiveTask: when a return value is required.

RecursiveAction: when no return value is required.

ForkJoinPool class: ForkJoinTask needs to be executed through ForkJoinPool. The subtasks separated from the task will be added to the double ended queue maintained by the current working thread and enter the head of the queue. When there is no task in the queue of a worker thread, it will randomly obtain a task from the end of the queue of other worker threads.

Compute the sum of the integers from 1 to 1001:

import java.util.concurrent.*;

public class ForkJoinTest0118{

public static void main(String[] args){

// Use ForkJoinPool to perform tasks

ForkJoinPool forkJoinPool = new ForkJoinPool();

// This is similar to submitting a task to a thread pool

ForkJoinTask<Integer> task = forkJoinPool.submit(new MyForkJoinTask(1, 1001));

try{

Integer result = task.get();

System.out.println(result);

}catch(Exception e){

e.printStackTrace();

}

}

}

// Create a task class that inherits the RecursiveTask or RecursiveAction class

class MyForkJoinTask extends RecursiveTask<Integer>{

private static final Integer MAX = 200;

private Integer startValue;

private Integer endValue;

public MyForkJoinTask(Integer startValue, Integer endValue){

this.startValue = startValue;

this.endValue = endValue;

}

// Override compute method

@Override

protected Integer compute(){

// The calculation is performed when certain conditions are met

if(endValue - startValue < MAX) {

System.out.println("Part to start calculation: startValue = " + startValue + ";endValue = " + endValue);

Integer totalValue = 0;

for(int index = this.startValue ; index <= this.endValue ; index++) {

totalValue += index;

}

return totalValue;

}else {

// If the task is too large, perform the Fork operation

// Create two new ForkJoinTasks and call fork() on each

MyForkJoinTask subTask1 = new MyForkJoinTask(startValue, (startValue + endValue) / 2);

subTask1.fork();

MyForkJoinTask subTask2 = new MyForkJoinTask((startValue + endValue) / 2 + 1 , endValue);

subTask2.fork();

// When returning, perform task merging and call the join method

return subTask1.join() + subTask2.join();

}

}

}
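A common variation on the example above forks only one half and computes the other half in the current thread, so the worker does useful work instead of only waiting in join(). The following is a sketch of that variation, not the original author's code: RangeSum, THRESHOLD and the static sum() helper are illustrative names, long is used for the total, and the common pool is used instead of a new ForkJoinPool.

```java
import java.util.concurrent.ForkJoinPool;
import java.util.concurrent.RecursiveTask;

class RangeSum extends RecursiveTask<Long> {
    private static final int THRESHOLD = 200;   // ranges smaller than this are summed directly
    private final int lo;
    private final int hi;

    RangeSum(int lo, int hi) {
        this.lo = lo;
        this.hi = hi;
    }

    @Override
    protected Long compute() {
        if (hi - lo < THRESHOLD) {
            long total = 0;
            for (int i = lo; i <= hi; i++) {
                total += i;
            }
            return total;
        }
        int mid = lo + (hi - lo) / 2;
        RangeSum left = new RangeSum(lo, mid);
        RangeSum right = new RangeSum(mid + 1, hi);
        left.fork();                             // queue the left half for another worker
        long rightTotal = right.compute();       // do the right half in this thread
        return left.join() + rightTotal;         // then combine with the left result
    }

    // Sums the integers lo..hi (inclusive) on the common Fork/Join pool.
    static long sum(int lo, int hi) {
        return ForkJoinPool.commonPool().invoke(new RangeSum(lo, hi));
    }

    public static void main(String[] args) {
        System.out.println(sum(1, 1001));        // 501501
    }
}
```

invoke() submits the task and waits for its result, which replaces the submit() plus get() pair when the caller has nothing else to do in the meantime.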