Preface

The JDK provides both blocking queues and non-blocking queues.

Blocking queue: when a thread adds an element and the queue is full, the producing thread blocks and is placed on the condition queue of an AQS Condition. After an element is removed, the queue tries to wake a blocked producer. That thread is moved from the condition queue to the AQS sync (waiting) queue, where it competes for the lock again.

Removal works the same way. If the queue is empty, the consuming thread blocks and enters the condition queue. After an element is added, a blocked consumer is woken: it moves from the condition queue to the sync queue and waits there to reacquire the lock before it can continue.
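A small demonstration of both cases, using the JDK's ArrayBlockingQueue with capacity 1 so that the queue is full after a single element. BlockingDemo is only an illustrative wrapper. The exact interleaving depends on thread scheduling, but the FIFO result does not.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.concurrent.ArrayBlockingQueue;

class BlockingDemo {
    static List<String> run() throws InterruptedException {
        // Capacity 1: the queue is full after one put()
        ArrayBlockingQueue<String> queue = new ArrayBlockingQueue<>(1);

        Thread producer = new Thread(() -> {
            try {
                queue.put("a"); // succeeds at once; the queue is now full
                queue.put("b"); // blocks until the consumer takes "a"
            } catch (InterruptedException e) {
                Thread.currentThread().interrupt();
            }
        });
        producer.start();

        List<String> taken = new ArrayList<>();
        taken.add(queue.take()); // blocks if the producer has not put "a" yet
        taken.add(queue.take()); // waits for the producer's second put()
        producer.join();
        return taken;
    }

    public static void main(String[] args) throws InterruptedException {
        System.out.println(run()); // prints [a, b]
    }
}
```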

Non-blocking queue: the non-blocking queues in J.U.C (java.util.concurrent) are implemented with CAS. If a CAS fails during an enqueue, the thread simply retries (spins) instead of blocking and entering a condition queue.
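The retry-instead-of-block pattern can be sketched on its own with AtomicInteger.compareAndSet. CasCounter is a hypothetical class for illustration only; in real code AtomicInteger.incrementAndGet() already provides this.

```java
import java.util.concurrent.atomic.AtomicInteger;

// Hypothetical counter that retries a failed CAS instead of blocking.
class CasCounter {
    private final AtomicInteger value = new AtomicInteger();

    int increment() {
        for (;;) {
            int current = value.get();
            int next = current + 1;
            if (value.compareAndSet(current, next)) {
                return next; // our CAS won: no lock, no await()
            }
            // Another thread changed the value first: re-read and retry (spin)
        }
    }

    int get() {
        return value.get();
    }
}
```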

Besides blocking and non-blocking queues, the JDK also provides priority queues and delay queues, such as PriorityBlockingQueue and DelayQueue.
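A quick look at one of these, assuming the standard PriorityBlockingQueue with natural ordering: elements come out smallest first rather than in insertion order. PriorityDemo is an illustrative name.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.concurrent.PriorityBlockingQueue;

class PriorityDemo {
    static List<Integer> drainInPriorityOrder() {
        // Unbounded; ordered by natural ordering (smallest first), not by insertion order
        PriorityBlockingQueue<Integer> queue = new PriorityBlockingQueue<>();
        queue.offer(5);
        queue.offer(1);
        queue.offer(3);

        List<Integer> out = new ArrayList<>();
        Integer next;
        while ((next = queue.poll()) != null) { // poll() returns null once empty
            out.add(next);
        }
        return out; // [1, 3, 5]
    }
}
```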

Blocking queue

Among the blocking queues, ArrayBlockingQueue and LinkedBlockingQueue are the ones I know best, so I will use these two as examples.

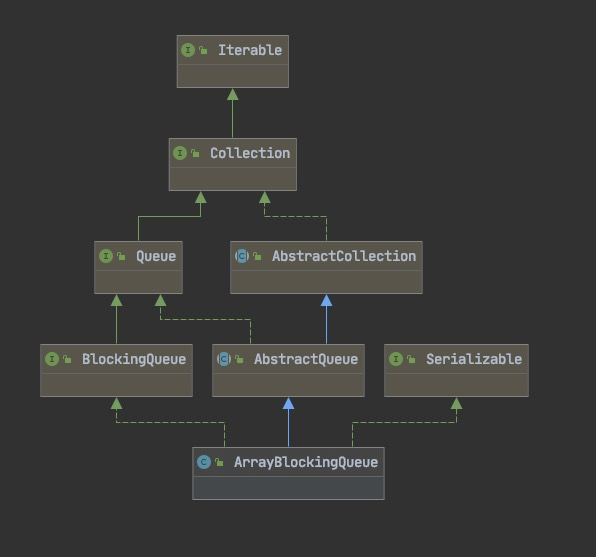

ArrayBlockingQueue

As its name suggests, ArrayBlockingQueue is backed by an array and is bounded: its capacity is fixed when it is constructed. So how is blocking implemented?

ArrayBlockingQueue maintains one ReentrantLock and two Conditions:

```java
/** Main lock guarding all access */
final ReentrantLock lock;

/** Condition for waiting takes */
private final Condition notEmpty;

/** Condition for waiting puts */
private final Condition notFull;
```
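To see how one lock and two Conditions fit together, here is a minimal bounded buffer built the same way. It is a simplified sketch, not the JDK source: SimpleArrayBlockingQueue is hypothetical and leaves out iterators, timed operations and fairness. Like ArrayBlockingQueue, it stores elements in a circular array and waits inside a while loop, so every wake-up is followed by a re-check of the condition.

```java
import java.util.concurrent.locks.Condition;
import java.util.concurrent.locks.ReentrantLock;

// Hypothetical simplified bounded buffer: one lock, one condition per wait reason.
class SimpleArrayBlockingQueue<E> {
    private final Object[] items;
    private int takeIndex; // next slot to take from
    private int putIndex;  // next slot to put into
    private int count;     // number of elements currently stored

    private final ReentrantLock lock = new ReentrantLock();
    private final Condition notEmpty = lock.newCondition(); // consumers wait here
    private final Condition notFull = lock.newCondition();  // producers wait here

    SimpleArrayBlockingQueue(int capacity) {
        if (capacity <= 0) throw new IllegalArgumentException();
        items = new Object[capacity];
    }

    void put(E e) throws InterruptedException {
        if (e == null) throw new NullPointerException();
        lock.lockInterruptibly();
        try {
            while (count == items.length) {
                notFull.await(); // releases the lock and parks until signalled
            }
            items[putIndex] = e;
            putIndex = (putIndex + 1) % items.length; // wrap around
            count++;
            notEmpty.signal(); // one waiting consumer may now proceed
        } finally {
            lock.unlock();
        }
    }

    @SuppressWarnings("unchecked")
    E take() throws InterruptedException {
        lock.lockInterruptibly();
        try {
            while (count == 0) {
                notEmpty.await();
            }
            E e = (E) items[takeIndex];
            items[takeIndex] = null; // drop the reference so it can be collected
            takeIndex = (takeIndex + 1) % items.length;
            count--;
            notFull.signal(); // one waiting producer may now proceed
            return e;
        } finally {
            lock.unlock();
        }
    }
}
```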

Enqueue source code (offer)

```java
/**
 * Enqueue operation (offer):
 * 1. Acquire the lock.
 * 2. If the number of elements equals the array length, the queue is full:
 *    return false immediately (offer() does not block; put() would wait on notFull instead).
 * 3. Otherwise enqueue the element and return true.
 * 4. Release the lock.
 */
public boolean offer(E e) {
    checkNotNull(e);
    final ReentrantLock lock = this.lock;
    lock.lock();
    try {
        if (count == items.length)
            return false;
        else {
            enqueue(e);
            return true;
        }
    } finally {
        lock.unlock();
    }
}
```
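Because offer() returns false on a full queue, the caller picks the behavior it wants by choosing the method. A sketch with the JDK's ArrayBlockingQueue (FullQueueDemo is an illustrative name and the 50 ms timeout is arbitrary). put() would wait indefinitely here, so it is left out.

```java
import java.util.concurrent.ArrayBlockingQueue;
import java.util.concurrent.TimeUnit;

class FullQueueDemo {
    static String describe() throws InterruptedException {
        ArrayBlockingQueue<String> queue = new ArrayBlockingQueue<>(1);
        queue.offer("first"); // fills the queue

        boolean offered = queue.offer("second");                          // false immediately
        boolean timed = queue.offer("second", 50, TimeUnit.MILLISECONDS); // waits up to 50 ms, then false
        String addResult;
        try {
            queue.add("second"); // add() throws instead of returning false
            addResult = "added";
        } catch (IllegalStateException e) {
            addResult = "IllegalStateException";
        }
        return offered + " " + timed + " " + addResult; // "false false IllegalStateException"
    }
}
```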

Dequeue source code (take)

```java
/**
 * Dequeue operation (take):
 * 1. Acquire the lock (interruptibly).
 * 2. While the queue is empty, call notEmpty.await(): the thread releases the lock
 *    and sleeps on the condition queue until a producer signals it.
 * 3. Once the queue is not empty, dequeue normally: remove and return the element
 *    at takeIndex, the head of the circular array.
 * 4. Release the lock.
 * @return the head of this queue
 * @throws InterruptedException if interrupted while waiting
 */
public E take() throws InterruptedException {
    final ReentrantLock lock = this.lock;
    lock.lockInterruptibly();
    try {
        while (count == 0)
            notEmpty.await();
        return dequeue();
    } finally {
        lock.unlock();
    }
}
```
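Because take() acquires the lock with lockInterruptibly() and waits with await(), a consumer stuck on an empty queue can be released by interrupting it. A sketch using the JDK's ArrayBlockingQueue (InterruptTakeDemo is an illustrative name). If the interrupt arrives before the consumer even reaches take(), lockInterruptibly() sees the pending interrupt and throws at once, so the result is the same either way.

```java
import java.util.concurrent.ArrayBlockingQueue;
import java.util.concurrent.atomic.AtomicBoolean;

class InterruptTakeDemo {
    static boolean takeWasInterrupted() throws InterruptedException {
        ArrayBlockingQueue<String> queue = new ArrayBlockingQueue<>(1); // stays empty
        AtomicBoolean interrupted = new AtomicBoolean(false);

        Thread consumer = new Thread(() -> {
            try {
                queue.take(); // empty queue: parks in notEmpty.await()
            } catch (InterruptedException e) {
                interrupted.set(true); // the interrupt surfaces as an exception
            }
        });
        consumer.start();
        consumer.interrupt(); // release the consumer without ever adding an element
        consumer.join();
        return interrupted.get();
    }
}
```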

As you can see, ArrayBlockingQueue uses the same lock for both enqueue and dequeue, so at any moment only one thread can be enqueuing or dequeuing.

Producers and consumers therefore contend for a single lock, which can limit throughput when both are busy. LinkedBlockingQueue takes a different approach.

LinkedBlockingQueue

As its name suggests, LinkedBlockingQueue uses a linked list internally. If no capacity is specified at construction, the capacity defaults to Integer.MAX_VALUE, so the queue is effectively unbounded and put() will in practice never block. Blocking on insertion therefore only matters when a capacity is specified.

Removal is different: take() blocks whenever the list is empty, whether or not a capacity was given.

Here are the two constructors. When no capacity is specified, capacity is set to Integer.MAX_VALUE:

```java
public LinkedBlockingQueue() {
    this(Integer.MAX_VALUE);
}

/**
 * Creates a {@code LinkedBlockingQueue} with the given (fixed) capacity.
 *
 * @param capacity the capacity of this queue
 * @throws IllegalArgumentException if {@code capacity} is not greater
 *         than zero
 */
public LinkedBlockingQueue(int capacity) {
    if (capacity <= 0) throw new IllegalArgumentException();
    this.capacity = capacity;
    last = head = new Node<E>(null);
}
```
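The effect of the two constructors can be checked through remainingCapacity(), which for LinkedBlockingQueue returns the capacity minus the current size. CapacityDemo is an illustrative wrapper.

```java
import java.util.concurrent.LinkedBlockingQueue;

class CapacityDemo {
    // No-arg constructor: capacity is Integer.MAX_VALUE, so this returns Integer.MAX_VALUE
    static int defaultRemainingCapacity() {
        return new LinkedBlockingQueue<String>().remainingCapacity();
    }

    // Capacity 2 with one element stored leaves room for 1 more
    static int boundedRemainingAfterOneOffer() {
        LinkedBlockingQueue<String> bounded = new LinkedBlockingQueue<>(2);
        bounded.offer("a");
        return bounded.remainingCapacity();
    }

    // The constructor rejects capacity <= 0
    static boolean zeroCapacityRejected() {
        try {
            new LinkedBlockingQueue<String>(0);
            return false;
        } catch (IllegalArgumentException e) {
            return true;
        }
    }
}
```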

The biggest difference from ArrayBlockingQueue is that LinkedBlockingQueue maintains two locks and two Conditions internally. Enqueue and dequeue therefore do not share a lock, which can improve throughput when producers and consumers run at the same time. Because the element count is updated under both locks, it is kept in an AtomicInteger rather than a plain int.

The class maintains two locks and two Conditions:

```java
/** Lock held by take, poll, etc */
private final ReentrantLock takeLock = new ReentrantLock();

/** Wait queue for waiting takes */
private final Condition notEmpty = takeLock.newCondition();

/** Lock held by put, offer, etc */
private final ReentrantLock putLock = new ReentrantLock();

/** Wait queue for waiting puts */
private final Condition notFull = putLock.newCondition();
```
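The two-lock idea can be sketched in a few dozen lines. This is a simplified model of LinkedBlockingQueue, not its source: TwoLockQueue is hypothetical and supports only put() and take(). Producers touch only the tail under putLock, consumers touch only the head under takeLock, and the shared AtomicInteger count tells each side when it has to signal the other.

```java
import java.util.concurrent.atomic.AtomicInteger;
import java.util.concurrent.locks.Condition;
import java.util.concurrent.locks.ReentrantLock;

// Hypothetical, simplified two-lock linked queue modelled on LinkedBlockingQueue.
class TwoLockQueue<E> {
    private static final class Node<E> {
        E item;
        Node<E> next;
        Node(E item) { this.item = item; }
    }

    private final int capacity;
    // Updated under both locks, so it must be atomic rather than a plain int
    private final AtomicInteger count = new AtomicInteger();
    private Node<E> head; // dummy node; head.next is the first element (consumer side)
    private Node<E> last; // tail node (producer side)

    private final ReentrantLock takeLock = new ReentrantLock();
    private final Condition notEmpty = takeLock.newCondition();
    private final ReentrantLock putLock = new ReentrantLock();
    private final Condition notFull = putLock.newCondition();

    TwoLockQueue(int capacity) {
        if (capacity <= 0) throw new IllegalArgumentException();
        this.capacity = capacity;
        last = head = new Node<>(null);
    }

    void put(E e) throws InterruptedException {
        if (e == null) throw new NullPointerException();
        Node<E> node = new Node<>(e);
        int c;
        putLock.lockInterruptibly();
        try {
            while (count.get() == capacity) {
                notFull.await();
            }
            last = last.next = node;     // link at the tail
            c = count.getAndIncrement(); // size before this put
            if (c + 1 < capacity) {
                notFull.signal();        // still room: let another producer in
            }
        } finally {
            putLock.unlock();
        }
        if (c == 0) {                    // was empty: a consumer may be waiting
            takeLock.lock();
            try {
                notEmpty.signal();
            } finally {
                takeLock.unlock();
            }
        }
    }

    E take() throws InterruptedException {
        E x;
        int c;
        takeLock.lockInterruptibly();
        try {
            while (count.get() == 0) {
                notEmpty.await();
            }
            Node<E> first = head.next;
            head = first;                // first becomes the new dummy node
            x = first.item;
            first.item = null;
            c = count.getAndDecrement(); // size before this take
            if (c > 1) {
                notEmpty.signal();       // more elements left: let another consumer in
            }
        } finally {
            takeLock.unlock();
        }
        if (c == capacity) {             // was full: a producer may be waiting
            putLock.lock();
            try {
                notFull.signal();
            } finally {
                putLock.unlock();
            }
        }
        return x;
    }
}
```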

Now let's look at the LinkedBlockingQueue source code.

Note that in LinkedBlockingQueue, offer() never blocks the thread: if the number of elements has already reached capacity, it returns false. put(), by contrast, calls notFull.await() when the queue is full and enters the condition queue. I won't paste put() here; the basic idea is the same.

```java
/**
 * Enqueue (offer):
 * 1. If the element is null, throw NullPointerException.
 * 2. Acquire putLock.
 * 3. If the current number of elements is below capacity, link the node at the tail.
 * 4. After releasing putLock, if the queue was empty before this insert,
 *    signal a waiting consumer.
 *
 * @throws NullPointerException if the specified element is null
 */
public boolean offer(E e) {
    if (e == null) throw new NullPointerException();
    final AtomicInteger count = this.count;
    if (count.get() == capacity)
        return false;
    int c = -1;
    Node<E> node = new Node<E>(e);
    final ReentrantLock putLock = this.putLock;
    putLock.lock();
    try {
        if (count.get() < capacity) {
            // enqueue() links the node at the tail of the linked list
            enqueue(node);
            c = count.getAndIncrement();
            // If there is still room after this insert, wake another waiting producer
            if (c + 1 < capacity)
                notFull.signal();
        }
    } finally {
        putLock.unlock();
    }
    // c is the count before the insert (or still -1 if nothing was inserted),
    // so c == 0 means the queue was empty and a consumer may be waiting
    if (c == 0)
        signalNotEmpty();
    return c >= 0;
}
```

```java
/**
 * Dequeue (take):
 * 1. Acquire takeLock (interruptibly).
 * 2. While the linked list is empty, await on notEmpty.
 * 3. Otherwise dequeue the head element and decrement the count.
 * 4. If more than one element was present before this take (c > 1),
 *    wake another waiting consumer.
 * 5. Release takeLock.
 * 6. Finally, if the queue was full before this take (c == capacity),
 *    wake a waiting producer.
 *
 * @return the head of this queue
 * @throws InterruptedException if interrupted while waiting
 */
public E take() throws InterruptedException {
    E x;
    int c = -1;
    final AtomicInteger count = this.count;
    final ReentrantLock takeLock = this.takeLock;
    takeLock.lockInterruptibly();
    try {
        while (count.get() == 0) {
            notEmpty.await();
        }
        x = dequeue();
        c = count.getAndDecrement();
        if (c > 1)
            notEmpty.signal();
    } finally {
        takeLock.unlock();
    }
    if (c == capacity)
        signalNotFull();
    return x;
}
```

I had long been unsure what "blocking" actually means in a blocking queue. After reading through the source, my understanding is that it refers to two things:

1. When a thread tries to acquire the lock, it may fail. It then enters the AQS sync (waiting) queue and waits for its turn to acquire the lock.

2. After acquiring the lock, a consuming thread blocks if the queue is empty, and a producing thread blocks if the queue is full. Blocking here means the thread releases the lock and enters the condition queue. When the queue becomes non-empty (or non-full), the waiting thread is signaled and, once it reacquires the lock, continues its operation.
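Both kinds of waiting can be observed from outside the thread. In both cases the AQS code ends up parking the thread with LockSupport.park, which Thread.getState() reports as WAITING. A sketch showing a consumer parked in take() on an empty LinkedBlockingQueue (BlockedStateDemo is an illustrative name):

```java
import java.util.concurrent.LinkedBlockingQueue;

class BlockedStateDemo {
    // Returns the state observed while the consumer sat inside take() on an empty queue
    static Thread.State observeBlockedConsumer() throws InterruptedException {
        LinkedBlockingQueue<String> queue = new LinkedBlockingQueue<>();
        Thread consumer = new Thread(() -> {
            try {
                queue.take(); // empty queue: parks in notEmpty.await()
            } catch (InterruptedException e) {
                Thread.currentThread().interrupt();
            }
        });
        consumer.start();

        // Just after start() the consumer is RUNNABLE; poll until it has parked
        Thread.State seen;
        do {
            Thread.sleep(1);
            seen = consumer.getState();
        } while (seen != Thread.State.WAITING);

        queue.put("wake up"); // signals notEmpty; the consumer reacquires takeLock and returns
        consumer.join();
        return seen;
    }
}
```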

Non-blocking queue

I'll study non-blocking queues through ConcurrentLinkedQueue. "Non-blocking" simply means that enqueue and dequeue are done with CAS: if multiple threads enqueue at the same time, CAS guarantees that only one of them succeeds on a given node, and the others keep retrying. This raises a question:

Is constant spinning like this really more efficient than blocking? If the CAS retries go on for a long time, won't they eat up CPU?
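One way to explore this question is to count how many CAS attempts fail under contention. The sketch below is a hypothetical experiment, not a benchmark: each failed compareAndSet is a loop iteration that did no useful work, and the failure count depends on thread count, hardware and timing, so no particular number should be expected. CasContentionDemo is an illustrative name.

```java
import java.util.concurrent.atomic.AtomicInteger;
import java.util.concurrent.atomic.LongAdder;

class CasContentionDemo {
    private final AtomicInteger value = new AtomicInteger();
    private final LongAdder failedAttempts = new LongAdder(); // CAS attempts that lost a race

    void increment() {
        for (;;) {
            int current = value.get();
            if (value.compareAndSet(current, current + 1)) {
                return;
            }
            failedAttempts.increment(); // wasted iteration: re-read and retry
        }
    }

    // Runs 'threads' threads doing 'perThread' increments each; returns {final value, failed CAS count}
    static long[] run(int threads, int perThread) throws InterruptedException {
        CasContentionDemo demo = new CasContentionDemo();
        Thread[] workers = new Thread[threads];
        for (int i = 0; i < threads; i++) {
            workers[i] = new Thread(() -> {
                for (int j = 0; j < perThread; j++) {
                    demo.increment();
                }
            });
            workers[i].start();
        }
        for (Thread w : workers) {
            w.join();
        }
        return new long[] { demo.value.get(), demo.failedAttempts.sum() };
    }

    public static void main(String[] args) throws InterruptedException {
        long[] r = run(8, 100_000);
        // The failure count varies from run to run and machine to machine
        System.out.println("value=" + r[0] + " failedCAS=" + r[1]);
    }
}
```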

Let's first look at the enqueue source code:

```java
public boolean offer(E e) {
    checkNotNull(e);
    final Node<E> newNode = new Node<E>(e);

    /*
     * Initially t = p = tail, and q = p.next.
     * If an insert attempt fails, the for loop retries until it succeeds.
     */
    for (Node<E> t = tail, p = t;;) {
        Node<E> q = p.next;

        /*
         * If p.next == null, p is currently the last node:
         * CAS newNode directly into p.next.
         */
        if (q == null) {
            // p is last node
            if (p.casNext(null, newNode)) {
                // Successful CAS is the linearization point
                // for e to become an element of this queue,
                // and for newNode to become "live".
                if (p != t) // hop two nodes at a time
                    casTail(t, newNode);  // Failure is OK.
                return true;
            }
            // Lost CAS race to another thread; re-read next
        }

        /*
         * p == q means p.next points back to p itself: p has been unlinked
         * from the list (a removed node is made to point to itself),
         * so restart from the current tail or from head.
         */
        else if (p == q)
            // We have fallen off list. If tail is unchanged, it
            // will also be off-list, in which case we need to
            // jump to head, from which all live nodes are always
            // reachable. Else the new tail is a better bet.
            p = (t != (t = tail)) ? t : head;
        else
            // Check for tail updates after two hops.
            p = (p != t && t != (t = tail)) ? t : q;
    }
}
```
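For comparison, ConcurrentLinkedQueue's Javadoc states that its algorithm is based on the Michael-Scott non-blocking queue. Below is a simplified version of that algorithm written with AtomicReference instead of the JDK's internal CAS helpers. SimpleLockFreeQueue is hypothetical and leaves out ConcurrentLinkedQueue's optimizations, such as updating tail only every other insert ("hop two nodes at a time") and self-linking removed nodes. Note the "help" step: a thread that finds tail lagging advances it on the other thread's behalf instead of waiting, which is what keeps the queue non-blocking.

```java
import java.util.concurrent.atomic.AtomicReference;

// Hypothetical simplified Michael-Scott lock-free queue.
class SimpleLockFreeQueue<E> {
    private static final class Node<E> {
        final E item;
        // AtomicReference gives volatile reads/writes plus compareAndSet
        final AtomicReference<Node<E>> next = new AtomicReference<>(null);
        Node(E item) { this.item = item; }
    }

    private final AtomicReference<Node<E>> head;
    private final AtomicReference<Node<E>> tail;

    SimpleLockFreeQueue() {
        Node<E> dummy = new Node<>(null); // head and tail start at the same dummy node
        head = new AtomicReference<>(dummy);
        tail = new AtomicReference<>(dummy);
    }

    boolean offer(E e) {
        if (e == null) throw new NullPointerException();
        Node<E> newNode = new Node<>(e);
        for (;;) {
            Node<E> t = tail.get();
            Node<E> next = t.next.get();
            if (t != tail.get()) continue;          // tail moved under us: re-read
            if (next == null) {
                // t is the last node: try to link newNode after it
                if (t.next.compareAndSet(null, newNode)) {
                    tail.compareAndSet(t, newNode); // swing tail; failure means someone helped
                    return true;
                }
            } else {
                tail.compareAndSet(t, next);        // tail is lagging: help advance it, then retry
            }
        }
    }

    E poll() {
        for (;;) {
            Node<E> h = head.get();
            Node<E> t = tail.get();
            Node<E> first = h.next.get();
            if (h != head.get()) continue;          // head moved: re-read
            if (first == null) return null;         // only the dummy node: queue is empty
            if (h == t) {
                tail.compareAndSet(t, first);       // tail lags behind a new node: help
                continue;
            }
            if (head.compareAndSet(h, first)) {
                return first.item;                  // first becomes the new dummy node
            }
        }
    }
}
```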

As you can see, enqueueing involves no locking and no await(), unlike in the blocking queues.

Moreover, in ConcurrentLinkedQueue the head and tail references are declared volatile, so that writes made by one thread are visible to the others:

```java
private transient volatile Node<E> head;
private transient volatile Node<E> tail;
```

The item and next fields inside Node are declared volatile too:

```java
volatile E item;
volatile Node<E> next;
```