JDK source code learning 06: ConcurrentHashMap analysis

The principles behind ConcurrentHashMap are very complex, so I can only record what I understand. As we all know, the thread safety of ConcurrentHashMap (the JDK 8 version analyzed here) is implemented with synchronized + CAS. Let's take a look.

A brief reading of the source comments

Overview: The primary design goal of this hash table is to maintain concurrent readability (typically the get() method, but also iterators and related methods) while minimizing update contention. Secondary goals are to keep space consumption about the same as or better than java.util.HashMap, and to support high initial insertion rates on an empty table by many threads.

This map usually acts as a binned (bucketed) hash table. Each key-value mapping is held in a Node. Most nodes are instances of the basic Node class, with hash, key, value, and next fields. However, various subclasses exist: TreeNodes are arranged in balanced trees, not lists. TreeBins hold the roots of sets of TreeNodes. ForwardingNodes are placed at the heads of bins during resizing. ReservationNodes are used as placeholders while establishing values in computeIfAbsent and related methods. The types TreeBin, ForwardingNode, and ReservationNode do not hold normal user keys, values, or hashes, and are readily distinguishable during search and similar operations because they have negative hash fields and null key and value fields.

Hash function spread

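It is worth noting that in ConcurrentHashMap the hash field of a node can hold the following kinds of values:

- Non-negative: a normal node, either a plain bin head (the first node of a linked list) or another list node. TreeNodes inside a tree also keep their normal, non-negative hash.

- -1 (MOVED): a ForwardingNode; the bin has already been migrated to the new table during a resize

- -2 (TREEBIN): a TreeBin; the bin holds a red-black tree

- -3 (RESERVED): a ReservationNode, a temporary placeholder used by computeIfAbsent and compute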

```java
// Like HashMap.hash(), XOR the high 16 bits into the low 16 bits;
// then force the sign bit to 0 so that a normal node's hash is never negative
static final int spread(int h) {
    return (h ^ (h >>> 16)) & HASH_BITS;
}
```
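To see both effects of spread() at once, here is a small standalone sketch. SpreadDemo is a hypothetical class; only the spread formula and the HASH_BITS value are copied from the JDK 8 source. The input hash has all of its information in the high 16 bits, which a 16-slot table would otherwise ignore.

```java
// Standalone copy of the JDK 8 spread() logic (SpreadDemo itself is hypothetical)
class SpreadDemo {
    static final int HASH_BITS = 0x7fffffff; // same value as in the JDK source

    static int spread(int h) {
        return (h ^ (h >>> 16)) & HASH_BITS;
    }

    public static void main(String[] args) {
        int h = 0xFFFF0000;                      // negative hash, low 16 bits all zero
        int n = 16;                              // table length (a power of two)
        System.out.println((n - 1) & h);         // 0: the raw hash ignores the high bits
        System.out.println((n - 1) & spread(h)); // 15: the high bits now affect the index
        System.out.println(spread(h) >= 0);      // true: the sign bit was cleared
    }
}
```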

Static constants

The other constants are similar to those in HashMap, so we skip them and focus on the core fields here.

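- Once a bin has been migrated during a resize, its head node is a ForwardingNode whose hash is MOVED

- If the bin is a tree, its head node is a TreeBin whose hash is TREEBIN

- A ReservationNode placeholder (used by computeIfAbsent and compute) has hash RESERVED

- HASH_BITS is the 32-bit int 0x7fffffff: only the highest (sign) bit is 0 and all other bits are 1, so h & HASH_BITS keeps just the low 31 bits of the hash. Why? Because the hash field is used not only as a hash but also as a marker: normal nodes must have non-negative hashes so that the negative values stay free for the special node types above.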

```java
static final int MOVED     = -1; // hash for forwarding nodes
static final int TREEBIN   = -2; // hash for roots of trees
static final int RESERVED  = -3; // hash for transient reservations
static final int HASH_BITS = 0x7fffffff; // usable bits of normal node hash
```
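The marker values above can be read as a simple dispatch on the bin head's hash. The sketch below is a hypothetical helper (BinKind and classify are not JDK names); it only reuses the three constant values to show how a reader of the table could tell the node types apart.

```java
// Hypothetical helper (not in the JDK): classify a bin head by its hash field,
// using the same constant values as JDK 8 ConcurrentHashMap
class BinKind {
    static final int MOVED = -1, TREEBIN = -2, RESERVED = -3;

    static String classify(int headHash) {
        if (headHash >= 0) return "plain Node (linked list)";
        switch (headHash) {
            case MOVED:    return "ForwardingNode (bin already migrated)";
            case TREEBIN:  return "TreeBin (red-black tree)";
            case RESERVED: return "ReservationNode (compute placeholder)";
            default:       return "unknown";
        }
    }

    public static void main(String[] args) {
        for (int h : new int[] {42, MOVED, TREEBIN, RESERVED})
            System.out.println(h + " -> " + classify(h));
    }
}
```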


Core data structure

Node type introduction

More details follow later.

- Node: ordinary node

- TreeNode: tree node

- TreeBin: container for a tree of TreeNodes; holds the root TreeNode

- ReservationNode: used as a placeholder

- ForwardingNode: used during resizing

val and next are declared volatile to guarantee visibility across threads: a write to val (a new value) or to next (a newly appended node) is immediately visible to readers, which is what lets get() traverse a bin without locking.

sizeCtl is the core field, and it is quite hard to understand.

```java
static class Node<K,V> implements Map.Entry<K,V> {
    final int hash;
    final K key;
    volatile V val;
    volatile Node<K,V> next;
    // constructor and methods omitted
}

// The bin array (the bucket table)
transient volatile Node<K,V>[] table;

// The next table to use; non-null only while resizing
private transient volatile Node<K,V>[] nextTable;

// Base counter value, used mainly when there is no contention, but also as a
// fallback during table initialization races. Updated via CAS.
private transient volatile long baseCount;

/**
 * Table initialization and resizing control. When negative, the table is
 * being initialized or resized: -1 for initialization, otherwise
 * -(1 + the number of active resizing threads). Otherwise, when the table
 * is null, holds the initial table size to use upon creation, or 0 for the
 * default. After initialization, holds the next element count value upon
 * which to resize the table.
 *
 * Note: in the JDK 8 code the "resizing" value is actually encoded as
 * (resizeStamp(n) << RESIZE_STAMP_SHIFT) + 1 + number of active resizers.
 */
private transient volatile int sizeCtl;

// The next table index (plus one) to hand out while resizing
private transient volatile int transferIndex;
```
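Because sizeCtl packs several meanings into one int, a decoder helps. The sketch below is a hypothetical class (SizeCtlDecoder and decode are not JDK APIs); resizeStamp and the shift constant follow the JDK 8 definitions, and it assumes the JDK 8 encoding in which the first resizing thread stores (rs << 16) + 2.

```java
// Hypothetical decoder for the values sizeCtl takes in JDK 8 (not a JDK API)
class SizeCtlDecoder {
    static final int RESIZE_STAMP_BITS = 16;
    static final int RESIZE_STAMP_SHIFT = 32 - RESIZE_STAMP_BITS;

    // Same formula as ConcurrentHashMap.resizeStamp in JDK 8
    static int resizeStamp(int n) {
        return Integer.numberOfLeadingZeros(n) | (1 << (RESIZE_STAMP_BITS - 1));
    }

    static String decode(int sc) {
        if (sc == -1) return "initializing";
        if (sc < -1) {
            int stamp = sc >>> RESIZE_STAMP_SHIFT;   // high 16 bits: which resize
            int resizers = (sc & 0xFFFF) - 1;        // low 16 bits: 1 + active resizers
            return "resizing, stamp=" + stamp + ", resizers=" + resizers;
        }
        if (sc == 0) return "table not created, default capacity";
        return "threshold or initial capacity " + sc;
    }

    public static void main(String[] args) {
        int rs = resizeStamp(16);
        System.out.println(rs);                                     // 32795
        System.out.println(decode(-1));                             // initializing
        System.out.println(decode((rs << RESIZE_STAMP_SHIFT) + 2)); // resizing, stamp=32795, resizers=1
        System.out.println(decode(12));                             // threshold or initial capacity 12
    }
}
```

Setting bit 15 of the stamp guarantees that rs << 16 is negative, which is why any value below -1 can be read as "resize in progress".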

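Core functions

The three core array-access functions below do what their names say. They go through sun.misc.Unsafe, where the address of element i is ABASE + (i << ASHIFT). Declaring table as volatile only makes the reference to the array volatile, not its elements, so these helpers provide a volatile read (tabAt), a CAS (casTabAt, which on x86 is compiled to the lock cmpxchg CPU instruction) and a volatile write (setTabAt) on individual slots (learned from JUC).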

```java
// Volatile read of tab[i]
static final <K,V> Node<K,V> tabAt(Node<K,V>[] tab, int i) {
    return (Node<K,V>)U.getObjectVolatile(tab, ((long)i << ASHIFT) + ABASE);
}

// Atomically set tab[i] to v if it currently holds c
static final <K,V> boolean casTabAt(Node<K,V>[] tab, int i,
                                    Node<K,V> c, Node<K,V> v) {
    return U.compareAndSwapObject(tab, ((long)i << ASHIFT) + ABASE, c, v);
}

// Volatile write of tab[i]
static final <K,V> void setTabAt(Node<K,V>[] tab, int i, Node<K,V> v) {
    U.putObjectVolatile(tab, ((long)i << ASHIFT) + ABASE, v);
}
```
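Outside the JDK, Unsafe is not something application code should rely on. A rough equivalent of the same three slot operations can be sketched with the public AtomicReferenceArray API. The class SlotOps is hypothetical, and the mapping (get = volatile read, compareAndSet = CAS, set = volatile write) is an approximation for illustration, not how the JDK implements it.

```java
import java.util.concurrent.atomic.AtomicReferenceArray;

// Hypothetical portable analogue of tabAt / casTabAt / setTabAt
class SlotOps {
    static <T> T tabAt(AtomicReferenceArray<T> tab, int i) {
        return tab.get(i);                      // volatile read of slot i
    }

    static <T> boolean casTabAt(AtomicReferenceArray<T> tab, int i, T c, T v) {
        return tab.compareAndSet(i, c, v);      // succeeds only if slot i == c (by reference)
    }

    static <T> void setTabAt(AtomicReferenceArray<T> tab, int i, T v) {
        tab.set(i, v);                          // volatile write of slot i
    }

    public static void main(String[] args) {
        AtomicReferenceArray<String> tab = new AtomicReferenceArray<>(4);
        System.out.println(casTabAt(tab, 1, null, "a")); // true: slot was empty
        System.out.println(casTabAt(tab, 1, null, "b")); // false: slot already taken
        setTabAt(tab, 2, "c");
        System.out.println(tabAt(tab, 1) + tabAt(tab, 2)); // ac
    }
}
```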

Let's start with the simpler functions.

1. get function

The get function is very simple and takes no lock. The only thing to note is the eh < 0 case: the bin head is then a TreeBin, a ForwardingNode (the bin has been migrated during a resize) or a ReservationNode. More noteworthy is the find method invoked in that branch: thanks to polymorphism, each node type overrides it with its own clever lookup.

```java
public V get(Object key) {
    Node<K,V>[] tab; Node<K,V> e, p; int n, eh; K ek;
    int h = spread(key.hashCode());
    if ((tab = table) != null && (n = tab.length) > 0 &&
        (e = tabAt(tab, (n - 1) & h)) != null) {
        // The bin head has the same hash as the key
        if ((eh = e.hash) == h) {
            // Same key reference, or equal keys
            if ((ek = e.key) == key || (ek != null && key.equals(ek)))
                return e.val;
        }
        // eh < 0: the head is a TreeBin, a ForwardingNode (bin already
        // migrated during a resize) or a ReservationNode
        else if (eh < 0)
            // find() is defined on Node<K,V> and overridden by subclasses,
            // so each node type looks the key up in its own way
            return (p = e.find(h, key)) != null ? p.val : null;
        // eh >= 0: the bin is a plain linked list, so just walk it
        while ((e = e.next) != null) {
            if (e.hash == h &&
                ((ek = e.key) == key || (ek != null && key.equals(ek))))
                return e.val;
        }
    }
    return null;
}
```

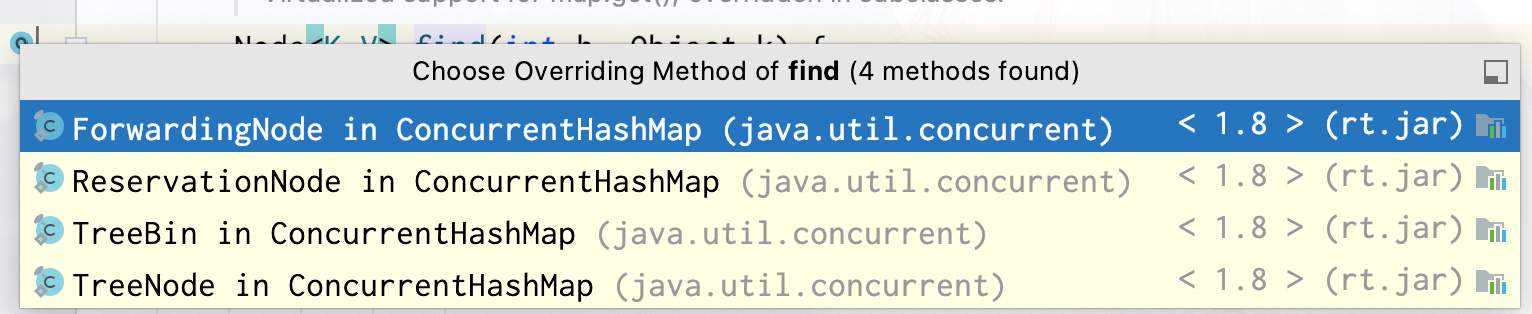

We can see that find has four important overriding implementations in subclasses:

- ForwardingNode: marks a bin that has already been migrated during a resize; its find looks the key up in nextTable

- ReservationNode: I wasn't familiar with this one. Its source comment describes it as a "place-holder node used in computeIfAbsent and compute"; its find always returns null

- TreeBin: stores the root of the tree (plus volatile TreeNode<K,V> first), so TreeBin is just a wrapper describing the red-black tree as a whole. Its find searches the tree, or walks the first/next list while a writer holds the tree lock

- TreeNode: describes a single node of the red-black tree
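The polymorphic dispatch can be illustrated with a heavily simplified model. FindDemo and its nested classes are hypothetical and much smaller than the real JDK classes: there are no trees, no volatile fields, and get() always calls find() instead of first checking the head's hash. It only shows how one find() call either walks a list or, for a ForwardingNode, redirects the lookup to the new table.

```java
// Hypothetical, simplified model (not the JDK classes) of find() dispatch
class FindDemo {
    static final int MOVED = -1;

    static class Node {
        final int hash; final Object key; final Object val; final Node next;

        Node(int hash, Object key, Object val, Node next) {
            this.hash = hash; this.key = key; this.val = val; this.next = next;
        }

        // Default lookup: walk the linked list starting at this node
        Node find(int h, Object k) {
            for (Node e = this; e != null; e = e.next)
                if (e.hash == h && k.equals(e.key)) return e;
            return null;
        }
    }

    // Left in a bin of the old table after migration; redirects lookups
    static class ForwardingNode extends Node {
        final Node[] nextTable;

        ForwardingNode(Node[] nextTable) {
            super(MOVED, null, null, null);
            this.nextTable = nextTable;
        }

        @Override
        Node find(int h, Object k) {
            Node e = nextTable[(nextTable.length - 1) & h];
            return e == null ? null : e.find(h, k);
        }
    }

    static Object get(Node[] tab, int h, Object k) {
        Node e = tab[(tab.length - 1) & h];
        Node p = (e == null) ? null : e.find(h, k); // same call for every node kind
        return p == null ? null : p.val;
    }

    public static void main(String[] args) {
        Node[] newTab = new Node[4];
        newTab[5 & 3] = new Node(5, "k", "v", null); // entry already migrated
        Node[] oldTab = new Node[2];
        oldTab[5 & 1] = new ForwardingNode(newTab);  // old bin now forwards
        System.out.println(get(oldTab, 5, "k"));     // v
    }
}
```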

2. put function

Logically, the put function is not difficult. The only unclear part is the helpTransfer function, but it is also the hardest part, because it leads into transfer, which contains the core concurrency ideas of ConcurrentHashMap. Before looking at it, you should first look at the resize function, tryPresize.

```java
/** Implementation for put and putIfAbsent */
final V putVal(K key, V value, boolean onlyIfAbsent) {
    // ConcurrentHashMap supports neither null keys nor null values
    if (key == null || value == null) throw new NullPointerException();
    int hash = spread(key.hashCode());
    int binCount = 0;
    // Endless loop: the classic "retry on failure" pattern used with CAS
    for (Node<K,V>[] tab = table;;) {
        Node<K,V> f; int n, i, fh;
        // Lazily create the table on first insertion
        if (tab == null || (n = tab.length) == 0)
            tab = initTable();
        // The target bin is empty
        else if ((f = tabAt(tab, i = (n - 1) & hash)) == null) {
            // Insert the new node with CAS
            if (casTabAt(tab, i, null,
                         new Node<K,V>(hash, key, value, null)))
                break;                   // no lock when adding to empty bin
        }
        // From here on, f is the head node of bin i.
        // hash == MOVED (-1): a resize is in progress, so this thread joins
        // in and helps migrate bins (it feels like OpenMP workers)
        else if ((fh = f.hash) == MOVED)
            tab = helpTransfer(tab, f);
        else {
            // The bin is non-empty and not being migrated: it is either a
            // linked list or a TreeBin of TreeNodes. It is not locked yet,
            // so it may still change before we lock it. Threads that reach
            // this point for the same bin at the same time block on the lock.
            V oldVal = null;
            // Lock only the head node of this bin
            synchronized (f) {
                // Re-check that f is still the head (== compares references).
                // If the head was removed or replaced between the read above
                // and the lock, nothing is inserted, binCount stays 0, and the
                // outer endless loop retries, so the put is not lost.
                if (tabAt(tab, i) == f) {
                    // Plain linked list: update in place or append at the tail
                    if (fh >= 0) {
                        binCount = 1;
                        for (Node<K,V> e = f;; ++binCount) {
                            K ek;
                            if (e.hash == hash &&
                                ((ek = e.key) == key ||
                                 (ek != null && key.equals(ek)))) {
                                oldVal = e.val;
                                if (!onlyIfAbsent)
                                    e.val = value;
                                break;
                            }
                            Node<K,V> pred = e;
                            if ((e = e.next) == null) {
                                pred.next = new Node<K,V>(hash, key,
                                                          value, null);
                                break;
                            }
                        }
                    }
                    // Tree bin: delegate to the red-black tree
                    else if (f instanceof TreeBin) {
                        Node<K,V> p;
                        binCount = 2;
                        if ((p = ((TreeBin<K,V>)f).putTreeVal(hash, key,
                                                              value)) != null) {
                            oldVal = p.val;
                            if (!onlyIfAbsent)
                                p.val = value;
                        }
                    }
                }
            }
            if (binCount != 0) {
                // When a node is appended, binCount equals the number of nodes
                // that were already in the list (binCount == 1 means the list
                // now holds 2 nodes). So binCount >= TREEIFY_THRESHOLD (8)
                // means the list now has at least 9 nodes. treeifyBin only
                // builds a tree if the table length is at least 64
                // (MIN_TREEIFY_CAPACITY); otherwise it resizes instead.
                if (binCount >= TREEIFY_THRESHOLD)
                    treeifyBin(tab, i);
                if (oldVal != null)
                    return oldVal;
                break;
            }
        }
    }
    addCount(1L, binCount);
    return null;
}
```
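The locking pattern of putVal (CAS into an empty bin, otherwise lock the bin head, re-check it, and retry if it changed) can be tried out in isolation. MiniCHM below is a hypothetical, fixed-size map written for this note, not the JDK implementation: it has no resizing, no trees, no counters, and it assumes the capacity passed in is a power of two. AtomicReferenceArray stands in for the Unsafe-based tabAt/casTabAt.

```java
import java.util.Objects;
import java.util.concurrent.atomic.AtomicReferenceArray;

// Hypothetical fixed-size map mimicking only putVal's CAS + synchronized pattern
class MiniCHM<K, V> {
    static final int HASH_BITS = 0x7fffffff;

    static final class Node<K, V> {
        final int hash; final K key;
        volatile V val;
        volatile Node<K, V> next;
        Node(int hash, K key, V val, Node<K, V> next) {
            this.hash = hash; this.key = key; this.val = val; this.next = next;
        }
    }

    private final AtomicReferenceArray<Node<K, V>> table;

    MiniCHM(int powerOfTwoCapacity) { table = new AtomicReferenceArray<>(powerOfTwoCapacity); }

    static int spread(int h) { return (h ^ (h >>> 16)) & HASH_BITS; }

    V put(K key, V value) {
        if (key == null || value == null) throw new NullPointerException();
        int hash = spread(key.hashCode());
        int i = (table.length() - 1) & hash;
        for (;;) {                                      // retry loop, as in putVal
            Node<K, V> f = table.get(i);                // volatile read (tabAt)
            if (f == null) {
                // Empty bin: publish the first node with one CAS, no lock
                if (table.compareAndSet(i, null, new Node<>(hash, key, value, null)))
                    return null;
                continue;                               // lost the race: retry
            }
            synchronized (f) {                          // lock only this bin's head
                if (table.get(i) != f) continue;        // head changed: retry
                for (Node<K, V> e = f;; e = e.next) {
                    if (e.hash == hash && Objects.equals(e.key, key)) {
                        V old = e.val;
                        e.val = value;                  // replace existing value
                        return old;
                    }
                    if (e.next == null) {
                        e.next = new Node<>(hash, key, value, null); // append at tail
                        return null;
                    }
                }
            }
        }
    }

    V get(Object key) {                                 // lock-free read path
        int hash = spread(key.hashCode());
        for (Node<K, V> e = table.get((table.length() - 1) & hash); e != null; e = e.next)
            if (e.hash == hash && key.equals(e.key)) return e.val;
        return null;
    }

    int size() {                                        // only accurate when no writers run
        int c = 0;
        for (int i = 0; i < table.length(); i++)
            for (Node<K, V> e = table.get(i); e != null; e = e.next) c++;
        return c;
    }

    public static void main(String[] args) throws InterruptedException {
        MiniCHM<Integer, Integer> m = new MiniCHM<>(16);
        Thread[] ts = new Thread[4];
        for (int t = 0; t < ts.length; t++) {
            final int base = t * 1000;
            ts[t] = new Thread(() -> { for (int k = 0; k < 1000; k++) m.put(base + k, k); });
            ts[t].start();
        }
        for (Thread t : ts) t.join();
        System.out.println(m.size() + " " + m.get(2500)); // 4000 500
    }
}
```

A continue inside the synchronized block releases the monitor before the retry, which mirrors how putVal falls through to its next loop iteration when the re-check fails.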

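3. tryPresize function

tryPresize tries to grow the table so that it can hold a given number of elements. It is called by putAll and by treeifyBin (when the table is still shorter than 64), and it in turn calls transfer, the most complex function of all.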

```java
/**
 * Tries to presize table to accommodate the given number of elements.
 *
 * @param size number of elements (doesn't need to be perfectly accurate)
 */
private final void tryPresize(int size) {
    // Target capacity c: the smallest power of two >= 1.5 * size + 1
    int c = (size >= (MAXIMUM_CAPACITY >>> 1)) ? MAXIMUM_CAPACITY :
        tableSizeFor(size + (size >>> 1) + 1);
    int sc;
    // sizeCtl note:
    // Table initialization and resizing control. When negative, the table is
    // being initialized or resized: -1 for initialization, otherwise
    // -(1 + number of active resizing threads).
    // Otherwise, when the table is null, holds the initial table size to use
    // upon creation, or 0 for the default.
    // After initialization, holds the element count at which to resize next.
    //
    // Keep looping while sizeCtl >= 0, i.e. until the table is being
    // initialized or resized by someone.
    // If the table does not exist yet, the first branch creates it and sets
    // the threshold; the next iteration then reaches the third branch and
    // starts a resize if one is still needed.
    // If the table already exists, the third branch is reached directly.
    while ((sc = sizeCtl) >= 0) {
        Node<K,V>[] tab = table; int n;
        // Isn't lazy initialization the job of initTable? This branch is
        // needed because putAll calls tryPresize before any table exists.
        if (tab == null || (n = tab.length) == 0) {
            n = (sc > c) ? sc : c;
            if (U.compareAndSwapInt(this, SIZECTL, sc, -1)) {
                try {
                    if (table == tab) {
                        @SuppressWarnings("unchecked")
                        Node<K,V>[] nt = (Node<K,V>[])new Node<?,?>[n];
                        table = nt;
                        // sc = 0.75 * n: the next resize threshold
                        sc = n - (n >>> 2);
                    }
                } finally {
                    sizeCtl = sc;
                }
            }
        }
        // c <= sc: the target capacity does not exceed the current resize
        // threshold, so the table is already large enough (this is typically
        // how the loop ends once a resize has raised sizeCtl). We also stop
        // when the table cannot grow past MAXIMUM_CAPACITY.
        else if (c <= sc || n >= MAXIMUM_CAPACITY)
            break;
        else if (tab == table) {
            // Not a random number: a stamp derived from the table length n
            // (numberOfLeadingZeros(n) with bit 15 set) that identifies a
            // resize of a table of this particular length
            int rs = resizeStamp(n);
            // sc < 0 would mean a resize is already in progress and this
            // thread should join it. Note that the while condition already
            // guarantees sc >= 0, so this branch is never taken here.
            if (sc < 0) {
                Node<K,V>[] nt;
                if ((sc >>> RESIZE_STAMP_SHIFT) != rs || sc == rs + 1 ||
                    sc == rs + MAX_RESIZERS || (nt = nextTable) == null ||
                    transferIndex <= 0)
                    break;
                if (U.compareAndSwapInt(this, SIZECTL, sc, sc + 1))
                    transfer(tab, nt);
            }
            // Start a new resize as its first thread:
            // sizeCtl = (rs << RESIZE_STAMP_SHIFT) + 2
            else if (U.compareAndSwapInt(this, SIZECTL, sc,
                                         (rs << RESIZE_STAMP_SHIFT) + 2))
                transfer(tab, null);
        }
    }
}
```
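The arithmetic at the top of tryPresize and in its init branch is easy to check by hand. PresizeMath is a hypothetical class; tableSizeFor, the target-capacity expression and the 0.75 * n threshold are copied from the JDK 8 code quoted above.

```java
// Hypothetical class reproducing tryPresize's sizing arithmetic (JDK 8 formulas)
class PresizeMath {
    static final int MAXIMUM_CAPACITY = 1 << 30;

    // Smallest power of two >= c, clamped to [1, MAXIMUM_CAPACITY]
    static int tableSizeFor(int c) {
        int n = c - 1;
        n |= n >>> 1; n |= n >>> 2; n |= n >>> 4; n |= n >>> 8; n |= n >>> 16;
        return (n < 0) ? 1 : (n >= MAXIMUM_CAPACITY) ? MAXIMUM_CAPACITY : n + 1;
    }

    // Target capacity c computed at the top of tryPresize
    static int target(int size) {
        return (size >= (MAXIMUM_CAPACITY >>> 1)) ? MAXIMUM_CAPACITY
                : tableSizeFor(size + (size >>> 1) + 1);
    }

    // Threshold stored in sizeCtl for a table of length n: n - n/4 = 0.75 * n
    static int threshold(int n) { return n - (n >>> 2); }

    public static void main(String[] args) {
        for (int size : new int[] {10, 12, 16, 100}) {
            int c = target(size);
            System.out.println(size + " -> " + c + ", threshold " + threshold(c));
        }
        // 10 -> 16, threshold 12
        // 12 -> 32, threshold 24
        // 16 -> 32, threshold 24
        // 100 -> 256, threshold 192
    }
}
```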

4. helpTransfer function

As the name suggests, transfer splits the table into segments, and this method lets the current thread help with an ongoing resize by moving the elements of some buckets to the new table. It calls transfer itself and does not return until there are no more bins left for it to claim; it then returns the new table.

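```java
/**
 * Helps transfer if a resize is in progress.
 */
final Node<K,V>[] helpTransfer(Node<K,V>[] tab, Node<K,V> f) {
    Node<K,V>[] nextTab; int sc;
    // Only help if f really is a ForwardingNode pointing to a new table
    if (tab != null && (f instanceof ForwardingNode) &&
        (nextTab = ((ForwardingNode<K,V>)f).nextTable) != null) {
        int rs = resizeStamp(tab.length);
        // Loop while this same resize is still running
        while (nextTab == nextTable && table == tab &&
               (sc = sizeCtl) < 0) {
            // Give up if the stamp does not match this resize, the helper
            // limit is reached, or no bins are left to claim.
            // Since sc is negative here while rs + 1 and rs + MAX_RESIZERS are
            // positive, those two comparisons can never be true in JDK 8 (a
            // known bug; later JDKs compare against rs << RESIZE_STAMP_SHIFT).
            if ((sc >>> RESIZE_STAMP_SHIFT) != rs || sc == rs + 1 ||
                sc == rs + MAX_RESIZERS || transferIndex <= 0)
                break;
            // Register as one more resizing thread, then do a share of the work
            if (U.compareAndSwapInt(this, SIZECTL, sc, sc + 1)) {
                transfer(tab, nextTab);
                break;
            }
        }
        return nextTab;
    }
    return table;
}
```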
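The way sizeCtl counts resizing threads (first thread stores (rs << 16) + 2, each helper adds 1, each finished thread subtracts 1, and the thread that sees sc - 2 == rs << 16 is the last one) can be simulated with an AtomicInteger. ResizerCount and its start/join/leave methods are hypothetical names for this sketch; the formulas mirror tryPresize, helpTransfer and transfer above, but there is no real table and no bin migration.

```java
import java.util.concurrent.atomic.AtomicInteger;

// Hypothetical simulation of the resizer counter kept in sizeCtl (JDK 8 scheme)
class ResizerCount {
    static final int RESIZE_STAMP_BITS = 16;
    static final int RESIZE_STAMP_SHIFT = 32 - RESIZE_STAMP_BITS;

    static int resizeStamp(int n) {
        return Integer.numberOfLeadingZeros(n) | (1 << (RESIZE_STAMP_BITS - 1));
    }

    final int n;                     // old table length
    final AtomicInteger sizeCtl;

    ResizerCount(int n) {
        this.n = n;
        this.sizeCtl = new AtomicInteger(n - (n >>> 2)); // threshold before resizing
    }

    // First resizer (as in tryPresize): install (rs << 16) + 2
    boolean start() {
        int sc = sizeCtl.get();
        return sc >= 0 &&
               sizeCtl.compareAndSet(sc, (resizeStamp(n) << RESIZE_STAMP_SHIFT) + 2);
    }

    // Helper (as in helpTransfer): join only while a resize with our stamp runs
    boolean join() {
        for (;;) {
            int sc = sizeCtl.get();
            if (sc >= 0 || (sc >>> RESIZE_STAMP_SHIFT) != resizeStamp(n)) return false;
            if (sizeCtl.compareAndSet(sc, sc + 1)) return true;
        }
    }

    // Leaving transfer(): decrement, and report whether this was the last thread
    boolean leave() {
        for (;;) {
            int sc = sizeCtl.get();
            if (sizeCtl.compareAndSet(sc, sc - 1))
                return (sc - 2) == resizeStamp(n) << RESIZE_STAMP_SHIFT;
        }
    }

    public static void main(String[] args) {
        ResizerCount r = new ResizerCount(16);
        System.out.println(r.start()); // true: first resizer
        System.out.println(r.join());  // true: now two resizers
        System.out.println(r.leave()); // false: one thread still working
        System.out.println(r.leave()); // true: last thread, would commit the new table
    }
}
```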

5. transfer function

It is too complicated to cover completely, so I only analyze the first part, that is, how ConcurrentHashMap's table is divided into segments.
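The segmenting itself is small enough to model on its own: stride bins per chunk, chunks handed out from the end of the table by CAS on transferIndex. StrideClaim and its methods are hypothetical names; the stride formula and the claim step follow the transfer source below, but moving the bins is left out.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.concurrent.atomic.AtomicInteger;

// Hypothetical model of how transfer() hands out ranges of bins to threads
class StrideClaim {
    static final int MIN_TRANSFER_STRIDE = 16;

    // Bins per claimed range: n / (8 * ncpu), at least 16; the whole table on 1 CPU
    static int stride(int n, int ncpu) {
        int s = (ncpu > 1) ? (n >>> 3) / ncpu : n;
        return s < MIN_TRANSFER_STRIDE ? MIN_TRANSFER_STRIDE : s;
    }

    // Claim [bound, nextIndex) from the top, or return null when nothing is left
    static int[] claim(AtomicInteger transferIndex, int stride) {
        for (;;) {
            int nextIndex = transferIndex.get();
            if (nextIndex <= 0) return null;
            int nextBound = nextIndex > stride ? nextIndex - stride : 0;
            if (transferIndex.compareAndSet(nextIndex, nextBound))
                return new int[] {nextBound, nextIndex}; // bins nextIndex-1 down to nextBound
        }
    }

    public static void main(String[] args) {
        int n = 1024, s = stride(n, 4);               // (1024 / 8) / 4 = 32
        AtomicInteger transferIndex = new AtomicInteger(n);
        List<int[]> ranges = new ArrayList<>();
        int[] r;
        while ((r = claim(transferIndex, s)) != null) ranges.add(r);
        System.out.println(s + " " + ranges.size());  // 32 32
        System.out.println(ranges.get(0)[0] + ".." + ranges.get(0)[1]); // 992..1024
    }
}
```

So with several CPUs the table is cut into roughly 8 * NCPU segments, not n / (8 * NCPU) segments; n / (8 * NCPU) is the size of each segment.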

```java
/**
 * Moves and/or copies the nodes in each bin to new table. See
 * above for explanation.
 */
private final void transfer(Node<K,V>[] tab, Node<K,V>[] nextTab) {
    int n = tab.length, stride;
    // stride = number of bins a thread claims at a time.
    // With 1 CPU, stride = n: the whole table is a single segment.
    // With more CPUs, stride = n / (8 * NCPU), i.e. the table is cut into
    // about 8 * NCPU segments, but a segment is never smaller than
    // MIN_TRANSFER_STRIDE (16) bins.
    if ((stride = (NCPU > 1) ? (n >>> 3) / NCPU : n) < MIN_TRANSFER_STRIDE)
        stride = MIN_TRANSFER_STRIDE; // subdivide range
    if (nextTab == null) {            // initiating
        try {
            @SuppressWarnings("unchecked")
            Node<K,V>[] nt = (Node<K,V>[])new Node<?,?>[n << 1];
            nextTab = nt;
        } catch (Throwable ex) {      // try to cope with OOME
            sizeCtl = Integer.MAX_VALUE;
            return;
        }
        nextTable = nextTab;
        // Bins are handed out starting from the last one
        transferIndex = n;
    }
    int nextn = nextTab.length;
    // Installed in every migrated bin of the old table
    ForwardingNode<K,V> fwd = new ForwardingNode<K,V>(nextTab);
    boolean advance = true;
    boolean finishing = false; // to ensure sweep before committing nextTab
    for (int i = 0, bound = 0;;) {
        Node<K,V> f; int fh;
        // Move i to the next bin; when the current range is used up, claim
        // a new range [nextBound, nextIndex) by CAS on transferIndex
        while (advance) {
            int nextIndex, nextBound;
            if (--i >= bound || finishing)
                advance = false;
            else if ((nextIndex = transferIndex) <= 0) {
                i = -1;               // nothing left to claim
                advance = false;
            }
            else if (U.compareAndSwapInt
                     (this, TRANSFERINDEX, nextIndex,
                      nextBound = (nextIndex > stride ?
                                   nextIndex - stride : 0))) {
                bound = nextBound;
                i = nextIndex - 1;
                advance = false;
            }
        }
        if (i < 0 || i >= n || i + n >= nextn) {
            int sc;
            // Last thread, after its final sweep: publish the new table and
            // set the threshold to 0.75 * 2n
            if (finishing) {
                nextTable = null;
                table = nextTab;
                sizeCtl = (n << 1) - (n >>> 1);
                return;
            }
            // This thread has no more work: decrement the resizer count
            if (U.compareAndSwapInt(this, SIZECTL, sc = sizeCtl, sc - 1)) {
                // Not the last resizing thread: just leave
                if ((sc - 2) != resizeStamp(n) << RESIZE_STAMP_SHIFT)
                    return;
                // Last thread: sweep the whole table once more before committing
                finishing = advance = true;
                i = n; // recheck before commit
            }
        }
        // Empty bin: just install the ForwardingNode
        else if ((f = tabAt(tab, i)) == null)
            advance = casTabAt(tab, i, null, fwd);
        else if ((fh = f.hash) == MOVED)
            advance = true; // already processed
        else {
            // Lock the bin head and split the bin into a "low" part that
            // stays at index i and a "high" part that moves to index i + n
            synchronized (f) {
                if (tabAt(tab, i) == f) {
                    Node<K,V> ln, hn;
                    if (fh >= 0) {
                        // lastRun: start of the trailing run of nodes that all
                        // go to the same side; that suffix is reused as-is
                        int runBit = fh & n;
                        Node<K,V> lastRun = f;
                        for (Node<K,V> p = f.next; p != null; p = p.next) {
                            int b = p.hash & n;
                            if (b != runBit) {
                                runBit = b;
                                lastRun = p;
                            }
                        }
                        if (runBit == 0) {
                            ln = lastRun;
                            hn = null;
                        }
                        else {
                            hn = lastRun;
                            ln = null;
                        }
                        // Copy the nodes before lastRun, prepending each copy
                        for (Node<K,V> p = f; p != lastRun; p = p.next) {
                            int ph = p.hash; K pk = p.key; V pv = p.val;
                            if ((ph & n) == 0)
                                ln = new Node<K,V>(ph, pk, pv, ln);
                            else
                                hn = new Node<K,V>(ph, pk, pv, hn);
                        }
                        setTabAt(nextTab, i, ln);
                        setTabAt(nextTab, i + n, hn);
                        setTabAt(tab, i, fwd);
                        advance = true;
                    }
                    else if (f instanceof TreeBin) {
                        // Same lo/hi split for a tree; a half with at most
                        // UNTREEIFY_THRESHOLD (6) nodes becomes a plain list,
                        // and if the other half is empty the old TreeBin is reused
                        TreeBin<K,V> t = (TreeBin<K,V>)f;
                        TreeNode<K,V> lo = null, loTail = null;
                        TreeNode<K,V> hi = null, hiTail = null;
                        int lc = 0, hc = 0;
                        for (Node<K,V> e = t.first; e != null; e = e.next) {
                            int h = e.hash;
                            TreeNode<K,V> p = new TreeNode<K,V>
                                (h, e.key, e.val, null, null);
                            if ((h & n) == 0) {
                                if ((p.prev = loTail) == null)
                                    lo = p;
                                else
                                    loTail.next = p;
                                loTail = p;
                                ++lc;
                            }
                            else {
                                if ((p.prev = hiTail) == null)
                                    hi = p;
                                else
                                    hiTail.next = p;
                                hiTail = p;
                                ++hc;
                            }
                        }
                        ln = (lc <= UNTREEIFY_THRESHOLD) ? untreeify(lo) :
                            (hc != 0) ? new TreeBin<K,V>(lo) : t;
                        hn = (hc <= UNTREEIFY_THRESHOLD) ? untreeify(hi) :
                            (lc != 0) ? new TreeBin<K,V>(hi) : t;
                        setTabAt(nextTab, i, ln);
                        setTabAt(nextTab, i + n, hn);
                        setTabAt(tab, i, fwd);
                        advance = true;
                    }
                }
            }
        }
    }
}
```
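The lo/hi split relies on one fact: when the table doubles from n to 2n, a node in bin i can only end up in bin i (if hash & n == 0) or bin i + n. LoHiSplit is a hypothetical sketch that works on plain lists of hash values instead of nodes. Unlike the JDK code, which prepends the copied nodes, it keeps the original order within each half. lastRunIndex reproduces the lastRun scan shown above.

```java
import java.util.ArrayList;
import java.util.List;

// Hypothetical list-based model of the lo/hi split done by transfer()
class LoHiSplit {
    // Hashes that stay in bin i (high == false) or move to bin i + n (high == true)
    static List<Integer> half(int[] hashes, int n, boolean high) {
        List<Integer> out = new ArrayList<>();
        for (int h : hashes)
            if (((h & n) != 0) == high) out.add(h);
        return out;
    }

    // Same scan as transfer(): index where the trailing run of equal
    // (hash & n) bits starts; that suffix can be reused without copying
    static int lastRunIndex(int[] hashes, int n) {
        int runBit = hashes[0] & n, lastRun = 0;
        for (int i = 1; i < hashes.length; i++) {
            int b = hashes[i] & n;
            if (b != runBit) { runBit = b; lastRun = i; }
        }
        return lastRun;
    }

    public static void main(String[] args) {
        int n = 16;                                   // old table length
        int[] bin5 = {5, 21, 37, 53, 85};             // all land in bin 5 when length is 16
        System.out.println(half(bin5, n, false));     // [5, 37]      -> new bin 5
        System.out.println(half(bin5, n, true));      // [21, 53, 85] -> new bin 21
        System.out.println(lastRunIndex(bin5, n));    // 3: nodes 53 and 85 reused as-is
    }
}
```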