
1, What is ForkJoin

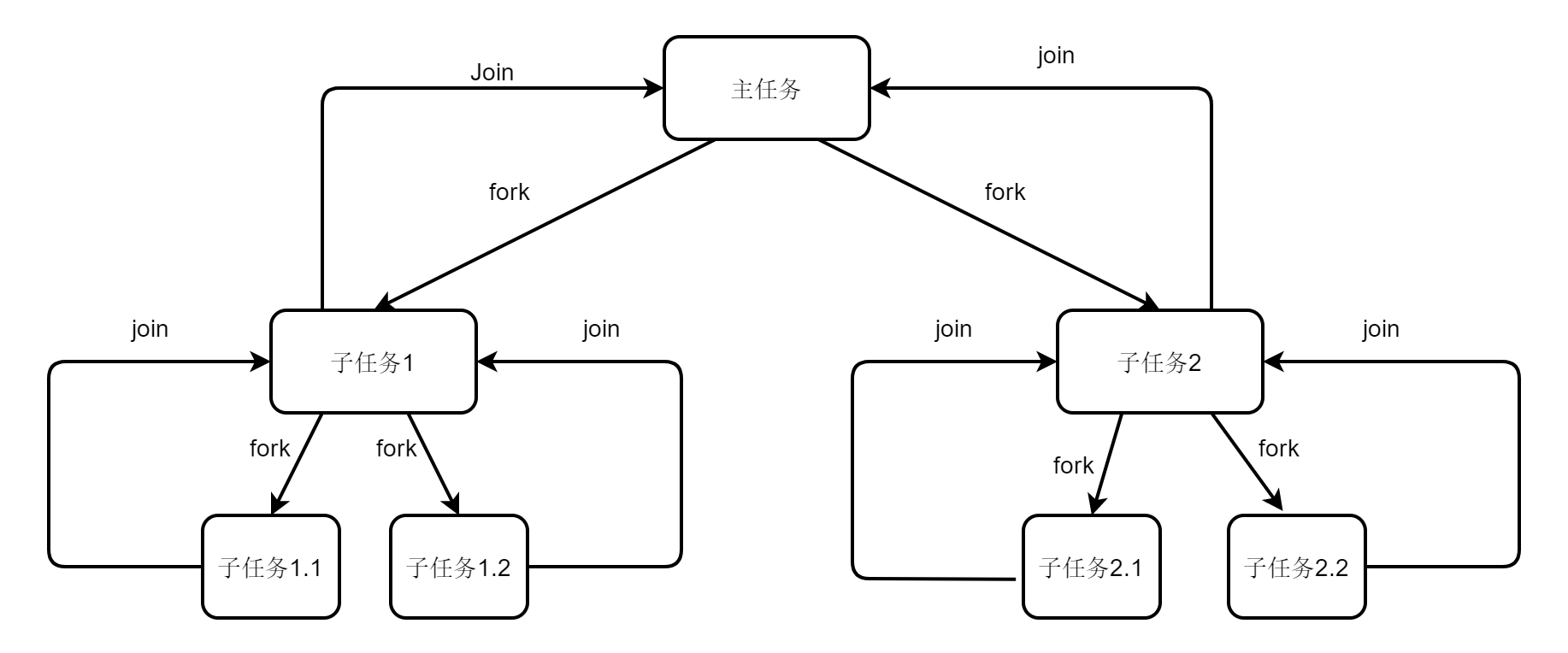

"Divide and conquer" is an idea. The so-called "divide and conquer" is to divide a complex algorithm problem into several smaller parts according to a certain "decomposition" method, then solve them one by one, find out the solutions of each part respectively, and finally merge the solutions of each part. The ForkJoin mode is this idea, which decomposes a large task into many independent subtasks, and then opens multiple threads to execute these subtasks in parallel. Split the task until it reaches the smallest unit.

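For comparison, the divide-and-conquer idea can be sketched sequentially, without any threads. In this minimal sketch the class name DivideAndConquerSum and the cut-off of 1000 are illustrative assumptions. The range is halved until a piece is small enough, each piece is summed directly, and the partial sums are merged by addition. ForkJoin keeps the same structure but lets different threads work on the halves.

```java
// Sequential divide and conquer: split, solve the small pieces, merge.
// DivideAndConquerSum and THRESHOLD are illustrative names/values.
class DivideAndConquerSum {
    static final int THRESHOLD = 1000; // pieces with end - start below this are solved directly

    // Returns the sum of all integers in [start, end], both bounds inclusive.
    static long sum(int start, int end) {
        if (end - start < THRESHOLD) {
            long total = 0;
            for (int i = start; i <= end; i++) {
                total += i;
            }
            return total;                  // smallest unit: solve directly
        }
        int middle = (start + end) >>> 1;  // divide (unsigned shift avoids int overflow)
        long left = sum(start, middle);    // solve the left half
        long right = sum(middle + 1, end); // solve the right half
        return left + right;               // merge the partial solutions
    }

    public static void main(String[] args) {
        System.out.println(sum(1, 1000000)); // 500000500000
    }
}
```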
2, Simple use of ForkJoin

Requirement: compute the sum of 1 to 1000000.

```java
package com.xiaojie.fork;

import java.util.concurrent.ExecutionException;
import java.util.concurrent.ForkJoinPool;
import java.util.concurrent.ForkJoinTask;
import java.util.concurrent.RecursiveTask;

/**
 * @description: Calculate the sum of 1-1000000 using forkJoin
 * @author xiaojie
 * @date 2022/1/20 9:56
 * @version 1.0
 */
public class ForkJoinDemo extends RecursiveTask<Long> {

    // RecursiveTask has a return value; RecursiveAction has no return value.
    // Long is used because the result (500000500000) does not fit in an int.

    private static final int MAX = 5000; // ranges with end - start below this are summed directly

    private final int start; // inclusive
    private final int end;   // inclusive

    public ForkJoinDemo(int start, int end) {
        this.start = start;
        this.end = end;
    }

    @Override
    protected Long compute() {
        if (end - start < MAX) {
            System.out.println(Thread.currentThread().getName() + "," + "start:" + start + ",end:" + end);
            long sum = 0;
            for (int i = start; i <= end; i++) { // end is inclusive, so use <=
                sum += i;
            }
            return sum;
        }
        // Split [start, end] into [start, middle] and [middle + 1, end]
        int middle = (start + end) / 2;
        ForkJoinDemo left = new ForkJoinDemo(start, middle);
        ForkJoinDemo right = new ForkJoinDemo(middle + 1, end);
        left.fork();                     // schedule the left half asynchronously
        long rightSum = right.compute(); // compute the right half in the current thread
        long leftSum = left.join();      // wait for the left half; join() needs no checked-exception handling, unlike get()
        return leftSum + rightSum;       // merge the results
    }

    public static void main(String[] args) throws ExecutionException, InterruptedException {
        ForkJoinDemo forkJoinDemo = new ForkJoinDemo(1, 1000000);
        ForkJoinPool forkJoinPool = new ForkJoinPool();
        ForkJoinTask<Long> result = forkJoinPool.submit(forkJoinDemo);
        System.out.println(result.get()); // 500000500000
        // ForkJoinPool uses daemon threads, so there is no need to shut the pool down explicitly before the program exits
    }
}
```

Notes:

1. ForkJoinPool uses daemon threads, so a program generally does not need to shut the pool down explicitly when it exits. The worker threads do not keep the JVM running.

2. ForkJoin mode is only worthwhile when there is a large amount of work to split. It is not suitable for small workloads, because splitting tasks also costs time and resources. With a small workload, most of the time goes into splitting tasks rather than computing, so the effort does not pay off.
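The hypothetical helper below shows how the splitting threshold drives the number of tasks. It replays the demo's splitting rule without running anything in parallel: a range is a leaf when end - start < threshold, and otherwise it is split at the midpoint. It then counts the resulting tasks. It is only a counting sketch and says nothing about actual run times, which depend on the machine.

```java
// Replays the demo's splitting rule and counts the leaf tasks it would create.
// SplitCounter is an illustrative name, not part of any library.
class SplitCounter {
    static long countLeaves(int start, int end, int threshold) {
        if (end - start < threshold) {
            return 1; // small enough: computed directly, no further split
        }
        int middle = (start + end) / 2;
        return countLeaves(start, middle, threshold)
                + countLeaves(middle + 1, end, threshold);
    }

    public static void main(String[] args) {
        for (int threshold : new int[] {50000, 5000, 500, 50}) {
            long leaves = countLeaves(1, 1000000, threshold);
            // A binary split tree with L leaves contains 2L - 1 tasks in total
            System.out.println("threshold " + threshold + ": " + leaves
                    + " leaf tasks, " + (2 * leaves - 1) + " tasks in total");
        }
    }
}
```

For 1..1000000 it reports 256 leaf tasks (511 tasks in total) at the demo's threshold of 5000, 2048 at 500 and 32768 at 50. Each extra level of splitting doubles the number of tasks that must be created, queued and joined, which is why a threshold that is too small wastes time.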

3, ForkJoin principle

Core API

The core of the ForkJoin framework is the ForkJoinPool thread pool. The pool uses a lock-free stack to manage idle threads. If a worker thread temporarily cannot get a task to execute, it may be suspended, and suspended threads are pushed onto a stack maintained by ForkJoinPool. When a new task arrives, threads are woken up from this stack.
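The lock-free idea can be illustrated with a classic Treiber stack built on compareAndSet. This is a generic sketch, not ForkJoinPool's code; the real pool's implementation differs in detail. The class name TreiberStack and the worker names are illustrative.

```java
import java.util.concurrent.atomic.AtomicReference;

// Generic lock-free (Treiber) stack: push and pop retry a CAS on the top
// pointer instead of taking a lock.
class TreiberStack<T> {
    private static final class Node<T> {
        final T item;
        Node<T> next;
        Node(T item) { this.item = item; }
    }

    private final AtomicReference<Node<T>> top = new AtomicReference<>();

    public void push(T item) {
        Node<T> node = new Node<>(item);
        Node<T> current;
        do {
            current = top.get();
            node.next = current;                     // link above the current top
        } while (!top.compareAndSet(current, node)); // retry if another thread changed top
    }

    public T pop() {
        Node<T> current;
        do {
            current = top.get();
            if (current == null) {
                return null;                         // stack is empty
            }
        } while (!top.compareAndSet(current, current.next));
        return current.item;
    }

    public static void main(String[] args) {
        TreiberStack<String> idleWorkers = new TreiberStack<>();
        idleWorkers.push("worker-1"); // hypothetical names of suspended threads
        idleWorkers.push("worker-2");
        System.out.println(idleWorkers.pop()); // worker-2: the most recently pushed entry comes off first
    }
}
```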

constructor

```java
public ForkJoinPool(int parallelism,
                    ForkJoinWorkerThreadFactory factory,
                    UncaughtExceptionHandler handler,
                    boolean asyncMode) {
    this(parallelism, factory, handler, asyncMode,
         0, MAX_CAP, 1, null, DEFAULT_KEEPALIVE, TimeUnit.MILLISECONDS);
}
```

Parameter interpretation

int parallelism -- the parallelism level, i.e. the number of threads that execute tasks in parallel. It defaults to the number of CPU cores and must be at least 1.

ForkJoinWorkerThreadFactory factory -- the factory used to create worker threads.

UncaughtExceptionHandler handler -- the handler called when a worker thread terminates because of an unrecoverable error during task execution. Exceptions thrown by a task itself are normally captured in the task and reported to the caller of join() or get().

boolean asyncMode -- whether to use asynchronous mode; the default is false. If true, each worker processes the subtasks in its local queue in FIFO (first in, first out) order. If false, it uses LIFO (last in, first out) order, which suits tasks whose subtasks are later joined (merged).
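Here is a minimal sketch of calling this constructor with explicit arguments. The values (4 threads, asyncMode = true) and the logging handler are illustrative. getParallelism(), getAsyncMode() and getUncaughtExceptionHandler() are standard ForkJoinPool getters, used here to read the settings back.

```java
import java.util.concurrent.ForkJoinPool;

class CustomPoolDemo {
    // Builds a pool with explicit settings; the argument values are only examples.
    static ForkJoinPool createPool(int parallelism, boolean asyncMode) {
        // Invoked if a worker thread dies from an unrecoverable error
        Thread.UncaughtExceptionHandler handler = (thread, error) ->
                System.err.println(thread.getName() + " terminated: " + error);
        return new ForkJoinPool(
                parallelism,                                     // number of parallel threads
                ForkJoinPool.defaultForkJoinWorkerThreadFactory, // standard worker factory
                handler,                                         // uncaught exception handler
                asyncMode);                                      // true = FIFO local queues
    }

    public static void main(String[] args) {
        ForkJoinPool pool = createPool(4, true);
        System.out.println("parallelism = " + pool.getParallelism()); // parallelism = 4
        System.out.println("asyncMode = " + pool.getAsyncMode());     // asyncMode = true
        pool.shutdown();
    }
}
```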

The ForkJoin framework creates a separate work queue for each worker thread to hold the tasks it executes. Each work queue is a double-ended queue (deque) backed by an array.

no-argument constructor

```java
public ForkJoinPool() {
    this(Math.min(MAX_CAP, Runtime.getRuntime().availableProcessors()),
         defaultForkJoinWorkerThreadFactory, null, false,
         0, MAX_CAP, 1, null, DEFAULT_KEEPALIVE, TimeUnit.MILLISECONDS);
}
```

This constructor sets the parallelism to the number of available CPU cores (capped at MAX_CAP) and uses defaultForkJoinWorkerThreadFactory as the thread factory. It passes null as the exception handler, so no custom handler is installed. It also sets asyncMode to false, so local queues are processed in LIFO (last in, first out) order, which suits tasks whose subtasks are joined (merged).
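The defaults described above can be checked at runtime with the pool's getter methods. Here is a small sketch; the class name is illustrative.

```java
import java.util.concurrent.ForkJoinPool;

class DefaultPoolDemo {
    public static void main(String[] args) {
        ForkJoinPool pool = new ForkJoinPool();
        // Equals the number of available processors (MAX_CAP is far larger on ordinary machines)
        System.out.println("parallelism = " + pool.getParallelism()
                + ", processors = " + Runtime.getRuntime().availableProcessors());
        System.out.println("asyncMode = " + pool.getAsyncMode());              // false: LIFO
        System.out.println("handler = " + pool.getUncaughtExceptionHandler()); // null
        System.out.println("default factory = "
                + (pool.getFactory() == ForkJoinPool.defaultForkJoinWorkerThreadFactory)); // true
        pool.shutdown();
    }
}
```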

Work-stealing algorithm

The tasks of a ForkJoinPool are divided into "external tasks" and "internal tasks" (subtasks split off from other tasks), and the two are stored in different places. External tasks are stored in the global queue of the ForkJoinPool. Subtasks are placed in internal queues as "internal tasks", and every thread in the ForkJoinPool maintains its own internal queue to hold them. Because a ForkJoinPool has multiple threads, it also has multiple queues, and the work can become unevenly distributed. Some queues hold many tasks and their threads are always busy, while other queues are empty and their threads sit idle. The work-stealing algorithm is the way to solve this problem.
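The standard method ForkJoinTask.inForkJoinPool() reports whether the current thread is a ForkJoinPool worker, which makes the distinction visible. In this sketch (class names are illustrative), the task is submitted from main, an ordinary thread, so it enters the pool as an external task. The subtask is forked inside compute() by a worker thread, so it goes to that worker's pool as an internal task.

```java
import java.util.concurrent.ForkJoinPool;
import java.util.concurrent.ForkJoinTask;
import java.util.concurrent.RecursiveTask;

class ExternalVsInternal {
    // Reports whether it runs on a pool worker, optionally forking one child.
    static class Probe extends RecursiveTask<String> {
        private final boolean forkChild;
        Probe(boolean forkChild) { this.forkChild = forkChild; }

        @Override
        protected String compute() {
            String here = "inForkJoinPool=" + ForkJoinTask.inForkJoinPool();
            if (forkChild) {
                Probe child = new Probe(false);
                child.fork(); // forked by a worker thread: an "internal task"
                return here + " / child " + child.join();
            }
            return here;
        }
    }

    public static void main(String[] args) {
        // main is not a worker thread, so the submission below is an "external task"
        System.out.println("main: inForkJoinPool=" + ForkJoinTask.inForkJoinPool()); // false
        ForkJoinPool pool = new ForkJoinPool(2);
        System.out.println(pool.invoke(new Probe(true)));
        // inForkJoinPool=true / child inForkJoinPool=true
        pool.shutdown();
    }
}
```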

The core idea of work stealing is simple. When a worker thread has finished the tasks in its own queue, it checks whether other task queues still contain tasks and, if so, executes tasks from those queues, which improves overall efficiency. Put simply: once I have finished my own work and the others are still busy, I help them. This raises a new problem: a thread that helps others may compete with the owning thread for the same task. The usual solution is for a thread to take tasks from its own local queue in LIFO (last in, first out) order, while a thread stealing from another queue takes tasks in FIFO (first in, first out) order. The owner and the thief therefore work from opposite ends of the queue.
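The sketch below is a single-threaded model of one worker's deque that shows which end each party uses. It uses java.util.ArrayDeque purely for illustration. ForkJoinPool's real work queue is its own concurrent array-based deque, and the task names are hypothetical.

```java
import java.util.ArrayDeque;
import java.util.Deque;

class StealingOrderDemo {
    // Returns {task taken by the owner, task taken by a thief, tasks left}.
    static String[] simulate() {
        Deque<String> queue = new ArrayDeque<>();
        // The owning worker forks four subtasks; push() adds each at the head ("top").
        for (int i = 1; i <= 4; i++) {
            queue.push("task-" + i);
        }
        String ownerTakes = queue.pop();      // owner: head, newest first (LIFO)
        String thiefTakes = queue.pollLast(); // thief: tail, oldest first (FIFO)
        return new String[] {ownerTakes, thiefTakes, queue.toString()};
    }

    public static void main(String[] args) {
        String[] r = simulate();
        System.out.println("owner runs " + r[0] + ", thief steals " + r[1] + ", left: " + r[2]);
        // owner runs task-4, thief steals task-1, left: [task-3, task-2]
    }
}
```

Taking the oldest task also tends to suit divide-and-conquer work: the earliest forked subtasks are usually the largest, so a thief gets a sizeable chunk of work per steal.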

Advantages of work stealing

1. A thread does not block while waiting for a subtask to finish or when it has no task of its own. Instead, it scans all queues for tasks to steal, and it is only suspended when every queue is empty.

2. ForkJoin maintains a queue for each thread. This queue is an array-based double-ended queue (deque) from which tasks can be taken at both ends, which greatly reduces contention and improves parallel performance.

ForkJoin principle

- The tasks of a ForkJoinPool are divided into "external tasks" and "internal tasks". These are only abstract concepts; the tasks are not literally internal or external.

- External tasks are stored in the global queue of the ForkJoinPool.

- Each thread in the ForkJoinPool maintains a task queue for storing "internal tasks". When a thread splits a task, the resulting subtasks are placed in its internal queue as "internal tasks".

- When a worker thread wants the result of a subtask, it first checks whether the subtask has already completed. If not, it checks whether the subtask has been stolen by another thread. If it has not been stolen, the thread executes the subtask itself. If it has been stolen, the thread executes other tasks from its own "internal queue", or scans other queues and steals tasks from them.

- When a worker thread has finished its "internal tasks" and is idle, it scans the other task queues to steal tasks, so that it avoids blocking and waiting as far as possible.

When a ForkJoin thread waits for a task to complete, it either executes the task itself or, if another thread has stolen the task, executes other tasks in the meantime. Unless all task queues are empty, it does not simply block and wait, which avoids wasting resources.
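The "execute the task itself" case can be observed with a pool that has a single worker. The sketch below uses naive Fibonacci only as an arbitrary fork-heavy task, and the class names are illustrative. With one worker there is normally no other thread to steal the forked subtask, so each join() finds the subtask still in the joining worker's own queue and runs it directly. A joining thread that simply blocked would leave a one-thread pool unable to finish.

```java
import java.util.concurrent.ForkJoinPool;
import java.util.concurrent.RecursiveTask;

class JoinWithoutBlockingDemo {
    // Naive recursive Fibonacci: used only because it forks and joins a lot.
    static class Fib extends RecursiveTask<Integer> {
        private final int n;
        Fib(int n) { this.n = n; }

        @Override
        protected Integer compute() {
            if (n <= 1) {
                return n;
            }
            Fib f1 = new Fib(n - 1);
            f1.fork();                         // pushed onto this worker's own queue
            int f2 = new Fib(n - 2).compute(); // work on the other half directly
            return f1.join() + f2;             // not stolen, so this thread runs f1 itself
        }
    }

    public static void main(String[] args) {
        // A single worker: every join() must be satisfied without another thread's help
        ForkJoinPool pool = new ForkJoinPool(1);
        System.out.println(pool.invoke(new Fib(20))); // 6765
        pool.shutdown();
    }
}
```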

References

Nien (ed.), Java High Concurrency Core Programming (Volume 2): Multithreading, Locks, JMM, JUC, High-Concurrency Design.