Review of thread basics

Program: static code stored on the hard disk.

Process: a running program; it is the unit to which the operating system allocates memory.

Thread: the smallest unit of execution within a process and the unit the CPU schedules. A thread depends on its process and cannot exist outside it.

Creating threads

- Extend the Thread class and override run().

- Implement the Runnable interface and override run(), then create a Thread object and pass the task to it.

- Implement the Callable interface and override call(). Unlike run(), call() has a return value and can throw a checked exception. Wrap the task in a FutureTask, then create a Thread object and pass the FutureTask to it.
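A minimal sketch of the three approaches above. The class names (`CreateThreadsDemo`, `MyThread`, `MyTask`, `SumTask`) and the `runAll` helper are hypothetical, chosen for illustration. `FutureTask` is the JDK class that adapts a `Callable` so that a `Thread` can run it and the result can be collected later.

```java
import java.util.concurrent.Callable;
import java.util.concurrent.ExecutionException;
import java.util.concurrent.FutureTask;

class CreateThreadsDemo {

    // 1. Extend Thread and override run()
    static class MyThread extends Thread {
        @Override
        public void run() {
            System.out.println("extends Thread: " + getName());
        }
    }

    // 2. Implement Runnable and override run(); the task is handed to a Thread object
    static class MyTask implements Runnable {
        @Override
        public void run() {
            System.out.println("implements Runnable: " + Thread.currentThread().getName());
        }
    }

    // 3. Implement Callable and override call(); it returns a value and may throw a checked exception
    static class SumTask implements Callable<Integer> {
        @Override
        public Integer call() throws Exception {
            int sum = 0;
            for (int i = 1; i <= 100; i++) {
                sum += i;
            }
            return sum;
        }
    }

    // Starts one thread of each kind and returns the Callable's result
    static int runAll() throws InterruptedException, ExecutionException {
        Thread t1 = new MyThread();
        Thread t2 = new Thread(new MyTask());
        FutureTask<Integer> future = new FutureTask<>(new SumTask()); // wraps the Callable as a Runnable
        Thread t3 = new Thread(future);

        t1.start(); // start() launches a new thread; calling run() directly would not
        t2.start();
        t3.start();
        t1.join();
        t2.join();
        return future.get(); // blocks until call() has finished
    }

    public static void main(String[] args) throws Exception {
        System.out.println("Callable result: " + runAll()); // Callable result: 5050
    }
}
```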

Common methods

run()

call()

start()

Thread state

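- start(): the thread enters the ready state.

- join(): the calling thread blocks until the joined thread finishes.

- sleep(): the thread blocks for the specified time.

- yield(): the thread voluntarily gives up the CPU, moving from the running state back to the ready state.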

Multithreading

When a program has several tasks to carry out at the same time, it needs multiple threads.

Multithreading can make a program run more efficiently.

It also improves CPU utilization.

Drawbacks:

Higher demands on the CPU.

Multiple threads may access the same shared resource at the same time.

Thread-safety issues:

These arise when multiple threads access the same shared data.

Solution: make threads queue up and use a lock.

- synchronized

A keyword that can modify a method or a code block. It is an implicit lock: the lock is acquired and released automatically.

- ReentrantLock

Can only lock a section of code. It is an explicit lock: you acquire and release it manually.
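The two options can be compared side by side. This is a sketch: the class `LockCounterDemo`, its counters and the `run` helper are hypothetical. Each increment is protected by a lock, so both counters should end at `threads * perThread`. Note that `unlock()` goes in a `finally` block so the explicit lock is released even if the protected code throws.

```java
import java.util.concurrent.locks.Lock;
import java.util.concurrent.locks.ReentrantLock;

class LockCounterDemo {

    private int syncCount = 0;
    private int lockCount = 0;
    private final Lock lock = new ReentrantLock();

    // synchronized: the lock on `this` is acquired and released automatically
    synchronized void incrementSync() {
        syncCount++;
    }

    // ReentrantLock: acquired and released by hand
    void incrementLock() {
        lock.lock();
        try {
            lockCount++;
        } finally {
            lock.unlock();
        }
    }

    // Runs `threads` threads that each increment both counters `perThread` times
    static int[] run(int threads, int perThread) throws InterruptedException {
        LockCounterDemo demo = new LockCounterDemo();
        Thread[] workers = new Thread[threads];
        for (int i = 0; i < threads; i++) {
            workers[i] = new Thread(() -> {
                for (int j = 0; j < perThread; j++) {
                    demo.incrementSync();
                    demo.incrementLock();
                }
            });
            workers[i].start();
        }
        for (Thread w : workers) {
            w.join(); // join() also makes the workers' writes visible to this thread
        }
        return new int[] {demo.syncCount, demo.lockCount};
    }

    public static void main(String[] args) throws InterruptedException {
        int[] counts = run(4, 10_000);
        System.out.println(counts[0] + " " + counts[1]); // 40000 40000
    }
}
```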

Daemon thread

Deadlock

Thread communication

wait()

notify()

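sleep() makes the thread wait for the specified time without releasing any lock it holds; when the time is up, the thread returns to the ready state.

wait() makes the thread wait until another thread wakes it with notify() or notifyAll() on the same object. wait() must be called while holding that object's lock (inside synchronized), and it releases the lock while waiting.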
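A minimal producer/consumer sketch of wait() and notifyAll(), assuming a one-slot mailbox. The `Mailbox` class and its methods are hypothetical. The `while` loops re-check the condition after waking, which guards against spurious wake-ups. notifyAll() is used because producers and consumers wait on the same monitor.

```java
class Mailbox {
    private String message; // null means the box is empty

    // Blocks until a message is available; wait() releases the lock on `this` while waiting
    synchronized String take() throws InterruptedException {
        while (message == null) {
            wait();
        }
        String m = message;
        message = null;
        notifyAll(); // wake a producer waiting for the box to empty
        return m;
    }

    // Blocks until the box is empty, then stores the message and wakes waiting consumers
    synchronized void put(String m) throws InterruptedException {
        while (message != null) {
            wait();
        }
        message = m;
        notifyAll();
    }

    // A producer thread sends three messages; the calling thread receives them in order
    static String exchange() throws InterruptedException {
        Mailbox box = new Mailbox();
        Thread producer = new Thread(() -> {
            try {
                for (String s : new String[] {"a", "b", "c"}) {
                    box.put(s);
                }
            } catch (InterruptedException e) {
                Thread.currentThread().interrupt();
            }
        });
        producer.start();
        StringBuilder received = new StringBuilder();
        for (int i = 0; i < 3; i++) {
            received.append(box.take());
        }
        producer.join();
        return received.toString(); // "abc"
    }
}
```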

Concurrent programming

What is concurrent programming?

- Concurrency: within a period of time, several tasks are carried out in turn (one after another).

Multiple threads access the same resource.

On a single-core CPU, threads only take turns; they never truly run at the same instant.

As CPUs have become more powerful and multi-core, multiple threads can truly execute at the same time, so several threads may access the same shared data at the same moment (for example, in a flash sale, where many users try to buy the same item at once).

Concurrent programming, in this sense, means ensuring that on multi-core, multithreaded systems only one thread at a time accesses shared data.

Locking is one way to achieve this, but it is not the only solution; there are other approaches.

- Below, we look at how concurrency problems arise and how to solve them, learn new ways to solve them, and examine how locks work internally.

Multi-core CPUs can truly execute in parallel, but in certain scenarios we want the program to execute concurrently instead.

- Parallelism: at a single point in time, several tasks are carried out simultaneously.

Advantages of multithreading:

Improves program performance by performing multiple tasks at the same time.

Makes full use of the available hardware.

Disadvantages of multithreading:

Safety (access to shared variables).

Performance (the CPU spends time switching between threads).

Why concurrency problems occur:

The CPU, memory, and hard disk read and write at very different speeds (CPU > memory > hard disk).

To bridge these gaps, the system applies some optimizations:

The CPU adds caches.

The compiler and processor may reorder instructions to optimize execution (this optimization can sometimes cause problems).

As a result, the data in a cache and in main memory can become inconsistent.

Reordering can also affect the results of subsequent code.

Java Memory Model (JMM)

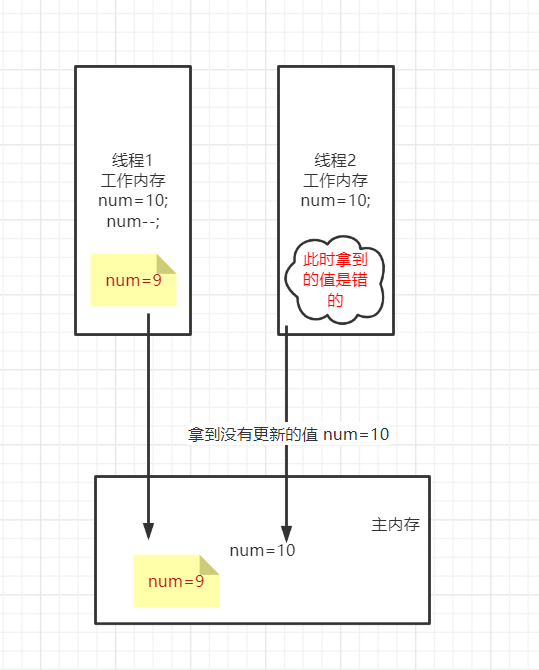

Figure: the Java Memory Model (JMM)

- Under the JMM, each thread works on a copy of shared data in its own working memory (a local cache); only after the operation completes is the data written back to main memory.

- This creates visibility problems:

Thread B may not see a change that thread A has made.

The compiler and processor may reorder instructions, which leads to ordering problems.

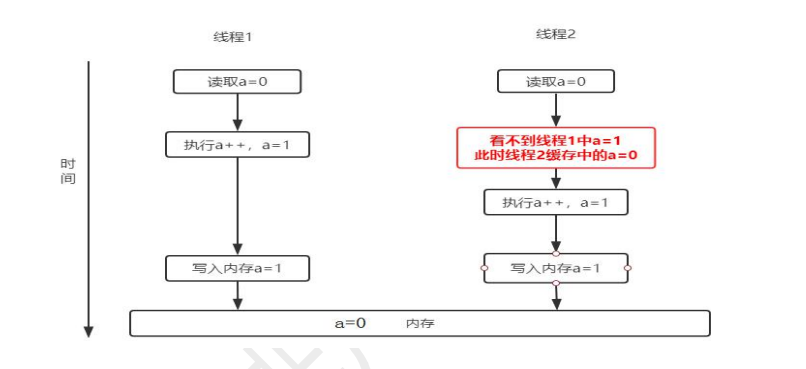

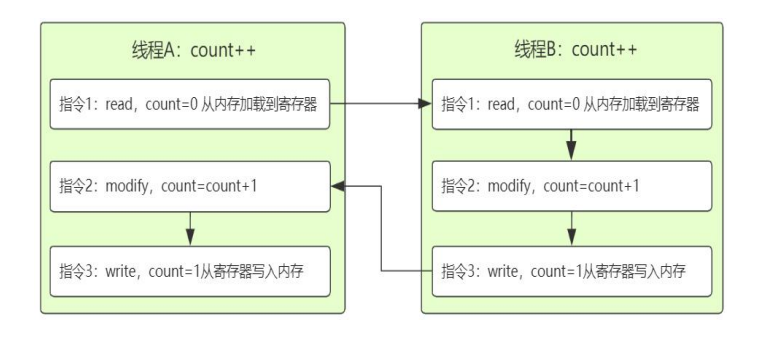

- Thread switching leads to atomicity (indivisibility) problems.

Core issues of concurrent programming:

- Each thread's local cache can cause visibility problems.

- Instruction reordering by compiler optimization can cause ordering problems.

- Thread switching during execution can cause atomicity problems.

How to solve these problems

- volatile keyword

1. A shared variable modified by volatile is immediately visible to other threads after one thread changes it.
2. Reordering optimizations are prohibited for volatile shared variables.
3. volatile cannot guarantee the atomicity of operations on the variable.

Test case

```java
// ThreadDemo.java
public class ThreadDemo implements Runnable {

    /*
     * A volatile variable modified in one thread is immediately visible to other threads.
     * volatile also prohibits the CPU from reordering instructions around it.
     */
    private volatile boolean flag = false; // shared data

    @Override
    public void run() {
        try {
            // Sleep so this thread does not change flag before the main thread starts reading it
            Thread.sleep(200);
        } catch (InterruptedException e) {
            e.printStackTrace();
        }
        this.flag = true; // this thread modifies the shared variable
        System.out.println(this.flag);
    }

    public boolean getFlag() {
        return flag;
    }

    public void setFlag(boolean flag) {
        this.flag = flag;
    }
}

// TestVolatile.java
public class TestVolatile {
    public static void main(String[] args) {
        ThreadDemo td = new ThreadDemo();
        Thread t = new Thread(td); // create the thread
        t.start();

        // The main thread also reads flag
        while (true) {
            if (td.getFlag()) {
                System.out.println("main---------------");
                break;
            }
        }
    }
}
```

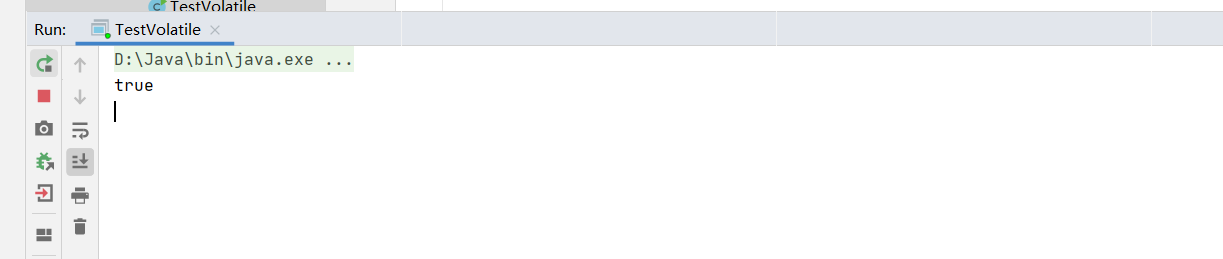

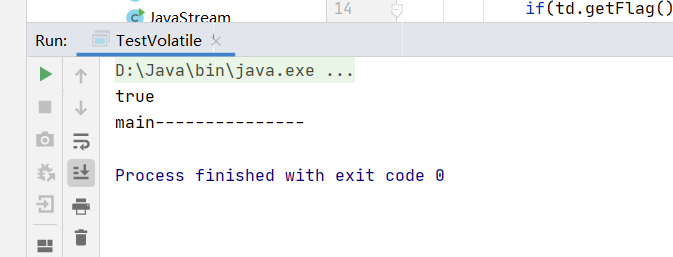

Result without volatile:

- The main thread may never see the other thread's change to flag, so it keeps looping.

Result with volatile:

- The main thread sees the other thread's change to flag and leaves the loop.

How to guarantee atomicity

- Locks: ReentrantLock

- synchronized

- Atomic classes in the java.util.concurrent.atomic package

AtomicInteger implements atomic operations with volatile + CAS.

Test case

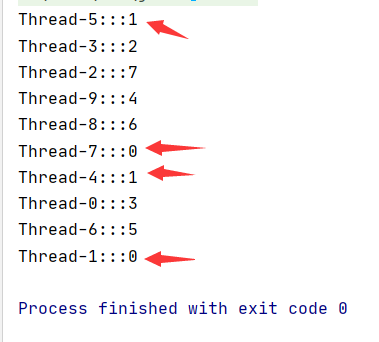

- Plain int (no volatile)

```java
// ThreadDemo.java
public class ThreadDemo implements Runnable {

    private int num = 0; // shared variable

    @Override
    public void run() {
        try {
            Thread.sleep(200);
        } catch (InterruptedException e) {
            e.printStackTrace();
        }
        System.out.println(Thread.currentThread().getName() + ":::" + getNum());
    }

    public int getNum() {
        return num++; // read-modify-write: not atomic
    }
}

// Test.java
public class Test {
    public static void main(String[] args) {
        ThreadDemo td = new ThreadDemo();
        for (int i = 0; i < 10; i++) { // create 10 threads in a loop
            Thread t = new Thread(td);
            t.start();
        }
    }
}
```

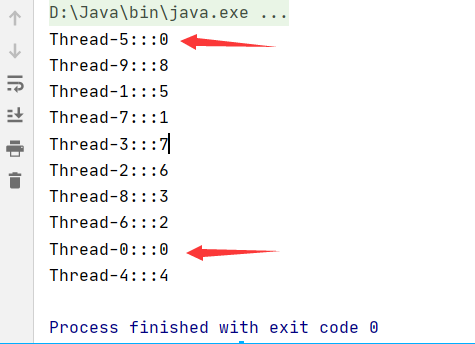

- With the volatile keyword

```java
// ThreadDemo.java
public class ThreadDemo implements Runnable {

    private volatile int num = 0; // visible to all threads, but num++ is still not atomic

    @Override
    public void run() {
        try {
            Thread.sleep(200);
        } catch (InterruptedException e) {
            e.printStackTrace();
        }
        System.out.println(Thread.currentThread().getName() + ":::" + getNum());
    }

    public int getNum() {
        return num++;
    }
}

// Test.java
public class Test {
    public static void main(String[] args) {
        ThreadDemo td = new ThreadDemo();
        for (int i = 0; i < 10; i++) { // create 10 threads in a loop
            Thread t = new Thread(td);
            t.start();
        }
    }
}
```

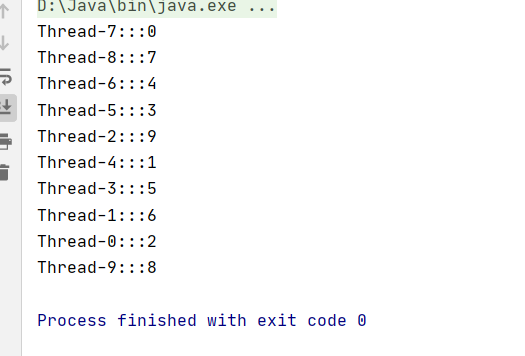

The volatile keyword cannot guarantee atomicity.

- An atomic class solves the problem:

```java
// ThreadDemo.java
import java.util.concurrent.atomic.AtomicInteger;

public class ThreadDemo implements Runnable {

    private final AtomicInteger num = new AtomicInteger(0);

    @Override
    public void run() {
        try {
            Thread.sleep(200);
        } catch (InterruptedException e) {
            e.printStackTrace();
        }
        System.out.println(Thread.currentThread().getName() + ":::" + getNum());
    }

    public int getNum() {
        return num.getAndIncrement(); // atomic: returns the old value and adds 1
    }
}

// Test.java
public class Test {
    public static void main(String[] args) {
        ThreadDemo td = new ThreadDemo();
        for (int i = 0; i < 10; i++) { // create 10 threads in a loop
            Thread t = new Thread(td);
            t.start();
        }
    }
}
```

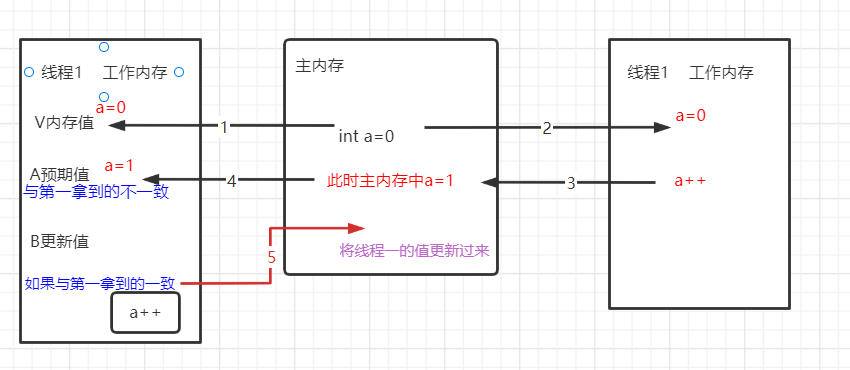

CAS (Compare-And-Swap)

CAS (compare and swap) is an algorithm that relies on hardware support for atomic concurrent operations. It is best suited to situations with low contention.

CAS is an implementation of optimistic locking. It follows the spin-lock idea and is a lightweight synchronization mechanism.

- Optimistic locking: solves the problem without taking a lock.

- Spin lock: keeps checking (polling) in a loop instead of blocking.

CAS involves three operands:

1. The memory value V
2. The expected value A (the value the thread read earlier)
3. The new value B

If and only if A == V is the memory value V set to B; otherwise nothing is done.

Because no lock is held, threads are never blocked.

Disadvantages:

- Under high contention, threads keep spinning and retrying, which drives up CPU usage.

- ABA problems may occur. They can be solved by attaching a version number to the value, so that a modification can be detected.

The ABA problem: thread 1 reads value A. Meanwhile, thread 2 changes the value from A to B and then back to A. When thread 1 performs its CAS, it sees A again and succeeds, even though the value was modified in between.
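The sketch below shows both ideas. `casIncrement` is a hand-written CAS retry loop (roughly what `AtomicInteger.incrementAndGet()` does internally, shown here for illustration). `staleCasSucceeds` simulates the A -> B -> A interleaving in a single thread using `AtomicStampedReference`, the JDK class that pairs a reference with an int stamp (version number). The class and method names are hypothetical. `AtomicStampedReference` compares references with `==`, so the example relies on string literals being interned.

```java
import java.util.concurrent.atomic.AtomicInteger;
import java.util.concurrent.atomic.AtomicStampedReference;

class CasDemo {

    // CAS retry loop: read A, compute B, retry until compareAndSet(A, B) succeeds
    static int casIncrement(AtomicInteger value) {
        while (true) {
            int expected = value.get();   // A: the value this thread read
            int updated = expected + 1;   // B: the new value
            if (value.compareAndSet(expected, updated)) {
                return updated;           // V was still A, so V is now B
            }
            // V changed in between: spin and retry, without blocking
        }
    }

    static int parallelIncrements(int threads, int perThread) throws InterruptedException {
        AtomicInteger counter = new AtomicInteger();
        Thread[] workers = new Thread[threads];
        for (int i = 0; i < threads; i++) {
            workers[i] = new Thread(() -> {
                for (int j = 0; j < perThread; j++) {
                    casIncrement(counter);
                }
            });
            workers[i].start();
        }
        for (Thread w : workers) {
            w.join();
        }
        return counter.get();
    }

    // ABA with a version stamp: returns whether a CAS based on a stale stamp succeeds
    static boolean staleCasSucceeds() {
        AtomicStampedReference<String> ref = new AtomicStampedReference<>("A", 0);
        int staleStamp = ref.getStamp();                                 // thread 1 reads "A", stamp 0

        ref.compareAndSet("A", "B", ref.getStamp(), ref.getStamp() + 1); // thread 2: A -> B, stamp 1
        ref.compareAndSet("B", "A", ref.getStamp(), ref.getStamp() + 1); // thread 2: B -> A, stamp 2

        // The value is "A" again, but the stamp is 2 rather than 0, so thread 1's CAS fails
        return ref.compareAndSet("A", "C", staleStamp, staleStamp + 1);
    }

    public static void main(String[] args) throws InterruptedException {
        System.out.println(parallelIncrements(4, 1000)); // 4000
        System.out.println(staleCasSucceeds());          // false
    }
}
```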

Common JUC classes

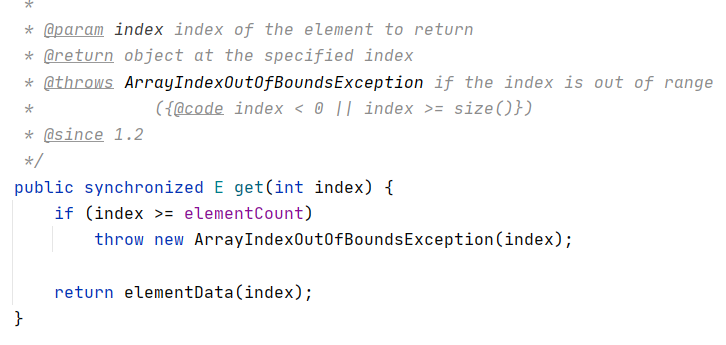

- HashMap: not thread-safe
- Hashtable: thread-safe
- ArrayList: not thread-safe
- Vector: thread-safe

Under very high concurrency, Hashtable and Vector are thread-safe but inefficient.

The JUC package (java.util.concurrent) provides classes designed for high concurrency. They are reliably thread-safe and more efficient than Hashtable and Vector.

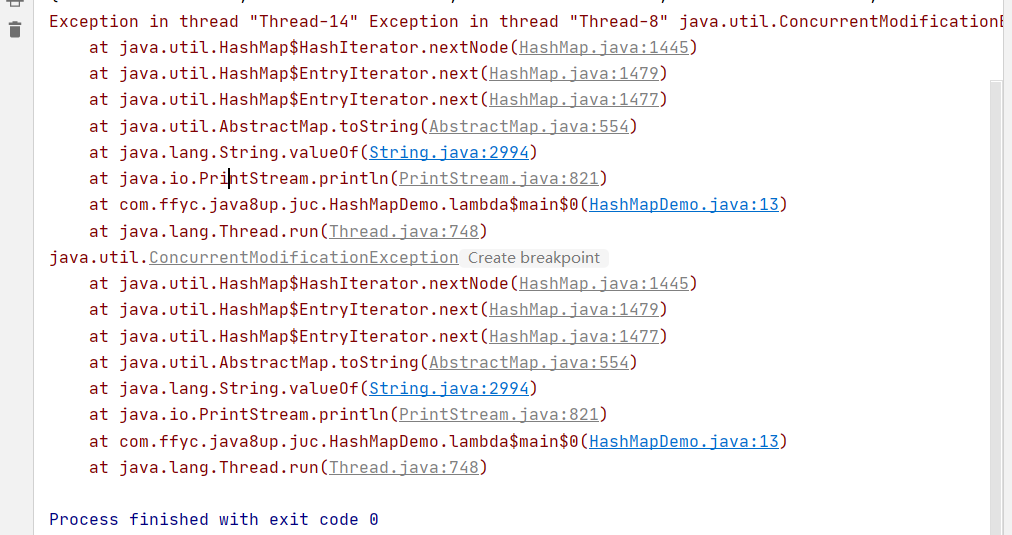

```java
import java.util.HashMap;
import java.util.Random;

/*
 * HashMap is not thread-safe.
 * Printing the map while other threads modify it can throw
 * ConcurrentModificationException.
 */
public class HashMapDemo {
    public static void main(String[] args) {
        HashMap<String, Integer> hashMap = new HashMap<>();
        for (int i = 0; i < 20; i++) {
            new Thread(() -> {
                hashMap.put(Thread.currentThread().getName(), new Random().nextInt());
                System.out.println(hashMap); // toString() iterates over the map
            }).start();
        }
    }
}
```

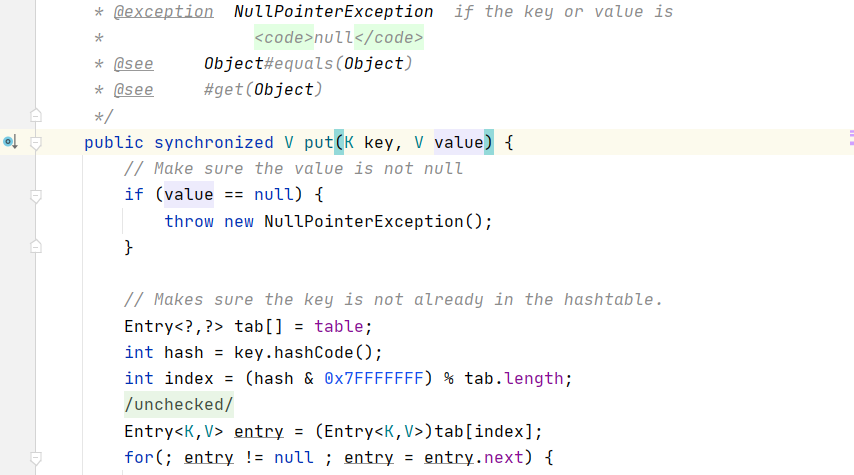

Hashtable and ConcurrentHashMap

- Hashtable simply locks the whole put method, which is inefficient; it is only suitable for low concurrency.

- ConcurrentHashMap originally used segment locking and did not lock the entire hash table. Since JDK 8 it no longer uses segment locks; instead, each array position (bucket) is locked separately.

It is implemented with CAS + synchronized:

When inserting, check whether the target position in the hash table is empty, that is, whether the new node will be its first node. If so, insert it with CAS (retrying in a loop).

If the position already holds a value, its first Node object is used as the lock object, and the insertion is done under synchronized.
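A small sketch contrasting with the HashMapDemo earlier: many threads update the same key of a ConcurrentHashMap through `merge()`, which ConcurrentHashMap performs atomically per key. The class and method names are hypothetical. With a plain HashMap, the same code could lose updates.

```java
import java.util.Map;
import java.util.concurrent.ConcurrentHashMap;

class ConcurrentHashMapDemo {

    // `threads` threads each add 1 to the same key `perThread` times
    static int countWithMerge(int threads, int perThread) throws InterruptedException {
        Map<String, Integer> counts = new ConcurrentHashMap<>();
        Thread[] workers = new Thread[threads];
        for (int i = 0; i < threads; i++) {
            workers[i] = new Thread(() -> {
                for (int j = 0; j < perThread; j++) {
                    // merge() on a ConcurrentHashMap is performed atomically
                    counts.merge("hits", 1, Integer::sum);
                }
            });
            workers[i].start();
        }
        for (Thread w : workers) {
            w.join();
        }
        return counts.get("hits");
    }

    public static void main(String[] args) throws InterruptedException {
        System.out.println(countWithMerge(20, 1_000)); // 20000
    }
}
```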

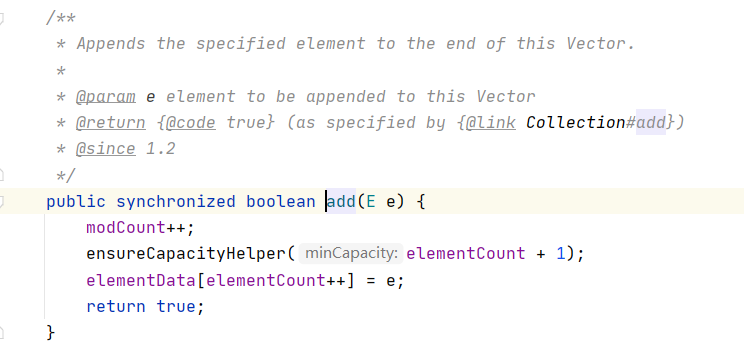

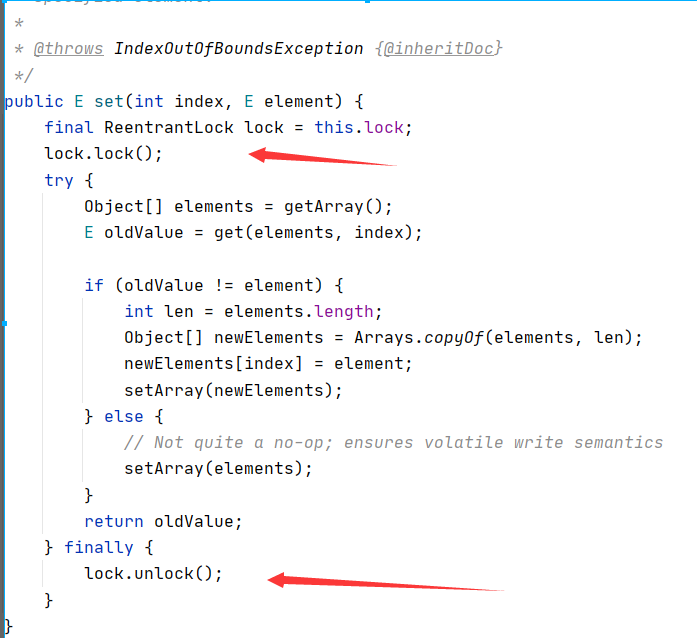

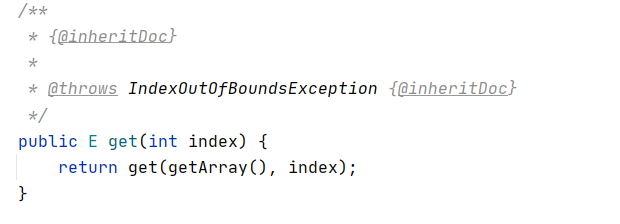

CopyOnWriteArrayList, ArrayList, and Vector

- ArrayList is not thread-safe, and problems may occur under high concurrency.

- Vector is thread-safe, but both its reads and its writes are locked, which wastes resources. Reads should be allowed to proceed in parallel, because reading alone is thread-safe.

- CopyOnWriteArrayList makes full use of read performance: reads take no lock at all, and writes do not block reads. Only writes have to wait for other writes, so read performance improves greatly.

When adding an element:

1. Copy the original array.
2. Add the new element to the copy.
3. Replace the original array with the copy.
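A consequence of the copy-on-write steps above is that an iterator works on the array snapshot it started with. The sketch (class and method names hypothetical) modifies the list while iterating over it. With an ArrayList, the same loop would throw ConcurrentModificationException. Here, the loop visits only the three original elements.

```java
import java.util.List;
import java.util.concurrent.CopyOnWriteArrayList;

class CopyOnWriteDemo {

    // Appends a modified copy of each element while iterating; returns how many elements the loop visited
    static int iterateWhileAdding(List<String> list) {
        int visited = 0;
        for (String s : list) {   // the iterator uses the snapshot taken when the loop started
            list.add(s + "!");    // each add copies the array, so no ConcurrentModificationException
            visited++;
        }
        return visited;
    }

    public static void main(String[] args) {
        List<String> list = new CopyOnWriteArrayList<>(List.of("a", "b", "c"));
        System.out.println(iterateWhileAdding(list)); // 3
        System.out.println(list);                     // [a, b, c, a!, b!, c!]
    }
}
```

Every write copies the whole array, so this design pays off when reads greatly outnumber writes.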

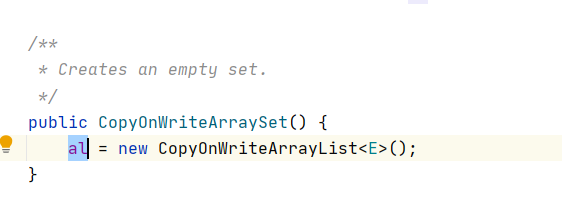

CopyOnWriteArraySet

- CopyOnWriteArraySet is implemented on top of CopyOnWriteArrayList and does not store duplicate elements.
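A tiny sketch of the no-duplicates behavior (the class and method names are hypothetical): `add()` returns false for an element that is already present, so the set keeps one copy of each.

```java
import java.util.Set;
import java.util.concurrent.CopyOnWriteArraySet;

class CopyOnWriteSetDemo {

    // Adds all items and returns how many distinct ones the set kept
    static int distinctCount(String... items) {
        Set<String> set = new CopyOnWriteArraySet<>();
        for (String item : items) {
            set.add(item); // returns false if the item is already present
        }
        return set.size();
    }

    public static void main(String[] args) {
        System.out.println(distinctCount("a", "b", "a", "c", "b")); // 3
    }
}
```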

Helper classes CountDownLatch and CyclicBarrier

- CountDownLatch lets a thread wait until other threads have finished before it continues. It is implemented with a counter whose initial value is the number of threads. Each time a thread finishes, the counter is decremented by 1. When the counter reaches 0, all threads have finished, and the threads waiting on the latch can resume.

- If the counter's initial value is greater than the number of threads that count down, the counter never reaches 0 and the waiting thread blocks forever, like a deadlock.

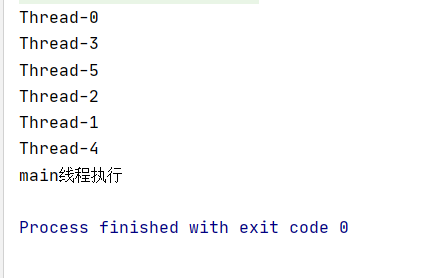

```java
import java.util.concurrent.CountDownLatch;

public class CountDownLatchDemo {
    /*
     * CountDownLatch helper class
     * Makes a thread wait until other threads have finished before it continues.
     * It works like a decreasing thread counter: start from a given number,
     * subtract 1 each time a thread finishes, and once it reaches zero
     * the waiting thread can continue.
     */
    public static void main(String[] args) throws InterruptedException {
        CountDownLatch downLatch = new CountDownLatch(6); // initial count
        for (int i = 0; i < 6; i++) {
            new Thread(() -> {
                System.out.println(Thread.currentThread().getName());
                downLatch.countDown(); // decrement the counter by one
            }).start();
        }
        downLatch.await(); // block until the counter reaches zero
        System.out.println("main thread continues");
    }
}
```

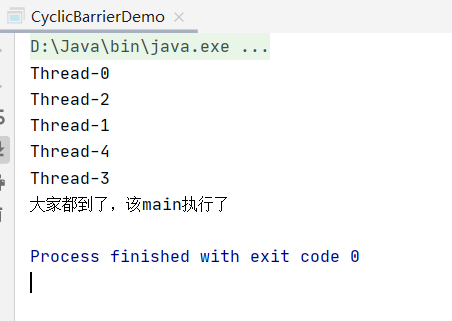

- CyclicBarrier is a synchronization helper class that blocks each thread in a group when it reaches a barrier. The barrier opens only when the last thread arrives.

```java
import java.util.concurrent.BrokenBarrierException;
import java.util.concurrent.CyclicBarrier;

public class CyclicBarrierDemo {
    /*
     * CyclicBarrier: each thread in a group blocks when it reaches the barrier;
     * the barrier opens only when the last thread arrives.
     */
    public static void main(String[] args) {
        // 5 parties; the barrier action runs once, in the last thread to arrive
        CyclicBarrier c = new CyclicBarrier(5,
                () -> System.out.println("Everybody's here; the barrier opens"));
        for (int i = 0; i < 5; i++) {
            new Thread(() -> {
                System.out.println(Thread.currentThread().getName());
                try {
                    c.await(); // wait here until all 5 threads have arrived
                } catch (InterruptedException | BrokenBarrierException e) {
                    e.printStackTrace();
                }
            }).start();
        }
    }
}
```

There are many lock-related terms: some describe a lock's characteristics, some its design, and some its state.

- Optimistic lock

Optimistic locking does not actually lock. It assumes that concurrent modifications will not cause problems, as in the CAS mechanism.

- Pessimistic lock

Pessimistic locking assumes that concurrent operations will cause problems, so it locks to ensure safety.

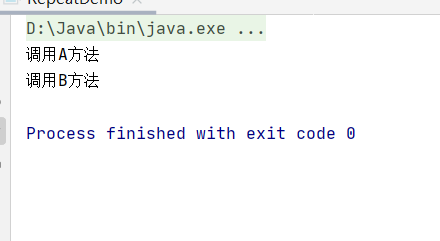

- Reentrant lock

When a synchronized method calls another method that uses the same lock, the thread can enter the inner synchronized method even though the outer method has not yet released the lock.

Both synchronized and ReentrantLock are reentrant locks.

```java
public class RepeatDemo {

    synchronized void setA() {
        System.out.println("call A method");
        setB(); // needs the lock on `this`, which this thread already holds
    }

    synchronized void setB() {
        System.out.println("call B method");
    }

    public static void main(String[] args) {
        new RepeatDemo().setA();
    }
}
```

If the lock were not reentrant, the current thread could not enter setB() while still holding the lock in setA(), and it would deadlock on itself.

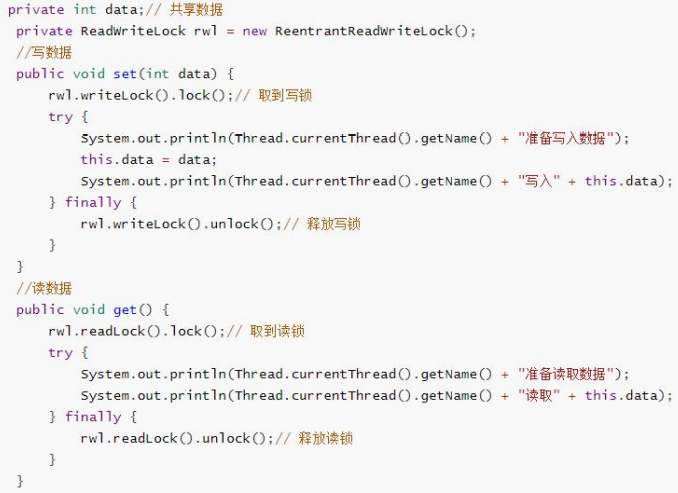

Read-write lock

- Uses two separate locks, one for reading and one for writing.

- Multiple threads can read at the same time.

- Writes are mutually exclusive: only one thread may write at a time, and reading and writing cannot happen at the same time.

- Writes take precedence over reads: once a write is pending, reads must wait, and writer threads can be favored when waking waiting threads.
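A minimal cache sketch using `ReentrantReadWriteLock` from the JDK. The `ReadWriteCache` class and its `fillAndCount` demo are hypothetical. The sketch shows the read/write split only; it does not attempt to demonstrate writer priority, which depends on the lock's fairness policy.

```java
import java.util.HashMap;
import java.util.Map;
import java.util.concurrent.locks.ReadWriteLock;
import java.util.concurrent.locks.ReentrantReadWriteLock;

class ReadWriteCache {
    private final Map<String, String> map = new HashMap<>(); // not thread-safe on its own
    private final ReadWriteLock rwLock = new ReentrantReadWriteLock();

    // Shared read lock: many threads may read at the same time
    String get(String key) {
        rwLock.readLock().lock();
        try {
            return map.get(key);
        } finally {
            rwLock.readLock().unlock();
        }
    }

    // Exclusive write lock: one writer at a time, and no readers while writing
    void put(String key, String value) {
        rwLock.writeLock().lock();
        try {
            map.put(key, value);
        } finally {
            rwLock.writeLock().unlock();
        }
    }

    // `threads` writer threads each put one key; returns how many keys can be read back
    static int fillAndCount(int threads) throws InterruptedException {
        ReadWriteCache cache = new ReadWriteCache();
        Thread[] writers = new Thread[threads];
        for (int i = 0; i < threads; i++) {
            String key = "k" + i;
            writers[i] = new Thread(() -> cache.put(key, "v"));
            writers[i].start();
        }
        for (Thread w : writers) {
            w.join();
        }
        int found = 0;
        for (int i = 0; i < threads; i++) {
            if ("v".equals(cache.get("k" + i))) {
                found++;
            }
        }
        return found;
    }
}
```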

Segmented lock

It is not a specific lock implementation but a locking strategy: the data is divided into segments that are locked separately, which reduces lock granularity and improves efficiency.

Spin lock

It is not a specific lock implementation either. A thread tries to obtain the lock by spinning (retrying in a loop) instead of entering the blocked state. It is suitable when the lock is held only briefly.
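A minimal, non-reentrant spin lock sketch built on CAS. The `SpinLock` class is hypothetical and not a JDK type. `Thread.onSpinWait()` (Java 9+) only hints to the CPU that the thread is busy-waiting. Waiting threads never block; they burn CPU until the CAS succeeds, which is why spinning only pays off for short critical sections.

```java
import java.util.concurrent.atomic.AtomicReference;

class SpinLock {
    // Holds the owning thread, or null when the lock is free
    private final AtomicReference<Thread> owner = new AtomicReference<>();

    void lock() {
        Thread current = Thread.currentThread();
        // Spin: retry the CAS until the lock goes from free (null) to owned by this thread
        while (!owner.compareAndSet(null, current)) {
            Thread.onSpinWait();
        }
    }

    void unlock() {
        // Only the owner can release the lock
        owner.compareAndSet(Thread.currentThread(), null);
    }

    // Threads increment a plain int that is protected only by the spin lock
    static int demo(int threads, int perThread) throws InterruptedException {
        SpinLock lock = new SpinLock();
        int[] counter = {0};
        Thread[] workers = new Thread[threads];
        for (int i = 0; i < threads; i++) {
            workers[i] = new Thread(() -> {
                for (int j = 0; j < perThread; j++) {
                    lock.lock();
                    try {
                        counter[0]++;
                    } finally {
                        lock.unlock();
                    }
                }
            });
            workers[i].start();
        }
        for (Thread w : workers) {
            w.join();
        }
        return counter[0];
    }
}
```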

Shared lock

A lock that can be held by multiple threads at the same time.

ReadWriteLock's read lock is shared, and multiple threads can read data at the same time.

Exclusive lock

A lock that only one thread can hold at a time.

ReentrantLock, synchronized, and the write lock of ReadWriteLock are exclusive locks.

ReentrantReadWriteLock is implemented with a synchronization queue. For example, when a thread obtains the resource, the state flag is set to "in use", and the other threads are added to a queue to wait.

AQS (AbstractQueuedSynchronizer)

AQS maintains a queue in which waiting threads line up.

Fair lock

Maintains a queue of waiting threads and lets them acquire the lock in turn.

ReentrantLock is unfair by default. You can choose a fair or an unfair lock through its constructor when creating it.
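Choosing fairness at construction time looks like this (the `FairLockDemo` class is a hypothetical wrapper; `ReentrantLock(boolean)` and `isFair()` are the JDK API). Per the JDK documentation, a fair lock under contention favors granting access to the longest-waiting thread.

```java
import java.util.concurrent.locks.ReentrantLock;

class FairLockDemo {

    // Returns {isFair() of a default lock, isFair() of a lock created with `true`}
    static boolean[] fairness() {
        ReentrantLock unfair = new ReentrantLock();   // default constructor: unfair
        ReentrantLock fair = new ReentrantLock(true); // fair: longest-waiting thread is favored
        return new boolean[] {unfair.isFair(), fair.isFair()};
    }

    public static void main(String[] args) {
        boolean[] f = fairness();
        System.out.println(f[0] + " " + f[1]); // false true
    }
}
```

Fair locks avoid starvation but usually have lower throughput, which is why unfair is the default.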

Unfair lock

The waiting order is not guaranteed. Once the lock is released, threads compete for it, and whichever grabs it first runs first. synchronized is an unfair lock.

Lock states

A lock can be in one of four states: unlocked, biased, lightweight, and heavyweight.

The lock state is recorded in a field of the object header (the Mark Word).

- Biased lock: if only one thread keeps acquiring the lock, the lock is biased toward that thread, which makes acquiring it cheaper. (In HotSpot, biased locking has been disabled by default since JDK 15.)

- Lightweight lock: when another thread accesses a biased lock, the lock is upgraded to a lightweight lock. Threads waiting for a lightweight lock do not block; they spin, trying again to obtain the lock, which improves efficiency.

- Heavyweight lock: if threads waiting for a lightweight lock spin too many times, or many threads contend for it, the lock is upgraded to a heavyweight lock. Threads that cannot obtain the lock then stop spinning and enter the blocked state.

Object header

In the HotSpot virtual machine, an object's memory layout is divided into three areas: the object header, instance data, and alignment padding. The object header is the basis for synchronized locking: the lock information for the object that synchronized locks on is stored in that object's header. This is the key to lightweight and biased locks.

synchronized

- It is a reentrant, unfair lock.

- It is a keyword that can modify code blocks or methods.

- It is implicit: the lock is acquired and released automatically.

- It locks and unlocks at the bytecode instruction level.

- For a synchronized block, the compiler emits a monitorenter and a monitorexit instruction.

When a thread executes monitorenter, the monitor's counter is incremented by 1 and the lock flag in the object header marks the lock as in use.

After execution, monitorexit decrements the counter by 1; when it reaches 0, the lock flag in the object header is changed back to unlocked.

ReentrantLock

- It is reentrant and can be either fair or unfair.

- It is a class and can only lock a block of code. It is explicit: you acquire and release it manually.

- Locking is controlled at the Java class level and implemented with CAS and the AQS queue.

- The class maintains a lock state. When a thread acquires the lock, the state is changed to 1, and other threads enter the queue to wait for the lock to be released. When the lock is released, the thread at the head of the queue is woken up to try to acquire it.

Thread pool

Consider a database connection pool. Without a pool, a Connection object is created every time we talk to the database and destroyed once the operation completes; creating and destroying connections this often is time-consuming.

With a pool, some connection objects are created in advance. When needed, a connection is taken directly from the pool and returned after use, so there is no need to create and destroy connections frequently.

Why use thread pools?

Under heavy concurrency, frequently creating and destroying threads is expensive. A thread pool reuses threads and relieves this pressure.

Since JDK 5, the ThreadPoolExecutor class has been available for creating thread pools, and it is the recommended approach. Its constructor takes seven parameters that define the pool's characteristics.

The meaning of each parameter in the constructor

- corePoolSize: the number of core threads. By default, a newly created pool has zero threads; a core thread is created when a task arrives, unless prestartAllCoreThreads() or prestartCoreThread() is called to create them in advance.

- maximumPoolSize: the maximum total number of threads the pool can hold.

- keepAliveTime: how long an idle non-core thread is kept before it is destroyed.

- unit: the time unit for keepAliveTime.

- workQueue: the queue of waiting tasks; you can choose its implementation class.

- threadFactory: the factory used to create threads.

- handler: the policy applied when a task is rejected.
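A sketch that passes all seven parameters explicitly. The `PoolConfigDemo` class, the `worker-N` naming scheme and the specific numbers are illustrative assumptions, not recommended values. The test checks that a new pool starts with zero threads, as described under corePoolSize.

```java
import java.util.concurrent.ArrayBlockingQueue;
import java.util.concurrent.ThreadFactory;
import java.util.concurrent.ThreadPoolExecutor;
import java.util.concurrent.TimeUnit;
import java.util.concurrent.atomic.AtomicInteger;

class PoolConfigDemo {

    // Builds a pool with every constructor parameter spelled out
    static ThreadPoolExecutor newPool() {
        AtomicInteger counter = new AtomicInteger();
        ThreadFactory factory = r -> new Thread(r, "worker-" + counter.incrementAndGet()); // names each thread
        return new ThreadPoolExecutor(
                2,                                      // corePoolSize
                4,                                      // maximumPoolSize
                30,                                     // keepAliveTime
                TimeUnit.SECONDS,                       // unit
                new ArrayBlockingQueue<Runnable>(10),   // workQueue
                factory,                                // threadFactory
                new ThreadPoolExecutor.AbortPolicy());  // handler
    }

    public static void main(String[] args) throws InterruptedException {
        ThreadPoolExecutor pool = newPool();
        for (int i = 0; i < 5; i++) {
            int id = i;
            pool.execute(() -> System.out.println(Thread.currentThread().getName() + " runs task " + id));
        }
        pool.shutdown();
        pool.awaitTermination(5, TimeUnit.SECONDS);
    }
}
```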

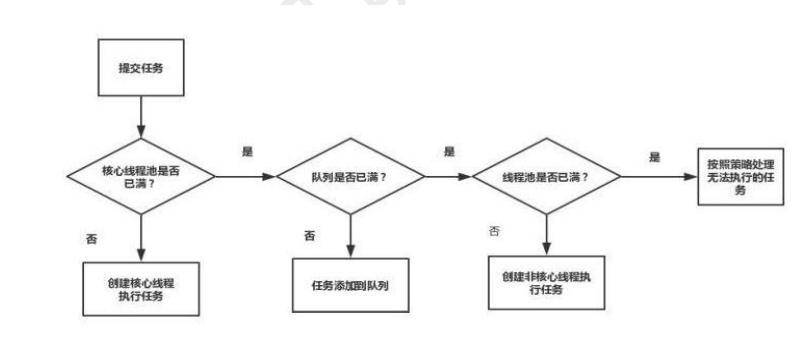

Thread pool execution

When a task is submitted to the thread pool:

1. First, check whether the core pool is full.

If the core pool is not full: create a core thread to handle the task.

If the core pool is full: check the waiting queue.

2. If the waiting queue is not full, the task waits in the queue. If it is full: check whether the whole pool (maximumPoolSize) is full.

If the waiting queue is full but the pool is not, a non-core thread is created to handle the task.

3. If tasks keep arriving and the core threads, the waiting queue, and the non-core threads are all full, the rejection policy is applied.
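The three steps can be observed with a deliberately tiny pool: 1 core thread, at most 2 threads, and a queue of capacity 1. The `PoolFlowDemo` class is hypothetical, and a `CountDownLatch` keeps every task busy so the pool fills up in a predictable order. Task 1 gets the core thread, task 2 waits in the queue, task 3 gets a non-core thread, and task 4 is rejected by AbortPolicy.

```java
import java.util.concurrent.ArrayBlockingQueue;
import java.util.concurrent.CountDownLatch;
import java.util.concurrent.RejectedExecutionException;
import java.util.concurrent.ThreadPoolExecutor;
import java.util.concurrent.TimeUnit;

class PoolFlowDemo {

    // Submits `tasks` blocking tasks; returns {largest pool size, number of rejected tasks}
    static int[] run(int tasks) {
        ThreadPoolExecutor pool = new ThreadPoolExecutor(
                1, 2, 60, TimeUnit.SECONDS,
                new ArrayBlockingQueue<Runnable>(1),
                new ThreadPoolExecutor.AbortPolicy());
        CountDownLatch release = new CountDownLatch(1); // keeps every task busy until released
        int rejected = 0;
        for (int i = 0; i < tasks; i++) {
            try {
                pool.execute(() -> {
                    try {
                        release.await();
                    } catch (InterruptedException e) {
                        Thread.currentThread().interrupt();
                    }
                });
            } catch (RejectedExecutionException e) {
                rejected++; // core thread, queue and non-core thread are all full
            }
        }
        int largest = pool.getLargestPoolSize();
        release.countDown(); // let the running and queued tasks finish
        pool.shutdown();
        return new int[] {largest, rejected};
    }

    public static void main(String[] args) {
        int[] r = run(4);
        System.out.println("threads=" + r[0] + " rejected=" + r[1]); // threads=2 rejected=1
    }
}
```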

Queue in thread pool

Commonly used work queues in a thread pool include:

- ArrayBlockingQueue: a bounded blocking queue backed by an array that orders tasks FIFO. Its capacity must be specified.

- LinkedBlockingQueue: a blocking queue based on a linked list that orders tasks FIFO. Its capacity is optional; if it is not set, the queue is unbounded, with a maximum length of Integer.MAX_VALUE. Its throughput is usually higher than that of ArrayBlockingQueue.
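A short sketch of the capacity difference (the `QueueDemo` class is hypothetical; `offer()`, `poll()` and `remainingCapacity()` are standard `BlockingQueue` methods). `offer()` returns false instead of blocking when a bounded queue is full.

```java
import java.util.concurrent.ArrayBlockingQueue;
import java.util.concurrent.BlockingQueue;
import java.util.concurrent.LinkedBlockingQueue;

class QueueDemo {

    // Offers 0..n-1 and counts how many the queue accepts
    static int accepted(BlockingQueue<Integer> queue, int n) {
        int ok = 0;
        for (int i = 0; i < n; i++) {
            if (queue.offer(i)) {
                ok++;
            }
        }
        return ok;
    }

    public static void main(String[] args) {
        BlockingQueue<Integer> bounded = new ArrayBlockingQueue<>(2);   // capacity is required
        BlockingQueue<Integer> unbounded = new LinkedBlockingQueue<>(); // capacity defaults to Integer.MAX_VALUE
        System.out.println(accepted(bounded, 5));                  // 2
        System.out.println(accepted(unbounded, 5));                // 5
        System.out.println(bounded.poll() + " " + bounded.poll()); // 0 1 (FIFO)
    }
}
```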

Thread pool reject policy

- AbortPolicy: throws a RejectedExecutionException (this is the default policy).

- DiscardOldestPolicy: discards the oldest waiting task, then retries submitting the new one.

- DiscardPolicy: silently discards the task that cannot be handled, without taking any other action.

- CallerRunsPolicy: runs the task in the thread that submitted it (for example, the thread running the main method).

The difference between execute and submit

- execute() takes a Runnable and returns nothing; it suits scenarios where you do not need a return value.

- submit() returns a Future; it suits scenarios where you need the task's return value (or the exception it threw).
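A sketch of the difference (the `SubmitDemo` class and its helpers are hypothetical). The Callables are assigned to typed variables before `submit()` so that the Callable overload is chosen unambiguously. An exception thrown inside a submitted task does not escape on the pool thread; it is captured in the Future and rethrown by `get()` wrapped in an `ExecutionException`.

```java
import java.util.concurrent.Callable;
import java.util.concurrent.ExecutionException;
import java.util.concurrent.Future;
import java.util.concurrent.LinkedBlockingQueue;
import java.util.concurrent.ThreadPoolExecutor;
import java.util.concurrent.TimeUnit;

class SubmitDemo {

    static ThreadPoolExecutor newPool() {
        return new ThreadPoolExecutor(2, 2, 0, TimeUnit.SECONDS, new LinkedBlockingQueue<Runnable>());
    }

    // execute(): fire-and-forget Runnable. submit(): returns a Future holding the result
    static int squareViaSubmit(int x) throws InterruptedException, ExecutionException {
        ThreadPoolExecutor pool = newPool();
        try {
            pool.execute(() -> System.out.println("execute: no return value"));
            Callable<Integer> square = () -> x * x;
            Future<Integer> future = pool.submit(square);
            return future.get(); // blocks until the result is ready
        } finally {
            pool.shutdown();
        }
    }

    // Returns true if the task's exception reaches the caller through get()
    static boolean failureSurfacesInGet() throws InterruptedException {
        ThreadPoolExecutor pool = newPool();
        try {
            Callable<Integer> failing = () -> {
                throw new IllegalStateException("boom");
            };
            pool.submit(failing).get();
            return false;
        } catch (ExecutionException e) {
            return e.getCause() instanceof IllegalStateException;
        } finally {
            pool.shutdown();
        }
    }
}
```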

Closing a thread pool

To close a thread pool, call either shutdownNow() or shutdown().

- shutdownNow(): attempts to stop the running tasks immediately (by interrupting them) and returns the tasks that were still waiting in the queue.

- shutdown(): accepts no new tasks and shuts the pool down after the already-submitted tasks have finished.
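A sketch comparing the two methods on a single-threaded pool (the `ShutdownDemo` class and its helpers are hypothetical). After `shutdown()`, the queued tasks still run. `shutdownNow()` interrupts the long-running task and hands back the tasks that never started. `awaitTermination()` waits for the pool to finish terminating.

```java
import java.util.List;
import java.util.concurrent.CountDownLatch;
import java.util.concurrent.LinkedBlockingQueue;
import java.util.concurrent.ThreadPoolExecutor;
import java.util.concurrent.TimeUnit;
import java.util.concurrent.atomic.AtomicInteger;

class ShutdownDemo {

    static ThreadPoolExecutor singleThreadPool() {
        return new ThreadPoolExecutor(1, 1, 0, TimeUnit.SECONDS, new LinkedBlockingQueue<Runnable>());
    }

    // shutdown(): tasks already submitted still run; returns how many completed
    static int completedAfterShutdown(int tasks) throws InterruptedException {
        ThreadPoolExecutor pool = singleThreadPool();
        AtomicInteger done = new AtomicInteger();
        for (int i = 0; i < tasks; i++) {
            pool.execute(done::incrementAndGet);
        }
        pool.shutdown();                            // no new tasks accepted from here on
        pool.awaitTermination(5, TimeUnit.SECONDS); // wait for the queued tasks to finish
        return done.get();
    }

    // shutdownNow(): interrupts the running task and returns the tasks still waiting in the queue
    static int pendingReturnedByShutdownNow(int tasks) throws InterruptedException {
        ThreadPoolExecutor pool = singleThreadPool();
        CountDownLatch started = new CountDownLatch(1);
        pool.execute(() -> {
            started.countDown();
            try {
                Thread.sleep(10_000); // long task, interrupted by shutdownNow()
            } catch (InterruptedException e) {
                Thread.currentThread().interrupt();
            }
        });
        for (int i = 0; i < tasks; i++) {
            pool.execute(() -> { }); // these wait in the queue behind the long task
        }
        started.await();
        List<Runnable> pending = pool.shutdownNow();
        pool.awaitTermination(5, TimeUnit.SECONDS);
        return pending.size();
    }
}
```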

Four ways to create threads

- Extend Thread

- Implement Runnable

- Implement Callable

- Use thread pool

ThreadLocal

Thread-local variable

ThreadLocal keeps a separate copy of a variable for each thread, so the threads' copies do not affect each other.
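A minimal sketch of per-thread copies (the `ThreadLocalDemo` class and its `run` helper are hypothetical; `ThreadLocal.withInitial` is the JDK factory method). All threads use the same ThreadLocal object, yet each one counts up from its own initial value of 0, so every thread ends at `times`, not at `threads * times`.

```java
class ThreadLocalDemo {
    // One ThreadLocal object; each thread sees its own copy, initialised to 0
    private static final ThreadLocal<Integer> COUNTER = ThreadLocal.withInitial(() -> 0);

    // Each thread increments its own copy `times` times and records the final value
    static int[] run(int threads, int times) throws InterruptedException {
        int[] results = new int[threads];
        Thread[] workers = new Thread[threads];
        for (int i = 0; i < threads; i++) {
            int index = i;
            workers[i] = new Thread(() -> {
                for (int j = 0; j < times; j++) {
                    COUNTER.set(COUNTER.get() + 1); // touches only this thread's copy
                }
                results[index] = COUNTER.get();
                COUNTER.remove(); // clean up once the value is no longer needed
            });
            workers[i].start();
        }
        for (Thread w : workers) {
            w.join();
        }
        return results;
    }
}
```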

Principle analysis of ThreadLocal

Create a ThreadLocal object

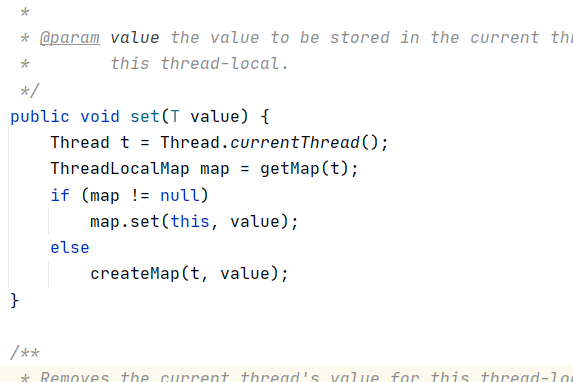

When set() is called, ThreadLocal first gets the currently executing thread and, if that thread does not have one yet, creates a ThreadLocalMap for it. The map's key is the ThreadLocal object, and the value is the value we set.

When get() is called, it first finds the current thread's ThreadLocalMap:

```java
Thread t = Thread.currentThread();
ThreadLocalMap map = getMap(t);
```

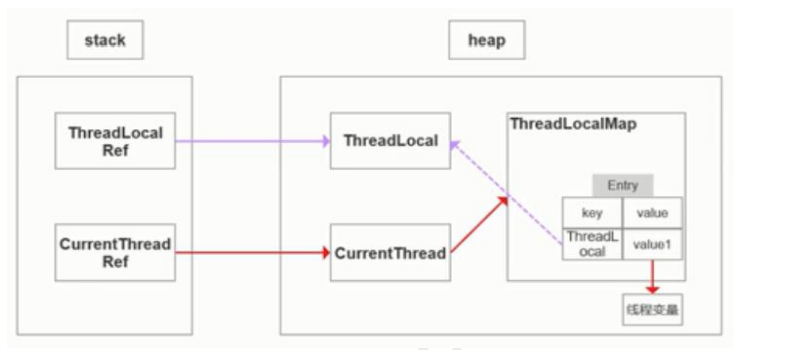

ThreadLocalMap memory leak

Memory leak: objects that are no longer used but cannot be garbage-collected.

The key of a ThreadLocalMap entry is the ThreadLocal object, held through a weak reference.

Because the key is a weak reference, once the ThreadLocal object is no longer strongly referenced elsewhere, the key can be garbage-collected and becomes null. The value, however, is strongly referenced by the entry, so if the thread lives for a long time (for example, a pooled thread), the value cannot be reclaimed, which causes a memory leak.

Solution: call remove() on the ThreadLocal as soon as you are done with the value.
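Pooled threads make the problem easy to see, because the same thread, and therefore the same ThreadLocalMap, is reused across tasks. In the sketch below (the `ThreadLocalCleanupDemo` class and the `USER` variable are hypothetical), two tasks run one after another on a single pooled thread. Without `remove()`, the second task still sees the first task's value. Calling `remove()` in a `finally` block clears the entry.

```java
import java.util.concurrent.Callable;
import java.util.concurrent.ExecutionException;
import java.util.concurrent.LinkedBlockingQueue;
import java.util.concurrent.ThreadPoolExecutor;
import java.util.concurrent.TimeUnit;

class ThreadLocalCleanupDemo {
    private static final ThreadLocal<String> USER = new ThreadLocal<>();

    // Runs two tasks on the same pooled thread; returns what the second task sees in USER
    static String secondTaskSees(boolean removeAfterUse) throws InterruptedException, ExecutionException {
        ThreadPoolExecutor pool =
                new ThreadPoolExecutor(1, 1, 0, TimeUnit.SECONDS, new LinkedBlockingQueue<Runnable>());
        try {
            pool.submit(() -> {
                USER.set("alice");
                try {
                    System.out.println("working as " + USER.get());
                } finally {
                    if (removeAfterUse) {
                        USER.remove(); // clears this thread's entry in its ThreadLocalMap
                    }
                }
            }).get();
            Callable<String> read = USER::get;
            return pool.submit(read).get(); // runs on the same, reused thread
        } finally {
            pool.shutdown();
        }
    }
}
```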