These notes were taken while following a video by the Bilibili uploader "linux super brother". If you spot any mistakes or omissions, corrections are welcome.

https://www.bilibili.com/video/BV1yP4y1x7Vj?spm_id_from=333.999.0.0

Machine environment preparation:

Virtual machines with 2 CPU cores and 4 GB of memory each

| Host name | Node IP | Role | Deployed components |
|---|---|---|---|
| k8s-master01 | 192.168.131.128 | master01 | etcd,kube-apiserver,kube-controller-manager,kubectl,kubeadm,kubelet,kube-proxy,flannel |
| k8s-node01 | 192.168.131.129 | node01 | kubectl,kubelet,kube-proxy,flannel |
| k8s-node02 | 192.168.131.130 | node02 | kubectl,kubelet,kube-proxy,flannel |

Create virtual machines and improve basic configuration

Define the host name
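The notes give no command for this step. A minimal sketch, assuming hostnamectl (standard on CentOS 7) is used and that the host names follow the node table and the shell prompts shown later in these notes. The helper name node_name is hypothetical, and the loop only prints the commands so that each one can be run as root on the matching machine.

```shell
# Hypothetical helper: map each node IP from the table to its host name.
node_name() {
  case "$1" in
    192.168.131.128) echo "k8s-master01" ;;
    192.168.131.129) echo "k8s-node01" ;;
    192.168.131.130) echo "k8s-node02" ;;
    *) return 1 ;;
  esac
}

# Dry run: print the command for each node instead of executing it.
for ip in 192.168.131.128 192.168.131.129 192.168.131.130; do
  echo "hostnamectl set-hostname $(node_name "$ip")"
done
```

Run the printed line that belongs to each machine, then log in again so the prompt shows the new name.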

Configure host resolution

cat >> /etc/hosts << EOF

192.168.131.128 k8s-master01

192.168.131.129 k8s-node01

192.168.131.130 k8s-node02

EOF

Adjust the firewall rules (set the FORWARD chain policy to ACCEPT; otherwise forwarded traffic is rejected and the cluster nodes cannot reach each other directly)

iptables -P FORWARD ACCEPT

Turn off swap (this step is important)

swapoff -a

Prevent the swap partition from being mounted automatically at boot

sed -i '/ swap / s/^\(.*\)$/#\1/g' /etc/fstab
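To see what the sed command above does, the sketch below applies it to a temporary sample file instead of the real /etc/fstab. The sample entries and the helper name active_swap_entries are assumptions made for illustration; GNU sed (as shipped with CentOS) is assumed for the -i option.

```shell
# Hypothetical helper: count fstab lines that still mount swap
# (lines that are commented out are ignored).
active_swap_entries() {
  grep -Ec '^[^#].*[[:space:]]swap[[:space:]]' "$1"
}

# Sample fstab content, not taken from a real machine.
demo=$(mktemp)
printf '%s\n' \
  '/dev/mapper/centos-root / xfs defaults 0 0' \
  '/dev/mapper/centos-swap swap swap defaults 0 0' > "$demo"

before=$(active_swap_entries "$demo")
# Same expression as in the notes: comment out every line containing " swap ".
sed -i '/ swap / s/^\(.*\)$/#\1/g' "$demo"
after=$(active_swap_entries "$demo")

echo "active swap entries before: $before, after: $after"
rm -f "$demo"
```

On a real node, swapon --show printing nothing after swapoff -a is a quick sign that swap is currently off.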

Disable SELinux and the firewall

sed -ri 's#(SELINUX=).*#\1disabled#' /etc/selinux/config

setenforce 0

systemctl disable firewalld && systemctl stop firewalld

Enable traffic forwarding in the kernel

cat << EOF > /etc/sysctl.d/k8s.conf

net.bridge.bridge-nf-call-ip6tables = 1

net.bridge.bridge-nf-call-iptables = 1

net.ipv4.ip_forward=1

vm.max_map_count=262144

EOF

Load the kernel module and apply the parameters

modprobe br_netfilter

sysctl -p /etc/sysctl.d/k8s.conf
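A small check can confirm that the values in the file are the ones the kernel is using. This is only a sketch: the helper name conf_value is hypothetical, and a temporary sample file with the same four settings stands in for /etc/sysctl.d/k8s.conf so the snippet can run anywhere.

```shell
# Hypothetical helper: print the value assigned to a key in a sysctl-style file.
conf_value() {
  awk -F= -v key="$2" '{
    k = $1; v = $2
    gsub(/[ \t]/, "", k); gsub(/[ \t]/, "", v)
    if (k == key) print v
  }' "$1"
}

# Sample file with the same settings as the k8s.conf written above.
conf=$(mktemp)
cat > "$conf" << EOF
net.bridge.bridge-nf-call-ip6tables = 1
net.bridge.bridge-nf-call-iptables = 1
net.ipv4.ip_forward=1
vm.max_map_count=262144
EOF

for key in net.bridge.bridge-nf-call-iptables net.ipv4.ip_forward vm.max_map_count; do
  want=$(conf_value "$conf" "$key")
  # The live value is readable only where sysctl exists and br_netfilter is loaded.
  have=$(sysctl -n "$key" 2>/dev/null || echo "unavailable")
  echo "$key: file=$want live=$have"
done
```

On a configured node, point conf at /etc/sysctl.d/k8s.conf; the file and live values should match.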

Download the Alibaba Cloud base repo file

curl -o /etc/yum.repos.d/Centos-7.repo http://mirrors.aliyun.com/repo/Centos-7.repo

Download the Alibaba Cloud docker repo file

curl -o /etc/yum.repos.d/docker-ce.repo http://mirrors.aliyun.com/docker-ce/linux/centos/docker-ce.repo

Configure K8S source

cat <<EOF > /etc/yum.repos.d/kubernetes.repo

[kubernetes]

name=Kubernetes

baseurl=http://mirrors.aliyun.com/kubernetes/yum/repos/kubernetes-el7-x86_64

enabled=1

gpgcheck=0

repo_gpgcheck=0

gpgkey=http://mirrors.aliyun.com/kubernetes/yum/doc/yum-key.gpg http://mirrors.aliyun.com/kubernetes/yum/doc/rpm-package-key.gpg

EOF

Clear the yum cache and rebuild it

yum clean all && yum makecache

Start installing docker (all nodes)

List the available docker versions

yum list docker-ce --showduplicates | sort -r

Start installation

yum install docker-ce -y

Configure docker image acceleration (the accelerator address below was generated through Alibaba Cloud)

mkdir -p /etc/docker

tee /etc/docker/daemon.json <<-'EOF'

{

"registry-mirrors": ["https://vw6szajx.mirror.aliyuncs.com"]

}

EOF

Background on the accelerator:

- What is an image accelerator?

Docker images are pulled from a registry, and downloads from Docker Hub are very slow from within China. Alibaba Cloud therefore provides an image acceleration service, which in effect keeps a copy of the Docker Hub registry in China so that users there can download images quickly.

The accelerator address is thus effectively a forwarding address that can be used in place of the Docker Hub download address.

Start docker

systemctl enable docker && systemctl start docker

Install k8s-master01

Install kubeadm, kubelet, and kubectl

- kubeadm: the command used to initialize and install the cluster

- kubelet: runs on each node in the cluster to start pods and containers and to manage docker

- kubectl: the command-line tool for communicating with the cluster

Install on all nodes

yum install -y kubelet kubeadm kubectl --disableexcludes=kubernetes

Check the kubeadm version

[root@k8s-master01 ~]# kubeadm version

kubeadm version: &version.Info{Major:"1", Minor:"22", GitVersion:"v1.22.0", GitCommit:"c2b5237ccd9c0f1d600d3072634ca66cefdf272f", GitTreeState:"clean", BuildDate:"2021-08-04T18:02:08Z", GoVersion:"go1.16.6", Compiler:"gc", Platform:"linux/amd64"}

- Enable kubelet at boot; it manages containers: downloading images, and creating, starting, and stopping containers

- This ensures that once the machine boots and the kubelet service starts, pods (containers) are managed automatically

[root@k8s-master01 ~]# systemctl enable kubelet

Created symlink from /etc/systemd/system/multi-user.target.wants/kubelet.service to /usr/lib/systemd/system/kubelet.service.

Initialize the configuration file (run on the master node only)

Generate the configuration file

Create a directory to hold yaml files

mkdir ~/k8s-install && cd ~/k8s-install

Use the command to initialize and generate a yaml file

kubeadm config print init-defaults > kubeadm.yaml

The configuration file is as follows:

apiVersion: kubeadm.k8s.io/v1beta3

bootstrapTokens:

- groups:

  - system:bootstrappers:kubeadm:default-node-token

  token: abcdef.0123456789abcdef

  ttl: 24h0m0s

  usages:

  - signing

  - authentication

kind: InitConfiguration

localAPIEndpoint:

  advertiseAddress: 1.2.3.4 # The default is 1.2.3.4; change it to the intranet IP address of k8s-master01

  bindPort: 6443

nodeRegistration:

  criSocket: /var/run/dockershim.sock

  imagePullPolicy: IfNotPresent

  name: node # The node name is defined here; change it to k8s-master01

  taints: null

---

apiServer:

  timeoutForControlPlane: 4m0s

apiVersion: kubeadm.k8s.io/v1beta3

certificatesDir: /etc/kubernetes/pki

clusterName: kubernetes

controllerManager: {}

dns: {}

etcd:

  local:

    dataDir: /var/lib/etcd

imageRepository: k8s.gcr.io # Change this to the domestic image source registry.aliyuncs.com/google_containers

kind: ClusterConfiguration

kubernetesVersion: 1.22.0 # This must match your installed version; adjust it if it differs

networking:

  dnsDomain: cluster.local

  podSubnet: 10.244.0.0/16 # Newly added line: the pod network segment used inside the containers

  serviceSubnet: 10.96.0.0/12

scheduler: {}
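The edits described in the comments above can also be applied with sed rather than by hand. The following is a sketch under assumptions: the function name patch_kubeadm_yaml is hypothetical, GNU sed is assumed (for -i and for \n in the replacement), and the demo runs on a shortened sample file rather than the full kubeadm.yaml.

```shell
# Hypothetical helper: apply the edits described in the comments above.
#   $1 = yaml file, $2 = master intranet IP, $3 = node name
patch_kubeadm_yaml() {
  file=$1 ip=$2 name=$3
  sed -i \
    -e "s/^\( *advertiseAddress:\).*/\1 $ip/" \
    -e "s/^\( *name:\).*/\1 $name/" \
    -e 's#^imageRepository:.*#imageRepository: registry.aliyuncs.com/google_containers#' \
    "$file"
  # Add podSubnet above serviceSubnet unless it is already present.
  grep -q 'podSubnet:' "$file" ||
    sed -i 's#^\( *\)serviceSubnet:\(.*\)#\1podSubnet: 10.244.0.0/16\n\1serviceSubnet:\2#' "$file"
}

# Demo on a shortened sample of the generated defaults.
demo=$(mktemp)
cat > "$demo" << 'EOF'
localAPIEndpoint:
  advertiseAddress: 1.2.3.4
nodeRegistration:
  name: node
imageRepository: k8s.gcr.io
networking:
  dnsDomain: cluster.local
  serviceSubnet: 10.96.0.0/12
EOF
patch_kubeadm_yaml "$demo" 192.168.131.128 k8s-master01
cat "$demo"
```

On the master node the call would be patch_kubeadm_yaml ~/k8s-install/kubeadm.yaml 192.168.131.128 k8s-master01; kubernetesVersion is left alone because it must be checked against the installed version by hand.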

Pull the images in advance

kubeadm config images pull --config kubeadm.yaml

Error reported:

[root@k8s-master01 k8s-install]# kubeadm config print init-defaults> kubeadm.yaml

[root@k8s-master01 k8s-install]# vim kubeadm.yaml

[root@k8s-master01 k8s-install]# kubeadm config images pull --config kubeadm.yaml

[config/images] Pulled registry.aliyuncs.com/google_containers/kube-apiserver:v1.22.0

[config/images] Pulled registry.aliyuncs.com/google_containers/kube-controller-manager:v1.22.0

[config/images] Pulled registry.aliyuncs.com/google_containers/kube-scheduler:v1.22.0

[config/images] Pulled registry.aliyuncs.com/google_containers/kube-proxy:v1.22.0

[config/images] Pulled registry.aliyuncs.com/google_containers/pause:3.5

[config/images] Pulled registry.aliyuncs.com/google_containers/etcd:3.5.0-0

failed to pull image "registry.aliyuncs.com/google_containers/coredns:v1.8.4": output: Error response from daemon: manifest for registry.aliyuncs.com/google_containers/coredns:v1.8.4 not found: manifest unknown: manifest unknown

, error: exit status 1

To see the stack trace of this error execute with --v=5 or higher

#This message means that the coredns image cannot be pulled from the mirror

Use a workaround

1. Pull the image directly with docker

[root@k8s-master01 k8s-install]# docker pull coredns/coredns:1.8.4

1.8.4: Pulling from coredns/coredns

c6568d217a00: Pull complete

bc38a22c706b: Pull complete

Digest: sha256:6e5a02c21641597998b4be7cb5eb1e7b02c0d8d23cce4dd09f4682d463798890

Status: Downloaded newer image for coredns/coredns:1.8.4

docker.io/coredns/coredns:1.8.4

2. Retag the image

[root@k8s-master01 k8s-install]# docker tag coredns/coredns:1.8.4 registry.aliyuncs.com/google_containers/coredns:v1.8.4

[root@k8s-master01 k8s-install]# docker images

REPOSITORY                                                        TAG       IMAGE ID       CREATED        SIZE

registry.aliyuncs.com/google_containers/kube-apiserver            v1.22.0   838d692cbe28   8 days ago     128MB

registry.aliyuncs.com/google_containers/kube-controller-manager   v1.22.0   5344f96781f4   8 days ago     122MB

registry.aliyuncs.com/google_containers/kube-scheduler            v1.22.0   3db3d153007f   8 days ago     52.7MB

registry.aliyuncs.com/google_containers/kube-proxy                v1.22.0   bbad1636b30d   8 days ago     104MB

registry.aliyuncs.com/google_containers/etcd                      3.5.0-0   004811815584   8 weeks ago    295MB

coredns/coredns                                                   1.8.4     8d147537fb7d   2 months ago   47.6MB

registry.aliyuncs.com/google_containers/coredns                   v1.8.4    8d147537fb7d   2 months ago   47.6MB

registry.aliyuncs.com/google_containers/pause                     3.5       ed210e3e4a5b   4 months ago   683kB
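The workaround relies on a naming detail: the image on Docker Hub is tagged 1.8.4, while kubeadm looks for v1.8.4 under the configured repository. A minimal sketch of that mapping follows; the helper name mirror_name is hypothetical, and the commands are only printed, not run.

```shell
repo="registry.aliyuncs.com/google_containers"

# Hypothetical helper: map an upstream image such as coredns/coredns:1.8.4
# to the name kubeadm expects under $repo, e.g. $repo/coredns:v1.8.4.
mirror_name() {
  name=${1##*/}      # drop the namespace -> coredns:1.8.4
  image=${name%%:*}  # image name         -> coredns
  tag=${name##*:}    # tag                -> 1.8.4
  echo "$repo/$image:v${tag#v}"
}

src="coredns/coredns:1.8.4"
dst=$(mirror_name "$src")

# Dry run: print the commands instead of executing them.
echo "docker pull $src"
echo "docker tag $src $dst"
```

The same pull-and-retag step is needed on every node where the image is missing, since each node pulls its own images.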

Initialize cluster

kubeadm init --config kubeadm.yaml

[root@k8s-master01 k8s-install]# kubeadm init --config kubeadm.yaml

[init] Using Kubernetes version: v1.22.0

[preflight] Running pre-flight checks

[WARNING Hostname]: hostname "node" could not be reached

[WARNING Hostname]: hostname "node": lookup node on 114.114.114.114:53: no such host

error execution phase preflight: [preflight] Some fatal errors occurred:

[ERROR Swap]: running with swap on is not supported. Please disable swap

[preflight] If you know what you are doing, you can make a check non-fatal with `--ignore-preflight-errors=...`

To see the stack trace of this error execute with --v=5 or higher

When configuring the basic environment, I forgot to turn off swap, which caused the error here

Troubleshooting the error

Run again

swapoff -a

Then a different error is reported

[root@k8s-master01 k8s-install]# kubeadm init --config kubeadm.yaml

[init] Using Kubernetes version: v1.22.0

[preflight] Running pre-flight checks

[WARNING Hostname]: hostname "node" could not be reached

[WARNING Hostname]: hostname "node": lookup node on 114.114.114.114:53: no such host

error execution phase preflight: [preflight] Some fatal errors occurred:

[ERROR Swap]: running with swap on is not supported. Please disable swap

[preflight] If you know what you are doing, you can make a check non-fatal with `--ignore-preflight-errors=...`

To see the stack trace of this error execute with --v=5 or higher

[root@k8s-master01 k8s-install]# swapoff -a

[root@k8s-master01 k8s-install]# kubeadm init --config kubeadm.yaml

[init] Using Kubernetes version: v1.22.0

[preflight] Running pre-flight checks

[WARNING Hostname]: hostname "node" could not be reached

[WARNING Hostname]: hostname "node": lookup node on 114.114.114.114:53: no such host

[preflight] Pulling images required for setting up a Kubernetes cluster

[preflight] This might take a minute or two, depending on the speed of your internet connection

[preflight] You can also perform this action in beforehand using 'kubeadm config images pull'

[certs] Using certificateDir folder "/etc/kubernetes/pki"

[certs] Generating "ca" certificate and key

[certs] Generating "apiserver" certificate and key

[certs] apiserver serving cert is signed for DNS names [kubernetes kubernetes.default kubernetes.default.svc kubernetes.default.svc.cluster.local node] and IPs [10.96.0.1 192.168.131.128]

[certs] Generating "apiserver-kubelet-client" certificate and key

[certs] Generating "front-proxy-ca" certificate and key

[certs] Generating "front-proxy-client" certificate and key

[certs] Generating "etcd/ca" certificate and key

[certs] Generating "etcd/server" certificate and key

[certs] etcd/server serving cert is signed for DNS names [localhost node] and IPs [192.168.131.128 127.0.0.1 ::1]

[certs] Generating "etcd/peer" certificate and key

[certs] etcd/peer serving cert is signed for DNS names [localhost node] and IPs [192.168.131.128 127.0.0.1 ::1]

[certs] Generating "etcd/healthcheck-client" certificate and key

[certs] Generating "apiserver-etcd-client" certificate and key

[certs] Generating "sa" key and public key

[kubeconfig] Using kubeconfig folder "/etc/kubernetes"

[kubeconfig] Writing "admin.conf" kubeconfig file

[kubeconfig] Writing "kubelet.conf" kubeconfig file

[kubeconfig] Writing "controller-manager.conf" kubeconfig file

[kubeconfig] Writing "scheduler.conf" kubeconfig file

[kubelet-start] Writing kubelet environment file with flags to file "/var/lib/kubelet/kubeadm-flags.env"

[kubelet-start] Writing kubelet configuration to file "/var/lib/kubelet/config.yaml"

[kubelet-start] Starting the kubelet

[control-plane] Using manifest folder "/etc/kubernetes/manifests"

[control-plane] Creating static Pod manifest for "kube-apiserver"

[control-plane] Creating static Pod manifest for "kube-controller-manager"

[control-plane] Creating static Pod manifest for "kube-scheduler"

[etcd] Creating static Pod manifest for local etcd in "/etc/kubernetes/manifests"

[wait-control-plane] Waiting for the kubelet to boot up the control plane as static Pods from directory "/etc/kubernetes/manifests". This can take up to 4m0s

[kubelet-check] Initial timeout of 40s passed.

[kubelet-check] It seems like the kubelet isn't running or healthy.

[kubelet-check] The HTTP call equal to 'curl -sSL http://localhost:10248/healthz' failed with error: Get "http://localhost:10248/healthz": dial tcp [::1]:10248: connect: connection refused.

[kubelet-check] It seems like the kubelet isn't running or healthy.

[kubelet-check] The HTTP call equal to 'curl -sSL http://localhost:10248/healthz' failed with error: Get "http://localhost:10248/healthz": dial tcp [::1]:10248: connect: connection refused.

[kubelet-check] It seems like the kubelet isn't running or healthy.

[kubelet-check] The HTTP call equal to 'curl -sSL http://localhost:10248/healthz' failed with error: Get "http://localhost:10248/healthz": dial tcp [::1]:10248: connect: connection refused.

[kubelet-check] It seems like the kubelet isn't running or healthy.

[kubelet-check] The HTTP call equal to 'curl -sSL http://localhost:10248/healthz' failed with error: Get "http://localhost:10248/healthz": dial tcp [::1]:10248: connect: connection refused.

[kubelet-check] It seems like the kubelet isn't running or healthy.

[kubelet-check] The HTTP call equal to 'curl -sSL http://localhost:10248/healthz' failed with error: Get "http://localhost:10248/healthz": dial tcp [::1]:10248: connect: connection refused.

Unfortunately, an error has occurred:

timed out waiting for the condition

This error is likely caused by:

- The kubelet is not running

- The kubelet is unhealthy due to a misconfiguration of the node in some way (required cgroups disabled)

If you are on a systemd-powered system, you can try to troubleshoot the error with the following commands:

- 'systemctl status kubelet'

- 'journalctl -xeu kubelet'

Additionally, a control plane component may have crashed or exited when started by the container runtime.

To troubleshoot, list all containers using your preferred container runtimes CLI.

Here is one example how you may list all Kubernetes containers running in docker:

- 'docker ps -a | grep kube | grep -v pause'

Once you have found the failing container, you can inspect its logs with:

- 'docker logs CONTAINERID'

error execution phase wait-control-plane: couldn't initialize a Kubernetes cluster

To see the stack trace of this error execute with --v=5 or higher

- After a long investigation with systemctl status kubelet and journalctl -xeu kubelet, no specific problem was found

- Checking tail /var/log/messages | grep kube revealed the following

Aug 13 00:08:12 localhost kubelet: I0813 00:08:12.842063 13772 container_manager_linux.go:320] "Creating device plugin manager" devicePluginEnabled=true

Aug 13 00:08:12 localhost kubelet: I0813 00:08:12.842120 13772 state_mem.go:36] "Initialized new in-memory state store"

Aug 13 00:08:12 localhost kubelet: I0813 00:08:12.842196 13772 kubelet.go:314] "Using dockershim is deprecated, please consider using a full-fledged CRI implementation"

Aug 13 00:08:12 localhost kubelet: I0813 00:08:12.842230 13772 client.go:78] "Connecting to docker on the dockerEndpoint" endpoint="unix:///var/run/docker.sock"

Aug 13 00:08:12 localhost kubelet: I0813 00:08:12.842248 13772 client.go:97] "Start docker client with request timeout" timeout="2m0s"

Aug 13 00:08:12 localhost kubelet: I0813 00:08:12.861829 13772 docker_service.go:566] "Hairpin mode is set but kubenet is not enabled, falling back to HairpinVeth" hairpinMode=promiscuous-bridge

Aug 13 00:08:12 localhost kubelet: I0813 00:08:12.861865 13772 docker_service.go:242] "Hairpin mode is set" hairpinMode=hairpin-veth

Aug 13 00:08:12 localhost kubelet: I0813 00:08:12.861964 13772 cni.go:239] "Unable to update cni config" err="no networks found in /etc/cni/net.d"

Aug 13 00:08:12 localhost kubelet: I0813 00:08:12.866488 13772 cni.go:239] "Unable to update cni config" err="no networks found in /etc/cni/net.d"

Aug 13 00:08:12 localhost kubelet: I0813 00:08:12.866689 13772 docker_service.go:257] "Docker cri networking managed by the network plugin" networkPluginName="cni"

Aug 13 00:08:12 localhost kubelet: I0813 00:08:12.866915 13772 cni.go:239] "Unable to update cni config" err="no networks found in /etc/cni/net.d"

Aug 13 00:08:12 localhost kubelet: I0813 00:08:12.882848 13772 docker_service.go:264] "Docker Info" dockerInfo=&{ID:TSG7:3K3U:2XNU:OXWS:7CUM:VUO4:EM23:4NLT:GAGI:XIE2:KIQ7:JOZJ Containers:0 ContainersRunning:0 ContainersPaused:0 ContainersStopped:0 Images:7 Driver:overlay2 DriverStatus:[[Backing Filesystem xfs] [Supports d_type true] [Native Overlay Diff true] [userxattr false]] SystemStatus:[] Plugins:{Volume:[local] Network:[bridge host ipvlan macvlan null overlay] Authorization:[] Log:[awslogs fluentd gcplogs gelf journald json-file local logentries splunk syslog]} MemoryLimit:true SwapLimit:true KernelMemory:true KernelMemoryTCP:true CPUCfsPeriod:true CPUCfsQuota:true CPUShares:true CPUSet:true PidsLimit:true IPv4Forwarding:true BridgeNfIptables:true BridgeNfIP6tables:true Debug:false NFd:25 OomKillDisable:true NGoroutines:34 SystemTime:2021-08-13T00:08:12.86797053-04:00 LoggingDriver:json-file CgroupDriver:cgroupfs CgroupVersion:1 NEventsListener:0 KernelVersion:3.10.0-1160.36.2.el7.x86_64 OperatingSystem:CentOS Linux 7 (Core) OSVersion:7 OSType:linux Architecture:x86_64 IndexServerAddress:https://index.docker.io/v1/ RegistryConfig:0xc00075dea0 NCPU:2 MemTotal:3953950720 GenericResources:[] DockerRootDir:/var/lib/docker HTTPProxy: HTTPSProxy: NoProxy: Name:k8s-master01 Labels:[] ExperimentalBuild:false ServerVersion:20.10.8 ClusterStore: ClusterAdvertise: Runtimes:map[io.containerd.runc.v2:{Path:runc Args:[] Shim:<nil>} io.containerd.runtime.v1.linux:{Path:runc Args:[] Shim:<nil>} runc:{Path:runc Args:[] Shim:<nil>}] DefaultRuntime:runc Swarm:{NodeID: NodeAddr: LocalNodeState:inactive ControlAvailable:false Error: RemoteManagers:[] Nodes:0 Managers:0 Cluster:<nil> Warnings:[]} LiveRestoreEnabled:false Isolation: InitBinary:docker-init ContainerdCommit:{ID:e25210fe30a0a703442421b0f60afac609f950a3 Expected:e25210fe30a0a703442421b0f60afac609f950a3} RuncCommit:{ID:v1.0.1-0-g4144b63 Expected:v1.0.1-0-g4144b63} InitCommit:{ID:de40ad0 Expected:de40ad0} 
SecurityOptions:[name=seccomp,profile=default] ProductLicense: DefaultAddressPools:[] Warnings:[]}

Aug 13 00:08:12 localhost kubelet: E0813 00:08:12.882894 13772 server.go:294] "Failed to run kubelet" err="failed to run Kubelet: misconfiguration: kubelet cgroup driver: \"systemd\" is different from docker cgroup driver: \"cgroupfs\""

#This shows that kubelet uses the systemd cgroup driver while docker uses cgroupfs; the two are inconsistent.

Aug 13 00:08:12 localhost systemd: kubelet.service: main process exited, code=exited, status=1/FAILURE

Aug 13 00:08:12 localhost systemd: Unit kubelet.service entered failed state.

Aug 13 00:08:12 localhost systemd: kubelet.service failed.

Resolving the error

- Read this post

https://blog.csdn.net/sinat_28371057/article/details/109895176

It says:

The official k8s documentation points out that using cgroupfs to manage docker and k8s resources while systemd manages the other processes on the node becomes unstable under resource pressure, so it recommends configuring docker and k8s to use systemd uniformly.

You can use this command to view the cgroup driver used by docker

docker info | grep "Cgroup Driver"

Therefore, docker's cgroup driver needs to be changed

The change is made as follows:

Add the exec-opts entry to the previously created daemon.json (this is the only new line; note the comma added after the registry-mirrors entry)

[root@k8s-master01 k8s-install]# cat !$

cat /etc/docker/daemon.json

{

"registry-mirrors": ["https://vw6szajx.mirror.aliyuncs.com"],

"exec-opts": ["native.cgroupdriver=systemd"]

}

systemctl daemon-reload && systemctl restart docker
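After the restart it is worth confirming that both sides now report the same driver. A sketch, assuming the kubelet configuration written by kubeadm (/var/lib/kubelet/config.yaml, as named in the init output) contains a cgroupDriver key; the two function names are hypothetical, and the demo line uses sample text instead of a live docker daemon.

```shell
# Read `docker info` output on stdin and print the cgroup driver.
docker_cgroup_driver() {
  sed -n 's/^ *Cgroup Driver: *//p'
}

# Print the cgroupDriver value from a kubelet configuration file.
kubelet_cgroup_driver() {
  sed -n 's/^cgroupDriver: *//p' "$1"
}

# On a node the two values would be read like this:
#   docker info 2>/dev/null | docker_cgroup_driver
#   kubelet_cgroup_driver /var/lib/kubelet/config.yaml
sample=$(printf ' Cgroup Driver: systemd\n' | docker_cgroup_driver)
echo "docker driver in sample output: $sample"
```

If the two values differ, kubelet exits with the misconfiguration error shown in the log above.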

Because data from the previous attempt remains, the cluster has to be reset first

echo y | kubeadm reset

Initialize the k8s cluster again

[root@k8s-master01 k8s-install]# kubeadm init --config kubeadm.yaml

[init] Using Kubernetes version: v1.22.0

[preflight] Running pre-flight checks

[WARNING Hostname]: hostname "node" could not be reached

[WARNING Hostname]: hostname "node": lookup node on 114.114.114.114:53: no such host

[preflight] Pulling images required for setting up a Kubernetes cluster

[preflight] This might take a minute or two, depending on the speed of your internet connection

[preflight] You can also perform this action in beforehand using 'kubeadm config images pull'

[certs] Using certificateDir folder "/etc/kubernetes/pki"

[certs] Generating "ca" certificate and key

[certs] Generating "apiserver" certificate and key

[certs] apiserver serving cert is signed for DNS names [kubernetes kubernetes.default kubernetes.default.svc kubernetes.default.svc.cluster.local node] and IPs [10.96.0.1 192.168.131.128]

[certs] Generating "apiserver-kubelet-client" certificate and key

[certs] Generating "front-proxy-ca" certificate and key

[certs] Generating "front-proxy-client" certificate and key

[certs] Generating "etcd/ca" certificate and key

[certs] Generating "etcd/server" certificate and key

[certs] etcd/server serving cert is signed for DNS names [localhost node] and IPs [192.168.131.128 127.0.0.1 ::1]

[certs] Generating "etcd/peer" certificate and key

[certs] etcd/peer serving cert is signed for DNS names [localhost node] and IPs [192.168.131.128 127.0.0.1 ::1]

[certs] Generating "etcd/healthcheck-client" certificate and key

[certs] Generating "apiserver-etcd-client" certificate and key

[certs] Generating "sa" key and public key

[kubeconfig] Using kubeconfig folder "/etc/kubernetes"

[kubeconfig] Writing "admin.conf" kubeconfig file

[kubeconfig] Writing "kubelet.conf" kubeconfig file

[kubeconfig] Writing "controller-manager.conf" kubeconfig file

[kubeconfig] Writing "scheduler.conf" kubeconfig file

[kubelet-start] Writing kubelet environment file with flags to file "/var/lib/kubelet/kubeadm-flags.env"

[kubelet-start] Writing kubelet configuration to file "/var/lib/kubelet/config.yaml"

[kubelet-start] Starting the kubelet

[control-plane] Using manifest folder "/etc/kubernetes/manifests"

[control-plane] Creating static Pod manifest for "kube-apiserver"

[control-plane] Creating static Pod manifest for "kube-controller-manager"

[control-plane] Creating static Pod manifest for "kube-scheduler"

[etcd] Creating static Pod manifest for local etcd in "/etc/kubernetes/manifests"

[wait-control-plane] Waiting for the kubelet to boot up the control plane as static Pods from directory "/etc/kubernetes/manifests". This can take up to 4m0s

[apiclient] All control plane components are healthy after 9.003862 seconds

[upload-config] Storing the configuration used in ConfigMap "kubeadm-config" in the "kube-system" Namespace

[kubelet] Creating a ConfigMap "kubelet-config-1.22" in namespace kube-system with the configuration for the kubelets in the cluster

[upload-certs] Skipping phase. Please see --upload-certs

[mark-control-plane] Marking the node node as control-plane by adding the labels: [node-role.kubernetes.io/master(deprecated) node-role.kubernetes.io/control-plane node.kubernetes.io/exclude-from-external-load-balancers]

[mark-control-plane] Marking the node node as control-plane by adding the taints [node-role.kubernetes.io/master:NoSchedule]

[bootstrap-token] Using token: abcdef.0123456789abcdef

[bootstrap-token] Configuring bootstrap tokens, cluster-info ConfigMap, RBAC Roles

[bootstrap-token] configured RBAC rules to allow Node Bootstrap tokens to get nodes

[bootstrap-token] configured RBAC rules to allow Node Bootstrap tokens to post CSRs in order for nodes to get long term certificate credentials

[bootstrap-token] configured RBAC rules to allow the csrapprover controller automatically approve CSRs from a Node Bootstrap Token

[bootstrap-token] configured RBAC rules to allow certificate rotation for all node client certificates in the cluster

[bootstrap-token] Creating the "cluster-info" ConfigMap in the "kube-public" namespace

[kubelet-finalize] Updating "/etc/kubernetes/kubelet.conf" to point to a rotatable kubelet client certificate and key

[addons] Applied essential addon: CoreDNS

#From this point on, the large block of output below indicates that the cluster was initialized successfully.

[addons] Applied essential addon: kube-proxy

Your Kubernetes control-plane has initialized successfully!

#As prompted, follow the steps below to create the kubeconfig file

To start using your cluster, you need to run the following as a regular user:

mkdir -p $HOME/.kube

sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config

sudo chown $(id -u):$(id -g) $HOME/.kube/config

Alternatively, if you are the root user, you can run:

export KUBECONFIG=/etc/kubernetes/admin.conf

#Note: a pod network must be created before pods can work properly. This is the K8S cluster network, and it requires an additional plug-in

You should now deploy a pod network to the cluster.

Run "kubectl apply -f [podnetwork].yaml" with one of the options listed at:

https://kubernetes.io/docs/concepts/cluster-administration/addons/

Then you can join any number of worker nodes by running the following on each as root:

#Use the following command to join the worker nodes to the cluster

kubeadm join 192.168.131.128:6443 --token abcdef.0123456789abcdef \

--discovery-token-ca-cert-hash sha256:95373e9880f7a6fed65093e8ee081d25e739c4ea45feac7c3aa8728f7a93f237

- #The output above indicates that the cluster was initialized successfully. It really wasn't easy.

The token is valid for 24 hours by default. After it expires, a new one has to be created

kubeadm token create --print-join-command
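The join command is made of three parts: the API server endpoint, the bootstrap token, and the CA certificate hash. The sketch below assembles them and checks the token against the documented bootstrap token format, [a-z0-9]{6}.[a-z0-9]{16}. Both function names are hypothetical; the values are the ones printed in the init output above.

```shell
# Check that a string has the bootstrap token format.
valid_token() {
  printf '%s\n' "$1" | grep -Eq '^[a-z0-9]{6}\.[a-z0-9]{16}$'
}

# Hypothetical helper: assemble the join command from its parts.
#   $1 = endpoint (ip:port), $2 = token, $3 = sha256 hash of the CA cert
join_command() {
  valid_token "$2" || { echo "invalid token: $2" >&2; return 1; }
  echo "kubeadm join $1 --token $2 --discovery-token-ca-cert-hash sha256:$3"
}

join_command 192.168.131.128:6443 abcdef.0123456789abcdef \
  95373e9880f7a6fed65093e8ee081d25e739c4ea45feac7c3aa8728f7a93f237
```

In practice kubeadm token create --print-join-command already prints the full line; the sketch only shows what each part is.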

View pod information with kubectl

kubectl get pods

[root@k8s-master01 k8s-install]# kubectl get pods

No resources found in default namespace.

View node information

kubectl get nodes

[root@k8s-master01 k8s-install]# kubectl get nodes

NAME           STATUS     ROLES                  AGE    VERSION

k8s-master01   NotReady   control-plane,master   2m8s   v1.22.0

At this point the cluster contains only the master node

Add the worker nodes (node01 and node02)

Use the join command that was printed when the master node was initialized

[root@k8s-node01 ~]# kubeadm join 192.168.131.128:6443 --token abcdef.0123456789abcdef --discovery-token-ca-cert-hash sha256:95373e9880f7a6fed65093e8ee081d25e739c4ea45feac7c3aa8728f7a93f237

[preflight] Running pre-flight checks

[preflight] Reading configuration from the cluster...

[preflight] FYI: You can look at this config file with 'kubectl -n kube-system get cm kubeadm-config -o yaml'

[kubelet-start] Writing kubelet configuration to file "/var/lib/kubelet/config.yaml"

[kubelet-start] Writing kubelet environment file with flags to file "/var/lib/kubelet/kubeadm-flags.env"

[kubelet-start] Starting the kubelet

[kubelet-start] Waiting for the kubelet to perform the TLS Bootstrap...

This node has joined the cluster:

* Certificate signing request was sent to apiserver and a response was received.

* The Kubelet was informed of the new secure connection details.

Run 'kubectl get nodes' on the control-plane to see this node join the cluster.

[root@k8s-node02 ~]# kubeadm join 192.168.131.128:6443 --token abcdef.0123456789abcdef --discovery-token-ca-cert-hash sha256:95373e9880f7a6fed65093e8ee081d25e739c4ea45feac7c3aa8728f7a93f237

[preflight] Running pre-flight checks

[preflight] Reading configuration from the cluster...

[preflight] FYI: You can look at this config file with 'kubectl -n kube-system get cm kubeadm-config -o yaml'

[kubelet-start] Writing kubelet configuration to file "/var/lib/kubelet/config.yaml"

[kubelet-start] Writing kubelet environment file with flags to file "/var/lib/kubelet/kubeadm-flags.env"

[kubelet-start] Starting the kubelet

[kubelet-start] Waiting for the kubelet to perform the TLS Bootstrap...

This node has joined the cluster:

* Certificate signing request was sent to apiserver and a response was received.

* The Kubelet was informed of the new secure connection details.

Run 'kubectl get nodes' on the control-plane to see this node join the cluster.

There was a small hiccup here. Because the three hosts were configured at the same time, swap had not been turned off on the worker nodes either, so the same swap error as before was reported.

Likewise, docker on the worker nodes still uses its default cgroup driver, which has to be adjusted in the same way as on the master node.

- Go back to the master node to check

The nodes are listed, but their status is NotReady

[root@k8s-master01 k8s-install]# kubectl get nodes

NAME           STATUS     ROLES                  AGE     VERSION

k8s-master01   NotReady   control-plane,master   20m     v1.22.0

k8s-node01     NotReady   <none>                 11m     v1.22.0

k8s-node02     NotReady   <none>                 7m56s   v1.22.0
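When the node list grows, the STATUS column can be filtered instead of read by eye. A minimal sketch: the function name not_ready_nodes is hypothetical, and the sample rows are copied from the output above so that no cluster is needed to try it.

```shell
# Read `kubectl get nodes --no-headers` output on stdin and print the
# names of nodes whose STATUS column is not exactly "Ready".
not_ready_nodes() {
  awk '$2 != "Ready" { print $1 }'
}

# Sample rows taken from the output above.
sample='k8s-master01 NotReady control-plane,master 20m v1.22.0
k8s-node01 NotReady <none> 11m v1.22.0
k8s-node02 NotReady <none> 7m56s v1.22.0'

printf '%s\n' "$sample" | not_ready_nodes
```

On the master node the equivalent call is kubectl get nodes --no-headers | not_ready_nodes; an empty result means every node is Ready.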

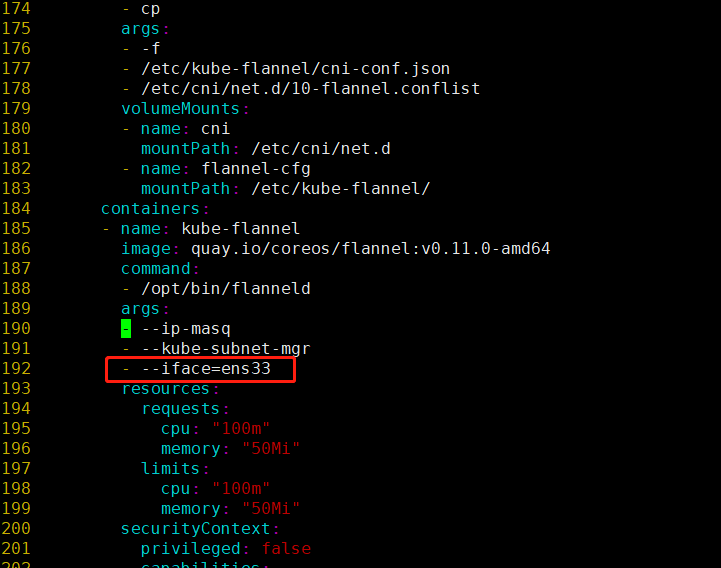

Install the flannel network plug-in (run on master01)

Get the yml file

As the prompt shown when the master node was initialized says, the k8s cluster needs a pod network

First download the kube-flannel.yml file from the flannel project on GitHub (a proxy may be needed to reach it)

- GitHub may open in a browser even when wget on the machine cannot reach it.

- In that case, copy the content from the page, paste it into a text editor, and save it as kube-flannel.yml

After the file has been downloaded, the configuration needs a small adjustment

Around line 190, add a new line under --kube-subnet-mgr

The new line specifies the name of the machine's network card (confirm the actual network card name on your machine before writing it)
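To confirm the network card name, the interface that carries the node's IP address can be looked up from the output of ip -o -4 addr show. This is a sketch: the function name iface_for_ip is hypothetical, and the sample line (including the name ens33) is only an illustration, not taken from the author's machine. The notes do not spell out the added line; presumably it passes the name to flanneld's --iface option.

```shell
# Read `ip -o -4 addr show` output on stdin and print the interface
# that carries the given IPv4 address.
iface_for_ip() {
  awk -v ip="$1" '{ split($4, a, "/"); if (a[1] == ip) print $2 }'
}

# Illustrative sample line; ens33 is an assumed interface name.
sample='2: ens33    inet 192.168.131.128/24 brd 192.168.131.255 scope global noprefixroute ens33'

printf '%s\n' "$sample" | iface_for_ip 192.168.131.128
```

On a node, run ip -o -4 addr show | iface_for_ip 192.168.131.128 with that node's own address.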

Create the network

Before creating the network, pull the flannel image

[root@k8s-master01 k8s-install]# docker pull quay.io/coreos/flannel:v0.11.0-amd64

v0.11.0-amd64: Pulling from coreos/flannel

cd784148e348: Pull complete

04ac94e9255c: Pull complete

e10b013543eb: Pull complete

005e31e443b1: Pull complete

74f794f05817: Pull complete

Digest: sha256:7806805c93b20a168d0bbbd25c6a213f00ac58a511c47e8fa6409543528a204e

Status: Downloaded newer image for quay.io/coreos/flannel:v0.11.0-amd64

quay.io/coreos/flannel:v0.11.0-amd64

Check the nodes

[root@k8s-master01 ~]# kubectl get nodes -owide

NAME           STATUS     ROLES                  AGE     VERSION   INTERNAL-IP       EXTERNAL-IP   OS-IMAGE                KERNEL-VERSION                CONTAINER-RUNTIME

k8s-master01   NotReady   control-plane,master   4d19h   v1.22.0   192.168.131.128   <none>        CentOS Linux 7 (Core)   3.10.0-1160.36.2.el7.x86_64   docker://20.10.8

k8s-node01     NotReady   <none>                 4d19h   v1.22.0   192.168.131.129   <none>        CentOS Linux 7 (Core)   3.10.0-1160.36.2.el7.x86_64   docker://20.10.8

k8s-node02     NotReady   <none>                 4d19h   v1.22.0   192.168.131.130   <none>        CentOS Linux 7 (Core)   3.10.0-1160.36.2.el7.x86_64   docker://20.10.8

Why the network plug-in is needed: the nodes are shown as NotReady because the pod network has not been configured yet

Read the kube-flannel.yml file and install the flannel network plug-in

kubectl create -f kube-flannel.yml

[root@k8s-master01 k8s-install]# kubectl create -f kube-flannel.yml

Warning: policy/v1beta1 PodSecurityPolicy is deprecated in v1.21+, unavailable in v1.25+

podsecuritypolicy.policy/psp.flannel.unprivileged created

serviceaccount/flannel created

configmap/kube-flannel-cfg created

Warning: spec.template.spec.affinity.nodeAffinity.requiredDuringSchedulingIgnoredDuringExecution.nodeSelectorTerms[0].matchExpressions[0].key: beta.kubernetes.io/os is deprecated since v1.14; use "kubernetes.io/os" instead

Warning: spec.template.spec.affinity.nodeAffinity.requiredDuringSchedulingIgnoredDuringExecution.nodeSelectorTerms[0].matchExpressions[1].key: beta.kubernetes.io/arch is deprecated since v1.14; use "kubernetes.io/arch" instead

daemonset.apps/kube-flannel-ds-amd64 created

daemonset.apps/kube-flannel-ds-arm64 created

daemonset.apps/kube-flannel-ds-arm created

daemonset.apps/kube-flannel-ds-ppc64le created

daemonset.apps/kube-flannel-ds-s390x created

unable to recognize "kube-flannel.yml": no matches for kind "ClusterRole" in version "rbac.authorization.k8s.io/v1beta1"

unable to recognize "kube-flannel.yml": no matches for kind "ClusterRoleBinding" in version "rbac.authorization.k8s.io/v1beta1"

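The yml file used in the video is relatively old: it declares ClusterRole and ClusterRoleBinding under rbac.authorization.k8s.io/v1beta1, an API version that this v1.22 cluster no longer serves, so those two resources cannot be recognized.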

Recreate

- Go to the flannel project on GitHub, find the download address of the latest kube-flannel.yml, replace the previous yaml file with it, and create the resources again
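Before re-creating, it can help to confirm whether a manifest still references the API version named in the error. The following is a minimal sketch, not part of the video; the function name find_v1beta1_rbac is made up for illustration, and it only searches for the rbac.authorization.k8s.io/v1beta1 string reported above.

```shell
# Hypothetical helper: print the lines (with line numbers) of a manifest that
# still reference the rbac.authorization.k8s.io/v1beta1 API version.
find_v1beta1_rbac() {
  # -n prints line numbers, -F treats the pattern as a literal string
  grep -nF 'rbac.authorization.k8s.io/v1beta1' "$1"
}

# Usage (assumes kube-flannel.yml is in the current directory):
#   find_v1beta1_rbac kube-flannel.yml || echo "no v1beta1 RBAC objects found"
```

If the function prints nothing, the manifest no longer declares RBAC objects under the removed version.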

[root@k8s-master01 k8s-install]# kubectl create -f kube-flannel.yml
Warning: policy/v1beta1 PodSecurityPolicy is deprecated in v1.21+, unavailable in v1.25+
clusterrole.rbac.authorization.k8s.io/flannel created
clusterrolebinding.rbac.authorization.k8s.io/flannel created
daemonset.apps/kube-flannel-ds created
Error from server (AlreadyExists): error when creating "kube-flannel.yml": podsecuritypolicies.policy "psp.flannel.unprivileged" already exists
Error from server (AlreadyExists): error when creating "kube-flannel.yml": serviceaccounts "flannel" already exists
Error from server (AlreadyExists): error when creating "kube-flannel.yml": configmaps "kube-flannel-cfg" already exists

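The resources that could not be created before (ClusterRole and ClusterRoleBinding) are now created successfully. The AlreadyExists errors only mean that those objects were already created by the first run.

Verification

- View the detailed node status again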

[root@k8s-master01 k8s-install]# kubectl get nodes -owide
NAME STATUS ROLES AGE VERSION INTERNAL-IP EXTERNAL-IP OS-IMAGE KERNEL-VERSION CONTAINER-RUNTIME
k8s-master01 Ready control-plane,master 4d19h v1.22.0 192.168.131.128 <none> CentOS Linux 7 (Core) 3.10.0-1160.36.2.el7.x86_64 docker://20.10.8
k8s-node01 Ready <none> 4d19h v1.22.0 192.168.131.129 <none> CentOS Linux 7 (Core) 3.10.0-1160.36.2.el7.x86_64 docker://20.10.8
k8s-node02 Ready <none> 4d19h v1.22.0 192.168.131.130 <none> CentOS Linux 7 (Core) 3.10.0-1160.36.2.el7.x86_64 docker://20.10.8

All nodes are now in Ready status, indicating that the pod network has been created successfully.

- Use kubectl get pods -A to view the pods in all namespaces and make sure that every one of them is Running;

[root@k8s-master01 k8s-install]# kubectl get pods -A
NAMESPACE NAME READY STATUS RESTARTS AGE
kube-system coredns-7f6cbbb7b8-9w8j5 1/1 Running 0 4d19h
kube-system coredns-7f6cbbb7b8-rg56b 1/1 Running 0 4d19h
kube-system etcd-k8s-master01 1/1 Running 1 (4d18h ago) 4d19h
kube-system kube-apiserver-k8s-master01 1/1 Running 1 (4d18h ago) 4d19h
kube-system kube-controller-manager-k8s-master01 1/1 Running 1 (4d18h ago) 4d19h
kube-system kube-flannel-ds-amd64-btsdx 1/1 Running 7 (9m41s ago) 15m
kube-system kube-flannel-ds-amd64-c64z6 1/1 Running 6 (9m47s ago) 15m
kube-system kube-flannel-ds-amd64-x5g44 1/1 Running 7 (8m49s ago) 15m
kube-system kube-flannel-ds-d27bs 1/1 Running 0 7m20s
kube-system kube-flannel-ds-f5t9v 1/1 Running 0 7m20s
kube-system kube-flannel-ds-vjdtg 1/1 Running 0 7m20s
kube-system kube-proxy-7tfk9 1/1 Running 1 (4d18h ago) 4d19h
kube-system kube-proxy-cf4qs 1/1 Running 1 (4d18h ago) 4d19h
kube-system kube-proxy-dncdt 1/1 Running 1 (4d18h ago) 4d19h
kube-system kube-scheduler-k8s-master01 1/1 Running 1 (4d18h ago) 4d19h

k8s has many components and configurations, which are organized by namespace

- PS: without -A, kubectl get pods only looks at the default namespace, where nothing is running yet (coredns and the other system pods live in kube-system), so you will see:

[root@k8s-master01 ~]# kubectl get pods
No resources found in default namespace.

- At this point, run kubectl get nodes again to view the cluster status

[root@k8s-master01 k8s-install]# kubectl get nodes
NAME STATUS ROLES AGE VERSION
k8s-master01 Ready control-plane,master 4d19h v1.22.0
k8s-node01 Ready <none> 4d19h v1.22.0
k8s-node02 Ready <none> 4d19h v1.22.0

This is the normal state of the cluster. You need to ensure that all nodes are Ready
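The Ready check can also be done mechanically. The sketch below is only an illustration under stated assumptions: the helper name not_ready_nodes is invented, and it relies on the column layout of kubectl get nodes shown above (NAME first, STATUS second). A status such as Ready,SchedulingDisabled would also be reported, since only an exact Ready counts as healthy here.

```shell
# Hypothetical helper: read `kubectl get nodes` output on stdin and print the
# names of all nodes whose STATUS column is not exactly "Ready".
not_ready_nodes() {
  # NR > 1 skips the header row; $1 is NAME, $2 is STATUS
  awk 'NR > 1 && $2 != "Ready" { print $1 }'
}

# Usage on a live cluster:
#   kubectl get nodes | not_ready_nodes
```

An empty result means every node is Ready.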

Test deploying applications using K8S

Quickly use k8s to create a pod, run nginx, and access the nginx page in the cluster

Install the service

Deploy the nginx web service using k8s

[root@k8s-master01 k8s-install]# kubectl run test-nginx --image=nginx:alpine
pod/test-nginx created

Verify installation

[root@k8s-master01 k8s-install]# kubectl get pods -o wide --watch
NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES
test-nginx 1/1 Running 0 2m32s 10.244.2.2 k8s-node02 <none> <none>

- STATUS: shows the current state of the container at a glance;

- IP: the pod's address on the flannel network, an internal address that is only reachable inside the cluster;

- NODE: the node the pod runs on

- To access nginx, use this cluster-internal IP directly, that is, the IP assigned by flannel

- Verify application usage

[root@k8s-master01 k8s-install]# curl 10.244.2.2

<!DOCTYPE html>

<html>

<head>

<title>Welcome to nginx!</title>

<style>

body {

width: 35em;

margin: 0 auto;

font-family: Tahoma, Verdana, Arial, sans-serif;

}

</style>

</head>

<body>

<h1>Welcome to nginx!</h1>

<p>If you see this page, the nginx web server is successfully installed and

working. Further configuration is required.</p>

<p>For online documentation and support please refer to

<a href="http://nginx.org/">nginx.org</a>.<br/>

Commercial support is available at

<a href="http://nginx.com/">nginx.com</a>.</p>

<p><em>Thank you for using nginx.</em></p>

</body>

</html>

You can clearly see the page content, which indicates that the nginx pod was accessed successfully from the master node.

Verify node program status

node01

[root@k8s-node01 ~]# docker ps
CONTAINER ID IMAGE COMMAND CREATED STATUS PORTS NAMES
d7ff94443f74 8522d622299c "/opt/bin/flanneld -..." 23 minutes ago Up 23 minutes k8s_kube-flannel_kube-flannel-ds-f5t9v_kube-system_1db5e255-936d-4c7f-a920-a01845675bb2_0
c53005a05dfe ff281650a721 "/opt/bin/flanneld -..." 24 minutes ago Up 24 minutes k8s_kube-flannel_kube-flannel-ds-amd64-c64z6_kube-system_3ed24627-e4f0-48ae-bb31-458039ddfe11_6
adba14b324bf registry.aliyuncs.com/google_containers/pause:3.5 "/pause" 24 minutes ago Up 24 minutes k8s_POD_kube-flannel-ds-f5t9v_kube-system_1db5e255-936d-4c7f-a920-a01845675bb2_0
1b765df0d569 registry.aliyuncs.com/google_containers/pause:3.5 "/pause" 32 minutes ago Up 32 minutes k8s_POD_kube-flannel-ds-amd64-c64z6_kube-system_3ed24627-e4f0-48ae-bb31-458039ddfe11_0
92975ce5b0d8 bbad1636b30d "/usr/local/bin/kube..." About an hour ago Up About an hour k8s_kube-proxy_kube-proxy-cf4qs_kube-system_e554677b-2723-4d96-8021-dd8b0b5961ce_1
89c7e9a3119b registry.aliyuncs.com/google_containers/pause:3.5 "/pause" About an hour ago Up About an hour k8s_POD_kube-proxy-cf4qs_kube-system_e554677b-2723-4d96-8021-dd8b0b5961ce_1
[root@k8s-node01 ~]#
[root@k8s-node01 ~]# docker ps |grep test
[root@k8s-node01 ~]#

No test-related containers were found on node01

node02

[root@k8s-node02 ~]# docker ps
CONTAINER ID IMAGE COMMAND CREATED STATUS PORTS NAMES
3644313f47fa nginx "/docker-entrypoint...." 6 minutes ago Up 6 minutes k8s_test-nginx_test-nginx_default_925e1358-2845-455e-8079-101c8a823c77_0
e5937fa65f8d registry.aliyuncs.com/google_containers/pause:3.5 "/pause" 7 minutes ago Up 7 minutes k8s_POD_test-nginx_default_925e1358-2845-455e-8079-101c8a823c77_0
ed982452d79e ff281650a721 "/opt/bin/flanneld -..." 20 minutes ago Up 20 minutes k8s_kube-flannel_kube-flannel-ds-amd64-x5g44_kube-system_007ea640-6b4e-47f2-9eb9-bad78e0d0df0_7
b01ac01ed2c9 8522d622299c "/opt/bin/flanneld -..." 23 minutes ago Up 23 minutes k8s_kube-flannel_kube-flannel-ds-d27bs_kube-system_6b2736d9-f316-4693-b7a1-1980efafe7a6_0
8d6066c5fe7d registry.aliyuncs.com/google_containers/pause:3.5 "/pause" 24 minutes ago Up 24 minutes k8s_POD_kube-flannel-ds-d27bs_kube-system_6b2736d9-f316-4693-b7a1-1980efafe7a6_0
98daec830156 registry.aliyuncs.com/google_containers/pause:3.5 "/pause" 32 minutes ago Up 32 minutes k8s_POD_kube-flannel-ds-amd64-x5g44_kube-system_007ea640-6b4e-47f2-9eb9-bad78e0d0df0_0
023b31545099 bbad1636b30d "/usr/local/bin/kube..." About an hour ago Up About an hour k8s_kube-proxy_kube-proxy-dncdt_kube-system_d1053902-b372-4add-be59-a6a1dd9ac651_1
4f9883a37b2b registry.aliyuncs.com/google_containers/pause:3.5 "/pause" About an hour ago Up About an hour k8s_POD_kube-proxy-dncdt_kube-system_d1053902-b372-4add-be59-a6a1dd9ac651_1
[root@k8s-node02 ~]# docker ps |grep test
3644313f47fa nginx "/docker-entrypoint...." 7 minutes ago Up 7 minutes k8s_test-nginx_test-nginx_default_925e1358-2845-455e-8079-101c8a823c77_0
e5937fa65f8d registry.aliyuncs.com/google_containers/pause:3.5 "/pause" 8 minutes ago Up 8 minutes k8s_POD_test-nginx_default_925e1358-2845-455e-8079-101c8a823c77_0

It can be clearly seen that node02 runs two test-related containers: the nginx container itself and the pause container that belongs to the same pod
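The container names in the docker ps output above appear to follow the pattern k8s_<container>_<pod>_<namespace>_<pod-uid>_<attempt>. The sketch below splits such a name into its parts; the pattern is inferred from the output shown in these notes rather than from official documentation, and the helper name describe_k8s_container is made up for illustration.

```shell
# Hypothetical helper: split a container name of the assumed form
# k8s_<container>_<pod>_<namespace>_<pod-uid>_<attempt> into its parts.
# Names that do not have exactly six "_"-separated fields are ignored.
describe_k8s_container() {
  printf '%s\n' "$1" | awk -F_ '$1 == "k8s" && NF == 6 {
    printf "namespace=%s pod=%s container=%s\n", $4, $3, $2
  }'
}

# Usage on a node (prints one line per k8s-managed container):
#   docker ps --format '{{.Names}}' | while read -r n; do describe_k8s_container "$n"; done
```

For the two containers found on node02, this shows that both belong to the pod test-nginx in the default namespace.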

PS:

1. docker is the container runtime on each node

2. kubelet is responsible for starting and stopping all containers on its node, to keep the node's workloads running normally.

The pod, the smallest scheduling unit, in detail

- docker's scheduling unit is the container

- In the k8s cluster, the pod is the smallest scheduling unit

k8s creates a pod and runs the container instances inside the pod

Working status in the cluster

Suppose a machine is k8s-node01. Many pods are created on this machine. These pods can run many containers, and each pod will also be assigned its own IP address

- pod manages the shared resources of a group of containers:

- Storage: volumes

- Network, each pod has a unique ip in the k8s cluster

- Container, basic information of the container, such as image version, port mapping, etc

When an application is created on K8S, the cluster first creates a pod that contains the containers, then binds the pod to a node, where it stays until it terminates or is deleted.

Each k8s node must run at least:

- kubelet: responsible for the communication between the master and the node, and for managing the pods and the containers running inside them

- kube-proxy: traffic forwarding

- The container runtime environment is responsible for downloading images, creating and running container instances.

pod

- k8s supports high availability of containers

- If the application on node01 crashes, it can be restarted automatically

- Supports scaling out and scaling in

- Load balancing

Deploying nginx applications using deployment

- First create a deployment resource, which is the central resource for deploying applications on k8s.

- After the deployment resource is created, the deployment tells k8s how to create the application instances. The k8s master schedules the application onto a specific node, that is, it generates a pod and the container instance inside it.

- After the application is created, the deployment continuously monitors these pods. If a node fails, the deployment controller has a replacement instance created on another available node, which provides self-healing against server failures.

There are two ways to create k8s resources

- yaml configuration file: used in production environments

- Command line: used for debugging

Create the yaml file, then apply it

apiVersion: apps/v1
kind: Deployment
metadata:
  name: test02-nginx
  labels:
    app: nginx
spec:
  # Run 2 nginx pods
  replicas: 2
  selector:
    matchLabels:
      app: nginx
  template:
    metadata:
      labels:
        app: nginx
    spec:
      containers:
      - name: nginx
        image: nginx:alpine
        ports:
        - containerPort: 80

Start creating

[root@k8s-master01 k8s-install]# kubectl apply -f test02-nginx.yaml
deployment.apps/test02-nginx created
[root@k8s-master01 k8s-install]# kubectl get pods
NAME READY STATUS RESTARTS AGE
test-nginx 1/1 Running 0 5h5m
test02-nginx-7fb7fd49b4-dxj5c 0/1 ContainerCreating 0 10s
test02-nginx-7fb7fd49b4-r28gc 0/1 ContainerCreating 0 10s
[root@k8s-master01 k8s-install]# kubectl get pods
NAME READY STATUS RESTARTS AGE
test-nginx 1/1 Running 0 5h6m
test02-nginx-7fb7fd49b4-dxj5c 1/1 Running 0 31s
test02-nginx-7fb7fd49b4-r28gc 0/1 ContainerCreating 0 31s
[root@k8s-master01 k8s-install]# kubectl get pods -o wide
NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES
test-nginx 1/1 Running 0 5h9m 10.244.2.2 k8s-node02 <none> <none>
test02-nginx-7fb7fd49b4-dxj5c 1/1 Running 0 3m25s 10.244.1.2 k8s-node01 <none> <none>
test02-nginx-7fb7fd49b4-r28gc 1/1 Running 0 3m25s 10.244.1.3 k8s-node01 <none> <none>

The pod list shows that two nginx instances have been created

Test the IP addresses of the two pods to see whether the program runs normally

[root@k8s-master01 k8s-install]# curl 10.244.1.2

<!DOCTYPE html>

<html>

<head>

<title>Welcome to nginx!</title>

<style>

body {

width: 35em;

margin: 0 auto;

font-family: Tahoma, Verdana, Arial, sans-serif;

}

</style>

</head>

<body>

<h1>Welcome to nginx!</h1>

<p>If you see this page, the nginx web server is successfully installed and

working. Further configuration is required.</p>

<p>For online documentation and support please refer to

<a href="http://nginx.org/">nginx.org</a>.<br/>

Commercial support is available at

<a href="http://nginx.com/">nginx.com</a>.</p>

<p><em>Thank you for using nginx.</em></p>

</body>

</html>

[root@k8s-master01 k8s-install]# curl 10.244.1.3

<!DOCTYPE html>

<html>

<head>

<title>Welcome to nginx!</title>

<style>

body {

width: 35em;

margin: 0 auto;

font-family: Tahoma, Verdana, Arial, sans-serif;

}

</style>

</head>

<body>

<h1>Welcome to nginx!</h1>

<p>If you see this page, the nginx web server is successfully installed and

working. Further configuration is required.</p>

<p>For online documentation and support please refer to

<a href="http://nginx.org/">nginx.org</a>.<br/>

Commercial support is available at

<a href="http://nginx.com/">nginx.com</a>.</p>

<p><em>Thank you for using nginx.</em></p>

</body>

</html>

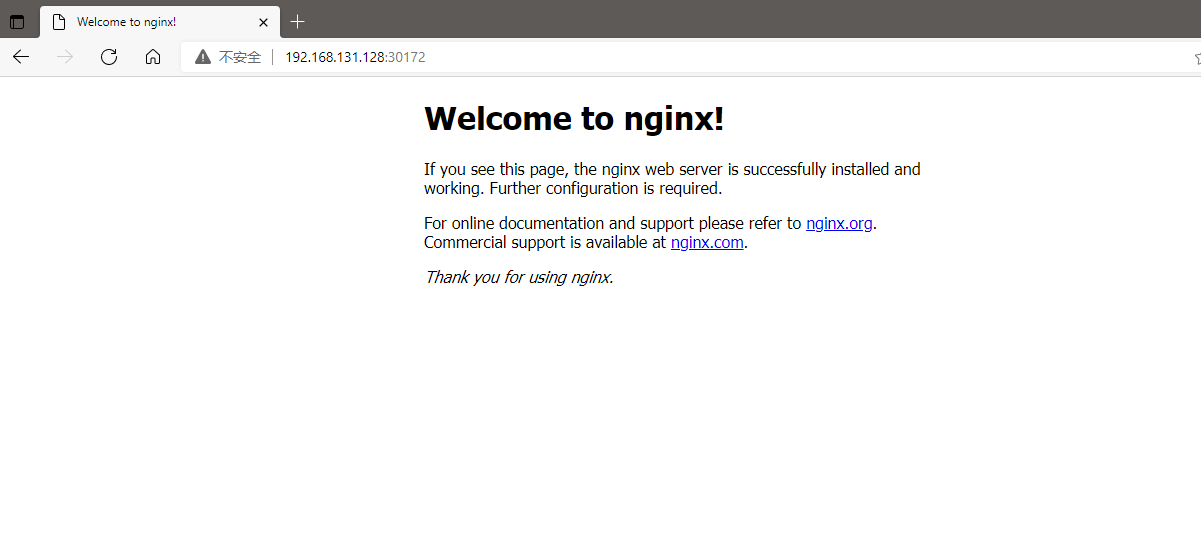

service

Service is an important concept in k8s, which mainly provides load balancing and automatic service discovery.

Back to the original question: the nginx created with the deployment is running successfully, so how can it be accessed from outside the cluster?

- A pod IP is only a virtual IP visible inside the cluster and cannot be accessed externally.

- A pod IP disappears together with its pod. When a ReplicaSet dynamically scales the pods, pod IPs may change at any time, which makes the service harder to reach

Therefore, accessing services through pod IPs is basically unrealistic. The solution is a new resource, the service, which provides load balancing

kubectl expose deployment test02-nginx --port=80 --type=LoadBalancer

This exposes the deployment test02-nginx on port 80 with the service type LoadBalancer

[root@k8s-master01 k8s-install]# kubectl get deployment
NAME READY UP-TO-DATE AVAILABLE AGE
test02-nginx 2/2 2 2 17m

#View all deployments

[root@k8s-master01 k8s-install]# kubectl expose deployment test02-nginx --port=80 --type=LoadBalancer
service/test02-nginx exposed
[root@k8s-master01 k8s-install]# kubectl get services -o wide
NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE SELECTOR
kubernetes ClusterIP 10.96.0.1 <none> 443/TCP 5d1h <none>
test02-nginx LoadBalancer 10.98.122.29 <pending> 80:30172/TCP 19s app=nginx

#Service port 80 is mapped to port 30172 on the nodes. If you can reach this port on a node's IP, you can access the application in the pods.
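The PORT(S) value in the service listing above (80:30172/TCP) pairs the service port with the port opened on the nodes. As a small sketch, the node port can be pulled out of that listing with awk; the helper name node_port_of is made up for illustration, and it assumes the column order shown above and a single port mapping per service.

```shell
# Hypothetical helper: read `kubectl get services` output on stdin and print
# the node port of the named service, taken from a PORT(S) value such as
# 80:30172/TCP. Services without a node port (no ":" in PORT(S)) print nothing.
node_port_of() {
  awk -v svc="$1" '$1 == svc && index($5, ":") > 0 {
    split($5, parts, /[:\/]/)   # 80:30172/TCP -> parts[1]=80, parts[2]=30172, parts[3]=TCP
    print parts[2]
  }'
}

# Usage on a live cluster (192.168.131.129 is k8s-node01 in these notes):
#   port=$(kubectl get services | node_port_of test02-nginx)
#   curl "http://192.168.131.129:${port}"
```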

Configure kubectl command completion

yum install -y bash-completion
source /usr/share/bash-completion/bash_completion
source <(kubectl completion bash)
echo "source <(kubectl completion bash)" >> ~/.bashrc

Deploy dashboard

https://github.com/kubernetes/dashboard

Kubernetes Dashboard is a general-purpose, web-based UI for Kubernetes clusters. It allows users to manage and troubleshoot applications running in the cluster, as well as to manage the cluster itself.

Download the yaml file of this application from github

Add a new line after line 44 of the file (line 45 below)

39 spec:
40   ports:
41     - port: 443
42       targetPort: 8443
43   selector:
44     k8s-app: kubernetes-dashboard
45   type: NodePort #Added line: with this, if you can reach the IP of the host directly, you can access the dashboard page in the cluster

Create resource

[root@k8s-master01 k8s-install]# kubectl create -f recommended.yaml
namespace/kubernetes-dashboard created
serviceaccount/kubernetes-dashboard created
service/kubernetes-dashboard created
secret/kubernetes-dashboard-certs created
secret/kubernetes-dashboard-csrf created
secret/kubernetes-dashboard-key-holder created
configmap/kubernetes-dashboard-settings created
role.rbac.authorization.k8s.io/kubernetes-dashboard created
clusterrole.rbac.authorization.k8s.io/kubernetes-dashboard created
rolebinding.rbac.authorization.k8s.io/kubernetes-dashboard created
clusterrolebinding.rbac.authorization.k8s.io/kubernetes-dashboard created
deployment.apps/kubernetes-dashboard created
service/dashboard-metrics-scraper created
Warning: spec.template.metadata.annotations[seccomp.security.alpha.kubernetes.io/pod]: deprecated since v1.19; use the "seccompProfile" field instead
deployment.apps/dashboard-metrics-scraper created
[root@k8s-master01 k8s-install]# kubectl get po -o wide -A #Lists pods in all namespaces; add --watch to keep monitoring
NAMESPACE NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES
default test-nginx 1/1 Running 0 5h57m 10.244.2.2 k8s-node02 <none> <none>
default test02-nginx-7fb7fd49b4-dxj5c 1/1 Running 0 52m 10.244.1.2 k8s-node01 <none> <none>
default test02-nginx-7fb7fd49b4-r28gc 1/1 Running 0 52m 10.244.1.3 k8s-node01 <none> <none>
kube-system coredns-7f6cbbb7b8-9w8j5 1/1 Running 0 5d2h 10.244.0.2 k8s-master01 <none> <none>
kube-system coredns-7f6cbbb7b8-rg56b 1/1 Running 0 5d2h 10.244.0.3 k8s-master01 <none> <none>
kube-system etcd-k8s-master01 1/1 Running 1 (5d ago) 5d2h 192.168.131.128 k8s-master01 <none> <none>
kube-system kube-apiserver-k8s-master01 1/1 Running 1 (5d ago) 5d2h 192.168.131.128 k8s-master01 <none> <none>
kube-system kube-controller-manager-k8s-master01 1/1 Running 1 (5d ago) 5d2h 192.168.131.128 k8s-master01 <none> <none>
kube-system kube-flannel-ds-amd64-btsdx 1/1 Running 7 (6h17m ago) 6h23m 192.168.131.128 k8s-master01 <none> <none>
kube-system kube-flannel-ds-amd64-c64z6 1/1 Running 6 (6h17m ago) 6h23m 192.168.131.129 k8s-node01 <none> <none>
kube-system kube-flannel-ds-amd64-x5g44 1/1 Running 7 (6h16m ago) 6h23m 192.168.131.130 k8s-node02 <none> <none>
kube-system kube-flannel-ds-d27bs 1/1 Running 0 6h15m 192.168.131.130 k8s-node02 <none> <none>
kube-system kube-flannel-ds-f5t9v 1/1 Running 0 6h15m 192.168.131.129 k8s-node01 <none> <none>
kube-system kube-flannel-ds-vjdtg 1/1 Running 0 6h15m 192.168.131.128 k8s-master01 <none> <none>
kube-system kube-proxy-7tfk9 1/1 Running 1 (5d ago) 5d2h 192.168.131.128 k8s-master01 <none> <none>
kube-system kube-proxy-cf4qs 1/1 Running 1 (5d ago) 5d1h 192.168.131.129 k8s-node01 <none> <none>
kube-system kube-proxy-dncdt 1/1 Running 1 (5d ago) 5d1h 192.168.131.130 k8s-node02 <none> <none>
kube-system kube-scheduler-k8s-master01 1/1 Running 1 (5d ago) 5d2h 192.168.131.128 k8s-master01 <none> <none>
kubernetes-dashboard dashboard-metrics-scraper-856586f554-6n48q 1/1 Running 0 98s 10.244.1.4 k8s-node01 <none> <none> #This is the dashboard
kubernetes-dashboard kubernetes-dashboard-67484c44f6-fch2q 1/1 Running 0 98s 10.244.2.3 k8s-node02 <none> <none> #This is the dashboard

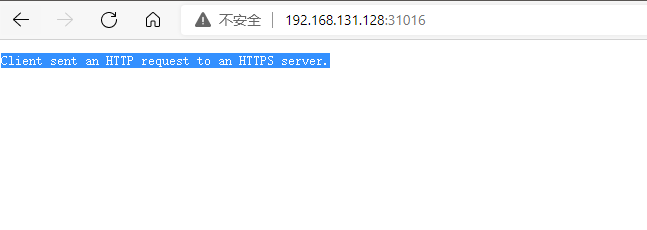

Once the containers are running correctly, test the deployment by viewing the service resources

[root@k8s-master01 k8s-install]# kubectl -n kubernetes-dashboard get services
NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE
dashboard-metrics-scraper ClusterIP 10.104.11.88 <none> 8000/TCP 19m
kubernetes-dashboard NodePort 10.109.201.42 <none> 443:31016/TCP 19m

- service can be abbreviated as svc and pod can be abbreviated as po

- Command explanation: -n tells kubectl to use the kubernetes-dashboard namespace, and get services lists the services in that namespace

- As can be seen above, the kubernetes-dashboard service is of type NodePort, so it can be reached directly from a browser

Access the dashboard directly at the host IP plus the kubernetes-dashboard node port (31016 above)

If the page fails to load, it is because an http request was sent to an https port

After switching to https, you can access it correctly.

All requests in the K8S cluster use the https protocol with two-way authentication, which secures the requests as far as possible

Token acquisition method

An account for authentication needs to be created: a ServiceAccount bound to the cluster-admin role

Create yaml file

apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRoleBinding
metadata:
  name: admin-user
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: cluster-admin
subjects:
- kind: ServiceAccount
  name: admin-user
  namespace: kubernetes-dashboard
---
apiVersion: v1
kind: ServiceAccount
metadata:
  name: admin-user
  namespace: kubernetes-dashboard

Then create

[root@k8s-master01 k8s-install]# kubectl create -f admin-user.yaml
clusterrolebinding.rbac.authorization.k8s.io/admin-user created
serviceaccount/admin-user created

Get a token to log in to dashboard

#View token information

[root@k8s-master01 k8s-install]# kubectl -n kubernetes-dashboard get secret |grep admin-user
admin-user-token-kq6vm kubernetes.io/service-account-token 3 3m16s

#Decode the token (it is stored base64-encoded, not encrypted)

[root@k8s-master01 k8s-install]# kubectl -n kubernetes-dashboard get secret admin-user-token-kq6vm -o jsonpath={.data.token}|base64 -d

eyJhbGciOiJSUzI1NiIsImtpZCI6ImNZeVdGNDdGMFRFY0lEWWRnTUhwcllfdzNrMG1vTkswYlphYzJIWUlZQ3cifQ.eyJpc3MiOiJrdWJlcm5ldGVzL3NlcnZpY2VhY2NvdW50Iiwia3ViZXJuZXRlcy5pby9zZXJ2aWNlYWNjb3VudC9uYW1lc3BhY2UiOiJrdWJlcm5ldGVzLWRhc2hib2FyZCIsImt1YmVybmV0ZXMuaW8vc2VydmljZWFjY291bnQvc2VjcmV0Lm5hbWUiOiJhZG1pbi11c2VyLXRva2VuLWtxNnZtIiwia3ViZXJuZXRlcy5pby9zZXJ2aWNlYWNjb3VudC9zZXJ2aWNlLWFjY291bnQubmFtZSI6ImFkbWluLXVzZXIiLCJrdWJlcm5ldGVzLmlvL3NlcnZpY2VhY2NvdW50L3NlcnZpY2UtYWNjb3VudC51aWQiOiIwNmU4ODhiNS0xMjk5LTRjOTktOGI0NS0xNTFmOWI5MjMzMTkiLCJzdWIiOiJzeXN0ZW06c2VydmljZWFjY291bnQ6a3ViZXJuZXRlcy1kYXNoYm9hcmQ6YWRtaW4tdXNlciJ9.qPdCZzydj9jKIjoSxBf7rPFjiDkpzyIoSgKz1hWpSRpx-xSvSjJ5rwuEYtcmeRE4CKw17783Y-DEDH-jPeyEajFZ1XkSCR19zXgfp9uAU1_vKVOxSK77zBxqXDzQY0bzq8hjhcYUlHcAtFkWS_9aGjyL94r2cyjdgbTDxHJpFUCByuIOeVtGDtIa2YbqFvLRMaSoSOOdJnN_EYBZJW5LoeD3p1NN1gkbqXT56rvKzPtElQFv4tBU1uo501_Iz_MKPxH9lslwReORjE5ttWRbUAd4XguXOmTs0BWeRIG6I9sF0X4JEvS2TSbnCREslJKTBYHU6wkh1ssoMqWbFlD-eg

Go back to the web page and enter the decoded token to log in
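The two lookup steps above (find the secret name, then decode its token) could be chained so the random suffix does not have to be copied by hand. This is only a sketch: the helper name token_secret_for is invented, and it assumes, as on this v1.22 cluster, that a secret named <account>-token-<suffix> exists for the ServiceAccount.

```shell
# Hypothetical helper: read `kubectl get secret` output on stdin and print the
# first secret whose name starts with "<account>-token-".
token_secret_for() {
  awk -v prefix="$1-token-" 'index($1, prefix) == 1 { print $1; exit }'
}

# Usage on a live cluster (same commands as above, chained together):
#   name=$(kubectl -n kubernetes-dashboard get secret | token_secret_for admin-user)
#   kubectl -n kubernetes-dashboard get secret "$name" -o jsonpath='{.data.token}' | base64 -d
```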