Author home page (Silicon-based Workshop for Potato Sugar): Blog of Potato Sugar (Wang Wenbing), CSDN Blog

Article URL: https://blog.csdn.net/HiWangWenBing/article/details/122832783

Contents

Chapter 1 Overview of PV+PVC

1.1 Problems with the NFS Network File System

1.2 Introduction to PV+PVC

Chapter 2 How to Create Static Persistent Storage Using PV

2.1 Prerequisites (executed on the NFS server)

2.2 PV configuration file (executed on either the NFS server or a client)

2.3 Creating the PVs

2.4 Checking the current PVs

Chapter 3 How to Request Persistent Storage Using a PVC

3.1 PVC configuration file (usually applied together with the Pod, on a client)

3.2 Using the PVC to request persistent storage

3.3 Checking the current PVCs and PVs

Chapter 4 How the Pod Uses the Persistent Storage (same as with NFS)

4.1 Entering the pod

4.2 Accessing the shared storage requested through the PVC

4.3 Generating files in shared storage

Chapter 1 Overview of PV+PVC

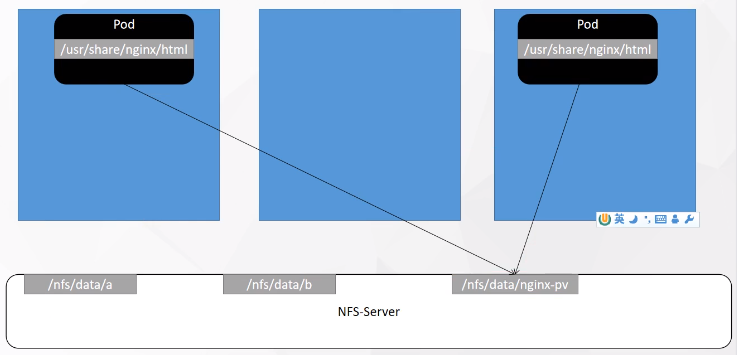

1.1 Problems with the NFS Network File System

(1) Shared directories need to be created manually on the client side.

(2) After the Pod is deleted, the manually created directories are not removed automatically; they have to be unmounted and deleted by hand.

(3) There is no way to limit how much storage space a pod can occupy in the shared directory. A single pod could fill the entire shared storage, and there is no security control mechanism to prevent it.

(4) The Pod is tightly coupled to NFS: its spec must reference the NFS server and export path directly.

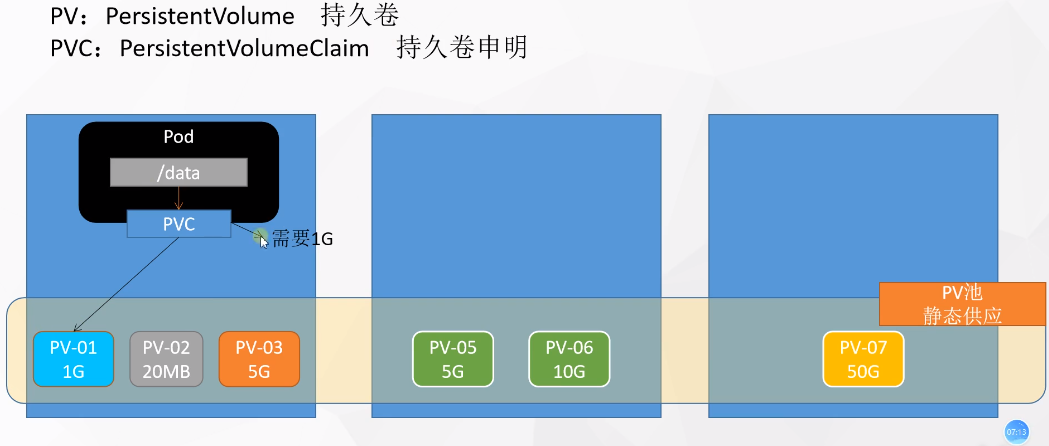

1.2 Introduction to PV+PVC

To solve these problems, K8S adds a PV+PVC layer between NFS and the Pod.

A PV (PersistentVolume) is an abstraction of the underlying shared storage. PVs are generally created and configured by the K8S administrator. Each PV is tied to a specific underlying storage technology (NFS in this article) and connects to that storage through a volume plugin.

A PVC (PersistentVolumeClaim) is a user's declaration of storage requirements. In other words, a PVC is a resource request that a user submits to K8S, stating how much storage is needed and with which access modes.

As shown in the figure above, users persist data through the PVC, while PVs bind to the specific underlying file systems.

Introducing PV+PVC decouples Pods from the details of the underlying NFS storage and lets each claim declare the capacity it needs, which addresses the problems encountered when using NFS alone.

For more on how PV+PVC works, see the references at the end of this article.

Chapter 2 How to Create Static Persistent Storage Using PV

2.1 Prerequisites (executed on the NFS server)

A PV is built on top of NFS or another network file system, so the NFS exports must be created first:

```
[root@k8s-master1 nfs]# mkdir volumes
[root@k8s-master1 nfs]# cd volumes
[root@k8s-master1 volumes]# mkdir v{1,2,3,4,5}
[root@k8s-master1 volumes]# ls
v1 v2 v3 v4 v5
[root@localhost volumes]# vi /etc/exports
/nfs/volumes/v1 *(insecure,rw,async,no_root_squash)
/nfs/volumes/v2 *(insecure,rw,async,no_root_squash)
/nfs/volumes/v3 *(insecure,rw,async,no_root_squash)
/nfs/volumes/v4 *(insecure,rw,async,no_root_squash)
/nfs/volumes/v5 *(insecure,rw,async,no_root_squash)
[root@localhost volumes]# exportfs -r
[root@localhost volumes]# showmount -e
Export list for localhost.localdomain:
/nfs/volumes/v5
/nfs/volumes/v4
/nfs/volumes/v3
/nfs/volumes/v2
/nfs/volumes/v1
```
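If more volumes are needed later, the export entries can be generated instead of typed by hand. A minimal Python sketch, assuming the same base directory (/nfs/volumes), client wildcard, and export options as above; the function name `export_lines` is hypothetical:

```python
# Sketch: generate /etc/exports entries for /nfs/volumes/v1..vN.
# BASE_DIR, CLIENTS and OPTIONS copy the values used in this article.
BASE_DIR = "/nfs/volumes"
CLIENTS = "*"  # any client host, as in the article
OPTIONS = "insecure,rw,async,no_root_squash"


def export_lines(count: int, base_dir: str = BASE_DIR) -> list[str]:
    """Return one /etc/exports line per directory v1..v<count>."""
    # No space between the client and its options: "*(rw,...)" applies the
    # options to that client, while "* (rw,...)" would mean something else.
    return [f"{base_dir}/v{i} {CLIENTS}({OPTIONS})" for i in range(1, count + 1)]


if __name__ == "__main__":
    print("\n".join(export_lines(5)))
```

Paste the printed lines into /etc/exports and reload the export table with `exportfs -r`.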

Create an identifying test file in each shared directory for later validation:

```
cd /nfs/volumes/
[root@k8s-master1 volumes]# touch ./v1/v1.txt
[root@k8s-master1 volumes]# touch ./v2/v2.txt
[root@k8s-master1 volumes]# touch ./v3/v3.txt
[root@k8s-master1 volumes]# touch ./v4/v4.txt
[root@k8s-master1 volumes]# touch ./v5/v5.txt
```

2.2 PV configuration file (executed on either the NFS server or a client)

```yaml
# pv.yaml
apiVersion: v1
kind: PersistentVolume
metadata:
  name: pv001
  labels:
    name: pv001
spec:
  nfs:
    path: /nfs/volumes/v1
    server: 172.24.130.172
  accessModes: ["ReadWriteMany","ReadWriteOnce"]
  capacity:
    storage: 1Gi
---
apiVersion: v1
kind: PersistentVolume
metadata:
  name: pv002
  labels:
    name: pv002
spec:
  nfs:
    path: /nfs/volumes/v2
    server: 172.24.130.172
  accessModes: ["ReadWriteMany","ReadWriteOnce"]
  capacity:
    storage: 2Gi
---
apiVersion: v1
kind: PersistentVolume
metadata:
  name: pv003
  labels:
    name: pv003
spec:
  nfs:
    path: /nfs/volumes/v3
    server: 172.24.130.172
  accessModes: ["ReadWriteMany","ReadWriteOnce"]
  capacity:
    storage: 3Gi
---
apiVersion: v1
kind: PersistentVolume
metadata:
  name: pv004
  labels:
    name: pv004
spec:
  nfs:
    path: /nfs/volumes/v4
    server: 172.24.130.172
  accessModes: ["ReadWriteMany","ReadWriteOnce"]
  capacity:
    storage: 4Gi
---
apiVersion: v1
kind: PersistentVolume
metadata:
  name: pv005
  labels:
    name: pv005
spec:
  nfs:
    path: /nfs/volumes/v5
    server: 172.24.130.172
  accessModes: ["ReadWriteMany","ReadWriteOnce"]
  capacity:
    storage: 5Gi
```

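Key parameters:

server: 172.24.130.172: IP address of the NFS server.

path: /nfs/volumes/v1: the shared directory exported by the NFS server.

accessModes: the access modes the PV supports (ReadWriteMany: read-write by many nodes; ReadWriteOnce: read-write by a single node).

capacity.storage: the capacity the PV advertises; claims are matched against it.

In other words, each PV is responsible for mapping and mounting one underlying NFS export.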
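Since the five PVs differ only in their number, their manifests can also be generated. The following Python sketch assumes the NFS server address and paths used above and emits JSON, which kubectl accepts just like YAML; the resources are wrapped in a `v1` `List` so they fit in one document. The helper names `make_pv` and `make_pv_list` are illustrative only:

```python
# Sketch: generate the NFS-backed PVs of section 2.2 as JSON.
# NFS_SERVER and BASE_DIR are this article's values; change them as needed.
import json

NFS_SERVER = "172.24.130.172"
BASE_DIR = "/nfs/volumes"


def make_pv(index: int) -> dict:
    """Build PV number `index` (1-based): name pv00N, size N Gi, path vN."""
    name = f"pv{index:03d}"
    return {
        "apiVersion": "v1",
        "kind": "PersistentVolume",
        "metadata": {"name": name, "labels": {"name": name}},
        "spec": {
            "nfs": {"path": f"{BASE_DIR}/v{index}", "server": NFS_SERVER},
            "accessModes": ["ReadWriteMany", "ReadWriteOnce"],
            "capacity": {"storage": f"{index}Gi"},
        },
    }


def make_pv_list(count: int) -> dict:
    """Wrap PVs 1..count in a v1 List so one document holds all of them."""
    return {"apiVersion": "v1", "kind": "List",
            "items": [make_pv(i) for i in range(1, count + 1)]}


if __name__ == "__main__":
    print(json.dumps(make_pv_list(5), indent=2))
```

Saving the output as, for example, pv.json and running `kubectl apply -f pv.json` should create the same five PVs as pv.yaml.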

2.3 Creating the PVs

```
$ kubectl apply -f pv.yaml
```

2.4 Checking the current PVs

```
[root@k8s-node1 ~]# kubectl get pv
NAME    CAPACITY   ACCESS MODES   RECLAIM POLICY   STATUS      CLAIM   STORAGECLASS   REASON   AGE
pv001   1Gi        RWO,RWX        Retain           Available                                   39s
pv002   2Gi        RWO,RWX        Retain           Available                                   39s
pv003   3Gi        RWO,RWX        Retain           Available                                   39s
pv004   4Gi        RWO,RWX        Retain           Available                                   39s
pv005   5Gi        RWO,RWX        Retain           Available                                   39s
```

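Remarks:

PVs can be created from either the NFS server or an NFS client: kubectl sends the request to the K8S API server, so the result is the same on any machine where kubectl is configured for the cluster. All five PVs are Available and use the Retain reclaim policy, which is the default for manually created PVs.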
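The `kubectl get pv` table is meant for people; for scripts, `kubectl get pv -o json` returns the same information as structured data. A small Python sketch, assuming that output has been saved to a file (the script name pv_summary.py, the file name pvs.json and both function names are hypothetical), that extracts the columns shown above:

```python
# Sketch: summarize `kubectl get pv -o json` output saved to a file.
# Field paths follow the core/v1 PersistentVolume API.
import json
import sys


def summarize_pvs(pv_list: dict) -> list[tuple[str, str, str, str]]:
    """Return (name, capacity, phase, claim) for each PV in the list."""
    rows = []
    for pv in pv_list.get("items", []):
        spec = pv.get("spec", {})
        ref = spec.get("claimRef")  # set when the PV is bound (or pre-bound)
        claim = f"{ref.get('namespace', '')}/{ref.get('name', '')}" if ref else ""
        rows.append((
            pv["metadata"]["name"],
            spec.get("capacity", {}).get("storage", ""),
            pv.get("status", {}).get("phase", ""),
            claim,
        ))
    return rows


def available_pvs(pv_list: dict) -> list[str]:
    """Names of PVs whose phase is Available (not yet bound)."""
    return [name for name, _cap, phase, _claim in summarize_pvs(pv_list)
            if phase == "Available"]


if __name__ == "__main__" and len(sys.argv) == 2:
    # Usage: kubectl get pv -o json > pvs.json; python3 pv_summary.py pvs.json
    with open(sys.argv[1]) as f:
        for row in summarize_pvs(json.load(f)):
            print("\t".join(row))
```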

Chapter 3 How to Request Persistent Storage Using a PVC

3.1 PVC configuration file (usually applied together with the Pod, on a client)

```yaml
# pvc.yaml
apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: mypvc
  namespace: default
spec:
  accessModes: ["ReadWriteMany"]
  resources:
    requests:
      storage: 4Gi
---
apiVersion: v1
kind: Pod
metadata:
  name: pod-vol-pvc
  namespace: default
spec:
  containers:
  - name: myapp
    image: ikubernetes/myapp:v1
    volumeMounts:
    - name: html
      mountPath: /usr/share/nginx/html
  volumes:
  - name: html
    persistentVolumeClaim:
      claimName: mypvc
```

Key parameters:

mountPath: /usr/share/nginx/html: the path inside the pod where the volume is mounted.

claimName: mypvc: the PVC that backs the pod's volume.

storage: 4Gi: the amount of storage requested.

Remarks:

A PVC does not need to know the path exported by the NFS server; that is the PV's responsibility. The PVC only states how much storage it needs and which access modes it requires. K8S then binds it to an Available PV that satisfies the request, choosing the smallest one that fits, so the 4Gi claim here is bound to pv004.
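The matching rule described above can be illustrated with a simplified Python model. It is a sketch, not the real PV controller: it considers only capacity, access modes and the Available phase (the controller also checks storage class, label selectors and volume mode), and it understands only the binary suffixes Ki, Mi, Gi and Ti. All names (`PV`, `to_bytes`, `pick_volume`) are hypothetical:

```python
# Simplified model of matching a PVC to a pre-created PV: among Available PVs
# that offer every requested access mode and at least the requested capacity,
# choose the smallest. Illustration only; the real controller checks more.
from dataclasses import dataclass
from typing import Optional

# Binary suffixes of K8S quantities; other suffixes are not handled here.
_UNITS = {"Ki": 2**10, "Mi": 2**20, "Gi": 2**30, "Ti": 2**40}


def to_bytes(quantity: str) -> int:
    """Convert a quantity such as '4Gi' to bytes."""
    for suffix, factor in _UNITS.items():
        if quantity.endswith(suffix):
            return int(quantity[: -len(suffix)]) * factor
    return int(quantity)  # assume a plain byte count


@dataclass
class PV:
    name: str
    capacity: str
    access_modes: frozenset
    phase: str = "Available"


def pick_volume(pvs: list[PV], request: str, modes: set[str]) -> Optional[PV]:
    """Return the smallest Available PV that satisfies the claim, or None."""
    need = to_bytes(request)
    fits = [pv for pv in pvs
            if pv.phase == "Available"
            and modes <= pv.access_modes  # PV must offer every requested mode
            and to_bytes(pv.capacity) >= need]
    return min(fits, key=lambda pv: to_bytes(pv.capacity), default=None)


if __name__ == "__main__":
    both = frozenset({"ReadWriteMany", "ReadWriteOnce"})
    pvs = [PV(f"pv00{i}", f"{i}Gi", both) for i in range(1, 6)]
    print(pick_volume(pvs, "4Gi", {"ReadWriteMany"}).name)  # pv004
```

In this model, if pv004 were already bound, the same 4Gi request would fall through to pv005, the next-smallest Available PV; a 6Gi request would find no match and stay unbound.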

3.2 Using the PVC to request persistent storage

```
$ kubectl apply -f pvc.yaml
```

3.3 Checking the current PVCs and PVs

```
[root@k8s-node1 ~]# kubectl get pv
NAME    CAPACITY   ACCESS MODES   RECLAIM POLICY   STATUS      CLAIM            STORAGECLASS   REASON   AGE
pv001   1Gi        RWO,RWX        Retain           Bound       default/mypvc1                           12m
pv002   2Gi        RWO,RWX        Retain           Available                                            12m
pv003   3Gi        RWO,RWX        Retain           Available                                            12m
pv004   4Gi        RWO,RWX        Retain           Bound       default/mypvc                            12m
pv005   5Gi        RWO,RWX        Retain           Available                                            12m
[root@k8s-node1 ~]# kubectl get pvc
NAME     STATUS   VOLUME   CAPACITY   ACCESS MODES   STORAGECLASS   AGE
mypvc    Bound    pv004    4Gi        RWO,RWX                       5m7s
mypvc1   Bound    pv001    1Gi        RWO,RWX                       2m9s
```

mypvc is bound to pv004. The claim mypvc1, bound to pv001, is a separate 1Gi claim that is not defined in this article.
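Binding is recorded on both objects: the PVC's `spec.volumeName` names the PV, and the PV's `spec.claimRef` points back at the claim. A Python sketch that cross-checks the two, assuming the output of `kubectl get pvc -o json` and `kubectl get pv -o json` has been saved to files (pvcs.json, pvs.json and the function name `binding_problems` are hypothetical):

```python
# Sketch: confirm that every Bound PVC names a PV whose claimRef points back
# at it. Inputs are parsed `kubectl get pvc -o json` / `kubectl get pv -o json`.
import json
import sys


def binding_problems(pvc_list: dict, pv_list: dict) -> list[str]:
    """Return human-readable descriptions of missing or one-sided bindings."""
    pvs = {pv["metadata"]["name"]: pv for pv in pv_list.get("items", [])}
    problems = []
    for pvc in pvc_list.get("items", []):
        meta = pvc["metadata"]
        claim = f"{meta.get('namespace', 'default')}/{meta['name']}"
        if pvc.get("status", {}).get("phase") != "Bound":
            problems.append(f"{claim} is not Bound")
            continue
        volume = pvc.get("spec", {}).get("volumeName", "")
        ref = pvs.get(volume, {}).get("spec", {}).get("claimRef") or {}
        if f"{ref.get('namespace')}/{ref.get('name')}" != claim:
            problems.append(f"{claim} -> {volume}, but the PV does not point back")
    return problems


if __name__ == "__main__" and len(sys.argv) == 3:
    # Usage: python3 check_bindings.py pvcs.json pvs.json
    with open(sys.argv[1]) as f_pvc, open(sys.argv[2]) as f_pv:
        report = binding_problems(json.load(f_pvc), json.load(f_pv))
    print("\n".join(report) or "all claims are consistently bound")
```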

Chapter 4 How the Pod Uses the Persistent Storage (same as with NFS)

4.1 Entering the pod

(1) Through the dashboard

(2) From the command line

```
[root@k8s-node1 ~]# kubectl get pod
NAME                            READY   STATUS    RESTARTS   AGE
my-deploy-8686b49bbd-b8w65      1/1     Running   0          7h9m
my-deploy-8686b49bbd-f2m4c      1/1     Running   0          7h8m
my-deploy-8686b49bbd-jz5cx      1/1     Running   0          7h9m
nginx-pv-demo-db866fc95-6d9nz   1/1     Running   0          63m
nginx-pv-demo-db866fc95-qpx48   1/1     Running   0          63m
pod-vol-pvc                     1/1     Running   0          6m23s
$ kubectl exec -it pod-vol-pvc -- /bin/sh
```
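The interactive shell is convenient for exploring, but the same check can be scripted with a non-interactive `kubectl exec`, where everything after `--` is passed to the container unchanged. A Python sketch; `exec_argv` and `run_in_pod` are hypothetical helper names, and running them assumes kubectl is installed and configured for the cluster:

```python
# Sketch: non-interactive `kubectl exec`, the scripted counterpart of an
# interactive shell in the pod. Arguments after "--" go to the container as-is.
import subprocess


def exec_argv(pod: str, command: list[str], namespace: str = "default") -> list[str]:
    """Build the argument list for `kubectl exec -n <ns> <pod> -- <command>`."""
    return ["kubectl", "exec", "-n", namespace, pod, "--", *command]


def run_in_pod(pod: str, command: list[str], namespace: str = "default") -> str:
    """Run the command in the pod and return its stdout.

    Raises FileNotFoundError if kubectl is not installed and
    subprocess.CalledProcessError if kubectl exits with an error.
    """
    result = subprocess.run(exec_argv(pod, command, namespace),
                            capture_output=True, text=True, check=True)
    return result.stdout
```

For example, `run_in_pod("pod-vol-pvc", ["ls", "/usr/share/nginx/html"])` should return the same listing as an interactive `ls` in that directory.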

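4.2 Accessing the shared storage requested through the PVC

Nominally, the storage available to the pod is limited to the capacity of the PV bound to its claim (4Gi for pv004). For NFS-backed PVs, however, K8S uses this capacity only to match claims to volumes and does not enforce it; a hard limit has to be set on the NFS server itself, for example with filesystem quotas.

```
cd /usr/share/nginx/html/
/usr/share/nginx/html # ls
v4.txt
```

The v4.txt file created earlier on the NFS server is visible, which confirms that the pod's /usr/share/nginx/html directory is backed by /nfs/volumes/v4 through pv004.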
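Because the capacity of an NFS-backed PV is generally not enforced by K8S, it can be useful to check actual usage on the NFS server. A Python sketch, assuming it runs on the NFS server with access to /nfs/volumes/v4 and using the 4Gi capacity of pv004; the function names are hypothetical, and the sketch sums apparent file sizes rather than allocated disk blocks:

```python
# Sketch: compare the data stored in an exported directory with the PV's
# nominal capacity. Path and 4Gi figure are this article's values for pv004.
import os

GI = 2**30


def dir_usage_bytes(path: str) -> int:
    """Sum the apparent sizes of regular files under `path` (skips symlinks)."""
    total = 0
    for root, _dirs, files in os.walk(path):
        for name in files:
            full = os.path.join(root, name)
            if not os.path.islink(full):
                total += os.path.getsize(full)
    return total


def over_capacity(path: str, capacity_bytes: int) -> bool:
    """True if the directory holds more data than the PV advertises."""
    return dir_usage_bytes(path) > capacity_bytes


if __name__ == "__main__" and os.path.isdir("/nfs/volumes/v4"):
    used = dir_usage_bytes("/nfs/volumes/v4")
    print(f"v4 uses {used} bytes; over 4Gi: {over_capacity('/nfs/volumes/v4', 4 * GI)}")
```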

4.3 Generating files in shared storage

```
# Create a file in the mounted directory inside the pod
$ touch pod-v4.txt

# Check on the master (NFS server)
[root@k8s-master1 v4]# ls
pod-v4.txt  v4.txt
```

A file created in the pod's /usr/share/nginx/html/ directory is visible in /nfs/volumes/v4/ on the master.

Likewise, files created in /nfs/volumes/v4/ on the master are visible in the pod's /usr/share/nginx/html/ directory.

References:


https://blog.csdn.net/HiWangWenBing/article/details/122820381


https://www.cnblogs.com/yxh168/p/11031003.html