Preface

The previous article covered how to use pv/pvc and cephfs in k8s:

K8s (XI). Use of distributed storage Cephfs

Ceph storage has three storage interfaces:

Object storage: Ceph Object Gateway

Block device: RBD

File system: CephFS

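Kubernetes supports the latter two storage interfaces. According to the Kubernetes documentation, RBD volumes support the ReadWriteOnce and ReadOnlyMany access modes, while CephFS volumes also support ReadWriteMany.

In this article, we will test the use of ceph rbd as a persistent storage backend.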

RBD create test

The use of rbd is divided into three steps:

1. The server / client creates a block device image

2. The client maps the image into the Linux kernel. Once the kernel recognizes the device, it creates a device file under /dev

3. Format the block device and mount it for use

    # Create an image of the specified size
    [root@h020112 ~]# rbd create --size 10240000 rbd/test1
    [root@h020112 ~]# rbd info test1
    rbd image 'test1':
        size 10000 GB in 2560000 objects
        order 22 (4096 kB objects)
        block_name_prefix: rbd_data.31e3b6b8b4567
        format: 2
        features: layering, exclusive-lock, object-map, fast-diff, deep-flatten
        flags:
    # Before mapping into the kernel, check the kernel version first. In the
    # Jewel release the default image format is 2, with five features enabled
    # by default. Kernels 2.x and earlier need the format changed to 1 by hand;
    # on kernels before 4.x, object-map, fast-diff and deep-flatten must be
    # disabled before the image can be mapped. This test uses CentOS 7.4 with
    # kernel 3.10.
    [root@h020112 ~]# rbd feature disable test1 object-map fast-diff deep-flatten
    # Map into the kernel
    [root@h020112 ~]# rbd map test1
    [root@h020112 ~]# ls /dev/rbd0
    /dev/rbd0
    # Format and mount
    [root@h020112 ~]# mkdir /mnt/cephrbd
    [root@h020112 ~]# mkfs.ext4 -m0 /dev/rbd0
    [root@h020112 ~]# mount /dev/rbd0 /mnt/cephrbd/
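The `rbd info` output above reports 10000 GB for `--size 10240000`, which is consistent with the size being read as megabytes. If a 10 GB test image was the goal, the value would have been 10240. The sketch below only reproduces that arithmetic; the helper names `size_mb_to_gb` and `object_count` are made up for illustration and are not part of the rbd CLI.

```shell
# Hypothetical helpers (not rbd commands): sanity-check a --size value
# before creating an image, treating the value as megabytes.

# size_mb_to_gb SIZE_MB -> whole gigabytes
size_mb_to_gb() {
    echo $(( $1 / 1024 ))
}

# object_count SIZE_MB ORDER -> number of objects of 2^ORDER bytes each
object_count() {
    size_mb=$1
    order=$2
    echo $(( size_mb * 1024 * 1024 / (1 << order) ))
}

size_mb_to_gb 10240000       # the value used above
object_count 10240000 22     # order 22 means 4 MB objects
size_mb_to_gb 10240          # the value a 10 GB image would need
```

The first two results match the "10000 GB in 2560000 objects" line printed by `rbd info`.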

Using dynamic pv in Kubernetes

First, review the usage of cephfs in k8s:

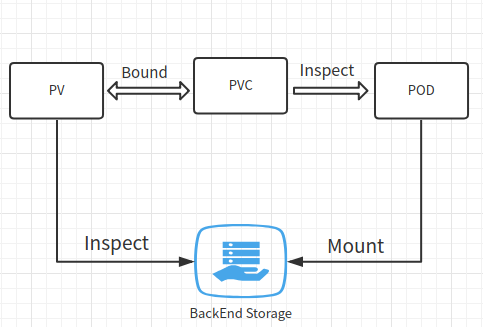

Binding workflow: the pv specifies the storage type and interface, the pvc binds to the pv, and the pod mounts the specified pvc. This one-to-one correspondence is a static pv.

The cephfs workflow from the previous article is one example.

So what is dynamic pv?

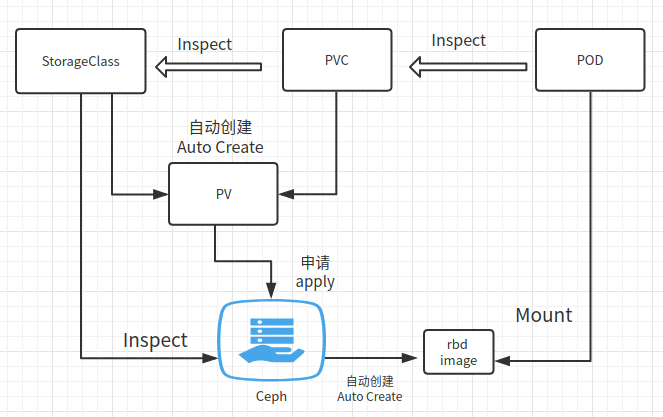

When no existing pv satisfies a pvc's request, a storage class can be used instead. Once the pvc references an sc, k8s dynamically creates a pv and sends a resource request to the corresponding storage backend.

In detail: first create the sc; a pvc then requests resources from that sc and the pv is created automatically, which achieves dynamic provisioning. In other words, through an object called a StorageClass, the storage system creates the pv and allocates storage resources automatically, according to what the pvc asks for.

Kubernetes pv supports rbd. The rbd test in the first part shows that the actual unit of storage is the image: an image must first be created on the ceph side before the pv/pvc can be created and then mounted in a pod. This is the standard static pv binding process. Its drawback is that one complete operation, mounting and using a storage resource, is split into two stages: carving out the rbd image on the ceph side, and binding the pv on the k8s side. This makes management more complex and gets in the way of automated operations.

Dynamic pv is therefore a good choice. For the ceph rbd interface, dynamic pv works as follows:

Based on the pvc and sc declarations, a pv is generated automatically, and a request is sent to the ceph backend specified by the sc, which creates an rbd image of the matching size. The two are then bound.

Work flow chart:

Let's start the demonstration

1. Create a secret

Refer to the previous article for key acquisition methods

    ~/mytest/ceph/rbd# cat ceph-secret-rbd.yaml
    apiVersion: v1
    kind: Secret
    metadata:
      name: ceph-secret-rbd
    type: "kubernetes.io/rbd"
    data:
      key: QVFCL3E1ZGIvWFdxS1JBQTUyV0ZCUkxldnRjQzNidTFHZXlVYnc9PQ==
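Values under a Secret's `data` field have to be base64-encoded. Here is a minimal sketch of producing and checking such a value, assuming the `base64` utility is installed. `encode_key` and `decode_key` are illustrative names, and the key shown is a placeholder rather than a real Ceph key.

```shell
# Hypothetical helpers for preparing the `key` field of the Secret.

encode_key() {
    # printf avoids the trailing newline that echo would add to the input;
    # tr strips the line wrapping some base64 implementations insert
    printf '%s' "$1" | base64 | tr -d '\n'
}

decode_key() {
    printf '%s' "$1" | base64 -d
}

# Placeholder standing in for the key obtained from the ceph cluster
encoded=$(encode_key 'example-ceph-key')
echo "$encoded"

# Round trip to confirm nothing was lost or added
decode_key "$encoded"; echo
```

A stray newline in the encoded key is a common cause of authentication failures, which is why the sketch uses `printf` rather than `echo`.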

2. Create sc

    ~/mytest/ceph/rbd# cat ceph-secret-rbd.yaml
    kind: StorageClass
    apiVersion: storage.k8s.io/v1
    metadata:
      name: cephrbd-test2
    provisioner: kubernetes.io/rbd
    reclaimPolicy: Retain
    parameters:
      monitors: 192.168.20.112:6789,192.168.20.113:6789,192.168.20.114:6789
      adminId: admin
      adminSecretName: ceph-secret-rbd
      pool: rbd
      userId: admin
      userSecretName: ceph-secret-rbd
      fsType: ext4
      imageFormat: "2"
      imageFeatures: "layering"

3. Create pvc

    ~/mytest/ceph/rbd# cat cephrbd-pvc.yaml
    kind: PersistentVolumeClaim
    apiVersion: v1
    metadata:
      name: cephrbd-pvc2
    spec:
      storageClassName: cephrbd-test2
      accessModes:
      - ReadWriteOnce
      resources:
        requests:
          storage: 20Gi

4. Create a deploy whose container mounts the pvc created above

    ~/mytest/ceph/rbd# cat deptest13dbdm.yaml
    apiVersion: extensions/v1beta1
    kind: Deployment
    metadata:
      labels:
        app: deptest13dbdm
      name: deptest13dbdm
      namespace: default
    spec:
      replicas: 1
      selector:
        matchLabels:
          app: deptest13dbdm
      strategy:
        rollingUpdate:
          maxSurge: 1
          maxUnavailable: 1
        type: RollingUpdate
      template:
        metadata:
          labels:
            app: deptest13dbdm
        spec:
          containers:
          - image: registry.xxx.com:5000/mysql:56v6
            imagePullPolicy: Always
            name: deptest13dbdm
            ports:
            - containerPort: 3306
              protocol: TCP
            resources:
              limits:
                memory: 16Gi
              requests:
                memory: 512Mi
            terminationMessagePath: /dev/termination-log
            terminationMessagePolicy: File
            volumeMounts:
            - mountPath: /var/lib/mysql
              name: datadir
              subPath: deptest13dbdm
          dnsPolicy: ClusterFirst
          restartPolicy: Always
          schedulerName: default-scheduler
          securityContext: {}
          terminationGracePeriodSeconds: 30
          volumes:
          - name: datadir
            persistentVolumeClaim:
              claimName: cephrbd-pvc2

This is where you hit a major pitfall:

    ~# kubectl describe pvc cephrbd-pvc2
    ...
    Error creating rbd image: executable file not found in $PATH

This happens because kube-controller-manager has to call the rbd client to create the rbd image. ceph-common is installed on every node of the cluster, but the cluster was deployed with kubeadm, so kube-controller-manager runs as a container, and that container does not include the rbd executable. A solution turned up later in this issue:

Github issue

The fix is to use the external rbd-provisioner, which supplies the rbd execution entry point that kube-controller-manager lacks. Note that the image version specified in the issue's demo is buggy: the file system format used after the rbd is mounted cannot be specified. Use the demo from the latest version instead. Project address: rbd-provisioner
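To confirm this diagnosis in a given environment, it is enough to test whether `rbd` resolves on `$PATH` there. The helper below is a minimal sketch and `has_binary` is a made-up name. Run on a node it should succeed once ceph-common is installed. Run inside the kube-controller-manager container (for example through `kubectl exec`, assuming the image ships a shell at all) it would be expected to fail in the setup described above.

```shell
# has_binary NAME: succeed if NAME resolves to an executable on $PATH
has_binary() {
    command -v "$1" >/dev/null 2>&1
}

if has_binary rbd; then
    echo "rbd found at $(command -v rbd)"
else
    echo "rbd not found in PATH"
fi
```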

Full demo yaml file:

    root@h009027:~/mytest/ceph/rbd/rbd-provisioner# cat rbd-provisioner.yaml
    kind: ClusterRole
    apiVersion: rbac.authorization.k8s.io/v1
    metadata:
      name: rbd-provisioner
    rules:
      - apiGroups: [""]
        resources: ["persistentvolumes"]
        verbs: ["get", "list", "watch", "create", "delete"]
      - apiGroups: [""]
        resources: ["persistentvolumeclaims"]
        verbs: ["get", "list", "watch", "update"]
      - apiGroups: ["storage.k8s.io"]
        resources: ["storageclasses"]
        verbs: ["get", "list", "watch"]
      - apiGroups: [""]
        resources: ["events"]
        verbs: ["create", "update", "patch"]
      - apiGroups: [""]
        resources: ["services"]
        resourceNames: ["kube-dns","coredns"]
        verbs: ["list", "get"]
    ---
    kind: ClusterRoleBinding
    apiVersion: rbac.authorization.k8s.io/v1
    metadata:
      name: rbd-provisioner
    subjects:
      - kind: ServiceAccount
        name: rbd-provisioner
        namespace: default
    roleRef:
      kind: ClusterRole
      name: rbd-provisioner
      apiGroup: rbac.authorization.k8s.io
    ---
    apiVersion: rbac.authorization.k8s.io/v1
    kind: Role
    metadata:
      name: rbd-provisioner
    rules:
    - apiGroups: [""]
      resources: ["secrets"]
      verbs: ["get"]
    - apiGroups: [""]
      resources: ["endpoints"]
      verbs: ["get", "list", "watch", "create", "update", "patch"]
    ---
    apiVersion: rbac.authorization.k8s.io/v1
    kind: RoleBinding
    metadata:
      name: rbd-provisioner
    roleRef:
      apiGroup: rbac.authorization.k8s.io
      kind: Role
      name: rbd-provisioner
    subjects:
      - kind: ServiceAccount
        name: rbd-provisioner
        namespace: default
    ---
    apiVersion: extensions/v1beta1
    kind: Deployment
    metadata:
      name: rbd-provisioner
    spec:
      replicas: 1
      strategy:
        type: Recreate
      template:
        metadata:
          labels:
            app: rbd-provisioner
        spec:
          containers:
          - name: rbd-provisioner
            image: quay.io/external_storage/rbd-provisioner:latest
            env:
            - name: PROVISIONER_NAME
              value: ceph.com/rbd
          serviceAccount: rbd-provisioner
    ---
    apiVersion: v1
    kind: ServiceAccount
    metadata:
      name: rbd-provisioner

After deploying it, recreate the sc and pvc above, with the sc's provisioner set to ceph.com/rbd so that it matches the PROVISIONER_NAME of the rbd-provisioner. The pod now runs normally. Checking the pvc shows that it triggered the automatic creation of a pv and is bound to it, and the StorageClass of that pv is the newly created sc:

    # pod
    ~/mytest/ceph/rbd/rbd-provisioner# kubectl get pods | grep deptest13
    deptest13dbdm-c9d5bfb7c-rzzv6   2/2   Running   0   6h
    # pvc
    ~/mytest/ceph/rbd/rbd-provisioner# kubectl get pvc cephrbd-pvc2
    NAME           STATUS   VOLUME                                     CAPACITY   ACCESS MODES   STORAGECLASS    AGE
    cephrbd-pvc2   Bound    pvc-d976c2bf-c2fb-11e8-878f-141877468256   20Gi       RWO            cephrbd-test2   23h
    # Automatically created pv
    ~/mytest/ceph/rbd/rbd-provisioner# kubectl get pv pvc-d976c2bf-c2fb-11e8-878f-141877468256
    NAME                                       CAPACITY   ACCESS MODES   RECLAIM POLICY   STATUS   CLAIM                  STORAGECLASS    REASON   AGE
    pvc-d976c2bf-c2fb-11e8-878f-141877468256   20Gi       RWO            Retain           Bound    default/cephrbd-pvc2   cephrbd-test2            23h
    # sc
    ~/mytest/ceph/rbd/rbd-provisioner# kubectl get sc cephrbd-test2
    NAME            PROVISIONER    AGE
    cephrbd-test2   ceph.com/rbd   23h
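The `kubectl get sc` output above lists `ceph.com/rbd` as the provisioner, whereas the StorageClass defined earlier named `kubernetes.io/rbd`. The recreated StorageClass is not shown in the article, so the following is a sketch of what it plausibly looked like: every parameter is copied from the earlier definition and only `provisioner` is changed. The output path is an arbitrary choice.

```shell
# Write a StorageClass manifest that points at the external provisioner.
# Assumption: parameters are unchanged from the earlier StorageClass;
# only the provisioner name differs.
out="${TMPDIR:-/tmp}/cephrbd-sc-external.yaml"

cat > "$out" <<'EOF'
kind: StorageClass
apiVersion: storage.k8s.io/v1
metadata:
  name: cephrbd-test2
provisioner: ceph.com/rbd
reclaimPolicy: Retain
parameters:
  monitors: 192.168.20.112:6789,192.168.20.113:6789,192.168.20.114:6789
  adminId: admin
  adminSecretName: ceph-secret-rbd
  pool: rbd
  userId: admin
  userSecretName: ceph-secret-rbd
  fsType: ext4
  imageFormat: "2"
  imageFeatures: "layering"
EOF

echo "wrote $out"
# To use it, delete the old StorageClass and PVC, then run:
#   kubectl apply -f "$out"
```

The old objects have to be deleted first because the provisioner of an existing StorageClass cannot be changed in place.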

Go back to the ceph node to see whether the rbd image was created automatically:

    ~# rbd ls
    kubernetes-dynamic-pvc-d988cfb1-c2fb-11e8-b2df-0a58ac1a084e
    test1
    ~# rbd info kubernetes-dynamic-pvc-d988cfb1-c2fb-11e8-b2df-0a58ac1a084e
    rbd image 'kubernetes-dynamic-pvc-d988cfb1-c2fb-11e8-b2df-0a58ac1a084e':
        size 20480 MB in 5120 objects
        order 22 (4096 kB objects)
        block_name_prefix: rbd_data.47d0b6b8b4567
        format: 2
        features: layering
        flags:

The dynamic creation of the pv and the rbd image succeeded.

Mount test

Recall the access modes supported by kubernetes, listed at the top: RBD does not support ReadWriteMany. The k8s philosophy is to treat containers like cattle rather than pets: pods may scale out and in at any time and drift between nodes. RBD, which cannot be mounted from multiple nodes, is therefore clearly unsuitable as shared file storage for applications spread across several nodes. The tests below verify this.

Multipoint mount test

Create a new deploy and mount the same pvc:

    root@h009027:~/mytest/ceph/rbd# cat deptest14dbdm.yaml
    apiVersion: extensions/v1beta1
    kind: Deployment
    metadata:
      annotations:
        deployment.kubernetes.io/revision: "4"
      creationTimestamp: 2018-09-26T07:32:41Z
      generation: 4
      labels:
        app: deptest14dbdm
        domain: dev.deptest14dbm.kokoerp.com
        nginx_server: ""
      name: deptest14dbdm
      namespace: default
      resourceVersion: "26647927"
      selfLink: /apis/extensions/v1beta1/namespaces/default/deployments/deptest14dbdm
      uid: 5a1a62fe-c15e-11e8-878f-141877468256
    spec:
      replicas: 1
      selector:
        matchLabels:
          app: deptest14dbdm
      strategy:
        rollingUpdate:
          maxSurge: 1
          maxUnavailable: 1
        type: RollingUpdate
      template:
        metadata:
          creationTimestamp: null
          labels:
            app: deptest14dbdm
            domain: dev.deptest14dbm.kokoerp.com
            nginx_server: ""
        spec:
          containers:
          - env:
            - name: DB_NAME
              value: deptest14dbdm
            image: registry.youkeshu.com:5000/mysql:56v6
            imagePullPolicy: Always
            name: deptest14dbdm
            ports:
            - containerPort: 3306
              protocol: TCP
            resources:
              limits:
                memory: 16Gi
              requests:
                memory: 512Mi
            terminationMessagePath: /dev/termination-log
            terminationMessagePolicy: File
            volumeMounts:
            - mountPath: /var/lib/mysql
              name: datadir
              subPath: deptest14dbdm
          - env:
            - name: DATA_SOURCE_NAME
              value: exporter:6qQidF3F5wNhrLC0@(127.0.0.1:3306)/
            image: registry.youkeshu.com:5000/mysqld-exporter:v0.10
            imagePullPolicy: Always
            name: mysql-exporter
            ports:
            - containerPort: 9104
              protocol: TCP
            resources: {}
            terminationMessagePath: /dev/termination-log
            terminationMessagePolicy: File
          dnsPolicy: ClusterFirst
          nodeSelector:
            192.168.20.109: ""
          restartPolicy: Always
          schedulerName: default-scheduler
          securityContext: {}
          terminationGracePeriodSeconds: 30
          volumes:
          - name: datadir
            persistentVolumeClaim:
              claimName: cephrbd-pvc2

Use nodeSelector to pin deptest13 and deptest14 to the same node:

    root@h009027:~/mytest/ceph/rbd# kubectl get pods -o wide | grep deptest1[3-4]
    deptest13dbdm-c9d5bfb7c-rzzv6    2/2   Running   0   6h   172.26.8.82   h020109
    deptest14dbdm-78b8bb7995-q84dk   2/2   Running   0   2m   172.26.8.84   h020109

Clearly, multiple pods on the same node can share an RBD image. The mount entries on the worker node hosting the containers confirm this:

    192.168.20.109:~# mount | grep rbd
    /dev/rbd0 on /var/lib/kubelet/plugins/kubernetes.io/rbd/rbd/rbd-image-kubernetes-dynamic-pvc-d988cfb1-c2fb-11e8-b2df-0a58ac1a084e type ext4 (rw,relatime,stripe=1024,data=ordered)
    /dev/rbd0 on /var/lib/kubelet/pods/68b8e3c5-c38d-11e8-878f-141877468256/volumes/kubernetes.io~rbd/pvc-d976c2bf-c2fb-11e8-878f-141877468256 type ext4 (rw,relatime,stripe=1024,data=ordered)
    /dev/rbd0 on /var/lib/kubelet/pods/1e9b04b1-c3c3-11e8-878f-141877468256/volumes/kubernetes.io~rbd/pvc-d976c2bf-c2fb-11e8-878f-141877468256 type ext4 (rw,relatime,stripe=1024,data=ordered)
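In the mount listing above, the same device `/dev/rbd0` appears three times: once under the kubelet `plugins` directory and once under each pod's volume directory. A small sketch for summarising such output follows. `count_rbd_mounts` is a hypothetical helper that reads `mount` output on stdin, and the sample input is a shortened stand-in for the real paths.

```shell
# count_rbd_mounts: read `mount` output on stdin and print
# "<device> <number of mount points>" for every /dev/rbdN device
count_rbd_mounts() {
    awk '$1 ~ /^\/dev\/rbd[0-9]+$/ { n[$1]++ }
         END { for (d in n) print d, n[d] }'
}

# Sample input shaped like the listing above (paths shortened)
count_rbd_mounts <<'EOF'
/dev/rbd0 on /var/lib/kubelet/plugins/kubernetes.io/rbd/rbd/rbd-image-x type ext4 (rw,relatime)
/dev/rbd0 on /var/lib/kubelet/pods/pod-a/volumes/kubernetes.io~rbd/pvc-x type ext4 (rw,relatime)
/dev/rbd0 on /var/lib/kubelet/pods/pod-b/volumes/kubernetes.io~rbd/pvc-x type ext4 (rw,relatime)
/dev/sda1 on / type ext4 (rw,relatime)
EOF
```

On a worker node the same function can be fed live data with `mount | count_rbd_mounts`. A count of three for one device corresponds to one kubelet-level mount plus two pods using it.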

Modify the nodeselector of the new deploy to point to other nodes:

    ~/mytest/ceph/rbd# kubectl get pods -o wide| grep deptest14db
    deptest14dbdm-78b8bb7995-q84dk   0/2   Terminating         0   7m    172.26.8.84   h020109
    deptest14dbdm-79fd495579-rkl92   0/2   ContainerCreating   0   49s   <none>        h009028
    # kubectl describe pod deptest14dbdm-79fd495579-rkl92
    ...
    Warning  FailedAttachVolume  1m  attachdetach-controller  Multi-Attach error for volume "pvc-d976c2bf-c2fb-11e8-878f-141877468256" Volume is already exclusively attached to one node and can't be attached to another

The pod stays stuck in the ContainerCreating state. The error message states clearly that the controller does not support attaching this pv to more than one node.

Delete the temporarily added deploy before proceeding to the next test

Rolling update test

The update method specified in the deploy file of deptest13dbdm is rolling update:

    strategy:
      rollingUpdate:
        maxSurge: 1
        maxUnavailable: 1
      type: RollingUpdate

Next, let's reschedule the running pod from one node to another and see whether the rbd can be migrated to the new node and mounted there.

    # Modify nodeselector
    root@h009027:~/mytest/ceph/rbd# kubectl edit deploy deptest13dbdm
    deployment "deptest13dbdm" edited
    # Observe the migration process of pod scheduling
    root@h009027:~/mytest/ceph/rbd# kubectl get pods -o wide| grep deptest13
    deptest13dbdm-6999f5757c-fk9dp   0/2   ContainerCreating   0   4s   <none>        h009028
    deptest13dbdm-c9d5bfb7c-rzzv6    2/2   Terminating         0   6h   172.26.8.82   h020109
    root@h009027:~/mytest/ceph/rbd# kubectl describe pod deptest13dbdm-6999f5757c-fk9dp
    ...
    Events:
      Type     Reason                 Age                From               Message
      ----     ------                 ----               ----               -------
      Normal   Scheduled              2m                 default-scheduler  Successfully assigned deptest13dbdm-6999f5757c-fk9dp to h009028
      Normal   SuccessfulMountVolume  2m                 kubelet, h009028   MountVolume.SetUp succeeded for volume "default-token-7q8pq"
      Warning  FailedMount            45s                kubelet, h009028   Unable to mount volumes for pod "deptest13dbdm-6999f5757c-fk9dp_default(28e9cdd6-c3c5-11e8-878f-141877468256)": timeout expired waiting for volumes to attach/mount for pod "default"/"deptest13dbdm-6999f5757c-fk9dp". list of unattached/unmounted volumes=[datadir]
      Normal   SuccessfulMountVolume  35s (x2 over 36s)  kubelet, h009028   MountVolume.SetUp succeeded for volume "pvc-d976c2bf-c2fb-11e8-878f-141877468256"
      Normal   Pulling                27s                kubelet, h009028   pulling image "registry.youkeshu.com:5000/mysql:56v6"
      Normal   Pulled                 27s                kubelet, h009028   Successfully pulled image "registry.youkeshu.com:5000/mysql:56v6"
      Normal   Pulling                27s                kubelet, h009028   pulling image "registry.youkeshu.com:5000/mysqld-exporter:v0.10"
      Normal   Pulled                 26s                kubelet, h009028   Successfully pulled image "registry.youkeshu.com:5000/mysqld-exporter:v0.10"
      Normal   Created                26s                kubelet, h009028   Created container
      Normal   Started                26s                kubelet, h009028   Started container
    root@h009027:~/mytest/ceph/rbd# kubectl get pods -o wide| grep deptest13
    deptest13dbdm-6999f5757c-fk9dp   2/2   Running   0   3m   172.26.0.87   h009028
    # Check the rbd block device mapped in the kernel on the original node.
    # The device file has disappeared and the mount has been removed.
    root@h020109:~/tpcc-mysql# ls /dev/rbd*
    ls: cannot access '/dev/rbd*': No such file or directory
    root@h020109:~/tpcc-mysql# mount | grep rbd
    root@h020109:~/tpcc-mysql#

As shown, a rolling update that moves the pod between nodes completes automatically. The whole rescheduling takes a while, nearly 3 minutes, because it involves at least the following steps:

1. Terminate the original pod and release resources

2. Unmount the rbd from the original node and release the kernel mapping

3. Map rbd image to the new node kernel and mount rbd to the file directory

4. Create a pod and mount the specified directory

Conclusion:

ceph rbd does not support mounting from multiple nodes, but it does support rolling updates.

Follow-up

Now that both ways of connecting k8s to distributed storage, cephfs and ceph rbd, have been tested, the next article will benchmark database performance on the two and discuss which scenarios each one suits.

Reprinted from https://blog.csdn.net/ywq935/article/details/82900200