Environment requirements

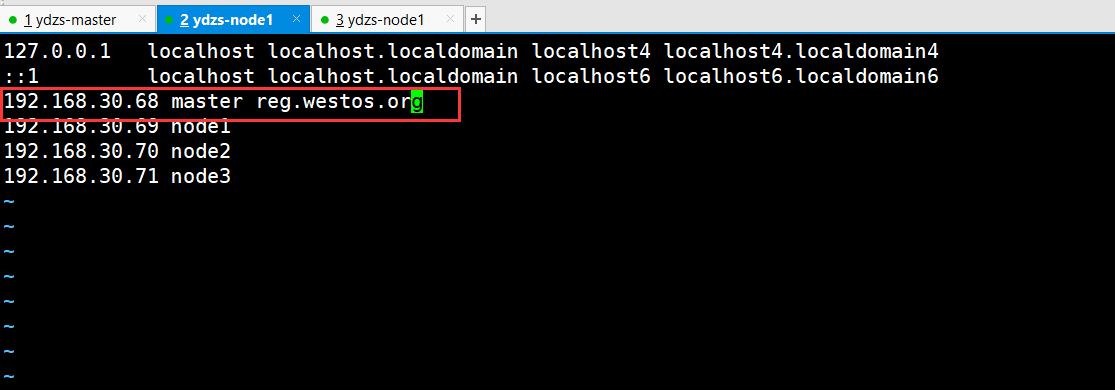

Three machines are prepared here. The machine at 192.168.30.68 also hosts the Harbor registry.

[root@master1 yum.repos.d]# cat /etc/hosts

127.0.0.1 localhost localhost.localdomain localhost4 localhost

127.0.0.1 master14.localdomain4

::1 localhost localhost.localdomain localhost6 localhost6.localdomain6

192.168.30.68 master reg.westos.org

192.168.30.69 node1

192.168.30.70 node2

192.168.30.71 node3

A compatible Linux host. The Kubernetes project provides general instructions for Debian-based and Red Hat-based Linux distributions, and for distributions without a package manager.

2 GB or more of RAM per machine (any less leaves little room for your applications)

2 CPU cores or more

Full network connectivity between all machines in the cluster (a public or private network is fine)

Swap disabled. The kubelet requires swap to be turned off in order to work properly.
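The requirements above can be checked before going further. The following is a minimal sketch, assuming a Linux host that exposes /proc/meminfo and has the coreutils `nproc` command; the function name `meets_minimum` is a hypothetical helper, not part of kubeadm. Note that MemTotal normally reads slightly below the installed RAM, so a machine with exactly 2 GB may be reported as just under the threshold.

```shell
#!/usr/bin/env bash
# meets_minimum CPUS MEM_KB: succeeds when both values reach the minimums
# listed above (2 CPU cores, 2 GB of RAM expressed in kB).
meets_minimum() {
    local cpus="$1" mem_kb="$2"
    [ "$cpus" -ge 2 ] && [ "$mem_kb" -ge $((2 * 1024 * 1024)) ]
}

# Only inspect the host when the Linux interfaces we rely on are present.
if [ -r /proc/meminfo ] && command -v nproc >/dev/null 2>&1; then
    cpus=$(nproc)
    mem_kb=$(awk '/^MemTotal:/ {print $2}' /proc/meminfo)
    swap_kb=$(awk '/^SwapTotal:/ {print $2}' /proc/meminfo)

    if meets_minimum "$cpus" "$mem_kb"; then
        echo "CPU and memory look sufficient: ${cpus} cores, ${mem_kb} kB"
    else
        echo "Below the suggested minimum: ${cpus} cores, ${mem_kb} kB"
    fi

    if [ "${swap_kb:-0}" -eq 0 ]; then
        echo "swap is off"
    else
        echo "swap is still on (${swap_kb} kB)"
    fi
fi
```

Running this on each machine gives a quick first check before installing anything.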

[root@master1 yum.repos.d]# free -m #View memory and swap usage

total used free shared buff/cache available

Mem: 7821 1632 835 49 5353 5832

Swap: 0 0 0

[root@master1 yum.repos.d]# swapoff -a #Turn off swap

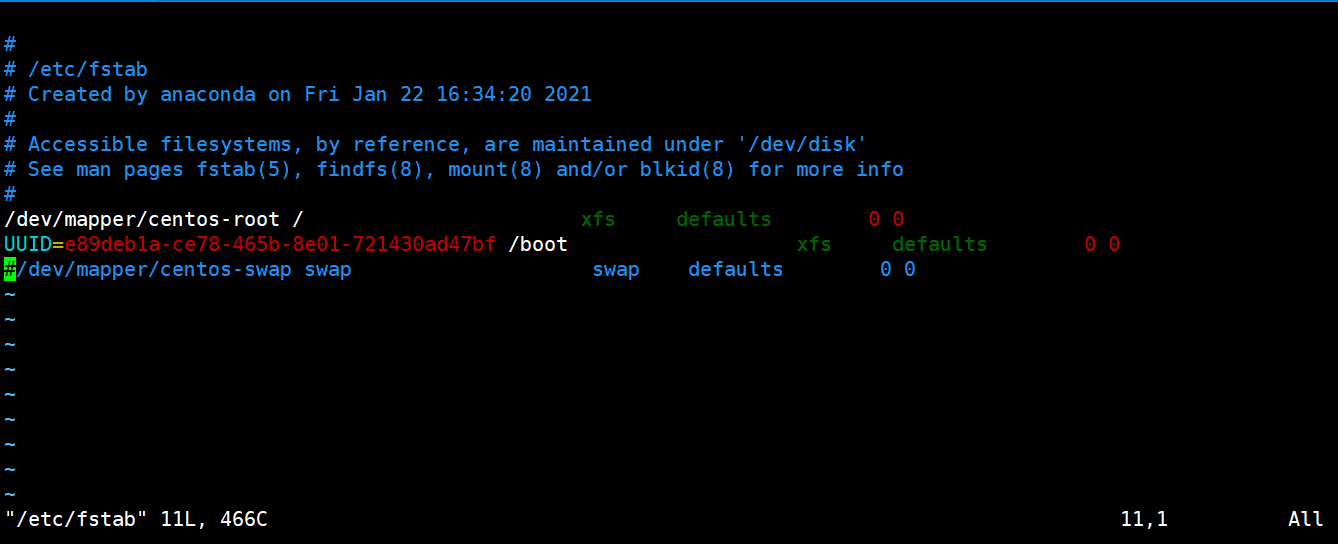

[root@master1 yum.repos.d]# vim /etc/fstab #Comment out the swap line in this file so swap stays off after a reboot
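When the same change has to be made on several machines, editing the file by hand gets tedious. The following is a minimal sketch of a non-interactive alternative, assuming GNU sed and an fstab whose swap entry contains `swap` as a whitespace-delimited field; the function name `comment_out_swap` and the sample device names are hypothetical. The demo runs on a scratch file rather than on the real /etc/fstab.

```shell
#!/usr/bin/env bash
# comment_out_swap FILE: prefix every active (non-comment) swap entry with '#'.
comment_out_swap() {
    local fstab="$1"
    # Address: lines not starting with '#' that contain a standalone "swap" field.
    sed -i '/^[^#].*[[:space:]]swap[[:space:]]/ s/^/#/' "$fstab"
}

# Demo on a scratch copy with two illustrative entries.
demo=$(mktemp)
printf '%s\n' \
    '/dev/mapper/centos-root / xfs defaults 0 0' \
    '/dev/mapper/centos-swap swap swap defaults 0 0' > "$demo"
comment_out_swap "$demo"
cat "$demo"
```

On a real host the call would be `comment_out_swap /etc/fstab`, ideally after taking a backup copy of the file.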

Preparation

Disable the firewall

systemctl stop firewalld

systemctl disable firewalld

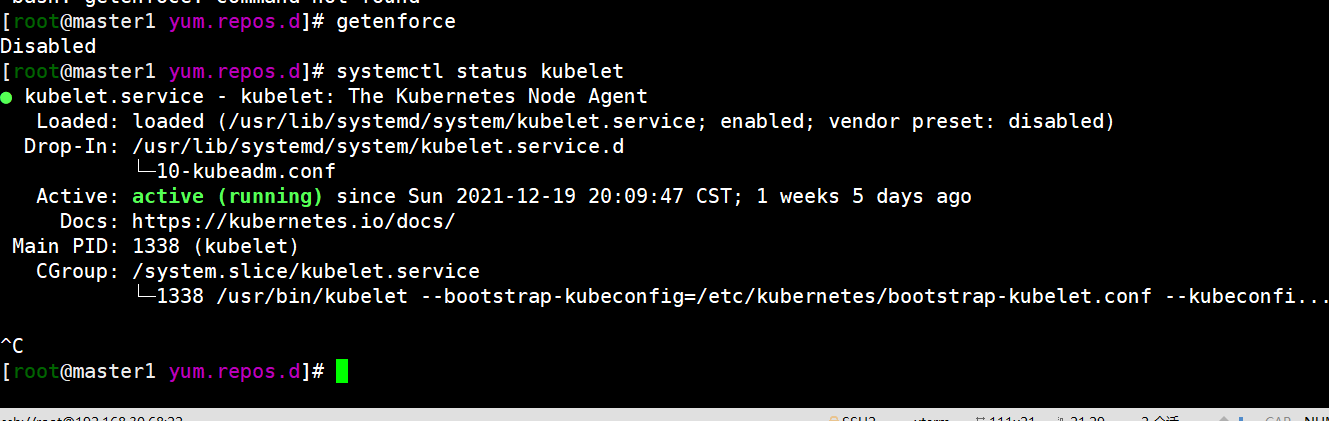

#Set SELinux to permissive mode (equivalent to disabling it)

setenforce 0

sed -i 's/^SELINUX=enforcing$/SELINUX=permissive/' /etc/selinux/config

Create the file /etc/sysctl.d/k8s.conf and add the following:

net.bridge.bridge-nf-call-ip6tables = 1

net.bridge.bridge-nf-call-iptables = 1

net.ipv4.ip_forward = 1

The net.bridge.* settings above only exist once the br_netfilter kernel module is loaded, so load this module:

modprobe br_netfilter

Execute the following command to make the modification effective:

sysctl -p /etc/sysctl.d/k8s.conf
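The kernel settings above can also be written in one scripted step. This is a minimal sketch, not the author's method: the function name `write_k8s_kernel_config` and its ROOT argument are hypothetical, and the /etc/modules-load.d/k8s.conf file is an addition that the original steps do not include. It relies on systemd reading that directory at boot, so that br_netfilter is loaded again after a restart.

```shell
#!/usr/bin/env bash
# write_k8s_kernel_config ROOT: write the sysctl and module-load files under ROOT.
# Pass an empty string to write to the real /etc; pass a scratch directory to try it out.
write_k8s_kernel_config() {
    local root="${1:-}"
    mkdir -p "$root/etc/sysctl.d" "$root/etc/modules-load.d"
    cat > "$root/etc/sysctl.d/k8s.conf" <<'EOF'
net.bridge.bridge-nf-call-ip6tables = 1
net.bridge.bridge-nf-call-iptables = 1
net.ipv4.ip_forward = 1
EOF
    # Addition to the original steps: load br_netfilter automatically at boot.
    echo br_netfilter > "$root/etc/modules-load.d/k8s.conf"
}

# Demo in a scratch directory so nothing on the host is changed.
scratch=$(mktemp -d)
write_k8s_kernel_config "$scratch"
cat "$scratch/etc/sysctl.d/k8s.conf"
```

On a real node, `write_k8s_kernel_config ""` followed by the modprobe and sysctl commands shown above would apply the same settings.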

Install docker

# Step 1: install some necessary system tools

yum install -y yum-utils device-mapper-persistent-data lvm2

# Step 2: add software source information

yum-config-manager --add-repo https://mirrors.aliyun.com/docker-ce/linux/centos/docker-ce.repo

# Step 3: point the repository file at the Alibaba Cloud mirror

sed -i 's+download.docker.com+mirrors.aliyun.com/docker-ce+' /etc/yum.repos.d/docker-ce.repo

# Step 4: update and install docker CE

yum makecache fast

sudo yum -y install docker-ce

# Step 5: start the Docker service

systemctl start docker

systemctl enable docker

Configure a registry mirror

mkdir -p /etc/docker # Create this directory if it does not exist, then add the daemon.json file

vi /etc/docker/daemon.json

{

"exec-opts": ["native.cgroupdriver=systemd"],

"log-driver": "json-file",

"log-opts": {

"max-size": "100m"

},

"storage-driver": "overlay2",

"registry-mirrors" : [

"https://ot2k4d59.mirror.aliyuncs.com/"

]

}
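For unattended setups, the same file can be written without opening an editor. This is a minimal sketch under stated assumptions: the function name `write_docker_daemon_json` is hypothetical, the JSON body is the one shown above, and the syntax check only runs if python3 happens to be installed. The demo writes into a scratch directory.

```shell
#!/usr/bin/env bash
# write_docker_daemon_json TARGET: write the daemon.json shown above to TARGET.
write_docker_daemon_json() {
    local target="$1"
    mkdir -p "$(dirname "$target")"
    cat > "$target" <<'EOF'
{
  "exec-opts": ["native.cgroupdriver=systemd"],
  "log-driver": "json-file",
  "log-opts": {
    "max-size": "100m"
  },
  "storage-driver": "overlay2",
  "registry-mirrors": [
    "https://ot2k4d59.mirror.aliyuncs.com/"
  ]
}
EOF
}

# Demo in a scratch directory instead of /etc/docker.
scratch=$(mktemp -d)
write_docker_daemon_json "$scratch/docker/daemon.json"

# Optional syntax check, only when python3 is available on the machine.
if command -v python3 >/dev/null 2>&1; then
    python3 -m json.tool "$scratch/docker/daemon.json" >/dev/null && echo "daemon.json parses as JSON"
fi
```

On a real node the target would be /etc/docker/daemon.json.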

Then we'll restart docker

systemctl restart docker

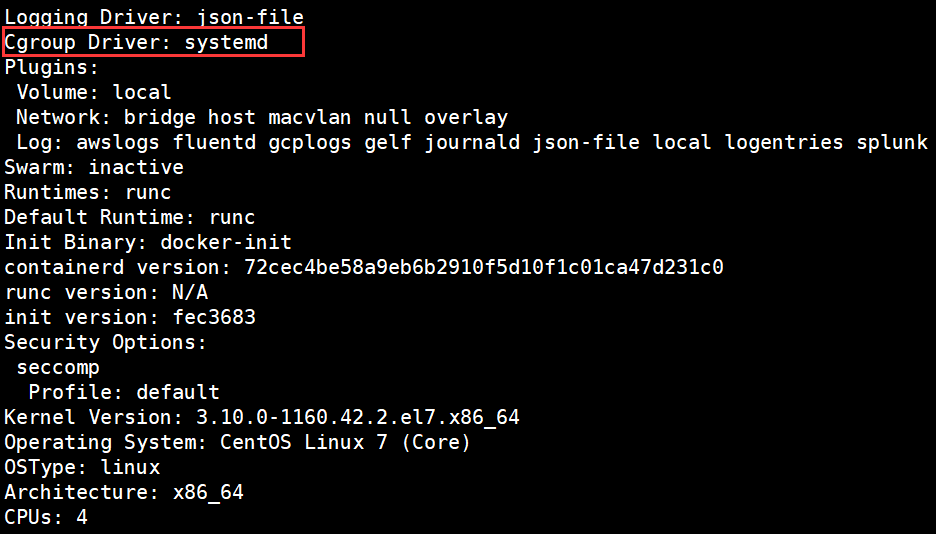

#Run docker info and check that the Cgroup Driver is systemd

Install k8s

On Red Hat based (CentOS) releases, use the Alibaba Cloud repository:

cat <<EOF > /etc/yum.repos.d/kubernetes.repo

[kubernetes]

name=Kubernetes

baseurl=https://mirrors.aliyun.com/kubernetes/yum/repos/kubernetes-el7-x86_64/

enabled=1

gpgcheck=1

repo_gpgcheck=1

gpgkey=https://mirrors.aliyun.com/kubernetes/yum/doc/yum-key.gpg https://mirrors.aliyun.com/kubernetes/yum/doc/rpm-package-key.gpg

EOF

yum install -y kubelet kubeadm kubectl

systemctl enable kubelet && systemctl start kubelet

The above steps need to be done on all three nodes.

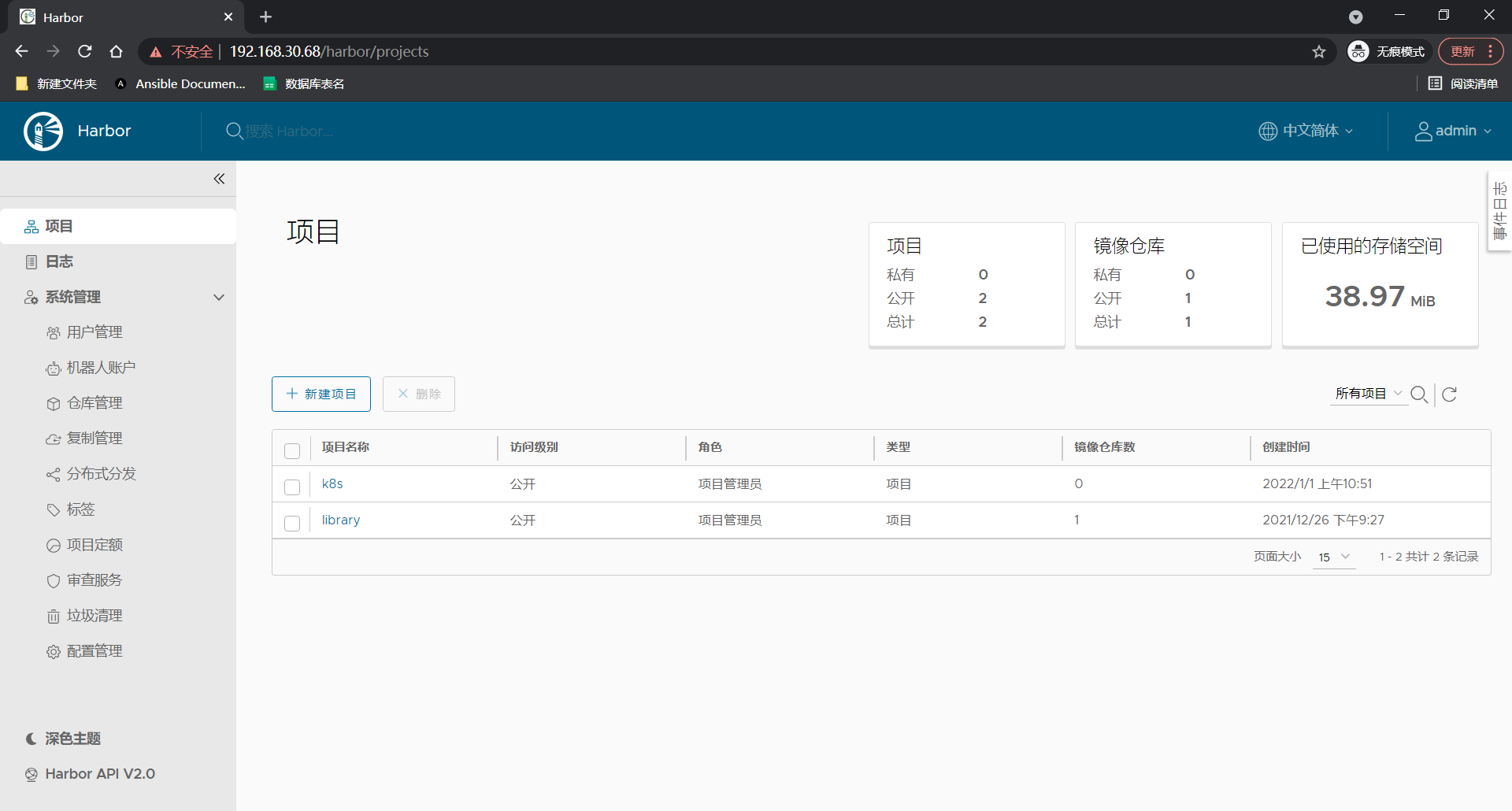

Because services in a production environment are deployed in batches, a private registry is useful here.

We push the images pulled on the master node to the private registry so that the other nodes can pull them directly.

PS: if the Harbor registry has not been set up yet, set it up first (see: Construction of the Harbor registry).

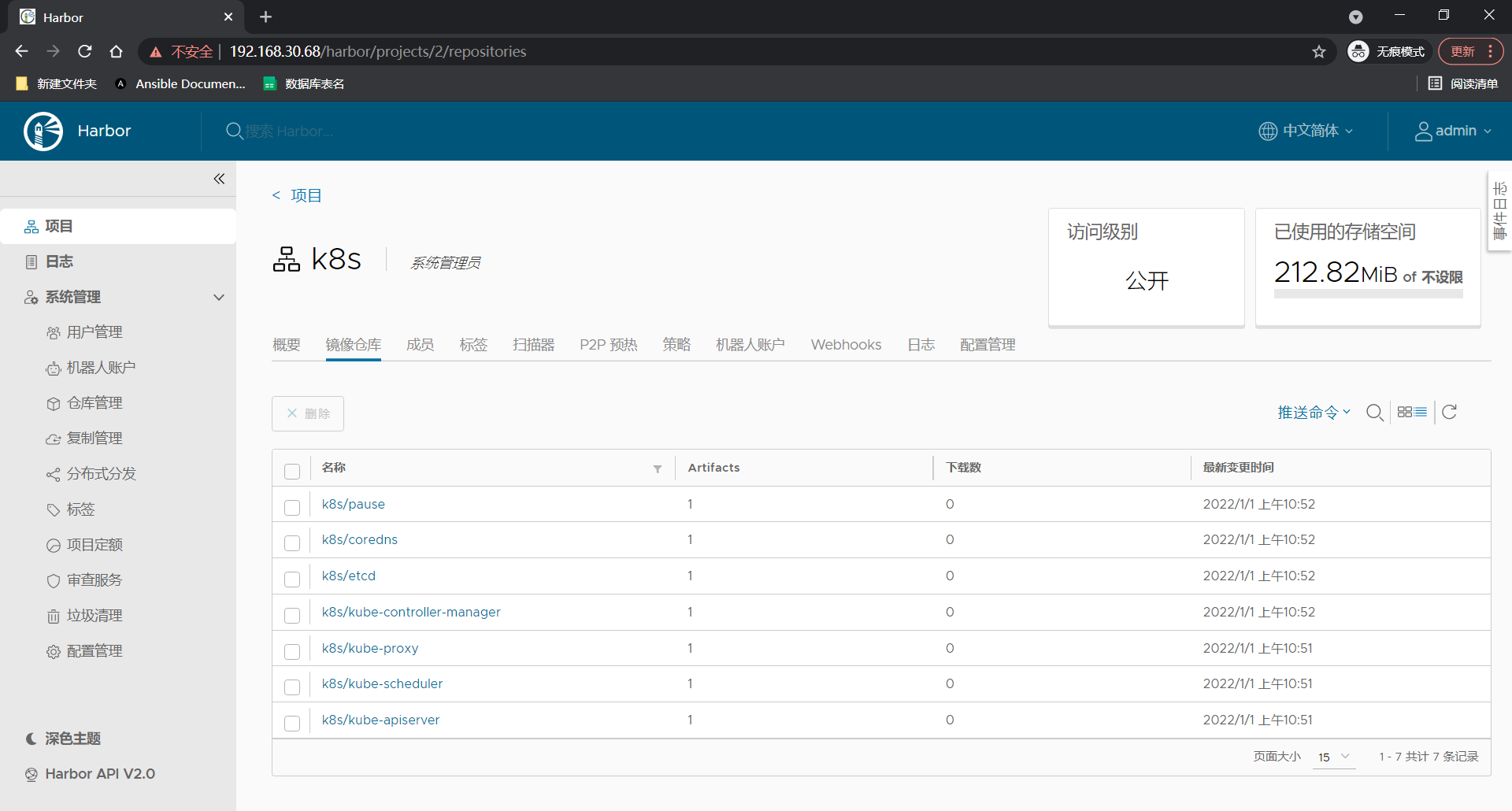

Create a k8s project in Harbor in advance.

PS: if the user name and password are not accepted during setup, check the configuration file and re-run install.sh.

Pull the images

[root@master1 yum.repos.d]# kubeadm config images pull --image-repository registry.aliyuncs.com/google_containers

I1231 22:13:31.956040 20707 version.go:255] remote version is much newer: v1.23.1; falling back to: stable-1.22

[config/images] Pulled registry.aliyuncs.com/google_containers/kube-apiserver:v1.22.5

[config/images] Pulled registry.aliyuncs.com/google_containers/kube-controller-manager:v1.22.5

[config/images] Pulled registry.aliyuncs.com/google_containers/kube-scheduler:v1.22.5

[config/images] Pulled registry.aliyuncs.com/google_containers/kube-proxy:v1.22.5

[config/images] Pulled registry.aliyuncs.com/google_containers/pause:3.5

[config/images] Pulled registry.aliyuncs.com/google_containers/etcd:3.5.0-0

[config/images] Pulled registry.aliyuncs.com/google_containers/coredns:v1.8.4

[root@master1 yum.repos.d]# docker images|grep ^reg|grep -v nginx

registry.aliyuncs.com/google_containers/kube-apiserver v1.22.5 059e6cd8cf78 2 weeks ago 128MB

registry.aliyuncs.com/google_containers/kube-scheduler v1.22.5 935d8fdc2d52 2 weeks ago 52.7MB

registry.aliyuncs.com/google_containers/kube-proxy v1.22.5 8f8fdd6672d4 2 weeks ago 104MB

registry.aliyuncs.com/google_containers/kube-controller-manager v1.22.5 04185bc88e08 2 weeks ago 122MB

registry.aliyuncs.com/google_containers/etcd 3.5.0-0 004811815584 6 months ago 295MB

registry.aliyuncs.com/google_containers/coredns v1.8.4 8d147537fb7d 7 months ago 47.6MB

registry.aliyuncs.com/google_containers/pause 3.5 ed210e3e4a5b 9 months ago 683kB

#Filter the images and print them as name:tag

[root@master1 yum.repos.d]# docker images|grep ^reg|grep -v nginx|awk '{print $1":"$2}'

registry.aliyuncs.com/google_containers/kube-apiserver:v1.22.5

registry.aliyuncs.com/google_containers/kube-controller-manager:v1.22.5

registry.aliyuncs.com/google_containers/kube-scheduler:v1.22.5

registry.aliyuncs.com/google_containers/kube-proxy:v1.22.5

registry.aliyuncs.com/google_containers/etcd:3.5.0-0

registry.aliyuncs.com/google_containers/coredns:v1.8.4

registry.aliyuncs.com/google_containers/pause:3.5

#Tag the images for the private registry so they can be pushed in the next step

[root@master1 yum.repos.d]# docker images|grep ^reg|grep -v nginx|awk '{print $1":"$2}'|awk -F / '{system("docker tag "$0" reg.westos.org/k8s/"$3"")}'

As shown in the figure below, the images are uploaded to the private registry.
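The push itself is not shown above, so here is a minimal sketch of how the tag and push steps could be generated together. It is an illustration rather than the author's exact commands: the function name `retag_and_push_commands` is hypothetical, and it only prints `docker tag` and `docker push` lines so the result can be reviewed before anything is executed.

```shell
#!/usr/bin/env bash
# retag_and_push_commands PREFIX: read source image names (name:tag) on stdin and
# print the docker commands that retag each one under PREFIX and push it.
retag_and_push_commands() {
    local prefix="$1" src name
    while IFS= read -r src; do
        [ -n "$src" ] || continue
        name="${src##*/}"   # keep only the last path component, e.g. pause:3.5
        echo "docker tag $src $prefix/$name"
        echo "docker push $prefix/$name"
    done
}

# Dry run with two of the images listed above.
printf '%s\n' \
    registry.aliyuncs.com/google_containers/kube-apiserver:v1.22.5 \
    registry.aliyuncs.com/google_containers/pause:3.5 |
    retag_and_push_commands reg.westos.org/k8s
```

To run the commands for real, one option is to feed in the filtered `docker images` list (restricted to names starting with registry.aliyuncs.com, so that already retagged images are skipped) and pipe the output to `sh`, after logging in with `docker login reg.westos.org`.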

One more thing is needed so that the hosts pull their images from the private registry.

On the master node

[root@master1 harbor]# kubeadm config images list --image-repository reg.westos.org/k8s

I0101 11:06:27.107533 29126 version.go:255] remote version is much newer: v1.23.1; falling back to: stable-1.22

reg.westos.org/k8s/kube-apiserver:v1.22.5

reg.westos.org/k8s/kube-controller-manager:v1.22.5

reg.westos.org/k8s/kube-scheduler:v1.22.5

reg.westos.org/k8s/kube-proxy:v1.22.5

reg.westos.org/k8s/pause:3.5

reg.westos.org/k8s/etcd:3.5.0-0

reg.westos.org/k8s/coredns:v1.8.4

[root@master1 harbor]# kubeadm init --pod-network-cidr=10.244.0.0/16 --image-repository reg.westos.org/k8s

Installing the network plug-in flannel

Pull the image and push it to the private registry

[root@master1 ~]# docker pull quay.io/coreos/flannel:v0.14.0

v0.14.0: Pulling from coreos/flannel

801bfaa63ef2: Pull complete

e4264a7179f6: Pull complete

bc75ea45ad2e: Pull complete

78648579d12a: Pull complete

3393447261e4: Pull complete

071b96dd834b: Pull complete

4de2f0468a91: Pull complete

Digest: sha256:4a330b2f2e74046e493b2edc30d61fdebbdddaaedcb32d62736f25be8d3c64d5

Status: Downloaded newer image for quay.io/coreos/flannel:v0.14.0

[root@master1 ~]# docker tag quay.io/coreos/flannel:v0.14.0 reg.westos.org/k8s/flannel:v0.14.0

[root@master1 ~]# docker push reg.westos.org/k8s/flannel:v0.14.0

The push refers to repository [reg.westos.org/k8s/flannel]

814fbd599e1f: Pushed

8a984b390686: Pushed

b613d890216c: Pushed

eb738177d102: Pushed

2e16188127c8: Pushed

815dff9e0b57: Pushed

777b2c648970: Pushed

v0.14.0: digest: sha256:635d42b8cc6e9cb1dee3da4d5fe8bbf6a7f883c9951b660b725b0ed2c03e6bde size: 1785

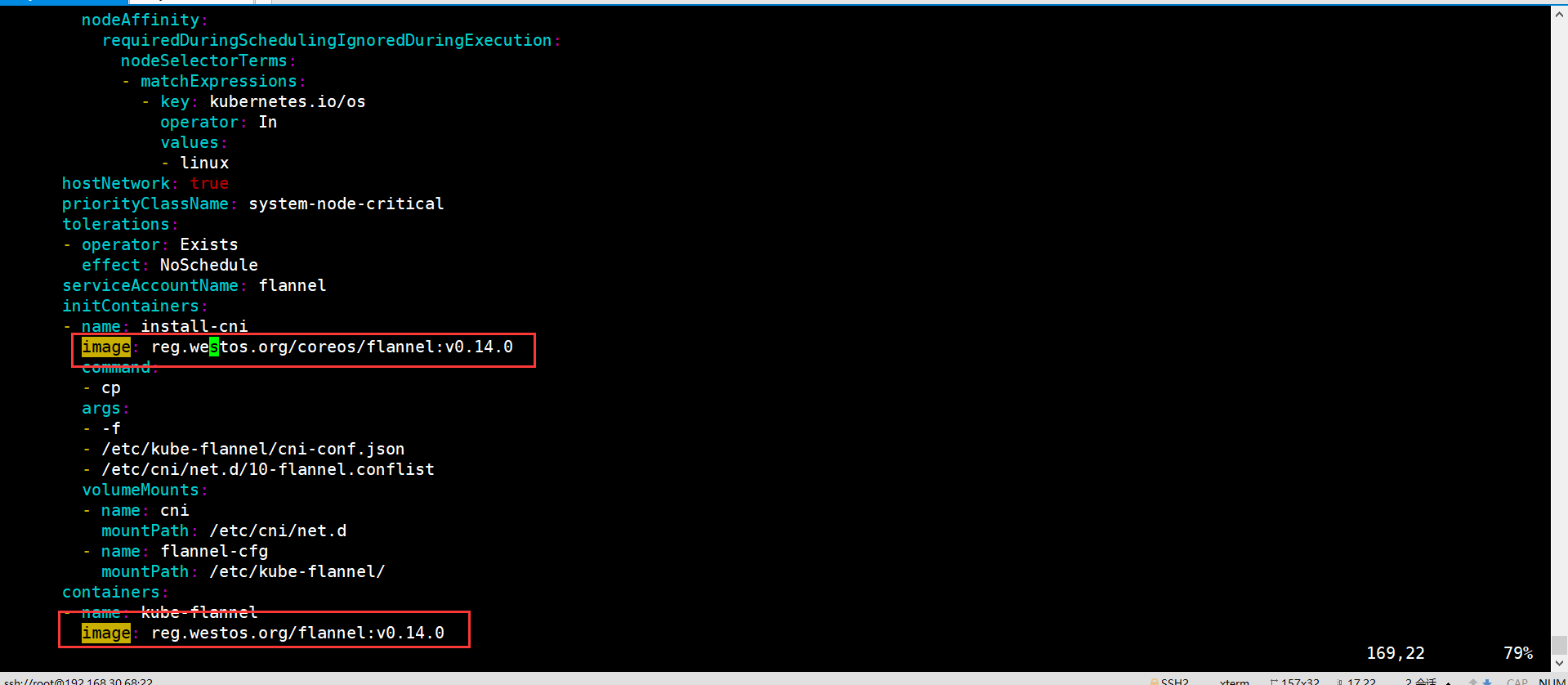

Then, in the kube-flannel.yml file, replace the beginning of each image name so that it points to reg.westos.org.
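The image substitution can be done with sed instead of by hand. This is a minimal sketch, assuming GNU sed and a manifest that references the image as quay.io/coreos/flannel, as in the pull command above; other flannel releases may use a different image name. The function name `use_private_flannel_image` is hypothetical, and the demo runs on a scratch file containing a short excerpt rather than the full manifest.

```shell
#!/usr/bin/env bash
# use_private_flannel_image FILE: point flannel image references at the private registry.
use_private_flannel_image() {
    local manifest="$1"
    # '#' is used as the sed delimiter because the image names contain slashes.
    sed -i 's#quay\.io/coreos/flannel:#reg.westos.org/k8s/flannel:#g' "$manifest"
}

# Demo on an illustrative excerpt of the manifest.
demo=$(mktemp)
printf '%s\n' \
    '      containers:' \
    '      - name: kube-flannel' \
    '        image: quay.io/coreos/flannel:v0.14.0' > "$demo"
use_private_flannel_image "$demo"
grep 'image:' "$demo"
```

On the master node the call would be `use_private_flannel_image kube-flannel.yml`, followed by a quick `grep image: kube-flannel.yml` to confirm the result.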

Install

[root@master1 ~]# kubectl apply -f kube-flannel.yml

Warning: policy/v1beta1 PodSecurityPolicy is deprecated in v1.21+, unavailable in v1.25+

podsecuritypolicy.policy/psp.flannel.unprivileged configured

clusterrole.rbac.authorization.k8s.io/flannel unchanged

clusterrolebinding.rbac.authorization.k8s.io/flannel unchanged

serviceaccount/flannel unchanged

configmap/kube-flannel-cfg unchanged

daemonset.apps/kube-flannel-ds configured

Configure the other nodes

vim /etc/hosts
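Instead of editing the file in vim on every node, the cluster entries can be appended by a small script. This is a minimal sketch: the function name `add_host_entry` is hypothetical, the addresses and names are the ones from the hosts file shown at the beginning, and the demo writes to a scratch file rather than /etc/hosts.

```shell
#!/usr/bin/env bash
# add_host_entry FILE IP NAME...: append "IP NAME..." unless FILE already has a line for IP.
add_host_entry() {
    local file="$1" ip="$2"
    shift 2
    # Exact match on the first field, so 192.168.30.6 does not match 192.168.30.68.
    if ! awk -v ip="$ip" '$1 == ip {found=1} END {exit !found}' "$file" 2>/dev/null; then
        echo "$ip $*" >> "$file"
    fi
}

hosts=$(mktemp)
add_host_entry "$hosts" 192.168.30.68 master reg.westos.org
add_host_entry "$hosts" 192.168.30.69 node1
add_host_entry "$hosts" 192.168.30.70 node2
add_host_entry "$hosts" 192.168.30.71 node3
# Running it again adds nothing, so the script is safe to repeat.
add_host_entry "$hosts" 192.168.30.68 master reg.westos.org
cat "$hosts"
```

On a real node the first argument would be /etc/hosts.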

Because the master node was initialized earlier, the join command printed at the end of kubeadm init is no longer available. You can generate a new one with this command:

[root@master1 ~]# kubeadm token create --print-join-command

kubeadm join 192.168.30.68:6443 --token bz92fi.l5iy7q0lwwdu5zds --discovery-token-ca-cert-hash sha256:520eacddeb308018afe4ed0537a2cf48b287c83ff84a76e33161dc3f01913ae5

Then run it on node1:

[root@node1 ~]# kubeadm join 192.168.30.68:6443 --token bz92fi.l5iy7q0lwwdu5zds --discovery-token-ca-cert-hash sha256:520eacddeb308018afe4ed0537a2cf48b287c83ff84a76e33161dc3f01913ae5

[preflight] Running pre-flight checks

error execution phase preflight: [preflight] Some fatal errors occurred:

[ERROR FileAvailable--etc-kubernetes-kubelet.conf]: /etc/kubernetes/kubelet.conf already exists

[ERROR Port-10250]: Port 10250 is in use

[ERROR FileAvailable--etc-kubernetes-pki-ca.crt]: /etc/kubernetes/pki/ca.crt already exists

[preflight] If you know what you are doing, you can make a check non-fatal with `--ignore-preflight-errors=...`

To see the stack trace of this error execute with --v=5 or higher

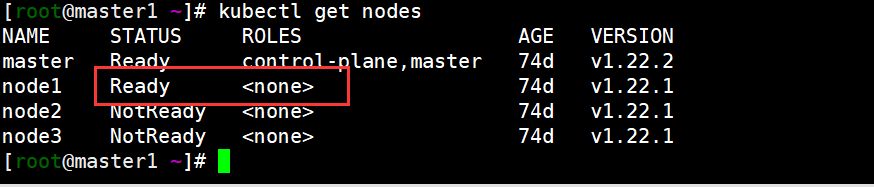

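The preflight errors above suggest that node1 had already joined the cluster in an earlier run. You can see that the node is Ready.

At this point, the initial k8s deployment is complete.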

For batch deployment

On the master node, copy the certificate file to the corresponding node:

[root@master1 ~]# scp -r /data1/certs/westos.org.crt node1:/etc/docker/

On node1:

[root@node1 certs.d]# mkdir /etc/docker/certs.d/reg.westos.org/ -p

[root@node1 certs.d]# mv /etc/docker/westos.org.crt /etc/docker/certs.d/reg.westos.org/

Now the images can be pulled directly from the registry:

[root@node1 certs.d]# docker pull reg.westos.org/k8s/flannel:v0.14.0

v0.14.0: Pulling from k8s/flannel

801bfaa63ef2: Pull complete

e4264a7179f6: Pull complete

bc75ea45ad2e: Pull complete

78648579d12a: Pull complete

3393447261e4: Pull complete

071b96dd834b: Pull complete

4de2f0468a91: Pull complete

Digest: sha256:635d42b8cc6e9cb1dee3da4d5fe8bbf6a7f883c9951b660b725b0ed2c03e6bde

Status: Downloaded newer image for reg.westos.org/k8s/flannel:v0.14.0

reg.westos.org/k8s/flannel:v0.14.0
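The certificate steps above are shown for node1 only. For several nodes, the commands can be generated in a loop. This is a minimal sketch under stated assumptions: the function name `distribute_cert_commands` is hypothetical, passwordless SSH from the master to each node is assumed, and the function only prints the commands so they can be reviewed first. It copies the certificate straight into the certs.d directory instead of moving it there afterwards.

```shell
#!/usr/bin/env bash
# distribute_cert_commands CERT REGISTRY NODE...: print the commands that install
# CERT into /etc/docker/certs.d/REGISTRY/ on every listed node.
distribute_cert_commands() {
    local cert="$1" registry="$2" node
    shift 2
    for node in "$@"; do
        echo "ssh $node mkdir -p /etc/docker/certs.d/$registry"
        echo "scp $cert $node:/etc/docker/certs.d/$registry/"
    done
}

# Dry run using the path, registry name, and node names from this walkthrough.
distribute_cert_commands /data1/certs/westos.org.crt reg.westos.org node1 node2 node3
```

Piping the output to `sh` on the master node would execute the commands; leaving it as a dry run is a convenient way to double-check the target paths.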