k8s preliminary preparation

Here I mainly use docker-case to build a local k8s (v1.21.2) cluster.

Software preparation

- vagrant 2.2.16: a cross-platform tool for creating and managing virtual machines

- virtualbox: a free, open-source virtual machine hypervisor

- k8s version v1.21.2

vagrant environment description

- Run the installation as the root user; otherwise you will run into many problems

| Node | Connection command | Password |
|---|---|---|
| master | ssh root@127.0.0.1 -p 2200 | vagrant |
| node1 | ssh root@127.0.0.1 -p 2201 | vagrant |
| node2 | ssh root@127.0.0.1 -p 2202 | vagrant |
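
The table above can be wrapped in a small helper so the ports do not have to be remembered. This is only a convenience sketch: the function name `k8s_ssh_cmd` is hypothetical and not part of docker-case, and the ports are simply the ones listed in the table.

```shell
# Print the ssh command for a node from the table above.
# k8s_ssh_cmd is a hypothetical helper name used for illustration only.
k8s_ssh_cmd() {
  case "$1" in
    master) port=2200 ;;
    node1)  port=2201 ;;
    node2)  port=2202 ;;
    *) echo "unknown node: $1" >&2; return 1 ;;
  esac
  echo "ssh root@127.0.0.1 -p $port"
}

k8s_ssh_cmd master   # prints: ssh root@127.0.0.1 -p 2200
```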

Configuration process

Create three virtual machines first

Enter the docker-case/k8s directory and run the following command:

vagrant up

Switch the image registry to Alibaba Cloud

View the required version

kubeadm config images list

Start the installation on the master

With the Alibaba Cloud mirror, coredns:v1.8.0 cannot be downloaded because of a tag mismatch (the mirror publishes the tag without the leading "v"), so download coredns:1.8.0 first.

View the list of software to be installed

kubeadm config images list

Here we mainly look at the coredns version

    k8s.gcr.io/kube-apiserver:v1.21.2
    k8s.gcr.io/kube-controller-manager:v1.21.2
    k8s.gcr.io/kube-scheduler:v1.21.2
    k8s.gcr.io/kube-proxy:v1.21.2
    k8s.gcr.io/pause:3.4.1
    k8s.gcr.io/etcd:3.4.13-0
    k8s.gcr.io/coredns/coredns:v1.8.0

Download coredns:1.8.0

docker pull registry.aliyuncs.com/google_containers/coredns:1.8.0

Retag the image

docker tag registry.aliyuncs.com/google_containers/coredns:1.8.0 registry.aliyuncs.com/google_containers/coredns:v1.8.0

Delete the old tag

docker rmi registry.aliyuncs.com/google_containers/coredns:1.8.0
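
The pull, retag, and delete steps above can be collected into one function. This is a minimal sketch, not part of docker-case: the names `run` and `retag_coredns` and the `DRY_RUN` switch are assumptions introduced here so the commands can be previewed before anything is pulled.

```shell
# Run a command, or only print it when DRY_RUN=1 (hypothetical helper).
run() {
  if [ "${DRY_RUN:-0}" = "1" ]; then
    echo "$@"
  else
    "$@"
  fi
}

# Pull coredns under the tag the mirror provides, retag it with the
# "v" prefix that kubeadm asks for, then drop the old tag.
retag_coredns() {
  repo="$1"      # e.g. registry.aliyuncs.com/google_containers
  version="$2"   # version without the leading "v", e.g. 1.8.0
  run docker pull "$repo/coredns:$version" &&
  run docker tag "$repo/coredns:$version" "$repo/coredns:v$version" &&
  run docker rmi "$repo/coredns:$version"
}

# Preview the three docker commands without executing them.
DRY_RUN=1
retag_coredns registry.aliyuncs.com/google_containers 1.8.0
```

Unset `DRY_RUN` (or set it to 0) to actually run the docker commands.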

kubeadm init

kubeadm init --apiserver-advertise-address=192.168.56.200 --image-repository registry.aliyuncs.com/google_containers --kubernetes-version v1.21.2 --pod-network-cidr=10.244.0.0/16

Set up kubeconfig

    mkdir -p $HOME/.kube
    sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config
    sudo chown $(id -u):$(id -g) $HOME/.kube/config
    sh -c "echo 'export KUBECONFIG=/etc/kubernetes/admin.conf' >> /etc/profile"
    source /etc/profile

Installing the flannel network plug-in

The IP used above (192.168.56.200) is not on the eth0 interface, so flannel has to be told which interface to use. You can list the interfaces with ip addr.

1. Download first

You can use the upstream kube-flannel.yml directly.

curl https://raw.githubusercontent.com/coreos/flannel/master/Documentation/kube-flannel.yml > kube-flannel.yml

2. Edit the file: vi kube-flannel.yml

          containers:
          - name: kube-flannel
            image: quay.io/coreos/flannel:v0.14.0
            command:
            - /opt/bin/flanneld
            args:
            - --ip-masq
            - --kube-subnet-mgr
            - --iface=eth1 # This place specifies the network card
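
The same edit can be made without opening an editor. The sketch below assumes the manifest contains a `- --kube-subnet-mgr` argument line, as in the excerpt above; the function name `add_iface_arg` is hypothetical. Because the download URL tracks the upstream master branch, the file contents may differ from what is shown here.

```shell
# Insert "- --iface=<name>" right after the "- --kube-subnet-mgr" argument,
# reusing the indentation of that line.
add_iface_arg() {
  file="$1"
  iface="$2"
  # Leave the file alone if an --iface flag is already present.
  if grep -q -e '--iface=' "$file"; then
    return 0
  fi
  tmp=$(mktemp) || return 1
  awk -v iface="$iface" '
    { print }
    /^[ \t]*- --kube-subnet-mgr[ \t]*$/ {
      indent = $0
      sub(/-.*$/, "", indent)   # keep only the leading whitespace
      print indent "- --iface=" iface
    }
  ' "$file" > "$tmp" && cat "$tmp" > "$file"
  status=$?
  rm -f "$tmp"
  return $status
}

# Usage: add_iface_arg kube-flannel.yml eth1
```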

3. Apply flannel

    [root@k8s-master ~]# kubectl apply -f kube-flannel.yml
    Warning: policy/v1beta1 PodSecurityPolicy is deprecated in v1.21+, unavailable in v1.25+
    podsecuritypolicy.policy/psp.flannel.unprivileged created
    clusterrole.rbac.authorization.k8s.io/flannel created
    clusterrolebinding.rbac.authorization.k8s.io/flannel created
    serviceaccount/flannel created
    configmap/kube-flannel-cfg created
    daemonset.apps/kube-flannel-ds created

4. Verify the result

List the pods first, then view the log of one of the flannel pods.

    [root@k8s-master ~]# kubectl get pods --all-namespaces
    NAMESPACE     NAME                                 READY   STATUS    RESTARTS   AGE
    kube-system   coredns-59d64cd4d4-2d46n             1/1     Running   0          19m
    kube-system   coredns-59d64cd4d4-jvbvs             1/1     Running   0          19m
    kube-system   etcd-k8s-master                      1/1     Running   0          20m
    kube-system   kube-apiserver-k8s-master            1/1     Running   0          20m
    kube-system   kube-controller-manager-k8s-master   1/1     Running   0          15m
    kube-system   kube-flannel-ds-hvsmm                1/1     Running   0          95s
    kube-system   kube-flannel-ds-lhdpp                1/1     Running   0          95s
    kube-system   kube-flannel-ds-nbxl4                1/1     Running   0          95s
    kube-system   kube-proxy-6gj8p                     1/1     Running   0          18m
    kube-system   kube-proxy-jf8v6                     1/1     Running   0          18m
    kube-system   kube-proxy-srx5t                     1/1     Running   0          19m
    kube-system   kube-scheduler-k8s-master            1/1     Running   0          15m

The log shows "Using interface with name eth1 and address 192.168.56.200", which confirms that flannel picked up the right interface:

    [root@k8s-master ~]# kubectl logs -n kube-system kube-flannel-ds-nbxl4
    I0709 08:28:28.858390 1 main.go:533] Using interface with name eth1 and address 192.168.56.200
    I0709 08:28:28.859608 1 main.go:550] Defaulting external address to interface address (192.168.56.200)
    W0709 08:28:29.040955 1 client_config.go:608] Neither --kubeconfig nor --master was specified. Using the inClusterConfig. This might not work.
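
With many pods it is easy to miss one that is not healthy. As a rough sketch, the filter below reads the `kubectl get pods --all-namespaces` listing on stdin and prints only the pods whose STATUS column is not Running. The function name `pods_not_running` is hypothetical, and it assumes the default column layout shown above (STATUS is the fourth column), so pods that finished normally (Completed) would be listed too.

```shell
# Print "namespace/name status" for every pod that is not Running.
# Expects the default `kubectl get pods --all-namespaces` columns on stdin:
# NAMESPACE NAME READY STATUS RESTARTS AGE
pods_not_running() {
  awk 'NR > 1 && $4 != "Running" { print $1 "/" $2 " " $4 }'
}

# Usage: kubectl get pods --all-namespaces | pods_not_running
```

No output means every listed pod is in the Running state.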

Using ipvs load balancing

For background on what ipvs is, see the kube-proxy documentation. The kube-proxy configuration below sets mode to "ipvs":

    iptables:
      masqueradeAll: false
      masqueradeBit: null
      minSyncPeriod: 0s
      syncPeriod: 0s
    ipvs:
      excludeCIDRs: null
      minSyncPeriod: 0s
      scheduler: ""
      strictARP: false
      syncPeriod: 0s
      tcpFinTimeout: 0s
      tcpTimeout: 0s
      udpTimeout: 0s
    kind: KubeProxyConfiguration
    metricsBindAddress: ""
    mode: "ipvs" # ipvs is specified here
    nodePortAddresses: null
    oomScoreAdj: null
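
The text does not say where this configuration is edited. On a kubeadm-built cluster it normally lives in the kube-proxy ConfigMap in the kube-system namespace, so the sketch below works under that assumption. The function name `set_proxy_mode` is hypothetical; it only rewrites the `mode:` key of whatever YAML it reads on stdin.

```shell
# Rewrite the "mode:" key of a KubeProxyConfiguration read from stdin,
# keeping the indentation of the original line.
set_proxy_mode() {
  mode="$1"
  sed "s/^\([ ]*\)mode:.*/\1mode: \"$mode\"/"
}

# Assumed usage on a kubeadm cluster (ConfigMap name and pod label are
# the kubeadm defaults):
#   kubectl -n kube-system get configmap kube-proxy -o yaml \
#     | set_proxy_mode ipvs | kubectl apply -f -
#   kubectl -n kube-system delete pod -l k8s-app=kube-proxy   # pods restart with the new mode
#
# Editing by hand works just as well:
#   kubectl -n kube-system edit configmap kube-proxy
```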

Regenerating the kubeadm join token

This step is optional. If you have lost the join command printed by kubeadm init, you can regenerate it:

kubeadm token create --print-join-command

Possible problems

The scheduler and controller-manager health ports are unreachable

    [root@k8s-master ~]# kubectl get cs
    Warning: v1 ComponentStatus is deprecated in v1.19+
    NAME                 STATUS      MESSAGE                                                                                       ERROR
    scheduler            Unhealthy   Get "http://127.0.0.1:10251/healthz": dial tcp 127.0.0.1:10251: connect: connection refused
    controller-manager   Unhealthy   Get "http://127.0.0.1:10252/healthz": dial tcp 127.0.0.1:10252: connect: connection refused
    etcd-0               Healthy     {"health":"true"}

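Solution

The cause is the --port=0 flag in the static pod manifests, which disables the insecure ports 10251 and 10252 that kubectl get cs probes. Comment it out in both manifests.

- vi /etc/kubernetes/manifests/kube-scheduler.yaml and comment out the port flag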

    apiVersion: v1
    kind: Pod
    metadata:
      creationTimestamp: null
      labels:
        component: kube-scheduler
        tier: control-plane
      name: kube-scheduler
      namespace: kube-system
    spec:
      containers:
      - command:
        - kube-scheduler
        - --authentication-kubeconfig=/etc/kubernetes/scheduler.conf
        - --authorization-kubeconfig=/etc/kubernetes/scheduler.conf
        - --bind-address=127.0.0.1
        - --kubeconfig=/etc/kubernetes/scheduler.conf
        - --leader-elect=true
        # - --port=0 # Comment port
        image: registry.aliyuncs.com/google_containers/kube-scheduler:v1.21.2
        imagePullPolicy: IfNotPresent
        livenessProbe:
          failureThreshold: 8
          httpGet:
            host: 127.0.0.1
            path: /healthz
            port: 10259
            scheme: HTTPS
    .....

- vi /etc/kubernetes/manifests/kube-controller-manager.yaml and comment out the port flag there as well

    apiVersion: v1
    kind: Pod
    metadata:
      creationTimestamp: null
      labels:
        component: kube-controller-manager
        tier: control-plane
      name: kube-controller-manager
      namespace: kube-system
    spec:
      containers:
      - command:
        - kube-controller-manager
        - --allocate-node-cidrs=true
        - --authentication-kubeconfig=/etc/kubernetes/controller-manager.conf
        - --authorization-kubeconfig=/etc/kubernetes/controller-manager.conf
        - --bind-address=127.0.0.1
        - --client-ca-file=/etc/kubernetes/pki/ca.crt
        - --cluster-cidr=10.244.0.0/16
        - --cluster-name=kubernetes
        - --cluster-signing-cert-file=/etc/kubernetes/pki/ca.crt
        - --cluster-signing-key-file=/etc/kubernetes/pki/ca.key
        - --controllers=*,bootstrapsigner,tokencleaner
        - --kubeconfig=/etc/kubernetes/controller-manager.conf
        - --leader-elect=true
        # - --port=0
        - --requestheader-client-ca-file=/etc/kubernetes/pki/front-proxy-ca.crt
        - --root-ca-file=/etc/kubernetes/pki/ca.crt
        - --service-account-private-key-file=/etc/kubernetes/pki/sa.key
        - --service-cluster-ip-range=10.96.0.0/12
        - --use-service-account-credentials=true
        image: registry.aliyuncs.com/google_containers/kube-controller-manager:v1.21.2
        imagePullPolicy: IfNotPresent
        livenessProbe:
          failureThreshold: 8
          httpGet:
            host: 127.0.0.1
            path: /healthz
            port: 10257
            scheme: HTTPS
    .....

- Restart kubelet

systemctl restart kubelet.service
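
The two manual edits above can also be scripted. The following is a sketch under the assumption that each manifest contains the flag on its own line as `- --port=0`; the function name `comment_out_port_flag` is hypothetical. The temporary file is created outside /etc/kubernetes/manifests on purpose, since kubelet watches that directory for manifests.

```shell
# Comment out the "- --port=0" argument in a static pod manifest,
# keeping the original indentation.
comment_out_port_flag() {
  file="$1"
  tmp=$(mktemp) || return 1
  sed 's/^\([ ]*\)\(- --port=0\)[ ]*$/\1# \2/' "$file" > "$tmp" &&
    cat "$tmp" > "$file"
  status=$?
  rm -f "$tmp"
  return $status
}

# Usage (as root on the master):
#   for name in kube-scheduler kube-controller-manager; do
#     comment_out_port_flag "/etc/kubernetes/manifests/$name.yaml"
#   done
#   systemctl restart kubelet.service
```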

kubeadm join reports that bridge-nf-call-iptables is not set to 1

    error execution phase preflight: [preflight] Some fatal errors occurred:
        [ERROR FileContent--proc-sys-net-bridge-bridge-nf-call-iptables]: /proc/sys/net/bridge/bridge-nf-call-iptables contents are not set to 1

Solution

sudo sh -c " echo '1' > /proc/sys/net/bridge/bridge-nf-call-iptables"

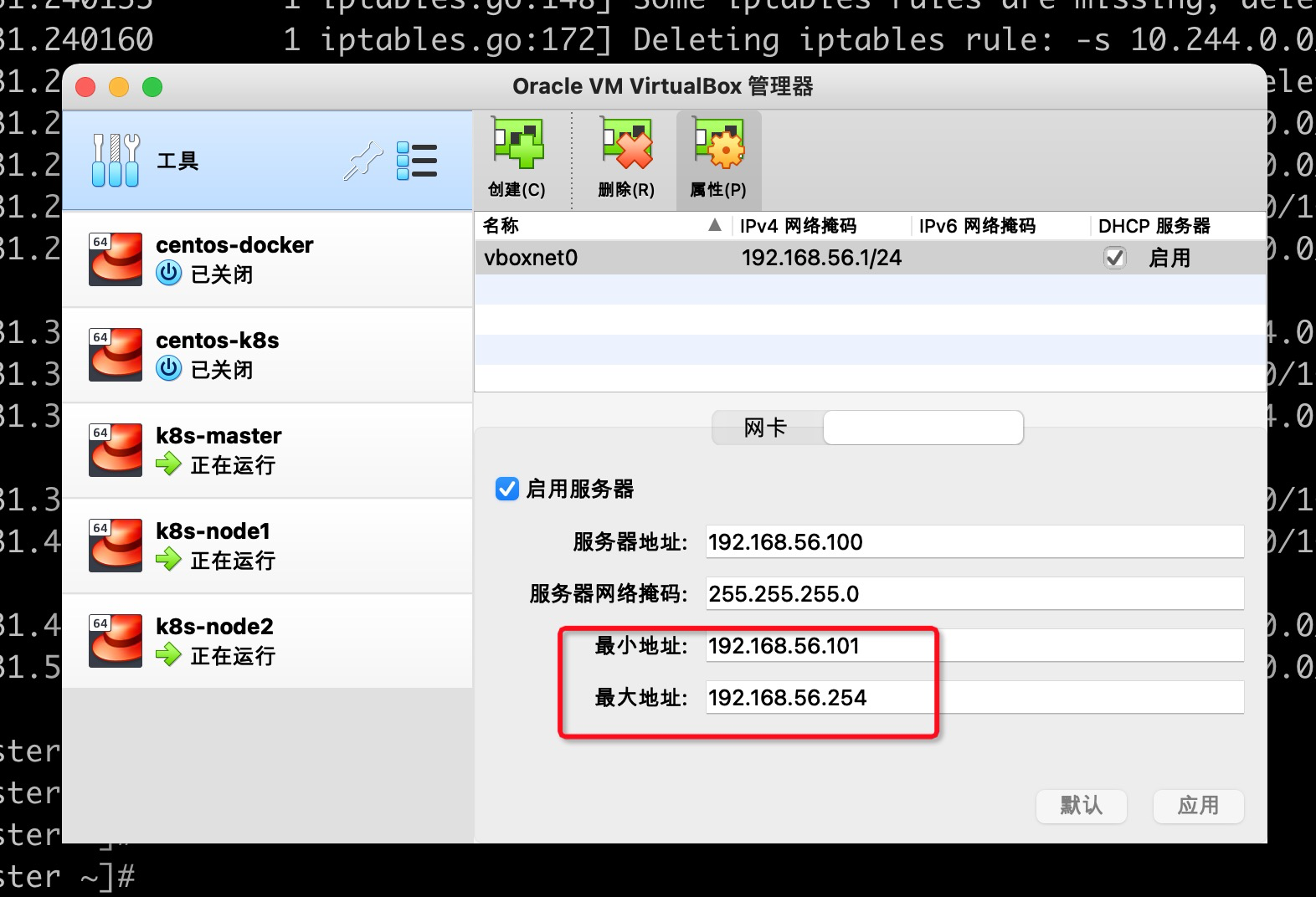
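
Writing to /proc only lasts until the next reboot. A persistent variant, sketched below, stores the setting in a sysctl configuration file. The function name `write_bridge_sysctl` and the file name /etc/sysctl.d/k8s.conf are assumptions chosen for illustration; any file under /etc/sysctl.d would do.

```shell
# Write the bridge netfilter setting to a sysctl configuration file.
# The default path is an assumed name, not something the cluster requires.
write_bridge_sysctl() {
  conf="${1:-/etc/sysctl.d/k8s.conf}"
  cat > "$conf" <<'EOF'
net.bridge.bridge-nf-call-iptables = 1
EOF
}

# Assumed usage on each node, as root:
#   modprobe br_netfilter   # the /proc entry only exists once this module is loaded
#   write_bridge_sysctl
#   sysctl --system         # reload all sysctl configuration files
```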

Nodes cannot join the master node

I previously used 192.168.56.100 as the IP, which conflicts with the DHCP service of VirtualBox. When choosing an IP address, check which addresses the VirtualBox DHCP server uses and pick one that does not collide with them.
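
To check a candidate address before assigning it, you can compare it against the DHCP server address and the lower and upper bounds that `VBoxManage list dhcpservers` reports for the host-only network. The helpers below are a sketch with hypothetical names (`ip_to_int`, `ip_in_range`); the bounds are passed in as arguments rather than assumed.

```shell
# Convert a dotted IPv4 address to an integer.
ip_to_int() {
  old_ifs=$IFS
  IFS=.
  set -- $1          # split the address into its four octets
  IFS=$old_ifs
  echo $(( ($1 << 24) + ($2 << 16) + ($3 << 8) + $4 ))
}

# Succeed if address $1 lies within [$2, $3], bounds included.
ip_in_range() {
  ip=$(ip_to_int "$1")
  lo=$(ip_to_int "$2")
  hi=$(ip_to_int "$3")
  [ "$ip" -ge "$lo" ] && [ "$ip" -le "$hi" ]
}

# Usage: take LOWER and UPPER from `VBoxManage list dhcpservers`, then
#   ip_in_range 192.168.56.200 "$LOWER" "$UPPER" && echo "inside the DHCP pool"
```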
