Quickly create an NFS StorageClass to enable dynamic provisioning

Introduction: persistent storage in K8s

K8s introduces the concept of Persistent Volumes (PVs) to separate storage from compute. Storage resources and compute resources are managed by different components, and the lifecycle of a volume is decoupled from that of the Pod. As a result, when a Pod is deleted, the PV it used still exists and can be reused by a new Pod.

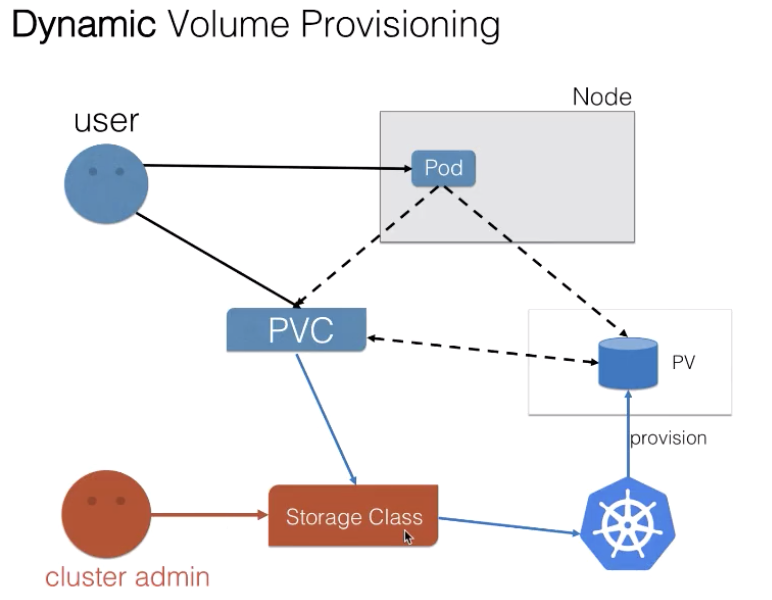

Persistent storage in K8s can be provisioned in two ways: static volume provisioning and dynamic volume provisioning.

What does dynamic provisioning mean? The cluster administrator does not pre-allocate PVs. Instead, the administrator writes a template, the StorageClass, which holds the parameters needed to create a certain type of storage (block storage, file storage, etc.). These parameters belong to the storage implementation itself, and users do not need to care about them. Users only submit their storage requirements as a PVC and specify in the PVC which StorageClass to use.

The controllers in the K8s cluster then combine the information in the PVC and the StorageClass to dynamically create the PV the user needs. Once the PVC and the PV are bound, a Pod can use the PV. The storage template is defined once in a StorageClass, and PV objects are created on demand. Users therefore get storage without extra effort, and cluster administrators no longer have to provision PVs by hand.


(Image source: Alibaba Cloud Native Open Class, "Application Storage and Persistent Data Volumes: Core Knowledge")
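The PVC + StorageClass → PV flow described above can be sketched as a toy Python model. It is not how the real controller is implemented. The class and function names are hypothetical, and `1234` stands in for a real PVC UID. The `pvc-<UID>` naming reflects our understanding of the convention used by external provisioners built on the Kubernetes provisioner library.

```python
import uuid
from dataclasses import dataclass, field
from typing import Dict, Optional


@dataclass
class StorageClass:
    name: str
    provisioner: str
    reclaim_policy: str = "Delete"  # Kubernetes default when reclaimPolicy is omitted


@dataclass
class PVC:
    namespace: str
    name: str
    storage_class_name: str
    request: str  # e.g. "100Mi"
    uid: str = field(default_factory=lambda: str(uuid.uuid4()))
    bound_pv: Optional[str] = None


@dataclass
class PV:
    name: str
    capacity: str
    reclaim_policy: str
    storage_class_name: str
    claim_ref: str  # "<namespace>/<name>" of the PVC this PV is bound to


def provision(pvc: PVC, classes: Dict[str, StorageClass]) -> PV:
    """Toy model of dynamic provisioning: build a PV from the PVC's StorageClass and bind it."""
    if pvc.storage_class_name not in classes:
        # In a real cluster the PVC would simply stay Pending.
        raise LookupError(f"no StorageClass named {pvc.storage_class_name!r}")
    sc = classes[pvc.storage_class_name]
    pv = PV(
        name=f"pvc-{pvc.uid}",             # provisioned PVs are named after the PVC's UID
        capacity=pvc.request,              # capacity copied from the PVC request
        reclaim_policy=sc.reclaim_policy,  # inherited from the StorageClass
        storage_class_name=sc.name,
        claim_ref=f"{pvc.namespace}/{pvc.name}",
    )
    pvc.bound_pv = pv.name                 # the PVC is now bound to the new PV
    return pv


if __name__ == "__main__":
    classes = {"managed-nfs-storage": StorageClass("managed-nfs-storage", "gxf-nfs-storage", "Retain")}
    claim = PVC("default", "test-claim", "managed-nfs-storage", "100Mi", uid="1234")  # hypothetical UID
    pv = provision(claim, classes)
    print(pv.name, pv.reclaim_policy, claim.bound_pv)  # pvc-1234 Retain pvc-1234
```

The sketch shows the division of labour. The PVC carries only the user's requirements (size and class name). Everything backend-specific, including the reclaim policy, comes from the StorageClass.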

Environment:

Kubernetes 1.21.1

Deploying the NFS provisioner

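Note: on Kubernetes 1.21, the NFS client provisioner reports the following error when a PVC is created. The cause is the RemoveSelfLink feature gate, which has been enabled by default since 1.20; it removes the selfLink field that the provisioner relies on: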

E0903 08:00:24.858523 1 controller.go:1004] provision "default/test-claim" class "managed-nfs-storage": unexpected error getting claim reference: selfLink was empty, can't make reference

Solution:

Edit the /etc/kubernetes/manifests/kube-apiserver.yaml file and add the flag --feature-gates=RemoveSelfLink=false to the kube-apiserver command:

spec:
  containers:
  - command:
    - kube-apiserver
    - --feature-gates=RemoveSelfLink=false # Add this line
    - --advertise-address=172.24.0.5
    - --allow-privileged=true
    - --authorization-mode=Node,RBAC
    - --client-ca-file=/etc/kubernetes/pki/ca.crt

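Because this cluster was deployed with kubeadm, kube-apiserver runs as a static Pod. The kubelet restarts it automatically after kube-apiserver.yaml is modified. This workaround only applies up to Kubernetes 1.23. In 1.24, RemoveSelfLink became GA and the feature gate can no longer be set to false.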
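If you prefer to script the edit, the Python sketch below inserts the flag directly after the `- kube-apiserver` line and reuses that line's indentation. It makes two assumptions:

- The manifest follows the kubeadm layout shown above.
- The manifest does not already carry a `--feature-gates` flag. If it does, add `RemoveSelfLink=false` to that flag's comma-separated list instead.

`add_feature_gate` is a hypothetical helper name.

```python
FLAG = "--feature-gates=RemoveSelfLink=false"


def add_feature_gate(manifest: str, flag: str = FLAG) -> str:
    """Return `manifest` with `flag` inserted right after the '- kube-apiserver' entry.

    Plain text editing (no YAML library), so comments and layout survive.
    Idempotent: if the flag is already present, the text is returned unchanged.
    Assumes the manifest has no other --feature-gates flag.
    """
    if flag in manifest:
        return manifest
    lines = manifest.splitlines(keepends=True)
    for i, line in enumerate(lines):
        if line.strip() == "- kube-apiserver":
            indent = line[: len(line) - len(line.lstrip())]  # keep the list-item indentation
            if not line.endswith("\n"):
                lines[i] = line + "\n"
            lines.insert(i + 1, f"{indent}- {flag}\n")
            return "".join(lines)
    raise ValueError("no '- kube-apiserver' entry found")
```

To apply it, run as root: `p = pathlib.Path("/etc/kubernetes/manifests/kube-apiserver.yaml"); p.write_text(add_feature_gate(p.read_text()))`. Keep any backup copy outside /etc/kubernetes/manifests. The kubelet may try to load other files in that directory as static Pod manifests.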

The NFS provisioner is deployed in two steps.

(1) Create the ServiceAccount, ClusterRole, ClusterRoleBinding, Role and RoleBinding that authorize the NFS client provisioner:

# rbac.yaml
apiVersion: v1
kind: ServiceAccount
metadata:
  name: nfs-client-provisioner
  # replace with namespace where provisioner is deployed
  namespace: default
---
kind: ClusterRole
apiVersion: rbac.authorization.k8s.io/v1
metadata:
  name: nfs-client-provisioner-runner
rules:
  - apiGroups: [""]
    resources: ["persistentvolumes"]
    verbs: ["get", "list", "watch", "create", "delete"]
  - apiGroups: [""]
    resources: ["persistentvolumeclaims"]
    verbs: ["get", "list", "watch", "update"]
  - apiGroups: ["storage.k8s.io"]
    resources: ["storageclasses"]
    verbs: ["get", "list", "watch"]
  - apiGroups: [""]
    resources: ["events"]
    verbs: ["create", "update", "patch"]
---
kind: ClusterRoleBinding
apiVersion: rbac.authorization.k8s.io/v1
metadata:
  name: run-nfs-client-provisioner
subjects:
  - kind: ServiceAccount
    name: nfs-client-provisioner
    # replace with namespace where provisioner is deployed
    namespace: default
roleRef:
  kind: ClusterRole
  name: nfs-client-provisioner-runner
  apiGroup: rbac.authorization.k8s.io
---
kind: Role
apiVersion: rbac.authorization.k8s.io/v1
metadata:
  name: leader-locking-nfs-client-provisioner
  # replace with namespace where provisioner is deployed
  namespace: default
rules:
  - apiGroups: [""]
    resources: ["endpoints"]
    verbs: ["get", "list", "watch", "create", "update", "patch"]
---
kind: RoleBinding
apiVersion: rbac.authorization.k8s.io/v1
metadata:
  name: leader-locking-nfs-client-provisioner
  # replace with namespace where provisioner is deployed (must match the Role's namespace)
  namespace: default
subjects:
  - kind: ServiceAccount
    name: nfs-client-provisioner
    # replace with namespace where provisioner is deployed
    namespace: default
roleRef:
  kind: Role
  name: leader-locking-nfs-client-provisioner
  apiGroup: rbac.authorization.k8s.io
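The ClusterRole grants exactly the verbs the provisioner needs on each resource. Below is a minimal Python sketch of how such rules are evaluated. It assumes simplified RBAC semantics: rules are purely additive, `*` is a wildcard, and resourceNames and subresources are ignored. The rules are transcribed by hand from rbac.yaml. `allows` is a hypothetical helper, not a Kubernetes API.

```python
from typing import Dict, List

Rule = Dict[str, List[str]]

# ClusterRole nfs-client-provisioner-runner, copied from rbac.yaml
NFS_RUNNER_RULES: List[Rule] = [
    {"apiGroups": [""], "resources": ["persistentvolumes"], "verbs": ["get", "list", "watch", "create", "delete"]},
    {"apiGroups": [""], "resources": ["persistentvolumeclaims"], "verbs": ["get", "list", "watch", "update"]},
    {"apiGroups": ["storage.k8s.io"], "resources": ["storageclasses"], "verbs": ["get", "list", "watch"]},
    {"apiGroups": [""], "resources": ["events"], "verbs": ["create", "update", "patch"]},
]


def _matches(values: List[str], wanted: str) -> bool:
    """A rule field matches if it lists the value explicitly or contains the '*' wildcard."""
    return "*" in values or wanted in values


def allows(rules: List[Rule], api_group: str, resource: str, verb: str) -> bool:
    """RBAC has no deny rules: a request is allowed if ANY rule matches group, resource and verb."""
    return any(
        _matches(r["apiGroups"], api_group)
        and _matches(r["resources"], resource)
        and _matches(r["verbs"], verb)
        for r in rules
    )


if __name__ == "__main__":
    print(allows(NFS_RUNNER_RULES, "", "persistentvolumes", "create"))  # True
    print(allows(NFS_RUNNER_RULES, "", "persistentvolumes", "update"))  # False
```

On a live cluster, `kubectl auth can-i create persistentvolumes --as=system:serviceaccount:default:nfs-client-provisioner` asks the API server the same question authoritatively.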

(2) Deploy the NFS client provisioner

Note: change the server address and export directory to match your actual NFS server.

# nfs-provisioner.yaml
apiVersion: apps/v1
kind: Deployment
metadata:
  name: nfs-client-provisioner
  labels:
    app: nfs-client-provisioner
  # replace with namespace where provisioner is deployed
  namespace: default # Must match the namespace in the RBAC file
spec:
  replicas: 1
  strategy:
    type: Recreate
  selector:
    matchLabels:
      app: nfs-client-provisioner
  template:
    metadata:
      labels:
        app: nfs-client-provisioner
    spec:
      serviceAccountName: nfs-client-provisioner
      containers:
        - name: nfs-client-provisioner
          image: quay.io/external_storage/nfs-client-provisioner:latest
          volumeMounts:
            - name: nfs-client-root
              mountPath: /persistentvolumes
          env:
            - name: PROVISIONER_NAME
              value: gxf-nfs-storage # Provisioner name; must match the provisioner field in nfs-StorageClass.yaml
            - name: NFS_SERVER
              value: 10.24.X.X # NFS server IP address
            - name: NFS_PATH
              value: /home/nfs/1 # Exported NFS directory
      volumes:
        - name: nfs-client-root
          nfs:
            server: 10.24.X.X # NFS server IP address
            path: /home/nfs/1 # Exported NFS directory

# deploy
sudo kubectl apply -f rbac.yaml
sudo kubectl apply -f nfs-provisioner.yaml
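NFS_SERVER/NFS_PATH and the `nfs:` volume describe the same export for two different consumers. As we understand the upstream provisioner, it creates each PV's directory under the volume mounted at /persistentvolumes. It then writes NFS_SERVER and NFS_PATH into the PVs it creates. If the two settings disagree, Pods would mount a different location from the one where the directory was created.

The small Python check below compares them. The helper name is hypothetical, and the values are copied from nfs-provisioner.yaml, including the article's 10.24.X.X placeholder.

```python
from typing import Dict, List


def nfs_mismatches(container_env: List[Dict[str, str]], nfs_volume: Dict[str, str]) -> List[str]:
    """Compare NFS_SERVER/NFS_PATH env vars with the `nfs:` volume; an empty list means consistent."""
    env = {item["name"]: item.get("value") for item in container_env}
    problems = []
    for var, key in (("NFS_SERVER", "server"), ("NFS_PATH", "path")):
        if env.get(var) != nfs_volume.get(key):
            problems.append(f"{var}={env.get(var)!r} but volume {key}={nfs_volume.get(key)!r}")
    return problems


if __name__ == "__main__":
    env = [
        {"name": "PROVISIONER_NAME", "value": "gxf-nfs-storage"},
        {"name": "NFS_SERVER", "value": "10.24.X.X"},
        {"name": "NFS_PATH", "value": "/home/nfs/1"},
    ]
    volume = {"server": "10.24.X.X", "path": "/home/nfs/1"}
    print(nfs_mismatches(env, volume))  # []
```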

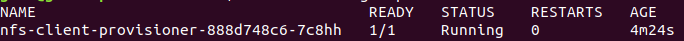

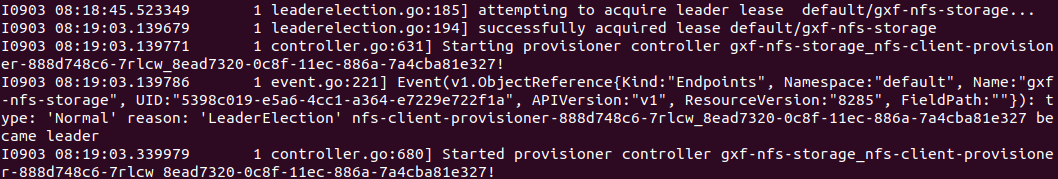

NFS provisioner startup

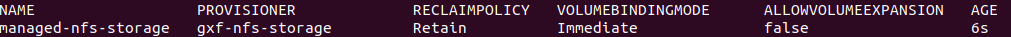

Create the StorageClass

# nfs-StorageClass.yaml
apiVersion: storage.k8s.io/v1
kind: StorageClass
metadata:
  name: managed-nfs-storage
provisioner: gxf-nfs-storage # Must match the PROVISIONER_NAME environment variable in the provisioner Deployment
reclaimPolicy: Retain # The default is Delete
parameters:
  archiveOnDelete: "true" # "false": the PV's folder on NFS is deleted together with the PV; "true": the folder is kept (archived)

- If reclaimPolicy is omitted from nfs-StorageClass.yaml (it then defaults to Delete) and archiveOnDelete is "false", deleting the PVC automatically deletes the corresponding PV, and the files in the NFS directory are deleted as well;

- If reclaimPolicy is Retain and archiveOnDelete is "true" in nfs-StorageClass.yaml, the PV must be deleted manually after the PVC is deleted, and the files on NFS are not deleted.
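The two cases above, plus the remaining combinations, can be summarized as a small Python decision function. It is a sketch based on our reading of the provisioner's behavior:

- With `Retain`, the provisioner is never asked to delete anything, so archiveOnDelete has no effect.
- With `Delete` plus `archiveOnDelete: "true"`, the directory is archived by renaming it with an `archived-` prefix.

The function name and return strings are hypothetical.

```python
from typing import Tuple


def after_pvc_deleted(reclaim_policy: str, archive_on_delete: bool) -> Tuple[str, str]:
    """Return (fate of the PV, fate of its directory on the NFS export) once the PVC is deleted."""
    if reclaim_policy == "Retain":
        # The PV only moves to the Released phase; the provisioner is never asked to delete,
        # so archiveOnDelete has no effect and an administrator cleans up by hand.
        return ("PV kept (Released), delete it manually", "directory kept unchanged")
    if reclaim_policy == "Delete":
        if archive_on_delete:
            return ("PV deleted automatically", "directory renamed with an 'archived-' prefix")
        return ("PV deleted automatically", "directory and files removed")
    raise ValueError(f"unsupported reclaimPolicy: {reclaim_policy!r}")
```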

# deploy
sudo kubectl apply -f nfs-StorageClass.yaml
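The `provisioner` field is how a StorageClass selects who serves its claims. An external provisioner only acts on PVCs whose StorageClass names it. A typo between `provisioner` and PROVISIONER_NAME therefore leaves the PVC Pending.

The Python sketch below shows that matching rule. The names are hypothetical, and the mapping is written by hand.

```python
from typing import Dict, Optional, Set


def responsible_provisioner(pvc_class: str, classes: Dict[str, str], running: Set[str]) -> Optional[str]:
    """Return the running provisioner that will serve a PVC, or None (the PVC stays Pending).

    classes: StorageClass name -> its `provisioner` field
    running: PROVISIONER_NAME values of the provisioners deployed in the cluster
    """
    wanted = classes.get(pvc_class)
    return wanted if wanted in running else None


if __name__ == "__main__":
    classes = {"managed-nfs-storage": "gxf-nfs-storage"}
    print(responsible_provisioner("managed-nfs-storage", classes, {"gxf-nfs-storage"}))  # gxf-nfs-storage
```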

Experiments

Experiment 1: Deployment

Deploy a Deployment with two replicas that mount the same directory.

Create the PVC

Set storageClassName to managed-nfs-storage, the StorageClass created above:

kind: PersistentVolumeClaim
apiVersion: v1
metadata:
  name: test-claim
spec:
  accessModes:
    - ReadWriteMany
  resources:
    requests:
      storage: 100Mi
  storageClassName: managed-nfs-storage

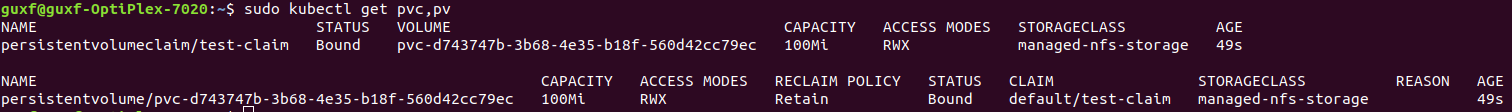

The PV and PVC are created.
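The request `storage: 100Mi` is a Kubernetes resource quantity. `Mi` is a binary suffix (2^20 bytes), whereas `M` would be decimal (10^6). The simplified Python converter below makes the difference concrete. It handles integers only, with no milli or exponent forms, and the helper is hypothetical.

According to the nfs-subdir-external-provisioner documentation, the requested size is not enforced as a quota on NFS. We assume the older image used here behaves the same way, so a Pod can write past 100Mi.

```python
import re

# Binary (power-of-two) and decimal suffixes used by Kubernetes resource quantities.
_SUFFIXES = {
    "Ki": 2**10, "Mi": 2**20, "Gi": 2**30, "Ti": 2**40, "Pi": 2**50, "Ei": 2**60,
    "k": 10**3, "M": 10**6, "G": 10**9, "T": 10**12, "P": 10**15, "E": 10**18,
    "": 1,
}


def quantity_to_bytes(q: str) -> int:
    """Convert a simple quantity such as '100Mi' or '1G' to bytes (integers only)."""
    m = re.fullmatch(r"(\d+)(Ki|Mi|Gi|Ti|Pi|Ei|k|M|G|T|P|E)?", q)
    if not m:
        raise ValueError(f"unsupported quantity: {q!r}")
    number, suffix = m.groups()
    return int(number) * _SUFFIXES[suffix or ""]


if __name__ == "__main__":
    print(quantity_to_bytes("100Mi"))  # 104857600
    print(quantity_to_bytes("100M"))   # 100000000
```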

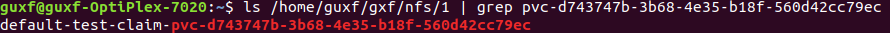

A folder (corresponding to the PV) is created in the NFS directory.
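The folder name is derived from the PVC and the PV. We assume the naming used by the upstream nfs-client provisioner: the folder is `<namespace>-<pvcName>-<pvName>` under NFS_PATH, and it is renamed to `archived-<same name>` when archived. On that assumption, the path can be computed as below. `pvc-1234` is a hypothetical PV name; real ones are `pvc-` followed by a UUID.

```python
import posixpath


def provisioned_dir(nfs_path: str, namespace: str, pvc_name: str, pv_name: str) -> str:
    """Directory created for a PV on the export: <NFS_PATH>/<namespace>-<pvcName>-<pvName>."""
    return posixpath.join(nfs_path, "-".join([namespace, pvc_name, pv_name]))


def archived_dir(dir_path: str) -> str:
    """Same directory after archiving: the last path component gets an 'archived-' prefix."""
    parent, base = posixpath.split(dir_path)
    return posixpath.join(parent, "archived-" + base)


if __name__ == "__main__":
    d = provisioned_dir("/home/nfs/1", "default", "test-claim", "pvc-1234")  # hypothetical PV name
    print(d)                # /home/nfs/1/default-test-claim-pvc-1234
    print(archived_dir(d))  # /home/nfs/1/archived-default-test-claim-pvc-1234
```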

Create the Deployment

apiVersion: apps/v1
kind: Deployment
metadata:
  name: test-deploy
  labels:
    app: test-deploy
  namespace: default # Must be the same namespace as the PVC test-claim
spec:
  replicas: 2
  strategy:
    type: Recreate
  selector:
    matchLabels:
      app: test-deploy
  template:
    metadata:
      labels:
        app: test-deploy
    spec:
      containers:
        - name: test-pod
          image: busybox:1.24
          command:
            - "/bin/sh"
          args:
            - "-c"
            # - "touch /mnt/SUCCESS3 && exit 0 || exit 1" # Exit after creating a SUCCESS file
            - touch /mnt/SUCCESS5; sleep 50000
          volumeMounts:
            - name: nfs-pvc
              mountPath: "/mnt"
              # subPath: test-pod-3 # Sub path (mounts only the test-pod-3 subdirectory of the volume)
      volumes:
        - name: nfs-pvc
          persistentVolumeClaim:
            claimName: test-claim # Must match the PVC name

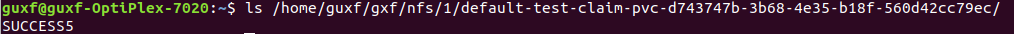

This Deployment creates a file named SUCCESS5 in the persistent volume.
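Both replicas can mount test-claim read-write at the same time because the PVC requests `ReadWriteMany`. The sketch below models what each access mode declares. The Kubernetes documentation notes that access modes are used to match claims to volumes and do not by themselves enforce write protection once a volume is mounted. The sketch therefore describes intent, not enforcement. The function and node names are hypothetical.

```python
from typing import Iterable


def declared_rw_mount_ok(access_mode: str, pod_nodes: Iterable[str]) -> bool:
    """Does `access_mode` declare that pods on these nodes may all mount the volume read-write?"""
    nodes = set(pod_nodes)
    if access_mode == "ReadWriteMany":
        return True             # read-write from any number of nodes
    if access_mode == "ReadWriteOnce":
        return len(nodes) <= 1  # read-write from one node (several pods on it are fine)
    if access_mode == "ReadOnlyMany":
        return False            # many nodes, but declared read-only
    raise ValueError(f"unknown access mode: {access_mode!r}")


if __name__ == "__main__":
    replicas_on = ["node-a", "node-b"]  # hypothetical: the two test-deploy replicas on different nodes
    print(declared_rw_mount_ok("ReadWriteMany", replicas_on))  # True
    print(declared_rw_mount_ok("ReadWriteOnce", replicas_on))  # False
```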

Experiment 2: StatefulSet

# test-sts-1.yaml
apiVersion: apps/v1
kind: StatefulSet
metadata:
  name: test-sts
  labels:
    k8s-app: test-sts
spec:
  serviceName: test-sts-svc
  replicas: 3
  selector:
    matchLabels:
      k8s-app: test-sts
  template:
    metadata:
      labels:
        k8s-app: test-sts
    spec:
      containers:
        - image: busybox:1.24
          name: test-pod
          command:
            - "/bin/sh"
          args:
            - "-c"
            # - "touch /mnt/SUCCESS3 && exit 0 || exit 1" # Exit after creating a SUCCESS file
            - touch /mnt/SUCCESS5; sleep 50000
          imagePullPolicy: IfNotPresent
          volumeMounts:
            - name: nfs-pvc
              mountPath: "/mnt"
  volumeClaimTemplates:
    - metadata:
        name: nfs-pvc
      spec:
        accessModes: ["ReadWriteMany"]
        storageClassName: managed-nfs-storage
        resources:
          requests:
            storage: 20Mi

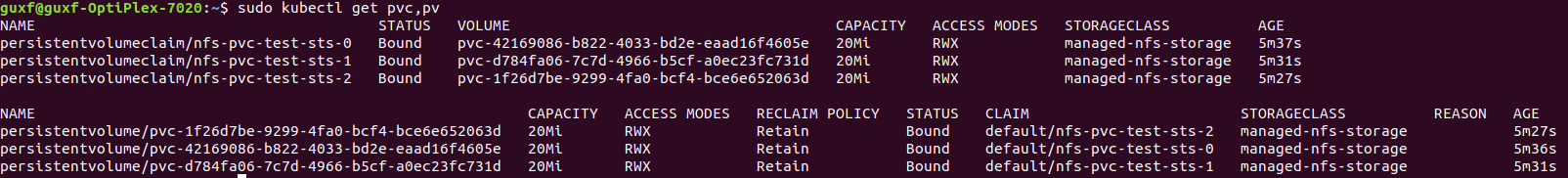

kubectl apply -f test-sts-1.yaml
kubectl get sts
NAME       READY   AGE
test-sts   3/3     4m46s

Here, volumeClaimTemplates specifies the StorageClass and the required storage capacity directly. No PVC has to be created in advance: K8s creates the corresponding PVCs and PVs itself. Because this is a stateful application with three replicas, three PVCs and three corresponding PVs are created.
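The three PVCs are named `<volumeClaimTemplate name>-<StatefulSet name>-<ordinal>`. Here they are nfs-pvc-test-sts-0, nfs-pvc-test-sts-1 and nfs-pvc-test-sts-2, and each gets its own PV and NFS folder. A one-function Python sketch of the naming (the helper is hypothetical):

```python
from typing import List


def sts_pvc_names(template_name: str, sts_name: str, replicas: int) -> List[str]:
    """PVC names a StatefulSet creates from one volumeClaimTemplate, one per ordinal."""
    return [f"{template_name}-{sts_name}-{ordinal}" for ordinal in range(replicas)]


if __name__ == "__main__":
    print(sts_pvc_names("nfs-pvc", "test-sts", 3))
    # ['nfs-pvc-test-sts-0', 'nfs-pvc-test-sts-1', 'nfs-pvc-test-sts-2']
```

By default, deleting or scaling down the StatefulSet does not delete these PVCs. A re-created test-sts-0 therefore binds to the same nfs-pvc-test-sts-0 and sees its earlier data.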
