1, Development preparation

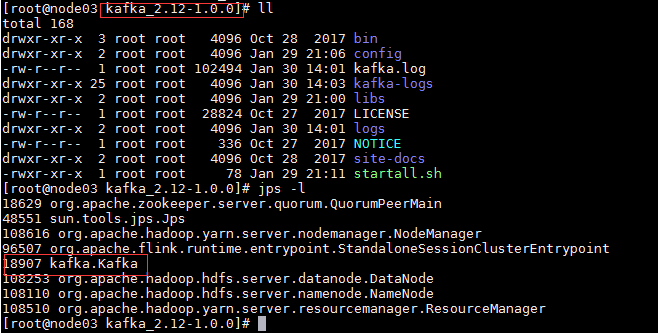

First, set up a Kafka (version 1.0.0) environment. The development language used here is Java, and the build tool is Maven.

The Maven configuration (pom.xml) is as follows:

    <project xmlns="http://maven.apache.org/POM/4.0.0" xmlns:xsi="http://www.w3.org/2001/XMLSchema-instance"
        xsi:schemaLocation="http://maven.apache.org/POM/4.0.0 http://maven.apache.org/xsd/maven-4.0.0.xsd">
      <modelVersion>4.0.0</modelVersion>
      <groupId>1.0.0</groupId>
      <artifactId>kafka-consumerExample</artifactId>
      <version>0.0.1-SNAPSHOT</version>
      <packaging>jar</packaging>
      <name>kafka-consumerExample</name>
      <url>http://maven.apache.org</url>
      <properties>
        <project.build.sourceEncoding>UTF-8</project.build.sourceEncoding>
        <java.version>1.7</java.version>
        <slf4j.version>1.7.25</slf4j.version>
        <logback.version>1.2.3</logback.version>
        <kafka.version>1.0.0</kafka.version>
      </properties>
      <dependencies>
        <dependency>
          <groupId>junit</groupId>
          <artifactId>junit</artifactId>
          <version>3.8.1</version>
          <scope>test</scope>
        </dependency>
        <dependency>
          <groupId>org.slf4j</groupId>
          <artifactId>slf4j-api</artifactId>
          <version>${slf4j.version}</version>
        </dependency>
        <dependency>
          <groupId>ch.qos.logback</groupId>
          <artifactId>logback-classic</artifactId>
          <version>${logback.version}</version>
        </dependency>
        <dependency>
          <groupId>ch.qos.logback</groupId>
          <artifactId>logback-core</artifactId>
          <version>${logback.version}</version>
        </dependency>
        <!-- kafka -->
        <dependency>
          <groupId>org.apache.kafka</groupId>
          <artifactId>kafka_2.12</artifactId>
          <version>${kafka.version}</version>
          <exclusions>
            <exclusion>
              <groupId>org.apache.zookeeper</groupId>
              <artifactId>zookeeper</artifactId>
            </exclusion>
            <exclusion>
              <groupId>org.slf4j</groupId>
              <artifactId>slf4j-log4j12</artifactId>
            </exclusion>
            <exclusion>
              <groupId>log4j</groupId>
              <artifactId>log4j</artifactId>
            </exclusion>
          </exclusions>
          <scope>provided</scope>
        </dependency>
        <dependency>
          <groupId>org.apache.kafka</groupId>
          <artifactId>kafka-clients</artifactId>
          <version>${kafka.version}</version>
        </dependency>
        <dependency>
          <groupId>org.apache.kafka</groupId>
          <artifactId>kafka-streams</artifactId>
          <version>${kafka.version}</version>
        </dependency>
      </dependencies>
    </project>

2, Kafka Producer

    package com.lxk.kafka.producer;

    import java.util.Properties;

    import org.apache.kafka.clients.producer.KafkaProducer;
    import org.apache.kafka.clients.producer.ProducerRecord;
    import org.apache.kafka.common.serialization.StringSerializer;

    public class KafkaProducerTest implements Runnable {

        private final KafkaProducer<String, String> producer;
        private final String topic;

        public KafkaProducerTest(String topicName) {
            Properties props = new Properties();
            props.put("bootstrap.servers", "192.168.18.103:9092,192.168.18.104:9092,192.168.18.105:9092");
            // acks=0: the producer does not wait for any response from Kafka.
            // acks=1: the leader writes the message to its local log and responds without waiting for the followers.
            // acks=all: the leader waits until all in-sync followers have replicated the message. The message is not
            // lost as long as at least one in-sync replica stays alive. This is the strongest durability guarantee.
            props.put("acks", "all");
            // If set to a value greater than 0, the client resends a message whose send failed.
            props.put("retries", 0);
            // When multiple messages are sent to the same partition, the producer batches them into fewer requests.
            // This improves performance on both the client and the server.
            props.put("batch.size", 16384);
            props.put("key.serializer", StringSerializer.class.getName());
            props.put("value.serializer", StringSerializer.class.getName());
            this.producer = new KafkaProducer<String, String>(props);
            this.topic = topicName;
        }

        @Override
        public void run() {
            int messageNo = 1;
            try {
                for (;;) {
                    String messageStr = "Hello, this is message number " + messageNo;
                    producer.send(new ProducerRecord<String, String>(topic, "Message", messageStr));
                    // Print every 10th message
                    if (messageNo % 10 == 0) {
                        System.out.println("Message sent: " + messageStr);
                    }
                    // Exit after producing 100 messages
                    if (messageNo % 100 == 0) {
                        System.out.println("Successfully sent " + messageNo + " messages");
                        break;
                    }
                    messageNo++;
                    // Utils.sleep(1);
                }
            } catch (Exception e) {
                e.printStackTrace();
            } finally {
                producer.close();
            }
        }

        public static void main(String[] args) {
            KafkaProducerTest test = new KafkaProducerTest("GMALL_STARTUP");
            Thread thread = new Thread(test);
            thread.start();
        }
    }

output:

14:10:43.436 [main] INFO org.apache.kafka.clients.producer.ProducerConfig - ProducerConfig values:

acks = all

batch.size = 16384

bootstrap.servers = [192.168.18.103:9092, 192.168.18.104:9092, 192.168.18.105:9092]

buffer.memory = 33554432

client.id =

compression.type = none

connections.max.idle.ms = 540000

enable.idempotence = false

interceptor.classes = null

key.serializer = class org.apache.kafka.common.serialization.StringSerializer

linger.ms = 0

max.block.ms = 60000

max.in.flight.requests.per.connection = 5

max.request.size = 1048576

metadata.max.age.ms = 300000

metric.reporters = []

metrics.num.samples = 2

metrics.recording.level = INFO

metrics.sample.window.ms = 30000

partitioner.class = class org.apache.kafka.clients.producer.internals.DefaultPartitioner

receive.buffer.bytes = 32768

reconnect.backoff.max.ms = 1000

reconnect.backoff.ms = 50

request.timeout.ms = 30000

retries = 0

retry.backoff.ms = 100

sasl.jaas.config = null

sasl.kerberos.kinit.cmd = /usr/bin/kinit

sasl.kerberos.min.time.before.relogin = 60000

sasl.kerberos.service.name = null

sasl.kerberos.ticket.renew.jitter = 0.05

sasl.kerberos.ticket.renew.window.factor = 0.8

sasl.mechanism = GSSAPI

security.protocol = PLAINTEXT

send.buffer.bytes = 131072

ssl.cipher.suites = null

ssl.enabled.protocols = [TLSv1.2, TLSv1.1, TLSv1]

ssl.endpoint.identification.algorithm = null

ssl.key.password = null

ssl.keymanager.algorithm = SunX509

ssl.keystore.location = null

ssl.keystore.password = null

ssl.keystore.type = JKS

ssl.protocol = TLS

ssl.provider = null

ssl.secure.random.implementation = null

ssl.trustmanager.algorithm = PKIX

ssl.truststore.location = null

ssl.truststore.password = null

ssl.truststore.type = JKS

transaction.timeout.ms = 60000

transactional.id = null

value.serializer = class org.apache.kafka.common.serialization.StringSerializer

Message sent: Hello, this is message number 10

14:10:44.113 [kafka-producer-network-thread | producer-1] DEBUG org.apache.kafka.clients.NetworkClient - [Producer clientId=producer-1] Initiating connection to node node05:9092 (id: 2 rack: null)

Message sent: Hello, this is message number 20

Message sent: Hello, this is message number 30

Message sent: Hello, this is message number 40

Message sent: Hello, this is message number 50

Message sent: Hello, this is message number 60

Message sent: Hello, this is message number 70

Message sent: Hello, this is message number 80

Message sent: Hello, this is message number 90

Message sent: Hello, this is message number 100

Successfully sent 100 messages

14:10:44.116 [Thread-1] INFO org.apache.kafka.clients.producer.KafkaProducer - [Producer clientId=producer-1] Closing the Kafka producer with timeoutMillis = 9223372036854775807 ms.

Configuration description

bootstrap.servers: the addresses of the Kafka brokers.

acks: the acknowledgment mechanism for messages. The default value is 1.

- acks=0: the producer does not wait for any response from Kafka.

- acks=1: the leader writes the message to its local log and responds without waiting for the other machines in the cluster to acknowledge.

- acks=all: the leader waits for all in-sync followers to finish replicating. Messages are then not lost as long as at least one in-sync replica stays alive. This is the strongest durability guarantee.

retries: if set to a value greater than 0, the client resends a message when sending fails.

batch.size: when multiple messages are to be sent to the same partition, the producer tries to batch them into fewer network requests. This improves performance on both the client and the server.

key.serializer: the serializer class for keys. It has no default value; this example uses org.apache.kafka.common.serialization.StringSerializer.

value.serializer: the serializer class for values. It has no default value; this example uses org.apache.kafka.common.serialization.StringSerializer.

...

There are more configuration options; see the official documentation, as they are not explained here.
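
The settings described above can also be collected in a small helper. The following is only a minimal sketch: the class name `ProducerConfigSketch` and the method `producerProps` are hypothetical, and it uses nothing but `java.util.Properties`, so it runs without the Kafka client on the classpath. All values are given as strings, which the Kafka client parses into the expected types.

```java
import java.util.Properties;

// Sketch only: gathers the producer settings discussed above in one place.
// ProducerConfigSketch and producerProps are hypothetical names.
final class ProducerConfigSketch {

    static Properties producerProps(String bootstrapServers) {
        Properties props = new Properties();
        props.setProperty("bootstrap.servers", bootstrapServers);
        // Wait for all in-sync replicas to acknowledge each write.
        props.setProperty("acks", "all");
        // Do not resend failed messages (the value used in this article).
        props.setProperty("retries", "0");
        // Batch size in bytes for records going to the same partition.
        props.setProperty("batch.size", "16384");
        props.setProperty("key.serializer",
                "org.apache.kafka.common.serialization.StringSerializer");
        props.setProperty("value.serializer",
                "org.apache.kafka.common.serialization.StringSerializer");
        return props;
    }

    public static void main(String[] args) {
        Properties props = producerProps("192.168.18.103:9092");
        // Print every setting that would be handed to the producer.
        for (String name : props.stringPropertyNames()) {
            System.out.println(name + " = " + props.getProperty(name));
        }
    }
}
```

With kafka-clients available, the returned object could be passed to `new KafkaProducer<String, String>(props)` in the same way as in the listing above.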

Once the Kafka configuration is in place, we can start producing data. Sending a message only takes the following line:

producer.send(new ProducerRecord<String, String>(topic, key, value));

topic: the name of the topic (message queue). It can be created in the Kafka service beforehand; if it does not exist, it is created automatically, provided the broker allows automatic topic creation (auto.create.topics.enable, which is enabled by default).

key: the key of the record. Key and value are paired much like an entry in a Map; with the default partitioner, records with the same key are sent to the same partition.

value: the data to be sent. Here it is of type String.
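
To illustrate why the key matters, here is a minimal sketch of key-based partition selection. It rests on an assumption: `Arrays.hashCode` over the UTF-8 bytes is used as a stand-in hash, whereas Kafka's default partitioner applies a murmur2 hash to the serialized key, so the partition numbers printed here will generally differ from the real ones. The class and method names are hypothetical.

```java
import java.nio.charset.StandardCharsets;
import java.util.Arrays;

// Sketch only: shows that a fixed key always maps to the same partition.
// The hash is a stand-in, NOT the murmur2 hash that Kafka actually uses.
final class KeyPartitionSketch {

    static int partitionFor(String key, int numPartitions) {
        byte[] keyBytes = key.getBytes(StandardCharsets.UTF_8);
        int hash = Arrays.hashCode(keyBytes);
        // Clear the sign bit so the result of the modulo is never negative.
        return (hash & 0x7fffffff) % numPartitions;
    }

    public static void main(String[] args) {
        int numPartitions = 3; // the topic in this article has partitions 0, 1 and 2
        // The same key yields the same partition on every call.
        System.out.println(partitionFor("Message", numPartitions));
        System.out.println(partitionFor("Message", numPartitions));
        // A different key may or may not land in a different partition.
        System.out.println(partitionFor("AnotherKey", numPartitions));
    }
}
```

Every record in this article is sent with the key "Message", which is consistent with the consumer output later on, where all data is read from the single partition GMALL_STARTUP-2.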

Once the producer is written, it can start producing. The message sent here is:

String messageStr = "Hello, this is message number " + messageNo;

The program exits after sending 100 messages.

3, Kafka Consumer

    package com.lxk.kafka.consumer;

    import java.util.ArrayList;
    import java.util.Arrays;
    import java.util.List;
    import java.util.Properties;

    import org.apache.kafka.clients.consumer.ConsumerRecord;
    import org.apache.kafka.clients.consumer.ConsumerRecords;
    import org.apache.kafka.clients.consumer.KafkaConsumer;
    import org.apache.kafka.common.serialization.StringDeserializer;

    public class KafkaConsumerTest implements Runnable {

        private KafkaConsumer<String, String> consumer;
        private ConsumerRecords<String, String> msgList;
        private String topic;
        private static final String GROUPID = "groupE4";

        public KafkaConsumerTest(String topicName) {
            this.topic = topicName;
            init();
        }

        @Override
        public void run() {
            System.out.println("---------Start consumption---------");
            int messageNo = 1;
            List<String> list = new ArrayList<String>();
            List<Long> list2 = new ArrayList<Long>();
            try {
                for (;;) {
                    msgList = consumer.poll(100);
                    if (null != msgList && msgList.count() > 0) {
                        for (ConsumerRecord<String, String> record : msgList) {
                            // Print every 10th record
                            if (messageNo % 10 == 0) {
                                System.out.println(messageNo + "=======receive: key = " + record.key() + ", value = "
                                        + record.value() + " offset===" + record.offset());
                            }
                            list.add(record.value());
                            list2.add(record.offset());
                            messageNo++;
                        }
                        if (list.size() == 50) {
                            // Commit the offsets manually
                            consumer.commitSync();
                            System.out.println("Successfully committed " + list.size() + " records, last offset: " + list2.get(49));
                        } else if (list.size() > 50) {
                            // Recreate the consumer; it resumes from the last committed offset
                            consumer.close();
                            init();
                            list.clear();
                            list2.clear();
                        }
                    } else {
                        Thread.sleep(1000);
                    }
                }
            } catch (InterruptedException e) {
                e.printStackTrace();
            } finally {
                consumer.close();
            }
        }

        private void init() {
            Properties props = new Properties();
            // Addresses of the Kafka brokers
            props.put("bootstrap.servers", "node03:9092,node04:9092,node05:9092");
            // Consumer group name; a different group can consume the same data again
            props.put("group.id", GROUPID);
            // Whether to commit offsets automatically
            props.put("enable.auto.commit", "false");
            // Session timeout in milliseconds
            props.put("session.timeout.ms", "30000");
            // Maximum number of records returned by a single poll
            props.put("max.poll.records", 10);
            // earliest
            // If a partition has a committed offset, consumption starts from it; otherwise it starts from the beginning
            // latest
            // If a partition has a committed offset, consumption starts from it; otherwise only newly produced data is consumed
            // none
            // If every partition has a committed offset, consumption starts from it; if any partition has none, an exception is thrown
            props.put("auto.offset.reset", "earliest");
            // Deserializers
            props.put("key.deserializer", StringDeserializer.class.getName());
            props.put("value.deserializer", StringDeserializer.class.getName());
            this.consumer = new KafkaConsumer<String, String>(props);
            // Subscribe to the topic list
            this.consumer.subscribe(Arrays.asList(topic));
            System.out.println("Initialization!");
        }

        public static void main(String[] args) {
            KafkaConsumerTest test1 = new KafkaConsumerTest("GMALL_STARTUP");
            Thread thread1 = new Thread(test1);
            thread1.start();
        }
    }

output:

14:13:41.119 [Thread-1] INFO org.apache.kafka.clients.consumer.ConsumerConfig - ConsumerConfig values:

auto.commit.interval.ms = 5000

auto.offset.reset = earliest

bootstrap.servers = [node03:9092, node04:9092, node05:9092]

check.crcs = true

client.id =

connections.max.idle.ms = 540000

enable.auto.commit = false

exclude.internal.topics = true

fetch.max.bytes = 52428800

fetch.max.wait.ms = 500

fetch.min.bytes = 1

group.id = groupE4

heartbeat.interval.ms = 3000

interceptor.classes = null

internal.leave.group.on.close = true

isolation.level = read_uncommitted

key.deserializer = class org.apache.kafka.common.serialization.StringDeserializer

max.partition.fetch.bytes = 1048576

max.poll.interval.ms = 300000

max.poll.records = 10

metadata.max.age.ms = 300000

metric.reporters = []

metrics.num.samples = 2

metrics.recording.level = INFO

metrics.sample.window.ms = 30000

partition.assignment.strategy = [class org.apache.kafka.clients.consumer.RangeAssignor]

receive.buffer.bytes = 65536

reconnect.backoff.max.ms = 1000

reconnect.backoff.ms = 50

request.timeout.ms = 305000

retry.backoff.ms = 100

sasl.jaas.config = null

sasl.kerberos.kinit.cmd = /usr/bin/kinit

sasl.kerberos.min.time.before.relogin = 60000

sasl.kerberos.service.name = null

sasl.kerberos.ticket.renew.jitter = 0.05

sasl.kerberos.ticket.renew.window.factor = 0.8

sasl.mechanism = GSSAPI

security.protocol = PLAINTEXT

send.buffer.bytes = 131072

session.timeout.ms = 30000

ssl.cipher.suites = null

ssl.enabled.protocols = [TLSv1.2, TLSv1.1, TLSv1]

ssl.endpoint.identification.algorithm = null

ssl.key.password = null

ssl.keymanager.algorithm = SunX509

ssl.keystore.location = null

ssl.keystore.password = null

ssl.keystore.type = JKS

ssl.protocol = TLS

ssl.provider = null

ssl.secure.random.implementation = null

ssl.trustmanager.algorithm = PKIX

ssl.truststore.location = null

ssl.truststore.password = null

ssl.truststore.type = JKS

value.deserializer = class org.apache.kafka.common.serialization.StringDeserializer

14:13:41.123 [Thread-1] INFO org.apache.kafka.common.utils.AppInfoParser - Kafka version : 1.0.0

14:13:41.123 [Thread-1] INFO org.apache.kafka.common.utils.AppInfoParser - Kafka commitId : aaa7af6d4a11b29d

14:13:41.123 [Thread-1] DEBUG org.apache.kafka.clients.consumer.KafkaConsumer - [Consumer clientId=consumer-5, groupId=groupE4] Kafka consumer initialized

14:13:41.123 [Thread-1] DEBUG org.apache.kafka.clients.consumer.KafkaConsumer - [Consumer clientId=consumer-5, groupId=groupE4] Subscribed to topic(s): GMALL_STARTUP

Initialization!

14:13:41.157 [Thread-1] DEBUG org.apache.kafka.clients.consumer.internals.Fetcher - [Consumer clientId=consumer-5, groupId=groupE4] Fetch READ_UNCOMMITTED at offset 800 for partition GMALL_STARTUP-2 returned fetch data (error=NONE, highWaterMark=900, lastStableOffset = -1, logStartOffset = 0, abortedTransactions = null, recordsSizeInBytes=4389)

14:13:41.158 [Thread-1] DEBUG org.apache.kafka.common.metrics.Metrics - Added sensor with name GMALL_STARTUP-2.records-lag

250=======receive: key = Message, value = Hello, this is message number 10 offset===809

260=======receive: key = Message, value = Hello, this is message number 20 offset===819

270=======receive: key = Message, value = Hello, this is message number 30 offset===829

280=======receive: key = Message, value = Hello, this is message number 40 offset===839

290=======receive: key = Message, value = Hello, this is message number 50 offset===849

14:13:41.162 [Thread-1] DEBUG org.apache.kafka.clients.consumer.internals.ConsumerCoordinator - [Consumer clientId=consumer-5, groupId=groupE4] Committed offset 0 for partition GMALL_STARTUP-0

14:13:41.162 [Thread-1] DEBUG org.apache.kafka.clients.consumer.internals.ConsumerCoordinator - [Consumer clientId=consumer-5, groupId=groupE4] Committed offset 0 for partition GMALL_STARTUP-1

14:13:41.162 [Thread-1] DEBUG org.apache.kafka.clients.consumer.internals.ConsumerCoordinator - [Consumer clientId=consumer-5, groupId=groupE4] Committed offset 850 for partition GMALL_STARTUP-2

Successfully committed 50 records, last offset: 849

300=======receive: key = Message, value = Hello, this is message number 60 offset===859

14:13:41.209 [Thread-1] DEBUG org.apache.kafka.clients.consumer.internals.Fetcher - [Consumer clientId=consumer-6, groupId=groupE4] Fetch READ_UNCOMMITTED at offset 850 for partition GMALL_STARTUP-2 returned fetch data (error=NONE, highWaterMark=900, lastStableOffset = -1, logStartOffset = 0, abortedTransactions = null, recordsSizeInBytes=4389)

14:13:41.209 [Thread-1] DEBUG org.apache.kafka.common.metrics.Metrics - Added sensor with name GMALL_STARTUP-2.records-lag

310=======receive: key = Message, value = Hello, this is message number 60 offset===859

320=======receive: key = Message, value = Hello, this is message number 70 offset===869

330=======receive: key = Message, value = Hello, this is message number 80 offset===879

340=======receive: key = Message, value = Hello, this is message number 90 offset===889

350=======receive: key = Message, value = Hello, this is message number 100 offset===899

14:13:41.216 [Thread-1] DEBUG org.apache.kafka.clients.consumer.internals.ConsumerCoordinator - [Consumer clientId=consumer-6, groupId=groupE4] Committed offset 0 for partition GMALL_STARTUP-0

14:13:41.217 [Thread-1] DEBUG org.apache.kafka.clients.consumer.internals.ConsumerCoordinator - [Consumer clientId=consumer-6, groupId=groupE4] Committed offset 0 for partition GMALL_STARTUP-1

14:13:41.217 [Thread-1] DEBUG org.apache.kafka.clients.consumer.internals.ConsumerCoordinator - [Consumer clientId=consumer-6, groupId=groupE4] Committed offset 900 for partition GMALL_STARTUP-2

Successfully committed 50 records, last offset: 899

Configuration description

Consumption deserves the most attention; after all, most of the time we mainly consume data.

The configuration of the Kafka consumer is as follows:

bootstrap.servers: the addresses of the Kafka brokers.

group.id: the consumer group name. Different groups consume the same data independently. For example, if you have consumed 1000 messages with group A and want to consume those 1000 messages again without producing them again, you only need to change the group name (with auto.offset.reset set to earliest).

enable.auto.commit: whether to commit offsets automatically. The default value is true.

auto.commit.interval.ms: the interval, in milliseconds, at which offsets are committed automatically when enable.auto.commit is true. The default value is 5000.

session.timeout.ms: the timeout used to detect consumer failures. If the broker receives no heartbeat from the consumer within this time, the consumer is removed from the group.

max.poll.records: the maximum number of records returned by a single poll.

auto.offset.reset: where to start consuming when there is no committed offset. The default value is latest.

- earliest: if a partition has a committed offset, consumption starts from the committed offset; if not, consumption starts from the beginning.

- latest: if a partition has a committed offset, consumption starts from the committed offset; if not, only data newly produced to the partition is consumed.

- none: if every partition of the topic has a committed offset, consumption starts from the committed offset; if any partition has no committed offset, an exception is thrown.

key.deserializer: the deserializer class for keys. It has no default value; this example uses org.apache.kafka.common.serialization.StringDeserializer.

value.deserializer: the deserializer class for values. It has no default value; this example uses org.apache.kafka.common.serialization.StringDeserializer.
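
The three auto.offset.reset rules described above can be sketched as a small decision function. This is an illustrative model only, not Kafka's implementation: the class `OffsetResetSketch`, the method `startingOffset` and its parameters are hypothetical, and `IllegalStateException` stands in for the exception the real client throws when the policy is none. The numbers in `main` are taken from the log above (logStartOffset = 0, highWaterMark = 900, committed offset 850).

```java
// Sketch only: models where a consumer starts reading in one partition.
final class OffsetResetSketch {

    // committed is null when the group has no committed offset for the partition.
    static long startingOffset(Long committed, String policy, long logStart, long logEnd) {
        if (committed != null) {
            // A committed offset always wins, whatever the policy is.
            return committed;
        }
        if ("earliest".equals(policy)) {
            return logStart;
        }
        if ("latest".equals(policy)) {
            return logEnd;
        }
        if ("none".equals(policy)) {
            // Stand-in for the exception thrown by the real client.
            throw new IllegalStateException("no committed offset and auto.offset.reset=none");
        }
        throw new IllegalArgumentException("unknown policy: " + policy);
    }

    public static void main(String[] args) {
        System.out.println(startingOffset(850L, "earliest", 0L, 900L)); // prints 850
        System.out.println(startingOffset(null, "earliest", 0L, 900L)); // prints 0
        System.out.println(startingOffset(null, "latest", 0L, 900L));   // prints 900
    }
}
```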

Since offsets are committed manually here (enable.auto.commit is false), the consumption code is as follows:

We first need to subscribe to a topic, that is, specify which topic to consume:

consumer.subscribe(Arrays.asList(topic));

After subscribing, we pull data from Kafka:

ConsumerRecords<String, String> msgList = consumer.poll(100);

Consumption is generally done by polling in a loop. Here we use for(;;) to poll continuously and commit the offsets manually after every 50 records.

Note the following about committing offsets (see also: Details on Kafka's consumer manual submission):

offset: the position of a consumer group within each partition of a Kafka topic.

Simply put, each message in a partition has an offset. When a consumer commits after consuming data, the committed offset is that of the last consumed message plus one, and the next consumption starts from the committed offset.

For example, if a topic contains 100 messages and I consume 50 of them and commit, the last consumed offset is 49 (offsets start from 0), the offset recorded by the Kafka server is 50, and the next consumption starts from offset 50. This matches the log above: after the record at offset 849 is consumed, the committed offset is 850.
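
The commit behaviour described above can be simulated without a broker. The sketch below is a simplified model under stated assumptions: a single partition, a hypothetical class `CommitOffsetSketch`, and plain counters in place of the real consumer. It also reproduces what the log above shows, where records read after the last commit are delivered again once the consumer is recreated.

```java
import java.util.ArrayList;
import java.util.List;

// Sketch only: a single-partition model of position and committed offset.
final class CommitOffsetSketch {

    private long committed = 0; // offset the group resumes from after a restart
    private long position = 0;  // next offset the running consumer will read

    // Returns up to maxRecords offsets, like a poll limited by max.poll.records.
    List<Long> poll(int maxRecords, long logEnd) {
        List<Long> offsets = new ArrayList<Long>();
        while (offsets.size() < maxRecords && position < logEnd) {
            offsets.add(position);
            position++;
        }
        return offsets;
    }

    // Stores last consumed offset + 1, which is the current position.
    void commit() {
        committed = position;
    }

    // A recreated consumer starts again from the committed offset.
    void restart() {
        position = committed;
    }

    long committed() {
        return committed;
    }

    long position() {
        return position;
    }

    public static void main(String[] args) {
        CommitOffsetSketch consumer = new CommitOffsetSketch();
        long logEnd = 100; // the topic holds offsets 0..99
        for (int i = 0; i < 5; i++) {
            consumer.poll(10, logEnd); // consumes offsets 0..49 in five polls
        }
        consumer.commit();
        System.out.println("committed = " + consumer.committed()); // prints committed = 50
        consumer.poll(10, logEnd); // offsets 50..59 are read but never committed
        consumer.restart();
        System.out.println("resumes at = " + consumer.poll(10, logEnd).get(0)); // prints resumes at = 50
    }
}
```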

Summary

Developing a simple Kafka program requires the following steps:

- Set up the Kafka server and start it successfully.

- Get the Kafka service information and configure it in the code.

- After the configuration is completed, listen for the messages produced to the Kafka topic.

- Process the received data in the business logic.

For an introduction to Kafka, see the official documentation: http://kafka.apache.org/intro