In the previous article, we covered Kafka terminology and topic-related concepts; for details, see Kafka Learning Notes (2): Understanding Kafka Cluster and Topic. Next we'll use Java to call Kafka's API. Today we'll first look at what we can do with the Producer.

Add Dependency

First, add the following two Maven dependencies:

    <dependency>
        <groupId>org.apache.kafka</groupId>
        <artifactId>kafka-clients</artifactId>
        <version>0.11.0.0</version>
    </dependency>
    <dependency>
        <groupId>org.apache.kafka</groupId>
        <artifactId>kafka_2.10</artifactId>
        <version>0.10.2.1</version>
    </dependency>

Fundamentals

Here a producer or consumer is simply a program written by a developer. A program that sends messages to Kafka is a producer; a program that reads messages from Kafka is a consumer. Kafka provides Java client APIs that developers can use to build applications that interact with Kafka.

The producer program creates new messages and sends them to the Kafka cluster. In other messaging systems, producers may be called publishers or writers. Typically, a producer sends a new message to a specific topic. By default, the producer does not care which partition the message goes to, and it distributes messages evenly across all partitions.

In some cases, the producer sends messages to a specific partition (you can map messages to a partition by using message keys, whose hash values determine the partition, or by using a custom partitioner). When developing a program that sends messages to Kafka, the main considerations are:

- Is every message important, or is some message loss acceptable?

- Can I accept occasional duplicate messages?

- Are there strict requirements for message latency and throughput?

For example, a banking transaction system has strict requirements: no message may be lost or received twice, message delay must be under 500 milliseconds, and throughput must reach millions of messages per second (the same holds for any scenario involving money). In another scenario, such as storing website click events in Kafka, losing or duplicating some messages is acceptable and latency can be high, as long as the user experience is not affected (similarly, the common case of sending a text message after an order is created can tolerate some delay). Different scenarios and requirements affect how the producer API is used and configured.
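As a rough illustration of how such requirements can translate into configuration, the sketch below builds two Properties objects, one leaning toward durability and one toward throughput. The class and method names (ProducerProfiles, reliable, highThroughput) are made up for this example, and the concrete values are assumptions rather than recommendations. The keys acks, retries, enable.idempotence, linger.ms, batch.size and compression.type are producer settings described in the official Kafka documentation.

```java
import java.util.Properties;

public class ProducerProfiles {

    // Settings shared by both profiles; localhost:9092 matches the experiments in this article.
    private static Properties base() {
        Properties props = new Properties();
        props.put("bootstrap.servers", "localhost:9092");
        props.put("key.serializer", "org.apache.kafka.common.serialization.StringSerializer");
        props.put("value.serializer", "org.apache.kafka.common.serialization.StringSerializer");
        return props;
    }

    // Leans toward "no loss, no duplicates", as in the banking scenario.
    public static Properties reliable() {
        Properties props = base();
        props.put("acks", "all");                // wait until all in-sync replicas have the message
        props.put("retries", "5");               // assumed value, tune for your environment
        props.put("enable.idempotence", "true"); // avoid duplicates caused by retries
        return props;
    }

    // Leans toward throughput, as in the click-event scenario.
    public static Properties highThroughput() {
        Properties props = base();
        props.put("acks", "1");                  // only the partition leader acknowledges
        props.put("linger.ms", "20");            // assumed value: wait briefly to fill batches
        props.put("batch.size", "65536");        // assumed value: larger batches, in bytes
        props.put("compression.type", "gzip");
        return props;
    }

    public static void main(String[] args) {
        System.out.println("reliable: " + reliable());
        System.out.println("highThroughput: " + highThroughput());
    }
}
```

Either Properties object could then be passed to the KafkaProducer constructor in place of the minimal configuration used in the experiments.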

Develop Producer Program

Before starting the experiment, remember to start the Kafka cluster. See Experiment 1. We will use the Eclipse development tool to develop the Producer program and send a message to Kafka.

First you need to create the KafkaProducer object. The KafkaProducer object requires three essential attributes: bootstrap.servers, key.serializer, value.serializer.

Write code in the main method of the MyFirstProducer class:

    package com.bupt.kafka.producer;

    import java.util.Properties;

    import org.apache.kafka.clients.producer.KafkaProducer;
    import org.apache.kafka.clients.producer.ProducerRecord;

    /**
     * @author melooo
     * @date 2021/10/13 8:53 PM
     */
    public class MyFirstProducer {

        public static void main(String[] args) {
            Properties kafkaProps = new Properties();
            kafkaProps.put("bootstrap.servers", "localhost:9092");
            // A message sent to the Kafka cluster carries a key in addition to its value; the key is used to spread messages across partitions.
            // The message key is a String, so use the String serializer
            kafkaProps.put("key.serializer", "org.apache.kafka.common.serialization.StringSerializer");
            // The message value is a String, so use the String serializer
            kafkaProps.put("value.serializer", "org.apache.kafka.common.serialization.StringSerializer");
            // Create a KafkaProducer object from the Properties object created above
            KafkaProducer<String, String> producer = new KafkaProducer<String, String>(kafkaProps);
            /*
             * Create the message object with the ProducerRecord<String, String>(String topic, String key, String value) constructor.
             * The constructor accepts three parameters:
             * topic--tells the KafkaProducer which topic the message is sent to;
             * key--the key of the message; its type must match the key.serializer set earlier
             * value--the value of the message, i.e. the message content; its type must match the value.serializer set earlier
             */
            ProducerRecord<String, String> record = new ProducerRecord<>("mySecondTopic", "messageKey", "hello kafka");
            try {
                // Send the ProducerRecord created above to the Kafka cluster.
                // Errors may occur while sending, such as a failure to connect to the cluster, so catch exceptions here.
                producer.send(record);
            } catch (Exception e) {
                e.printStackTrace();
            } finally {
                // Close the producer in all cases; close() also waits for pending sends to complete
                producer.close();
            }
        }
    }

Start the command line terminal and run the following commands in turn:

    # Enter the Kafka bin directory
    cd /usr/local/Cellar/kafka/2.6.0/bin
    # Start a command-line consumer to consume the messages that the Java program above sends to mySecondTopic
    ./kafka-console-consumer --bootstrap-server localhost:9092 --topic mySecondTopic

Run the MyFirstProducer class and look at the terminal where kafka-console-consumer is running. The sent message is printed there. The result is shown in the following figure:

As you can see, the behavior of the producer object is controlled by setting different parameters on the Properties object. The official Kafka documentation lists all the configuration parameters. The snippet above instantiates a producer and then calls the send() method to send the message.

There are three main ways to send messages:

- Fire-and-forget: Send the message to the broker without checking whether it arrived. In most cases the message arrives successfully, because Kafka is highly available and the producer automatically retries sending. Messages can still be lost, however. This is the method used in the experiment above.

- Synchronous Send: The send() method returns a Future object. Calling the blocking get() method of this object tells us whether the send succeeded, so success and failure can be handled differently. This method makes sure we know whether each message arrived, but because the program waits for every response, messages are sent slowly; that is, throughput is reduced.

- Asynchronous Send: Call the send() method with a callback function, which is executed when the broker's response is received. This method lets us know whether each message arrived without giving up sending speed.

Synchronous Send
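The three styles can be sketched without a running broker. In the example below, fakeSend is a hypothetical stand-in for producer.send(record): it completes on another thread with a made-up offset. With the real KafkaProducer, the callback is passed as a second argument to send() instead of being attached to the returned future, so treat this only as an illustration of the control flow.

```java
import java.util.concurrent.CompletableFuture;
import java.util.concurrent.atomic.AtomicLong;

public class SendModesSketch {

    private static final AtomicLong NEXT_OFFSET = new AtomicLong();

    // Hypothetical stand-in for producer.send(record): completes on another
    // thread with a made-up offset instead of talking to a broker.
    public static CompletableFuture<Long> fakeSend(String message) {
        return CompletableFuture.supplyAsync(NEXT_OFFSET::getAndIncrement);
    }

    public static void main(String[] args) throws Exception {
        // 1. Fire-and-forget: the returned future is ignored.
        fakeSend("fire-and-forget");

        // 2. Synchronous send: get() blocks until the result (or an exception) is available.
        long offset = fakeSend("sync").get();
        System.out.println("sync send acknowledged at offset " + offset);

        // 3. Asynchronous send: register a callback and carry on without blocking.
        CompletableFuture<Long> pending = fakeSend("async").whenComplete((ackedOffset, error) -> {
            if (error != null) {
                System.err.println("async send failed: " + error);
            } else {
                System.out.println("async send acknowledged at offset " + ackedOffset);
            }
        });

        // Wait here only so the demo does not exit before the callback has run.
        pending.join();
    }
}
```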

Create a java class named MySecondProducer and write code in the main method of the MySecondProducer class:

    package com.bupt.kafka.producer;

    import java.util.Properties;
    import java.util.concurrent.Future;

    import org.apache.kafka.clients.producer.KafkaProducer;
    import org.apache.kafka.clients.producer.ProducerRecord;
    import org.apache.kafka.clients.producer.RecordMetadata;

    /**
     * @author melooo
     * @date 2021/10/13 9:07 PM
     */
    public class MySecondProducer {

        public static void main(String[] args) {
            Properties kafkaProps = new Properties();
            kafkaProps.put("bootstrap.servers", "localhost:9092");
            kafkaProps.put("key.serializer", "org.apache.kafka.common.serialization.StringSerializer");
            kafkaProps.put("value.serializer", "org.apache.kafka.common.serialization.StringSerializer");
            KafkaProducer<String, String> producer = new KafkaProducer<String, String>(kafkaProps);
            ProducerRecord<String, String> record = new ProducerRecord<>("mySecondTopic", "messageKey", "hello kafka");
            try {
                Future<RecordMetadata> future = producer.send(record);
                // The send method of the producer returns a Future object; calling its get method makes the send synchronous.
                // get blocks until a response arrives from the Kafka cluster. There are two possible outcomes:
                // 1. The response carries an error: get throws an exception, which we can catch and handle.
                // 2. The response carries no error: get returns a RecordMetadata object with the offset, partition,
                //    and other information about the message that was just written to the topic.
                RecordMetadata recordMetadata = future.get();
                long offset = recordMetadata.offset();
                int partition = recordMetadata.partition();
                System.out.println("the message offset: " + offset + ", partition: " + partition);
            } catch (Exception e) {
                e.printStackTrace();
            } finally {
                // Release the producer's resources
                producer.close();
            }
        }
    }

Run the MySecondProducer class, then look at the terminal where kafka-console-consumer is running; the sent message is printed there. From the result of get() we know whether the message was sent successfully. If it was, we can go on to send the next message. If it failed, we can send it again or store it.
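The "send it again or store it" idea can be sketched as a small helper. Everything here is hypothetical: sendSync, failedMessages and the send function (a stand-in for a call such as producer.send(record)) are names invented for this example, and the in-memory list merely represents whatever storage a real program would use. The producer also retries internally, so an application-level loop like this is only one possible approach.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.concurrent.CompletableFuture;
import java.util.concurrent.ExecutionException;
import java.util.concurrent.Future;
import java.util.function.Function;

public class ResendOrStoreSketch {

    // Messages that could not be delivered; a real program might write them to a file or database.
    public static final List<String> failedMessages = new ArrayList<>();

    // Tries to send synchronously up to maxAttempts times; stores the message if every attempt fails.
    // 'send' is a stand-in for a function that calls producer.send(...) and returns its Future.
    public static boolean sendSync(Function<String, Future<Long>> send, String message, int maxAttempts) {
        for (int attempt = 1; attempt <= maxAttempts; attempt++) {
            try {
                send.apply(message).get(); // blocks until the send succeeds or fails
                return true;
            } catch (ExecutionException e) {
                System.err.println("attempt " + attempt + " failed: " + e.getCause());
            } catch (InterruptedException e) {
                Thread.currentThread().interrupt(); // preserve the interrupt and stop retrying
                break;
            }
        }
        failedMessages.add(message);
        return false;
    }

    public static void main(String[] args) {
        // A sender that always succeeds with offset 42.
        boolean ok = sendSync(m -> CompletableFuture.completedFuture(42L), "hello kafka", 3);

        // A sender that always fails.
        CompletableFuture<Long> broken = new CompletableFuture<>();
        broken.completeExceptionally(new RuntimeException("broker unavailable"));
        boolean lost = sendSync(m -> broken, "hello again", 2);

        System.out.println("first sent: " + ok + ", second sent: " + lost + ", stored: " + failedMessages);
    }
}
```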

Asynchronous Send

    package com.bupt.kafka.producer;

    import java.util.Properties;

    import org.apache.kafka.clients.producer.KafkaProducer;
    import org.apache.kafka.clients.producer.ProducerRecord;

    /**
     * @author melooo
     * @date 2021/10/13 9:16 PM
     */
    public class MyThirdProducer {

        public static void main(String[] args) {
            Properties kafkaProps = new Properties();
            kafkaProps.put("bootstrap.servers", "localhost:9092");
            // A message sent to the Kafka cluster carries a key in addition to its value; the key is used to spread messages across partitions.
            // The message key is a String, so use the String serializer
            kafkaProps.put("key.serializer", "org.apache.kafka.common.serialization.StringSerializer");
            // The message value is a String, so use the String serializer
            kafkaProps.put("value.serializer", "org.apache.kafka.common.serialization.StringSerializer");
            // Create a KafkaProducer object from the Properties object created above
            KafkaProducer<String, String> producer = new KafkaProducer<String, String>(kafkaProps);
            /*
             * Create the message object with the ProducerRecord<String, String>(String topic, String key, String value) constructor.
             * The constructor accepts three parameters:
             * topic--tells the KafkaProducer which topic the message is sent to;
             * key--the key of the message; its type must match the key.serializer set earlier
             * value--the value of the message, i.e. the message content; its type must match the value.serializer set earlier
             */
            ProducerRecord<String, String> record = new ProducerRecord<>("mySecondTopic", "messageKey", "hello kafka3");
            try {
                // Pass in an object that implements the Callback interface. send() then does not block,
                // and the onCompletion method of the callback is invoked once the send has completed.
                producer.send(record, new DemoProducerCallback());
            } catch (Exception e) {
                e.printStackTrace();
            } finally {
                // close() waits for pending sends, so the callback runs before the program exits
                producer.close();
            }
        }
    }

The code above uses a DemoProducerCallback object. We need to create this class ourselves and have it implement the org.apache.kafka.clients.producer.Callback interface. Write the following code in the interface method onCompletion:

    package com.bupt.kafka.producer;

    import org.apache.kafka.clients.producer.Callback;
    import org.apache.kafka.clients.producer.RecordMetadata;

    /**
     * @author melooo
     * @date 2021/10/13 9:16 PM
     */
    public class DemoProducerCallback implements Callback {

        // Called when the send completes. If Kafka responded with an error, the exception parameter is not null;
        // otherwise it is null. In production, the two cases can be handled according to business needs.
        @Override
        public void onCompletion(RecordMetadata recordMetadata, Exception e) {
            if (e != null) {
                // In production, do appropriate business handling here. We simply print the exception.
                e.printStackTrace();
            } else {
                // No error: print the topic, offset, and partition of the message
                long offset = recordMetadata.offset();
                int partition = recordMetadata.partition();
                String topic = recordMetadata.topic();
                System.out.println("the message topic: " + topic + ",offset: " + offset + ",partition: " + partition);
            }
        }
    }

Send messages using multithreading

    package com.bupt.kafka.producer;

    import java.util.Properties;

    import org.apache.kafka.clients.producer.KafkaProducer;
    import org.apache.kafka.clients.producer.ProducerRecord;

    /**
     * @author melooo
     * @date 2021/10/13 9:29 PM
     */
    public class MultiThreadProducer extends Thread {

        // The KafkaProducer used by this thread; declared final because it is used in a multithreaded environment
        private final KafkaProducer<String, String> producer;

        // The topic name; also declared final because it is used in a multithreaded environment
        private final String topic;

        // Number of messages each thread sends
        private final int messageNumToSend = 10;

        /**
         * The constructor creates the KafkaProducer object for this thread.
         *
         * @param topicName Topic name
         */
        public MultiThreadProducer(String topicName) {
            Properties kafkaProps = new Properties();
            kafkaProps.put("bootstrap.servers", "localhost:9092");
            kafkaProps.put("key.serializer", "org.apache.kafka.common.serialization.StringSerializer");
            kafkaProps.put("value.serializer", "org.apache.kafka.common.serialization.StringSerializer");
            producer = new KafkaProducer<String, String>(kafkaProps);
            topic = topicName;
        }

        /**
         * Body of the producer thread: sends the messages in a loop.
         */
        @Override
        public void run() {
            // Counts the messages sent so far; also used as the message key
            int messageNo = 0;
            // Use < rather than <= so that exactly messageNumToSend messages are sent
            while (messageNo < messageNumToSend) {
                String messageContent = "Message_" + messageNo;
                ProducerRecord<String, String> record = new ProducerRecord<String, String>(topic, messageNo + "", messageContent);
                producer.send(record, new DemoProducerCallback());
                messageNo++;
            }
            // Flush buffered messages to the Kafka cluster, then release the producer's resources
            producer.flush();
            producer.close();
        }

        public static void main(String[] args) {
            for (int i = 0; i < 3; i++) {
                new MultiThreadProducer("mySecondTopic").start();
            }
        }
    }

Run the MultiThreadProducer class, then look at the terminal where kafka-console-consumer is running. The sent messages are printed there. The results are similar to the following:

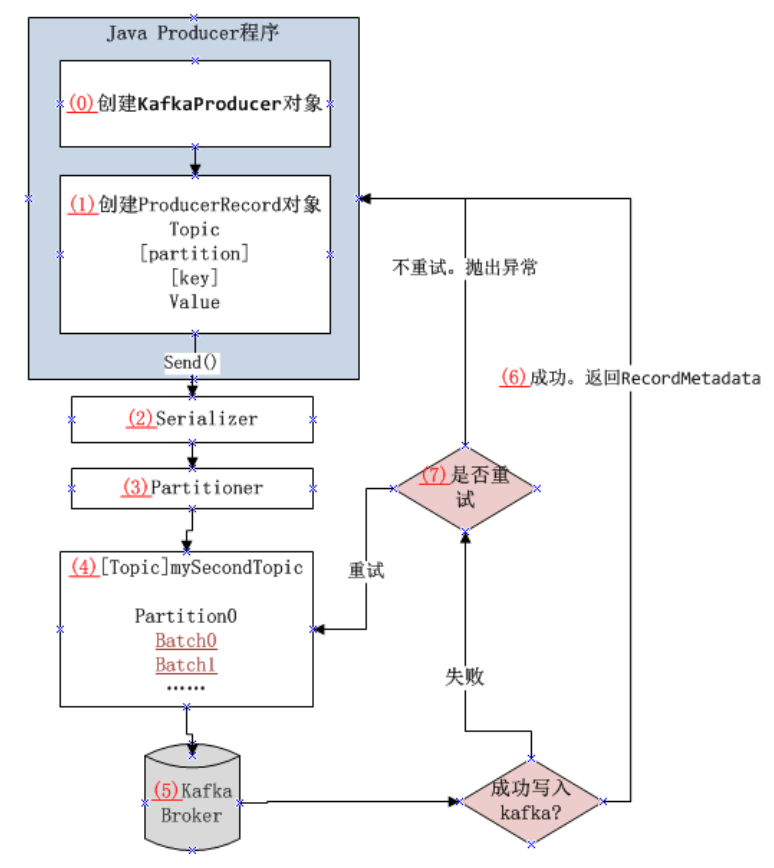
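The program above creates one KafkaProducer per thread. The KafkaProducer Javadoc states that the producer is thread safe and that sharing a single instance across threads is generally faster, so a common variation is to let all threads use one producer. The sketch below shows only that sharing pattern, without a broker: the sender parameter is a hypothetical stand-in for a lambda such as (key, value) -> producer.send(new ProducerRecord<>(topic, key, value)), and the class and method names are invented for this example.

```java
import java.util.concurrent.ConcurrentLinkedQueue;
import java.util.concurrent.ExecutorService;
import java.util.concurrent.Executors;
import java.util.concurrent.TimeUnit;
import java.util.function.BiConsumer;

public class SharedSenderSketch {

    // Runs 'threads' workers that each send 'perThread' messages through the same sender.
    // The sender must be thread safe, as a shared KafkaProducer would be.
    public static void sendFromThreads(BiConsumer<String, String> sender, int threads, int perThread) {
        ExecutorService pool = Executors.newFixedThreadPool(threads);
        for (int t = 0; t < threads; t++) {
            final int threadNo = t;
            pool.submit(() -> {
                for (int i = 0; i < perThread; i++) {
                    sender.accept(String.valueOf(i), "Message_" + threadNo + "_" + i);
                }
            });
        }
        // Stop accepting new tasks and wait for the workers to finish.
        pool.shutdown();
        try {
            pool.awaitTermination(10, TimeUnit.SECONDS);
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
        }
    }

    public static void main(String[] args) {
        // A thread-safe queue plays the role of the shared producer in this demo.
        ConcurrentLinkedQueue<String> sent = new ConcurrentLinkedQueue<>();
        sendFromThreads((key, value) -> sent.add(key + "=" + value), 3, 10);
        System.out.println("messages sent: " + sent.size()); // prints "messages sent: 30"
    }
}
```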

Overall flow of sending messages

We use a flowchart to represent the basic steps for sending messages to the Kafka cluster:

Each step in the diagram is numbered, from (0) to (7). The corresponding explanations are as follows:

- (0): Create a KafkaProducer object. Its constructor takes a Properties object, in which we configure three parameters: bootstrap.servers, key.serializer, and value.serializer.

- (1): Create a ProducerRecord object, passing in the topic, message key, and message value, then send it with the send method of the KafkaProducer object created in step (0). These two steps are the work done by the Producer program we developed. What happens after the message is sent?

- (2): The KafkaProducer object first serializes the key and value of the message so that they can be transmitted over the network. The serializer classes used are the ones named by the key.serializer and value.serializer parameters we configured.

- (3): The message is then passed to the partitioner, which decides which partition of the topic the message goes to. If we specify a partition when creating the ProducerRecord object, the partitioner simply returns that partition. Otherwise the partitioner selects one, usually based on the message key. Once the partition is selected, the producer knows which partition the message will be sent to.

- (4): The producer then adds the message to a batch of messages bound for the same topic and partition; subsequent messages with the same topic and partition are added to the same batch. A separate thread sends these batches to the corresponding Kafka broker.

- (5): When the broker receives the messages, it sends back a response.

- (6): If the message was written to Kafka successfully, the response is returned to the Java Producer program as a RecordMetadata object containing the topic, partition, offset, and so on. If the write failed, an error is returned.

- (7): When the producer receives an error response, it tries to resend the message. If sending still fails after the configured number of retries, an error is returned to the Java Producer program.

That's how the Java Producer program sends messages.
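Steps (2) to (4) can be sketched in plain Java. This is only a simplified model: the class and method names are invented, the hash used in choosePartition is java.util.Arrays.hashCode rather than the hash function Kafka's default partitioner uses, and the batches here are simple in-memory lists.

```java
import java.nio.charset.StandardCharsets;
import java.util.ArrayList;
import java.util.Arrays;
import java.util.List;
import java.util.Map;
import java.util.TreeMap;

public class SendPipelineSketch {

    // Step (2): serialize a String into bytes (UTF-8).
    public static byte[] serialize(String s) {
        return s.getBytes(StandardCharsets.UTF_8);
    }

    // Step (3): choose a partition from the serialized key.
    // Simplified hash for illustration; not the function Kafka actually uses.
    public static int choosePartition(byte[] keyBytes, int numPartitions) {
        return (Arrays.hashCode(keyBytes) & 0x7fffffff) % numPartitions;
    }

    // Step (4): group serialized values into one batch per partition.
    // Each element of keyValuePairs is a two-element array {key, value}.
    public static Map<Integer, List<byte[]>> batchByPartition(List<String[]> keyValuePairs, int numPartitions) {
        Map<Integer, List<byte[]>> batches = new TreeMap<>();
        for (String[] kv : keyValuePairs) {
            byte[] keyBytes = serialize(kv[0]);
            byte[] valueBytes = serialize(kv[1]);
            int partition = choosePartition(keyBytes, numPartitions);
            batches.computeIfAbsent(partition, p -> new ArrayList<>()).add(valueBytes);
        }
        return batches;
    }

    public static void main(String[] args) {
        List<String[]> records = new ArrayList<>();
        for (int i = 0; i < 10; i++) {
            records.add(new String[] {String.valueOf(i), "Message_" + i});
        }
        batchByPartition(records, 3).forEach((partition, batch) ->
                System.out.println("partition " + partition + ": " + batch.size() + " message(s)"));
    }
}
```

Because the partition depends only on the key bytes, messages with the same key always land in the same batch, which mirrors why keyed messages end up in the same partition.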

Configuration parameters in Producer

Create a KafkaProducer object that receives parameters of type Properties. We configure three parameters, bootstrap.servers, key.serializer, value.serializer, which are required. The meaning of the three parameters is detailed below:

- bootstrap.servers: A list of host:port pairs of Kafka brokers. The list does not need to contain every broker in the cluster; the producer discovers the other brokers from the one it connects to. It is recommended to list at least two brokers, so that the producer can still reach the cluster through another broker if one is unavailable, which makes the program more robust.

- key.serializer: Serializer for the key part of the message. The producer API uses generics so that any Java object can be sent as a key or value. In KafkaProducer<String, String> in the code, the first String is the key type and the second String is the value type. The producer must know how to convert these Java objects into byte arrays for network transmission. key.serializer should be set to the fully qualified name of a class that implements the org.apache.kafka.common.serialization.Serializer interface; the producer uses this class to serialize key objects into byte arrays. The Kafka client package includes serializers such as ByteArraySerializer, StringSerializer, and IntegerSerializer, so you do not need to write your own serializer for common types. Note that key.serializer must be set even if the messages you send contain only a value.

- value.serializer: Serializer for the value part of the message. It works the same way as key.serializer and may be set to the same class or a different one.
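To make "converting Java objects into byte arrays" concrete, here is a plain-Java sketch of what serializers for String and Integer roughly do: UTF-8 bytes for a string and four bytes, most significant first, for an integer. The class SerializerSketch and its methods are illustrative only and do not implement Kafka's Serializer interface.

```java
import java.nio.ByteBuffer;
import java.nio.charset.StandardCharsets;
import java.util.Arrays;

public class SerializerSketch {

    // Roughly what a String serializer does: encode the text as UTF-8 bytes.
    public static byte[] serializeString(String value) {
        return value == null ? null : value.getBytes(StandardCharsets.UTF_8);
    }

    // Roughly what an Integer serializer does: 4 bytes, most significant byte first.
    public static byte[] serializeInteger(Integer value) {
        return value == null ? null : ByteBuffer.allocate(4).putInt(value).array();
    }

    public static void main(String[] args) {
        System.out.println(Arrays.toString(serializeString("hello kafka")));
        System.out.println(Arrays.toString(serializeInteger(258))); // prints [0, 0, 1, 2]
    }
}
```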

For more information on producer configuration parameters, see the official documentation: http://kafka.apache.org/documentation/