Kafka communicates over TCP connections. Unlike many middleware projects, it does not use Netty as its TCP server. Instead, it implements its own network layer on top of Java NIO.

Several Important Classes

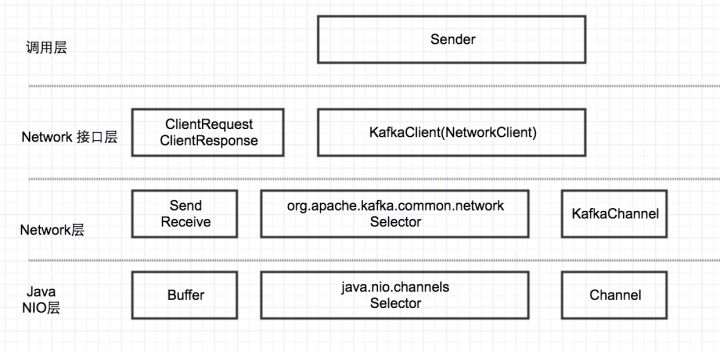

The figure above shows the network architecture of the Kafka client.

This article focuses on the network layer.

The network layer has two important classes: Selector and KafkaChannel.

They play roles similar to java.nio.channels.Selector and java.nio.channels.Channel in Java NIO.

The key fields of Selector are as follows:

    // The JDK NIO selector
    java.nio.channels.Selector nioSelector;
    // All connections managed by this Selector, keyed by connection id
    Map<String, KafkaChannel> channels;
    // Sends that have completed
    List<Send> completedSends;
    // Receives that have been fully read
    List<NetworkReceive> completedReceives;
    // Receives that have been read but are not yet visible to the upper layer
    Map<KafkaChannel, Deque<NetworkReceive>> stagedReceives;
    // On the client side: keys of connections for which connect returned true immediately
    Set<SelectionKey> immediatelyConnectedKeys;
    // Ids of connections that have been established
    List<String> connected;
    // Maximum size of a single receive
    int maxReceiveSize;

From the network layer's point of view, Kafka has a client side (producers, consumers, and brokers acting as clients) and a server side (brokers). This article analyzes how the client side establishes connections and sends and receives data. The server side also relies on Selector and KafkaChannel for network transmission, so the two sides differ little at the network layer.

Establish a connection

When a Kafka client starts up, it calls Selector#connect to establish a connection (unless stated otherwise, Selector below refers to org.apache.kafka.common.network.Selector).

    public void connect(String id, InetSocketAddress address, int sendBufferSize, int receiveBufferSize) throws IOException {
        if (this.channels.containsKey(id))
            throw new IllegalStateException("There is already a connection for id " + id);

        // Create a SocketChannel
        SocketChannel socketChannel = SocketChannel.open();
        // Switch to non-blocking mode
        socketChannel.configureBlocking(false);
        // Get the socket and set its options
        Socket socket = socketChannel.socket();
        socket.setKeepAlive(true);
        if (sendBufferSize != Selectable.USE_DEFAULT_BUFFER_SIZE)
            socket.setSendBufferSize(sendBufferSize);
        if (receiveBufferSize != Selectable.USE_DEFAULT_BUFFER_SIZE)
            socket.setReceiveBufferSize(receiveBufferSize);
        socket.setTcpNoDelay(true);
        boolean connected;
        try {
            // SocketChannel#connect initiates a TCP connection to the remote end.
            // Because the channel is non-blocking, the connection (the three-way handshake)
            // may not be established yet when the method returns. It returns true if the
            // connection is already established and false otherwise. It may return true
            // when, for example, the server and client are on the same machine.
            connected = socketChannel.connect(address);
        } catch (UnresolvedAddressException e) {
            socketChannel.close();
            throw new IOException("Can't resolve address: " + address, e);
        } catch (IOException e) {
            socketChannel.close();
            throw e;
        }
        // Register interest in the CONNECT event
        SelectionKey key = socketChannel.register(nioSelector, SelectionKey.OP_CONNECT);
        KafkaChannel channel;
        try {
            // Build a KafkaChannel
            channel = channelBuilder.buildChannel(id, key, maxReceiveSize);
        } catch (Exception e) {
            ...
        }
        // Attach the KafkaChannel to the SelectionKey
        key.attach(channel);
        // Store it in the map; the id identifies the remote node
        this.channels.put(id, channel);

        // If connected is true, no CONNECT event will fire for this connection,
        // so it has to be handled separately
        if (connected) {
            // OP_CONNECT won't trigger for immediately connected channels
            log.debug("Immediately connected to node {}", channel.id());
            // Record the key in a separate collection
            immediatelyConnectedKeys.add(key);
            // Stop listening for the CONNECT event
            key.interestOps(0);
        }
    }

The flow here is the same as standard NIO usage. The one case worth explaining is when SocketChannel#connect returns true, which is described in that method's Javadoc:

    * <p> If this channel is in non-blocking mode then an invocation of this
    * method initiates a non-blocking connection operation.  If the connection
    * is established immediately, as can happen with a local connection, then
    * this method returns <tt>true</tt>.  Otherwise this method returns
    * <tt>false</tt> and the connection operation must later be completed by
    * invoking the {@link #finishConnect finishConnect} method.

In other words, in non-blocking mode a local connection may be established immediately, in which case connect returns true and no CONNECT event is triggered afterwards. Kafka therefore records these connections in a separate set, immediatelyConnectedKeys, and handles them specially in the following steps.
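The two outcomes of a non-blocking connect can be tried out with plain Java NIO. The following is a minimal sketch, not Kafka code: the class name ImmediateConnectSketch and the method connectToLocalListener are made up for illustration, and the example assumes a loopback listener on an ephemeral port is available. Whether a given run takes the immediate or the deferred path depends on the platform.

```java
import java.io.IOException;
import java.io.UncheckedIOException;
import java.net.InetAddress;
import java.net.InetSocketAddress;
import java.nio.channels.SelectionKey;
import java.nio.channels.Selector;
import java.nio.channels.ServerSocketChannel;
import java.nio.channels.SocketChannel;

// Hypothetical demo class; not part of Kafka.
class ImmediateConnectSketch {

    /** Connects to a local listener and reports which path completed the connection. */
    static String connectToLocalListener() {
        try (ServerSocketChannel server = ServerSocketChannel.open();
             Selector selector = Selector.open();
             SocketChannel client = SocketChannel.open()) {
            // Listen on an ephemeral loopback port so the example is self-contained.
            server.bind(new InetSocketAddress(InetAddress.getLoopbackAddress(), 0));
            client.configureBlocking(false);

            // Non-blocking connect: true means the connection is already established.
            boolean connected = client.connect(server.getLocalAddress());
            SelectionKey key = client.register(selector, SelectionKey.OP_CONNECT);

            if (connected) {
                // No CONNECT event will arrive for this key, so stop waiting for one.
                key.interestOps(0);
                return "immediate";
            }
            // Usual path: wait for the CONNECT event, then complete the connection.
            while (!client.finishConnect()) {
                selector.select(1000);
                selector.selectedKeys().clear();
            }
            return "deferred";
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        }
    }
}
```

Calling ImmediateConnectSketch.connectToLocalListener() returns either "immediate" or "deferred". Code that only waits for the CONNECT event would never finish the first case, which is why a separate collection such as immediatelyConnectedKeys is useful.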

The poll method is then invoked to monitor network events:

    public void poll(long timeout) throws IOException {
        ...
        // select is a thin wrapper around java.nio.channels.Selector#select
        int readyKeys = select(timeout);
        ...
        // If there are ready events or immediatelyConnectedKeys is not empty
        if (readyKeys > 0 || !immediatelyConnectedKeys.isEmpty()) {
            // Handle ready events; the second argument is false
            pollSelectionKeys(this.nioSelector.selectedKeys(), false, endSelect);
            // Handle immediatelyConnectedKeys; the second argument is true
            pollSelectionKeys(immediatelyConnectedKeys, true, endSelect);
        }
        addToCompletedReceives();
        ...
    }

    private void pollSelectionKeys(Iterable<SelectionKey> selectionKeys, boolean isImmediatelyConnected, long currentTimeNanos) {
        Iterator<SelectionKey> iterator = selectionKeys.iterator();
        // Iterate over the keys
        while (iterator.hasNext()) {
            SelectionKey key = iterator.next();
            // Remove the current key, otherwise the next poll would process it again
            iterator.remove();
            // Get the KafkaChannel created in connect
            KafkaChannel channel = channel(key);
            ...
            try {
                // The key comes from immediatelyConnectedKeys, or this is a CONNECT event
                if (isImmediatelyConnected || key.isConnectable()) {
                    // finishConnect also registers interest in the READ event
                    if (channel.finishConnect()) {
                        this.connected.add(channel.id());
                        this.sensors.connectionCreated.record();
                        ...
                    } else
                        continue;
                }
                // SSL connections need some additional steps
                if (channel.isConnected() && !channel.ready())
                    channel.prepare();
                // READ event
                if (channel.ready() && key.isReadable() && !hasStagedReceive(channel)) {
                    NetworkReceive networkReceive;
                    while ((networkReceive = channel.read()) != null)
                        addToStagedReceives(channel, networkReceive);
                }
                // WRITE event
                if (channel.ready() && key.isWritable()) {
                    Send send = channel.write();
                    if (send != null) {
                        this.completedSends.add(send);
                        this.sensors.recordBytesSent(channel.id(), send.size());
                    }
                }
                // If the key is no longer valid, close the channel
                if (!key.isValid())
                    close(channel, true);
            } catch (Exception e) {
                String desc = channel.socketDescription();
                if (e instanceof IOException)
                    log.debug("Connection with {} disconnected", desc, e);
                else
                    log.warn("Unexpected error from {}; closing connection", desc, e);
                close(channel, true);
            } finally {
                maybeRecordTimePerConnection(channel, channelStartTimeNanos);
            }
        }
    }

Because a connection in immediatelyConnectedKeys never triggers a CONNECT event, poll calls finishConnect on the channels of those keys separately. In plaintext transport mode, this ends up in PlaintextTransportLayer#finishConnect, which is implemented as follows:

    public boolean finishConnect() throws IOException {
        // true means the connection is established
        boolean connected = socketChannel.finishConnect();
        if (connected)
            // Stop listening for the CONNECT event and start listening for the READ event
            key.interestOps(key.interestOps() & ~SelectionKey.OP_CONNECT | SelectionKey.OP_READ);
        return connected;
    }
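The interest-set update in finishConnect is plain bit arithmetic on the SelectionKey constants. The sketch below isolates that expression; InterestOpsSketch, afterConnect and describe are hypothetical names used only for illustration.

```java
import java.nio.channels.SelectionKey;

// Hypothetical helper for illustration; not part of Kafka.
class InterestOpsSketch {

    /** Drops OP_CONNECT from an interest set and adds OP_READ, keeping every other bit. */
    static int afterConnect(int interestOps) {
        // '&' binds tighter than '|', so this is (ops & ~OP_CONNECT) | OP_READ.
        return interestOps & ~SelectionKey.OP_CONNECT | SelectionKey.OP_READ;
    }

    /** Renders an interest set as text, for example "READ|WRITE". */
    static String describe(int ops) {
        StringBuilder sb = new StringBuilder();
        if ((ops & SelectionKey.OP_READ) != 0) sb.append("READ|");
        if ((ops & SelectionKey.OP_WRITE) != 0) sb.append("WRITE|");
        if ((ops & SelectionKey.OP_CONNECT) != 0) sb.append("CONNECT|");
        if ((ops & SelectionKey.OP_ACCEPT) != 0) sb.append("ACCEPT|");
        return sb.length() == 0 ? "NONE" : sb.substring(0, sb.length() - 1);
    }
}
```

For example, describe(afterConnect(SelectionKey.OP_CONNECT | SelectionKey.OP_WRITE)) yields "READ|WRITE": the CONNECT bit is cleared, the READ bit is set, and the existing WRITE bit is left alone.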

See more details about immediatelyConnectedKeys Here.

Send data

Kafka sends data in two steps:

1. Calling Selector#send saves the data to be sent in the corresponding KafkaChannel. This method does not perform any real network IO.

    // Selector#send
    public void send(Send send) {
        String connectionId = send.destination();
        // If the connection is closing, add it to the failedSends collection
        if (closingChannels.containsKey(connectionId))
            this.failedSends.add(connectionId);
        else {
            KafkaChannel channel = channelOrFail(connectionId, false);
            try {
                channel.setSend(send);
            } catch (CancelledKeyException e) {
                this.failedSends.add(connectionId);
                close(channel, false);
            }
        }
    }

    // KafkaChannel#setSend
    public void setSend(Send send) {
        // Fail if a previous send has not been fully written yet
        if (this.send != null)
            throw new IllegalStateException("Attempt to begin a send operation with prior send operation still in progress.");
        // Keep the send for later
        this.send = send;
        // Register interest in the WRITE event
        this.transportLayer.addInterestOps(SelectionKey.OP_WRITE);
    }

2. Calling Selector#poll. In the first step the channel registered interest in the WRITE event, so when the channel becomes writable, pollSelectionKeys is called to actually send the data.

    private void pollSelectionKeys(Iterable<SelectionKey> selectionKeys, boolean isImmediatelyConnected, long currentTimeNanos) {
        Iterator<SelectionKey> iterator = selectionKeys.iterator();
        // Iterate over the keys
        while (iterator.hasNext()) {
            SelectionKey key = iterator.next();
            // Remove the current key, otherwise the next poll would process it again
            iterator.remove();
            // Get the KafkaChannel created in connect
            KafkaChannel channel = channel(key);
            ...
            try {
                ...
                // WRITE event
                if (channel.ready() && key.isWritable()) {
                    // The actual network write
                    Send send = channel.write();
                    // A Send may be split across several writes;
                    // a non-null result means one Send has been fully written
                    if (send != null) {
                        // completedSends holds the sends that have completed
                        this.completedSends.add(send);
                        this.sensors.recordBytesSent(channel.id(), send.size());
                    }
                }
                ...
            } catch (Exception e) {
                ...
            } finally {
                maybeRecordTimePerConnection(channel, channelStartTimeNanos);
            }
        }
    }

When writable, the KafkaChannel#write method is called, in which real network IO is performed:

    public Send write() throws IOException {
        Send result = null;
        if (send != null && send(send)) {
            result = send;
            send = null;
        }
        return result;
    }

    private boolean send(Send send) throws IOException {
        // Ends up calling SocketChannel#write for the actual write
        send.writeTo(transportLayer);
        if (send.completed())
            // Fully written: stop listening for the WRITE event
            transportLayer.removeInterestOps(SelectionKey.OP_WRITE);
        return send.completed();
    }
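The reason write may need to be called several times for one Send is that a non-blocking channel accepts only as many bytes as it has room for. The following sketch models that with a hypothetical ThrottledChannel that takes a fixed number of bytes per call. PartialWriteSketch and its methods are illustrative names that only mimic the shape of KafkaChannel#setSend and KafkaChannel#write; they are not Kafka's implementation.

```java
import java.io.ByteArrayOutputStream;
import java.nio.ByteBuffer;
import java.nio.channels.WritableByteChannel;

// Hypothetical classes for illustration; they only mimic the shape of KafkaChannel.
class PartialWriteSketch {

    /** Accepts at most maxPerCall bytes per write, like a socket with a nearly full send buffer. */
    static class ThrottledChannel implements WritableByteChannel {
        final ByteArrayOutputStream sink = new ByteArrayOutputStream();
        final int maxPerCall;

        ThrottledChannel(int maxPerCall) { this.maxPerCall = maxPerCall; }

        @Override public int write(ByteBuffer src) {
            int n = Math.min(maxPerCall, src.remaining());
            for (int i = 0; i < n; i++) sink.write(src.get());
            return n;
        }
        @Override public boolean isOpen() { return true; }
        @Override public void close() { }
    }

    private ByteBuffer pending;   // the single in-flight payload, or null

    /** Stores the payload; performs no IO. Only one payload may be in flight. */
    void setSend(ByteBuffer payload) {
        if (pending != null)
            throw new IllegalStateException("prior send still in progress");
        pending = payload;
    }

    /** Writes what the channel accepts; returns true once the payload is fully written. */
    boolean write(ThrottledChannel channel) {
        if (pending == null) return false;
        channel.write(pending);
        if (pending.hasRemaining()) return false;   // keep waiting for the next writable event
        pending = null;                             // done; WRITE interest would be removed here
        return true;
    }

    /** Counts how many write calls are needed to flush payloadSize bytes. */
    static int writesNeeded(int payloadSize, int maxPerCall) {
        PartialWriteSketch sketch = new PartialWriteSketch();
        ThrottledChannel channel = new ThrottledChannel(maxPerCall);
        sketch.setSend(ByteBuffer.wrap(new byte[payloadSize]));
        int calls = 1;
        while (!sketch.write(channel)) calls++;
        return calls;
    }
}
```

With a 10-byte payload and a channel that takes 3 bytes per call, writesNeeded(10, 3) is 4 (3 + 3 + 3 + 1 bytes). In the sketch each loop iteration stands in for one writable event delivered by the selector.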

Receive data

When the remote end sends data, the poll method is called to process what has been received.

    public void poll(long timeout) throws IOException {
        ...
        // select is a thin wrapper around java.nio.channels.Selector#select
        int readyKeys = select(timeout);
        ...
        // If there are ready events or immediatelyConnectedKeys is not empty
        if (readyKeys > 0 || !immediatelyConnectedKeys.isEmpty()) {
            // Handle ready events; the second argument is false
            pollSelectionKeys(this.nioSelector.selectedKeys(), false, endSelect);
            // Handle immediatelyConnectedKeys; the second argument is true
            pollSelectionKeys(immediatelyConnectedKeys, true, endSelect);
        }
        addToCompletedReceives();
        ...
    }

    private void pollSelectionKeys(Iterable<SelectionKey> selectionKeys, boolean isImmediatelyConnected, long currentTimeNanos) {
        Iterator<SelectionKey> iterator = selectionKeys.iterator();
        // Iterate over the keys
        while (iterator.hasNext()) {
            SelectionKey key = iterator.next();
            // Remove the current key, otherwise the next poll would process it again
            iterator.remove();
            // Get the KafkaChannel created in connect
            KafkaChannel channel = channel(key);
            ...
            try {
                ...
                // READ event
                if (channel.ready() && key.isReadable() && !hasStagedReceive(channel)) {
                    NetworkReceive networkReceive;
                    // read pulls data from the network, but one call may only get part of a
                    // request. It returns non-null only once a complete request has been read.
                    while ((networkReceive = channel.read()) != null)
                        // Store the request that was read in stagedReceives
                        addToStagedReceives(channel, networkReceive);
                }
                ...
            } catch (Exception e) {
                ...
            } finally {
                maybeRecordTimePerConnection(channel, channelStartTimeNanos);
            }
        }
    }

    private void addToStagedReceives(KafkaChannel channel, NetworkReceive receive) {
        if (!stagedReceives.containsKey(channel))
            stagedReceives.put(channel, new ArrayDeque<NetworkReceive>());

        Deque<NetworkReceive> deque = stagedReceives.get(channel);
        deque.add(receive);
    }
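The "non-null only when complete" behaviour of read can be illustrated with a size-delimited frame: a 4-byte length followed by that many payload bytes, accumulated across however many chunks the network delivers. The sketch below is a simplified model of that idea, not Kafka's NetworkReceive code; SizeDelimitedReceiveSketch, feed and roundTrip are hypothetical names, and size validation against a maximum is left out.

```java
import java.nio.ByteBuffer;
import java.nio.charset.StandardCharsets;

// Hypothetical class for illustration; it models the idea, not Kafka's NetworkReceive.
class SizeDelimitedReceiveSketch {
    private final ByteBuffer sizeBuf = ByteBuffer.allocate(4);
    private ByteBuffer payload;   // allocated once the 4-byte size is known

    /** Consumes bytes from chunk; returns the payload once complete, otherwise null. */
    byte[] feed(ByteBuffer chunk) {
        // Step 1: collect the 4-byte big-endian size header.
        while (payload == null && chunk.hasRemaining()) {
            sizeBuf.put(chunk.get());
            if (!sizeBuf.hasRemaining()) {
                sizeBuf.flip();
                payload = ByteBuffer.allocate(sizeBuf.getInt());
                sizeBuf.clear();
            }
        }
        if (payload == null) return null;
        // Step 2: copy as many payload bytes as this chunk provides.
        while (payload.hasRemaining() && chunk.hasRemaining()) payload.put(chunk.get());
        if (payload.hasRemaining()) return null;
        byte[] complete = payload.array();
        payload = null;   // ready for the next frame
        return complete;
    }

    /** Frames text, feeds it in pieces of pieceSize bytes, and returns the decoded payload. */
    static String roundTrip(String text, int pieceSize) {
        byte[] body = text.getBytes(StandardCharsets.UTF_8);
        ByteBuffer framed = ByteBuffer.allocate(4 + body.length);
        framed.putInt(body.length).put(body).flip();

        SizeDelimitedReceiveSketch receive = new SizeDelimitedReceiveSketch();
        byte[] result = null;
        while (framed.hasRemaining() && result == null) {
            byte[] piece = new byte[Math.min(pieceSize, framed.remaining())];
            framed.get(piece);
            result = receive.feed(ByteBuffer.wrap(piece));
        }
        return result == null ? null : new String(result, StandardCharsets.UTF_8);
    }
}
```

For instance, roundTrip("hello", 3) feeds the 9-byte frame in three pieces. The first two calls to feed return null, and only the third returns the complete payload.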

The stagedReceives collection is then processed in the addToCompletedReceives method.

    private void addToCompletedReceives() {
        if (!this.stagedReceives.isEmpty()) {
            Iterator<Map.Entry<KafkaChannel, Deque<NetworkReceive>>> iter = this.stagedReceives.entrySet().iterator();
            while (iter.hasNext()) {
                Map.Entry<KafkaChannel, Deque<NetworkReceive>> entry = iter.next();
                KafkaChannel channel = entry.getKey();
                // On the client side isMute always returns false here;
                // the server side relies on it to preserve message ordering
                if (!channel.isMute()) {
                    Deque<NetworkReceive> deque = entry.getValue();
                    addToCompletedReceives(channel, deque);
                    if (deque.isEmpty())
                        iter.remove();
                }
            }
        }
    }

    private void addToCompletedReceives(KafkaChannel channel, Deque<NetworkReceive> stagedDeque) {
        // Move the first NetworkReceive of each channel into completedReceives
        NetworkReceive networkReceive = stagedDeque.poll();
        this.completedReceives.add(networkReceive);
        this.sensors.recordBytesReceived(channel.id(), networkReceive.payload().limit());
    }

After data is read, it is first placed in the stagedReceives collection. Then, in the addToCompletedReceives method, one NetworkReceive (if any) is taken from stagedReceives for each channel and added to completedReceives.

There are two reasons for this:

- With SSL connections the data on the wire is encrypted, so the exact number of bytes to read cannot be determined in advance. Kafka reads as much as it can, which may be more than a single request. If no further data arrives on the channel afterwards, the selector will not select that channel again, and the extra data already read would never be processed.

- Kafka must ensure that requests on a channel are processed in the order in which they were sent. Therefore the upper layer sees at most one request per channel per poll, and the next request becomes visible only after the current one has been handled. On the server side, the channel is muted after each poll, meaning no more data is read from it. Once processing completes, the channel is unmuted and reading from the socket resumes. On the client side, this is controlled by InFlightRequests#canSendMore.

The comments on this logic in the code are as follows:

    /* In the "Plaintext" setting, we are using socketChannel to read & write to the network. But for the "SSL" setting,
     * we encrypt the data before we use socketChannel to write data to the network, and decrypt before we return the responses.
     * This requires additional buffers to be maintained as we are reading from network, since the data on the wire is encrypted
     * we won't be able to read exact no.of bytes as kafka protocol requires. We read as many bytes as we can, up to SSLEngine's
     * application buffer size. This means we might be reading additional bytes than the requested size.
     * If there is no further data to read from socketChannel selector won't invoke that channel and we've have additional bytes
     * in the buffer. To overcome this issue we added "stagedReceives" map which contains per-channel deque. When we are
     * reading a channel we read as many responses as we can and store them into "stagedReceives" and pop one response during
     * the poll to add the completedReceives. If there are any active channels in the "stagedReceives" we set "timeout" to 0
     * and pop response and add to the completedReceives.
     * Atmost one entry is added to "completedReceives" for a channel in each poll. This is necessary to guarantee that
     * requests from a channel are processed on the broker in the order they are sent. Since outstanding requests added
     * by SocketServer to the request queue may be processed by different request handler threads, requests on each
     * channel must be processed one-at-a-time to guarantee ordering.
     */
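The "at most one entry per channel per poll" rule, together with muting, can be modelled in a few lines. This is a simplified sketch under stated assumptions: channels are represented by plain string ids, receives by plain strings, and StagedReceivesSketch, stage and poll are hypothetical names rather than Kafka's API.

```java
import java.util.ArrayDeque;
import java.util.ArrayList;
import java.util.Deque;
import java.util.HashSet;
import java.util.Iterator;
import java.util.LinkedHashMap;
import java.util.List;
import java.util.Map;
import java.util.Set;

// Hypothetical model for illustration: channels are ids, receives are strings.
class StagedReceivesSketch {
    final Map<String, Deque<String>> staged = new LinkedHashMap<>();
    final Set<String> muted = new HashSet<>();

    /** Queues a fully read receive for a channel without exposing it yet. */
    void stage(String channelId, String receive) {
        staged.computeIfAbsent(channelId, id -> new ArrayDeque<>()).add(receive);
    }

    /** One poll: promotes at most one staged receive per unmuted channel. */
    List<String> poll() {
        List<String> completed = new ArrayList<>();
        Iterator<Map.Entry<String, Deque<String>>> it = staged.entrySet().iterator();
        while (it.hasNext()) {
            Map.Entry<String, Deque<String>> entry = it.next();
            if (muted.contains(entry.getKey())) continue;   // muted channels stay staged
            completed.add(entry.getValue().poll());
            if (entry.getValue().isEmpty()) it.remove();
        }
        return completed;
    }
}
```

If channel "a" has staged a1 and a2 and channel "b" has staged b1, the first poll yields [a1, b1] and a later poll yields [a2]. While "a" is in muted, a2 stays staged, which corresponds to how a muted channel on the server side holds back its next request until the current one has been handled.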

End

This article has analyzed the implementation of Kafka's network layer. Reading the Kafka source code is confusing without a clear picture of this layer: for example, how the request/response ordering guarantee works, or the fact that the send method does not perform the actual network IO.

Link: https://mp.weixin.qq.com/s/JzddfH-7yNudmkjT0IRL8Q