Click "taro source code" above and select“ Set as star"

Whether she's ahead or behind?

A wave that can wave is a good wave!

Update the article at 10:33 every day and lose 100 million hair every day

Source code boutique column

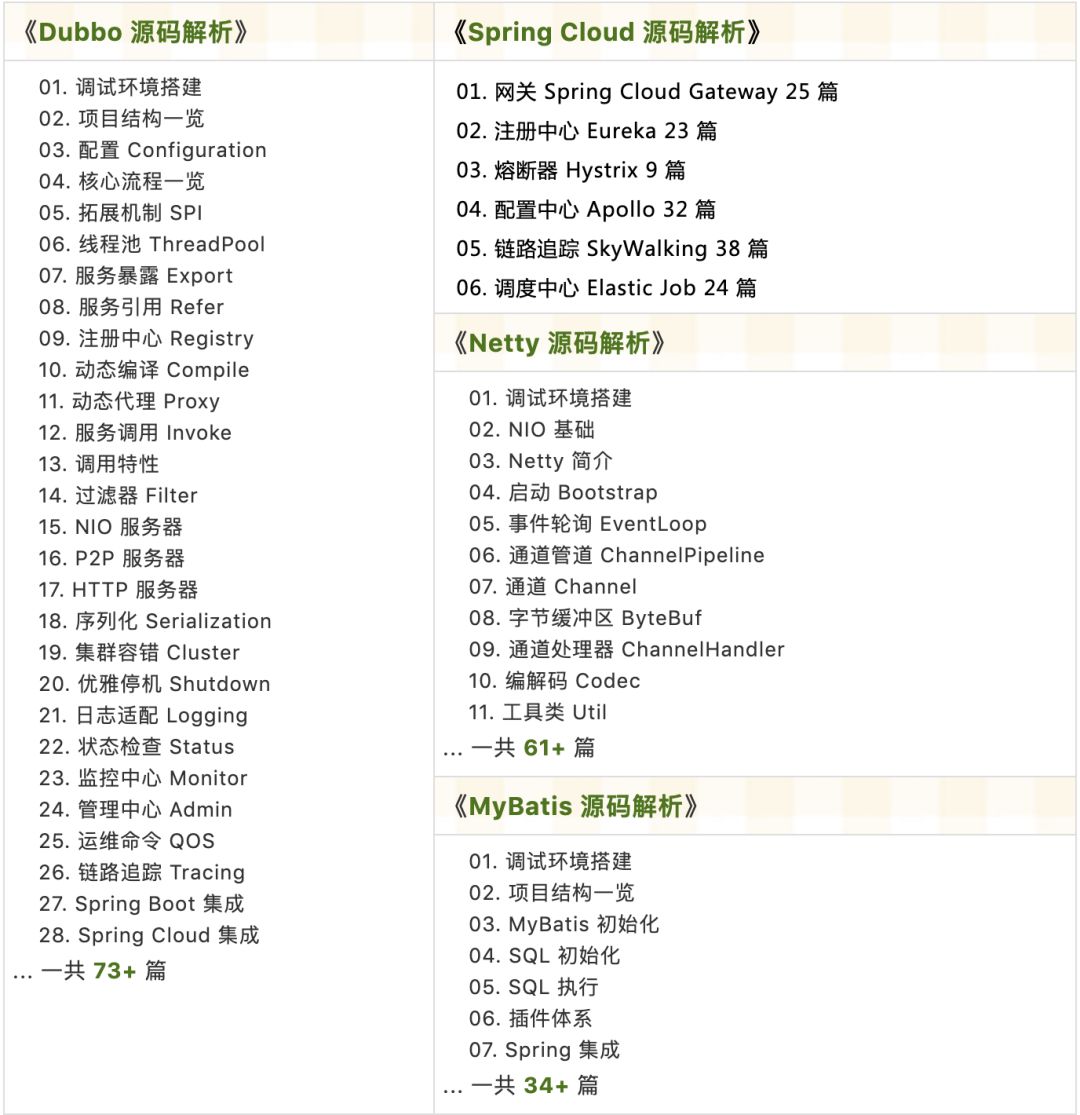

- Original | Java 2021 super God Road, very liver~

- Open source project with detailed Chinese Notes

- RPC framework Dubbo source code analysis

- Analysis of Netty source code of network application framework

- Analysis of message middleware RocketMQ source code

- Source code analysis of database middleware sharding JDBC and MyCAT

- Analysis of job scheduling middleware elastic job source code

- Source code analysis of distributed transaction middleware TCC transaction

- Eureka and Hystrix source code analysis

- Java Concurrent source code

Source: blog csdn. net/qq_ 38245668/

preface

This paper aims to solve the problem of sequential consumption when there is a certain data association between different topics in Kafka. If there is Topic insert and Topic update, they are the insertion and update of data respectively. When the insert and update operations are the same data, it should be ensured to insert first and then update.

1. Problem introduction

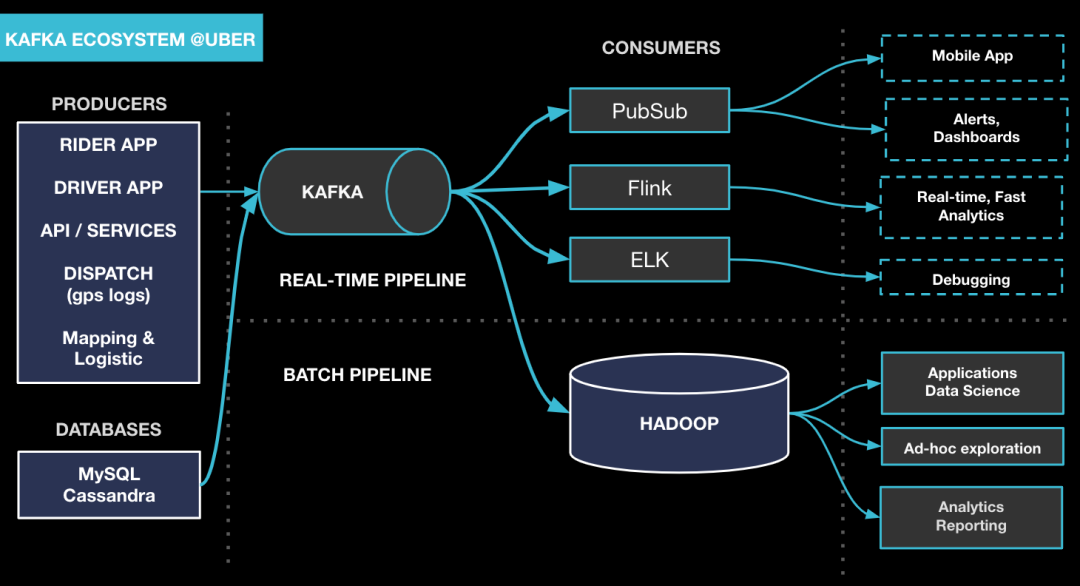

kafka's sequential consumption has always been a difficult problem to solve. kafka's consumption strategy is to ensure sequential consumption for messages with the same Topic and Partition, and the rest cannot be guaranteed. If a Topic has only one Partition, the consumption of consumers corresponding to this Topic must be orderly. In any case, different topics cannot guarantee that the consumption order of the consumer is consistent with the sending order of the producer.

If there is data association between different topics and there are requirements for consumption order, how to deal with it? This paper mainly solves this problem.

2. Solution ideas

For the existing topic insert and topic update, the unique ID of the data is ID. for the data with id=1, it is necessary to ensure that topic insert consumption is first and topic update consumption is last.

The consumption of two topics is processed by different threads. Therefore, in order to ensure that there is only one business logic processing messages with the same data ID at the same time, it is necessary to add a lock operation to the business. Locking with synchronized will affect the data consumption ability of unrelated inserts and updates, such as inserts with id=1 and updates with id=2. In the case of synchronized, it is not necessary to process them concurrently. What we need is that there is only one insert with id=1 and update with id=1 at the same time, Therefore, fine-grained locks are used to complete the locking operation.

Fine grained lock implementation: https://blog.csdn.net/qq_38245668/article/details/105891161

PS: if it is a distributed system, fine-grained locks need to use the corresponding implementation of distributed locks.

After locking the insert and update, the problem of consumption order is not solved, but only one business is processed at the same time. For the problem of abnormal consumption order, that is, update is consumed first and then insert is consumed.

Processing method: when consuming the update data, check whether the current data exists in the library (that is, whether to execute the insert). If not, store the current update data in the cache, and the key is the data id. check whether there is an update cache corresponding to the id during insert consumption. If so, it proves that the consumption order of the current data is abnormal, and the update operation needs to be executed, Then remove the cached data.

3. Implementation scheme

Message sending:

kafkaTemplate.send("TOPIC_INSERT", "1");

kafkaTemplate.send("TOPIC_UPDATE", "1");Listening code example:

KafkaListenerDemo.java

@Component

@Slf4j

public class KafkaListenerDemo {

//Data cache consumed

private Map<String, String> UPDATE_DATA_MAP = new ConcurrentHashMap<>();

//Data storage

private Map<String, String> DATA_MAP = new ConcurrentHashMap<>();

private WeakRefHashLock weakRefHashLock;

public KafkaListenerDemo(WeakRefHashLock weakRefHashLock) {

this.weakRefHashLock = weakRefHashLock;

}

@KafkaListener(topics = "TOPIC_INSERT")

public void insert(ConsumerRecord<String, String> record, Acknowledgment acknowledgment) throws InterruptedException{

//Simulation sequence exception, that is, consumption after insert, where thread sleep

Thread.sleep(1000);

String id = record.value();

log.info("Received insert : : {}", id);

Lock lock = weakRefHashLock.lock(id);

lock.lock();

try {

log.info("Start processing {} of insert", id);

//Simulate {insert} business processing

Thread.sleep(1000);

//Get the update data from the cache

if (UPDATE_DATA_MAP.containsKey(id)){

//Cache data exists, execute update

doUpdate(id);

}

log.info("handle {} of insert end", id);

}finally {

lock.unlock();

}

acknowledgment.acknowledge();

}

@KafkaListener(topics = "TOPIC_UPDATE")

public void update(ConsumerRecord<String, String> record, Acknowledgment acknowledgment) throws InterruptedException{

String id = record.value();

log.info("Received update : : {}", id);

Lock lock = weakRefHashLock.lock(id);

lock.lock();

try {

//The test is used without database verification

if (!DATA_MAP.containsKey(id)){

//The corresponding data is not found, which proves that the consumption order is abnormal. Add the current data to the cache

log.info("The consumption order is abnormal, and update data {} Add cache", id);

UPDATE_DATA_MAP.put(id, id);

}else {

doUpdate(id);

}

}finally {

lock.unlock();

}

acknowledgment.acknowledge();

}

void doUpdate(String id) throws InterruptedException{

//Simulation update

log.info("Start processing update: : {}", id);

Thread.sleep(1000);

log.info("handle update: : {} end", id);

}

}Log (scenarios with abnormal consumption order have been simulated in the code):

Received update : : 1 The consumption order is abnormal, and update data 1 Add cache Received insert : : 1 Start processing 1 of insert Start processing update: : 1 handle update: : 1 end handle 1 of insert end

By observing the log, this scheme can normally deal with the consumption order problem of data association between different topics.

Welcome to discuss the source code and the structure of the planet. Join method: long press the QR code below:

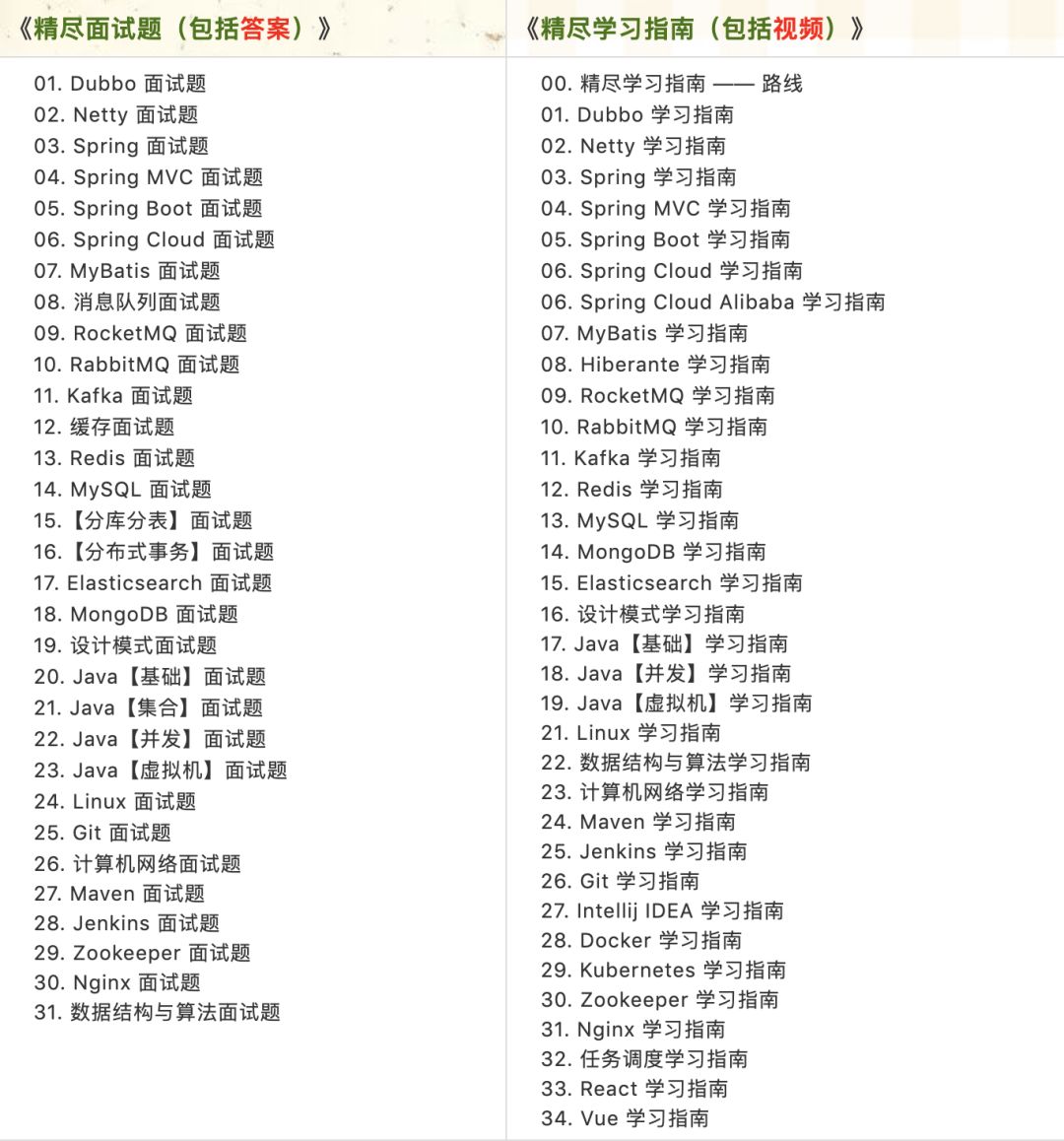

The source code has been updated on the knowledge planet. The analysis is as follows:

Recently updated the "Introduction to taro SpringBoot 2.X" series, which has more than 101 articles, covering MyBatis, Redis, MongoDB, ES, sub database and sub table, read-write separation, SpringMVC, Webflux, permissions, WebSocket, Dubbo, RabbitMQ, RocketMQ, Kafka, performance test, etc.

Provide SpringBoot examples with nearly 3W lines of code and e-commerce micro service projects with more than 4W lines of code.

The way of obtaining: click "see", pay attention to the official account and reply to 666, and more contents will be offered.

If the article is helpful, read it and forward it. Thank you for your support (*^__^*)