Case description:

This test case is used for the online capacity expansion test of kingbaseES V8R3 read-write separation cluster, which is mainly divided into three steps.

1, adopt sys_basebackup Create a new standby database. 2, Add spare database to Cluster nodes Management, can use kingbase_monitor.sh One key start stop. 3, Active / standby replication switching test.

Operating system and database version:

1)Operating system environment

[kingbase@#localhost ~]$ cat /etc/centos-release

CentOS Linux release 7.6.1810 (Core)

2)Database version

TEST=# select version();

version

-------------------------------------------------------------------------------

Kingbase V008R003C002B0100 on x86_64-pc-linux-gnu, compiled by gcc (GCC) 4.1.2 20080704 (Red Hat 4.1.2-46), 64-bit

(1 row)

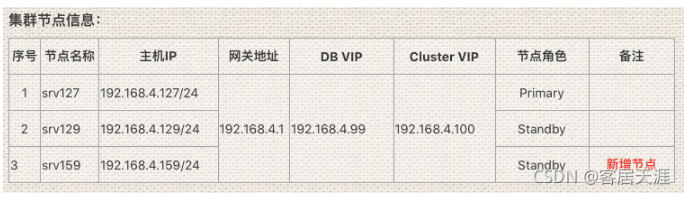

Preliminary preparation:

1, Original node, main library(192.168.4.127),Spare database(192.168.4.129),New node(192.168.4.159). 2, Install the same version of on the new node kingbaseES database 3, Configure the from the new node to the original node ssh Trust relationship( root,kingbase). 4, Turn off the new node firewall and selinux And start crond Service.

1, Add database replication nodes Online

1.1 viewing kingbase cluster node processes

=First, confirm that the Cluster and kingbases services of the original primary and standby databases are in normal status=

Main library: kingbase@srv127 bin]$ ps -ef |grep kingbase kingbase 15845 1 0 15:11 ? 00:00:00 /home/kingbase/cluster/ESHA/db/bin/kingbase -D /home/kingbase/cluster/ESHA/db/data kingbase 15877 15845 0 15:11 ? 00:00:00 kingbase: logger process kingbase 15879 15845 0 15:11 ? 00:00:00 kingbase: checkpointer process kingbase 15880 15845 0 15:11 ? 00:00:00 kingbase: writer process kingbase 15881 15845 0 15:11 ? 00:00:00 kingbase: wal writer process kingbase 15882 15845 0 15:11 ? 00:00:00 kingbase: autovacuum launcher process kingbase 15883 15845 0 15:11 ? 00:00:00 kingbase: archiver process kingbase 15884 15845 0 15:11 ? 00:00:00 kingbase: stats collector process kingbase 15885 15845 0 15:11 ? 00:00:00 kingbase: bgworker: syslogical supervisor kingbase 16851 15845 0 15:11 ? 00:00:00 kingbase: wal sender process SYSTEM 192.168.4.127(52967) streaming 0/180000D0 root 17781 1 0 15:11 ? 00:00:00 ./kingbasecluster -n root 17830 17781 0 15:11 ? 00:00:00 kingbasecluster: watchdog root 17895 17781 0 15:11 ? 00:00:00 kingbasecluster: lifecheck root 17897 17895 0 15:11 ? 00:00:00 kingbasecluster: heartbeat receiver root 17898 17895 0 15:11 ? 00:00:00 kingbasecluster: heartbeat sender root 17899 17781 0 15:11 ? 00:00:00 kingbasecluster: wait for connection request root 17900 17781 0 15:11 ? 00:00:00 kingbasecluster: wait for connection request root 17901 17781 0 15:11 ? 00:00:00 kingbasecluster: wait for connection request root 17912 17781 0 15:11 ? 00:00:00 kingbasecluster: wait for connection request root 17913 17781 0 15:11 ? 00:00:00 kingbasecluster: wait for connection request root 17914 17781 0 15:11 ? 00:00:00 kingbasecluster: wait for connection request root 17924 17781 0 15:11 ? 00:00:00 kingbasecluster: PCP: wait for connection request root 17925 17781 0 15:11 ? 00:00:00 kingbasecluster: worker process kingbase 20397 15845 0 15:12 ? 00:00:00 kingbase: SUPERMANAGER_V8ADMIN TEST 192.168.4.127(53139) idle Spare warehouse: [kingbase@srv129 bin]$ ps -ef |grep kingbase kingbase 30708 1 0 15:11 ? 00:00:00 /home/kingbase/cluster/ESHA/db/bin/kingbase -D /home/kingbase/cluster/ESHA/db/data kingbase 30709 30708 0 15:11 ? 00:00:00 kingbase: logger process kingbase 30710 30708 0 15:11 ? 00:00:00 kingbase: startup process recovering 000000030000000000000018 kingbase 30714 30708 0 15:11 ? 00:00:00 kingbase: checkpointer process kingbase 30715 30708 0 15:11 ? 00:00:00 kingbase: writer process kingbase 30716 30708 0 15:11 ? 00:00:00 kingbase: stats collector process kingbase 30995 1 0 10 June 18 ? 00:00:17 /home/kingbase/cluster/KHA6/KHA/kingbase/bin/kbha -A daemon -f /home/kingbase/cluster/KHA6/KHA/kingbase/bin/../etc/repmgr.conf kingbase 31173 30708 0 15:11 ? 00:00:00 kingbase: wal receiver process streaming 0/180000D0 root 31222 1 0 15:11 ? 00:00:00 ./kingbasecluster -n root 31264 31222 0 15:11 ? 00:00:00 kingbasecluster: watchdog root 31396 31222 0 15:11 ? 00:00:00 kingbasecluster: lifecheck root 31398 31396 0 15:11 ? 00:00:00 kingbasecluster: heartbeat receiver root 31399 31396 0 15:11 ? 00:00:00 kingbasecluster: heartbeat sender root 31400 31222 0 15:11 ? 00:00:00 kingbasecluster: wait for connection request root 31402 31222 0 15:11 ? 00:00:00 kingbasecluster: wait for connection request ...... root 31421 31222 0 15:11 ? 00:00:00 kingbasecluster: PCP: wait for connection request root 31422 31222 0 15:11 ? 00:00:00 kingbasecluster: worker process kingbase 32062 30708 0 15:12 ? 00:00:00 kingbase: SUPERMANAGER_V8ADMIN TEST 192.168.4.127(19332) idle

1.2 viewing active / standby replication information

=Ensure that the active and standby replication status in the cluster is normal=

kingbase@srv127 ~]$ ksql -U SYSTEM -W 123456 TEST warning: License file will expire in 1 days. ksql (V008R003C002B0160) Type "help" for help. TEST=# select * from sys_stat_replication; pid | usesysid | usename | application_name | client_addr | client_hostname | client_port | backend_start | backend_xmin | state | sent_lo cation | write_location | flush_location | replay_location | sync_priority | sync_state -------+----------+---------+------------------+---------------+------------- 16851 | 10 | SYSTEM | srv129 | 192.168.4.129 | | 52967 | 2020-10-23 15:11:17.836557+08 | | streaming | 0/18000 0D0 | 0/180000D0 | 0/180000D0 | 0/180000D0 | 0 | async (1 row)

1.3 add new standby database nodes Online

=In order to ensure that the test environment is close to the production environment, when creating the standby database, conduct large transactions at the primary database end. After the standby database is created, check whether it is consistent with the data of the primary database=

Transaction in master library (simulate production Library): prod=# insert into t10 values (generate_series(1,10000000),'tom'); prod=# select count(*) from t10; count ---------- 11000000 (1 row)

1.3. 1. Use sys on the new standby database_ Basebackup creates a standby database online

1) Create a backup database data storage directory

=Note: the directory location should be consistent with the directory location of other existing nodes as far as possible, so that there are few changes to the later configuration=

[kingbase@srv159 v8r3]$ mkdir -p /home/kingbase/cluster/ESHA/db/data [kingbase@srv159 v8r3]$ chmod 0700 /home/kingbase/cluster/ESHA/db/data

2) Via sys_ Create a backup database based on backup

[kingbase@srv159 v8r3]$sys_basebackup -h 192.168.4.127 -D /home/kingbase/cluster/ESHA/db/data -F p -x -v -P -U SYSTEM -W 123456 -p 54321 The transaction log starts at a point in time: 0/B20060D0, Based on schedule 3 2079583/2079583 kB (100%), 1/1 Tablespace transaction log end point: 0/D5C48538 sys_basebackup: base backup completed

1.3. 2. Configure standby database recovery Conf file

[kingbase@srv159 data]$ pwd /home/kingbase/cluster/ESHA/db/data [kingbase@srv159 data]$ cat recovery.conf standby_mode='on' primary_conninfo='port=54321 host=192.168.4.127 user=SYSTEM password=MTIzNDU2 application_name=srv159' recovery_target_timeline='latest' primary_slot_name ='slot_srv159'

1.3. 3. Configure standby database Kingbase Conf file (partial content)

# Add settings for extensions here max_wal_senders=4 wal_keep_segments=256 hot_standby_feedback=on max_prepared_transactions=100 port=54321 control_file_copy='/home/kingbase/cluster/ESHA/template.bk' fsync=on wal_log_hints=on archive_mode=on hot_standby=on wal_level=replica ###synchronous_standby_names='1(srv129)' archive_dest='/home/kingbase/cluster/ESHA/archivedir/' max_replication_slots=4 log_directory='/home/kingbase/cluster/ESHA/db/data/sys_log/'

1.3. 4. Configure all nodes sys_hba.conf file

# "local" is for Unix domain socket connections only local all all md5 # IPv4 local connections: host all all 127.0.0.1/32 md5 host all all 0.0.0.0/0 md5 # IPv6 local connections: host all all ::1/128 md5 host all all ::0/0 md5 # Allow replication connections from localhost, by a user with the # replication private host all SYSTEM 192.168.4.127/16 md5 host all SYSTEM 192.168.4.129/16 md5 host all SYSTEM 192.168.4.159/16 md5 host replication SYSTEM 192.168.4.127/16 md5 host replication SYSTEM 192.168.4.129/16 md5 host replication SYSTEM 192.168.4.159/16 md5

=Note: modify the sys of an existing node_ hba. After the conf file, the reload database process is required to make the configuration effective=

[kingbase@srv129 data]$ sys_ctl reload -D /home/kingbase/cluster/ESHA/db/data Server process signaling

1.3. 5 copy the following directories and files from the primary database to the new standby database

Remotely copy the following directories and files to the same location of the new node through scp:

=Note: "/ home/kingbase/cluster/ESHA" is the directory of cluster deployment, where "ESHA" is the cluster name. For archivedir, you can also create an empty directory=

[kingbase@#localhost ESHA]$ pwd /home/kingbase/cluster/ESHA [kingbase@#localhost ESHA]$ ls -lh Total consumption 8.0K drwxrwxr-x 2 kingbase kingbase 6 10 June 30-14:23 archivedir drwxrwxr-x 7 kingbase kingbase 84 10 June 30-17:00 db drwxrwxr-x 3 kingbase kingbase 181 10 June 30-17:23 log drwxrwxr-x 3 kingbase kingbase 29 10 June 30-14:55 run -rw------- 1 kingbase kingbase 8.0K 10 March 31, 2013:19 template.bk [kingbase@srv127 db]$ pwd /home/kingbase/cluster/ESHA/db [kingbase@srv127 db]$ ls -lh Total consumption 20 K drwxrwxr-x 2 kingbase kingbase 4.0K 10 June 29-17:29 bin drwxrwxr-x 2 kingbase kingbase 295 10 June 29-17:26 etc -rw------- 1 kingbase kingbase 151 8 June 18-20:13 kingbase.log drwxrwxr-x 2 kingbase kingbase 4.0K 7 October 27:41 lib drwxrwxr-x 9 kingbase kingbase 4.0K 7 October 27:41 share

=Note: do not copy the kingbasecluster directory to the new node for directory and file copying. In V8R3 cluster, the cluster only supports two active and standby nodes, and the new node can only be data node=

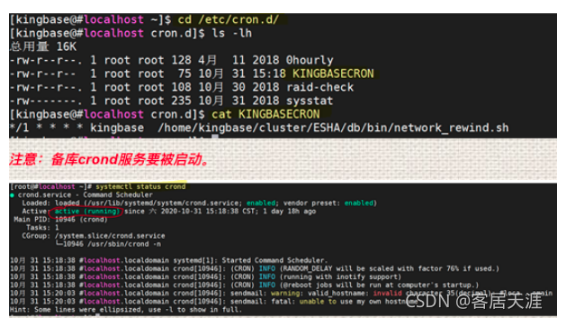

Copy the main library / etc / cron D / kingbasecron file to the same directory of the standby database:

1.3. 6. Add replication on the primary database side_ slot

1) View the replication that has been created_ slot:

TEST=# select * from sys_replication_slots; slot_name | plugin | slot_type | datoid | database | active | active_pid | xmin | catalog_xmin | restart_lsn | confirmed_flush_lsn -------------+--------+-----------+--------+----------+--------+------------+------+--------------+-------------+--------------------- slot_srv127 | | physical | | | f | | | | | slot_srv129 | | physical | | | f | | | | | (2 rows)

2) Create a replication for the new standby database_ slot

TEST=# select * from sys_create_physical_replication_slot('slot_srv159');

slot_name | xlog_position

-------------+---------------

slot_srv159 |

(1 row)

3) View the replication of active and standby replication_slots

TEST=# select * from sys_replication_slots; slot_name | plugin | slot_type | datoid | database | active | active_pid | xmin | catalog_xmin | restart_lsn | confirmed_flush_lsn -------------+--------+-----------+--------+----------+--------+------------+------+--------------+-------------+--------------------- slot_srv127 | | physical | | | f | | | | | slot_srv129 | | physical | | | f | | | | | slot_srv159 | | physical | | | f | | | | | (3 rows)

1.3. 7. Start the standby database instance to join the active / standby replication architecture

[kingbase@srv159 data]$ sys_ctl start -D /home/kingbase/cluster/ESHA/db/data Starting server process [kingbase@srv159 data]$ journal: Redirect log output to the log collection process Tips: Subsequent log output will appear in the directory "/home/kingbase/cluster/ESHA/db/data/sys_log"in. To view the standby database process: [kingbase@srv159 data]$ ps -ef |grep kingbase kingbase 5071 1 0 20:01 pts/3 00:00:00 /opt/Kingbase/ES/V8R3/Server/bin/kingbase -D /home/kingbase/cluster/ESHA/db/data kingbase 5072 5071 0 20:01 ? 00:00:00 kingbase: logger process kingbase 5073 5071 90 20:01 ? 00:00:19 kingbase: startup process waiting for 0000000300000000000000D6 kingbase 5077 5071 0 20:01 ? 00:00:00 kingbase: checkpointer process kingbase 5078 5071 0 20:01 ? 00:00:00 kingbase: writer process kingbase 5199 5071 0 20:01 ? 00:00:00 kingbase: stats collector process kingbase 5200 5071 0 20:01 ? 00:00:00 kingbase: wal receiver process kingbase 5201 5071 0 20:01 ? 00:00:00 kingbase: wal sender process SYSTEM 192.168.4.159(62391) idle

** 1.3. 8. View active / standby replication information**

1) View active / standby replication status information

TEST=# select * from sys_stat_replication; pid | usesysid | usename | application_name | client_addr | client_hostname | client_port | backend_start | backend_xmin | state | sent_lo cation | write_location | flush_location | replay_location | sync_priority | sync_state -------+----------+---------+------------------+---------------+------------- 16851 | 10 | SYSTEM | srv129 | 192.168.4.129 | | 52967 | 2020-10-23 15:11:17.836557+08 | | streaming | 0/ED000 000 | 0/ED000000 | 0/ED000000 | 0/ECFFF418 | 0 | async 12202 | 10 | SYSTEM | srv159 | 192.168.4.159 | | 47993 | 2020-10-23 20:10:32.521960+08 | 2059 | streaming | 0/ED000 000 | 0/ED000000 | 0/ED000000 | 0/ECFFF418 | 0 | async (2 rows)

2) View database data information

Main library: prod=# select count(*) from t10; count ---------- 21000000 (1 row) Spare warehouse: prod=# select count(*) from t10; count ---------- 21000000 (1 row)

remarks:

1) The new node has been viewed in the active / standby status information( srv159)Replication status information for. 2) Check that the synchronization data of the primary database and the standby database are consistent, which indicates that the standby database and the primary database maintain data consistency during the creation of basic backup. 3) It can be seen from the above that the standby database is created.

2, Configure a new node to join the cluster service and start and stop with one click

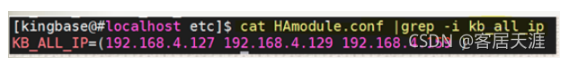

2.1 edit all node hamodule conf

Note: in KB_ ALL_ Add the ip of the new standby database node to the ip parameter.

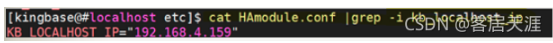

2.2 in the new node, modify the hamodule conf

Note: KB_ LOCALHOST_ The ip parameter is configured as native ip.

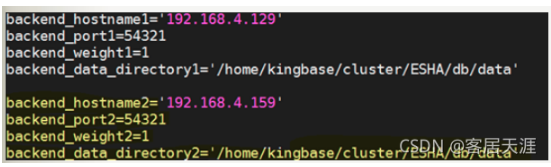

2.3 configure the kingbase_cluster.conf file of existing nodes (primary and standby libraries of the Cluster)

Note: the yellow part in the figure shows the configuration of new nodes. Modify it carefully, otherwise it will be displayed in show pool_ New nodes cannot be found while nodes.

2.4 test kingbase_monitor.sh one click Start / stop cluster:

[kingbase@srv129 bin]$ ./kingbase_monitor.sh restart ----------------------------------------------------------------------- 2020-10-29 17:32:33 KingbaseES automation beging... 2020-10-29 17:32:33 stop kingbasecluster [192.168.4.127] ... DEL VIP NOW AT 2020-10-29 17:32:32 ON ens192 No VIP on my dev, nothing to do. 2020-10-29 17:32:33 Done... 2020-10-29 17:32:33 stop kingbasecluster [192.168.4.129] ... DEL VIP NOW AT 2020-10-29 17:32:34 ON ens192 No VIP on my dev, nothing to do. 2020-10-29 17:32:34 Done... 2020-10-29 17:32:34 stop kingbase [192.168.4.127] ... set /home/kingbase/cluster/ESHA/db/data down now... 2020-10-29 17:32:37 Done... 2020-10-29 17:32:38 Del kingbase VIP [192.168.4.99/24] ... DEL VIP NOW AT 2020-10-29 17:32:37 ON ens192 No VIP on my dev, nothing to do. 2020-10-29 17:32:38 Done... 2020-10-29 17:32:38 stop kingbase [192.168.4.129] ... set /home/kingbase/cluster/ESHA/db/data down now... 2020-10-29 17:32:40 Done... 2020-10-29 17:32:41 Del kingbase VIP [192.168.4.99/24] ... DEL VIP NOW AT 2020-10-29 17:32:42 ON ens192 execute: [/sbin/ip addr del 192.168.4.99/24 dev ens192] Oprate del ip cmd end. 2020-10-29 17:32:42 Done... 2020-10-29 17:32:42 stop kingbase [192.168.4.159] ... 2020-10-29 17:32:42 Done... 2020-10-29 17:32:43 Del kingbase VIP [192.168.4.99/24] ... DEL VIP NOW AT 2020-10-29 17:32:43 ON ens192 No VIP on my dev, nothing to do. 2020-10-29 17:32:43 Done... ...................... all stop.. start crontab kingbase position : [3] Redirecting to /bin/systemctl restart crond.service start crontab kingbase position : [3] Redirecting to /bin/systemctl restart crond.service ADD VIP NOW AT 2020-10-29 17:32:51 ON ens192 execute: [/sbin/ip addr add 192.168.4.99/24 dev ens192 label ens192:2] execute: /usr/sbin/arping -U 192.168.4.99 -I ens192 -w 1 ARPING 192.168.4.99 from 192.168.4.99 ens192 Sent 1 probes (1 broadcast(s)) Received 0 response(s) Redirecting to /bin/systemctl restart crond.service wait kingbase recovery 5 sec... start crontab kingbasecluster line number: [4] Redirecting to /bin/systemctl restart crond.service start crontab kingbasecluster line number: [4] Redirecting to /bin/systemctl restart crond.service ...................... all started.. ... now we check again ================================================================== | ip | program| [status] [ 192.168.4.127]| [kingbasecluster]| [active] [ 192.168.4.129]| [kingbasecluster]| [active] [ 192.168.4.127]| [kingbase]| [active] [ 192.168.4.129]| [kingbase]| [active] [ 192.168.4.159]| [kingbase]| [active] ==================================================================

Note: as can be seen from the above, the cluster architecture is a 2-node cluster and a 3-node data node.

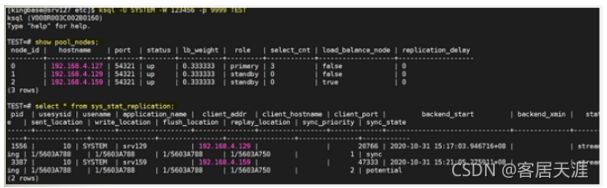

2.5 viewing new node information

3, Cluster handover test

3.1 test objectives

When the database service of the original standby database stops or goes down, and when the primary database service or goes down, the new standby database switches to the primary database.

3.2 test steps

1,Stop the of the original / standby database first kingbaseES Service. 2,Then stop the of the main library kingbaseES Service. 3,Check whether the new standby database is switched to the primary database.

3.3 implement active / standby switching

1. Stop the kingbases service of the original and standby databases

[kingbase@srv129 bin]$ sys_ctl stop -D /home/kingbase/cluster/ESHA/db/data Wait for the server process to shut down .... complete The server process has shut down

2. View replication status information

1) Copy status information

TEST=# select * from sys_stat_replication; pid | usesysid | usename | application_name | client_addr | client_hostname | client_port | backend_start | backend_xmin | stat e | sent_location | write_location | flush_location | replay_location | sync_priority | sync_state ------+----------+---------+------------------+---------------+-------------- 1556 | 10 | SYSTEM | srv129 | 192.168.4.129 | | 20766 | 2020-10-31 15:17:03.946716+08 | | stream ing | 1/5603A788 | 1/5603A788 | 1/5603A788 | 1/5603A750 | 1 | sync 3387 | 10 | SYSTEM | srv159 | 192.168.4.159 | | 47333 | 2020-10-31 15:21:05.275911+08 | | stream ing | 1/5603A788 | 1/5603A788 | 1/5603A788 | 1/5603A750 | 2 | potential (2 rows) Note: in the architecture of one master and two slaves, the number of new nodes sync_stat Status is potential"

2) The original and standby database kingbases are stopped

TEST=# select * from sys_stat_replication; pid | usesysid | usename | application_name | client_addr | client_hostname | client_port | backend_start | backend_xmin | stat e | sent_location | write_location | flush_location | replay_location | sync_priority | sync_state ------+----------+---------+------------------+---------------+--------------- 3387 | 10 | SYSTEM | srv159 | 192.168.4.159 | | 47333 | 2020-10-31 15:21:05.275911+08 | | stream ing | 1/5603A980 | 1/5603A980 | 1/5603A980 | 1/5603A8D8 | 0 | async (1 row) Note: in the one master and one slave architecture, the number of new nodes sync_stat Status is“ async".

3) View pool node information

TEST=# show pool_nodes; node_id | hostname | port | status | lb_weight | role | select_cnt | load_balance_node | replication_delay ---------+---------------+-------+--------+-----------+---------+------------+-------------------+------------------- 0 | 192.168.4.127 | 54321 | up | 0.333333 | primary | 5 | false | 0 1 | 192.168.4.129 | 54321 | down | 0.333333 | standby | 0 | false | 0 2 | 192.168.4.159 | 54321 | up | 0.333333 | standby | 0 | true | 0 (3 rows)

3. Stop the kingbases database service of the main library

[kingbase@srv127 etc]$ sys_ctl stop -D /home/kingbase/cluster/ESHA/db/data Wait for the server process to shut down .... complete The server process has shut down

**4. After switching, view the standby database information**

1) View the kingbases process of the new standby database

[kingbase@#localhost log]$ ps -ef |grep kingbase kingbase 11680 1 0 15:21 pts/1 00:00:01 /home/kingbase/cluster/ESHA/db/bin/kingbase -D /home/kingbase/cluster/ESHA/db/data kingbase 11681 11680 0 15:21 ? 00:00:00 kingbase: logger process kingbase 11686 11680 0 15:21 ? 00:00:00 kingbase: checkpointer process kingbase 11687 11680 0 15:21 ? 00:00:00 kingbase: writer process kingbase 11689 11680 0 15:21 ? 00:00:00 kingbase: stats collector process kingbase 14821 31212 0 10 June 30 pts/0 00:00:00 ../bin/kingbasecluster -n -d kingbase 14840 14821 0 10 June 30 pts/0 00:00:35 kingbasecluster: watchdog root 14850 14675 0 09:48 pts/1 00:00:00 su - kingbase kingbase 14851 14850 0 09:48 pts/1 00:00:00 -bash kingbase 15518 11680 0 15:33 ? 00:00:00 kingbase: wal writer process kingbase 15519 11680 0 15:33 ? 00:00:00 kingbase: autovacuum launcher process kingbase 15520 11680 0 15:33 ? 00:00:00 kingbase: archiver process last was 00000005.history kingbase 15521 11680 0 15:33 ? 00:00:00 kingbase: bgworker: syslogical supervisor kingbase 16075 11680 0 15:35 ? 00:00:00 kingbase: SUPERMANAGER_V8ADMIN TEST 192.168.4.127(34260) idle

2) Connect instance to view database status

[kingbase@#localhost data]$ ksql -U system -W 123456 TEST ksql (V008R003C002B0160) Type "help" for help. TEST=# select sys_is_in_recovery(); sys_is_in_recovery -------------------- f (1 row) Note: the standby database has been switched to the primary database.

3) Viewing the recovery log

[kingbase@#localhost log]$ tail -f recovery.log --------------------------------------------------------------------- 2020-10-31 15:36:02 recover beging... 2020-10-31 15:36:02 check if the network is ok ping trust ip 192.168.4.1 scuccess ping times :[3], scuccess times:[3] determine if i am master or standby I,m node is primary, determine whether there is a standby db can be set to synchronous standby ===As can be seen from the above, the standby database has been switched to the primary database.===

4, Summary

1,kingbaseES V8R3 The read-write separation cluster can add new nodes online and manually to expand the cluster architecture horizontally. 2,In modification kingbasecluster.conf Restart required after kingbasecluster Service, you can first cluster Standby node pass command(kingbasecluster –m fast stop Shut down the service) and then shut down cluster Master node service; Start first cluster Master node service(kingbasecluster –n >kingbasecluster.log 2>&1 &)Start the cluster service in the mode, and start it after the master node is started cluster Standby node. 3,Testing should be done well before adding nodes, which should be completed at low peak times. 4,This test document is only for CentOS 7 Under the environment, please test offline before using other versions.