kube-scheduler source code analysis (1): initialization and startup

Introduction to kube-scheduler

kube-scheduler is one of the core components of Kubernetes and is responsible for scheduling pod resource objects. Specifically, it assigns each unscheduled pod to a suitable, optimal node according to the scheduling algorithms, which consist of predicate (filtering) algorithms and priority (scoring) algorithms.

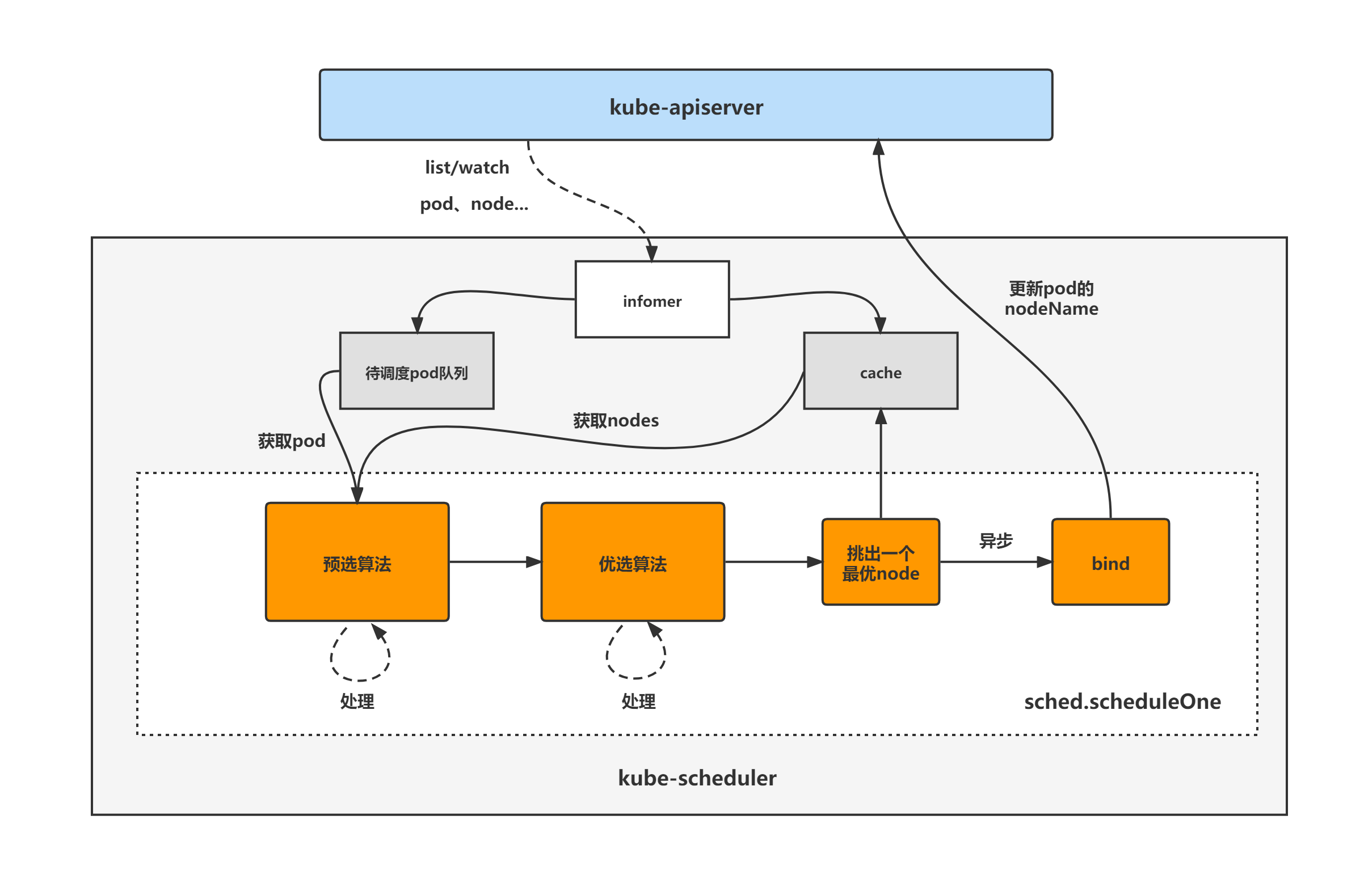

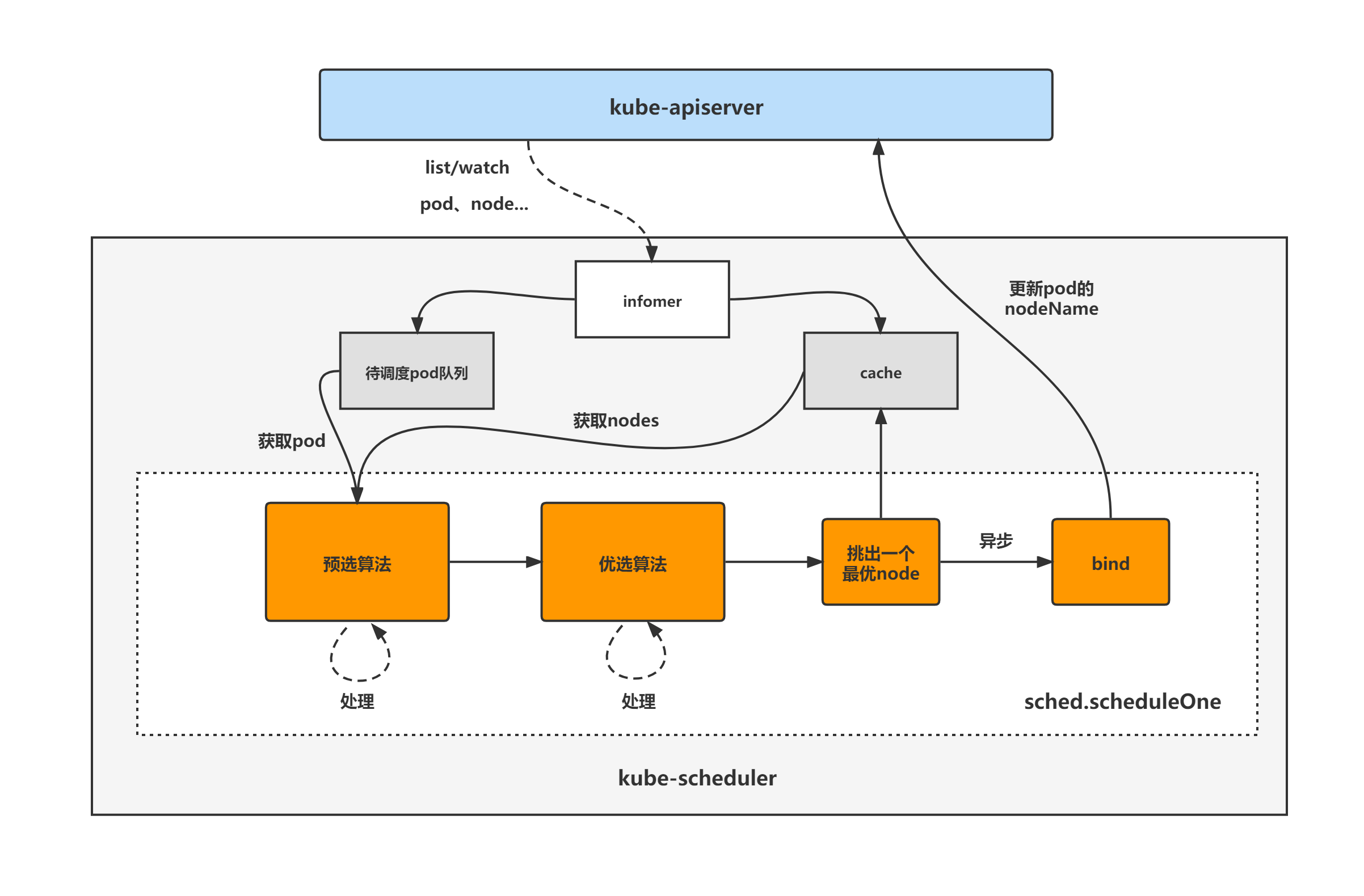

kube-scheduler architecture diagram

The general composition and processing flow of kube-scheduler are shown in the architecture diagram. kube-scheduler lists and watches pods, nodes and other objects. Through the informers it puts unscheduled pods into the scheduling queue and builds the scheduler cache (used to quickly obtain nodes and other required objects). The scheduleOne method is the core processing logic of kube-scheduler for scheduling pods: it takes one pod from the queue of unscheduled pods, runs the predicate and priority algorithms to select an optimal node, then updates the cache and performs the bind operation asynchronously, that is, it sets the nodeName field of the pod. At that point the scheduling of the pod is complete.
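The filter-then-score flow described above can be sketched in a few lines of Go. This is only a toy illustration: the Node and Pod types, the Predicate and Priority signatures, and the helper names fitsCPU and leastRequested are assumptions made for this sketch and are not the types used by kube-scheduler.

```go
package main

import "fmt"

// Node and Pod are toy stand-ins for the real API objects (assumptions).
type Node struct {
	Name    string
	FreeCPU int // free millicores
}

type Pod struct {
	Name   string
	CPUReq int // requested millicores
}

// Predicate reports whether a pod can run on a node at all (filter phase).
type Predicate func(p Pod, n Node) bool

// Priority ranks a feasible node; a higher score is better (score phase).
type Priority func(p Pod, n Node) int

// scheduleOne filters nodes with every predicate, scores the survivors with
// every priority, and returns the name of the highest-scoring node.
func scheduleOne(p Pod, nodes []Node, preds []Predicate, prios []Priority) (string, bool) {
	best, bestScore, found := "", 0, false
	for _, n := range nodes {
		feasible := true
		for _, pred := range preds {
			if !pred(p, n) {
				feasible = false
				break
			}
		}
		if !feasible {
			continue
		}
		score := 0
		for _, prio := range prios {
			score += prio(p, n)
		}
		if !found || score > bestScore {
			best, bestScore, found = n.Name, score, true
		}
	}
	return best, found
}

// fitsCPU is a hypothetical predicate: the node must have enough free CPU.
func fitsCPU(p Pod, n Node) bool { return n.FreeCPU >= p.CPUReq }

// leastRequested is a hypothetical priority: prefer the node with the most
// CPU left over after placing the pod.
func leastRequested(p Pod, n Node) int { return n.FreeCPU - p.CPUReq }

func main() {
	nodes := []Node{{"node-a", 500}, {"node-b", 2000}, {"node-c", 1200}}
	pod := Pod{Name: "web", CPUReq: 1000}
	name, ok := scheduleOne(pod, nodes, []Predicate{fitsCPU}, []Priority{leastRequested})
	fmt.Println(name, ok) // node-a is filtered out; node-b scores highest
}
```

In the real component the chosen node is then written to the cache and bound asynchronously; the sketch stops at node selection.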

The analysis of the kube-scheduler component is divided into two parts:

(1) kube-scheduler initialization and startup analysis;

(2) kube-scheduler core processing logic analysis.

This article analyzes the initialization and startup of kube-scheduler first; the core processing logic is analyzed afterwards.

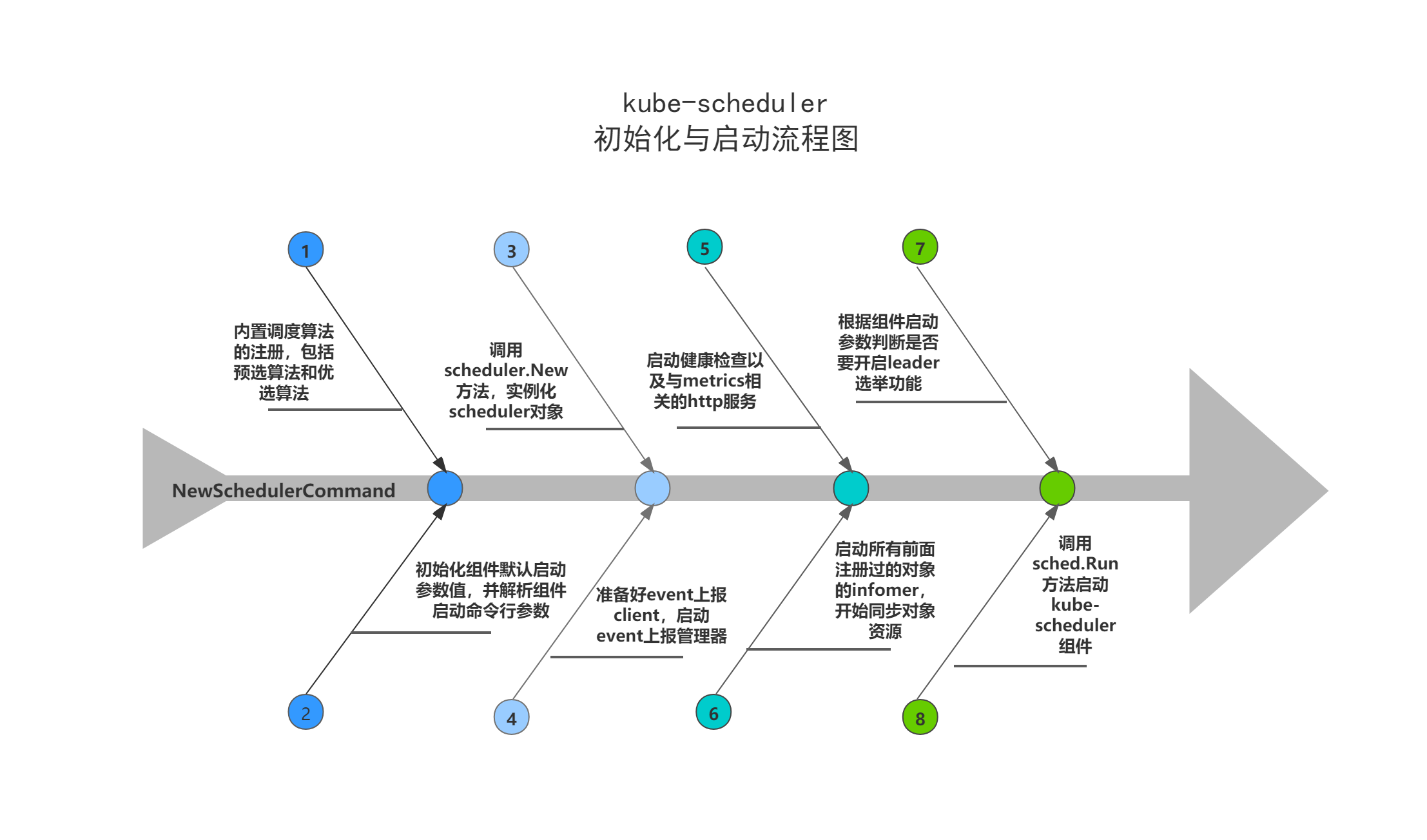

1. kube-scheduler initialization and startup analysis

Based on tag v1.17.4

https://github.com/kubernetes/kubernetes/releases/tag/v1.17.4

The NewSchedulerCommand function is the entry point for the analysis of kube-scheduler initialization and startup.

NewSchedulerCommand

The main logic of the NewSchedulerCommand function:

(1) Initialize the default startup parameter values of the component;

(2) Define the run command of the kube-scheduler component, namely the runCommand function (runCommand eventually calls the Run function to run and start the kube-scheduler component; Run is analyzed below);

(3) Set up command-line parameter parsing for the kube-scheduler component.

    // cmd/kube-scheduler/app/server.go
    func NewSchedulerCommand(registryOptions ...Option) *cobra.Command {
        // 1. Initialize the default startup parameter values of the component
        opts, err := options.NewOptions()
        if err != nil {
            klog.Fatalf("unable to initialize command options: %v", err)
        }

        // 2. Define the run command of the kube-scheduler component, i.e. the runCommand function
        cmd := &cobra.Command{
            Use: "kube-scheduler",
            Long: `The Kubernetes scheduler is a policy-rich, topology-aware,
    workload-specific function that significantly impacts availability, performance,
    and capacity. The scheduler needs to take into account individual and collective
    resource requirements, quality of service requirements, hardware/software/policy
    constraints, affinity and anti-affinity specifications, data locality, inter-workload
    interference, deadlines, and so on. Workload-specific requirements will be exposed
    through the API as necessary.`,
            Run: func(cmd *cobra.Command, args []string) {
                if err := runCommand(cmd, args, opts, registryOptions...); err != nil {
                    fmt.Fprintf(os.Stderr, "%v\n", err)
                    os.Exit(1)
                }
            },
        }

        // 3. Command-line startup parameter parsing for the component
        fs := cmd.Flags()
        namedFlagSets := opts.Flags()
        verflag.AddFlags(namedFlagSets.FlagSet("global"))
        globalflag.AddGlobalFlags(namedFlagSets.FlagSet("global"), cmd.Name())
        for _, f := range namedFlagSets.FlagSets {
            fs.AddFlagSet(f)
        }
        ...
    }
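The third step above merges several named flag groups into the single flag set of the command. A rough sketch of that idea using only the standard library flag package follows; the real code uses cobra and pflag, and the group names, flag names and default values here are hypothetical.

```go
package main

import (
	"flag"
	"fmt"
)

// mergeFlagSets copies every flag of the given groups into target, similar in
// spirit to the fs.AddFlagSet loop in NewSchedulerCommand.
func mergeFlagSets(target *flag.FlagSet, groups ...*flag.FlagSet) {
	for _, g := range groups {
		g.VisitAll(func(f *flag.Flag) {
			target.Var(f.Value, f.Name, f.Usage)
		})
	}
}

// parseArgs declares two hypothetical flag groups with default values, merges
// them, and parses args against the merged set.
func parseArgs(args []string) (port int, leaderElect bool, err error) {
	serving := flag.NewFlagSet("serving", flag.ContinueOnError)
	serving.IntVar(&port, "port", 10251, "hypothetical serving port")

	election := flag.NewFlagSet("leader-election", flag.ContinueOnError)
	election.BoolVar(&leaderElect, "leader-elect", true, "hypothetical leader election switch")

	all := flag.NewFlagSet("demo-scheduler", flag.ContinueOnError)
	mergeFlagSets(all, serving, election)
	err = all.Parse(args)
	return port, leaderElect, err
}

func main() {
	port, leaderElect, err := parseArgs([]string{"--port=8080", "--leader-elect=false"})
	fmt.Println(port, leaderElect, err)
}
```

Because each group registers its defaults before parsing, flags that are not passed on the command line keep their default values, which corresponds to step 1 of the list above.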

runCommand

runCommand defines the run command function of the kube-scheduler component. Two parts of its logic matter here:

(1) Call algorithmprovider.ApplyFeatureGates, which decides whether to additionally register the corresponding predicate and priority algorithms depending on which FeatureGates are enabled;

(2) Call Run to start the kube-scheduler component.

    // cmd/kube-scheduler/app/server.go
    // runCommand runs the scheduler.
    func runCommand(cmd *cobra.Command, args []string, opts *options.Options, registryOptions ...Option) error {
        ...
        // Apply algorithms based on feature gates.
        // TODO: make configurable?
        algorithmprovider.ApplyFeatureGates()

        // Configz registration.
        if cz, err := configz.New("componentconfig"); err == nil {
            cz.Set(cc.ComponentConfig)
        } else {
            return fmt.Errorf("unable to register configz: %s", err)
        }

        ctx, cancel := context.WithCancel(context.Background())
        defer cancel()

        return Run(ctx, cc, registryOptions...)
    }

1.1 algorithmprovider.ApplyFeatureGates

Depending on which FeatureGates are enabled, this function decides whether to additionally register the corresponding predicate and priority algorithms.

    // pkg/scheduler/algorithmprovider/plugins.go
    import (
        "k8s.io/kubernetes/pkg/scheduler/algorithmprovider/defaults"
    )

    func ApplyFeatureGates() func() {
        return defaults.ApplyFeatureGates()
    }

1.1.1 init

plugins.go imports the defaults package, so before looking at defaults.ApplyFeatureGates, first look at the init functions of the defaults package. They register the built-in scheduling algorithms, both predicate algorithms and priority algorithms.

(1) First, the init function in defaults.go of the defaults package.

    // pkg/scheduler/algorithmprovider/defaults/defaults.go
    func init() {
        registerAlgorithmProvider(defaultPredicates(), defaultPriorities())
    }

Predicate algorithms:

    // pkg/scheduler/algorithmprovider/defaults/defaults.go
    func defaultPredicates() sets.String {
        return sets.NewString(
            predicates.NoVolumeZoneConflictPred,
            predicates.MaxEBSVolumeCountPred,
            predicates.MaxGCEPDVolumeCountPred,
            predicates.MaxAzureDiskVolumeCountPred,
            predicates.MaxCSIVolumeCountPred,
            predicates.MatchInterPodAffinityPred,
            predicates.NoDiskConflictPred,
            predicates.GeneralPred,
            predicates.PodToleratesNodeTaintsPred,
            predicates.CheckVolumeBindingPred,
            predicates.CheckNodeUnschedulablePred,
        )
    }

Priority algorithms:

    // pkg/scheduler/algorithmprovider/defaults/defaults.go
    func defaultPriorities() sets.String {
        return sets.NewString(
            priorities.SelectorSpreadPriority,
            priorities.InterPodAffinityPriority,
            priorities.LeastRequestedPriority,
            priorities.BalancedResourceAllocation,
            priorities.NodePreferAvoidPodsPriority,
            priorities.NodeAffinityPriority,
            priorities.TaintTolerationPriority,
            priorities.ImageLocalityPriority,
        )
    }

The registerAlgorithmProvider function registers the algorithm providers. A provider stores the lists of scheduling algorithms of each type, predicate and priority. Only the algorithm keys are stored, not the algorithms themselves.

    // pkg/scheduler/algorithmprovider/defaults/defaults.go
    func registerAlgorithmProvider(predSet, priSet sets.String) {
        // Registers algorithm providers. By default we use 'DefaultProvider', but user can specify one to be used
        // by specifying flag.
        scheduler.RegisterAlgorithmProvider(scheduler.DefaultProvider, predSet, priSet)
        // Cluster autoscaler friendly scheduling algorithm.
        scheduler.RegisterAlgorithmProvider(ClusterAutoscalerProvider, predSet,
            copyAndReplace(priSet, priorities.LeastRequestedPriority, priorities.MostRequestedPriority))
    }

Finally, the registered algorithm provider is stored in algorithmProviderMap, a package-level global variable that holds the key lists of all types of scheduling algorithms.

    // pkg/scheduler/algorithm_factory.go
    // RegisterAlgorithmProvider registers a new algorithm provider with the algorithm registry.
    func RegisterAlgorithmProvider(name string, predicateKeys, priorityKeys sets.String) string {
        schedulerFactoryMutex.Lock()
        defer schedulerFactoryMutex.Unlock()
        validateAlgorithmNameOrDie(name)
        algorithmProviderMap[name] = AlgorithmProviderConfig{
            FitPredicateKeys:     predicateKeys,
            PriorityFunctionKeys: priorityKeys,
        }
        return name
    }

    // pkg/scheduler/algorithm_factory.go
    var (
        ...
        algorithmProviderMap = make(map[string]AlgorithmProviderConfig)
        ...
    )
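To make the provider registration concrete, here is a minimal, self-contained sketch of the same pattern: an init function fills a package-level map with key sets, and a second provider reuses the first one's priorities with a single key swapped. The keySet type, the helper names and the key strings are stand-ins chosen for this sketch, not the scheduler's own definitions.

```go
package main

import (
	"fmt"
	"sort"
)

// keySet is a minimal string set (a stand-in for sets.String).
type keySet map[string]struct{}

func newKeySet(keys ...string) keySet {
	s := keySet{}
	for _, k := range keys {
		s[k] = struct{}{}
	}
	return s
}

// sorted returns the keys in a stable order for printing.
func (s keySet) sorted() []string {
	out := make([]string, 0, len(s))
	for k := range s {
		out = append(out, k)
	}
	sort.Strings(out)
	return out
}

// copyAndReplace returns a copy of s in which oldKey, if present, is swapped
// for newKey. The input set is left untouched.
func copyAndReplace(s keySet, oldKey, newKey string) keySet {
	out := keySet{}
	for k := range s {
		out[k] = struct{}{}
	}
	if _, ok := out[oldKey]; ok {
		delete(out, oldKey)
		out[newKey] = struct{}{}
	}
	return out
}

// providerConfig stores only algorithm keys, never the algorithms themselves.
type providerConfig struct {
	predicateKeys keySet
	priorityKeys  keySet
}

// providers plays the role of the package-level provider map.
var providers = map[string]providerConfig{}

func registerProvider(name string, preds, prios keySet) {
	providers[name] = providerConfig{preds, prios}
}

// init runs when the package is loaded, so registration happens as a side
// effect of importing the package.
func init() {
	preds := newKeySet("GeneralPredicates", "NoDiskConflict")
	prios := newKeySet("LeastRequestedPriority", "ImageLocalityPriority")
	registerProvider("DefaultProvider", preds, prios)
	registerProvider("ClusterAutoscalerProvider", preds,
		copyAndReplace(prios, "LeastRequestedPriority", "MostRequestedPriority"))
}

func main() {
	for _, name := range []string{"DefaultProvider", "ClusterAutoscalerProvider"} {
		fmt.Println(name, providers[name].priorityKeys.sorted())
	}
}
```

Copying before replacing matters: both providers are built from the same input set, so mutating it in place would silently change the default provider as well.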

(2) Next, the init function in register_predicates.go of the defaults package, which registers the predicate algorithms.

    // pkg/scheduler/algorithmprovider/defaults/register_predicates.go
    func init() {
        ...
        // Fit is defined based on the absence of port conflicts.
        // This predicate is actually a default predicate, because it is invoked from
        // predicates.GeneralPredicates()
        scheduler.RegisterFitPredicate(predicates.PodFitsHostPortsPred, predicates.PodFitsHostPorts)
        // Fit is determined by resource availability.
        // This predicate is actually a default predicate, because it is invoked from
        // predicates.GeneralPredicates()
        scheduler.RegisterFitPredicate(predicates.PodFitsResourcesPred, predicates.PodFitsResources)
        ...
    }

(3) Finally, the init function in register_priorities.go of the defaults package, which registers the priority algorithms.

    // pkg/scheduler/algorithmprovider/defaults/register_priorities.go
    func init() {
        ...
        // Prioritize nodes by least requested utilization.
        scheduler.RegisterPriorityMapReduceFunction(priorities.LeastRequestedPriority, priorities.LeastRequestedPriorityMap, nil, 1)

        // Prioritizes nodes to help achieve balanced resource usage
        scheduler.RegisterPriorityMapReduceFunction(priorities.BalancedResourceAllocation, priorities.BalancedResourceAllocationMap, nil, 1)
        ...
    }

The results of registering the predicate and priority algorithms are stored in package-level global variables: registered predicate algorithms go into fitPredicateMap, and registered priority algorithms go into priorityFunctionMap.

    // pkg/scheduler/algorithm_factory.go
    var (
        ...
        fitPredicateMap = make(map[string]FitPredicateFactory)
        ...
        priorityFunctionMap = make(map[string]PriorityConfigFactory)
        ...
    )
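Since a provider stores only keys while the registries map keys to implementations, the keys have to be resolved against the registry at some point. The following sketch shows that lookup under simplified assumptions: the fitPredicate signature, the resolve helper and the registered key are invented for illustration and do not reproduce the scheduler's factory types.

```go
package main

import "fmt"

// fitPredicate is a toy predicate signature (an assumption for this sketch).
type fitPredicate func(podCPU, nodeFreeCPU int) bool

// fitPredicateMap plays the role of the global key -> implementation registry.
var fitPredicateMap = map[string]fitPredicate{}

// registerFitPredicate stores the implementation under name and returns the
// name, so callers can pass the key on to a provider.
func registerFitPredicate(name string, p fitPredicate) string {
	fitPredicateMap[name] = p
	return name
}

// resolve turns a provider's key list into callable predicates and fails on
// any key that was never registered.
func resolve(keys []string) ([]fitPredicate, error) {
	out := make([]fitPredicate, 0, len(keys))
	for _, k := range keys {
		p, ok := fitPredicateMap[k]
		if !ok {
			return nil, fmt.Errorf("predicate %q is not registered", k)
		}
		out = append(out, p)
	}
	return out, nil
}

func init() {
	registerFitPredicate("PodFitsResources", func(podCPU, free int) bool { return podCPU <= free })
}

func main() {
	preds, err := resolve([]string{"PodFitsResources"})
	fmt.Println(len(preds), err)

	_, err = resolve([]string{"NoSuchPredicate"})
	fmt.Println(err)
}
```

Keeping keys and implementations apart means a provider or policy can name an algorithm without importing it; the cost is that a misspelled key is only detected when it is resolved.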

1.1.2 defaults.ApplyFeatureGates

This function checks whether specific FeatureGates are enabled and, if so, additionally registers the corresponding predicate and priority algorithms.

    // pkg/scheduler/algorithmprovider/defaults/defaults.go
    func ApplyFeatureGates() (restore func()) {
        ...
        // Only register EvenPodsSpread predicate & priority if the feature is enabled
        if utilfeature.DefaultFeatureGate.Enabled(features.EvenPodsSpread) {
            klog.Infof("Registering EvenPodsSpread predicate and priority function")
            // register predicate
            scheduler.InsertPredicateKeyToAlgorithmProviderMap(predicates.EvenPodsSpreadPred)
            scheduler.RegisterFitPredicate(predicates.EvenPodsSpreadPred, predicates.EvenPodsSpreadPredicate)
            // register priority
            scheduler.InsertPriorityKeyToAlgorithmProviderMap(priorities.EvenPodsSpreadPriority)
            scheduler.RegisterPriorityMapReduceFunction(
                priorities.EvenPodsSpreadPriority,
                priorities.CalculateEvenPodsSpreadPriorityMap,
                priorities.CalculateEvenPodsSpreadPriorityReduce,
                1,
            )
        }

        // Prioritizes nodes that satisfy pod's resource limits
        if utilfeature.DefaultFeatureGate.Enabled(features.ResourceLimitsPriorityFunction) {
            klog.Infof("Registering resourcelimits priority function")
            scheduler.RegisterPriorityMapReduceFunction(priorities.ResourceLimitsPriority, priorities.ResourceLimitsPriorityMap, nil, 1)
            // Register the priority function to specific provider too.
            scheduler.InsertPriorityKeyToAlgorithmProviderMap(scheduler.RegisterPriorityMapReduceFunction(priorities.ResourceLimitsPriority, priorities.ResourceLimitsPriorityMap, nil, 1))
        }
        ...
    }
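The gate-dependent registration can be reduced to a small sketch: an optional key is added to every provider only when its gate is on. The providerKeys type and the plain map used as a feature gate are simplifications assumed for this example; the key strings are illustrative.

```go
package main

import "fmt"

// providerKeys maps a provider name to its set of priority keys (toy state).
type providerKeys map[string]map[string]bool

// insertKeyToAllProviders adds key to every registered provider.
func insertKeyToAllProviders(p providerKeys, key string) {
	for _, keys := range p {
		keys[key] = true
	}
}

// applyFeatureGates adds optional priority keys only when their gate is on.
// A missing gate reads as false, that is, disabled.
func applyFeatureGates(gates map[string]bool, p providerKeys) {
	if gates["EvenPodsSpread"] {
		insertKeyToAllProviders(p, "EvenPodsSpreadPriority")
	}
	if gates["ResourceLimitsPriorityFunction"] {
		insertKeyToAllProviders(p, "ResourceLimitsPriority")
	}
}

func main() {
	p := providerKeys{
		"DefaultProvider":           {"LeastRequestedPriority": true},
		"ClusterAutoscalerProvider": {"MostRequestedPriority": true},
	}
	applyFeatureGates(map[string]bool{"EvenPodsSpread": true}, p)
	fmt.Println(p["DefaultProvider"]["EvenPodsSpreadPriority"])
	fmt.Println(p["DefaultProvider"]["ResourceLimitsPriority"])
}
```

Note that the sketch only covers inserting keys into providers; in the code above each gate also registers the implementation behind the key.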

1.2 Run

The Run function runs and starts the kube-scheduler component according to the configuration parameters. Its core logic is as follows:

(1) Prepare the event reporting client, which reports the events generated by kube-scheduler to the API server;

(2) Call scheduler.New to instantiate the scheduler object;

(3) Start the event reporting manager;

(4) Set up the health checks of the kube-scheduler component and start the HTTP services for health checks and metrics;

(5) Start the informers of all previously registered objects and begin synchronizing object resources;

(6) Call WaitForCacheSync and wait until all informers have synced, so that the local cache is consistent with the data in etcd;

(7) Decide, according to the component startup parameters, whether to enable leader election;

(8) Call sched.Run to start the kube-scheduler component (sched.Run is the entry point of the later analysis of kube-scheduler core processing logic).

    // cmd/kube-scheduler/app/server.go
    func Run(ctx context.Context, cc schedulerserverconfig.CompletedConfig, outOfTreeRegistryOptions ...Option) error {
        // To help debugging, immediately log version
        klog.V(1).Infof("Starting Kubernetes Scheduler version %+v", version.Get())

        outOfTreeRegistry := make(framework.Registry)
        for _, option := range outOfTreeRegistryOptions {
            if err := option(outOfTreeRegistry); err != nil {
                return err
            }
        }

        // 1. Prepare the event reporting client, which reports the events generated by kube-scheduler to the API server
        // Prepare event clients.
        if _, err := cc.Client.Discovery().ServerResourcesForGroupVersion(eventsv1beta1.SchemeGroupVersion.String()); err == nil {
            cc.Broadcaster = events.NewBroadcaster(&events.EventSinkImpl{Interface: cc.EventClient.Events("")})
            cc.Recorder = cc.Broadcaster.NewRecorder(scheme.Scheme, cc.ComponentConfig.SchedulerName)
        } else {
            recorder := cc.CoreBroadcaster.NewRecorder(scheme.Scheme, v1.EventSource{Component: cc.ComponentConfig.SchedulerName})
            cc.Recorder = record.NewEventRecorderAdapter(recorder)
        }

        // 2. Call scheduler.New to instantiate the scheduler object
        // Create the scheduler.
        sched, err := scheduler.New(cc.Client,
            cc.InformerFactory,
            cc.PodInformer,
            cc.Recorder,
            ctx.Done(),
            scheduler.WithName(cc.ComponentConfig.SchedulerName),
            scheduler.WithAlgorithmSource(cc.ComponentConfig.AlgorithmSource),
            scheduler.WithHardPodAffinitySymmetricWeight(cc.ComponentConfig.HardPodAffinitySymmetricWeight),
            scheduler.WithPreemptionDisabled(cc.ComponentConfig.DisablePreemption),
            scheduler.WithPercentageOfNodesToScore(cc.ComponentConfig.PercentageOfNodesToScore),
            scheduler.WithBindTimeoutSeconds(cc.ComponentConfig.BindTimeoutSeconds),
            scheduler.WithFrameworkOutOfTreeRegistry(outOfTreeRegistry),
            scheduler.WithFrameworkPlugins(cc.ComponentConfig.Plugins),
            scheduler.WithFrameworkPluginConfig(cc.ComponentConfig.PluginConfig),
            scheduler.WithPodMaxBackoffSeconds(cc.ComponentConfig.PodMaxBackoffSeconds),
            scheduler.WithPodInitialBackoffSeconds(cc.ComponentConfig.PodInitialBackoffSeconds),
        )
        if err != nil {
            return err
        }

        // 3. Start the event reporting manager
        // Prepare the event broadcaster.
        if cc.Broadcaster != nil && cc.EventClient != nil {
            cc.Broadcaster.StartRecordingToSink(ctx.Done())
        }
        if cc.CoreBroadcaster != nil && cc.CoreEventClient != nil {
            cc.CoreBroadcaster.StartRecordingToSink(&corev1.EventSinkImpl{Interface: cc.CoreEventClient.Events("")})
        }

        // 4. Set up the health checks of kube-scheduler and start the HTTP services for health checks and metrics
        // Setup healthz checks.
        var checks []healthz.HealthChecker
        if cc.ComponentConfig.LeaderElection.LeaderElect {
            checks = append(checks, cc.LeaderElection.WatchDog)
        }

        // Start up the healthz server.
        if cc.InsecureServing != nil {
            separateMetrics := cc.InsecureMetricsServing != nil
            handler := buildHandlerChain(newHealthzHandler(&cc.ComponentConfig, separateMetrics, checks...), nil, nil)
            if err := cc.InsecureServing.Serve(handler, 0, ctx.Done()); err != nil {
                return fmt.Errorf("failed to start healthz server: %v", err)
            }
        }
        if cc.InsecureMetricsServing != nil {
            handler := buildHandlerChain(newMetricsHandler(&cc.ComponentConfig), nil, nil)
            if err := cc.InsecureMetricsServing.Serve(handler, 0, ctx.Done()); err != nil {
                return fmt.Errorf("failed to start metrics server: %v", err)
            }
        }
        if cc.SecureServing != nil {
            handler := buildHandlerChain(newHealthzHandler(&cc.ComponentConfig, false, checks...), cc.Authentication.Authenticator, cc.Authorization.Authorizer)
            // TODO: handle stoppedCh returned by c.SecureServing.Serve
            if _, err := cc.SecureServing.Serve(handler, 0, ctx.Done()); err != nil {
                // fail early for secure handlers, removing the old error loop from above
                return fmt.Errorf("failed to start secure server: %v", err)
            }
        }

        // 5. Start the informers of all previously registered objects and begin synchronizing object resources
        // Start all informers.
        go cc.PodInformer.Informer().Run(ctx.Done())
        cc.InformerFactory.Start(ctx.Done())

        // 6. Wait until all informers have synced, so that the local cache is consistent with the data in etcd
        // Wait for all caches to sync before scheduling.
        cc.InformerFactory.WaitForCacheSync(ctx.Done())

        // 7. Decide, according to the component startup parameters, whether to enable leader election
        // If leader election is enabled, runCommand via LeaderElector until done and exit.
        if cc.LeaderElection != nil {
            cc.LeaderElection.Callbacks = leaderelection.LeaderCallbacks{
                OnStartedLeading: sched.Run,
                OnStoppedLeading: func() {
                    klog.Fatalf("leaderelection lost")
                },
            }
            leaderElector, err := leaderelection.NewLeaderElector(*cc.LeaderElection)
            if err != nil {
                return fmt.Errorf("couldn't create leader elector: %v", err)
            }

            leaderElector.Run(ctx)

            return fmt.Errorf("lost lease")
        }

        // 8. Call sched.Run to start the kube-scheduler component
        // Leader election is disabled, so runCommand inline until done.
        sched.Run(ctx)
        return fmt.Errorf("finished without leader elect")
    }

1.2.1 scheduler.New

The instantiation of the scheduler object is divided into three parts:

(1) Instantiate the informers of pod, node, pvc, pv and other objects;

(2) Call configurator.CreateFromProvider or configurator.CreateFromConfig to instantiate the scheduler from the previously registered built-in scheduling algorithms or from a scheduling policy provided by the user, respectively;

(3) Register event handlers on the informers.

    // pkg/scheduler/scheduler.go
    func New(client clientset.Interface,
        informerFactory informers.SharedInformerFactory,
        podInformer coreinformers.PodInformer,
        recorder events.EventRecorder,
        stopCh <-chan struct{},
        opts ...Option) (*Scheduler, error) {

        stopEverything := stopCh
        if stopEverything == nil {
            stopEverything = wait.NeverStop
        }

        options := defaultSchedulerOptions
        for _, opt := range opts {
            opt(&options)
        }

        // 1. Instantiate the informers of node, pvc, pv and other objects
        schedulerCache := internalcache.New(30*time.Second, stopEverything)
        volumeBinder := volumebinder.NewVolumeBinder(
            client,
            informerFactory.Core().V1().Nodes(),
            informerFactory.Storage().V1().CSINodes(),
            informerFactory.Core().V1().PersistentVolumeClaims(),
            informerFactory.Core().V1().PersistentVolumes(),
            informerFactory.Storage().V1().StorageClasses(),
            time.Duration(options.bindTimeoutSeconds)*time.Second,
        )

        registry := options.frameworkDefaultRegistry
        if registry == nil {
            registry = frameworkplugins.NewDefaultRegistry(&frameworkplugins.RegistryArgs{
                VolumeBinder: volumeBinder,
            })
        }
        registry.Merge(options.frameworkOutOfTreeRegistry)

        snapshot := nodeinfosnapshot.NewEmptySnapshot()

        configurator := &Configurator{
            client:                         client,
            informerFactory:                informerFactory,
            podInformer:                    podInformer,
            volumeBinder:                   volumeBinder,
            schedulerCache:                 schedulerCache,
            StopEverything:                 stopEverything,
            hardPodAffinitySymmetricWeight: options.hardPodAffinitySymmetricWeight,
            disablePreemption:              options.disablePreemption,
            percentageOfNodesToScore:       options.percentageOfNodesToScore,
            bindTimeoutSeconds:             options.bindTimeoutSeconds,
            podInitialBackoffSeconds:       options.podInitialBackoffSeconds,
            podMaxBackoffSeconds:           options.podMaxBackoffSeconds,
            enableNonPreempting:            utilfeature.DefaultFeatureGate.Enabled(kubefeatures.NonPreemptingPriority),
            registry:                       registry,
            plugins:                        options.frameworkPlugins,
            pluginConfig:                   options.frameworkPluginConfig,
            pluginConfigProducerRegistry:   options.frameworkConfigProducerRegistry,
            nodeInfoSnapshot:               snapshot,
            algorithmFactoryArgs: AlgorithmFactoryArgs{
                SharedLister:                   snapshot,
                InformerFactory:                informerFactory,
                VolumeBinder:                   volumeBinder,
                HardPodAffinitySymmetricWeight: options.hardPodAffinitySymmetricWeight,
            },
            configProducerArgs: &frameworkplugins.ConfigProducerArgs{},
        }

        metrics.Register()

        // 2. Call configurator.CreateFromProvider or configurator.CreateFromConfig to instantiate the scheduler
        //    from the previously registered built-in algorithms or from the user-provided scheduling policy
        var sched *Scheduler
        source := options.schedulerAlgorithmSource
        switch {
        case source.Provider != nil:
            // Create the config from a named algorithm provider.
            sc, err := configurator.CreateFromProvider(*source.Provider)
            if err != nil {
                return nil, fmt.Errorf("couldn't create scheduler using provider %q: %v", *source.Provider, err)
            }
            sched = sc
        case source.Policy != nil:
            // Create the config from a user specified policy source.
            policy := &schedulerapi.Policy{}
            switch {
            case source.Policy.File != nil:
                if err := initPolicyFromFile(source.Policy.File.Path, policy); err != nil {
                    return nil, err
                }
            case source.Policy.ConfigMap != nil:
                if err := initPolicyFromConfigMap(client, source.Policy.ConfigMap, policy); err != nil {
                    return nil, err
                }
            }
            sc, err := configurator.CreateFromConfig(*policy)
            if err != nil {
                return nil, fmt.Errorf("couldn't create scheduler from policy: %v", err)
            }
            sched = sc
        default:
            return nil, fmt.Errorf("unsupported algorithm source: %v", source)
        }

        // Additional tweaks to the config produced by the configurator.
        sched.Recorder = recorder
        sched.DisablePreemption = options.disablePreemption
        sched.StopEverything = stopEverything
        sched.podConditionUpdater = &podConditionUpdaterImpl{client}
        sched.podPreemptor = &podPreemptorImpl{client}
        sched.scheduledPodsHasSynced = podInformer.Informer().HasSynced

        // 3. Register event handlers on the informers
        AddAllEventHandlers(sched, options.schedulerName, informerFactory, podInformer)
        return sched, nil
    }
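scheduler.New receives its settings through variadic option functions that are applied on top of a copy of the defaults (the `options := defaultSchedulerOptions` loop above). A minimal sketch of this functional-options pattern follows; the field set, the default values and the buildOptions helper are illustrative assumptions, and only two options are modeled.

```go
package main

import "fmt"

// schedulerOptions holds a small, illustrative subset of settings.
type schedulerOptions struct {
	schedulerName            string
	disablePreemption        bool
	percentageOfNodesToScore int32
}

// Option mutates the options struct; each With... helper returns one.
type Option func(*schedulerOptions)

func WithName(name string) Option {
	return func(o *schedulerOptions) { o.schedulerName = name }
}

func WithPreemptionDisabled(disabled bool) Option {
	return func(o *schedulerOptions) { o.disablePreemption = disabled }
}

// defaultSchedulerOptions carries the defaults (values chosen for the sketch).
var defaultSchedulerOptions = schedulerOptions{
	schedulerName:            "default-scheduler",
	percentageOfNodesToScore: 50,
}

// buildOptions copies the defaults and applies the caller's options in order,
// so later options override earlier ones and the defaults stay untouched.
func buildOptions(opts ...Option) schedulerOptions {
	options := defaultSchedulerOptions // struct copy, not a reference
	for _, opt := range opts {
		opt(&options)
	}
	return options
}

func main() {
	o := buildOptions(WithName("my-scheduler"), WithPreemptionDisabled(true))
	fmt.Printf("%+v\n", o)
	fmt.Printf("%+v\n", defaultSchedulerOptions)
}
```

The pattern keeps the constructor signature stable as settings are added: a new setting needs a new With... helper, not a new parameter.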

Summary

kube-scheduler assigns each unscheduled pod to a node by running the predicate algorithms and then the priority algorithms. Its startup path begins at NewSchedulerCommand, which defines runCommand; runCommand calls algorithmprovider.ApplyFeatureGates and then Run. Run builds the scheduler object with scheduler.New, starts the event reporting manager, the health check and metrics HTTP services and the informers, waits for the caches to sync, and finally calls sched.Run, either directly or through leader election.

Kube scheduler initialization and startup analysis flow chart